Abstract

The unique features of quantum theory offer a powerful new paradigm for information processing. Translating these mathematical abstractions into useful algorithms and applications requires quantum systems with significant complexity and sufficiently low error rates. Such quantum systems must be made from robust hardware that can coherently store, process, and extract the encoded information, as well as possess effective quantum error correction (QEC) protocols to detect and correct errors. Circuit quantum electrodynamics (cQED) provides a promising hardware platform for implementing robust quantum devices. In particular, bosonic encodings in cQED that use multi-photon states of superconducting cavities to encode information have shown success in realizing hardware-efficient QEC. Here, we review recent developments in the theory and implementation of QEC with bosonic codes and report the progress made toward realizing fault-tolerant quantum information processing with cQED devices.


Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

A quantum computer harnesses unique features of quantum theory, such as superposition and entanglement, to tackle classically challenging tasks. To perform faithful computation, quantum information must be protected against errors due to decoherence mechanisms and operational imperfections. While these errors are relatively insignificant individually, they can quickly accumulate to completely scramble the information.

To protect quantum information from scrambling, the theoretical frameworks of quantum error correction (QEC) [1, 2] and fault-tolerant quantum computation [3] were developed in the early days of quantum computing. Essentially, these frameworks devise encodings which map a collection of physical elements onto a single 'logical' bit of quantum information. Such a logical qubit is endowed with cleverly chosen symmetry properties that allow us to extract error syndromes and enact error correction without disturbing the encoded information.

An important metric for evaluating the effectiveness of QEC implementations is the break-even point, which is achieved when the lifetime of a logical qubit exceeds that of the best single physical element in the system. Achieving the break-even point entails that additional physical elements and operations introduced to a QEC process do not cause more degradation than the protection they afford. Hence, reaching the break-even point is a critical pre-requisite for implementing fault-tolerant gates and eventually performing robust quantum information processing on a large scale.

In the conventional approach to QEC, the physical elements are realized by discrete two-level systems. In this approach, even a simple QEC scheme designed to correct single errors, such as the Steane code [2], requires tens of physical elements, including data qubits, ancillary qubits, and measurement elements. Constructing physical devices that contain this many interconnected elements can be a significant engineering challenge. More crucially, having many interconnected elements often degrades the device performance and introduces new uncorrectable errors, such as cross-talk due to undesired couplings between the elements. Over the last decade, many proof-of-principle demonstrations of QEC schemes have been realized with encoding schemes based on two-level systems [4–10]. However, given the practical challenges described above, these demonstrations have not deterministically extended the performance of the logical qubits beyond that of the best available physical qubit in the system.

A promising alternative with the potential to realize robust universal quantum computing with effective QEC beyond the break-even point involves encoding logical qubits in continuous variables [11–13]. In particular, superconducting microwave cavities coupled to one or more anharmonic elements in the circuit quantum electrodynamics (cQED) architecture provide a valuable resource for the hardware-efficient encoding of logical qubits [14, 15]. These cavities have a large Hilbert space in which information can be encoded compactly in multi-photon states, so that a logical qubit is formed in a single piece of hardware. The anharmonic element, typically in the form of a transmon and henceforth referred to as the 'ancilla', provides the necessary non-linearity to control and measure cavity states. Encodings that use multi-photon states of superconducting cavities to store logical information in this way are known as bosonic codes. Implementations of bosonic codes in cQED have made rapid progress in recent years, demonstrating not only QEC at the break-even point [16], but also robust operations [17–21] and fault-tolerant measurement of error syndromes [22].

In this article, we review the recent developments in bosonic codes in the cQED setting. In particular, we highlight the progress made in demonstrating effective QEC and information processing with logical elements implemented using bosonic codes. These recent works provide compelling evidence for the vast potential of bosonic codes in cQED as the fundamental building blocks for robust universal quantum computing.

1.1. Organization of the article

In section 2, we begin by outlining the basic principles of QEC and of bosonic codes. Here, we highlight the hardware efficiency of bosonic codes by comparing a bosonic code with a multi-qubit code subject to similar errors. In section 3, we present various bosonic encoding schemes proposed in the literature and compare their respective strengths and limitations. In particular, we emphasize crucial considerations for constructing these codes and evaluating their performance in the presence of naturally occurring errors.

In section 4, we introduce the key hardware building blocks required for cQED implementations of bosonic encoding schemes. In this section, we also consolidate the progress made in improving the intrinsic quality factors of superconducting microwave cavities over the last decade. Subsequently, in section 5, we explore the latest developments in implementing robust universal control on bosonic qubits encoded in superconducting cavities, both in terms of single-mode gates as well as novel two-mode operations. We then describe the different strategies for detecting and correcting quantum errors on bosonic logical qubits encoded in superconducting cavities.

In section 6, we discuss the concept of fault-tolerance and how that might be realized with protected bosonic qubits. Here, we also feature some novel schemes that concatenate bosonic codes with other QEC codes to protect against quantum errors more comprehensively. Finally, in section 7, we provide some perspectives for achieving QEC on a larger scale. We conclude by remarking on the appeal of the modular architecture, which offers a promising path for practical and robust quantum information processing with individually protected bosonic logical elements.

2. Concepts of bosonic quantum error correction

The general principle of QEC is to encode logical quantum information redundantly in a large Hilbert space with certain symmetry properties, which can be used to detect errors. In particular, logical code states are designed such that they can be mapped onto orthogonal subspaces under distinct errors. Crucially, the logical information can be recovered faithfully only if the mapping between the logical and the error states does not distort the code words.

Mathematically, these requirements can be succinctly described by the Knill–Laflamme condition [23], which states that an error-correcting code $\mathcal{C}$ can correct any error operator in the span of an error set $\mathcal{E} \equiv \{\hat{E}_{1}, \ldots, \hat{E}_{N}\}$ if and only if it satisfies

$$\hat{P}_{\mathcal{C}}\hat{E}_{\ell}^{\dagger}\hat{E}_{\ell'}\hat{P}_{\mathcal{C}} = \alpha_{\ell\ell'}\hat{P}_{\mathcal{C}} \tag{1}$$

for all $\ell, \ell' \in \{1, \ldots, N\}$, where $N$ is the size of the error set, $\hat{P}_{\mathcal{C}}$ is the projection operator onto the code space $\mathcal{C}$, and $\alpha_{\ell\ell'}$ are the matrix elements of a Hermitian and positive semi-definite matrix. A derivation of the Knill–Laflamme condition is given in reference [24].

Bosonic codes achieve these requirements by cleverly configuring the excitations in a harmonic oscillator mode. For instance, in the cQED architecture, information is encoded in multi-photon states of superconducting microwave cavities. We will illustrate how bosonic QEC codes work by describing the simplest binomial code [25], also known as the 'kitten' code. We will then compare this code with its multi-qubit cousin, the 'four-qubit code' (or the [[4, 1, 2]] code or the distance-2 surface code) [26], in the presence of similar errors in the cQED architecture. This comparison, adapted from reference [27], emphasizes the hardware-efficient nature of the bosonic QEC approach.

The kitten code is designed to correct only single photon loss events, described by the annihilation operator $\hat{a}$, which are the dominant error channel in superconducting cavities. This scheme encodes logical information in the even photon number parity subspace of a harmonic oscillator (figure 1(a)):

$$|0_L\rangle = \frac{|0\rangle + |4\rangle}{\sqrt{2}}, \qquad |1_L\rangle = |2\rangle. \tag{2}$$

Figure 1. Hardware comparison between the kitten code and the four-qubit code. (a) Encoding a single logical qubit using multiple energy levels of a single harmonic oscillator, where the only dominant error channel is photon loss. (b) The four-qubit scheme based on a collection of two-level systems. Individually, each two-level system can experience errors due to spontaneous absorption and emission. Moreover, additional errors can be introduced due to undesired couplings between these systems. (c) The typical hardware for implementing a bosonic logical qubit. The logical information is encoded in a single superconducting cavity (orange) in the kitten code, which can be fully controlled by a single non-linear ancilla (green) and read out via another cavity (gray). (d) Example of a system designed to implement the four-qubit code, where the logical information is spread out across four data qubits (orange) checked by three ancillary ones (green). The interactions between each two-level system are mediated by coupling cavities (teal), and their respective states are read out via seven planar cavities (gray).


With these code words, a single photon loss event maps an even parity logical state |ψL⟩ = α|0L⟩ + β|1L⟩ to an odd parity error state $\hat{a}|\psi_L\rangle = \sqrt{2}\left(\alpha|3\rangle + \beta|1\rangle\right)$. Because of the parity difference, the code space and the error space are mutually orthogonal. Therefore, single photon loss events can be detected by measuring the photon number parity operator $\hat{\Pi} = e^{\mathrm{i}\pi\hat{a}^{\dagger}\hat{a}}$. Importantly, since the error states $\hat{a}|0_L\rangle = \sqrt{2}|3\rangle$ and $\hat{a}|1_L\rangle = \sqrt{2}|1\rangle$ have the same normalization constant,

$$\left\|\hat{a}|0_L\rangle\right\| = \left\|\hat{a}|1_L\rangle\right\| = \sqrt{2}, \tag{3}$$

or equivalently (since $\|\hat{a}|\psi\rangle\|^{2} = \langle\psi|\hat{a}^{\dagger}\hat{a}|\psi\rangle$), the logical states |0L⟩ and |1L⟩ have the same average photon number, a single photon loss event does not distort the encoded information. In other words, by mapping the normalized error states |3⟩ and |1⟩ back to the original code states |0L⟩ and |1L⟩, we can recover the input logical state up to an overall normalization constant:

$$\hat{a}|\psi_L\rangle = \sqrt{2}\left(\alpha|3\rangle + \beta|1\rangle\right) \;\longrightarrow\; \sqrt{2}\left(\alpha|0_L\rangle + \beta|1_L\rangle\right) = \sqrt{2}\,|\psi_L\rangle. \tag{4}$$

Note that we cannot faithfully recover logical information if the logical states have different average photon numbers. For instance, with $|0_L\rangle = (|0\rangle + \sqrt{3}|4\rangle)/2$, which has 3 photons on average, as opposed to 2 photons for |1L⟩ = |2⟩, a single photon loss and recovery event yields a state which is not proportional to |ψL⟩:

$$\hat{a}|\psi_L\rangle = \sqrt{3}\,\alpha|3\rangle + \sqrt{2}\,\beta|1\rangle \;\longrightarrow\; \sqrt{3}\,\alpha|0_L\rangle + \sqrt{2}\,\beta|1_L\rangle \not\propto |\psi_L\rangle. \tag{5}$$

Specifically, the error and recovery process distorts the relative weights of |0L⟩ and |1L⟩. An intuitive way to understand such a distortion is by considering the role of the environment. When |0L⟩ and |1L⟩ have different average photon numbers, the environment gains partial information on whether |ψL⟩ was in |0L⟩ or in |1L⟩. In the example above, |0L⟩ has more photons than |1L⟩ and hence has a higher probability of losing a photon. When one photon is lost, the environment learns that the encoded state was more likely to be |0L⟩ than |1L⟩, and the weights in equation (5) are adjusted accordingly. Alternatively, we can say that the environment performs a weak measurement on the logical state in the |0L/1L⟩ basis, thus leading to the dephasing of |ψL⟩ in the |0L/1L⟩ basis.

Hence, while the even parity structure allows the detection of single photon loss events, it does not guarantee the recoverability of the logical information without any distortion. Faithful recovery is ensured by selecting |0L⟩ and |1L⟩ to have the same average photon number. In the case of the kitten code, average photon numbers are matched by choosing equal coefficients for the vacuum and the four photon state components in the logical zero state.
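The loss-and-recovery argument can be checked numerically. The following is a minimal NumPy sketch, not the authors' method: it assumes an idealized recovery that linearly maps |3⟩ → |0L⟩ and |1⟩ → |1L⟩, a Fock space truncated at eight levels, and an unbalanced comparison code |0L⟩ = (|0⟩ + √3|4⟩)/2, |1L⟩ = |2⟩ (our assumption, chosen to have 3 photons on average). The helper names `fock` and `loss_and_recover` are hypothetical.

```python
import numpy as np

DIM = 8  # Fock-space truncation: enough for the states |0>, ..., |4> used here

# Annihilation operator in the truncated Fock basis: a|n> = sqrt(n)|n-1>.
a = np.diag(np.sqrt(np.arange(1, DIM)), k=1).astype(complex)


def fock(n: int) -> np.ndarray:
    """Fock state |n> as a vector."""
    v = np.zeros(DIM, dtype=complex)
    v[n] = 1.0
    return v


def loss_and_recover(zero_L, one_L, alpha, beta):
    """Fidelity of alpha|0_L> + beta|1_L> after one photon loss and an ideal recovery.

    The recovery is modelled as the linear map |3> -> |0_L>, |1> -> |1_L>,
    so the amplitudes picked up during the loss are carried over unchanged.
    """
    psi = alpha * zero_L + beta * one_L
    psi = psi / np.linalg.norm(psi)
    error_state = a @ psi  # single photon loss
    recovery = np.outer(zero_L, fock(3).conj()) + np.outer(one_L, fock(1).conj())
    recovered = recovery @ error_state
    recovered = recovered / np.linalg.norm(recovered)
    return abs(np.vdot(psi, recovered)) ** 2


s = 1 / np.sqrt(2)
kitten = ((fock(0) + fock(4)) / np.sqrt(2), fock(2))          # <n> = 2 for both
unbalanced = ((fock(0) + np.sqrt(3) * fock(4)) / 2, fock(2))  # <n> = 3 vs 2

f_kitten = loss_and_recover(*kitten, s, s)
f_unbalanced = loss_and_recover(*unbalanced, s, s)
print(f"kitten: {f_kitten:.6f}, unbalanced: {f_unbalanced:.6f}")
# prints: kitten: 1.000000, unbalanced: 0.989898
```

For the unbalanced code the recovered state is proportional to √3α|0L⟩ + √2β|1L⟩, so for α = β = 1/√2 the fidelity is (5 + 2√6)/10 rather than 1.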

More generally, a code $\mathcal{C}$ can correct single photon loss events if it satisfies the Knill–Laflamme condition for the error set $\mathcal{E} = \{\hat{I}, \hat{a}\}$. That is, the projection operator onto the code space should satisfy $\hat{P}_{\mathcal{C}}\hat{E}_{\ell}^{\dagger}\hat{E}_{\ell'}\hat{P}_{\mathcal{C}} = \alpha_{\ell\ell'}\hat{P}_{\mathcal{C}}$ for all $\hat{E}_{\ell}, \hat{E}_{\ell'} \in \{\hat{I}, \hat{a}\}$. The first condition, with $\hat{E}_{\ell}^{\dagger}\hat{E}_{\ell'} = \hat{I}$, is trivially satisfied for any code. For even parity codes, which are composed of logical states of even photon number parity, the second and third conditions, with $\hat{E}_{\ell}^{\dagger}\hat{E}_{\ell'} = \hat{a}$ and $\hat{a}^{\dagger}$, are satisfied due to the parity structure. This implies that even parity codes are capable of detecting, but not necessarily correcting, any single photon loss and gain events. Single photon loss events can be corrected if the fourth condition is met, that is, $\hat{P}_{\mathcal{C}}\hat{a}^{\dagger}\hat{a}\hat{P}_{\mathcal{C}} \propto \hat{P}_{\mathcal{C}}$, or equivalently, if all logical states have the same average photon number.
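These conditions can be checked mechanically for any code written in a truncated Fock basis. Below is a minimal NumPy sketch (the function name `satisfies_knill_laflamme` and the truncation `DIM = 10` are our own choices): for an orthonormal basis of code words it computes ⟨ci|Ê_ℓ†Ê_ℓ′|cj⟩ and checks that these matrix elements have the form α_ℓℓ′δ_ij, which is the code-word form of the Knill–Laflamme condition. It is applied to the kitten code and to an unbalanced even-parity code with |0L⟩ = (|0⟩ + √3|4⟩)/2 (assumed, 3 photons on average) and |1L⟩ = |2⟩.

```python
import numpy as np

DIM = 10  # Fock-space truncation (assumption); the code words here live below |5>

a = np.diag(np.sqrt(np.arange(1, DIM)), k=1)  # a|n> = sqrt(n)|n-1>
I = np.eye(DIM)


def fock(n: int) -> np.ndarray:
    v = np.zeros(DIM)
    v[n] = 1.0
    return v


def satisfies_knill_laflamme(codewords, errors, tol=1e-10) -> bool:
    """Check <c_i| E_l^dag E_l' |c_j> = alpha_{l l'} delta_ij for all error pairs.

    `codewords` must form an orthonormal basis of the code space.
    """
    for E_l in errors:
        for E_lp in errors:
            op = E_l.conj().T @ E_lp
            M = np.array([[np.vdot(ci, op @ cj) for cj in codewords] for ci in codewords])
            diag = np.diag(M)
            off_diag = M - np.diag(diag)
            # Off-diagonal elements must vanish and diagonal elements must be equal.
            if np.max(np.abs(off_diag)) > tol or np.max(np.abs(diag - diag[0])) > tol:
                return False
    return True


kitten = [(fock(0) + fock(4)) / np.sqrt(2), fock(2)]
unbalanced = [(fock(0) + np.sqrt(3) * fock(4)) / 2, fock(2)]

print(satisfies_knill_laflamme(kitten, [I, a]))      # True: single losses correctable
print(satisfies_knill_laflamme(unbalanced, [I, a]))  # False: <n> = 3 vs 2
```

Adding the dephasing operator `a.T @ a` to the error list makes the kitten code fail as well, because its code words have different second moments of the photon number (8 versus 4).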

Now, using an example inspired by reference [27], we compare the kitten code with its multi-qubit cousin, the four-qubit code, whose logical states are encoded in four distinct two-level systems (figure 1(b)):

$$|0_L\rangle = \frac{|0000\rangle + |1111\rangle}{\sqrt{2}}, \qquad |1_L\rangle = \frac{|0011\rangle + |1100\rangle}{\sqrt{2}}. \tag{6}$$

The four-qubit code is stabilized by three stabilizers: $\hat{X}\hat{X}\hat{X}\hat{X}$, $\hat{Z}\hat{Z}\hat{I}\hat{I}$, and $\hat{I}\hat{I}\hat{Z}\hat{Z}$. This scheme is capable of detecting any arbitrary single-qubit error. Moreover, it can correct single excitation loss errors, $\hat{\sigma}_{-}^{(k)}$ acting on qubit $k \in \{1, 2, 3, 4\}$, via approximate QEC [26], which applies when the Knill–Laflamme condition is satisfied only approximately, to a certain low order in the error parameters. This capability is comparable to the protection afforded by the kitten code against single photon loss errors.
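A minimal NumPy sketch of these statements follows. It assumes the standard [[4, 1, 2]] code words (|0000⟩ + |1111⟩)/√2 and (|0011⟩ + |1100⟩)/√2, takes qubit 0 as the leftmost bit and |1⟩ as the excited state, and models excitation loss as amplitude damping with per-qubit Kraus operators diag(1, √(1−γ)) (no jump) and √γ σ̂− (jump); the damping model and all helper names are our assumptions. The sketch checks that both code words are +1 eigenstates of the three stabilizers, that the jump errors σ̂−(k) satisfy the Knill–Laflamme condition exactly, and that the no-jump part violates it only at order γ², which is one way to see why the correction is only approximate.

```python
from functools import reduce

import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.diag([1.0, -1.0])
SIGMA_MINUS = np.array([[0.0, 1.0], [0.0, 0.0]])  # |0><1|: removes one excitation


def kron_all(*ops):
    return reduce(np.kron, ops)


def on_qubit(op, k):
    """Embed a single-qubit operator acting on qubit k (0-indexed) in four qubits."""
    ops = [I2] * 4
    ops[k] = op
    return kron_all(*ops)


def basis(bits: str) -> np.ndarray:
    v = np.zeros(16)
    v[int(bits, 2)] = 1.0
    return v


# Assumed code words of the four-qubit code.
zero_L = (basis("0000") + basis("1111")) / np.sqrt(2)
one_L = (basis("0011") + basis("1100")) / np.sqrt(2)
code = [zero_L, one_L]

stabilizers = [kron_all(X, X, X, X), kron_all(Z, Z, I2, I2), kron_all(I2, I2, Z, Z)]
stabilized = all(np.allclose(S @ c, c) for S in stabilizers for c in code)

jumps = [on_qubit(SIGMA_MINUS, k) for k in range(4)]


def jump_kl_holds(tol=1e-12):
    """<c_i| sigma_+^(k) sigma_-^(l) |c_j> should equal (1/2) delta_kl delta_ij."""
    for k, Ek in enumerate(jumps):
        for l, El in enumerate(jumps):
            M = np.array([[ci @ Ek.T @ El @ cj for cj in code] for ci in code])
            expected = 0.5 * np.eye(2) if k == l else np.zeros((2, 2))
            if not np.allclose(M, expected, atol=tol):
                return False
    return True


def no_jump_mismatch(gamma):
    """<0_L|E0^dag E0|0_L> - <1_L|E0^dag E0|1_L> for the no-jump Kraus operator E0."""
    A0 = np.diag([1.0, np.sqrt(1 - gamma)])
    E0 = kron_all(A0, A0, A0, A0)
    G = E0.T @ E0
    return zero_L @ G @ zero_L - one_L @ G @ one_L


print("stabilized:", stabilized)
print("jump errors satisfy KL exactly:", jump_kl_holds())
print("no-jump mismatch at gamma = 0.01:", no_jump_mismatch(0.01))
```

Analytically, the no-jump mismatch is (1 + (1−γ)⁴)/2 − (1−γ)² = [γ(2−γ)]²/2 ≈ 2γ², so the Knill–Laflamme condition holds to first order in γ but not beyond.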

Despite their comparable error-correcting capability, the four-qubit code and the kitten code incur significantly different hardware overheads. A cQED implementation of the kitten code (figure 1(c)) requires a single bosonic mode to store logical information, a single ancilla (typically a transmon) to measure and control the cavity state, and a single readout cavity mode to measure the ancilla state. In contrast, a cQED realization of the four-qubit code (figure 1(d)) uses four data qubits to encode logical information, three ancillae to measure the three stabilizers, and additional cavity modes to connect and measure all seven physical qubits. Apart from the sheer complexity of realizing such a device, the presence of additional elements introduces other error channels, such as cross-talk arising from spurious couplings between the physical qubits. While these effects can be calibrated and mitigated on a small scale with clever techniques [28, 29], they can quickly become intractable for more complex devices. This comparison thus illustrates the advantage of using the multiple levels of a single bosonic mode, rather than multiple two-level systems, as a redundant resource for QEC.

While we focus on bosonic codes that encode a qubit in a single bosonic mode, there are proposals for encoding a qubit in many bosonic modes, namely permutation-invariant codes [30–33]. These codes are tailored for excitation loss errors and generalize the simple four-qubit code. Other codes of a similar nature have also been studied in references [34–37].

3. Performance of bosonic codes for loss and dephasing errors

Bosonic modes in cQED systems typically undergo both photon loss and dephasing errors. Photon loss is considered to be the dominant error channel, and its rate is determined by the internal quality factor (Qint) of the superconducting cavity. Intrinsic dephasing is usually insignificant for such cavities [38].

However, as the cavity is dispersively coupled to a non-linear ancilla, if the ancilla experiences undesired absorption (Γ↑) or emission (Γ↓) of excitations due to stray radiation, then the encoded logical information undergoes induced dephasing. The rate of this dephasing depends on Γ↑, Γ↓, and the coupling strength χ. In general, a transition of the ancilla state leads to a rotation on the logical qubit by an angle ∼χt, where t is the time the ancilla spends in the resulting state after the transition. In the limit where this rotation is small, we can apply the Markovian approximation and use the following Lindblad master equation to describe the evolution of the encoded qubit:

$$\frac{d\hat{\rho}}{dt} = \kappa\,\mathcal{D}\left[\hat{a}\right]\left(\hat{\rho}\right) + \kappa_{\phi}\,\mathcal{D}\left[\hat{a}^{\dagger}\hat{a}\right]\left(\hat{\rho}\right), \tag{7}$$

where κ and κϕ are the photon loss and dephasing rates, respectively, and $\mathcal{D}\left[\hat{A}\right]\left(\hat{\rho}\right)\equiv \hat{A}\hat{\rho}\hat{A}^{\dagger}-\frac{1}{2}\left\{\hat{A}^{\dagger}\hat{A},\hat{\rho}\right\}$ is the dissipation superoperator. Note that loss and dephasing errors are generated by the jump operators $\hat{a}$ and $\hat{a}^{\dagger}\hat{a}$, respectively. We say that a system is loss dominated if κ ≫ κϕ and dephasing dominated if κ ≪ κϕ. Importantly, in typical experimental regimes, dephasing errors induced by transitions of the ancilla state are generally non-Markovian. This limits the performance of some current implementations [39] of bosonic QEC codes.
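The master equation dρ̂/dt = κD[â](ρ̂) + κϕD[â†â](ρ̂), written here in the form implied by the two jump operators above, can be integrated directly in a truncated Fock space. The following is a minimal sketch with a hand-rolled fixed-step fourth-order Runge–Kutta integrator; the truncation `DIM = 12`, the step count, and all function names are our assumptions, and a dedicated solver would be preferable for real work. It illustrates the two error channels: pure loss makes ⟨n̂⟩ decay as n₀e^{−κt}, while pure dephasing leaves photon-number populations untouched and damps the coherence between |m⟩ and |n⟩ at rate κϕ(m − n)²/2.

```python
import numpy as np

DIM = 12  # Fock-space truncation (assumption)
a = np.diag(np.sqrt(np.arange(1, DIM)), k=1).astype(complex)
n_op = a.conj().T @ a


def dissipator(A, rho):
    """D[A](rho) = A rho A^dag - {A^dag A, rho} / 2."""
    AdA = A.conj().T @ A
    return A @ rho @ A.conj().T - 0.5 * (AdA @ rho + rho @ AdA)


def lindblad_rhs(rho, kappa, kappa_phi):
    return kappa * dissipator(a, rho) + kappa_phi * dissipator(n_op, rho)


def evolve(rho, kappa, kappa_phi, t_final, steps=2000):
    """Integrate the master equation with fixed-step fourth-order Runge-Kutta."""
    dt = t_final / steps
    for _ in range(steps):
        k1 = lindblad_rhs(rho, kappa, kappa_phi)
        k2 = lindblad_rhs(rho + 0.5 * dt * k1, kappa, kappa_phi)
        k3 = lindblad_rhs(rho + 0.5 * dt * k2, kappa, kappa_phi)
        k4 = lindblad_rhs(rho + dt * k3, kappa, kappa_phi)
        rho = rho + (dt / 6) * (k1 + 2 * k2 + 2 * k3 + k4)
    return rho


def fock_dm(*ns):
    """Density matrix of the equal superposition of the listed Fock states."""
    psi = np.zeros(DIM, dtype=complex)
    psi[list(ns)] = 1.0
    psi /= np.linalg.norm(psi)
    return np.outer(psi, psi.conj())


# Pure loss: <n> should decay as 4 * exp(-kappa t).
rho_loss = evolve(fock_dm(4), kappa=1.0, kappa_phi=0.0, t_final=1.0)
mean_n = np.trace(n_op @ rho_loss).real

# Pure dephasing: the |0><2| coherence decays at rate kappa_phi * (0 - 2)**2 / 2.
rho_deph = evolve(fock_dm(0, 2), kappa=0.0, kappa_phi=1.0, t_final=1.0)

print(mean_n, 4 * np.exp(-1.0))
print(abs(rho_deph[0, 2]), 0.5 * np.exp(-2.0))
```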

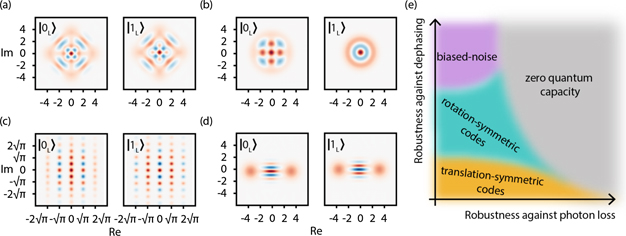

In this section, we review the various single-mode bosonic codes, namely the rotation-symmetric cat (figure 2(a)) and binomial (figure 2(b)) codes, the translation-symmetric Gottesman–Kitaev–Preskill (GKP) codes (figure 2(c)), and the two-component cat codes, which realize biased-noise bosonic qubits (figure 2(d)). We discuss their error-correcting capability against both photon loss and dephasing errors.

Figure 2. Visualization and performance of different bosonic codes. (a)–(d) Wigner functions of the code words for the four-component cat, binomial kitten, GKP square lattice, and two-component cat code, respectively. (e) A qualitative description of the robustness of various classes of bosonic codes against dephasing and photon loss errors. Note that for the four-component cat code and the binomial kitten code, the logical states in the |0/1L⟩ basis are invariant under a 90° rotation, up to a global phase. However, a general code state, that is, an arbitrary superposition of |0L⟩ and |1L⟩, is invariant only under the 180° rotation.


3.1. Rotation-symmetric codes: binomial and cat codes

The class of rotation-symmetric bosonic codes [40] refers to encodings that remain invariant under a set of discrete rotations in phase space. Encoding schemes with rotation symmetry, such as the cat and binomial codes, are stabilized by a photon number super-parity operator $\hat{\Pi}_{N} = e^{\mathrm{i}(2\pi/N)\hat{a}^{\dagger}\hat{a}}$, which is equivalent to the (360/N)° rotation operator. As such, these codes are, by design, capable of detecting up to N − 1 photon loss events.

Furthermore, these rotation-symmetric codes can also be made robust against dephasing errors in addition to photon loss errors [41]. For instance, the kitten code may be modified to have the following logical states:

$$|0_L\rangle = \frac{|0\rangle + \sqrt{3}|4\rangle}{2}, \qquad |1_L\rangle = \frac{\sqrt{3}|2\rangle + |6\rangle}{2}. \tag{8}$$

This version of the binomial code is also stabilized by the parity operator and is invariant under the 180° rotation, thus making it robust against single photon loss errors. However, the modified code is now also higher in energy, as its logical states have 3 photons on average, as opposed to 2 in the case of the kitten code. This additional redundancy makes the modified binomial code robust against single dephasing events as well. In other words, the logical states of the modified binomial code satisfy the Knill–Laflamme condition for an extended error set $\mathcal{E} = \{\hat{I}, \hat{a}, \hat{a}^{\dagger}\hat{a}\}$, where $\hat{a}^{\dagger}\hat{a}$ describes single dephasing errors in the cavity. This means that the two logical code words also have the same second moment of the photon number probability distribution besides having the same average photon number. Note that when more moments of the photon number probability distribution are the same for the two code words, distinguishing them becomes more difficult for the environment, which enhances the error-correcting capability of the code.
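For even-parity code words with disjoint Fock supports, the Knill–Laflamme condition for {Î, â, â†â} reduces to equal first and second photon-number moments: all other matrix elements vanish by parity or because the supports do not overlap. A minimal plain-Python check of that reduced condition, assuming the modified code words (|0⟩ + √3|4⟩)/2 and (√3|2⟩ + |6⟩)/2 (the helper name `photon_moments` is hypothetical):

```python
import math

SQRT3 = math.sqrt(3)


def photon_moments(amplitudes, orders=(1, 2)):
    """Moments <n^k> of a Fock-basis state given as {photon number: amplitude}."""
    norm = sum(abs(c) ** 2 for c in amplitudes.values())
    return tuple(
        sum(abs(c) ** 2 * n**k for n, c in amplitudes.items()) / norm for k in orders
    )


kitten = ({0: 1, 4: 1}, {2: 1})
modified = ({0: 1, 4: SQRT3}, {2: SQRT3, 6: 1})

for name, (zero_L, one_L) in [("kitten", kitten), ("modified", modified)]:
    print(name, photon_moments(zero_L), photon_moments(one_L))
```

The kitten code words share ⟨n̂⟩ = 2 but have ⟨n̂²⟩ = 8 and 4, so they fail the dephasing condition; the modified code words share both ⟨n̂⟩ = 3 and ⟨n̂²⟩ = 12.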

Another way to generalize rotation-symmetric codes is by considering different rotation angles. For instance, we can design codes that are invariant under a 120° rotation instead of a 180° rotation and are thus stabilized by the super-parity modulo 3 operator $\hat{\Pi}_{3} = e^{\mathrm{i}(2\pi/3)\hat{a}^{\dagger}\hat{a}}$. By taking advantage of a larger spacing in the photon number basis, 120°-rotation-symmetric codes can detect two-photon loss events as well as single photon loss events, thus providing enhanced protection to the logical information. For instance, a variant of the binomial code with the logical states

$$|0_L\rangle = \frac{|0\rangle + \sqrt{3}|6\rangle}{2}, \qquad |1_L\rangle = \frac{\sqrt{3}|3\rangle + |9\rangle}{2} \tag{9}$$

is invariant under the 120° rotation, or equivalently, has photon numbers that are integer multiples of 3. Moreover, the particular coefficients associated with each photon number state in the code words, which are derived from the binomial coefficients (hence the name 'binomial' code), ensure that the code satisfies the Knill–Laflamme condition for an error set $\mathcal{E} = \{\hat{I}, \hat{a}, \hat{a}^{2}, \hat{a}^{\dagger}\hat{a}\}$. This indicates that the code is robust against both single and two-photon loss events, as well as single dephasing errors. The binomial code can be further generalized to protect against higher-order effects of loss and dephasing errors [25] as well as used for autonomous QEC [42].
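How the super-parity modulo 3 acts as a syndrome can be seen by tracking photon numbers modulo 3 after k losses. A minimal plain-Python sketch, assuming the code words (|0⟩ + √3|6⟩)/2 and (√3|3⟩ + |9⟩)/2 and an equal logical superposition; the helper names are hypothetical. Losing k photons shifts every component from n ≡ 0 to n ≡ −k (mod 3), and the super-parity operator has eigenvalue e^{i2πm/3} on a state whose photon numbers all equal m modulo 3.

```python
import math


def lose_photons(state, k):
    """Apply a^k to a Fock-basis state {n: amplitude} (result left unnormalized)."""
    return {
        n - k: c * math.sqrt(math.factorial(n) / math.factorial(n - k))
        for n, c in state.items()
        if n >= k
    }


def residue_mod(state, N=3):
    """Photon number modulo N shared by all components (the super-parity syndrome)."""
    residues = {n % N for n, c in state.items() if abs(c) > 0}
    if len(residues) != 1:
        raise ValueError("state is not a super-parity eigenstate")
    return residues.pop()


s3 = math.sqrt(3)
alpha = beta = 1 / math.sqrt(2)
# alpha|0_L> + beta|1_L> with |0_L> = (|0> + sqrt3|6>)/2 and |1_L> = (sqrt3|3> + |9>)/2
psi = {0: alpha / 2, 6: alpha * s3 / 2, 3: beta * s3 / 2, 9: beta / 2}

syndromes = {k: residue_mod(lose_photons(psi, k)) for k in range(4)}
print(syndromes)  # {0: 0, 1: 2, 2: 1, 3: 0}
```

Zero, one, and two lost photons give three distinct residues and can therefore be told apart, while a three-photon loss returns the state to residue 0 and is indistinguishable from no loss by this measurement alone.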

Cat codes are another important example of rotation-symmetric bosonic codes. The four-component cat codes (or four-cat codes), which are composed of four coherent states |±α⟩, |±iα⟩, are the simplest variants of the cat codes that are robust against photon loss errors [43, 44]. In this encoding, the logical states are defined by superpositions of coherent states:

$$|0_L\rangle = \mathcal{N}_{0}\left(|\alpha\rangle + |-\alpha\rangle + |\mathrm{i}\alpha\rangle + |-\mathrm{i}\alpha\rangle\right), \qquad |1_L\rangle = \mathcal{N}_{1}\left(|\alpha\rangle + |-\alpha\rangle - |\mathrm{i}\alpha\rangle - |-\mathrm{i}\alpha\rangle\right), \tag{10}$$

where $\mathcal{N}_{0}$ and $\mathcal{N}_{1}$ are normalization constants. Note that the amplitude of the coherent states |α| determines the size of the cat code. Similar to the kitten code (equation (2)), the logical states of the four-cat code have an even number of photons. Thus, the four-cat code is invariant under the 180° rotation and is able to detect single photon loss events. Here, we emphasize that the parity (or rotation symmetry) alone does not ensure the recoverability of logical information against single photon loss errors, which is only guaranteed when the Knill–Laflamme condition is satisfied for the error set $\mathcal{E} = \{\hat{I}, \hat{a}\}$. For even parity codes, as explained in section 2, recoverability requires the two logical states to have the same number of photons on average.

For large cat codes with |α| ≫ 1, the average photon number is approximately given by $\bar{n} \approx |\alpha|^{2}$ for both logical states, and the Knill–Laflamme condition is approximately fulfilled. More importantly, the average photon numbers of the two logical states are exactly the same for certain values of |α| (also known as 'sweet spots' [45]) such that:

$$\tan\left(|\alpha|^{2}\right) = -\tanh\left(|\alpha|^{2}\right). \tag{11}$$

The smallest such |α| is given by |α| = 1.538, which corresponds to the average photon number $\bar{n} \approx 2.32$. By increasing the size of the cat codes, we can construct logical states that are robust against both single photon loss and dephasing errors. In particular, cat codes with a large average photon number |α|² approximately satisfy the Knill–Laflamme condition for dephasing errors, which are generated by $\hat{a}^{\dagger}\hat{a}$, up to an inaccuracy that becomes small for large |α| [46]. However, an extra error-correcting mechanism, such as a multi-photon engineered dissipation [44], is required to exploit the intrinsic error-correcting capability of the cat codes against dephasing errors. Moreover, increasing the number of coherent state components in the cat code introduces further protection [36, 47]. For instance, with six coherent state components, the code words become 120°-rotation-symmetric and can thus detect up to two-photon loss events. Generalizations of cat codes and analyses of their performance can be found in reference [45], while a multi-mode generalization is available in reference [48].
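The first sweet spot can be located numerically without relying on a closed form. A minimal plain-Python sketch, assuming that the four-cat code words have Poissonian photon-number weights x^n/n! (with x = |α|²) restricted to n ≡ 0 (mod 4) for |0L⟩ and n ≡ 2 (mod 4) for |1L⟩, which follows from the coherent-state superpositions. It bisects on the difference of the two average photon numbers; the bracket [1.6, 3.0] for |α|², the truncation `n_max`, and the function names are our choices.

```python
import math


def mean_photon_number(x, residue, n_max=80):
    """<n> of a four-cat code word with x = |alpha|^2.

    The code word supported on n = residue (mod 4) has probabilities
    proportional to x**n / n! on that residue class
    (residue 0 for |0_L>, residue 2 for |1_L>).
    """
    weight = 1.0  # x**n / n! for n = 0
    norm = mean = 0.0
    for n in range(n_max + 1):
        if n > 0:
            weight *= x / n
        if n % 4 == residue:
            norm += weight
            mean += n * weight
    return mean / norm


def mismatch(x):
    return mean_photon_number(x, 0) - mean_photon_number(x, 2)


def find_sweet_spot(lo=1.6, hi=3.0, iterations=100):
    """Bisection for mismatch(x) = 0, assuming a single sign change in [lo, hi]."""
    f_lo = mismatch(lo)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        f_mid = mismatch(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


x_star = find_sweet_spot()
print(f"|alpha| = {math.sqrt(x_star):.4f}, nbar = {mean_photon_number(x_star, 0):.4f}")
```

The root lands at |α| ≈ 1.538 with n̄ ≈ 2.32, and at that point tan(|α|²) + tanh(|α|²) vanishes to numerical precision.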

3.2. Translation-symmetric codes: GKP codes

Another class of bosonic codes are translation-symmetric, with the GKP codes [49] being a prominent example. The simplest variant of the GKP codes is the square lattice GKP code, which encodes a logical qubit in the phase space of a harmonic oscillator stabilized by two commuting displacement operators:

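$$\exp\left[\mathrm{i}2\sqrt{\pi}\,\hat{q}\right] \quad\text{and}\quad \exp\left[-\mathrm{i}2\sqrt{\pi}\,\hat{p}\right], \tag{12}$$

where $\hat{q} \equiv (\hat{a} + \hat{a}^{\dagger})/\sqrt{2}$ and $\hat{p} \equiv \mathrm{i}(\hat{a}^{\dagger} - \hat{a})/\sqrt{2}$ are the position and momentum operators, respectively, with $[\hat{q}, \hat{p}] = \mathrm{i}$.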

A key motivation for choosing these stabilizers is to circumvent the Heisenberg uncertainty principle, which dictates that the position and momentum operators cannot be measured simultaneously as they do not commute. Since the two displacement operators in equation (12) commute with each other, we can measure them simultaneously. Measuring the displacement operators $\exp\left[\mathrm{i}2\sqrt{\pi}\hat{q}\right]$ and $\exp\left[-\mathrm{i}2\sqrt{\pi}\hat{p}\right]$ is equivalent to measuring their phases $2\sqrt{\pi}\hat{q}$ and $-2\sqrt{\pi}\hat{p}$ modulo 2π, which is in turn the same as simultaneously measuring $\hat{q}$ and $\hat{p}$ modulo $\sqrt{\pi}$. Therefore, the square lattice GKP code is, by design, capable of addressing two non-commuting quadrature operators by simultaneously measuring them within a unit cell of a square lattice. The uncertainty now lies in the fact that we do not know which unit cell the state is in.
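Why the two displacement operators commute, even though $\hat{q}$ and $\hat{p}$ do not, follows from a short calculation. Assuming the convention $[\hat{q}, \hat{p}] = \mathrm{i}$ and using the identity $e^{\hat{A}}e^{\hat{B}} = e^{\hat{B}}e^{\hat{A}}e^{[\hat{A}, \hat{B}]}$, which holds whenever $[\hat{A}, \hat{B}]$ is a c-number:

$$
\begin{aligned}
\hat{A} &= \mathrm{i}2\sqrt{\pi}\,\hat{q}, \qquad \hat{B} = -\mathrm{i}2\sqrt{\pi}\,\hat{p},\\
[\hat{A}, \hat{B}] &= (\mathrm{i}2\sqrt{\pi})(-\mathrm{i}2\sqrt{\pi})\,[\hat{q}, \hat{p}] = 4\pi\,\mathrm{i},\\
e^{\hat{A}}e^{\hat{B}} &= e^{\hat{B}}e^{\hat{A}}\,e^{\mathrm{i}4\pi} = e^{\hat{B}}e^{\hat{A}}.
\end{aligned}
$$

More generally, $\exp[\mathrm{i}a\hat{q}]$ and $\exp[-\mathrm{i}b\hat{p}]$ commute whenever $ab$ is an integer multiple of $2\pi$; the choice $a = b = 2\sqrt{\pi}$ gives $ab = 4\pi$.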

The two logical states of the square lattice GKP code are explicitly given by

$$|0_L\rangle = \sum_{n\in\mathbb{Z}} |\hat{q} = 2n\sqrt{\pi}\rangle, \qquad |1_L\rangle = \sum_{n\in\mathbb{Z}} |\hat{q} = (2n+1)\sqrt{\pi}\rangle, \tag{13}$$

and satisfy $\hat{q} = \hat{p} = 0$ modulo $\sqrt{\pi}$, thus clearly illustrating that a periodic simultaneous quadrature measurement is indeed possible if the spacing is chosen appropriately. Additionally, the code states are invariant under discrete translations of length $2\sqrt{\pi}$ in both the position and momentum directions, which makes the code symmetric under translations.

Conceptually, ideal GKP code states consist of infinitely many infinitely squeezed states where each component is described by a Dirac delta function. However, precisely implementing these ideal states is not feasible in realistic quantum systems. In practice, only approximate GKP states can be realized where each position or momentum eigenstate is replaced by a finitely squeezed state and large position and momentum components are suppressed by a Gaussian envelope [49–51]. Many proposals to realize approximate GKP states in various physical platforms [49, 50, 52–65] and ways to simulate approximate states efficiently [66–71] have been explored in the field. The quality of such states can be characterized by the degree of squeezing in both the position and momentum quadratures. As the squeezing, and hence the average photon number, increases, the approximate state will converge toward an ideal code state. Recently, approximate states of squeezing 5.5–9.5 dB have been realized in trapped ion [72, 73] and cQED [74] systems. The cQED realization is discussed further in section 5.
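To put the quoted squeezing levels on the same scale as the lattice, a small conversion sketch may help. It assumes the common convention that squeezing in dB is −10 log₁₀ of the quadrature variance relative to the vacuum variance (1/2 for [q̂, p̂] = i); conventions differ between papers, so the numbers are indicative only. For comparison, the largest displacement a square-lattice GKP code can unambiguously correct is √π/2 ≈ 0.886 in these units. The function and constant names are hypothetical.

```python
import math

VACUUM_VARIANCE = 0.5  # quadrature variance of the vacuum for [q, p] = i (assumed convention)


def squeezed_std(squeezing_db):
    """Quadrature standard deviation for a squeezing level given in dB below vacuum."""
    return math.sqrt(VACUUM_VARIANCE * 10 ** (-squeezing_db / 10))


max_correctable_shift = math.sqrt(math.pi) / 2
for db in (5.5, 9.5):
    sigma = squeezed_std(db)
    print(f"{db} dB: sigma = {sigma:.3f} "
          f"({max_correctable_shift / sigma:.1f} sigma to the sqrt(pi)/2 boundary)")
```

This is only a rough comparison: the width of each peak in an approximate GKP state is not itself a random shift error, but it sets the scale on which finite squeezing blurs the √π-spaced lattice.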

With a discrete translation symmetry, GKP codes are naturally robust against random displacement errors as long as the size of the displacement is small compared to the separation between distinct logical states. For instance, the square lattice GKP code is robust against any displacement of size less than $\sqrt{\pi}/2$, as such displacements can be identified via the quadrature measurements modulo $\sqrt{\pi}$ and then countered accordingly. For moderately squeezed approximate GKP states that contain a small number of photons, photon loss errors can be decomposed into small shift errors and can therefore be effectively addressed by the code [49, 50]. On the other hand, for large approximate GKP states that are highly squeezed, even a tiny fraction of photon loss results in large shift errors which cannot be corrected by the code. Thus, naively using the standard GKP error correction protocol to decode does not work for large GKP states under photon loss errors. Nevertheless, studies have observed that if an optimal decoding scheme is adopted, excellent performance against photon loss errors can be achieved even with large GKP states [46, 75]. This improvement happens because photon loss errors can be converted into random displacement errors via amplification. This implies that for highly squeezed large GKP codes, a suitable decoding strategy is to first amplify the contracted states and then correct the resulting random shift errors by measuring the quadrature operators modulo $\sqrt{\pi}$ [76]. We note that at present, no analogous techniques are known for dephasing errors. Thus, highly squeezed GKP codes are not robust against dephasing errors, since even a small random rotation by an angle θ displaces a peak at distance r from the origin by roughly rθ, which results in large shift errors for the far-out peaks of a large state [50].
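
The amplification argument can be checked with a short calculation. The sketch below assumes the convention in which the vacuum quadrature variance is 1/2 and uses the standard Gaussian-channel result that a pure-loss channel of transmissivity η followed by a quantum-limited amplifier of gain 1/η acts as a random displacement with per-quadrature variance (1 − η)/η. It then estimates how often an idealized square-lattice GKP decoder (ideal code states, perfect measurement modulo $\sqrt{\pi}$) misidentifies such a shift. This is an illustration only, not the optimal decoder of the cited works, and the function names are hypothetical.

```python
import math

SQRT_PI = math.sqrt(math.pi)

def shift_std_after_amplification(eta):
    """Per-quadrature std of the Gaussian random displacement produced by a pure-loss
    channel (transmissivity eta) followed by a quantum-limited amplifier (gain 1/eta).
    The variance is (1 - eta) / eta in units where the vacuum variance is 1/2."""
    return math.sqrt((1.0 - eta) / eta)

def gaussian_cdf(x, sigma):
    """CDF of a zero-mean Gaussian with standard deviation sigma."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))

def square_gkp_flip_probability(sigma, k_max=10):
    """Probability that an ideal square-lattice GKP qubit hit by a Gaussian shift of
    std sigma (one quadrature) is decoded to the wrong logical value.

    The decoder measures the quadrature modulo sqrt(pi) and undoes the smallest
    consistent shift, so a shift z flips the logical value whenever it falls in
    [(2k + 1/2) sqrt(pi), (2k + 3/2) sqrt(pi)) for some integer k."""
    p = 0.0
    for k in range(-k_max, k_max + 1):
        lo = (2 * k + 0.5) * SQRT_PI
        hi = (2 * k + 1.5) * SQRT_PI
        p += gaussian_cdf(hi, sigma) - gaussian_cdf(lo, sigma)
    return p

for eta in (0.99, 0.95, 0.9, 0.8):
    sigma = shift_std_after_amplification(eta)
    print(f"eta={eta}: sigma={sigma:.3f}, "
          f"flip probability={square_gkp_flip_probability(sigma):.2e}")
```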

While the preparation of GKP states is challenging, implementing logical operations on GKP states is relatively straightforward. Any logical Pauli or Clifford operation on GKP states can be realized by a displacement or Gaussian operation (via a linear drive or a bilinear coupling). Moreover, magic states [77] encoded in the GKP code, which are necessary for implementing non-Clifford operations, can be prepared with only Gaussian operations and GKP states [50, 78]. Thus, the preparation of code words is the only required non-Gaussian operation for performing universal quantum computation with the GKP code. Non-Clifford operations can be directly enacted on GKP qubits [49], although the cubic phase gate suggested in the original proposal has been recently shown to perform poorly for this purpose [79].
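
As a worked example of these Gaussian logical gates, assume $\hat{q} = (\hat{a}+\hat{a}^\dagger)/\sqrt{2}$, $[\hat{q},\hat{p}] = i$, and square-lattice code words $|\mu_L\rangle \propto \sum_n |\hat{q} = (2n+\mu)\sqrt{\pi}\rangle$. Under these conventions (sign and labeling choices vary across the literature), one standard choice of logical Pauli and Clifford generators, each checked on the code words, is:

$$
\begin{aligned}
\bar{X} &= e^{-i\sqrt{\pi}\,\hat{p}}: & |\hat{q}=(2n+\mu)\sqrt{\pi}\rangle &\mapsto |\hat{q}=(2n+\mu+1)\sqrt{\pi}\rangle \;\Rightarrow\; \mu \mapsto \mu\oplus 1,\\
\bar{Z} &= e^{i\sqrt{\pi}\,\hat{q}}: & e^{i\pi(2n+\mu)} &= (-1)^{\mu},\\
\bar{S} &= e^{i\hat{q}^{2}/2}: & e^{i\pi(2n+\mu)^{2}/2} &= e^{2\pi i(n^{2}+n\mu)}\,e^{i\pi\mu^{2}/2} = i^{\mu},\\
\bar{H} &= e^{i\frac{\pi}{2}\hat{a}^{\dagger}\hat{a}}: & &\text{a } \pi/2 \text{ phase-space rotation exchanging the } \hat{q} \text{ and } \hat{p} \text{ lattices},\\
\overline{\mathrm{CNOT}}_{1\to 2} &= e^{-i\hat{q}_{1}\hat{p}_{2}}: & \hat{q}_{2} &\mapsto \hat{q}_{2}+\hat{q}_{1} \;\Rightarrow\; \mu_{2}\mapsto\mu_{2}\oplus\mu_{1}.
\end{aligned}
$$

The S-gate line uses $(2n+\mu)^2 = 4n^2 + 4n\mu + \mu^2$ and $\mu^2 = \mu$ for $\mu\in\{0,1\}$. Because the square lattice is symmetric under reflection, the π/2 rotation maps $|0_L\rangle$ and $|1_L\rangle$ onto $|+_L\rangle$ and $|-_L\rangle$ regardless of the rotation direction, and the CNOT shift by $\hat{q}_1 = (2n+\mu_1)\sqrt{\pi}$ changes $\mu_2$ only modulo a stabilizer displacement.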

Furthermore, GKP states can be defined over lattices other than the square lattice. For instance, hexagonal GKP codes can correct any shift errors of size less than $\left(2/\sqrt{3}\right)^{1/2}\sqrt{\pi}/2 \approx 1.075\times\sqrt{\pi}/2$, which is larger than the size of shifts that are correctable by the square lattice GKP code [49, 75]. A recent work has also explored the rectangular GKP code [80]. More generally, a multi-mode GKP code can be defined over any symplectic lattice [81]. Lastly, the GKP code may also be used for building robust quantum repeaters for long-distance quantum communication [82, 83].
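
The correctable shift sizes for the square and hexagonal lattices can be reproduced with a short geometric calculation. The sketch assumes, as is standard for single-mode GKP qubits, that the logical Pauli displacements generate a lattice with unit-cell area π, and that a shift is guaranteed to be corrected when it is shorter than half of the shortest nonzero vector of that lattice. The function names are hypothetical.

```python
import itertools
import math

def shortest_vector_length(b1, b2, n_range=3):
    """Length of the shortest nonzero lattice vector m1*b1 + m2*b2 (brute force over small m)."""
    best = math.inf
    for m1, m2 in itertools.product(range(-n_range, n_range + 1), repeat=2):
        if (m1, m2) == (0, 0):
            continue
        x = m1 * b1[0] + m2 * b2[0]
        y = m1 * b1[1] + m2 * b2[1]
        best = min(best, math.hypot(x, y))
    return best

def correctable_radius(b1, b2):
    """Largest shift guaranteed to be decoded correctly: half the shortest nonzero
    vector of the lattice generated by the logical Pauli displacements."""
    return 0.5 * shortest_vector_length(b1, b2)

# Square lattice: logical shifts of length sqrt(pi) along q and p (cell area pi).
s = math.sqrt(math.pi)
square = correctable_radius((s, 0.0), (0.0, s))

# Hexagonal lattice with the same cell area: side a with (sqrt(3)/2) a^2 = pi.
a = math.sqrt(2 * math.pi / math.sqrt(3))
hexagonal = correctable_radius((a, 0.0), (a / 2, a * math.sqrt(3) / 2))

print(f"square: {square:.4f}, hexagonal: {hexagonal:.4f}, ratio: {hexagonal / square:.4f}")
```

For equal cell area, the hexagonal lattice packs its points most evenly, which is why its shortest vector, and hence its guaranteed correctable radius, is larger.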

3.3. Biased-noise bosonic qubits: two-component cat codes

Recently, there has been growing interest in biased-noise bosonic qubits, where a quantum system is engineered to have one type of error occur with a much higher probability than other types of errors. This noise bias can simplify the next layer of error correction [84]. For instance, we can design a biased-noise code that suppresses bit-flips and then correct the dominant phase-flip errors by using repetition codes [85, 86]. Alternatively, we can also tailor the surface code to leverage the advantages of biased-noise models to increase fault-tolerance thresholds and reduce resource overheads [87–90].
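
To illustrate why having a single dominant error type simplifies the next layer, the following sketch computes the failure probability of a distance-d repetition code against phase flips with majority-vote decoding. It assumes independent phase flips with probability p per qubit, no residual bit flips, and perfect syndrome extraction, which is an idealization; `repetition_logical_error` is a hypothetical helper.

```python
from math import comb

def repetition_logical_error(p, d):
    """Probability that majority voting over a distance-d repetition code fails,
    assuming independent flips with probability p on each of the d qubits (d odd)."""
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))

for d in (1, 3, 5, 7):
    print(d, repetition_logical_error(0.05, d))
```

For p below 1/2 the logical error probability falls as d grows, e.g. from 0.1 at d = 1 to 0.028 at d = 3 for p = 0.1.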

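A promising candidate for biased-noise bosonic qubits is the two-component cat code [91–95], or two-cat code, whose logical code words are given by:

$$|\pm_L\rangle = \frac{1}{\sqrt{2\left(1\pm e^{-2|\alpha|^2}\right)}}\left(|\alpha\rangle \pm |-\alpha\rangle\right), \qquad |0_L\rangle, |1_L\rangle = \frac{1}{\sqrt{2}}\left(|+_L\rangle \pm |-_L\rangle\right) \approx |\pm\alpha\rangle.$$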

If α is large enough, two-cat codes are capable of correcting dephasing errors. One way to implement two-cat codes is to stabilize them autonomously via an engineered dissipation of the form ${\kappa}_{2}\mathcal{D}\left[{\hat{a}}^{2}-{\alpha}^{2}\right]$ [44]. This engineered two-photon dissipation can exponentially suppress the logical bit-flip errors caused in the code states by dephasing in superconducting cavities, ${\kappa}_{\phi}\mathcal{D}\left[{\hat{a}}^{\dagger}\hat{a}\right]$: the logical bit-flip rate decreases exponentially with the cat size as long as the two-photon dissipation is strong compared with the dephasing. Here, |α|² is the size of the cat code, κ2 is the two-photon dissipation rate, and κϕ is the dephasing rate.
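
A quick numerical check shows why this dissipator stabilizes the cat manifold: any state annihilated by the jump operator L = â² − α² is a fixed point of D[L], and both |α⟩ and |−α⟩ (and hence every superposition of them) are annihilated. The sketch below works in a truncated Fock space with a real α = 2 and a 40-level cutoff, both arbitrary choices; it checks the dark-state condition only and does not simulate the full master equation.

```python
import numpy as np

def annihilation(dim):
    """Truncated annihilation operator: a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1)

def coherent(alpha, dim):
    """Fock amplitudes of |alpha>, built recursively to avoid large factorials."""
    c = np.zeros(dim, dtype=complex)
    c[0] = np.exp(-abs(alpha) ** 2 / 2)
    for n in range(1, dim):
        c[n] = c[n - 1] * alpha / np.sqrt(n)
    return c

dim, alpha = 40, 2.0
a = annihilation(dim)
jump = a @ a - alpha**2 * np.eye(dim)   # L = a^2 - alpha^2 in D[L]

# L|psi> = 0 implies D[L](|psi><psi|) = 0: such states are left untouched.
for label, psi in (("|+alpha>", coherent(alpha, dim)), ("|-alpha>", coherent(-alpha, dim))):
    print(label, np.linalg.norm(jump @ psi))   # zero up to truncation error

# A state outside the code space, such as vacuum, is not a dark state.
print("|0>", np.linalg.norm(jump @ np.eye(dim)[0]))
```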

However, the dominant error source in superconducting cavities is photon loss. While dephasing of the cavity does not change the photon number parity, a single photon loss directly flips the photon number parity of the state. In other words, a single photon loss maps the even-parity state |+L⟩ to the odd-parity state |−L⟩, thus causing a phase-flip error. As such, phase-flip errors due to single photon loss cannot be mitigated by the two-cat code. Nevertheless, bit-flip errors due to single photon loss can be suppressed exponentially in the mean photon number |α|², provided that the two-photon dissipation is strong enough. Once bit-flip errors are countered, the next layer of QEC only needs to tackle phase-flip errors, which arise from photon loss and can also be induced by spurious transitions of the ancilla states. In particular, in the stabilized two-cat codes, thermal excitation of the ancilla during idle times can result in a significant rotation of the cavity state and complete dephasing of the encoded qubit. Currently, such events cannot be corrected by the code and are observed to be a limiting factor for the logical lifetime of the encoded qubit [39].
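
The parity-flip argument can be verified directly in the Fock basis. This sketch assumes two-cat code words |±L⟩ ∝ |α⟩ ± |−α⟩, uses a truncated Fock space (α = 2 and 40 levels, arbitrary choices), and applies a single annihilation operator to represent one photon-loss event.

```python
import numpy as np

def annihilation(dim):
    """Truncated annihilation operator: a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1)

def coherent(alpha, dim):
    """Fock amplitudes of |alpha>, built recursively to avoid large factorials."""
    c = np.zeros(dim, dtype=complex)
    c[0] = np.exp(-abs(alpha) ** 2 / 2)
    for n in range(1, dim):
        c[n] = c[n - 1] * alpha / np.sqrt(n)
    return c

def cat(alpha, sign, dim):
    """Normalized two-cat code word |alpha> + sign * |-alpha>."""
    psi = coherent(alpha, dim) + sign * coherent(-alpha, dim)
    return psi / np.linalg.norm(psi)

dim, alpha = 40, 2.0
a = annihilation(dim)
parity = np.diag((-1.0) ** np.arange(dim))   # photon number parity operator
plus_L, minus_L = cat(alpha, +1, dim), cat(alpha, -1, dim)

lost = a @ plus_L                 # one photon-loss event
lost /= np.linalg.norm(lost)      # renormalize after the jump

print("parity of |+_L>:", np.vdot(plus_L, parity @ plus_L).real)
print("parity after one loss:", np.vdot(lost, parity @ lost).real)
print("overlap with |-_L>:", abs(np.vdot(minus_L, lost)))
```

Since â(|α⟩ + |−α⟩) = α(|α⟩ − |−α⟩), the post-loss state coincides with |−L⟩, which is exactly the phase flip described above.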

Biased-noise two-cat qubits can also be realized by using an engineered Hamiltonian $\hat{H} = -K\left(\hat{a}^{\dagger 2}-\alpha^{2}\right)\left(\hat{a}^{2}-\alpha^{2}\right)$, where K is the Kerr non-linearity, which has two coherent states |±α⟩ as degenerate eigenstates [96–98]. A crucial general consideration for these biased-noise bosonic codes is that the asymmetry in the noise must be preserved during the implementation of gates. The theoretical framework to achieve a universal gate set on the two-cat codes in a bias-preserving manner has been proposed recently [85, 98].
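
One common way to obtain such a Hamiltonian is a Kerr oscillator with a two-photon (squeezing) drive of strength ε2. The sketch below, assuming a real α, K = 1 in arbitrary units, and a 30-level truncation (all hypothetical choices), checks numerically that −Kâ†²â² + ε2(â†² + â²) with ε2 = Kα² equals the factored form −K(â†² − α²)(â² − α²) up to the constant Kα⁴, and that |α⟩ and |−α⟩ have the same energy.

```python
import numpy as np

def annihilation(dim):
    """Truncated annihilation operator: a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1)

def coherent(alpha, dim):
    """Fock amplitudes of |alpha>, built recursively to avoid large factorials."""
    c = np.zeros(dim, dtype=complex)
    c[0] = np.exp(-abs(alpha) ** 2 / 2)
    for n in range(1, dim):
        c[n] = c[n - 1] * alpha / np.sqrt(n)
    return c

dim, K, alpha = 30, 1.0, 1.5     # hypothetical values; alpha is taken real
a = annihilation(dim)
ad = a.T                          # a is real, so its adjoint is the transpose
eps2 = K * alpha**2               # two-photon drive strength that sets the cat size

# Kerr oscillator plus two-photon drive
H_drive = -K * ad @ ad @ a @ a + eps2 * (ad @ ad + a @ a)

# Factored form -K (a†^2 - alpha^2)(a^2 - alpha^2)
A = a @ a - alpha**2 * np.eye(dim)
H_factored = -K * A.T @ A

# The two agree up to the constant K alpha^4
print(np.allclose(H_drive, H_factored + K * alpha**4 * np.eye(dim)))

# |alpha> and |-alpha> are annihilated by A, so both have energy K alpha^4 under H_drive
for sign in (+1, -1):
    psi = coherent(sign * alpha, dim)
    print(sign, np.vdot(psi, H_drive @ psi).real)
```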

3.4. Comparison of various bosonic codes for loss and dephasing errors

In summary, rotation-symmetric codes (e.g., four-cat codes and binomial codes) are robust against both photon loss and dephasing errors. Translation-symmetric codes such as the GKP codes can be made highly robust against photon loss errors but are susceptible to dephasing errors. Thus, translation-symmetric codes are suited for loss-dominated systems. In contrast, two-cat codes can correct dephasing errors well if they are stabilized by an engineered two-photon dissipation, but are not capable of correcting photon loss errors. Hence, two-cat codes are naturally suited for dephasing-dominated systems. However, as discussed in section 3.3, two-cat codes can also be useful in the loss-dominated regime, as their large noise bias can simplify any higher-level error correction schemes. In figure 2(e), we provide a qualitative schematic that represents different regimes of photon loss and dephasing where each code is designed to perform well. Note that if the loss and dephasing error probabilities are too high, the quantum capacity [99, 100] of the corresponding quantum channel will vanish. When this happens, encoding logical quantum information in a reliable way becomes impossible even with an optimal QEC code [101–105].
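
For the pure-loss part of this picture there is a simple closed form: without an energy constraint, the quantum capacity of a bosonic pure-loss channel with transmissivity η is max{0, log2[η/(1 − η)]}, which vanishes once the loss probability 1 − η reaches 1/2. The sketch below covers pure loss only; no closed form is assumed here for combined loss and dephasing, and the helper name is hypothetical.

```python
import math

def pure_loss_quantum_capacity(eta):
    """Quantum capacity (qubits per channel use) of the bosonic pure-loss channel
    with transmissivity eta and no energy constraint: max(0, log2(eta / (1 - eta)))."""
    if eta <= 0.5:
        return 0.0
    if eta >= 1.0:
        return math.inf          # a lossless channel has unbounded capacity
    return math.log2(eta / (1.0 - eta))

for loss_probability in (0.01, 0.1, 0.3, 0.5, 0.6):
    eta = 1.0 - loss_probability
    print(f"loss {loss_probability:.2f}: Q = {pure_loss_quantum_capacity(eta):.3f}")
```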

While photon loss is the dominant error channel in typical cQED implementations, photon gain events also occur, although at a much lower rate. In general, codes robust against photon loss errors tend to be robust against photon gain as well. For rotation-symmetric codes, parity (or super-parity) measurements can detect both photon loss and photon gain events. In the case of translation-symmetric GKP codes, photon gain can be converted into a random shift error by applying a suitable photon loss channel and hence be monitored with the modular quadrature measurement. On the other hand, just as two-cat codes are not robust against photon loss, they are also susceptible to photon gain, which likewise results in phase-flip errors. Nevertheless, in the presence of engineered dissipation, the stabilized two-cat codes can still preserve their noise bias under both photon loss and gain errors.
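
The conversion of photon gain into a random shift can be seen from how Gaussian channels act on a quadrature covariance matrix Σ. Assuming the convention in which the vacuum has Σ = 𝟙/2, and modeling the gain as a quantum-limited amplifier with gain G, following it with pure loss of transmissivity η = 1/G gives:

$$
\begin{aligned}
\text{amplifier (gain } G\text{):}\quad & \Sigma \mapsto G\,\Sigma + \tfrac{G-1}{2}\,\mathbb{1},\\
\text{pure loss (transmissivity } \eta = 1/G\text{):}\quad & \Sigma \mapsto \tfrac{1}{G}\,\Sigma + \tfrac{1}{2}\left(1-\tfrac{1}{G}\right)\mathbb{1},\\
\text{composition:}\quad & \Sigma \mapsto \Sigma + \tfrac{G-1}{2G}\,\mathbb{1} + \tfrac{G-1}{2G}\,\mathbb{1} = \Sigma + \tfrac{G-1}{G}\,\mathbb{1}.
\end{aligned}
$$

The quadrature means are rescaled by $\sqrt{G}$ and then by $1/\sqrt{G}$, so they are unchanged, and the net channel adds isotropic Gaussian noise of variance (G − 1)/G per quadrature, i.e., a random displacement channel of the kind the modular quadrature measurement is designed to handle.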

Until now, we have focused only on the intrinsic error-correcting capability of various bosonic codes without considering practical imperfections. In the following sections, we will discuss the implementation of bosonic QEC in cQED systems and examine errors that occur in practical situations.

4. The cQED hardware for bosonic codes

In the preceding sections, we have discussed the concepts and merits of various bosonic QEC codes. These ideas are brought to reality by developing robust quantum hardware with both coherent harmonic modes for encoding information and non-linear ancillae for effective control and tomography of the encoded information. Such systems have been realized with the motional degree of freedom in trapped ions [72, 73], electromagnetic fields of microwave [106] and optical cavities [107], Rydberg atom arrays [108], and flying photons [109], and there are proposals that use other physical systems [110, 111] as well. Among the various platforms, the cQED architecture, consisting of superconducting microwave cavities and Josephson junction-based non-linear ancillae, has enabled many prominent experimental milestones toward realizing QEC using bosonic codes. In this section, we will introduce the key hardware building blocks necessary for the successful realization of bosonic codes in cQED.

4.1. Components of the cQED architecture

cQED explores light–matter interactions by confining quantized electromagnetic fields in precisely engineered compositions of superconducting inductors and capacitors [15]. These superconducting circuit elements can be tailor-made by conventional fabrication techniques [112] and controlled by commercially available microwave electronics and dilution refrigerators [113] to access strong coupling regimes [114–116]. These characteristics make cQED a compelling platform for universal quantum computation, as noted by several review articles [14, 117–123].

Quantum devices in the cQED framework are typically built by coupling two components, a linear oscillator mode and an anharmonic mode, in different configurations [15]. The anharmonic modes are discrete few-level systems that can be implemented using Josephson junctions. They can be designed to interact with one or more harmonic modes, akin to atoms in an optical field in the cavity QED framework [124]. Unlike naturally occurring atoms, the parameters of these anharmonic oscillators, or artificial atoms, can be precisely engineered. For instance, they may be made to have a fixed resonance frequency in devices with a single Josephson junction or have tunable frequencies by integrating multiple junctions in the presence of an external magnetic flux. The lowest two or three energy levels of these artificial atoms, typically in the form of transmons [125, 126], can be used to effectively encode and process quantum information, as demonstrated by successful realizations of various NISQ-era [127] processors [128–134].
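
To see how a Josephson junction produces discrete, anharmonic levels, the following sketch diagonalizes the standard transmon Hamiltonian H = 4E_C(n̂ − n_g)² − E_J cos φ̂ in the charge basis. The ratio E_J/E_C = 50 and the charge cutoff are hypothetical illustrative choices; in this regime the lowest transition sits near √(8E_J E_C) − E_C and the anharmonicity is close to −E_C, so the lowest levels can be addressed individually.

```python
import numpy as np

def transmon_levels(EJ, EC, ng=0.0, n_cut=30, n_levels=4):
    """Lowest eigenenergies of H = 4 EC (n - ng)^2 - EJ cos(phi) in the charge basis.

    In this basis cos(phi) couples neighboring charge states n and n+1 with amplitude 1/2.
    """
    n = np.arange(-n_cut, n_cut + 1)
    H = np.diag(4.0 * EC * (n - ng) ** 2)
    hop = -0.5 * EJ * np.ones(len(n) - 1)
    H = H + np.diag(hop, k=1) + np.diag(hop, k=-1)
    return np.linalg.eigvalsh(H)[:n_levels]

EC, EJ = 1.0, 50.0    # hypothetical; energies in units of EC
E = transmon_levels(EJ, EC)
f01, f12 = E[1] - E[0], E[2] - E[1]
print(f"E01 = {f01:.3f} EC, anharmonicity = {f12 - f01:.3f} EC, "
      f"sqrt(8 EJ EC) - EC = {np.sqrt(8 * EJ * EC) - EC:.3f} EC")
```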

Linear oscillators are typically realized in cQED by superconducting microwave cavities. Commonly used architectures include coplanar waveguide (CPW) [114], three-dimensional (3D) rectangular [135] and cylindrical co-axial [136], and micromachined [137] cavities. These quantum harmonic oscillators have equally spaced energy levels, so all transitions between neighboring levels are degenerate in frequency. Therefore, to selectively address individual transitions, we must introduce non-linearity into the system. In cQED, non-linearities are introduced by coupling superconducting cavities to artificial atoms in either the resonant or dispersive regime.

Under resonant coupling, the transition frequencies of the artificial atom and the cavity coincide, which allows the direct exchange of energy from one mode to the other. In this configuration, cavities can act as an on-demand single photon source [138] or a quantum bus that mediates operations between two isolated artificial atoms by sequentially interacting with each of them [139–141]. Dispersive coupling is achieved by detuning the frequencies of the cavity, ωa , and the artificial atom, ωb , such that the detuning is much larger than the direct interaction strength between them. In this regime, there is no resonant energy exchange between the modes. Instead, the coupling translates into a state-dependent frequency shift, which can be described by the following Hamiltonian:

$$\frac{\hat{H}}{\hbar} = \omega_a\hat{a}^{\dagger}\hat{a} + \omega_b\hat{b}^{\dagger}\hat{b} - \chi_{ab}\,\hat{a}^{\dagger}\hat{a}\,\hat{b}^{\dagger}\hat{b} - \frac{\alpha}{2}\hat{b}^{\dagger 2}\hat{b}^{2} - \frac{K}{2}\hat{a}^{\dagger 2}\hat{a}^{2}, \tag{16}$$

where $\hat{a}$ and $\hat{b}$ are the annihilation operators associated with the cavity and the artificial atom, respectively, χab is the dispersive coupling strength between them, α is the anharmonicity of the artificial atom, and K is the non-linearity of the cavity inherited from the atom. Equation (16) further illustrates that the coupling between the two modes is symmetric. In other words, the frequency of the cavity shifts conditioned on the state of the artificial atom, and vice versa.
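
Because the dispersive Hamiltonian is diagonal in the joint Fock basis, the conditional frequency shifts can be read off from its eigenenergies. The sketch below assumes Eq. (16) has the standard form with eigenenergies ω_a n + ω_b m − χ_ab n m − (α/2) m(m − 1) − (K/2) n(n − 1) for n cavity photons and m ancilla excitations; all parameter values are hypothetical. It shows that the cavity frequency shifts by χ_ab when the ancilla is excited and the ancilla frequency shifts by χ_ab per cavity photon, plus a further cavity shift of K per photon from the inherited Kerr term.

```python
# Hypothetical parameters (angular frequencies in units of 2*pi*MHz).
omega_a, omega_b = 5000.0, 6000.0   # cavity, ancilla
chi_ab, alpha_anh, K = 1.0, 200.0, 0.005

def energy(n, m):
    """Eigenenergy of the assumed dispersive Hamiltonian for n cavity photons and
    m ancilla excitations (the Hamiltonian is diagonal in the joint Fock basis)."""
    return (omega_a * n + omega_b * m - chi_ab * n * m
            - 0.5 * alpha_anh * m * (m - 1) - 0.5 * K * n * (n - 1))

def cavity_transition(n, m):
    """Frequency of the cavity transition |n> -> |n+1> with the ancilla in level m."""
    return energy(n + 1, m) - energy(n, m)

def ancilla_transition(n):
    """Frequency of the ancilla transition |g> -> |e> with n photons in the cavity."""
    return energy(n, 1) - energy(n, 0)

print("cavity shift when ancilla is excited:", cavity_transition(0, 0) - cavity_transition(0, 1))
print("ancilla shift per cavity photon:", ancilla_transition(0) - ancilla_transition(1))
print("ancilla lines for n = 0..3:", [ancilla_transition(n) for n in range(4)])
```

When χ_ab exceeds the relevant linewidths, the ancilla lines for different photon numbers are resolved, which is what enables the number-selective operations discussed in section 5.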

Superconducting cavities dispersively coupled to an artificial atom constitute a highly versatile tool that can be employed to fulfill many different roles for quantum information processing. For instance, cavities that are strongly coupled to a transmission line are useful for the efficient readout of the quantum state of the artificial atom [114, 142, 143]. Conversely, cavities weakly coupled to the environment can be used as quantum memories for storing information coherently [136]. Moreover, when the linewidth of the cavity is narrow compared to the dispersive shift χab , we can selectively address the individual energy levels of the cavity via the artificial atom [115]. In this case, the artificial atoms act as non-linear ancillae whose role is to enable conditional operations and perform efficient tomography of the cavity state [144]. This configuration where multi-photon states of the cavity encode logical information and the ancilla affords universal control [145] has become an increasingly prevalent choice for implementing bosonic QEC.

4.2. Coherence of superconducting cavities

Superconducting microwave cavities may be realized in several geometries, each with its own advantages. Cavities are typically constructed in one of two main architectures, two-dimensional (2D) or 3D, classified by the dimensionality of the electric field distribution. A third possibility combines the advantages of both the 2D and 3D designs to realize a compact and highly coherent '2.5D' cavity structure, for instance, using micromachining techniques [137, 146].

In the 2D architecture, such as the CPW, the cavity is defined by gaps between circuit elements printed on a substrate, typically made of silicon or sapphire (figure 3(a)). In such planar structures, the energy is mostly stored in the substrate, surfaces, and interfaces, all of which suffer from losses due to spurious two-level systems in the resonator dielectrics [147–150]. Hence, the Qint of 2D cavities is currently limited to ∼10⁵ to 10⁶ but can potentially be improved with more sophisticated cavity design [151–154], materials selection [155–158], and surface treatment [159–162]. Despite their limited coherence, 2D cavities are widely featured in cQED, especially in NISQ processors, as they have a small footprint and a straightforward fabrication process. Furthermore, in these processors, 2D cavities are typically used as readout or bus modes, which do not require long coherence times.

Figure 3. Designs of superconducting cavities and their coherence. (a)–(c) Illustrations of 2D CPW, 2.5D micromachined, and 3D co-axial cavities respectively. (d) A selected set of Qint for four commonly used cavity designs in cQED extracted from the literature. Overall, the coherence properties of superconducting cavities have been steadily improving over the last decade, with the 3D co-axial cavities currently being the main architecture for realizing bosonic QEC. The references for each cavity design, in chronological order, are: 2D cavities: references [154, 159, 164, 170, 171]; 3D rectangular cavities: references [16, 135, 172, 173]; 3D cylindrical co-axial cavities: references [21, 136, 163, 174]; 2.5D cavities: references [146, 167–169]. We have highlighted studies that successfully integrated one or more non-linear ancillae with an asterisk (*).


In contrast, 3D cavities (figure 3(c)) store energy in the vacuum between the walls of a superconducting box and achieve a higher Qint of ∼10⁷ to 10⁸, at the cost of a much larger footprint than their 2D counterparts. Among 3D designs, 3D co-axial cavities machined out of high-purity aluminum (≃99.999%) have shown a Qint as high as 1.1 × 10⁸ [163]. This is achieved by significantly suppressing the dissipation due to current flowing along the seams of the superconducting walls and imperfections on the inner surfaces of the structure. Additionally, this design is particularly effective as a platform for implementing bosonic codes, because one or more ancillae and readout modes can be conveniently integrated into it [164].

One strategy to take advantage of both the long lifetimes of 3D designs and the small footprint and scalable fabrication of 2D geometries is to construct compact 2.5D cavities [137, 146, 164–168]. In particular, the micromachining technique provides a promising method to fabricate lithographically defined 2.5D cavities etched out of silicon wafers [137]. In this configuration, the energy can be stored primarily in the vacuum but the depth of the cavity is much smaller compared to the other dimensions (figure 3(b)). Here, the key challenge is to realize a high-quality contact between the top wall and the etched region. With carefully optimized indium bump-bonding methods, internal quality factors surpassing 300 million have recently been achieved in the 2.5D architecture [169]. Moreover, the integration of a transmon ancilla in this design has also been demonstrated [146], thus making these 2.5D cavities promising candidates for realizing large-scale quantum devices based on bosonic modes.

Regardless of the architecture, the effective implementation of bosonic QEC schemes requires a careful balance between isolation and coherence on the one hand and the ability to effectively manipulate and characterize the cavity on the other. The various loss channels [175, 176] as well as lossy interfaces [177–179] of superconducting microwave cavities have been extensively studied, and their intrinsic coherence properties have improved significantly over the last 15 years [176]. In figure 3(d), we compile a non-exhaustive summary of the internal quality factors of cavities demonstrated in various geometries over the last decade. Besides enhancing the Qint of the cavities, integrating them with ancillary mode(s) is crucial for realizing bosonic logical qubits. However, note that introducing an ancilla degrades the Qint of the cavity, which inherits part of the ancilla's loss through their coupling; the best ancilla coherence times (∼50–100 μs) are typically about 10–20 times shorter than the lifetimes of state-of-the-art superconducting cavities. Hence, when comparing the performance of the cavities in figure 3(d), we have only included demonstrations that are compatible with being coupled to non-linear ancillae in the cQED architecture. From the figure, the 3D co-axial cavities emerge as the leading design currently used to realize bosonic qubits.
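
To put the quality factors in figure 3(d) on the same footing as the ancilla coherence times quoted above, a quality factor can be converted into an energy decay time via T1 = Q/ω = Q/(2πf). The cavity frequency of 5 GHz below is a hypothetical, typical cQED value, not taken from the cited experiments.

```python
import math

def photon_lifetime(q_int, freq_hz):
    """Energy decay time T1 = Q / omega of a mode with quality factor Q at frequency f."""
    return q_int / (2.0 * math.pi * freq_hz)

FREQ_HZ = 5e9  # hypothetical cavity frequency
for label, q in (("Q = 1e5", 1e5), ("Q = 1e6", 1e6), ("Q = 1e8", 1e8), ("Q = 3e8", 3e8)):
    print(f"{label}: T1 = {photon_lifetime(q, FREQ_HZ) * 1e6:.1f} us")
```

At 5 GHz, Q = 10⁶ corresponds to roughly 32 μs and Q = 10⁸ to roughly 3.2 ms.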

5. Realization of bosonic logical qubits

The remarkable improvements in the performance of cQED hardware components highlighted in section 4 have made realizing protected logical qubits using bosonic codes a realistic goal. Studies that encode a single logical mode and protect it against dominant error channels have been reported for the four-component cat [16], binomial kitten [173], and square and hexagonal GKP [74] codes. Moreover, robust operations on both single [17, 21, 174] and two bosonic modes [19, 20, 180] have also been explored, thus paving the way toward building a fault-tolerant universal quantum computer based on bosonic logical qubits. In this section, we highlight recent efforts to implement universal control of, and error correction protocols for, bosonic modes.

5.1. Operations on single bosonic modes

For processing quantum information encoded in multi-photon states of superconducting cavities, we must be able to perform effective operations on, and characterization of, the cavity states. The only operation available for a standalone, linearly driven cavity mode is a displacement, $\hat{D}(\alpha) = \exp\left(\alpha\hat{a}^{\dagger} - \alpha^{*}\hat{a}\right)$, which displaces the position and/or momentum of the harmonic oscillator depending on the value of the complex number α. Real values of α correspond to pure position displacements, while imaginary values of α correspond to pure momentum ones [181]. Acting on vacuum, displacements can only generate coherent states, without the possibility to selectively address individual photon number states in the cavity. Therefore, non-trivial operations on these bosonic logical qubits are implemented by dispersively coupling the bosonic mode to a non-linear ancilla in combination with simple displacements.
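
The statement about real and imaginary α can be checked numerically. The sketch assumes $\hat{q} = (\hat{a}+\hat{a}^\dagger)/\sqrt{2}$ and $\hat{p} = (\hat{a}-\hat{a}^\dagger)/(i\sqrt{2})$, truncates the Fock space at 40 levels (an arbitrary choice), and builds D(α) through an eigendecomposition of the Hermitian generator i(αâ† − α*â), so only NumPy is needed. Under this convention ⟨q̂⟩ = √2 Re α and ⟨p̂⟩ = √2 Im α.

```python
import numpy as np

def annihilation(dim):
    """Truncated annihilation operator: a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1).astype(complex)

def displacement(alpha, dim):
    """D(alpha) = exp(alpha a† - alpha* a) in a truncated Fock space.

    G = i (alpha a† - alpha* a) is Hermitian, so D = exp(-i G) = V exp(-i w) V†."""
    a = annihilation(dim)
    G = 1j * (alpha * a.conj().T - np.conj(alpha) * a)
    w, V = np.linalg.eigh(G)
    return V @ np.diag(np.exp(-1j * w)) @ V.conj().T

dim = 40
a = annihilation(dim)
q_op = (a + a.conj().T) / np.sqrt(2)          # assumed convention for q
p_op = (a - a.conj().T) / (1j * np.sqrt(2))   # assumed convention for p
vac = np.zeros(dim, dtype=complex)
vac[0] = 1.0

for alpha in (1.0, 1.0j):
    psi = displacement(alpha, dim) @ vac
    q_mean = np.vdot(psi, q_op @ psi).real
    p_mean = np.vdot(psi, p_op @ psi).real
    print(alpha, round(q_mean, 6), round(p_mean, 6))
```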

A key capability enabled by this natural dispersive coupling is a controlled phase shift (CPS). CPS is a unitary operation that imparts a well-defined ancilla state-dependent phase on arbitrary cavity states, and is governed by $\hat{U}_{\mathrm{CPS}}(t) = \exp\left(i\chi t\,\hat{n}\,|e\rangle\langle e|\right)$, where $\hat{n} = \hat{a}^{\dagger}\hat{a}$ is the cavity photon number operator, χ is the dispersive coupling strength, and t is the evolution time. With this unitary, we can efficiently implement conditional phase operations by simply adjusting the evolution time. In particular, when t = π/χ, all the odd photon number states acquire a π phase conditioned on the ancilla being in |e⟩, while the even states acquire none. Sandwiching this evolution between two π/2 rotations of the ancilla therefore maps the photon number parity of the bosonic mode onto the state of the ancilla (figure 4(a)).
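
The parity-mapping sequence can be simulated directly. The sketch assumes the ancilla starts in |g⟩, models CPS as exp(iχt n̂|e⟩⟨e|) (the sign convention does not matter at χt = π), and uses R_y(π/2) rotations for the two ancilla pulses. With these choices even-parity states end in |e⟩, so the probability of finding the ancilla in |e⟩ equals (1 + ⟨P̂⟩)/2, where P̂ is the photon number parity. Helper names are hypothetical.

```python
import numpy as np

def fock(n, dim):
    """Fock state |n> in a dim-level truncation."""
    v = np.zeros(dim, dtype=complex)
    v[n] = 1.0
    return v

def coherent(alpha, dim):
    """Fock amplitudes of |alpha>, built recursively to avoid large factorials."""
    c = np.zeros(dim, dtype=complex)
    c[0] = np.exp(-abs(alpha) ** 2 / 2)
    for n in range(1, dim):
        c[n] = c[n - 1] * alpha / np.sqrt(n)
    return c

def ry(theta):
    """Ancilla rotation about y in the (|g>, |e>) basis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def parity_map_prob_e(cavity_state, chi_t=np.pi):
    """P(ancilla in |e>) after Ry(pi/2), CPS for chi*t, Ry(pi/2), ancilla starting in |g>.

    The joint state is stored as psi[ancilla, n]; CPS multiplies the |e> row by exp(i chi t n)."""
    dim = len(cavity_state)
    psi = np.zeros((2, dim), dtype=complex)
    psi[0] = cavity_state
    psi = ry(np.pi / 2) @ psi
    psi[1] *= np.exp(1j * chi_t * np.arange(dim))
    psi = ry(np.pi / 2) @ psi
    return float(np.sum(np.abs(psi[1]) ** 2))

print(parity_map_prob_e(fock(2, 20)), parity_map_prob_e(fock(3, 20)))
print(parity_map_prob_e(coherent(1.0, 40)), (1 + np.exp(-2.0)) / 2)
```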

Note that the time required for the parity-mapping operation scales inversely with the dispersive coupling strength, χ. Naively, one might want to minimize the operation time by engineering a large χ. However, increasing χ can also result in a stronger inherited non-linearity in the cavity (also known as the Kerr effect), which distorts the encoded information. Therefore, the coupling strength between the cavity and its ancilla, as well as the type of the non-linear ancillary mode, such as the transmon or the SNAIL design [182], are usually carefully optimized for each system to produce the desired Hamiltonian configuration.

Figure 4. Operations on single bosonic modes. (a) The sequence for mapping the photon number parity of a bosonic mode to the states of its ancilla. (b) Creation of arbitrary quantum states via repeated SNAP operations on the bosonic mode. (c) Implementing universal control on a single bosonic mode by concurrently driving the cavity and its ancilla with numerically optimized pulses.


With the CPS operation, we can deterministically create complex bosonic encodings using analytically designed protocols such as the qcMAP operation, which maps an arbitrary qubit state onto a superposition of coherent states in the cavity [183]. This scheme is useful for preparing four-cat states in both single [172] and multiple [184] cavities. Furthermore, the CPS operation is also employed as the parity-mapping operation, which is crucial for the characterization and tomography of the encoded bosonic qubits and of the engineered gates on these qubits.

In general, the quantum states encoded in a cavity can be fully characterized by probing the cavity's quasi-probability distributions, which is commonly achieved by performing Wigner tomography. The Wigner function can be defined as the expectation value of the displaced photon number parity operator, $W\left(\beta\right)=\frac{2}{\pi}\,\mathrm{Tr}\left[\hat{D}{\left(\beta\right)}^{\dagger}\rho\hat{D}\left(\beta\right)\hat{P}\right]$, where $\hat{P} = e^{i\pi\hat{a}^{\dagger}\hat{a}}$ is the photon number parity operator. In cQED, the Wigner functions of arbitrary quantum states can be measured precisely with a well-defined sequence that uses only the cavity displacement, ancilla rotation, and CPS operations [15]. From the results of the Wigner tomography, we can reconstruct the full density matrix and characterize the operation enacted on the cavity states in either the Pauli transfer matrix [185] or the process matrix [24] representation.
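
As a numerical counterpart to this definition, the following sketch evaluates W(β) for a pure cavity state by displacing the state by −β and taking the expectation value of the parity operator, since Tr[D(β)†ρD(β)P̂] = ⟨ψ|D(β)P̂D(β)†|ψ⟩ for ρ = |ψ⟩⟨ψ|. It works in a truncated Fock space (40 levels, arbitrary), builds D(β) by eigendecomposition, and uses hypothetical function names. For vacuum it reproduces W(β) = (2/π)e^{−2|β|²}, and for the single-photon Fock state W(0) = −2/π.

```python
import numpy as np

def annihilation(dim):
    """Truncated annihilation operator: a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1).astype(complex)

def displacement(beta, dim):
    """D(beta) = exp(beta a† - beta* a) via eigendecomposition of the Hermitian generator."""
    a = annihilation(dim)
    G = 1j * (beta * a.conj().T - np.conj(beta) * a)
    w, V = np.linalg.eigh(G)
    return V @ np.diag(np.exp(-1j * w)) @ V.conj().T

def fock(n, dim):
    """Fock state |n> in a dim-level truncation."""
    v = np.zeros(dim, dtype=complex)
    v[n] = 1.0
    return v

def coherent(alpha, dim):
    """Fock amplitudes of |alpha>, built recursively to avoid large factorials."""
    c = np.zeros(dim, dtype=complex)
    c[0] = np.exp(-abs(alpha) ** 2 / 2)
    for n in range(1, dim):
        c[n] = c[n - 1] * alpha / np.sqrt(n)
    return c

def wigner_point(psi, beta):
    """W(beta) = (2/pi) <psi| D(beta) P D(beta)† |psi> for a pure state psi."""
    dim = len(psi)
    phi = displacement(-beta, dim) @ psi      # D(beta)† = D(-beta)
    parity = (-1.0) ** np.arange(dim)
    return float(2 / np.pi * np.sum(parity * np.abs(phi) ** 2))

print(wigner_point(fock(0, 40), 0.0), wigner_point(fock(1, 40), 0.0))
print(wigner_point(coherent(1.0, 40), 1.0), wigner_point(coherent(1.0, 40), 0.0))
```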

Another crucial operation arising from the natural dispersive coupling is the non-linear selective number-dependent arbitrary phase (SNAP) gate [145, 186]. A SNAP gate (figure 4(b)), defined as $\hat{S}_n(\theta_n) = e^{i\theta_n|n\rangle\langle n|}$, selectively imparts a phase θn to the number state |n⟩. Because this operation preserves photon number (it is diagonal in the Fock basis), we can simultaneously perform $\hat{S}(\vec{\theta}) = \prod_n \hat{S}_n(\theta_n) = \sum_n e^{i\theta_n}|n\rangle\langle n|$ on multiple number states. By numerically optimizing the displacements interleaved with the SNAP gates and the phases applied to each photon number state in the cavity, we can effectively cancel out the undesired Fock components via destructive interference to obtain the intended target state.
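
A single SNAP gate acting on a displaced vacuum already illustrates how number-selective phases produce non-classical states. The sketch assumes the phases θ_n = πn²/2, which give phase 1 on even and i on odd Fock components and therefore map a coherent state |α⟩ exactly onto (e^{iπ/4}|α⟩ + e^{−iπ/4}|−α⟩)/√2. The phase choice, α = 2, and the 40-level truncation are illustrative; general target states require several displacement and SNAP layers optimized numerically, as described above.

```python
import numpy as np

def coherent(alpha, dim):
    """Fock amplitudes of |alpha> (= D(alpha)|0>), built recursively."""
    c = np.zeros(dim, dtype=complex)
    c[0] = np.exp(-abs(alpha) ** 2 / 2)
    for n in range(1, dim):
        c[n] = c[n - 1] * alpha / np.sqrt(n)
    return c

def snap(thetas):
    """SNAP gate S(theta) = sum_n exp(i theta_n) |n><n| (diagonal in the Fock basis)."""
    return np.diag(np.exp(1j * np.asarray(thetas)))

dim, alpha = 40, 2.0
n = np.arange(dim)

# One displacement (here the analytic coherent state) followed by one SNAP gate
psi = snap(np.pi * n**2 / 2) @ coherent(alpha, dim)
target = (np.exp(1j * np.pi / 4) * coherent(alpha, dim)
          + np.exp(-1j * np.pi / 4) * coherent(-alpha, dim)) / np.sqrt(2)
print("fidelity with the cat superposition:", abs(np.vdot(target, psi)) ** 2)

# SNAP phases simply add, and theta_n = pi * n is the parity operator (|alpha> -> |-alpha>)
flipped = snap(np.pi * n) @ coherent(alpha, dim)
print("overlap with |-alpha>:", abs(np.vdot(coherent(-alpha, dim), flipped)))
```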

While schemes like qcMAP and SNAP are sufficient to realize universal control on the cavity state, they quickly become impractical for handling more complex bosonic states. For instance, an operation on n photons requires O(n²) gates using the SNAP protocol. To address this challenge, a fully numerical approach using optimal control theory (OCT) has been developed and widely adopted in recent years. The OCT framework provides an efficient general-purpose tool to implement arbitrary operations. In particular, the gradient ascent pulse engineering method [187, 188] has been successfully deployed in other physical systems [189, 190] to implement robust quantum control. By constructing an accurate model of the time-dependent Hamiltonian of the system in the presence of arbitrary control fields, we can apply this technique to cQED systems to realize high-fidelity universal gate sets on any bosonic qubit encoded in cavities, as demonstrated in reference [17]. A typical example of a set of pulses obtained through the gradient-based OCT framework is shown in figure 4(c).
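
A toy version of the gradient-based OCT loop fits in a few lines. The sketch below optimizes piecewise-constant x and y drive amplitudes on a single qubit (not a cavity) to implement an X gate, maximizing the phase-insensitive fidelity |Tr(U_target†U)|²/4. Unlike GRAPE, which uses analytic gradients, it estimates gradients by finite differences for brevity; the number of slices, time step, learning rate, and initial guess are all hypothetical choices.

```python
import numpy as np

SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
I2 = np.eye(2, dtype=complex)

def slice_unitary(ux, uy, dt):
    """exp(-i dt (ux SX + uy SY) / 2) for one piecewise-constant slice (closed form)."""
    r = np.hypot(ux, uy)
    if r == 0.0:
        return I2.copy()
    half = 0.5 * r * dt
    return np.cos(half) * I2 - 1j * np.sin(half) * (ux * SX + uy * SY) / r

def total_unitary(controls, dt):
    """Time-ordered product of the slice unitaries (later slices act on the left)."""
    U = I2.copy()
    for ux, uy in controls:
        U = slice_unitary(ux, uy, dt) @ U
    return U

def gate_fidelity(controls, target, dt):
    """|Tr(target† U)|^2 / d^2 with d = 2, insensitive to the global phase."""
    return abs(np.trace(target.conj().T @ total_unitary(controls, dt))) ** 2 / 4.0

def optimize(target, n_slices=10, dt=0.5, lr=0.5, iters=300, eps=1e-6):
    """Gradient ascent on the fidelity using finite-difference gradients."""
    k = np.arange(n_slices)
    controls = np.stack([0.5 * np.ones(n_slices), 0.1 * np.sin(k)], axis=1)
    for _ in range(iters):
        f0 = gate_fidelity(controls, target, dt)
        grad = np.zeros_like(controls)
        for idx in np.ndindex(controls.shape):
            bumped = controls.copy()
            bumped[idx] += eps
            grad[idx] = (gate_fidelity(bumped, target, dt) - f0) / eps
        controls += lr * grad
    return controls, gate_fidelity(controls, target, dt)

controls, fid = optimize(target=SX)
print(f"final fidelity: {fid:.6f}")
```

In realistic cQED applications, the same loop runs over a model of the coupled cavity-ancilla Hamiltonian with many more control parameters, which is why analytic or automatically differentiated gradients are preferred over finite differences.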

More recently, various concepts from classical machine learning, such as automatic differentiation [191] and reinforcement learning [192, 193], have been applied to enhance the efficiency of the numerical optimization. Crucially, the success of these techniques depends not only on the robustness of the algorithms but also on the choice of boundary conditions. Knowing these boundary conditions requires comprehensive investigations of the physics of the quantum system and of the practical constraints of the control and measurement apparatus.

5.2. Operations on multiple bosonic modes

Apart from robust single-mode operations, universal quantum computation using bosonic qubits also requires at least one entangling gate between two modes. Realizing such an operation can be challenging due to the lack of a natural coupling between cavities. Moreover, the individual coherence of each bosonic qubit must be maintained while maximizing the rate of interactions between them. One promising strategy to tackle this issue is to use the non-linear frequency conversion capability of the Josephson junction to provide a driven coupling between two otherwise isolated cavities [194]. Such operations are activated entirely by external microwave drives, which can be switched on and off on demand without modifying the hardware. This arrangement ensures that the individual bosonic modes remain well isolated during idle times and undergo the engineered interaction only when an operation is enacted.

Using this strategy, a CNOT gate was the first logical gate enacted on two bosonic qubits [195]. This gate is facilitated by a parametrically driven sideband transition between the ancilla and the control mode, together with a carefully chosen conditional phase gate between the ancilla and the target cavity. By achieving a gate fidelity above 98%, this study showcases the potential of such engineered quantum gates between bosonic modes. More recently, a controlled-phase gate has been demonstrated between two binomial logical qubits in reference [20]. Here, the microwave drives are tailored to induce a geometric phase that depends on the joint state of the two bosonic modes. However, both types of operations are tailored to specific code words and do not yet generalize readily to other bosonic encoding schemes.

A code-independent coupling mechanism between two otherwise isolated bosonic modes is a crucial ingredient for realizing universal control of these logical qubits. The isolated cavities must be sufficiently detuned from each other to preserve the coherence of each mode and to avoid undesired cross-talk. Reference [180] demonstrated how the four-wave mixing process in a Josephson junction can provide a frequency-converting bilinear coupling of the form

$$\hat{H}_{\mathrm{BS}} = g(t)\left(e^{i\varphi}\hat{a}^{\dagger}\hat{b} + e^{-i\varphi}\hat{a}\hat{b}^{\dagger}\right).$$

Here, $\hat{a}$ and $\hat{b}$ are the annihilation operators associated with each of the cavities, the time-dependent coefficient g(t) is the coupling strength, and φ is the relative phase between the two microwave drives. The coefficient g(t) depends on the effective amplitudes of the two drives, whose frequencies ω1 and ω2 satisfy the frequency matching condition |ω2 − ω1| = |ωa − ωb|, where ωa and ωb are the cavity frequencies. Most notably, this coupling can be programmed to implement an identity, a 50:50 beamsplitter, or a full SWAP operation between the stationary microwave fields in the cavities simply by adjusting the duration of the evolution, that is, the integrated coupling θ = ∫g(t)dt, with θ = π/4 yielding a 50:50 beamsplitter and θ = π/2 a full SWAP (up to photon-number-dependent phases). Such an engineered coupling provides a powerful tool for implementing programmable interferometry between cavity states [180], which is a key building block for realizing various continuous-variable information processing tasks such as boson sampling [196], simulation of vibrational quantum dynamics of molecules [197–200], and distributed quantum sensing [201–204].
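To illustrate how the integrated coupling θ selects between a 50:50 beamsplitter and a SWAP, the sketch below exponentiates the bilinear Hamiltonian in a two-mode truncated Fock space. It is a hedged, idealized model: drive-induced imperfections, Kerr terms, and decoherence are ignored, and the cutoff `dim` and helper names are assumptions. Because the coupling conserves the total photon number, the truncation is exact for states with at most `dim - 1` photons in total.

```python
import numpy as np

def annihilation(dim):
    """Truncated annihilation operator with a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1).astype(complex)

def beamsplitter(theta, phi, dim):
    """exp(-i theta (e^{i phi} a^dag b + e^{-i phi} a b^dag)), where theta = integral of g(t) dt.

    Joint basis index: |n_a, n_b> -> n_a * dim + n_b."""
    a1, eye = annihilation(dim), np.eye(dim)
    a, b = np.kron(a1, eye), np.kron(eye, a1)
    gen = np.exp(1j * phi) * (a.conj().T @ b) + np.exp(-1j * phi) * (a @ b.conj().T)
    evals, evecs = np.linalg.eigh(gen)
    return evecs @ np.diag(np.exp(-1j * theta * evals)) @ evecs.conj().T

def fock(n_a, n_b, dim):
    """Two-mode Fock state |n_a, n_b> as a vector."""
    vec = np.zeros(dim * dim, dtype=complex)
    vec[n_a * dim + n_b] = 1.0
    return vec

dim = 4   # exact for states with at most dim - 1 photons in total

# theta = pi/2: a full SWAP moves a single photon from cavity a to cavity b.
swapped = beamsplitter(np.pi / 2, 0.0, dim) @ fock(1, 0, dim)
print(round(abs(np.vdot(fock(0, 1, dim), swapped)) ** 2, 6))   # 1.0

# theta = pi/4: a 50:50 beamsplitter; |1,1> shows Hong-Ou-Mandel interference.
hom = beamsplitter(np.pi / 4, 0.0, dim) @ fock(1, 1, dim)
print(round(abs(np.vdot(fock(1, 1, dim), hom)) ** 2, 6))       # 0.0
```

The vanishing |1,1⟩ amplitude at θ = π/4 is the microwave analogue of the Hong-Ou-Mandel dip, a standard signature used to calibrate such interferometers.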


Moreover, this bilinear coupling is also a valuable resource for enacting gates on two logical elements encoded in GKP states [49]. As mentioned in section 3, GKP encodings rely on the non-linearity of the code words and only require linear or bilinear operations for universal control [78]. Therefore, this engineered bilinear coupling provides a simple and effective strategy for implementing a deterministic entangling operation for the GKP code.

For other bosonic codes, this bilinear interaction alone is not sufficient to generate a universal gate set, which requires at least one entangling gate. In this case, the exponential SWAP (eSWAP) operation can be designed to provide deterministic and code-independent entanglement [205]. The eSWAP operation, akin to an exchange operation between spins, implements a programmable unitary of the form

$$\hat{U}_{\mathrm{eSWAP}}(\theta) = e^{i\theta\,\hat{U}_{\mathrm{SWAP}}} = \cos\theta\,\hat{I} + i\sin\theta\,\hat{U}_{\mathrm{SWAP}},$$

where θ is the rotation angle on the ancilla and $\hat{I}$ is the identity operation. Intuitively, this unitary implements a weighted superposition of the identity and SWAP operations between two bosonic modes regardless of their specific encodings. The eSWAP unitary has been realized in reference [19] between two bosonic modes housed in 3D coaxial cavities bridged by an ancilla. In this demonstration, an additional ancilla is introduced to one of the cavity modes, and the resultant dispersive coupling is used to enact an ancilla-controlled operation that provides the tunable rotation necessary for the eSWAP unitary. The eSWAP unitary is then enacted on several encoding schemes in the Fock, coherent, and binomial bases. The availability of such a deterministic and code-independent entangling operation is a crucial step toward universal quantum computation using bosonic logical qubits. A recent study has shown that universal control and operations on tens of bosonic qubits can be achieved in a novel architecture comprising a single transmon coupled simultaneously to a multi-mode superconducting cavity [206].
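The code independence of the eSWAP unitary can be checked directly on its ideal matrix form. The sketch below models only the target unitary cos θ I + i sin θ SWAP in a truncated two-mode Fock space; it does not model the ancilla-mediated construction of reference [19], and the cutoff, the example states, and the helper names are assumptions for illustration.

```python
import numpy as np

def swap_operator(dim):
    """Permutation matrix exchanging the two modes: |m, n> -> |n, m>."""
    s = np.zeros((dim * dim, dim * dim))
    for m in range(dim):
        for n in range(dim):
            s[n * dim + m, m * dim + n] = 1.0
    return s

def eswap(theta, dim):
    """U_eSWAP(theta) = cos(theta) I + i sin(theta) SWAP, valid because SWAP^2 = I."""
    return np.cos(theta) * np.eye(dim * dim) + 1j * np.sin(theta) * swap_operator(dim)

def reduced_purity(state, dim):
    """Purity Tr(rho_A^2) of mode A for a two-mode pure state (index n_a * dim + n_b)."""
    m = state.reshape(dim, dim)
    rho_a = m @ m.conj().T
    return float(np.real(np.trace(rho_a @ rho_a)))

dim = 5
# Two orthogonal single-mode states; the gate never refers to how they are encoded.
psi = np.zeros(dim, dtype=complex)
psi[0] = 1.0
phi = np.zeros(dim, dtype=complex)
phi[[1, 4]] = 1 / np.sqrt(2)
product = np.kron(psi, phi)
out = eswap(np.pi / 4, dim) @ product
print(round(reduced_purity(product, dim), 6), round(reduced_purity(out, dim), 6))  # 1.0 0.5
```

At θ = π/4 the output (|ψφ⟩ + i|φψ⟩)/√2 is maximally entangled within the two-dimensional span of the inputs, for any pair of orthogonal single-mode states, which is the sense in which the entangling gate is code-independent.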

5.3. Implementations of QEC

In general, implementations of QEC with bosonic codes initially suffer from increased error rates, because the code words contain multiple photons, which are lost more frequently and must be controlled precisely. Hence, the main experimental challenge is to extend the lifetime of the encoded qubit despite this initial penalty. In the context of bosonic codes, the break-even point is defined relative to the |0, 1⟩ Fock states, which are the longest-lived physical elements in the cavity without error correction. Beyond the break-even point, the chosen QEC protocol removes more errors than it introduces, so the logical qubit outlives its best uncorrected counterpart. To date, three studies have approached [74, 173] or achieved [16] the break-even point for QEC with bosonic codes in cQED devices without any post-selection.

Broadly, QEC schemes fall into three categories based on how they afford protection to the logical qubit. In active QEC, error syndromes are repeatedly measured during the state evolution of the logical qubit, and any errors detected are subsequently corrected based on the measurement outcomes. In autonomous QEC, errors are removed by tailored dissipation or by coupling to an auxiliary system, without repeatedly probing the logical qubit. In passive QEC, the logical information is intrinsically protected from decoherence because of specifically designed physical symmetries [207]. In this discussion, we have opted to distinguish between autonomous and passive QEC to highlight potential differences in system design. In the autonomous approach, the environment is intentionally engineered to suppress or mitigate errors, whereas in the passive approach, the system Hamiltonian itself is tailored to be robust against certain errors, which often requires constructing a unique physical element in the hardware [208–210].

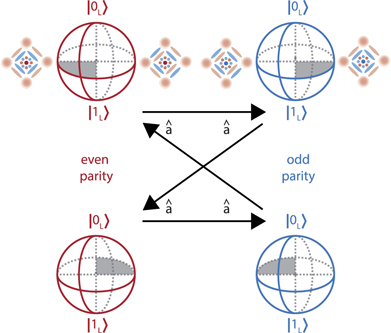

Typically, active QEC requires robust measurements of the error syndrome and real-time feedback. In superconducting cavities, the dominant source of error is single-photon loss. For encoding schemes with rotational symmetry, such as the cat and binomial codes, single-photon loss flips the parity of the code words. Therefore, measuring the parity operator reveals whether a photon jump has occurred, which provides the error syndrome for these logical qubits [211]. In reference [16], which corrected a four-component cat state under photon loss (figure 5), the correct logical state was recovered by adjusting the decoding step according to the number of parity flips detected with real-time feedback. The corrected logical qubit showed an improved lifetime compared to both the uncorrected state and the |0, 1⟩ Fock-state encoding, thereby achieving the break-even point. However, this strategy cannot protect bosonic qubits on timescales exceeding the intrinsic cavity lifetime, because the energy lost from the system is only tracked, not physically restored. Such energy loss must therefore be physically compensated to significantly surpass the break-even point.

Figure 5. Four-component cat code under photon loss. Every photon loss event ($\hat{a}$) changes not only the parity of the basis states but also the phase relationship between them. The encoded state cycles between the even (logical) and odd (error) parity subspaces while also rotating about the Z-axis by π/2. The decoding sequence must take both effects into account to recover the logical information correctly.
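The parity cycling described for the four-component cat code can be reproduced with a few lines of NumPy. This is a minimal sketch under stated assumptions: α = 2, a Fock cutoff of 40, code words taken as the photon-number components 0 mod 4 and 2 mod 4, and only the jump operator $\hat{a}$ is modeled (no-jump amplitude decay, ancilla errors, and measurement imperfections are ignored). The helper names are hypothetical.

```python
import numpy as np
from math import factorial

def annihilation(dim):
    """Truncated annihilation operator with a|n> = sqrt(n)|n-1>."""
    return np.diag(np.sqrt(np.arange(1, dim)), k=1).astype(complex)

def normalize(v):
    return v / np.linalg.norm(v)

def mod4_component(alpha, m, dim):
    """Normalized sum over n = m (mod 4) of alpha^n / sqrt(n!) |n>."""
    v = np.zeros(dim, dtype=complex)
    for n in range(m, dim, 4):
        v[n] = alpha ** n / np.sqrt(float(factorial(n)))
    return normalize(v)

dim, alpha = 40, 2.0
a = annihilation(dim)
parity = np.diag((-1.0) ** np.arange(dim))

# Assumed code words: both live in the even-parity subspace.
zero_l, one_l = mod4_component(alpha, 0, dim), mod4_component(alpha, 2, dim)
psi0 = (zero_l + 1j * one_l) / np.sqrt(2)

states, parities = [psi0], []
for _ in range(4):
    states.append(normalize(a @ states[-1]))           # one photon-loss event
    parities.append(np.real(np.vdot(states[-1], parity @ states[-1])))

print(np.round(parities, 6))                           # alternates -1, +1, -1, +1
print(round(abs(np.vdot(psi0, states[-1])) ** 2, 6))   # 1.0: back to the start after 4 jumps
```

Because the code space is an eigenspace of $\hat{a}^4$, the state returns to itself after four jumps; a decoder therefore needs to know the number of detected parity flips modulo 4 to undo the intermediate phase rotations.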



In contrast, reference [173] employs a photon pumping operation to achieve QEC on a logical qubit encoded in the binomial code. As in reference [16], errors are detected by photon number parity measurements. Errors occurring on the logical state are then corrected by an appropriate recovery operation as soon as they are detected. An approximate recovery operation is still required when no error is detected, because the system then evolves under the no-parity-jump operator, which gradually distorts the code words [25]. In this experiment, the lifetime of the logical qubit was greater than that of the uncorrected binomial code state but marginally below that of the |0, 1⟩ Fock state.
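The two ingredients above, correctability of a single photon loss and the distortion caused by no-jump evolution, can be checked numerically. As an assumption, the sketch takes the lowest-order binomial code, with code words (|0⟩ + |4⟩)/√2 and |2⟩; the value κt = 0.1 is arbitrary, and the Knill-Laflamme check for the error set {I, â} is a standard textbook test, not the analysis of reference [173].

```python
import numpy as np

dim = 6
a = np.diag(np.sqrt(np.arange(1, dim)), k=1).astype(complex)   # truncated annihilation operator
basis = np.eye(dim, dtype=complex)

# Assumption: lowest-order binomial code words.
zero_l = (basis[0] + basis[4]) / np.sqrt(2)
one_l = basis[2]
code = (zero_l, one_l)

def kl_block(ek, el):
    """2x2 matrix <i_L| E_k^dag E_l |j_L> over the logical labels i, j."""
    m = ek.conj().T @ el
    return np.array([[np.vdot(x, m @ y) for y in code] for x in code])

# Knill-Laflamme conditions for {I, a}: each block must be proportional to the identity.
for name, (ek, el) in {"I,I": (np.eye(dim), np.eye(dim)),
                       "I,a": (np.eye(dim), a),
                       "a,a": (a, a)}.items():
    print(name, np.round(kl_block(ek, el).real, 6).tolist())

# Even without a parity jump, the no-jump operator exp(-kappa t n / 2) distorts the code.
kappa_t = 0.1                                   # arbitrary illustrative value
psi = (zero_l + one_l) / np.sqrt(2)
psi_t = np.exp(-kappa_t * np.arange(dim) / 2) * psi
psi_t /= np.linalg.norm(psi_t)
fid = abs(np.vdot(psi, psi_t)) ** 2
print(round(fid, 4))                            # 0.995
```

Both code words have a mean photon number of 2, which is why the "a,a" block is proportional to the identity; a single loss maps them to |3⟩ and |1⟩, which a recovery unitary can map back. The sub-unity fidelity in the no-jump branch is what the approximate recovery operation has to compensate.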

In addition to the cat and binomial encodings, active QEC has also recently been demonstrated on a high-quality GKP state stored in a superconducting cavity [74]. As explained in section 3.2, errors occurring on a moderately squeezed GKP state simply manifest as displacements of the cavity state, which are revealed and mitigated by measuring the displacement stabilizers. While mitigating errors is relatively straightforward, the experimental challenges of the GKP code lie in preparing finitely squeezed approximate GKP states and performing modular quadrature measurements of the stabilizers. The QEC protocol used in reference [74] suppresses all logical errors of a GKP state by alternating between peak-sharpening and envelope-trimming rounds, each consisting of different conditional displacements of the cavity. The magnitude of these conditional displacements depends on the measured state of the ancilla onto which the displacement stabilizers are mapped. This sharpen-and-trim technique can be generalized using the second-order Trotter formula [65].
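Why displacement errors are correctable from a modular measurement can be seen in the idealized, infinitely squeezed square GKP code with Gaussian random shifts. The sketch below is that textbook toy model, not the sharpen-and-trim protocol of reference [74]: it assumes ħ = 1, perfect stabilizer measurements, a shift noise σ = 0.5, and hypothetical helper names, and it compares a Monte Carlo logical error rate with the leading-order estimate erfc(√π / (2√2 σ)).

```python
import numpy as np
from math import erfc, sqrt, pi

SQRT_PI = sqrt(pi)  # logical shift of the square GKP code (hbar = 1 convention)

def decode_shift(u):
    """Ideal GKP correction of a position shift u.

    Measuring the stabilizer exp(2i*sqrt(pi)*q) reveals u only modulo sqrt(pi);
    the decoder undoes the smallest shift consistent with that syndrome.
    Returns the leftover shift, always an integer multiple of sqrt(pi)."""
    syndrome = u - SQRT_PI * np.round(u / SQRT_PI)   # u mod sqrt(pi), centered on 0
    return u - syndrome

def logical_error_rate(sigma, n_samples=200_000, seed=1):
    """Monte Carlo fraction of Gaussian shifts that leave a logical flip."""
    rng = np.random.default_rng(seed)
    leftover = decode_shift(rng.normal(0.0, sigma, n_samples))
    k = np.round(leftover / SQRT_PI).astype(int)
    return float(np.mean(k % 2 != 0))                 # odd multiples flip the logical state

sigma = 0.5
estimate = logical_error_rate(sigma)
approx = erfc(SQRT_PI / (2 * sqrt(2) * sigma))       # P(|u| > sqrt(pi)/2), leading term
print(estimate, approx)
```

Shifts smaller than √π/2 are undone exactly, while larger ones are "corrected" onto the wrong logical state; finite squeezing, which reference [74] must contend with, adds further errors not captured by this model.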