Abstract

Photonic technologies offer numerous functionalities that can be used to realize astrophotonic instruments. The most spectacular example to date is the ESO Gravity instrument at the Very Large Telescope in Chile that combines the light-gathering power of four 8 m telescopes through a complex photonic interferometer. Fully integrated astrophotonic devices stand to offer critical advantages for instrument development, including extreme miniaturization when operating at the diffraction limit, as well as integration, superior thermal and mechanical stabilization owing to the small footprint, and high replicability offering significant cost savings. Numerous astrophotonic technologies have been developed to address shortcomings of conventional instruments to date, including, for example, photonic lanterns to convert from multimode inputs to single-mode outputs, complex aperiodic fiber Bragg gratings to filter OH emission from the atmosphere, complex beam combiners to enable long-baseline interferometry with, for example, ESO Gravity, and laser frequency combs for high-precision spectral calibration of spectrometers. Despite these successes, the facility implementation of photonic solutions in astronomical instrumentation is currently limited because of (1) low throughputs from coupling to fibers, coupling fibers to chips, propagation and bend losses, device losses, etc., (2) difficulties with scaling to large channel count devices needed for large bandwidths and high resolutions, and (3) difficulties with efficiently integrating photonics with detectors, to name a few. In this roadmap, we identify 24 key areas that need further development. We outline the challenges and advances needed across those areas covering design tools, simulation capabilities, fabrication processes, the need for entirely new components, integration and hybridization, and the characterization of devices.
To realize these advances the astrophotonics community will have to work cooperatively with industrial partners who have more advanced manufacturing capabilities. With the advances described herein, multi-functional integrated instruments will be realized, leading to novel observing capabilities for both ground- and space-based platforms, enabling new scientific studies and discoveries.


Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 license. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

Nemanja Jovanovic and Pradip Gatkine

Department of Astronomy, California Institute of Technology, Pasadena, CA, United States of America

Status

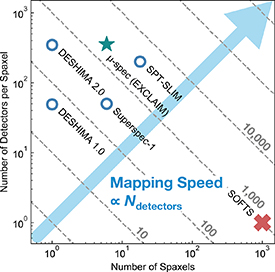

Astrophotonics is simply defined as the application of photonic technologies to astronomy. Like many fields of technology-driven science, astrophotonics directly benefits from the multi-decade and multi-billion dollar investments in photonics by industry, especially by telecommunications. Photonic technologies are appealing because they can provide many avenues for controlling and manipulating light, including spectral dispersion, spectral, spatial, and polarization filtering, phase and amplitude modulation, light generation, frequency shifting, and light detection to name a few. In addition, operating at the diffraction limit of the input telescope means that the instrument can have the smallest possible footprint for a given set of specifications, reducing volume, mass and cost [1, 2]. Guiding the light in fibers or waveguides in a photonic integrated circuit (PIC) allows further reductions in instrument size by enabling flexible integration of many functions optimized for a specific science case and the elimination of bulk optics in most cases leading to extreme miniaturization, which can more readily be thermally and mechanically stabilized. Unlike bulk optics, photonic technologies can be highly replicable, offering dramatic cost savings once produced in volume. A highly replicable diffraction-limited instrument can be readily deployed to numerous observatories and achieve the same performance, potentially providing cost-savings by eliminating the prototypical nature of current astronomical instruments.

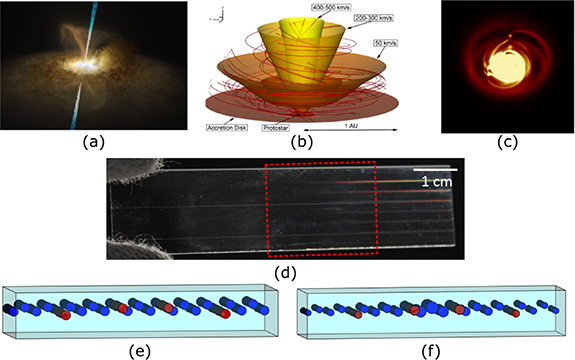

With such favorable properties, photonic technologies stand to impact many areas of astronomy. Leveraging their replicability, for example, could enable low-cost, massively multiplexed spectroscopic surveys in the future. These would allow the measurement of large samples of spectroscopic redshifts, constrain galaxy evolution through tracers of star formation, outflows, etc., and enable detailed compositional studies of stellar populations, to name a few. On the other hand, the diffraction-limited nature of photonic devices makes them directly applicable to point-source-like targets that typically rely on adaptive optics (AO) observations. These include exoplanets and disks, young stellar objects, evolved stars, active galactic nuclei, and nuclear star cluster kinematics.

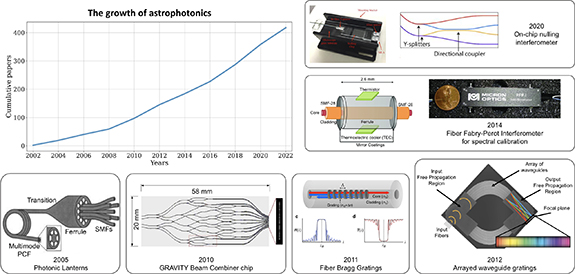

Despite its origin in the late 1970s, and pioneering overviews about the prospects of photonics in the 1990s [3], the field of astrophotonics was first recognized as a sub-field of astronomical instrumentation in 2009, when the editors of Optics Express [4] solicited a special issue on the topic for the first time. Although already a thriving field then, it has grown over the last 14 years, as shown in figure 1, which highlights some of the various technical developments. This growth resulted in two further special issues in 2017 and 2021 [5, 6].

Figure 1. Top left: a plot showing the growing trajectory of research in the field of astrophotonics, compiled using NASA ADS search engine. The surrounding insets show examples of various technologies representing the sub-fields in astrophotonics, as they matured for on-sky demonstration. These examples include: photonic lanterns. Reproduced with permission from [7] © The Optical Society. Photonic beam combiners for interferometry with GRAVITY. Reproduced with permission from [8]. Fiber Bragg gratings for atmospheric OH emission suppression. Reproduced with permission from [9]. Arrayed waveguide gratings for spectroscopy. Reproduced with permission from [10] © The Optical Society. Fiber-based Fabry–Perot interferometer for spectral calibration. Reproduced from [11]. © IOP Publishing Ltd. All rights reserved. And an on-chip nulling interferometer. Reproduced with permission from [12]. This is just a small subset of a large family of astrophotonic technologies that have been developed and demonstrated over the last two decades.


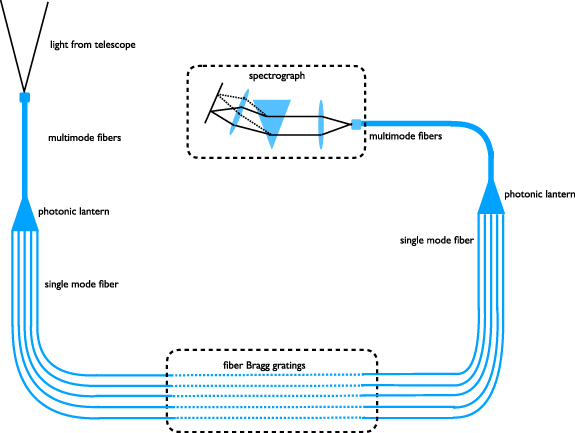

Numerous technologies have been advanced over the past decade. Here we briefly highlight a few success stories. The photonic lantern (PL) was invented to exploit the coupling advantages of multimode (MM) fibers and the stability of single-mode fibers (SMFs) for use in astronomy [7]. This mode-converting device has since undergone extensive technical development [13–21], and a device that converts a MM input into 19 SM outputs can now routinely do so with a throughput >95% at 1550 nm. The lantern device was leveraged to realize a complex spectral filter that could suppress the telluric OH emission [9, 22]. This was achieved by inscribing complex fiber Bragg gratings (FBGs) and attaching them to each of the SM outputs of a lantern, before recombining the filtered signal into a MM fiber and feeding a downstream spectrograph [23–25]. This spectral filtering capability enabled accurate sky-subtraction in the near-infrared (NIR), key to advancing faint observations in that region. Regarding calibration, laser frequency combs (LFCs) provide an ultra-stable set of highly dense comb lines across large bandwidths [26, 27], making them the perfect tool for calibrating a spectrograph [28, 29]. These tools have been optimized for astronomy for the past decade [30, 31] and are now becoming commonplace for use with precision radial velocity (RV) spectrographs [32]. Finally, in the field of interferometry, the GRAVITY instrument is a long-baseline interferometer that can combine the four 8 m telescopes at the VLT in the NIR K-band centered at 2.2 µm. It does this using a photonic beam combiner chip [33] and provides milliarcsecond spatial resolution, microarcsecond astrometric precision, and high throughput thanks to the large telescopes and AO systems, which have enabled many transformative discoveries [34–37].

With these successes and a long list of possible technologies and functions to explore, astrophotonics has a huge potential to impact astronomy. This potential was recently outlined in several whitepapers [38, 39] solicited by the National Academy of Sciences for the US Decadal survey and later explicitly called out in the survey itself [40]. Specifically the survey stated 'The possibility of obtaining extremely high-precision radial velocities, of the order of a 10 cm s−1 or better, as well as direct imaging of exoplanets may largely rely on the maturity of SMFs and on-chip nulling interferometers'.

Importantly, astronomy-driven technology developments have broader applicability, which could improve funding for such developments. For example, the all-photonic flattener on a chip [41, 42] may find application beyond astronomy for gain flattening [43, 44], temporal pulse shaping [45, 46], as well as for targeted excitation of particular molecular species [47]. In addition, PLs are being considered for spatial division multiplexing in telecommunications [48] as well as for high-efficiency free-space optical communications [49]: the latter is critical to ensuring high data rates for future astronomy and planetary science missions and may also find application in microscopy. These are just a few examples of the potential of astrophotonics to impact society more generally.

Despite these successes, astrophotonic technologies require further developments in nearly all cases to advance them to facility-class instrument science readiness and/or expand capabilities. With this roadmap, we aim to outline some key areas development should be focused on to advance the field and allow for truly integrated, multi-functional instruments to be realized that advance scientific investigation. The choice of development areas outlined in this work was driven by (1) relevant technologies demonstrated by the community with the potential for further growth and (2) promising opportunities given recent technological advances to address outstanding goals. The areas of development covered in this article are non-exhaustive and other areas should also be considered.

Roadmap organization and goals

The roadmap explores the status of currently used technologies and outlines other promising choices that should be investigated. The aim is to highlight the potential astronomical applications of a range of technologies and provide guidance on the specific developmental path to realize that promise, including developing more advanced design tools, prototyping, fabricating, characterizing, packaging, integrating, field testing, and science demonstration. The roadmap is broken up into five key thematic areas which span all of astrophotonics as follows:

Light injection, wavefront control, and light transport

The efficient coupling of light into photonic technologies is the first step in being able to utilize them for astronomical instruments. Given that photonic devices generally operate at or near the diffraction limit, the ability to couple light from a telescope into such a device relies on either an AO system to correct the wavefront in the optical/infrared [50] (common to most large 5 m+ telescopes) or a mode-matching solution, be it with a PL [18, 20], an integral field unit (IFU), or a series of small telescopes more closely matched to the Fried parameter, r0 [51]. In addition, AO systems can now generate reasonable correction across large fields (MCAO; ∼25% Strehl over ∼2 arcminute fields-of-view [52] and references therein); when combined with low-mode-count PLs, efficient multi-object (MO) photonic instruments can be realized.
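To illustrate why matching the aperture to r0 matters for coupling, the sketch below uses two standard rules of thumb: the Kolmogorov scaling of the Fried parameter with wavelength, r0 ∝ λ^(6/5), and the order-of-magnitude estimate that an uncorrected aperture of diameter D contains roughly (D/r0)^2 coherent patches. The function names and the numerical inputs (an 8 m aperture and r0 = 0.15 m at 500 nm) are assumptions chosen for illustration and are not taken from the roadmap; the mode count is a rough scaling, not a design rule.

```python
# Illustrative sketch only: rule-of-thumb scalings, not a design tool.

def fried_parameter_m(r0_ref_m, wavelength_m, wavelength_ref_m=500e-9):
    """Scale the Fried parameter to another wavelength (Kolmogorov, r0 ~ lambda^(6/5))."""
    return r0_ref_m * (wavelength_m / wavelength_ref_m) ** 1.2


def approx_spatial_modes(diameter_m, r0_m):
    """Order-of-magnitude number of coherent patches across the aperture, (D/r0)^2.

    Clamped to 1 because an aperture smaller than r0 is already close to
    diffraction limited.
    """
    return max(1.0, (diameter_m / r0_m) ** 2)


# Assumed example values (hypothetical site and telescope).
r0_visible = 0.15          # metres, at 500 nm
aperture = 8.0             # metres
r0_h_band = fried_parameter_m(r0_visible, 1.55e-6)

print(f"r0 at 1.55 um      : {r0_h_band:.2f} m")
print(f"modes at 500 nm    : {approx_spatial_modes(aperture, r0_visible):.0f}")
print(f"modes at 1.55 um   : {approx_spatial_modes(aperture, r0_h_band):.0f}")
```

Under these assumptions the estimated mode count drops steeply towards longer wavelengths and smaller apertures, which is consistent with the options listed above: correct the wavefront with AO, accept many modes with a PL, or use apertures comparable to r0.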

Photonic technologies can also be used to produce signals critical to driving the AO system, and offer numerous advantages including eliminating non-common path and chromatic errors. Next-generation instrument architectures will merge photonic wavefront sensing and science instruments and result in integrated instruments with superior performance.

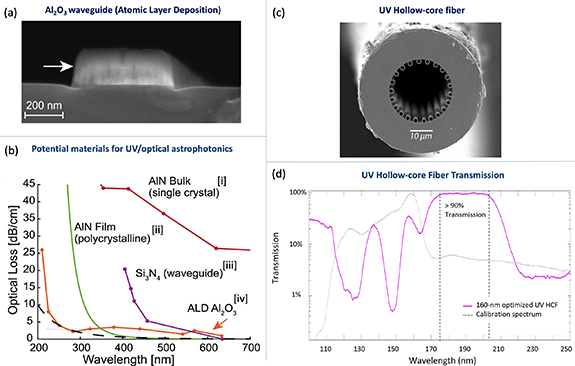

Once light is coupled to a photonic system, light transport is the next critical aspect to ensure the light can be efficiently routed to the science instrument. Optical fibers are ideally suited to this, and here we explore a range of new silica fiber architectures that extend photonic applications to currently unexplored wavelength ranges with advantageous properties (dispersion, polarization, bandwidth, few-mode vs SM, etc.).

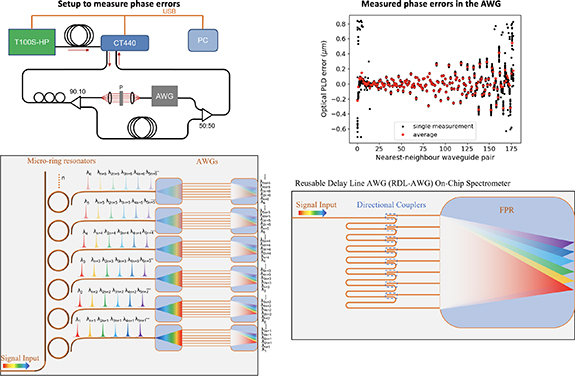

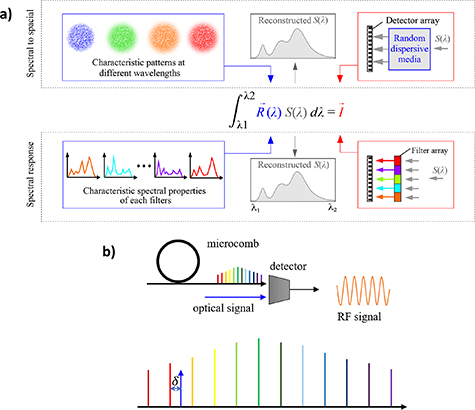

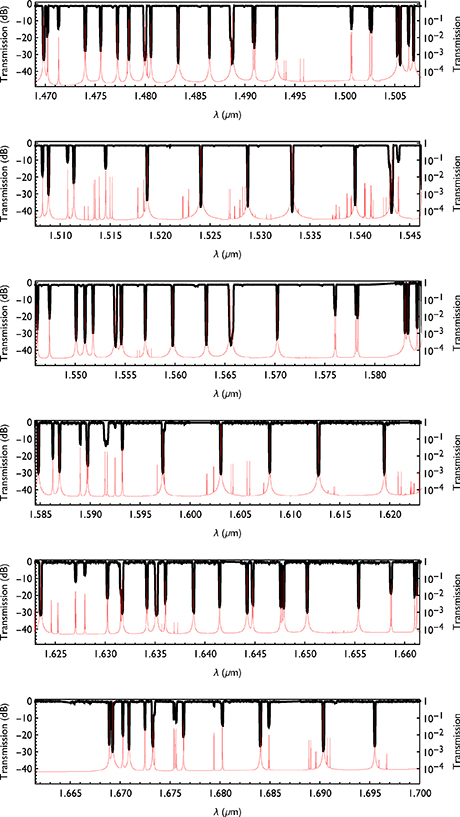

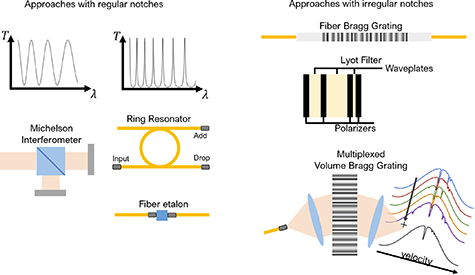

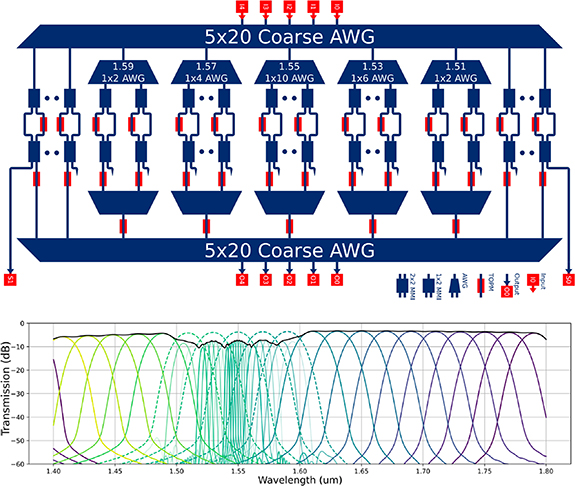

Spectroscopy and spectral filtering

Spectroscopy is a key analytical tool in an astronomer's toolbox. It can be used in several ways: to disperse the light for scientific measurement as well as for spectral filtering applications. Photonic dispersing elements in the form of arrayed waveguide gratings (AWGs) have been tested for their suitability for astronomy, but need further development to optimize throughput, bandwidth and polarization response, as well as to scale to higher resolution, before wide astronomical application. Apart from AWGs, other photonic technologies provide a plethora of novel approaches to the dispersion problem, including dispersed Fourier transform interferometers consisting of Mach–Zehnder interferometers (MZIs) and AWGs, ring-resonator enhanced spectrometers and integrated serpentine grating spectrometers, to name a few. These technologies need to be explored to ascertain their scientific potential, demonstrate their technical advantages and assess the challenges to implementation.

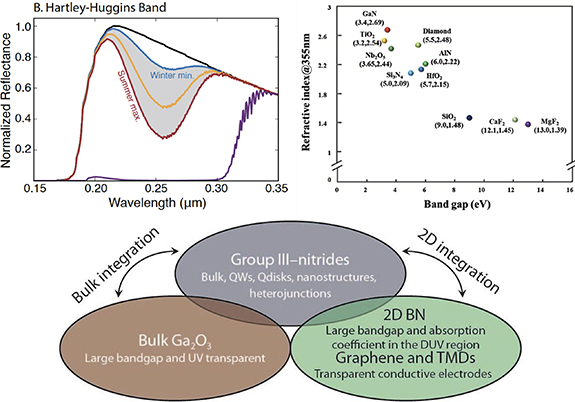

Critical to exploiting photonic spectroscopy is being able to realize the devices in wavebands other than the NIR, where telecommunications has already invested heavily. Photonics is evolving in the visible, so we outline photonic efforts to develop platforms, both photonic integrated circuits (PICs) and fiber based, that are promising at more extreme wavelengths like the UV (<400 nm) and in the MIR (>2.5 μm). AWGs for example have already been built and demonstrated efficiently at sub-mm wavelengths, and in the roadmap we look at further advances needed to optimize these devices, as well as a pathway to large field-of-view instruments.

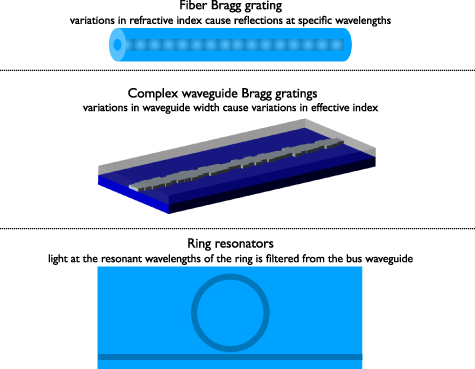

Spectral filtering is a critical capability. Technologies like FBGs are very mature, but require further development to be applicable to wavebands other than the NIR. Micro-ring resonators (which form tiny resonant cavities) and waveguide Bragg gratings are less mature and need more significant advancements, including utilizing smaller feature sizes, enabling complex filters and reducing cladding mode coupling, but they offer the potential for integration with other components on PICs.

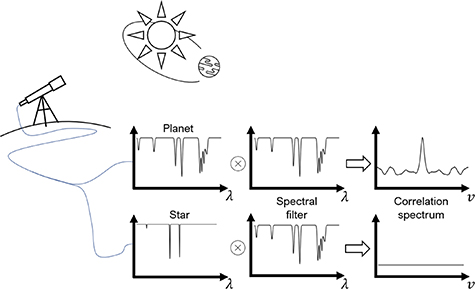

The applications of filtering devices are growing as well. Optical cross-correlation for example looks at introducing a paradigm shift in how instruments are built, eliminating the need for extremely costly high pixel count arrays, if efficient and flexible filters can be realized to process the light optically. This would be transformative for instruments designed for extremely large telescopes.

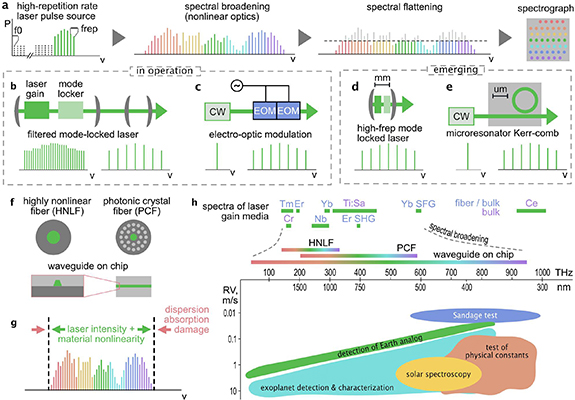

Spectral calibration

Key to extracting physical quantities via spectroscopy is the ability to calibrate the spectrograph. Photonics offers the ultimate wavelength calibration tool: the LFC. An LFC can be stabilized to extreme levels over decades [26, 27], meeting even the most demanding science needs in astronomy (e.g. extreme precision radial velocity (EPRV)). Although LFCs are becoming common at many observatories, they are still complex and costly, and lack reliable spectral coverage in the blue, as needed for the most precise RV measurements of Sun-like stars. In addition, LFCs offer the potential for non-spectroscopic calibration applications, including extreme time keeping for very long baseline interferometers as well as metrology.
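To give a sense of scale for the calibration stability that EPRV work demands, the sketch below evaluates the non-relativistic Doppler relation Δλ = λ v / c for the 10 cm s−1 radial-velocity precision quoted from the Decadal survey earlier in this section. The 550 nm wavelength is an assumed example value in the visible, and the function name is hypothetical.

```python
# Illustrative sketch: size of the Doppler shift a calibrator must resolve.

C_M_PER_S = 299_792_458.0  # speed of light in vacuum (exact SI value)


def doppler_shift_m(wavelength_m, velocity_m_per_s):
    """Non-relativistic Doppler shift, delta_lambda = lambda * v / c."""
    return wavelength_m * velocity_m_per_s / C_M_PER_S


wavelength = 550e-9   # assumed example wavelength, in metres
velocity = 0.10       # 10 cm/s expressed in m/s

shift = doppler_shift_m(wavelength, velocity)
fractional = shift / wavelength

print(f"fractional shift  = {fractional:.2e}")
print(f"shift at 550 nm   = {shift:.2e} m")
```

The fractional shift is about 3 parts in 10^10 and is independent of wavelength, which is why a reference with comparable fractional stability over the lifetime of a survey is needed.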

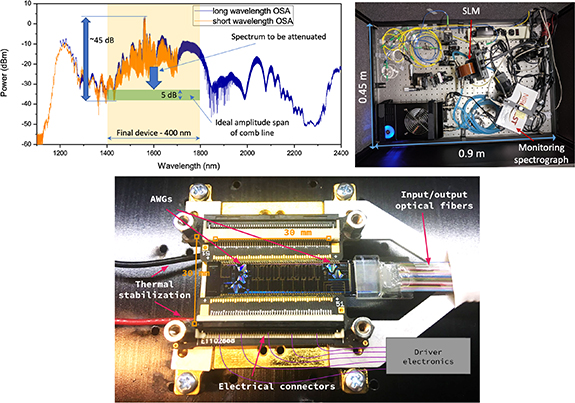

To maximize the benefit to spectroscopy, the spectral profile of the comb lines in an LFC spectrum must be flattened, or made more uniform, to fit within the spectrometer's dynamic range. On-chip flattener technology has only recently been explored for astronomy and requires further development. In particular, investigation is needed into the optimal device design given the LFC's optical properties, the number of channels that can be realized on a single chip, how to build devices that span several astronomical bands, and the prospects for devices in the visible region.
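The flattening idea can be sketched numerically: if each spectral channel of a flattener can apply an independent attenuation, the brightest comb lines are attenuated down to a common target level. The minimal example below assumes an idealized, attenuation-only device with perfect per-channel control; the function name and the comb powers are made-up illustrative values and do not describe any particular flattener design.

```python
# Illustrative sketch: per-channel attenuation needed to flatten a comb spectrum.

def flattening_attenuation_db(line_power_db, target_db=None):
    """Return the attenuation (dB, >= 0) to apply to each comb line.

    By default the target is the weakest line, since a passive flattener
    can only remove power, never add it.
    """
    if target_db is None:
        target_db = min(line_power_db)
    # Lines already below the target are left untouched (zero attenuation).
    return [max(0.0, p - target_db) for p in line_power_db]


# Made-up comb line powers in dB (relative units).
comb_db = [-30.0, -12.0, -5.0, -18.0, -27.0]

atten = flattening_attenuation_db(comb_db)
flattened = [p - a for p, a in zip(comb_db, atten)]

range_before = max(comb_db) - min(comb_db)
range_after = max(flattened) - min(flattened)

print("attenuation per channel (dB):", atten)
print(f"dynamic range before: {range_before:.1f} dB, after: {range_after:.1f} dB")
```

Flattening to the weakest line discards the most light; choosing a higher `target_db` trades residual non-uniformity for throughput, which is one of the design choices a real device would need to balance.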

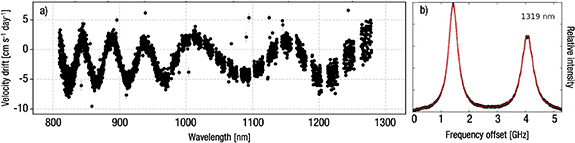

An alternative calibration technology that offers a compact, portable, cost-effective solution to wavelength calibration is the Fabry–Perot etalon. In recent years, these devices have been commissioned at several observatories with precision RV instruments (e.g. ESPRESSO, HARPS, NEID, HPF, SPIROU, Maroon-X). Their spectrum can be readily engineered to provide very broad wavelength coverage, including the UV range, with linewidth and spacing precisely matched to the spectrograph. While etalons have demonstrated very reliable operation and excellent short-term stability, they exhibit long-term drifts that are wavelength dependent, likely due to aging effects in the mirror coatings. This necessitates the use of other, absolute calibration sources to periodically recalibrate the etalon spectrum and track the long-term drift. The mechanism responsible for the chromatic drifts needs to be identified and the coating effects mitigated before etalons can become stand-alone calibrators at extreme precisions.

Interferometry

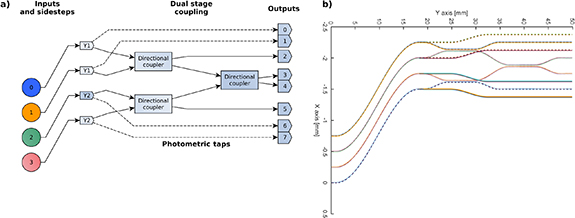

Interferometry is a powerful approach to reach angular resolutions beyond that of single telescopes so one can study the environment in the immediate vicinity of the host target. While GRAVITY has led to groundbreaking results in the NIR with its classical ABCD beam combiner approach, several technical advancements can be undertaken to extend the scientific reach, including improving the throughput in several spectral ranges, increasing the Fourier coverage by combining more telescopes, and integrating active fringe tracking capabilities onto the beam combining chips. As an alternative, discrete beam combiner (DBC) technologies are a promising approach that may simplify beam combination circuits when >4 telescopes need to be combined, owing to their implementation of straight SM waveguides in a lattice. However, the operational bandwidth of DBCs needs to be extended and, at the same time, a smart algorithm is needed for finding the best input configurations and lattice geometry as the number of input telescopes increases. Given their substantial differences, we discuss classical ABCD-based beam combiners and DBCs in separate chapters.
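The gain in Fourier coverage from combining more telescopes, and the corresponding growth in beam-combiner complexity, follows from simple counting: N telescopes give N(N−1)/2 baselines and (N−1)(N−2)/2 independent closure phases. The sketch below tabulates these and also evaluates the common λ/B estimate of angular resolution. The 130 m baseline is an assumed example value, the λ/B convention is only one of several in use, and the function names are hypothetical.

```python
# Illustrative sketch: baseline counting and a lambda/B resolution estimate.
import math


def n_baselines(n_telescopes):
    """Number of distinct telescope pairs, N(N-1)/2."""
    return n_telescopes * (n_telescopes - 1) // 2


def n_independent_closure_phases(n_telescopes):
    """Number of independent closure phases, (N-1)(N-2)/2."""
    return (n_telescopes - 1) * (n_telescopes - 2) // 2


def angular_resolution_mas(wavelength_m, baseline_m):
    """Resolution estimate lambda/B, converted from radians to milliarcseconds."""
    radians = wavelength_m / baseline_m
    return math.degrees(radians) * 3600.0 * 1000.0


for n in (2, 4, 6, 8):
    print(f"N={n}: baselines={n_baselines(n)}, "
          f"closure phases={n_independent_closure_phases(n)}")

# K-band (2.2 um) with an assumed 130 m baseline.
resolution = angular_resolution_mas(2.2e-6, 130.0)
print(f"lambda/B at 2.2 um, 130 m: {resolution:.2f} mas")
```

Because the number of baselines grows quadratically with N, a pairwise combiner needs rapidly more couplers and outputs as telescopes are added, which is the scaling pressure that motivates the alternative architectures discussed above.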

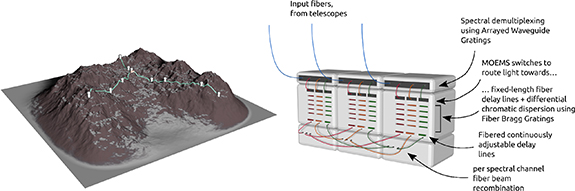

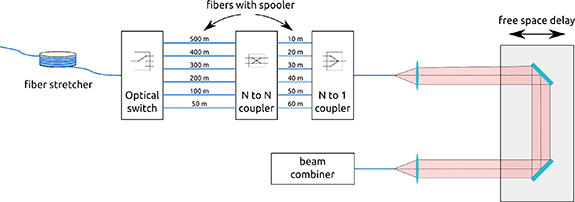

In parallel to advancing beam combiner technologies, developing the components to allow kilometric baselines by using optical fibers is also critical. This requires the development of low-loss fibers across the NIR and MIR regions (1–17 μm), a careful understanding of the dispersion properties of fibers and of how to compensate for delays with fibers as well as FBGs, and a determination of whether photonic fringe tracking, via piezo-stretched fibers or thermal or electro-optic (EO) phase shifters on-a-chip, will be sufficient to replace bulk optic delay lines. Birefringence and diattenuation will also need to be closely studied.

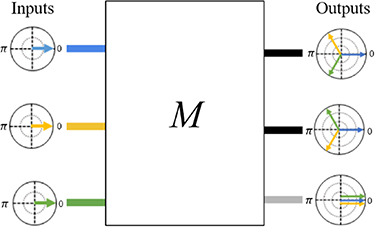

For high-contrast applications, nulling interferometry is the desired approach and the roadmap outlines the components (tri-couplers, achromatic phase shifters, etc) needed to realize high performance circuits in future at both NIR and MIR wavelengths. To eliminate any stellar leakage the circuit architecture also needs further development, with approaches like kernel nulling showing promise, but careful evaluation is needed. Fiber and PL nulling rely on a fiber or a mode-selective photonic lantern (MSPL) to be positioned directly in the telescope focal plane. Although they are simple to implement, the contrast will be limited without further investigations into (1) the ability of the cladding to suppress the rejected stellar light and (2) the limitations of cross-coupling in MSPLs. A PIC-based beam combiner is a promising solution to calibrate the leaked light of a PL nuller to enhance the null, but needs to be studied in detail to determine the optimum architecture of the circuit.

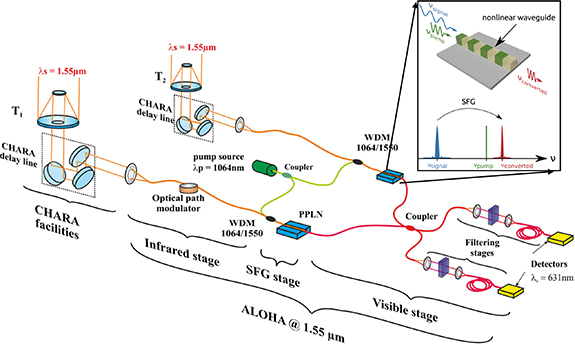

Finally, we can consider other techniques used in radio astronomy, including heterodyning and frequency conversion. The latter allows the thermal IR photons to be converted to the visible or the near infrared, where detectors are abundant and thermal background is no longer the limiting factor. Moreover, combining heterodyne or frequency conversion with photonic technologies borrowed from the telecommunication world offers the possibility of linking the telescopes of an array to the central interferometric correlator without the need for costly and hard-to-maintain infrastructure. Interestingly, the combination of a heterodyne approach and single-photon quantum technologies may allow highly efficient interferometric schemes. However, to realize functioning instruments in the MIR one needs broader bandwidths using faster MIR detectors, phase-stable MIR LFCs and broadband nonlinear conversion crystals.

Realizing efficient, multi-functional instruments

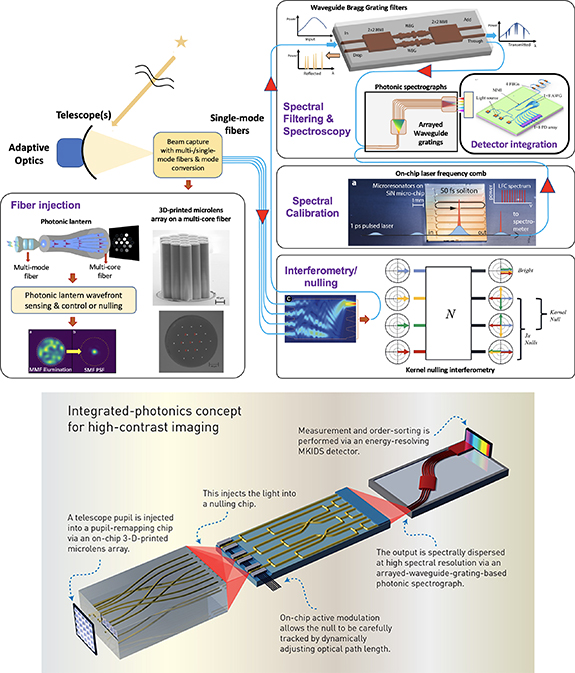

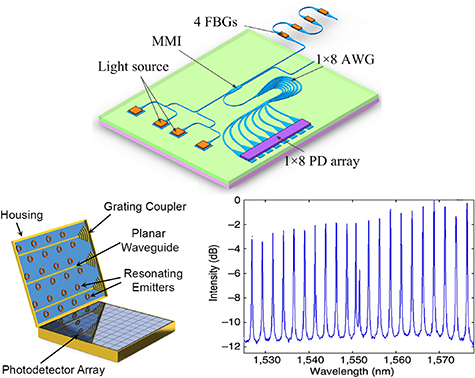

The ultimate goal is to route and process the light collected from the telescope completely in photonics to the detectors (see figure 2 for an example of an integrated photonic instrument concept). Photonics offers many useful functions to achieve this, each optimized across a broad range of disparate materials and platforms. To advance towards realizing instruments on-a-chip, efficient hybridization will be necessary. Mastering repeatable high-efficiency packaging to deliver ultra-low loss coupling from fiber arrays to PICs, and between disparate PIC platforms optimized for various functions (silica-on-silicon (SOS), ion-exchange waveguides in glass, silicon nitride, silicon on insulator, etc) will be necessary. In addition, fan-out devices, needed to go from the output of multi-core fibers (MCFs) to PICs will need to be further developed to reduce losses. Active circuits will also require electrical integration and possibly laser sources for calibration and/or metrology.

Figure 2. (Top) The five main subcategories of the roadmap are shown with example technologies that can be used in each, as well as an example potential implementation. Some of the technologies shown here include: fiber injection (with photonic lanterns [20], lantern-based wavefront sensing (Reproduced from [53]. CC BY 4.0.) and 3D-printed microlens arrays on multi-core fibers (Reproduced with permission from [54])), spectral filtering and spectroscopy (using complex waveguide Bragg gratings (Reproduced with permission from [55] © The Optical Society) and arrayed waveguide gratings [56]), spectral calibration (with on-chip laser frequency combs (Reproduced with permission from [57])), interferometry (using Kernel-phase nulling [58]), and detection integration. Reproduced from [59]. CC BY 4.0. (Bottom) A future vision for an integrated photonic high-contrast imaging instrument. Micro-lenses segment the pupil plane, a pupil remapper routes the light to a nulling beam combiner chip which has active control for fringe tracking, before light is injected into an arrayed waveguide grating (AWG) to disperse the light and then onto a detector (image taken from [60]).


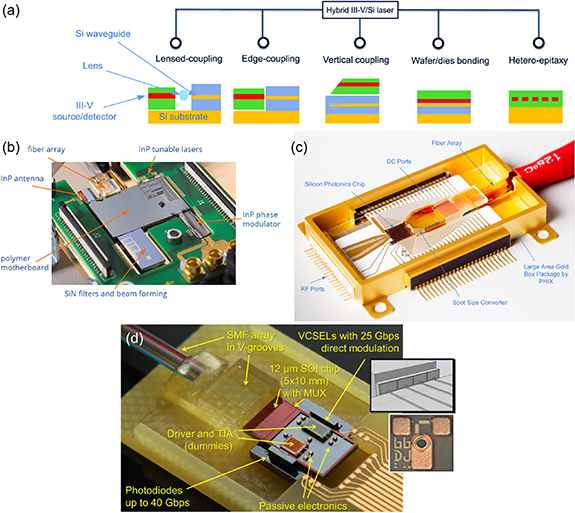

Repeatable and robust packaging, that is, the integration of various technologies into a single functioning device, will be critical. Fabrication and packaging of most photonic devices is still a niche industry or done on an individual basis. We need to better utilize the expertise of the broader integrated photonics industry, and its capabilities need to evolve and mature (e.g. multi-project wafer (MPW) runs, detector integration, fiber to chip to free space/detector packaging). Standardization within the photonic industry will dramatically reduce costs for future devices.

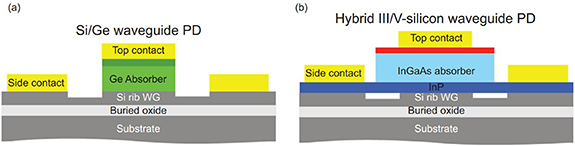

Detector integration with PIC or fiber devices will be key to realizing an integrated instrument. Semiconductor detectors operating at temperatures closer to where photonics are typically operated could be integrated in several ways: via single-pixel photodiodes on the chip, via an active chip that is flip-chipped onto the passive device, via linear arrays that are edge coupled to the PIC, or via 2D arrays that image the beams ejected out of the top surface of the PIC through grating or vertical couplers. These options need to be studied in more detail to understand the relative pros and cons and, for example, at which pixel count to transition from single-pixel photodiodes to arrays. Losses of the various outcoupling options (edge, vertical, grating couplers) need to be minimized over broad bands as well. The role of micro-optics needs to be better understood.

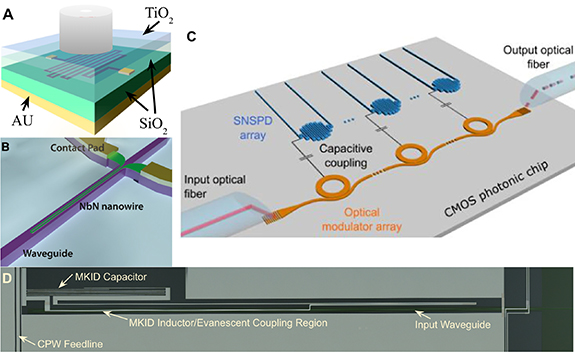

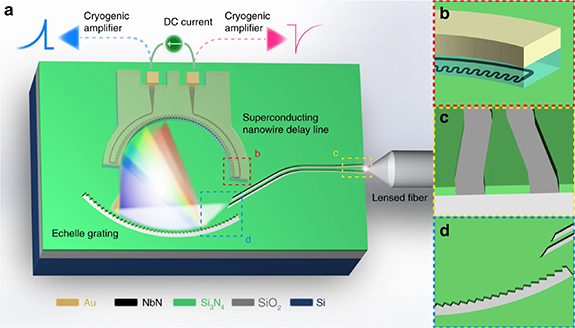

To push the sensitivity of detection, photonics could be integrated with superconducting detectors as well. Both microwave kinetic inductance detectors (MKIDs) and superconducting nanowire single photon detectors (SNSPDs) run at much lower temperatures (<4 K), but provide extreme sensitivity photon counting capabilities, free from dark current and read noise. The mismatch in materials between those used for PICs and detectors means further studies are needed to determine the optimum route to integrate them. In addition, superconducting detectors and circuit structures should be optimized for IFUs in the THz as well.

Community development

In addition to the technical developments outlined above, the community needs development as well. Firstly, there is a lack of astrophotonics experts primarily because universities nurture astronomers or photonics technologists. Although specialists are needed, the field also requires interdisciplinary experts that can understand the astrophysics science cases, formulate requirements and then design and realize photonic instruments. New bridges between workforce training centers and new pipelines to bring people into the field are needed. In addition, multidisciplinary centers such as innoFSPEC (Germany) fuel innovation in astrophotonics by bringing together expertise from different fields.

Secondly, the community is not representative of society at large, as is also the case for the parent field, astronomical instrumentation. As a first step towards addressing this, the community should leverage the initiatives developed by professional societies such as SPIE, Optica and AAS, which frequently hold discussions, training and workshops on diversity, equity and inclusivity at conferences. At the local level, revising admissions, hiring, retention and advancement practices to eliminate biases and increase diversity and equity is critical.

Realizing the potential of astrophotonics is contingent upon developing a talented, robust and diverse workforce. This workforce will not be developed without significant and directed attention and effort by the community.

Concluding remarks

The series of papers that follows elaborates on these five technical themes, each outlining the status, current and future challenges, and advances in the science and technology to meet the challenges for 24 key areas of the astrophotonics field. The technical developments specified in the roadmap will require development of the workforce, adding new interdisciplinary specialists and creating a more diverse and robust community. The technical developments outlined can most efficiently be realized through close collaboration between academia, which is better positioned to advance concepts and designs and to qualify devices, and industry partners, who are better positioned to provide fabrication and packaging. While astrophotonics has great science potential, currently the field is not funded at a level that can sustain large infrastructure upgrades for PIC, fiber, and packaging advancements. Instead, the field will benefit from continued investments by industries such as telecommunications, AR/VR headsets, photonics for satellites, and LIDAR for autonomous vehicles. Conversely, those fields will also benefit from astrophotonics-driven developments. Even so, the astronomical community must stay engaged with industry partners to provide them with guidance on the needs of the community as well as to be inspired by advances in vendor capability.

Acknowledgments

This work was supported by the National Science Foundation under Grant No. 2109232 and support for P Gatkine was provided by NASA through the NASA Hubble Fellowship Grant HST-HF2-51478.001-A awarded by the Space Telescope Science Institute, which is operated by the Association of Universities for Research in Astronomy, Incorporated, under NASA Contract NAS5-26555.

2. Symbiosis between adaptive optics and photonics: the path to fully integrated instruments

Philipp Hottinger1, Olivier Guyon2,3,4,5 and Rebecca Jenson-Clem6

1Landessternwarte, Zentrum für Astronomie der Universität Heidelberg, Heidelberg, Germany

2Subaru Telescope, National Astronomical Observatory of Japan, National Institute of Natural Sciences, Hilo, HI, United States of America

3Astrobiology Center of NINS, Osawa, Mitaka, Tokyo, Japan

4Steward Observatory, University of Arizona, Tucson, AZ, United States of America

5College of Optical Sciences, University of Arizona, Tucson, AZ, United States of America

6Department of Astronomy and Astrophysics, University of California, Santa Cruz, CA, United States of America

Status

Most 8–10 meter class telescopes are equipped with AO to compensate for atmospheric turbulence. All AO systems rely on wavefront sensor(s) (WFS) to measure optical aberrations, deformable mirror(s) (DMs) for optical correction, and computational methods for linking WFS measurements to DM commands. Yet their design details vary considerably depending on the telescope environment and the intended science application.

Laser guide stars (LGS) assisted wavefront sensing enables AO-corrected observations of fields with no bright star, such as the galactic center [61, 62]. The AO-corrected field of view (FoV) can be extended by using multiple DMs and WFSs [52] to support, for example, crowded-field astrometry or MO spectroscopy (e.g. Gemini's GEMS and GNAO, VLT's MAVIS [63] and Subaru's ULTIMATE [64]).

Extreme-AO systems (ExAO), on the other hand, are optimized for performance over a small field-of-view around bright natural guide stars, integrating high actuator count DMs with advanced wavefront control methods. Several such systems have been deployed for imaging exoplanets, including Gemini/GPI [65], VLT/SPHERE [66], Subaru/SCExAO [67], and Magellan/MagAO-X [68]. Focal plane WFSs can address remaining wavefront distortions such as non-common path aberrations (NCPA) and thermally-induced phase discontinuities [69], using a wide range of algorithms and approaches [70–72].

Thanks to excellent AO correction in the (near-)IR over a small FoV of ∼50'' on large telescopes, starlight can now be coupled efficiently into SMF for high angular and spectral resolution spectroscopy, with an efficiency closely linked to the Strehl ratio (SR). Jovanovic et al [50] achieved coupling efficiencies of over 50% with SRs of 60% in H-Band at Subaru/SCExAO, Crass et al [73] achieved >35% in Y- and J-Band with LBTI/iLocater, and Delorme et al [74] aim to reach coupling efficiencies of 60% in K- and L-Band with Keck2/KPIC. Photonic SM components extend SMF use to multiple telescopes [75] and to compact integral-field spectroscopy, with approaches including hexabundles [76], SCAR [77, 78], and 3D-M3 [54].

With the intermediate SRs of 10%–20% delivered in the near-IR by wide FoV (∼100'') AO systems, coupling into a single SMF becomes less efficient. Modal conversion with photonic lanterns (PLs) [7] can couple light into multiple SMFs to feed downstream SMF-based instrumentation such as fiber Bragg gratings (FBGs) for suppression of OH airglow emission lines in the H-Band [23]. Alternatively, few-mode fibers can make use of this partial AO correction, as in NIRPS [79], but these are prone to modal noise.

AO subsystems could individually be replaced by maturing photonic technologies, offering identical or enhanced functionalities in a miniaturized and integrated footprint. The manufacturing processes often allow in-situ alignment [80] that reduces operational complexity and increases optical stability. One of the most promising applications is the use of PLs as focal plane WFS with the potential to supplement well-established pupil plane WFSs as compact additions to sense residual aberrations (see section 3 for more details). Large systems would benefit from the reduced complexity and smaller footprint of these sensors, enabling better scalability for Multi-Object-AO and Multi-Conjugate-AO systems requiring multiple WFSs and thus making it easier to achieve better AO performance over a wide FOV. Goodwin et al [81] introduced the concept of a miniaturized Shack-Hartmann WFS with similar benefits.

To optimally exploit photonic technologies, they should not simply replace individual conventional components but rather be integrated as part of the science instrument and the AO system. This type of hybridization will make telescope optics more resource efficient as it reduces optical and mechanical footprint and complexity. One partially integrated approach was proposed by Dietrich et al [82] for reconstructing tip-tilt with a multi-core SMF equipped with a 3D-printed lenslet array, and a refined design using a micro-lens ring tip-tilt sensor (MLR-TT sensor) was tested on-sky by Hottinger et al [83]. There, wavefront sensing is integrated into a vital part of the science instrument, in this case with simultaneous SMF coupling. While tip-tilt sensing is only a limited functionality, it shows the advantages such an integrated approach can have, as it reduces complexity by replacing multiple bulk optic components while almost completely eliminating NCPA.

This interplay between AO and instrument has created demand for, and led to the establishment of, test environments that ease the transition from laboratory development to on-sky operation in order to mature existing concepts. These are core objectives of the SCExAO testbench at Subaru [67] and Canary at WHT [84].

Current and future challenges

Efficient injection of astronomical light into photonic devices requires exquisite wavefront quality and stability, beyond what is achieved with AO on large telescopes. Reliable, efficient and stable coupling will require further improvements in AO performance, with particular attention to vibration control and atmospheric dispersion correction. Proper optical matching of the telescope beam to the fundamental mode of the SMF is also important [85]; phase-induced amplitude apodization has already been shown to have the potential to significantly increase single mode (SM) fiber coupling [50, 86].
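As a rough illustration of the mode-matching argument, the sketch below evaluates the overlap between a uniformly illuminated, unobstructed circular pupil and a Gaussian approximation of the SMF fundamental mode. The unobstructed aperture, the Gaussian mode shape, the parameter name `beta` (pupil radius divided by the radius of the back-propagated mode) and the first-order scaling of the coupling with SR are assumptions made for this example. They are not taken from [85] or [50].

```python
import math


def coupling_efficiency(beta: float) -> float:
    """Overlap of a uniform circular pupil with a Gaussian mode.

    beta is the pupil radius divided by the 1/e field radius of the
    fiber mode back-propagated to the pupil (illustrative parameter).
    """
    return 2.0 * (1.0 - math.exp(-beta ** 2)) ** 2 / beta ** 2


def best_match(n_steps: int = 20000):
    """Brute-force scan of beta in (0, 3] for the maximum overlap."""
    betas = [3.0 * (i + 1) / n_steps for i in range(n_steps)]
    best = max(betas, key=coupling_efficiency)
    return best, coupling_efficiency(best)


def expected_coupling(strehl: float) -> float:
    """First-order estimate: ideal overlap scaled by the Strehl ratio."""
    return strehl * best_match()[1]


if __name__ == "__main__":
    beta_opt, eta_max = best_match()
    print(f"optimal beta = {beta_opt:.3f}, ideal coupling = {eta_max:.3f}")
    print(f"estimate at SR = 0.6: {expected_coupling(0.6):.2f}")
```

Under these assumptions the ideal overlap peaks near 81%, so even a perfectly corrected beam loses close to a fifth of the light unless the pupil illumination is reshaped, which is the motivation for apodization optics.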

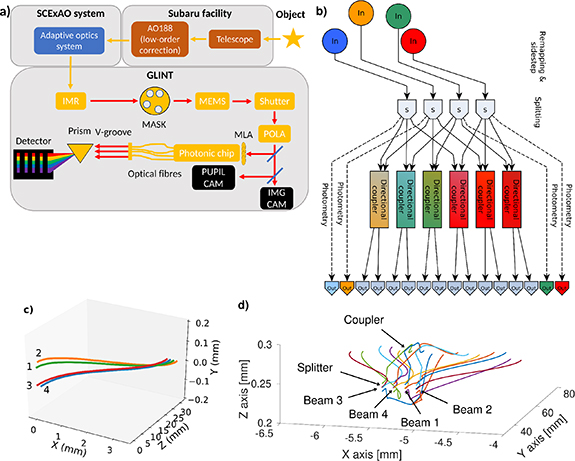

Modern AO systems' demanding wavefront quality requirements on large telescopes drive them to employ fast, sensitive and accurate WFSs driving high-count DMs, with higher optical complexity and use of advanced wavefront control algorithms. Precise calibration of system components (WFS, DM) and control of residual NCPA are becoming increasingly critical. This is especially essential in HCI applications requiring starlight suppression by nulling or coronagraphy to support the direct imaging and spectroscopic characterization of exoplanets. Photonic technologies can provide the high performance interferometric wavefront sensing solutions to meet this challenge, combining high sensitivity with large dynamic range, and providing wavelength diversity. This interferometric approach to wavefront sensing is well-suited for a second-stage high-precision WFS/C following a more conventional AO correction so that starlight is efficiently coupled to the photonic device(s). These benefits have recently been demonstrated on-sky with the PL [87] and the GLINT instrument [88, 89]. To fully realize this potential, the interferometric WFS output signals will need to be used for real-time active wavefront control, either by DM actuation or in-chip phase modulation. Wavefront information is then extracted by direct measurement of interferometric phases, as is done in multi-telescope interferometers.
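One common way to measure an interferometric phase directly is four-point (ABCD) sampling, in which the fringe is read out at four phase offsets a quarter of a wave apart. The text above does not specify which estimator is used, so the sketch below is only an assumed example, and the function names `abcd_samples` and `abcd_estimate` are hypothetical.

```python
import math


def abcd_samples(phase: float, visibility: float, flux: float = 1.0):
    """Simulate four fringe samples taken at 0, 90, 180 and 270 degrees."""
    return tuple(
        flux * (1.0 + visibility * math.cos(phase + k * math.pi / 2.0))
        for k in range(4)
    )


def abcd_estimate(a: float, b: float, c: float, d: float):
    """Recover fringe phase (rad) and visibility from the four samples."""
    real = a - c  # proportional to visibility * cos(phase)
    imag = d - b  # proportional to visibility * sin(phase)
    phase = math.atan2(imag, real)
    visibility = 2.0 * math.hypot(real, imag) / (a + b + c + d)
    return phase, visibility


if __name__ == "__main__":
    samples = abcd_samples(phase=0.7, visibility=0.5, flux=3.0)
    print(abcd_estimate(*samples))
```

Because the estimate uses differences and a flux-normalized ratio, it is insensitive to the overall brightness in this noiseless model. A real sensor would also need to account for detector noise and unequal channel throughputs.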

Manufacturing capabilities of photonic components are often still insufficient for astronomy applications, with most new developments driven by the much more broadly funded telecommunication industry which usually has less demanding requirements on optical throughput, pathlength control, and broadband performance. The astronomical community has the chance to contribute in these new areas, expanding the range of applications of photonic devices. When astrophotonics aims to integrate or replace full-scale AO systems, scaling of these devices also becomes an issue as the number of coherent elements scales with  and so will the required number of individual sensor readout and pathlength control units. In the near future, photonics-based AO systems could be deployed as small units downstream of AO systems using bulk optics, while full-scale photonics AO systems on large telescopes will require more development.

Practical challenges of astrophotonic technologies need to be considered, as these are often a new class of manufactured devices that need special attention. For small-scale refractive components, 3D-printed microlenses fabricated using two-photon lithography allow high flexibility in free-form shapes and enable in-situ printing that provides integrated alignment [80]. But this also means that care needs to be taken in handling these devices during transportation and deployment, as their structural integrity and the bond joint between components are vital to their performance. SMFs feature a mode-field diameter (MFD) that varies slightly between manufacturing batches. For maximum coupling between separate fibers, the MFD needs to be matched. Ultra-fast laser inscription [90, 91] can be used for precise waveguide design and is continuing to mature, but many manufacturing challenges such as reliable waveguide printing, limited refractive index contrast, and high attenuation still remain. These considerations will be particularly important when astrophotonic devices are deployed at space-based observatories, where vibrations, temperature, pressure and background radiation pose additional challenges.

Advances in science and technology to meet challenges

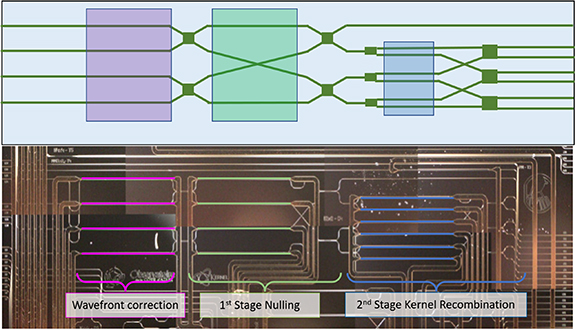

There are two main roads towards an increased symbiosis between astrophotonic instruments and AO. Firstly, individual photonic components can be employed to augment conventional systems, for example by providing a second stage WFS, or by performing a science measurement well suited for photonic approaches downstream of high performance AO correction. In the long term, photonic devices can potentially also replace parts of conventional AO systems, for example as the main WFS. Secondly, photonic components will be tightly integrated together as a system performing a wide range of functions, leading to more compact, less complex instrument systems that enable high automation both in manufacturing as well as in operation. Some concepts enable new optical approaches potentially surpassing the performance and capability of conventional systems. Figure 3 brings together these points, showing the existing individual photonic components that correspond to or can supplement conventional systems (center column) as well as integrated concepts where photonic components are part of a larger instrument (right column).

Figure 3. Overview of conventional adaptive optic (AO) systems, potential photonic counterparts and additions, as well as integrated instrumental concepts. Individual concepts: Thermo-coupled phase shifter. Reprinted with permission from [92] © The Optical Society. Sparse aperture masking (SAM) interferometry fringe tracker WFS. Reprinted with permission from [93] © SPIE. Photonic lantern WFS. Reproduced from [53]. CC BY 4.0. Photonic lantern with reformatter. Reproduced from [94]. CC BY 4.0. GLINT nulling interferometer. Reproduced from [12]. CC BY 4.0. Gravity beam combiner. Reproduced with permission from [75]. Microlens-ring tip-tilt (MLR-TT) sensor. Reproduced with permission from [83] © The Optical Society. Integrated photonic instrument illustration by Phil Saunders. Reproduced with permission from [60] © The Optical Society.

A general challenge in the development of astrophotonic components is the adaptation of photonic components to the requirements of astronomical observations. Three requirements that are significantly less stringent in telecommunication application play a central role in astrophotonics. Firstly, the wavelength performance needs to be enlarged to broader bands as both instrument sensitivity and science goals require throughput and optical performance over a larger wavelength range. Secondly, throughput of astrophotonic devices has only a very small loss budget as most scientific observations are performed in the photon starved regime. Thirdly, environmental stability cannot always be guaranteed in observatories, imposing higher tolerances on temperature, humidity, and mechanical constraints.

The deployment of the photonic beam combiner at GRAVITY [75] has indeed shown that photonics can already individually replace conventional components and uniquely enable high precision astrophysical measurements. In general, evolution for these components is slow as these concepts need to prove their optical performance and optical efficiency before consideration for large scale investments. Adoption of astrophotonic solutions in major astronomical instrumentation projects relies on successful proof-of-concept demonstrations, preferably performed on-sky. To boost development, access to and support from state-of-the-art AO instrumentation on large telescopes should be prioritized.

As outlined in the previous sections, astrophotonic technologies are already bringing advances in wavefront sensing, although they currently support a moderate number of wavefront modes. Two main approaches are on the horizon. Firstly, interferometric wavefront sensing between multiple telescopes for facilities like VLTI/GRAVITY and CHARA or within the same large telescope aperture as demonstrated in FIRST [95] and GLINT [12], offering nanometer-level phase precision simultaneously with spectral resolution thanks to wavelength-dispersed fringes. Secondly, PLs and MCFs can reduce optical elements and thus provide a simple high-throughput alternative to bulk optics slit formatting, light transport, etc. Additionally, in these applications, the photonic device can perform low-order wavefront sensing of a few modes and operates as a final-stage WFS downstream of the high-order AO system. In the near future, these few-mode devices could be integrated with the kHz-speed AO correction by feeding back into the upstream AO system.

For WFS control, communication between different subsystems must be established. The use of machine learning (ML) algorithms is being explored in a number of projects and shows potential to solve some of the challenges associated with the analysis of complex sensor data, as demonstrated with the PL-WFS by [53]. The use of these algorithms is already widespread in many real-time applications outside astronomy, and will need to be accommodated in observatories as well. The astronomical context will require operation in the kHz regime with a wide variety of input data from both optical and environmental sensors such as accelerometers, temperature sensors, and seeing measurements. The computational infrastructure must be expanded to fulfill this need, including the corresponding telemetry data filtering and storage, which is needed for both analysis and future algorithm training, as well as specialized ML inference hardware. Linear and higher order reconstruction methods have also been explored by [96].

Photonic devices can integrate wavefront correction as on-chip phase shifters, a highly miniaturized equivalent to optical delay lines used in interferometers. While current interferometer systems use mechanically driven fiber delay lines, photonics provides several solutions that can potentially offer control authority into the kHz regime, including thermoacoustic devices [97] and thermo-coupled [92], EO [98] and piezo-actuated [99] phase shifters. In addition to classical wavefront correction, fast photonic-based pathlength modulation can reveal incoherent exoplanet light within the coherent starlight residual when performing high-contrast imaging (HCI).

Photonic components can both transport and modify the transported light. This enables combinations of these functions where a fraction of the guided photons is used for sensing. Such integration of AO and science acquisition in a single compact photonic device will allow for new self-calibrating and self-tuning capabilities to be a core feature of the instrument design. This architecture is particularly attractive for HCI, where a photonic nulling chip could iteratively reconfigure its internal phase delays [100] or drive external DM(s) to maintain optimal broadband null depths. By integrating science measurement and WFS functions within the same photonic chips, the stable relationship between WFS and science outputs can be measured and leveraged for self-calibration of science measurements down to the photon noise residual [88].

Concluding remarks

Given the rapid pace of development of photonics applications to AO and astronomical instrumentation over the last decade, and the growing number of successful on-sky demonstrations, photonic technologies and devices are poised to play an increasing role in astronomy. Progress in this area is driven by advances in underlying core technologies, maturation of instrument designs incorporating emerging photonics solutions, and access to increasingly powerful AO systems providing the suitable wavefront quality on large astronomical telescopes.

Integration of AO and astronomical measurement functions in a single photonics-based system will be particularly enabling, providing in a compact format a rich set of functionalities with high stability and accurate calibration of science measurements. In order to realize this potential, further developments will need to include both lab prototyping activities and on-sky demonstrations. In this paper we have summarized the wide variety of components involved, hinting at the challenges that lie ahead when all these systems are to be combined. Sustained collaborative efforts between photonics experts and on-sky AO-equipped development platforms and photonics-capable instruments have been, and will continue to be, particularly instrumental in maturing photonics systems for astronomy.

Acknowledgments

We thank R J Harris for the fruitful discussions on the topic. P H is supported by the Deutsche Forschungsgemeinschaft (DFG) through Project 326946494, 'Novel Astronomical Instrumentation through Photonic Reformatting'. O G acknowledges support from NASA Grant 80NSSC19K0336 and JSPS Grant 21H04998 supporting development of advanced WFS techniques including photonic devices. O G and R J-C acknowledge support from the Heising-Simons foundation.

3. Photonic lantern wavefront sensing and control

Barnaby Norris1, Jonathan Lin2, Robert J Harris3,4, Aline N Dinkelaker5 and Momen Diab6

1Sydney Institute for Astronomy (SIfA), School of Physics, The University of Sydney, Sydney, Australia

2Department of Physics and Astronomy, University of California, Los Angeles, CA, United States of America

3Max-Planck-Institute for Astronomy, Heidelberg, Germany

4Department of Physics, Durham University, Durham, United Kingdom

5Leibniz Institute for Astrophysics Potsdam (AIP), Potsdam, Germany

6Dunlap Institute for Astronomy and Astrophysics, University of Toronto, Toronto, Canada

Status

Astronomy has been revolutionized by the adoption of AO [101], where the wavefront of light, corrupted by Earth's turbulent atmosphere, is sensed, analyzed and corrected. This allows diffraction-limited, HCI of targets such as exoplanets, and is key to enabling the full potential of the upcoming Extremely Large Telescopes. Existing AO systems generally measure the wavefront error at the pupil plane, using WFSs such as a Shack–Hartmann or pyramid sensor [102]. These sensors are blind to highly problematic modes such as petaling (phase shear at the pupil plane around the telescope spiders [103]), and suffer from NCPA with respect to the science focal plane. In a standard imaging system, the focal plane image alone cannot be used to sense the wavefront since it contains only intensity (not phase) information about the PSF.

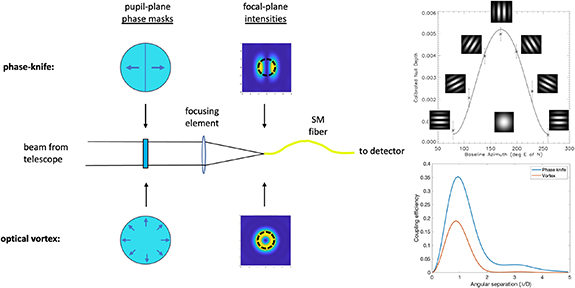

Placing a PL at the focal plane allows the complex amplitude of the PSF, and hence the wavefront, to be directly measured (see figure 4 for examples). A PL is a passive device that consists of sets of waveguides with different numbers of modes, between which light is transferred [7, 20], see also section 4. Of particular interest is the case where the MM waveguide at the input tapers into several SM waveguides at the output, the number of which matches or exceeds the number of spatial modes at the input. The excitation of modes in the MM end of the PL is a direct function of the spatially-dependent complex amplitude of the injected PSF. If the transfer function of the PL is known, the wavefront can be reconstructed from the PL's SM outputs. But this transfer function is only known by measurement post-fabrication, since the fabrication process is not deterministic.

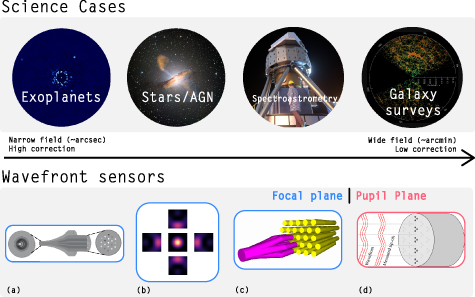

Figure 4. Different photonic lantern wavefront sensor designs (bottom) and their potential applications (top): the highest level of correction/narrow field-of-view on the left, and lower level of correction/wide field-of-view on the right. Blue indicates focal plane and red indicates pupil plane WFS. Image credits for the insets (top row, 'Science Cases'): 'Exoplanets': Jason Wang (Northwestern)/William Thompson (UVic)/Christian Marois (NRC Herzberg)/Quinn Konopacky (UCSD), 'Stars/AGN': ESO/WFI (Optical); MPIfR/ESO/APEX/A.Weiss et al (Submillimetre); NASA/CXC/CfA/R.Kraft et al (x-ray), 'Spectroastrometry': ESO, 'Galaxy surveys': M. Blanton and SDSS. Insets for the wavefront sensors (bottom row): (a) Reproduced from [53], CC BY 4.0. (b) Reproduced with permission from [105]. (c) Reprinted with permission from [113] © The Optical Society. (d) Reproduced from [106], CC BY 4.0. The source images for the 'Science Cases' insets and references [53, 106] are licensed under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/).

The PL-WFS offers several advantages: it is sensitive to any mode which affects the PSF, including modes to which pupil-plane WFSs are blind, it makes optimal use of detector pixels (one pixel per mode) minimizing detector noise, and the entire device fits within a standard fiber connector making it ideal for MO systems. The output can also be spectrally dispersed via a prism or grating (see sections 6 and 7), to achieve wavelength-resolved wavefront sensing, useful in breaking phase-wrapping degeneracy (such as seen in petaling) and to measure atmospheric scintillation. It can also be used for PSF reconstruction. Perhaps most significantly, a fully photonic device has high stability and is ideal for optimally injecting light into a SM fiber (for subsequent spectroscopy [104], interferometry [12], etc) wherein it offers a truly zero NCPA WFS (see hybrid MSPL below).

The relationship between low-order wavefront modes (such as Zernike aberrations) and PL outputs has been shown [105], and wavefront reconstruction has been demonstrated both in the laboratory and on-sky. These demonstrations include a 19-mode PL-WFS with a neural network reconstructor using a multi-core-fiber based PL [53] and a pupil plane low-order WFS [106].

Research is underway to evaluate the efficacy of different PL types and algorithms for WFSing. Both optical-fiber-based [7] and ultrafast laser inscribed (ULI) lanterns [107] are being investigated, as well as MSPLs [108, 109]. While in a standard PL each SM output is a complex linear combination of the complex input mode amplitudes, in a MSPL a one-to-one mapping between the modes excited at the MM port and the SMFs can be engineered [19]. This can ideally match the modes coupled to those in the PSF [110]. However, the number of modes that MSPLs can multiplex is limited by device length and core diameter, with the length of the required taper proportional to the square of the number of modes [111], and therefore MSPLs are not expected to be effective in WFSing applications [96].
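The contrast between the two mappings can be illustrated with a toy transfer-matrix model. In the sketch below, a unitary DFT matrix stands in for a standard PL that mixes all input modes, and an identity matrix stands in for an ideal MSPL. Both matrices, the three-mode size and the input amplitudes are assumptions chosen for clarity and do not describe any fabricated device.

```python
import cmath
import math


def mixing_matrix(n: int):
    """Unitary DFT matrix, a stand-in for a standard lantern that mixes modes."""
    w = cmath.exp(2j * math.pi / n)
    return [[w ** (j * k) / math.sqrt(n) for k in range(n)] for j in range(n)]


def selective_matrix(n: int):
    """Identity matrix, a stand-in for an ideal mode-selective lantern."""
    return [[1.0 if j == k else 0.0 for k in range(n)] for j in range(n)]


def output_intensities(transfer, mode_amplitudes):
    """Detected flux per SM output: squared modulus of each output amplitude."""
    return [
        abs(sum(t * c for t, c in zip(row, mode_amplitudes))) ** 2
        for row in transfer
    ]


# Two inputs with identical mode powers but a different relative phase.
in_phase = [0.8, 0.6, 0.0]
quadrature = [0.8, 0.6j, 0.0]

standard = mixing_matrix(3)
mspl = selective_matrix(3)

if __name__ == "__main__":
    for name, device in (("standard", standard), ("MSPL", mspl)):
        print(name, output_intensities(device, in_phase),
              output_intensities(device, quadrature))
```

In this toy model the mixing device returns different output fluxes for the two inputs, while the selective device returns the same mode powers for both, because its intensity outputs carry no record of the relative phase between modes.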

A so-called hybrid MSPL can circumvent this limitation; here, all light propagating in the fundamental (LP01) mode is routed to a single output, and higher order modes are used for WFSing. In this case, the higher order outputs drive the AO system to maximize coupling in the LP01 mode, which is then routed to the SM science instrument. Alternative modes could be made selective instead if desired. Importantly, this selectivity is maintained over a broad bandwidth [112], such as the 1.5 µm–1.8 µm range demonstrated in this reference.

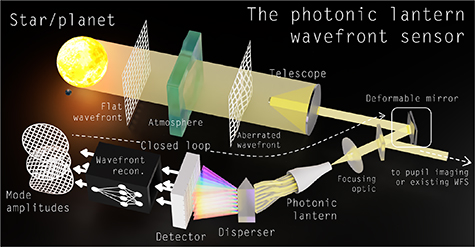

With ongoing developments and diverse configurations, PLs have the potential to provide solutions for a broad range of WFS applications (see figure 5 for an example of PL WFS integration).

Figure 5. Overview of a PL-WFS system. Light arrives at the telescope distorted from the atmosphere. After the telescope mirrors, the light is guided to a photonic lantern, where it is distributed between different channels and exits at multiple outputs. Here, it can be spectrally dispersed and is then detected. The data can be used directly for scientific analysis as well as wavefront reconstruction (e.g. using a neural network), with the results feeding back to a deformable mirror earlier in the optics chain for wavefront correction.

Current and future challenges

One difference to overcome between the PL-WFS and traditional WFSs is that PL-WFSs are non-linear. Since the measured quantity is the SM output intensity (the square of the complex amplitude), the transfer function mapping wavefront phase to output flux is non-linear (and non-monotonic for large phase errors [53], since the coupling is cycling in a sinusoid-like fashion between constructive and destructive interference as phase-difference increases). Therefore, for wavefront reconstruction (e.g. for closed-loop AO) the standard linear reconstruction algorithms only work over small wavefront error ranges, where the transfer function is locally linear. One option is to use a higher order (e.g. quadratic) reconstruction algorithm [96] or to use a fully non-linear inference model (e.g. neural network [53]). An alternative approach is to instead optimize for PL linearity, either altering the PL itself or through additional optics such as phase masks and beam recombiners.
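The limited range of a linear reconstructor can be seen in a deliberately simple stand-in for a lantern: an ideal 50/50 coupler fed by two equal beams, whose differential output flux follows the sine of the relative phase. The coupler model, the finite-difference calibration and all function names are assumptions for this example. A real PL has many more modes and a measured, device-specific response.

```python
import cmath
import math


def coupler_outputs(phase: float):
    """Intensities at the two outputs of an ideal 50/50 coupler.

    Two equal-amplitude beams enter with a relative phase `phase` (rad).
    """
    e1 = 1.0 / math.sqrt(2.0)
    e2 = cmath.exp(1j * phase) / math.sqrt(2.0)
    out1 = (e1 + 1j * e2) / math.sqrt(2.0)
    out2 = (1j * e1 + e2) / math.sqrt(2.0)
    return abs(out1) ** 2, abs(out2) ** 2


def signal(phase: float) -> float:
    """Differential flux, equal to sin(phase) for this ideal coupler."""
    i1, i2 = coupler_outputs(phase)
    return i2 - i1


def calibrate_slope(step: float = 1e-4) -> float:
    """Finite-difference response around zero phase (the linear calibration)."""
    return (signal(step) - signal(-step)) / (2.0 * step)


def linear_estimate(phase: float) -> float:
    """Phase estimate from the linear model calibrated at zero phase."""
    return signal(phase) / calibrate_slope()


if __name__ == "__main__":
    for true_phase in (0.05, 0.5, 1.5, 2.0):
        est = linear_estimate(true_phase)
        print(f"true {true_phase:4.2f} rad -> linear estimate {est:5.3f} rad")
```

For small phases the linear estimate tracks the true value closely. Beyond a quarter wave the signal turns over, so a larger aberration yields a smaller estimate, which is the non-monotonic behavior that motivates higher order or fully non-linear reconstructors.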

While measurements of on-sky wavefronts have been demonstrated [87], the next step is to deploy a PL-WFS in a closed loop configuration on-sky. The current challenge is deploying a suitable (e.g. non-linear) wavefront reconstruction algorithm that can operate with sub-millisecond latencies and integrating it into existing AO software, while running in parallel with existing high-order WFSs. Additionally, while the compact size of a PL-WFS makes it ideal for MO WFSing, algorithms and instruments to exploit this are yet to be developed.

To date, only low-order (<20 modes) PL-WFSs have been demonstrated. For high-order AO applications such as speckle suppression, many more modes would be advantageous. PLs with many hundreds of modes have been produced (e.g. using a cascaded design [21]), but not yet routinely. The difficulty of implementing algorithms for 100- or even 1000-mode PL WFSing is yet to be determined. An alternative could be to use microlens arrays to reduce the number of modes in each lantern, such as the WFS proposed by [106].

A high mode-count PL becomes especially important for imaging, wherein each mode corresponds to one spatial element of the image. This is an important goal, as obtaining a full complex image of the science target would allow speckles and the science object to be optimally disambiguated—currently one of the greatest challenges in HCI [114]. A suitable architecture (and algorithm) to measure this, along with the coherence properties of the source, is an active area of research. One possibility is to interferometrically recombine the outputs of the PL to measure their complex amplitudes [113, 115]. Alternatively, the interferometry could take place within the PL itself, with the number of PL outputs being made greater than the number of input modes, thus oversampling, and encoding phase, amplitude and spatial coherence directly into the output intensities.

While the design parameters of fiber-based PLs can be broadly specified, the tapering-based fabrication process means that the exact transfer function cannot be specified, and tapering parameters are set empirically [116]. A precise, deterministic fabrication method would enable more specialized and optimized designs. Deterministic fabrication would address the mismatch between the 'ideal' PLs assumed in simulations, and real PLs, which feature slightly mispositioned cores, non-circular claddings, and other defects. The simulation process also faces other challenges, such as the high computational cost and approximations of standard beam propagation algorithms (such as the beam propagation method (BPM)), reducing the accuracy of future high mode-count models.

One promising application is the use of the PL-WFS (possibly with hybrid MSPL fiber injection) on arrays of small telescopes. Their small apertures offer high SRs with only low-order correction such as that provided by a MSPL. Light from multiple telescopes could then be efficiently injected into SMFs and (in)coherently combined for diffraction-limited spectroscopy, or interferometry. A general challenge, however, is the overall lower flux collected by these smaller telescopes, requiring the PL-WFS system to match the brightness limit of any other WFS in the system.

Advances in science and technology to meet challenges

More accurate, deterministic control of PL parameters during fabrication would enable devices to be manufactured whose properties match those in the simulated design. Most mature photonic fabrication techniques such as photolithography are limited to two-dimensional structures, making them unsuitable for PLs. Current fiber-based PLs are produced via tapering on fusion splicing glass processing machines, making reliable and repeatable fabrication difficult. Increased demand for PLs in industry (e.g. telecommunications) may lead to PL-oriented glass processing machines. ULI is a promising technology, allowing the use of more arbitrary structures to construct the PL. This technology is still developing, and generally has lower refractive index contrast and worse control than fiber-tapering methods, but the field is evolving rapidly [90, 91].

For any fabrication technique, accurate simulations require a better understanding of how errors in the manufacturing process propagate to actual WFS behavior. For end-to-end simulations, the source of wavefront error represented should be refined to incorporate errors from a wider range of on-sky and instrumental sources. Advances in numerical simulation algorithms are also needed, both for increases in accuracy (e.g. adopting a fully vectorial BPM technique or finite-difference time-domain method) and speed. Fully differentiable numerical models would also be extremely useful to enhance the optimization processes. Together these will allow production of devices with precisely optimized imaging/wavefront sampling.

Advancements in AO algorithms are required, including optimization of non-linear algorithms (e.g. neural networks) at low-latency kilohertz rates for closed-loop operation. The performance of these algorithms under a range of conditions must be carefully characterized to achieve the reliable, routine operation demonstrated by standard AO algorithms. Beyond WFSing, performing full coherent imaging (simultaneously measuring the image, the wavefront, and its spatial coherence properties) will require innovation in analysis and image reconstruction. New detector technologies, especially photon-counting, wavelength-resolved detectors such as MKIDs (see section 25), would also offer a huge advantage as they have effectively zero read-noise and would allow high spectral resolution (acting as cross-dispersion for an Echelle spectrograph), but are currently limited to low photon-count rates.

Due to their stability, compact form factor and high sensitivity due to optimal detector usage, PL WFSing may become invaluable for space-based telescopes. Since they operate in the low wavefront error regime, a high mode-count PL could be the only WFS (both for speckle control and mirror phasing), relaxing the need for separate pupil-plane WFS and optics. As highlighted by the Roman space telescope coronagraph technology demonstration [117] the success of future high resolution space telescopes will be contingent on WFSing and control. WFSing and control is also critical for optical communications between space and ground. Before PLs can be deployed in these contexts, these components will have to be space qualified by means of environmental testing and technology demonstrator missions using small satellites.

Concluding remarks

The use of PLs for wavefront sensing is a novel application and has already seen a surge of interest. Advantages include the ability to perform direct measurements of both phase and amplitude at the focal or pupil plane, inherent compactness and stability, optimal use of detector pixels, and the ability to perform optimal injection of starlight into one SMF (acting as a zero non-common-path WFS). While the concept has been demonstrated in the laboratory and in open-loop on-sky experiments, further development is required to enable widespread adoption. This includes implementing low-latency non-linear control algorithms and developing deterministic, accurate fabrication methods coupled with precise simulations. Full coherent imaging (wavefront and image measurement) is also on the horizon. There is a strong research effort by multiple groups worldwide to investigate these aspects, and programs to test PLs on major telescopes are already underway.

Acknowledgments

B R M N acknowledges support from the Australian Research Council, Discovery Early Career Researcher Award (DE210100953).

R J H would like to thank the Deutsche Forschungsgemeinschaft (DFG) for their support through Project 326946494, 'Novel Astronomical Instrumentation through photonic Reformatting'.

A N D acknowledges support by the Bundesministerium für Bildung und Forschung (BMBF) through the Project 03Z22AN11.

This material is based upon work supported by the National Science Foundation Graduate Research Fellowship Program under Grant No. DGE-2034835. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. This work was also supported by the National Science Foundation under Grant No. 2109232.

4. Spectroscopic applications enabled by photonic lanterns

Sergio Leon-Saval1, Steph Sallum2, Stephen Eikenberry3 and Kevin Bundy4

1Sydney Astrophotonics Instrumentation Laboratory (SAIL), School of Physics, The University of Sydney, Sydney, Australia

2Department of Physics and Astronomy, University of California, Irvine, CA, United States of America

3CREOL, The College of Optics and Photonics, University of Central Florida, Orlando, FL, United States of America

4Department of Astronomy and Astrophysics, University of California, Santa Cruz, CA, United States of America

Status

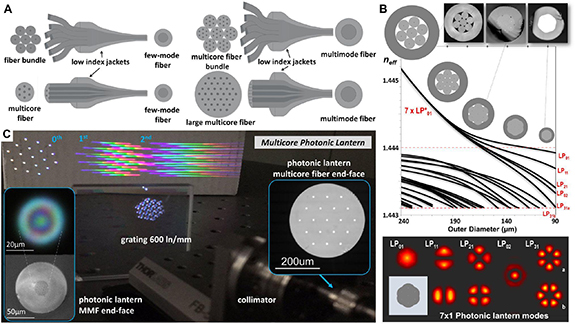

Conventional astronomical fiber-fed spectrographs use MM optical fibers to feed telescope light to a disperser. These fibers can be sized to match the width of the point-spread function (PSF), providing more efficient light capture than their telecom counterparts, SMFs [118]. However, these spectrographs are larger and more limited in spectral resolution than diffraction-limited platforms fed with SMFs. Such SMF-fed instruments have recently been demonstrated for precision RV measurements [119–121], for example, but they require high-performance AO systems to prevent significant fiber coupling losses [50, 122]. PLs offer a powerful solution for efficient diffraction-limited spectroscopy by converting MM inputs to SM outputs (figure 6(A) and (C)). This reduces AO performance requirements, enabling near-diffraction-limited spectroscopy at the outputs [123–125]. As a result, PLs have recently emerged as a viable technology for the following applications: spectro-astrometric imaging; efficient combination of light from small telescope arrays; and massively-multiplexed spectroscopy for wide fields of view.

Figure 6. (A) Schematics of all-fiber photonic lanterns, including: few-moded photonic lanterns conventionally fabricated using single-mode fibers or a single multicore (left), and highly-multimode photonic lanterns fabricated from one or several multicore fibers (right). (B) Simulation of the modal evolution as a function of the waveguide tapering factor, including cross-sectional images of a 7 × 1 photonic lantern along the transition and the supported simulated modes. (C) Actual images of a multicore photonic lantern device 'converting' multimode light in the visible (450–750 nm) to an array of single-mode outputs wavelength-dispersed by a volume holographic grating in the lab.

Spectroastrometry (SA; [126]) involves using spectral centroid shifts to infer the presence of wavelength-dependent morphology within the diffraction limit (as depicted in figure 7(C)). Traditionally carried out with slit spectrographs both with and without AO (e.g. [127]), conventional SA has an astrometric precision set by the PSF width and signal-to-noise ratio, with precision <1 mas for the best AO experiments [128]. Even AO-corrected SA can suffer from PSF variability (e.g. [129]), and PLs have recently been demonstrated in simulation as an avenue for recovering SA signals in the presence of variable correction [130] (figure 7(C)). For example, hydrogen line signals from accreting planets can be recovered for achievable tip-tilt jitters of 0.1 λ/D. Furthermore, since SM output intensities encode pupil plane phase variations, PL spectro-astrometry does not suffer from the position angle degeneracies present in conventional approaches, making it a promising technique for complex scenes such as resolved circumstellar disks and outflows.
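The dependence of conventional SA precision on PSF width and signal-to-noise ratio can be illustrated with a short calculation. The sketch below is not taken from the cited works: it assumes a Gaussian PSF with FWHM ≈ λ/D and a photon-noise-limited centroid, for which a common rule of thumb is σ ≈ FWHM/(2.355 × SNR). The 8 m aperture and the Pa-β wavelength of 1.282 µm are illustrative choices, and the function names are hypothetical.

```python
import math

# Milliarcseconds per radian.
MAS_PER_RAD = 180.0 / math.pi * 3600.0 * 1000.0


def diffraction_fwhm_mas(wavelength_m, diameter_m):
    """Approximate the PSF FWHM as lambda/D, in milliarcseconds (assumption)."""
    return wavelength_m / diameter_m * MAS_PER_RAD


def centroid_precision_mas(fwhm_mas, snr):
    """Rule-of-thumb centroid uncertainty for a Gaussian PSF in the
    photon-noise limit: sigma = FWHM / (2.355 * SNR)."""
    return fwhm_mas / (2.355 * snr)


# Illustrative case: Pa-beta (1.282 um) on an 8 m telescope.
fwhm = diffraction_fwhm_mas(1.282e-6, 8.0)
print(f"lambda/D = {fwhm:.1f} mas")
for snr in (10, 30, 100):
    sigma = centroid_precision_mas(fwhm, snr)
    print(f"SNR = {snr:3d}: centroid precision ~ {sigma:.2f} mas")
```

Under these assumptions, sub-milliarcsecond precision requires only a modest signal-to-noise ratio per spectral channel, provided the PSF is stable. PSF variability is the limitation that the PL approach is intended to address.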

Figure 7. Spectroscopic photonic lantern applications. (A) Schematic layout of the PolyOculus telescope array concept. An array of small autonomous telescopes point to the same target on the sky, with fiber optic feeds coupled via high-efficiency photonic lanterns into a single fiber, which feeds into a scientific detector. (B) Illustration of the state of the art in massively-multiplexed spectrographs, future instruments enabled by photonic lanterns and chip spectrometer arrays, and their anticipated science outcomes. (C) Simulation of photonic lantern spectroastrometry. Companions with excess flux relative to the primary star at specific wavelengths (left) can be detected within the classical diffraction limit by measuring spectral centroid shifts. These signals can be modeled as Zernike modes, illustrated in the right panels showing an injected and recovered protoplanet with Pa-β excess emission (adapted from [130]). Reprinted with permission from [Xanadu X8 quantum photonic processor: Xanadu]. Reproduced from [130].

A second PL application involves combining light from arrays of incoherently-linked smaller telescopes. Such arrays have recently emerged as a reduced-cost (by ∼10× per unit area) method of increasing telescope collecting area for spectroscopy of faint sources [131–134]. In the PolyOculus implementation (figure 7(A)), packs of semi-autonomous, small (25–40 cm), off-the-shelf telescopes comprise the array [132]. PLs that incoherently combine several multi-/few-mode fibers into a single MM fiber offer particular advantages for combining the light, because they preserve etendue without oversized downstream pupils [132]. PolyOculus has demonstrated '7-pack' PL light combiners with >90% efficiency across the visible [135], producing a collecting area equivalent to a ∼0.9 m telescope (figure 7(B)). Furthermore, using multiple seven-pack arrays, the PL outputs can be spliced to optical fibers that then feed into another PL combiner. This approach can be extended, providing a hierarchical scaling to build collecting areas equivalent to the 30–40 m diameter mirrors of upcoming Extremely Large Telescopes.
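The collecting-area arithmetic behind this hierarchical scaling can be sketched as follows. This is an illustration only: it assumes identical unit telescopes of 0.35 m diameter (within the 25–40 cm range quoted above), incoherent addition of collecting area, and a hypothetical uniform throughput of 0.9 per combiner stage. The function names are not from the PolyOculus papers.

```python
import math


def equivalent_diameter_m(n_telescopes, unit_diameter_m, throughput=1.0):
    """Diameter of a single aperture with the same effective collecting area
    as n identical telescopes whose light is added incoherently."""
    return math.sqrt(n_telescopes * throughput) * unit_diameter_m


def hierarchy_for_target(target_diameter_m, unit_diameter_m, pack_size=7):
    """Smallest number of combiner levels (each merging `pack_size` feeds)
    whose total telescope count reaches the target collecting area."""
    n_required = (target_diameter_m / unit_diameter_m) ** 2
    levels, n_telescopes = 0, 1
    while n_telescopes < n_required:
        n_telescopes *= pack_size
        levels += 1
    return levels, n_telescopes


unit = 0.35  # m, assumed unit telescope diameter
print(f"one 7-pack: {equivalent_diameter_m(7, unit):.2f} m equivalent")

levels, n = hierarchy_for_target(30.0, unit)
print(f"30 m equivalent: {levels} levels, {n} telescopes")

# Assumed 0.9 throughput per combiner stage, compounded over all levels.
d_eff = equivalent_diameter_m(n, unit, throughput=0.9 ** levels)
print(f"effective diameter including combiner losses: {d_eff:.1f} m")
```

Because throughput losses compound with each level, the per-stage combiner efficiency becomes the dominant design parameter for deep hierarchies.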

Finally, the ability of astronomical spectrometers to measure multiple target spectra simultaneously (i.e. the multiplex; e.g. SDSS, DESI, SAMI; [136–138]) has enabled major progress in cosmology, galaxy formation, and stellar astrophysics. The state-of-the-art is the DESI facility, which collects 5000 simultaneous fiber spectra across an eight square degree field on a 4 m telescope. Future instruments with 4–10 times greater multiplex on larger telescopes are already under consideration. With a conventional design, such an instrument would be impractical, exceeding the cost of the telescope itself. Mass-produced photonic spectrometers, however, offer a path toward greatly reducing the 'cost per spectrum' (e.g. a 'spectrometer-on-a-chip'; sections 6 and 7). Because these devices typically demand single- or few-mode input, PLs will play an important role by extracting the modal components of potentially thousands of on-sky sources.

Current and future challenges

Currently, PLs have achieved high performance, with transmission efficiencies of >90%. However, lanterns have been constrained in terms of the number of inputs and outputs. PLs with up to 19 SM outputs are fairly standard, but devices with larger port numbers would be desirable. They would enable more efficient combiners for telescope arrays, more versatile reformatting for integral field and multiplexed spectroscopy, and more detailed recovery of asymmetries via spectro-astrometry.

Increasing the number of PL modes relies on increasing the number of fibers, or the number of cores in a multicore fiber (MCF; figure 6(A)). Scaling to a large number of fibers is challenging due to manufacturing limitations related to handling hundreds of individual fibers and limitations on the maximum diameter handled by glass processing equipment (e.g. splicing, tapering). However, MCFs reduce some manufacturing limitations and could offer hundreds to thousands of cores. To date, the devices with the highest port counts constructed from individual SMFs are 1 × 61 [14], and 1 × 88 with reduced SMF cladding diameters [20]. The largest single multi-core PL with SM cores has 121 cores [139]. A 600 µm-diameter, 511-core multi-core lantern was reported in Birks 2015. However, this PL, intended for fiber scrambling, contained a mix of SM and few-mode cores.

The need for high-performance, wide-field AO presents an additional challenge for high-multiplex instruments with large fields-of-view (e.g. >1° diameter; [136–138]). Diffraction-limited performance on large ground-based telescopes across several arcminutes is currently infeasible, although modest corrections are possible with ground-layer adaptive optics (0.3'' FWHM over 8 arcminute fields; [140]) and multi-conjugate adaptive optics (MCAO; ∼25% Strehl over ∼2 arcminute fields-of-view [52]; and references therein). For instruments with fields-of-view comparable to MCAO correction, the architectural goal would be to optimize coupling efficiency, number of modes, and field-of-view, to achieve acceptable coupling efficiency without prohibitively high requirements on the number of lanterns, outputs, and instruments. While the AO system can reduce the number of modes, high-performance low-mode-count (<19) lanterns will be needed to take full advantage. Although such devices have been demonstrated, reliable reproduction and connectorization of such lanterns remain a challenge. For wider fields (i.e. where Strehl remains low), the challenge lies in identifying and filtering the most valuable SMs from the low-Strehl, multi-mode light. For example, a PL 'speckle spectrograph' (where individual speckles are spatially directed to a spectrograph; [141]) may reduce sky noise and boost signal-to-noise, compensating for ignoring many fainter, more spatially-distributed modes.
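The trade-off between image quality, aperture, and mode count can be made concrete with a rough estimate. The sketch below assumes a commonly used étendue-based approximation for the number of spatial modes (per polarization) needed to capture a spot of angular diameter χ on a telescope of diameter D at wavelength λ, M ≈ (π χ D / 4λ)². This expression, the choice of the image FWHM as the spot size, and the function name are assumptions made for illustration and do not come from this section.

```python
import math

ARCSEC_TO_RAD = math.pi / (180.0 * 3600.0)


def required_modes(spot_arcsec, telescope_diameter_m, wavelength_m):
    """Approximate number of spatial modes (per polarization) needed to
    capture a spot of the given angular diameter, from etendue conservation:
    M ~ (pi * chi * D / (4 * lambda))**2. An approximation, not exact."""
    chi = spot_arcsec * ARCSEC_TO_RAD
    return (math.pi * chi * telescope_diameter_m / (4.0 * wavelength_m)) ** 2


# Illustrative cases at 1.55 um on an 8 m telescope.
for label, spot in (("GLAO-like, 0.3 arcsec", 0.3), ("seeing-limited, 1.0 arcsec", 1.0)):
    modes = required_modes(spot, 8.0, 1.55e-6)
    print(f"{label}: ~{modes:.0f} modes")
```

Because the estimate scales as (χD/λ)², halving the delivered image size reduces the required mode count by a factor of four, which is why even partial AO correction relaxes the port counts needed per lantern.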

Advances in science and technology to meet challenges

One solution to increase the number of modes (i.e. inputs/outputs) in PL systems may be to implement 'hierarchical' PLs [21]. In this concept, a large field-of-view PL efficiently separates the light into a large (but manufacturable and manageable) number of MM output fibers. Each multi-mode output feeds into another PL that further subdivides the light. This cascade can be repeated until each output reaches the desired modal content, be it single-, few-, or (smaller) multi-mode. With this approach, lanterns with relatively modest output numbers (∼19) could approach ten thousand output modes with only three hierarchical levels (19³ = 6859).
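The scaling of a hierarchical lantern can be checked with a few lines of arithmetic. The sketch below assumes an idealized cascade in which every lantern at every level has the same number of outputs and every output is fed to a further lantern. The function names are illustrative.

```python
def hierarchical_outputs(ports_per_lantern, levels):
    """Total number of final outputs of an idealized cascade in which every
    lantern splits its input into `ports_per_lantern` outputs."""
    return ports_per_lantern ** levels


def lanterns_required(ports_per_lantern, levels):
    """Number of individual lanterns in the cascade: 1 at the first level,
    then one per output of the previous level."""
    return sum(ports_per_lantern ** k for k in range(levels))


for levels in (1, 2, 3):
    outputs = hierarchical_outputs(19, levels)
    count = lanterns_required(19, levels)
    print(f"{levels} level(s): {outputs} outputs from {count} lantern(s)")
```

For 19-port devices, three levels yield 6859 outputs but require 381 individual lanterns, so reproducible fabrication and connectorization matter as much as the port count of any single device.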

Generally, fabricating complex lanterns as described above is possible, with limitations imposed by the system's scientific and/or technical applications. For example, limitations to realizing practical, high-order lanterns are imposed by the need to potentially share light between a science instrument and a WFS, in which case some cores would physically be split off from others. These requirements will impact both the manufacturability and functionality of the lantern. Additionally, manufacturing and engineering challenges will need to be overcome to achieve integration and connectorization of these systems for rugged use in observatory environments.

Hybrid PL devices may soon allow for increasing the number of modes while improving AO performance [87]. Hybrid PLs are made with dissimilar fibers, and can divide light from an MM fiber into separate science and wavefront sensing fiber arrays. These could potentially bring the best of both worlds: single- or few-mode fiber coupling to a science instrument, and simultaneous wavefront sensing without non-common path errors (as discussed in section 3). This would, for example, improve spectroastrometric precision by providing a better, more stable point spread function, and by allowing for selection of science fibers that optimally recover asymmetries in the target. Furthermore, deploying many of these devices could improve AO correction for large field-of-view, highly-multiplexed instruments, enabling more efficient spectroscopy of faint, distant objects.

Concluding remarks

PLs present an opportunity for efficient, diffraction-limited spectroscopy using both single-object and wide-field, highly-multiplexed instruments. While these devices have been demonstrated to provide high coupling efficiency for a small number of inputs/outputs, their versatility for astronomical observations would be improved by: (1) manufacturing devices with larger numbers of inputs/outputs, increasing the number of PL modes, (2) developing techniques to extract the most valuable modes from low-Strehl light, and (3) manufacturing devices where outputs can be physically separated for simultaneous applications (i.e. science and wavefront sensing, which would improve AO performance for both narrow and wide fields-of-view). Future applications of these enhanced PLs will lead to new observational constraints for a wide range of sub-fields, from cosmology, to galactic astrophysics, to stellar and planetary formation and evolution.

Acknowledgments

This work was supported by the National Science Foundation under Grant No. 2109232.

5. New optical fibers for astrophotonics

Stephanos Yerolatsitis1, Rodrigo Amezcua-Correa1, Itandehui Gris-Sanchez2 and Julia Bryant3

1CREOL, The College of Optics and Photonics, University of Central Florida, Orlando, FL, United States of America

2Institute of Telecommunications and Multimedia, Universitat Politècnica de València, Valencia, Spain

3Sydney Institute for Astronomy (SIfA), School of Physics, The University of Sydney, Sydney, Australia

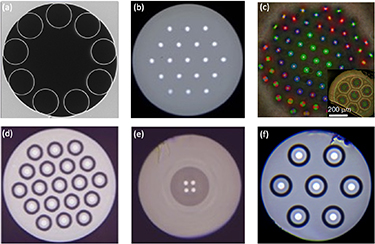

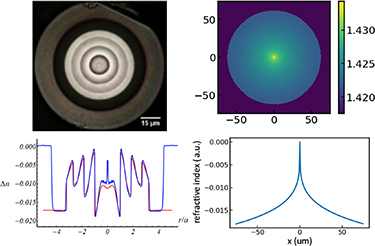

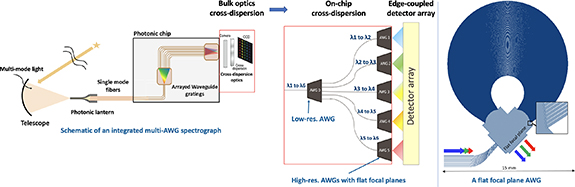

Status