Abstract

We study a Bayesian inverse problem arising in the context of resin transfer molding (RTM), which is a process commonly used for the manufacturing of fiber-reinforced composite materials. The forward model is described by a moving boundary problem in a porous medium. During the injection of resin in RTM, our aim is to update, on the fly, our probabilistic knowledge of the permeability of the material as soon as pressure measurements and observations of the resin moving domain become available. A probabilistic on-the-fly characterisation of the material permeability via the inversion of those measurements/observations is crucial for optimal real-time control aimed at minimising both process duration and the risk of defects formation within RTM. We consider both one-dimensional (1D) and two-dimensional (2D) forward models for RTM. Based on the analytical solution for the 1D case, we prove existence of the sequence of posteriors that arise from a sequential Bayesian formulation within the infinite-dimensional framework. For the numerical characterisation of the Bayesian posteriors in the 1D case, we investigate the application of a fully-Bayesian sequential Monte Carlo method (SMC) for high-dimensional inverse problems. By means of SMC we construct a benchmark against which we compare performance of a novel regularizing ensemble Kalman algorithm (REnKA) that we propose to approximate the posteriors in a computationally efficient manner under practical scenarios. We investigate the robustness of the proposed REnKA with respect to tuneable parameters and computational cost. We demonstrate advantages of REnKA compared with SMC with a small number of particles. We further investigate, in both the 1D and 2D settings, practical aspects of REnKA relevant to RTM, which include the effect of pressure sensors configuration and the observational noise level in the uncertainty in the log-permeability quantified via the sequence of Bayesian posteriors. The results of this work are also useful for other applications than RTM, which can be modelled by a random moving boundary problem.

Export citation and abstract BibTeX RIS

Original content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

In this paper we study the Bayesian inverse problem within the moving boundary setting motivated by applications in manufacturing of fiber-reinforced composite materials. Due to their light weight and high strength, as well as their flexibility to fit mechanical requirements and complex designs, such materials are playing a major role in automotive, marine and aerospace industries [3, 4, 27]. The moving boundary problem under consideration arises from resin transfer molding (RTM) process, one of the most commonly used processes for manufacturing composite materials. RTM consists of the injection of resin into a cavity mold with the shape of the intended composite part according to design and enclosing a reinforced-fiber preform previously fabricated. The next stage of RTM is curing of the resin-impregnated preform, which may start during or after the resin injection. Once curing has taken place, the solidified part is demolded from the cavity mold. In the present work we are concerned with the resin injection stage of RTM under the reasonable assumption that curing starts after resin has filled the preform. Though the current study is motivated by RTM, the results can be also used for other applications where a moving boundary problem is a suitable model.

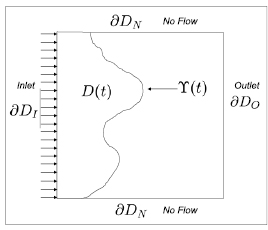

We now describe the forward model (see further details in [3, 38, 47]). Let  ,

,  , be an open domain representing a physical domain of a porous medium with the permeability

, be an open domain representing a physical domain of a porous medium with the permeability  and porosity φ. The boundary of the domain

and porosity φ. The boundary of the domain  is

is  , where

, where  is the inlet,

is the inlet,  is the perfectly sealed boundary, and

is the perfectly sealed boundary, and  is the outlet. The domain

is the outlet. The domain  is initially filled with air at a pressure p0. This medium is infused with a fluid (resin) with viscosity μ through an inlet boundary

is initially filled with air at a pressure p0. This medium is infused with a fluid (resin) with viscosity μ through an inlet boundary  at a pressure pI and moves through

at a pressure pI and moves through  occupying a time-dependent domain

occupying a time-dependent domain  , which is bounded by the moving boundary

, which is bounded by the moving boundary  and the appropriate parts of

and the appropriate parts of  . An example of the physical configuration of this problem in 2D is illustrated in figure 1.

. An example of the physical configuration of this problem in 2D is illustrated in figure 1.

Figure 1. An example of the physical configuration of the moving boundary problem.

Download figure:

Standard image High-resolution imageThe forward problem for the pressure of resin  consists of the conservation of mass

consists of the conservation of mass

where the flux  is given by Darcy's law

is given by Darcy's law

with the following initial and boundary conditions

Here  is the velocity of the point x on the moving boundary

is the velocity of the point x on the moving boundary  in the normal direction at x,

in the normal direction at x,  and

and  are the unit outer normals to the corresponding boundaries.

are the unit outer normals to the corresponding boundaries.

We remark that for definiteness we have assumed that at the initial time the moving boundary  coincides with the inlet boundary

coincides with the inlet boundary  and that the constant pressure condition is imposed at the inlet. It is not difficult to carry over the inverse problem methodology considered in this paper to other geometries and other conditions on the inlet (e.g. constant rate). Further, in two (three) dimensional RTM settings one usually models permeability via a second (third)-order permeability tensor to take into account anisotropic structure of the media [3, 38] but here for simplicity of the exposition the permeability

and that the constant pressure condition is imposed at the inlet. It is not difficult to carry over the inverse problem methodology considered in this paper to other geometries and other conditions on the inlet (e.g. constant rate). Further, in two (three) dimensional RTM settings one usually models permeability via a second (third)-order permeability tensor to take into account anisotropic structure of the media [3, 38] but here for simplicity of the exposition the permeability  is a scalar function. Again, the developed methodology is easy to generalize to the tensor case.

is a scalar function. Again, the developed methodology is easy to generalize to the tensor case.

Let us note that in the 1D case the nonlinear problem (1)–(9) is analytically simple and admits a closed form solution (see section 2 and [38]) but the two and three dimensional cases are much more complicated and analytical solution is in general not available. We remark that in two and three dimensional cases the resin can race around low permeability regions and the front  can become discontinuous creating macroscopic voids behind the main front (see further details in [3, 38]) but in this paper we ignore such effects which deserve further study.

can become discontinuous creating macroscopic voids behind the main front (see further details in [3, 38]) but in this paper we ignore such effects which deserve further study.

It has been extensively recognized [13, 14, 30, 31, 37, 42] that imperfections in a preform that arise during its fabrication and packing in the molding cavity can lead to variability in fiber placement which results in a heterogenous highly-uncertain preform permeability. In turn, these unknown heterogeneities in permeability of the preform give rise to inhomogeneous resin flow patterns which can have profound detrimental effect on the quality of the produced part, reducing its mechanical properties and ultimately leading to scrap. To limit these undesirable effects arising due to uncertainties, conservative designs are used which lead to heavier, thicker and, consequently, more expensive materials aimed at avoiding performance being compromised. Clearly, the uncertainty quantification of material properties is essential for making RTM more cost-effective. One of the key elements in tackling this problem is to be able to quantify, in real time, the uncertain permeability, which can, in turn, be used in active control systems aimed at reducing the risk of defects formation.

In this work we assume that  ,

,  ,

,  ,

,  , pI, p0, μ and φ are known deterministic parameters while the permeability

, pI, p0, μ and φ are known deterministic parameters while the permeability  is unknown. Our objective is within the Bayesian framework to infer

is unknown. Our objective is within the Bayesian framework to infer  or, more precisely, its natural logarithm

or, more precisely, its natural logarithm  from measurements of pressure

from measurements of pressure  at some sensor locations as well as measurements of the front

at some sensor locations as well as measurements of the front  , or alternatively, of the time-dependent domain

, or alternatively, of the time-dependent domain  at a given time t > 0. We put special emphasis on computational efficiency of the inference, which is crucial from the applicable point of view.

at a given time t > 0. We put special emphasis on computational efficiency of the inference, which is crucial from the applicable point of view.

1.1. Practical approaches for permeability estimation in fiber-reinforced composites

While the estimation of preform permeability during resin injection in RTM is clearly an inverse problem constrained by a moving boundary PDE such as (1)–(9), most existing practical approaches pose the estimation of permeability in neither a deterministic nor stochastic inverse problems framework. For example, the very extensive review published in 2010 [41] reveals that most conventional methods for measuring permeability assume that (i) the material permeability tensor is homogenous and (ii) the flow is 1D (including 2D radial flow configurations). Under these assumptions the resin injection in RTM can be described analytically, via expressions derived from Darcy's law, which enable a direct computation of the permeability in terms of quantities that can be measured before or during resin injection. These conventional methods suffer from two substantial practical limitations. First, they do not account for the heterogenous structure of the preform permeability, and although they provide an estimate of an effective permeability, this does not enable the prediction of the potential formation of voids and dry spots. Second, those conventional methods compute the permeability in an off-line fashion (i.e. before RTM) with specific mold designs that satisfy the aforementioned assumptions intrinsic to those methods (e.g. rectangular flat molds). This second limitation is not only detrimental to the operational efficiency of RTM but also neglects the potential changes in permeability that can results from encapsulating the preform in cavities with complex designs.

Some practical methodologies for online (i.e. during resin injection) estimation of heterogenous permeability have been proposed in [36, 49]. While these approaches seem to address the aforementioned limitations of conventional methods, they also use a direct approach for the estimation of permeability which faces unresolved challenges. As an example, let us consider the recent work of [49] which uses an experimental configuration similar to the one described in figure 1 and which, by using pressure measurements from sensors located within the domain occupied by the preform, computes a finite-difference approximation of the normal flux to the front  . In addition, by means of images from CCT cameras, seepage velocity of the resin front is computed in [49]; this velocity is nothing but

. In addition, by means of images from CCT cameras, seepage velocity of the resin front is computed in [49]; this velocity is nothing but  defined by (5) in the context of the moving boundary problem (1)–(9). Under the assumption that μ and φ are known, the approach proposed in [49] consists of finding

defined by (5) in the context of the moving boundary problem (1)–(9). Under the assumption that μ and φ are known, the approach proposed in [49] consists of finding

with  and

and  computed from measurements as described above. This approach offers a practical technique to estimating κ on the moving front and can then potentially infer the whole permeability field during the resin injection in RTM. However, from the mathematical inverse problems perspective, this ad-hoc approach is not recommended as it involves differentiating observations of pressure data for the computation of

computed from measurements as described above. This approach offers a practical technique to estimating κ on the moving front and can then potentially infer the whole permeability field during the resin injection in RTM. However, from the mathematical inverse problems perspective, this ad-hoc approach is not recommended as it involves differentiating observations of pressure data for the computation of  . Indeed, it is well-known [23] that differentiation of data is an ill-posed problem that requires regularization. In addition, rather than an inverse problem, the least-squares formulation in (10) is a data fitting exercise that excludes the underlying constraint given by the moving boundary problem and which entails a global effect induced by κ. As a result, the estimate of permeability obtained via (10) has no spatial correlation and thus fails to provide an accurate global estimate of the permeability field.

. Indeed, it is well-known [23] that differentiation of data is an ill-posed problem that requires regularization. In addition, rather than an inverse problem, the least-squares formulation in (10) is a data fitting exercise that excludes the underlying constraint given by the moving boundary problem and which entails a global effect induced by κ. As a result, the estimate of permeability obtained via (10) has no spatial correlation and thus fails to provide an accurate global estimate of the permeability field.

The recent work of [29] demonstrates considerable advantages of using systematic data assimilation approaches to infer permeability during the resin injection of RTM. By means of a standard ensemble Kalman methodology for data assimilation, the approach of [29] uses measurements from visual observations of the front location to produce updates of the preform permeability within the context of a discrete approximation of the moving boundary problem (1)–(9). While the methodology used in [29] is focused in producing deterministic estimates, the standard Kalman methodology can be potentially used to quantify uncertainty in preform permeability. However, it has been shown that standard Kalman methodologies, such as the one used in [29], could result in unstable estimates unless further regularisation to the algorithm is applied [18].

In addition to the lack of an inverse problem framework that can lead to unstable and ultimately inaccurate estimates of the permeability in resin injection of RTM, most existing approaches (i) do not incorporate the uncertainty in the observed variables and (ii) do not quantify uncertainty in the estimates of the permeability of preform. It is indeed clear from our literature review that the estimation of permeability of preform during resin injection deserves substantial attention from an inverse problems perspective capable of quantifying uncertainty inherent to the fabrication and packing of the preform.

1.2. The Bayesian approach to inverse problems

In this paper we propose the application of the Bayesian approach to inverse problems [46] in order to infer the logarithm of the permeability  , from observations

, from observations  collected at some prescribed measurement/observation times

collected at some prescribed measurement/observation times  during the resin injection in RTM. At each time tn we observe a vector, yn, that contains noisy measurements of resin pressure from sensors as well as some information of the moving domain (or alternatively front location) observed, for example, via CCT cameras or dielectric sensors [32]. In the Bayesian approach, the unknown

during the resin injection in RTM. At each time tn we observe a vector, yn, that contains noisy measurements of resin pressure from sensors as well as some information of the moving domain (or alternatively front location) observed, for example, via CCT cameras or dielectric sensors [32]. In the Bayesian approach, the unknown  is a random function that belongs to a space of inputs X. A prior probability measure

is a random function that belongs to a space of inputs X. A prior probability measure  on u must be specified before the data are collected; this enables us to incorporate prior knowledge which may include design parameters as well as the uncertainty that arises from preform fabrication (i.e. prior to resin injection). In our work we consider Gaussian priors which have been identified as adequate for characterizing the aforementioned uncertainty in log-permeability from the preform fabrication [30, 31, 50] (see also references therein).

on u must be specified before the data are collected; this enables us to incorporate prior knowledge which may include design parameters as well as the uncertainty that arises from preform fabrication (i.e. prior to resin injection). In our work we consider Gaussian priors which have been identified as adequate for characterizing the aforementioned uncertainty in log-permeability from the preform fabrication [30, 31, 50] (see also references therein).

At each observation time tn during the infusion of resin in RTM, we then pose the inverse problem in terms of computing,  , the (posterior) probability measure of the log-permeability conditioned on measurements

, the (posterior) probability measure of the log-permeability conditioned on measurements  . Each posterior

. Each posterior  then provides a rigorous quantification of the uncertainty in the log-permeability field given all available measurements up to the time tn. Knowledge of each of these posteriors during RTM can then be used to compute statistical moments of the log-permeability under

then provides a rigorous quantification of the uncertainty in the log-permeability field given all available measurements up to the time tn. Knowledge of each of these posteriors during RTM can then be used to compute statistical moments of the log-permeability under  (e.g. mean, variance) as well as expectations of quantities of interest that may be needed for the real-time optimization of controls (e.g. pressure injection) in RTM.

(e.g. mean, variance) as well as expectations of quantities of interest that may be needed for the real-time optimization of controls (e.g. pressure injection) in RTM.

Although the proposed application of the Bayesian formulation assumes Gaussian priors, the nonlinear structure of the PDE problem, that describes resin injection in RTM, gives rise to a sequence of non-Gaussian Bayesian posteriors  which cannot be characterized in a closed form. A sampling approach is then required to compute approximations of these posteriors. Among existing sampling methodologies, Sequential Monte Carlo (SMC) samplers [1, 8, 22, 34] are particularly relevant for the formulation of the above described inverse problem as they provide a recursive mechanism to approximate the sequence of Bayesian posteriors

which cannot be characterized in a closed form. A sampling approach is then required to compute approximations of these posteriors. Among existing sampling methodologies, Sequential Monte Carlo (SMC) samplers [1, 8, 22, 34] are particularly relevant for the formulation of the above described inverse problem as they provide a recursive mechanism to approximate the sequence of Bayesian posteriors  . Markov Chain Monte Carlo (MCMC) approaches [9] can also be used to compute

. Markov Chain Monte Carlo (MCMC) approaches [9] can also be used to compute  , at each observation time tn. However, conventional MCMC formulations do not exploit the sequential nature of the problem by enabling a recursive estimation of

, at each observation time tn. However, conventional MCMC formulations do not exploit the sequential nature of the problem by enabling a recursive estimation of  which is crucial for the optimisation of the RTM process via making use of active control systems.

which is crucial for the optimisation of the RTM process via making use of active control systems.

Starting with J samples from the prior  ,

,  (i.i.d.), the idea behind SMC is to transform a system of weighted particles

(i.i.d.), the idea behind SMC is to transform a system of weighted particles  that define

that define  to an updated set

to an updated set  that approximates

that approximates  as the new data yn collected at time tn become available. The weights

as the new data yn collected at time tn become available. The weights  are normalised (i.e.

are normalised (i.e.  ,

,  ) and the empirical measure

) and the empirical measure

converges to  as

as  (

( denotes the Dirac measure concentrated at w). Moreover, if

denotes the Dirac measure concentrated at w). Moreover, if  denotes a quantity of interest of the unknown log-permeability

denotes a quantity of interest of the unknown log-permeability  , the weighted particles

, the weighted particles  can be easily used to compute the sample mean

can be easily used to compute the sample mean

which converges (see for example [34]) to the expectation (under  ) of the quantity of interest

) of the quantity of interest  .

.

The recursive computation of the weighted particles in SMC is suitable for the proposed application in RTM as it allows us to update, potentially in real time, our knowledge of the uncertainty in the log-permeability. However, producing accurate approximations of the Bayesian posteriors  in the context of the inference of preform log-permeability in RTM represents a substantial computational challenge that arises from the fact that these posterior measures are defined on a (infinite-dimensional) functional space. Upon discretization, these posteriors could be potentially defined on a very high-dimensional space. Unfortunately, it has been shown [5, 9] that standard Bayesian sampling methodologies such as standard SMC do not scale well with the dimension of the (discretized) unknown; this leads to unstable and ultimately inaccurate algorithms.

in the context of the inference of preform log-permeability in RTM represents a substantial computational challenge that arises from the fact that these posterior measures are defined on a (infinite-dimensional) functional space. Upon discretization, these posteriors could be potentially defined on a very high-dimensional space. Unfortunately, it has been shown [5, 9] that standard Bayesian sampling methodologies such as standard SMC do not scale well with the dimension of the (discretized) unknown; this leads to unstable and ultimately inaccurate algorithms.

The recent works [9, 22] developed scalable (dimension independent) sampling algorithms for the approximation of the Bayesian posterior that arises from high-dimensional inverse problems. While these algorithms have a solid theoretical background that ensures their stability and convergence properties, achieving a desirable level of accuracy often comes at extremely high computational cost. More specifically, Bayesian methodologies, that provide approximation of the form (11) and that converge asymptotically to the underlying posterior measure  , often involve solving the forward model thousands or even millions of times. In the context of the inverse problem for RTM, the numerical solution of the moving boundary (forward) problem in 2D or 3D settings is computationally very intensive. Therefore, the sequential approximation of the Bayesian posteriors of preform's log-permeability must be conducted with scalable computational efficiency so that it can be realistically used within a near real-time optimization loop for RTM. In the proposed work we develop a computational inverse framework that possess such computational efficiency with the ultimate aim of the real-time uncertainty quantification of the reinforced preform's log-permeability.

, often involve solving the forward model thousands or even millions of times. In the context of the inverse problem for RTM, the numerical solution of the moving boundary (forward) problem in 2D or 3D settings is computationally very intensive. Therefore, the sequential approximation of the Bayesian posteriors of preform's log-permeability must be conducted with scalable computational efficiency so that it can be realistically used within a near real-time optimization loop for RTM. In the proposed work we develop a computational inverse framework that possess such computational efficiency with the ultimate aim of the real-time uncertainty quantification of the reinforced preform's log-permeability.

1.3. Contributions of this work

The contributions of this article are the following:

- (A)A Bayesian formulation of the inverse problem to infer log-permeability from sequential data collected during resin injection in RTM. Both the 2D forward model described by (1)–(9) as well as the corresponding 1D version are considered. For the 1D case, we show that application of the infinite-dimensional Bayesian framework of [46] leads to well-posedness of the sequence of Bayesian posteriors.

- (B)Application of a state-of-the-art SMC framework [22] for the approximation of the sequence of Bayesian posteriors

that arises from the Bayesian formulation. Our use of SMC serves two purposes. First, the SMC framework motivates construction of a novel regularizing ensemble Kalman algorithm (REnKA) that aims at approximating this sequence of posteriors in a computationally efficient manner, thus suitable for its implementation in a practical setting of RTM. Second, we use a Benchmark obtained by SMC application in order to test accuracy of REnKA.

that arises from the Bayesian formulation. Our use of SMC serves two purposes. First, the SMC framework motivates construction of a novel regularizing ensemble Kalman algorithm (REnKA) that aims at approximating this sequence of posteriors in a computationally efficient manner, thus suitable for its implementation in a practical setting of RTM. Second, we use a Benchmark obtained by SMC application in order to test accuracy of REnKA. - (C)Numerical investigation of the accuracy and robustness of the proposed REnKA scheme in the 1D case; this involves constructing, via the SMC sampler of [22], accurate approximations of the posteriors that we use as Benchmark against which we compare the proposed REnKA. The advantages of REnKA in terms of accuracy versus computational cost are showcased by comparing it with the implementation of a low-resolution SMC whose computational cost is comparable to REnKA's.

- (D)Application of REnKA for further investigations of the Bayesian inverse problem in both 1D and 2D. In particular for the 1D case we conduct a numerical investigation of the added value of assimilating the front location relative to the number of pressure sensors. Since the number of pressure sensors that can be physically deployed for preform permeability monitoring in RTM is usually limited, this investigation aims at providing practitioners with guidelines for the number of sensors that can accurately infer preform permeability alongside with its uncertainty. In addition, for the 1D case we study the effect of the frequency of the observations, as well as the observational noise level on the inference of the log-permeability. We further apply REnKA to the 2D forward model and, analogous to the 1D case, we study the effect that the number of pressure sensors have on the inferred log-permeability.

The rest of the paper is organized as follows. In section 2 we introduce the Bayesian inverse problem of inferring the permeability of a porous media in a 1D moving boundary problem for resin injection in RTM. In section 3 we discuss and apply SMC to approximate the Bayesian posteriors that arise from the Bayesian approach. In section 4 we introduce REnKA and then conduct, via numerical experiments with the 1D RTM model, a numerical investigation of its approximation properties relative to its computational cost. In section S1 of the supplementary material (stacks.iop.org/IP/34/105002/mmedia) SupMat we further investigate relevant practical aspects of the inverse problem in 1D including the study of the effect of the number of pressure sensors as well as the noise level on accuracy of the inferred log-permeability and its uncertainty. In section 5 we demonstrate the applicability of REnKA to approximate the Bayesian inverse problem in the 2D RTM model described earlier. Some conclusions are presented in section 6.

2. Bayesian inversion of a 1D RTM model

In this section we apply the Bayesian approach to infer log-permeability in the context of the 1D version of the forward problem defined in (1)–(9). The corresponding 1D moving boundary problem induces a sequence of forward maps that we define in section 2.1 and that we aim at inverting with the Bayesian formalism that we introduce in section 2.2. This sequence of 1D forward maps admits a closed-form solution that can be numerically approximated at a very low computational cost. This will enable us in section 3 to obtain accurate numerical approximations of the solution to the Bayesian inverse problem; we use these accurate approximations as a benchmark for assessing the approximation properties of the ensemble Kalman algorithm that we introduce in section 4.

2.1. The forward 1D RTM model

Let us consider a 1D porous media with physical domain ![$ \newcommand{\e}{{\rm e}} D^{\ast}\equiv [0, x^{\ast}]\subset \mathbb{R}$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn073.gif) . As before, we denote by

. As before, we denote by  (

( ) and

) and  the permeability and porosity of the porous medium, respectively. Resin with viscosity μ is injected at x = 0 at a pressure pI. The pressure at the moving front (outlet)

the permeability and porosity of the porous medium, respectively. Resin with viscosity μ is injected at x = 0 at a pressure pI. The pressure at the moving front (outlet)  is prescribed and equal to p0. The initial pressure distribution before injection is also set to p0. For convenience of the subsequent analysis, we parameterize the permeability in terms of its natural logarithm

is prescribed and equal to p0. The initial pressure distribution before injection is also set to p0. For convenience of the subsequent analysis, we parameterize the permeability in terms of its natural logarithm  . The pressure

. The pressure  and the moving front

and the moving front  are given by the solution to the following model

are given by the solution to the following model

The solution to (13)–(17) can be obtained analytically by the following proposition (see [3, 38, 47]).

Proposition 2.1. Given ![$ \newcommand{\e}{{\rm e}} u\in X\equiv C[0, x^{\ast}]$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn081.gif) , let us define

, let us define

The unique solution  ,

,  of (13)–(17) is given for

of (13)–(17) is given for  by

by

The quantity of interest arising from the RTM injection model is the so-called filling time: the time it takes the front  to reach the right boundary of the domain of interest

to reach the right boundary of the domain of interest ![$[0, x^{\ast}]$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn086.gif) . Filling time, denoted by

. Filling time, denoted by  , is defined by

, is defined by  . From (19) and the definition in (18) it follows [38] that

. From (19) and the definition in (18) it follows [38] that  is given by

is given by

Note that the parameters p0 and pI are prescribed control variables and thus known. In addition, we assume that μ and φ are known constants. As stated earlier, we are interested in the inverse problem of estimating the permeability, or more precisely its natural logarithm  given time-discrete measurements of the front location as well as the pressure from M sensors located at

given time-discrete measurements of the front location as well as the pressure from M sensors located at ![$\{x_{m}\}_{m=1}^{M}\subset [0, x^{\ast}]$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn091.gif) . We denote by

. We denote by  the set of N observation times. For fixed (assumed known) parameters pI, p0, φ and μ, the solution to the PDE model (13)–(17) induces the nth forward map

the set of N observation times. For fixed (assumed known) parameters pI, p0, φ and μ, the solution to the PDE model (13)–(17) induces the nth forward map ![$ \newcommand{\cG}{{\mathcal G}} \cG_{n}:C[0, x^{\ast}]\to \mathbb{R}^{M+1}$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn093.gif) defined by

defined by

Given  , the evaluation of the forward map

, the evaluation of the forward map  predicts the location of the front and the pressure at the sensor locations at the time t = tn. Since observation times are prescribed before the experiment, there is no assurance that for a given u, the corresponding filling time satisfies

predicts the location of the front and the pressure at the sensor locations at the time t = tn. Since observation times are prescribed before the experiment, there is no assurance that for a given u, the corresponding filling time satisfies  for all

for all  . In other words, the front could reach the right end of the domain before we observe it at time tn. In a real experimental setting, the process stops at time

. In other words, the front could reach the right end of the domain before we observe it at time tn. In a real experimental setting, the process stops at time  . However, in the inverse problem of interest here, observation times are selected beforehand, and the search of optimal u's within the Bayesian calibration of the nth forward map can lead to filling times greater than some observation times. In this case

. However, in the inverse problem of interest here, observation times are selected beforehand, and the search of optimal u's within the Bayesian calibration of the nth forward map can lead to filling times greater than some observation times. In this case  , the definition (22) yields

, the definition (22) yields ![$ \newcommand{\cG}{{\mathcal G}} \newcommand{\Ga}{\Upsilon} \cG_{n}(u) =[\Ga(\tau^{\ast}), \{\,p(x_{m}, \tau^{\ast})\}_{m=1}^{M}]^{T}$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn100.gif) .

.

The following theorem ensures the continuity of the forward map, which is necessary for justifying the application of the Bayesian framework in section 2.2.2.

Theorem 2.2. The forward map ![$ \newcommand{\cG}{{\mathcal G}} \cG_{n}:C[0, x^{*}]\to \mathbb{R}^{M+1}$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn101.gif) is continuous.

is continuous.

For the proof of this theorem, see appendix A.

In the following subsection we apply the Bayesian framework for inverse problems in order to invert observations of  .

.

Remark 2.3. We note that for the present work the porosity φ is an assumed known constant; our objective is to infer the log-permeability  . However, the Bayesian methodology that we apply can be extended to the case where the unknown is not only

. However, the Bayesian methodology that we apply can be extended to the case where the unknown is not only  but also φ, and can include the case where

but also φ, and can include the case where  is a spatial function defined on the physical domain

is a spatial function defined on the physical domain  .

.

2.2. The Bayesian inverse problem

Suppose that, at each observation time t = tn, we collect noisy measurements of the front location as well as pressure measurements from sensors. We denoted these measurement by  and

and  , respectively. Our aim is to solve the inverse problem of estimating the log permeability

, respectively. Our aim is to solve the inverse problem of estimating the log permeability  given all the data

given all the data  up to time t = tn. We assume that the aforementioned observations are related to the unknown

up to time t = tn. We assume that the aforementioned observations are related to the unknown  , via the forward map (22), in terms of

, via the forward map (22), in terms of

where  and

and  are realizations of Gaussian noise with zero mean and covariance

are realizations of Gaussian noise with zero mean and covariance  and

and  , respectively, i.e.

, respectively, i.e.  and

and  (i.i.d.). For simplicity we assume that both measurements of the front location and pressures are uncorrelated in time. We additionally assume that

(i.i.d.). For simplicity we assume that both measurements of the front location and pressures are uncorrelated in time. We additionally assume that  and

and  are uncorrelated for all

are uncorrelated for all  .

.

Note that (23) and (24) can be written as

with

Remark 2.4. Due to the nature of the RTM problem, we have that the pressure p(xm,t) at each sensor xm should increase with time as well as the fact that  . However, the Gaussian noise in (23) and (24) can make the observations

. However, the Gaussian noise in (23) and (24) can make the observations  and

and  'unphysical'. In practice, observations need to be post-processed before using them for the Bayesian inverse problem and unphysical

'unphysical'. In practice, observations need to be post-processed before using them for the Bayesian inverse problem and unphysical  should be excluded. We leave the question of how to incorporate such a post-processing framework for future study. Here we follow the traditional point of view on data modeled via (23) and (24) and choose sufficiently small

should be excluded. We leave the question of how to incorporate such a post-processing framework for future study. Here we follow the traditional point of view on data modeled via (23) and (24) and choose sufficiently small  and

and  so that the probability of

so that the probability of  being unphysical is very low.

being unphysical is very low.

We adopt the Bayesian framework for inverse problems where the unknown  is a random field and our objective is to characterize the sequence of distributions of u conditioned on the observations which we express as

is a random field and our objective is to characterize the sequence of distributions of u conditioned on the observations which we express as  . In other words, at each observation time t = tn we aim at computing the Bayesian posterior

. In other words, at each observation time t = tn we aim at computing the Bayesian posterior  . From this distribution we can obtain point estimates of the unknown that can be used in real time to, for example, modify controls (e.g. pI). More importantly, as we stated in the Introduction, the aforementioned distribution enables us to quantify uncertainty not only of the unknown but also of quantities of interest that may be relevant to an optimization of resin injection in RTM.

. From this distribution we can obtain point estimates of the unknown that can be used in real time to, for example, modify controls (e.g. pI). More importantly, as we stated in the Introduction, the aforementioned distribution enables us to quantify uncertainty not only of the unknown but also of quantities of interest that may be relevant to an optimization of resin injection in RTM.

Even though for the illustrative purposes the model presented in this section is discretized on a relatively low dimensional space (e.g. 60 cells), our aim is to introduce a general computational framework independent of the size of the discretized domain. We therefore consider an infinite-dimensional formulation of the Bayesian inverse problem for which the unknown u belongs to a functional space X. The discretization of the Bayesian inverse problem will be conducted at the last stage of the computational algorithm, when the posteriors are sampled/approximated. Thus, we are aiming at robust mesh-invariant computational algorithms.

2.2.1. The prior.

For the Bayesian approach that we adopt in this work, we require to specify a prior distribution  of the unknown, before the data are collected. This distribution comprises all our prior knowledge of the unknown and may include, for example, the regularity of the space of admissible solutions to the inverse problem. For the present work we consider Gaussian priors which have been used to characterize the uncertainty in the (log) permeability that arises from the preform fabrication [30, 31, 50] (see also references therein). In particular, here we consider stationary Gaussian priors

of the unknown, before the data are collected. This distribution comprises all our prior knowledge of the unknown and may include, for example, the regularity of the space of admissible solutions to the inverse problem. For the present work we consider Gaussian priors which have been used to characterize the uncertainty in the (log) permeability that arises from the preform fabrication [30, 31, 50] (see also references therein). In particular, here we consider stationary Gaussian priors  with covariance operator

with covariance operator  that arises from the Wittle–Matern correlation function defined by [25, 28, 39, 43]:

that arises from the Wittle–Matern correlation function defined by [25, 28, 39, 43]:

where Γ is the gamma function, l is the characteristic length scale,  is an amplitude scale and

is an amplitude scale and  is the modified Bessel function of the second kind of order ν. The parameter ν controls the regularity of the samples. It can be shown [11, 43] that, for any

is the modified Bessel function of the second kind of order ν. The parameter ν controls the regularity of the samples. It can be shown [11, 43] that, for any  , if

, if  , then

, then ![$u\in C[0, x^{\ast}]$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn138.gif) almost-surely, i.e.

almost-surely, i.e. ![$\mu_{0}([0, x^{\ast}])=1$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn139.gif) . This requirement, together with the continuity of the forward map ensures the well-posedness of the Bayesian inverse problems as we discuss in the next subsection. In the context of composite preform's permeability, it is natural to choose the mean

. This requirement, together with the continuity of the forward map ensures the well-posedness of the Bayesian inverse problems as we discuss in the next subsection. In the context of composite preform's permeability, it is natural to choose the mean  according to the log-permeability intended by the design of the composite part [38].

according to the log-permeability intended by the design of the composite part [38].

For computational purposes we use the prior to parametrize the unknown u in terms of its Karhunen–Loeve (KL) expansion [2]:

with coefficients uk and where  and

and  are the eigenvectors and eigenfunctions of

are the eigenvectors and eigenfunctions of  , respectively. A random draw from the prior

, respectively. A random draw from the prior  can then be obtained from (28) with drawing

can then be obtained from (28) with drawing  i.i.d.

i.i.d.

2.2.2. The posterior.

From (25) and our Gaussian assumptions on the observational noise, it follows that for a fixed  , we have

, we have  . Therefore, the likelihood of

. Therefore, the likelihood of  is given by

is given by

At a given time t = tn, the Bayesian posterior  is defined by the following infinite-dimensional version of Bayes's rule.

is defined by the following infinite-dimensional version of Bayes's rule.

theorem Let  be the sequence of forward maps defined by (22) and let

be the sequence of forward maps defined by (22) and let  be the corresponding likelihood functions (29). Let

be the corresponding likelihood functions (29). Let  be the prior distribution with correlation function (27). Then, for each

be the prior distribution with correlation function (27). Then, for each  , the conditional distribution of

, the conditional distribution of  , denoted by

, denoted by  , exists. Moreover,

, exists. Moreover,  with the Radon–Nikodym derivative

with the Radon–Nikodym derivative

where

Proof. The proof follows from the application of theorem 6.31 in [46] and the continuity of the forward maps (theorem 2.2) on a full  -measure set X. □

-measure set X. □

Note that from our assumption of independence of  , the right hand side of (30) is the likelihood of

, the right hand side of (30) is the likelihood of  .

.

Remark 2.6. Due to the assumption of independence between front location and pressure measurements, the likelihood (29) can be expressed as

where

This enables us to define two particular cases of the inverse problem. The first case corresponds to the assimilation of only pressure measurements  , while in the second case only front location measurements

, while in the second case only front location measurements  are assimilated. Similar arguments to those that led to theorem 2.5 can be applied (with

are assimilated. Similar arguments to those that led to theorem 2.5 can be applied (with  instead of

instead of  ) to define the Bayesian posteriors

) to define the Bayesian posteriors  and

and  associated to these two Bayesian inverse problems. In section S1 of the supplementary material SupMat we study these two particular cases with an eye towards understanding the added value of assimilating observations of the front location with respect to assimilating only pressure measurements.

associated to these two Bayesian inverse problems. In section S1 of the supplementary material SupMat we study these two particular cases with an eye towards understanding the added value of assimilating observations of the front location with respect to assimilating only pressure measurements.

3. Approximating the posteriors via sequential Monte Carlo method

In the previous section we have established the well-posedness of the Bayesian inverse problem associated to inferring the log-permeability in the 1D moving boundary problem (13)–(17). The solution of this inverse problem is the sequence of posterior measures  defined by theorem 2.5. As we discussed in section 1, these posteriors cannot be expressed analytically and so a sampling approach is then required to compute the corresponding approximations. As stated earlier, the sampling of each posterior

defined by theorem 2.5. As we discussed in section 1, these posteriors cannot be expressed analytically and so a sampling approach is then required to compute the corresponding approximations. As stated earlier, the sampling of each posterior  (

( ) can be performed independently by, for example, Markov chain Monte Carlo (MCMC) methods. However, we reiterate that, for the present application SMC samplers are rather convenient as they exploit the sequential nature of the considered inverse problem by enabling a recursive approximation of the posterior measures as new data (in time) become available. Such recursive approximations of the posterior could enable practitioners to update their probabilistic knowledge of preform's log-permeability which is, in turn, essential to develop real-time optimal control strategies for RTM under the presence of uncertainty.

) can be performed independently by, for example, Markov chain Monte Carlo (MCMC) methods. However, we reiterate that, for the present application SMC samplers are rather convenient as they exploit the sequential nature of the considered inverse problem by enabling a recursive approximation of the posterior measures as new data (in time) become available. Such recursive approximations of the posterior could enable practitioners to update their probabilistic knowledge of preform's log-permeability which is, in turn, essential to develop real-time optimal control strategies for RTM under the presence of uncertainty.

Recognizing that the inverse problem under consideration involves inferring a function potentially discretized on a very fine grid, it is vital to consider the application of SMC samplers such as the one introduced in [22], carefully designed for approximating measures defined on a high-dimensional space. In this section we review and apply this scheme for the approximation of the Bayesian posteriors  that we defined in the previous section. The aims of this section are to (i) provide a deeper quantitative understanding of the accuracy of the fully-Bayesian methodology of [22] with respect to its computational cost under practical computational conditions; (ii) provide a motivation for the proposed REnKA that we propose from this SMC sampler in section 4; and (iii) define accurate approximations of

that we defined in the previous section. The aims of this section are to (i) provide a deeper quantitative understanding of the accuracy of the fully-Bayesian methodology of [22] with respect to its computational cost under practical computational conditions; (ii) provide a motivation for the proposed REnKA that we propose from this SMC sampler in section 4; and (iii) define accurate approximations of  which we use as a benchmark for testing our REnKA scheme.

which we use as a benchmark for testing our REnKA scheme.

In section 3.1 we briefly discuss the essence of the standard SMC that we then use in sections 3.2–3.3 to review methodological aspects of the adaptive-tempering SMC sampler for high-dimensional inverse problems of [22]. We then apply this SMC in section 3.4 for the solution of the Bayesian inverse problem in the 1D case defined in the previous section. In section 3.5 we assess practical limitations of the SMC.

3.1. Standard SMC for Bayesian inference

As we discussed in the Introduction, starting with the prior  , the objective of SMC is to recursively compute an approximation of the sequence of Bayesian posteriors

, the objective of SMC is to recursively compute an approximation of the sequence of Bayesian posteriors  in terms of weighted particles. More specifically, assume that at the observation time tn, we have a set of J particles

in terms of weighted particles. More specifically, assume that at the observation time tn, we have a set of J particles  with, for simplicity, equal weights (

with, for simplicity, equal weights ( ,

,  ), which provides the following particle approximation of

), which provides the following particle approximation of  :

:

The objective now is to construct a particle approximation of  , which includes the new data yn collected at time tn. In a standard SMC framework [8, 10, 33], this particle approximation is constructed by means of an importance sampling step with proposal distribution

, which includes the new data yn collected at time tn. In a standard SMC framework [8, 10, 33], this particle approximation is constructed by means of an importance sampling step with proposal distribution  . To illustrate this methodology, let us first note formally that

. To illustrate this methodology, let us first note formally that

where we have used

which can be obtained directly from theorem 2.5. An approximation of (35) can be obtained by

where

From (37) we see that the importance (normalized) weights  assigned to each particle

assigned to each particle  define the following empirical (particle) approximation of

define the following empirical (particle) approximation of  :

:

However, the accuracy of such empirical approximation relies on  being sufficiently close to

being sufficiently close to  ; when this is not the case, after a few iterations (observation times) the algorithm may produce only a few particles with nonzero weights. This is a well-known issue of weight degeneracy that often arises from the application of empirical (importance sampling) approximations within the context SMC samplers [5]. Weight degeneracy is routinely measured in terms of the effective sample size (ESS) statistic [24]:

; when this is not the case, after a few iterations (observation times) the algorithm may produce only a few particles with nonzero weights. This is a well-known issue of weight degeneracy that often arises from the application of empirical (importance sampling) approximations within the context SMC samplers [5]. Weight degeneracy is routinely measured in terms of the effective sample size (ESS) statistic [24]:

which takes a value between 1 and J;  when all weights are equal and

when all weights are equal and  when the distribution is concentrated at one single particle. A common approach to alleviate weight degeneracy is, for example, to specify a threshold for the ESS below which resampling (often multinomially) according to the weights

when the distribution is concentrated at one single particle. A common approach to alleviate weight degeneracy is, for example, to specify a threshold for the ESS below which resampling (often multinomially) according to the weights  is performed. Resampling discards particles with low weights by replacing them with several copies of particles with higher weights. The approximation of a sequence of measures via the combination of the importance sampling step followed with resampling leads to the sequential importance resampling (SIR) scheme [10].

is performed. Resampling discards particles with low weights by replacing them with several copies of particles with higher weights. The approximation of a sequence of measures via the combination of the importance sampling step followed with resampling leads to the sequential importance resampling (SIR) scheme [10].

It is important to note that the aforementioned resampling step in SIR can clearly lead to the lack of diversity in the population of resampled particles. This is, in turn, detrimental to the approximation of the sequence of posteriors. The general aim of the standard SMC approach is to diversify these particles by a mutation step with involves replacing them with samples from a Markov kernel  with invariant distribution

with invariant distribution  . In the following subsection we provide a discussion of the aforementioned mutation in the context of the SMC sampler for high-dimensional inverse problem [22]. We refer the reader to [8, 10, 33, 34] for a thorough treatment of more standard SMC samplers.

. In the following subsection we provide a discussion of the aforementioned mutation in the context of the SMC sampler for high-dimensional inverse problem [22]. We refer the reader to [8, 10, 33, 34] for a thorough treatment of more standard SMC samplers.

3.2. SMC for high-dimensional inverse problems

The weight degeneracy in the importance sampling step described above is more pronounced when the two consecutive measures  and

and  differ substantially from each other. This has been particularly associated with complex (e.g. multimodal) measures defined in high-dimensional spaces. When the change from

differ substantially from each other. This has been particularly associated with complex (e.g. multimodal) measures defined in high-dimensional spaces. When the change from  to

to  is abrupt, the importance sampling step can result in a sharp failure, whereby the approximation of

is abrupt, the importance sampling step can result in a sharp failure, whereby the approximation of  is concentrated on a single particle [5]. Recent work for high-dimensional inference problems has suggested [1, 22] that further stabilization of the importance weights is needed by defining a smooth transition between

is concentrated on a single particle [5]. Recent work for high-dimensional inference problems has suggested [1, 22] that further stabilization of the importance weights is needed by defining a smooth transition between  and

and  . For the present work, we consider the annealing approach of [34, 35], where qn intermediate artificial measures

. For the present work, we consider the annealing approach of [34, 35], where qn intermediate artificial measures  are defined such that

are defined such that  and

and  . These measures can be bridged by introducing a set of qn tempering parameters denoted by

. These measures can be bridged by introducing a set of qn tempering parameters denoted by  that satisfy

that satisfy  and defining each

and defining each  as the probability measure with density proportional to

as the probability measure with density proportional to  with respect to

with respect to  . More specifically,

. More specifically,  satisfies

satisfies

which, formally, implies

Note that when qn = 1,  and so expression (42) reduces to (36). We now follow the SMC algorithm for high-dimensional inverse problems as described in [22].

and so expression (42) reduces to (36). We now follow the SMC algorithm for high-dimensional inverse problems as described in [22].

3.2.1. Selection step.

The first stage of the SMC approach of [22] is a selection step which consists of careful selection of the tempering parameters which define the intermediate measures  ; these are in turn approximated by the application of the SIR scheme described above. Let us then assume that at an observation time tn and iteration level r − 1, the tempering parameter

; these are in turn approximated by the application of the SIR scheme described above. Let us then assume that at an observation time tn and iteration level r − 1, the tempering parameter  has been specified, and that a set of particles

has been specified, and that a set of particles  provides the following approximation (with equal weights) of the intermediate measure

provides the following approximation (with equal weights) of the intermediate measure  :

:

From (42) we can see that the new tempering parameter  must be selected to ensure that

must be selected to ensure that  is sufficiently small, so that the subsequent measure

is sufficiently small, so that the subsequent measure  is close to

is close to  thus preventing a sharp failure of the empirical approximation of

thus preventing a sharp failure of the empirical approximation of  (39). In particular, once the next tempering parameter

(39). In particular, once the next tempering parameter  is specified, we note from expression (42) that the importance weights for the approximation of

is specified, we note from expression (42) that the importance weights for the approximation of  are given by

are given by

Recognizing that the ESS in (40) quantifies weight degeneracy in SIR, the approach of [22] (see also [21]) proposes to define on-the-fly the next tempering parameter  by imposing a fixed, user-defined value Jthres on the ESS. More specifically,

by imposing a fixed, user-defined value Jthres on the ESS. More specifically,  is defined by the solution to the following equation:

is defined by the solution to the following equation:

which may, in turn, be solved by a simple bisection algorithm on the interval ![$(\phi_{n, r-1}, 1]$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn219.gif) . An approximation of

. An approximation of  is then given by the weighted particle set

is then given by the weighted particle set  . If at the r − 1 level, we find that

. If at the r − 1 level, we find that  , it implies that no further tempering is required and thus one can simply define

, it implies that no further tempering is required and thus one can simply define  . We note that the number of tempering steps qn is random.

. We note that the number of tempering steps qn is random.

While the tempering approach described above is aimed at preventing ESS from falling below a specified threshold Jthres and thus avoiding a sharp failure of the empirical approximation of  , resampling is still required to discard particles with very low weights. Let us then denote by

, resampling is still required to discard particles with very low weights. Let us then denote by  (

( ) the particles, with equal weights, that result from resampling with replacement of the set of particles

) the particles, with equal weights, that result from resampling with replacement of the set of particles  according to the weights

according to the weights  .

.

3.2.2. Mutation phase.

As stated in the preceding subsection, at the core of the SMC methodology is a mutation phase that adds diversity to the population of the resampled particles  . In the context of the tempering approach described above, this mutation is conducted by means of sampling from a Markov kernel

. In the context of the tempering approach described above, this mutation is conducted by means of sampling from a Markov kernel  with invariant distribution

with invariant distribution  . Similar to the approach of [22], here we consider mutations given by running

. Similar to the approach of [22], here we consider mutations given by running  steps of an MCMC algorithm with

steps of an MCMC algorithm with  as its target distribution. More specifically, we consider the preconditioned Crank–Nicolson (pcn)-MCMC method from [9] with target distribution

as its target distribution. More specifically, we consider the preconditioned Crank–Nicolson (pcn)-MCMC method from [9] with target distribution  and reference measure

and reference measure  . Formally, these two measures are related by

. Formally, these two measures are related by

The pcn-MCMC method for sapling  is summarised in algorithm 2 (see appendix B). Under reasonable assumptions this algorithm produces a

is summarised in algorithm 2 (see appendix B). Under reasonable assumptions this algorithm produces a  -invariant Markov kernel [22]. The resulting particles denoted by

-invariant Markov kernel [22]. The resulting particles denoted by  (

( ) then provide the following particle approximation of

) then provide the following particle approximation of  :

:

where the convergence is proven in a suitable metric for measures [1]. Note that at the end of the iteration r = qn, the corresponding particle approximation  provides the desired approximation of the posterior that arises from the Bayesian inverse problem of interest. This SMC sampler is summarized in algorithm 3 (see appendix B).

provides the desired approximation of the posterior that arises from the Bayesian inverse problem of interest. This SMC sampler is summarized in algorithm 3 (see appendix B).

Remark 3.1. For simplicity, here we use the resampling step at every iteration of the SMC sampler. However, whenever  (and so

(and so  ) the resampling step can be skipped; this involves using the corresponding weighted particle approximation and modifying the formula for the incremental weights as discussed in [22, section 4.3].

) the resampling step can be skipped; this involves using the corresponding weighted particle approximation and modifying the formula for the incremental weights as discussed in [22, section 4.3].

3.3. A note on tempering

Let us define the following inverse of the increment in tempering parameters:

and note that  implies

implies  . In addition, expression (42) can be written as

. In addition, expression (42) can be written as

where we have used the definition of the likelihood in (29). Informally, we can then interpret each iteration of the SMC sampler (at a given observation time tn) as the solution of a Bayesian inverse problem that consists of finding  given the prior

given the prior  and the data:

and the data:

From (48) and the fact that  , it follows that

, it follows that  . Therefore, (50) is nothing but the original problem (25) albeit with a noise

. Therefore, (50) is nothing but the original problem (25) albeit with a noise  that has an inflated covariance

that has an inflated covariance  .

.

We also note that  plays the role of a regularization parameter in the sense that it controls the transition between

plays the role of a regularization parameter in the sense that it controls the transition between  and

and  . The larger the

. The larger the  the smoother this transition. Alternatively, we can see that

the smoother this transition. Alternatively, we can see that  can be interpreted as a 'temperature' in the tempering scheme which, in turn, flattens out the likelihood function at the observation time tn. Clearly, more tempering will be required whenever

can be interpreted as a 'temperature' in the tempering scheme which, in turn, flattens out the likelihood function at the observation time tn. Clearly, more tempering will be required whenever  is large; this can for example happen if the observational data are accurate (i.e. small

is large; this can for example happen if the observational data are accurate (i.e. small  ) and/or many observations are available.

) and/or many observations are available.

The amount of tempering is controlled by the number of parameters obtained via (45). The greater the number of tempering parameters, the larger the  's which in turn indicates that more regularization is needed to ensure a stable transition between those measures. This has also, in turn, an associated increase in iterations and thus in computational cost.

's which in turn indicates that more regularization is needed to ensure a stable transition between those measures. This has also, in turn, an associated increase in iterations and thus in computational cost.

3.3.1. Computational aspects of SMC.

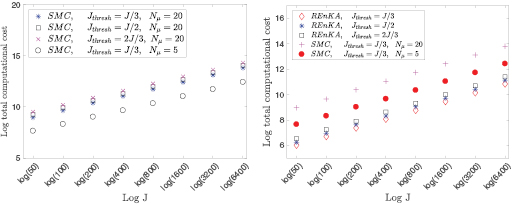

The main computational cost of the SMC sampler previously discussed is attributed to the mutation step for which  steps of the pcn-MCMC algorithm are performed. At each observation time tn and iteration r, the SMC sampler then requires

steps of the pcn-MCMC algorithm are performed. At each observation time tn and iteration r, the SMC sampler then requires  evaluations of the nth forward map

evaluations of the nth forward map  . Therefore, the computational cost of computing

. Therefore, the computational cost of computing  is

is  , where gn denotes the computational cost of evaluating

, where gn denotes the computational cost of evaluating  which, in turn, corresponds to solving the moving boundary problem from time t = 0 up to time tn. The total computational cost of computing the full sequence of posteriors

which, in turn, corresponds to solving the moving boundary problem from time t = 0 up to time tn. The total computational cost of computing the full sequence of posteriors  is then

is then

which is expressed in terms of gN, the cost of evaluating  (i.e. solving the forward model from time zero up to the final observation time).

(i.e. solving the forward model from time zero up to the final observation time).

The work of [22] has suggested that accurate approximations of the posterior via SMC samplers require, for example, values of  and J = 104. If we assume for a moment that only one observation time N = 1 is considered and that only one tempering step q1 = 1 is required to compute

and J = 104. If we assume for a moment that only one observation time N = 1 is considered and that only one tempering step q1 = 1 is required to compute  , the computational cost in this case would be approximately 105 times the cost of solving the forward model from time t = 0 up to time t1. Such cost would be clearly computationally prohibitive for practical applications, where the aforementioned forward simulation may take several minutes of CPU time. In particular, for the 2D or 3D version of the RTM process, the high computational cost of the SMC sampler becomes impractical. While reducing the values of J and

, the computational cost in this case would be approximately 105 times the cost of solving the forward model from time t = 0 up to time t1. Such cost would be clearly computationally prohibitive for practical applications, where the aforementioned forward simulation may take several minutes of CPU time. In particular, for the 2D or 3D version of the RTM process, the high computational cost of the SMC sampler becomes impractical. While reducing the values of J and  may result in a more affordable computational cost, this is substantially detrimental to the level of accuracy of the SMC sampler as we show via numerical experiments in section 3.5. Alternatively, a parallel implementation of the J forward model evaluations can substantially reduce the cost of this algorithm, which in turn, scales by a factor of J. However, within the manufacturing industry, the availability of computer resources that can deliver

may result in a more affordable computational cost, this is substantially detrimental to the level of accuracy of the SMC sampler as we show via numerical experiments in section 3.5. Alternatively, a parallel implementation of the J forward model evaluations can substantially reduce the cost of this algorithm, which in turn, scales by a factor of J. However, within the manufacturing industry, the availability of computer resources that can deliver  parallel simulations is the exception rather than the norm.

parallel simulations is the exception rather than the norm.

3.4. Numerical examples with SMC

In this subsection we report the results from the numerical application of the SMC sampler discussed in the previous subsection. The objective is to approximate the sequence of Bayesian posteriors that arise from the 1D moving boundary problem defined in section 2 for the experimental set-up described in section 3.4.1. In section 3.4.2 we discuss the numerical results obtained via the SMC sampler with a very high number of particles which results in accurate approximations of the Bayesian posteriors. These approximations are then used in section 3.5 to assess the practical limitations of the scheme under certain choices of tunable parameters and number of particles. These limitations motivate the approximate methods that we propose in section 4.

3.4.1. Experimental set-up.

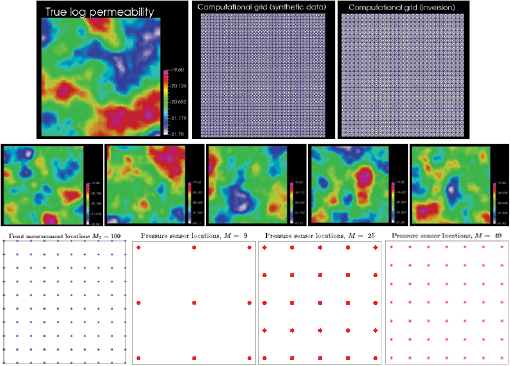

We consider a dimensionless version of the 1D model (13)–(17) which together with its numerical approximation is described in appendix C. The dimensionless values for the control variables are p0 = 1 and pI = 2. We use a Gaussian prior distribution  with the covariance operator

with the covariance operator  that arises from the covariance function defined in (27). We numerically solve (off-line) the eigenvalue problem associated to the matrix that results from discretizing

that arises from the covariance function defined in (27). We numerically solve (off-line) the eigenvalue problem associated to the matrix that results from discretizing  ; the corresponding eigenvector/eigenvalues are then stored for subsequent use in the parameterization of the log-permeability in the SMC sampler. The KL expansion (28) becomes a truncated sum with a number of elements equal to the the total number of eigenvalues of this matrix; these are, in turn, equal to the number of cells used for the discretization of the domain

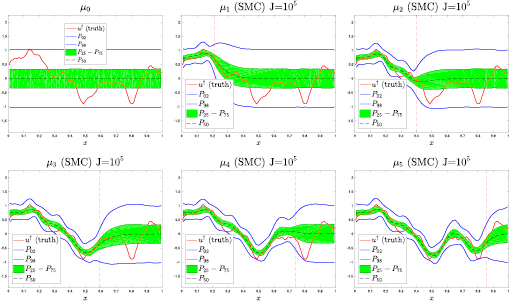

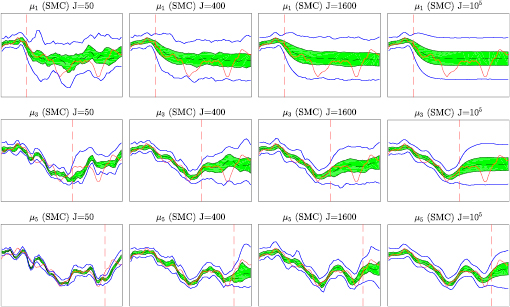

; the corresponding eigenvector/eigenvalues are then stored for subsequent use in the parameterization of the log-permeability in the SMC sampler. The KL expansion (28) becomes a truncated sum with a number of elements equal to the the total number of eigenvalues of this matrix; these are, in turn, equal to the number of cells used for the discretization of the domain ![$D^{\ast}=[0, 1]$](https://content.cld.iop.org/journals/0266-5611/34/10/105002/revision2/ipaad1ccieqn275.gif) . No further truncation to this KL expansion is carried out. A few samples from the prior are displayed in figure 2 (right). Pointwise percentiles (0.02, 0.25, 0.5, 0.75 and 0.98) of the prior are displayed in figure 3 (top-left). Tuneable parameters of the prior for the present experiments are

. No further truncation to this KL expansion is carried out. A few samples from the prior are displayed in figure 2 (right). Pointwise percentiles (0.02, 0.25, 0.5, 0.75 and 0.98) of the prior are displayed in figure 3 (top-left). Tuneable parameters of the prior for the present experiments are  ,

,  , l = 0.05 and

, l = 0.05 and  for all

for all  .

.

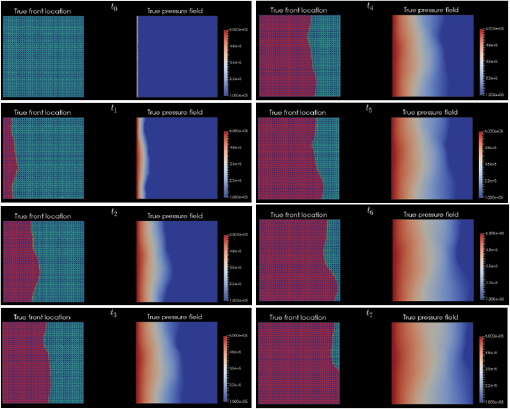

Figure 2. Left: true pressure field  and space-time measurement configuration with M = 9 sensors and N = 5 observation times. Middle: true pressure at observation times

and space-time measurement configuration with M = 9 sensors and N = 5 observation times. Middle: true pressure at observation times  (curves) together with the corresponding synthetic data

(curves) together with the corresponding synthetic data  (dots). Right: samples from the prior.

(dots). Right: samples from the prior.

Download figure:

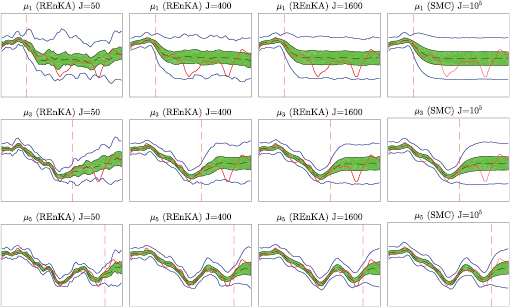

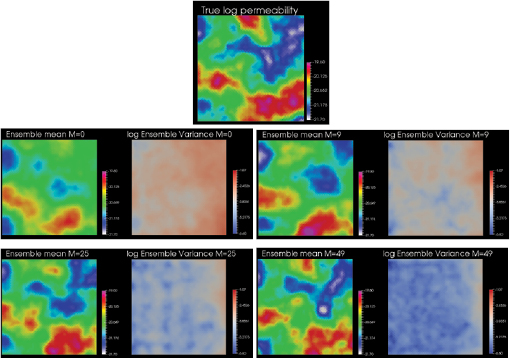

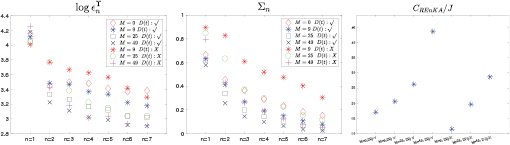

Standard image High-resolution imageFigure 3. Top-left: percentiles of the prior log-permeability  . Top-Middle to bottom-right: percentiles of the posteriors

. Top-Middle to bottom-right: percentiles of the posteriors  obtained via SMC with large number of samples J = 105. Solid red line corresponds to the graph of the true log-permeability

obtained via SMC with large number of samples J = 105. Solid red line corresponds to the graph of the true log-permeability  . Vertical dotted line indicates the location of the true front

. Vertical dotted line indicates the location of the true front  .

.

Download figure:

Standard image High-resolution imageIn order to generate synthetic data, we define the 'true/reference' log permeability field  whose graph (red curve) is displayed in figure 3 (top-left); this function is a random draw from the prior described above. We use

whose graph (red curve) is displayed in figure 3 (top-left); this function is a random draw from the prior described above. We use  in the numerical implementation of (13)–(17) in order to compute the true pressure field

in the numerical implementation of (13)–(17) in order to compute the true pressure field  as well as the true front location

as well as the true front location  . The plot of

. The plot of  is shown in figure 2 (left) together with the space-time configuration of M = 9 pressure sensors and N = 5 observation times. The graphs of

is shown in figure 2 (left) together with the space-time configuration of M = 9 pressure sensors and N = 5 observation times. The graphs of  are shown in figure 2 (middle). The true locations of the front

are shown in figure 2 (middle). The true locations of the front  are 0.21, 0.40, 0.58, 0.73 and 0.87. Synthetic data are then generated by means of

are 0.21, 0.40, 0.58, 0.73 and 0.87. Synthetic data are then generated by means of  and

and  , where

, where  and

and  are Gaussian noise (see section 2.2) with standard deviations equal to 1.5% of the size of the noise-free observations. Synthetic pressure data

are Gaussian noise (see section 2.2) with standard deviations equal to 1.5% of the size of the noise-free observations. Synthetic pressure data  are superimposed on the graphs of

are superimposed on the graphs of  in figure 2 (middle). Synthetic front locations

in figure 2 (middle). Synthetic front locations  are 0.21, 0.39, 0.59, 0.74, 0.86. In order to avoid inverse crimes, synthetic data are generated by using a finer discretization (with 120 cells) than the one used to approximate the posteriors (with 60 cells).

are 0.21, 0.39, 0.59, 0.74, 0.86. In order to avoid inverse crimes, synthetic data are generated by using a finer discretization (with 120 cells) than the one used to approximate the posteriors (with 60 cells).

3.4.2. Application of SMC.

In this subsection we report the application of the SMC sampler of [22] (see algorithm 3 in appendix B) which, as described in the preceding section, provides a particle approximation of each posterior that converges to the exact posterior measure  as the number of particles J goes to infinity. In order to achieve a high-level of accuracy we use J = 105 number of particles which is substantially larger compared to the number of particles (e.g. 103 to 104) often used in existing applications of SMC for high-dimensional inverse problems [8, 22]. In addition, we consider the selection of tunable parameters

as the number of particles J goes to infinity. In order to achieve a high-level of accuracy we use J = 105 number of particles which is substantially larger compared to the number of particles (e.g. 103 to 104) often used in existing applications of SMC for high-dimensional inverse problems [8, 22]. In addition, we consider the selection of tunable parameters  and

and  similar to the ones suggested in [22]. For each observation time tn, we store the ensemble of particles

similar to the ones suggested in [22]. For each observation time tn, we store the ensemble of particles  that approximates the corresponding posterior