Abstract

Geodesy, the oldest science, has become an important discipline in the geosciences, in large part by enhancing Global Positioning System (GPS) capabilities over the last 35 years well beyond the satellite constellation's original design. The ability of GPS geodesy to estimate 3D positions with millimeter-level precision with respect to a global terrestrial reference frame has contributed to significant advances in geophysics, seismology, atmospheric science, hydrology, and natural hazard science. Monitoring the changes in the positions or trajectories of GPS instruments on the Earth's land and water surfaces, in the atmosphere, or in space, is important for both theory and applications, from an improved understanding of tectonic and magmatic processes to developing systems for mitigating the impact of natural hazards on society and the environment. Besides accurate positioning, all disturbances in the propagation of the transmitted GPS radio signals from satellite to receiver are mined for information, from troposphere and ionosphere delays for weather, climate, and natural hazard applications, to disturbances in the signals due to multipath reflections from the solid ground, water, and ice for environmental applications. We review the relevant concepts of geodetic theory, data analysis, and physical modeling for a myriad of processes at multiple spatial and temporal scales, and discuss the extensive global infrastructure that has been built to support GPS geodesy consisting of thousands of continuously operating stations. We also discuss the integration of heterogeneous and complementary data sets from geodesy, seismology, and geology, focusing on crustal deformation applications and early warning systems for natural hazards.

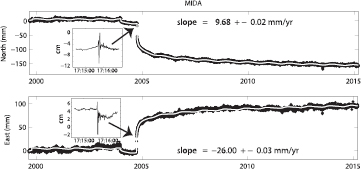

Corresponding Editor Michael Bevis

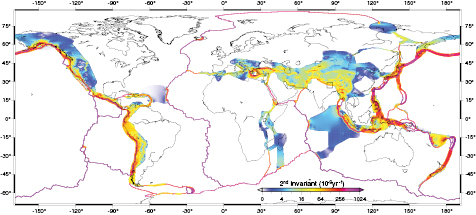

1. Introduction

Global Positioning System (GPS) geodesy deploys precision instruments (GPS receivers and antennas) on platforms, static or dynamic, on the Earth's land and water surfaces, in the atmosphere or in space, to observe the constellation of GPS satellites and to explore a wide range of physical processes in the Earth system. One of the goals is to obtain precise estimates of 3D positions and their displacements in space and time in the case of quasi-static platforms and trajectories in the case of dynamic platforms, although the distinction is somewhat arbitrary. The underlying physical processes of interest may have temporal scales ranging from fractions of seconds (e.g. seismology) to millions of years (e.g. tectonic plate motion) and spatial scales from several meters (e.g. individual geologic faults) to global (e.g. polar motion). One of the advantages of the GPS is that, compared to other measurement systems in geodesy and seismology, it provides positions with respect to a global terrestrial reference frame. Besides precise measurement of point positions and their changes over time that are indicative of dynamic surficial and subsurface processes, all disturbances in the propagation of the transmitted GPS signal from satellite to receiver, including troposphere and ionosphere refraction, receiver multipath, and the reflections of signals on solid ground, water and ice, may be mined for physical signals. In this review, we emphasize the stringent positioning accuracy requirements of GPS geodesy that differentiate it from the ubiquitous use of GPS in everyday life (e.g. smartphone mapping applications, vehicle navigation). Regardless of the parameter of interest or the temporal resolution, GPS geodesy is defined by the requirement to estimate 3D platform trajectories with millimeter- to centimeter-level precision; by comparison, most personal and commercial navigation and mapping applications of the GPS only require meter-level precision.

This review concentrates on applications that benefit from the considerable database of precise observations collected over the last 35 years, as well as more recent and novel applications. The focus is the role that the GPS is playing in improving our current knowledge of the dynamic Earth, as well as how this knowledge can be used to address hazards with significant societal impact. We selectively include equations, graphics, and videos to better demonstrate concepts, rather than providing a textbook-like review. Appropriate historical references provide a cursory overview of other geodetic methods, modern as well as historical. The combination of GPS observations with other types of data from geodesy, seismology, and geology, is dealt with in greater detail. We also discuss outstanding problems in the geosciences, where improvements in GPS and the availability of other emerging satellite constellations will be of benefit. Note that the general class of satellite constellations is referred to as Global Navigation Satellite Systems (GNSS); as more navigation systems become available 'GPS geodesy' and 'GNSS geodesy' are being used interchangeably.

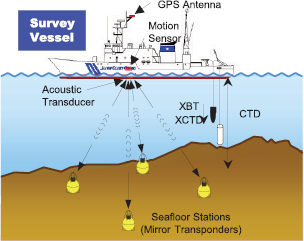

There are several review articles, books, and reports that may be of interest: theoretical and technical aspects of the GPS and geodetic theory (Hofmann-Wellenhof 1993, Leick 2004, Parkinson et al 1996, Teunissen and Kleusberg 1998, Hofmann-Wellenhof et al 2007, Misra and Enge 2006, Blewitt 2007), tectonic geodesy (Segall and Davis 1997, Bürgmann and Thatcher 2013), seafloor geodesy (Bürgmann and Chadwell 2014), volcano geodesy (Dzurisin 2006, Segall 2010), international conventions for GPS data analysis (Petit and Luzum 2010), and grand challenges facing geodesy (Davis et al 2012a).

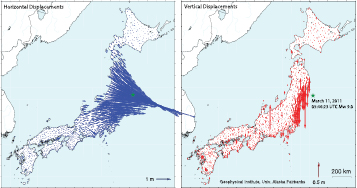

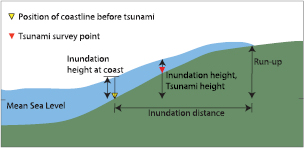

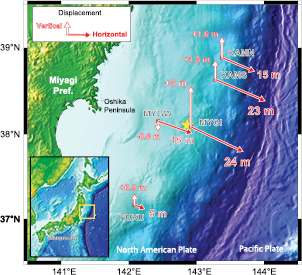

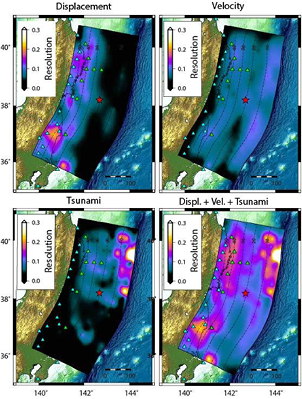

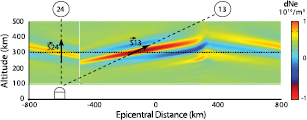

To put this review into perspective and to highlight the significant contributions of GPS geodesy to society, we discuss its impact within the context of several recent global-scale catastrophes. In a little over a decade the world has witnessed a terrible number of casualties and severe economic disruption from several great subduction-zone earthquakes and tsunamis. Two events that we frequently cite in this review are the 26 December 2004 Mw 9.3 Sumatra–Andaman event and the 11 March 2011 Mw 9.0 Tohoku-oki (Great Japan) earthquake. The former resulted in over 250 000 casualties, the majority of them on the nearby Sumatra, Indonesia mainland, with tsunami inundation heights of up to 30 m (Paris et al 2007). The latter generated a tsunami with inundation heights as high as 40 m, resulting in over 18 000 casualties (Mimura et al 2011, Mori et al 2012, Yun and Hamada 2014).

2. GPS overview

2.1. Constellation and signals

The Global Positioning System is a satellite constellation operated by the United States Department of Defense (Air Force) to support military and civilian positioning, navigation, and timing. The first satellite was launched in 1978, with multiple generations of satellites thereafter. The orbital periods are approximately 12 h, so that the satellites are visible over the same ground point about every 24 h. Currently there are 32 satellites in operation in 6 orbital planes. At least five satellites are visible to support instantaneous positioning at any geographic location, and often up to 12 are visible. Standard civilian applications require the observation of signal propagation time (expressed in terms of equivalent range or distance) from at least four satellites in order to trilaterate the 3D position of the user and to estimate a clock/timing bias; additional observations provide redundancy and estimates of position uncertainties.
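The four-satellite requirement can be illustrated with a minimal sketch that solves for three position coordinates and one receiver clock bias by iterated least squares. The function name `solve_position`, the satellite geometry, the receiver coordinates, and the 1 ms clock error are all hypothetical values chosen for illustration; the pseudoranges are synthetic and noise-free, and no atmospheric or satellite-clock effects are modeled.

```python
import numpy as np

def solve_position(sat_pos, pseudoranges, n_iter=10):
    """Gauss-Newton estimate of receiver position (m) and clock bias (m)."""
    x = np.zeros(4)  # [X, Y, Z, clock bias]; start at the Earth's center
    for _ in range(n_iter):
        diff = x[:3] - sat_pos                    # satellite-to-receiver vectors
        rho = np.linalg.norm(diff, axis=1)        # geometric ranges
        residual = pseudoranges - (rho + x[3])    # observed minus computed
        # Design matrix: unit line-of-sight vectors plus a clock-bias column
        design = np.hstack([diff / rho[:, None], np.ones((len(rho), 1))])
        dx, *_ = np.linalg.lstsq(design, residual, rcond=None)
        x = x + dx
    return x[:3], x[3]

# Hypothetical, noise-free test geometry (all values are illustrative)
true_pos = np.array([-2455e3, -4768e3, 3441e3])   # receiver, Earth-fixed (m)
true_bias = 299_792.458                           # 1 ms clock error, in m
directions = np.array([[-0.385, -0.748, 0.540],
                       [-0.8, -0.5, 0.3],
                       [0.3, -0.9, 0.1],
                       [-0.3, -0.4, 0.87],
                       [-0.9, -0.3, -0.3]])
sat_pos = 26_560e3 * directions / np.linalg.norm(directions, axis=1)[:, None]
pseudoranges = np.linalg.norm(sat_pos - true_pos, axis=1) + true_bias

est_pos, est_bias = solve_position(sat_pos, pseudoranges)
print(est_pos - true_pos, est_bias - true_bias)
```

With five satellites the system is overdetermined by one observation, which is the redundancy the paragraph above refers to; with real data the least-squares residuals would also inform the position uncertainties.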

Atomic clocks on the satellites produce the fundamental radio frequency of 10.23 MHz, and the satellites transmit on two carrier frequencies that are integer multiples (154 and 120) of it, called L1 (1575.42 MHz) and L2 (1227.60 MHz). Since the ionospheric effects on the signal are dispersive and, to first order, inversely proportional to the square of the frequency, first-order effects on the propagated signal can be canceled by means of a simple linear combination of L1 and L2 observations.
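As a hedged illustration of that linear combination, the sketch below forms the standard first-order ionosphere-free combination of two pseudoranges. The range, the total electron content (50 TECU), and the function name `ionosphere_free` are assumptions made for the example; the delay model 40.3·TEC/f² is the usual first-order approximation, with TEC in electrons per square meter and f in Hz.

```python
F0 = 10.23e6            # fundamental frequency (Hz)
F1 = 154 * F0           # L1 carrier, 1575.42 MHz
F2 = 120 * F0           # L2 carrier, 1227.60 MHz

def ionosphere_free(obs1, obs2, f1=F1, f2=F2):
    """First-order ionosphere-free combination of two like observations (m)."""
    g = f1**2 / (f1**2 - f2**2)       # about 2.546 for L1/L2
    return g * obs1 - (g - 1.0) * obs2

# Synthetic pseudoranges: true range plus a first-order delay K / f^2
true_range = 22_000_000.0             # m (hypothetical)
K = 40.3 * 50e16                      # 40.3 * TEC, TEC = 50 TECU (hypothetical)
p1 = true_range + K / F1**2           # delayed by roughly 8 m on L1
p2 = true_range + K / F2**2           # delayed by roughly 13 m on L2
p_if = ionosphere_free(p1, p2)

print(p1 - true_range, p2 - true_range, p_if - true_range)
```

The combination removes the dispersive term exactly in this idealized model, at the cost of amplifying measurement noise by the coefficients of the combination.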

A pseudorange measurement is derived from the measured travel time between a particular satellite and a GPS instrument, biased by instability in the satellite and receiver clocks, hence 'pseudo' range. There are two types of pseudorange codes that are modulated onto the L1 and L2 signals. The precision (P-) code originally intended for military applications is at the fundamental frequency of 10.23 MHz (an effective wavelength of ~30 m) and is modulated onto both the L1 and L2 signals. The P-code is encrypted by the US military (called anti-spoofing) to avoid jamming by adversaries in times of war. The Coarse Acquisition (C/A) code originally intended for civilian users at a tenth of the fundamental frequency (an effective wavelength of ~300 m) was originally only modulated onto the L1 signal, the intent being to limit the stand-alone point positioning accuracy of civilian users by precluding the ionospheric correction, increasing the effective wavelength, and distorting ('dithering') the satellite clock frequency (called selective availability or SA). SA was an intentional degradation of public GPS signals implemented for national security reasons but was discontinued in May 2000 to support civilian and commercial users; it was announced in September 2007 that the newest generation of satellites (GPS III) will not have the SA feature. Furthermore, as stated in the US Government GPS website 'The United States has no intent to ever use Selective Availability again'. Also modulated onto the L1 signal is the broadcast ephemeris message, which includes a description of the satellite orbit at any point in time (orbital elements), timing information, and atmospheric propagation models. The transmissions are maintained by the US Air Force's GPS Ground Control Segment. Consumer devices such as smart phones and vehicle navigation systems only track the C/A code on L1, which limits precision to the several meter level. 
The precision can be significantly improved to about a meter by using, where available, differential corrections via satellite-based augmentation systems (Van Diggelen 2009).

The GPS is in the process of modernization with the addition of civilian and military signals. Modernized GPS satellites will include four civilian signals. In addition to the C/A signal at L1 (1575.42 MHz) there is a new L2C signal at L2 (1227.6 MHz) allowing for redundancy and ionospheric corrections for users with C/A code receivers. Another new signal L1C at L1 frequency will be available with the latest generation of GPS satellites (Block III) designed to be backwards compatible with the original L1 C/A code signal and with signals from the European Union satellite constellation, Galileo. The United States Air Force began broadcasting pre-operational L2C and L5 civil navigation (CNAV) messages from a few satellites beginning in April 2014. Another broadband civil signal for 'safety of life' aviation applications will be broadcast at L5 (1176.45 MHz). At the time of this writing (August, 2015), there are 17 L2C-enabled satellites and nine L5-transmitting satellites. The US government intends to have 24 satellites broadcasting the L2C signal by 2018 and the L5 signal by 2024. The military M-code for more secure access includes two new encrypted P(Y) signals transmitted at both L1 and L2. The impact of GPS modernization on geodesy will be significant by allowing a new generation of geodetic-quality receivers to replace the proprietary codeless and semi-codeless receivers developed since the 1980s to circumvent P-code civilian restrictions. Furthermore, the addition of a third frequency will provide more accurate, robust, and efficient positioning, in particular for real-time geodetic applications (Hatch et al 2000, Geng and Bock 2013). Observations of other GNSS constellations (e.g. GLONASS, Russia; Galileo, European Union; BeiDou, China) will also have a similar impact through super redundancy and the number of visible satellites, providing improved positioning in urban and other environments with limited satellite visibility. 
GLONASS has been fully operational since 2012 and is increasingly being used for geodetic, precise surveying, and engineering applications.

2.2. GPS infrastructure and surveys

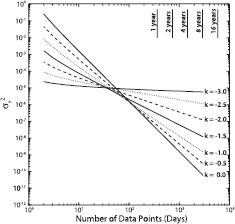

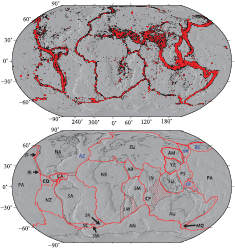

GPS geodesy was born when it was realized early on in the implementation of the GPS constellation that the measurement of phase observations of the L1 and L2 carrier signals at two nearby observing stations several kilometers apart could provide mm-level relative positional accuracy (Bossler et al 1980, Counselman and Gourevitch 1981, Remondi 1984, 1985). These early developments spurred the gradual deployment of a global infrastructure of civilian GPS tracking stations, beginning in the mid-1980s and distinct from the US Air Force's GPS Ground Control Segment. The primary benefit was improved satellite orbit precision, albeit after the fact, compared to the orbital elements continuously broadcast by the satellites. Precise mm- to cm-level positioning then became possible at longer and longer distances, eventually at global scales, and from post-processed analysis of days of data to instantaneous real-time positioning. Today, the global GPS infrastructure is well developed under the auspices of the International GNSS Service (IGS), a collaborative and mostly volunteer effort of scientists, with hundreds of globally distributed GPS tracking stations (figure 1), eight global analysis centers, five global data centers, and a central bureau which coordinates efforts to continuously advance the precision and reliability of precise GPS applications. The service also develops and maintains standards for instrumentation and station deployments, data formats, data analysis, and data archiving (Dow et al 2009, Noll et al 2009). The global analysis centers provide precise GPS satellite orbits and clock estimates and Earth orientation parameters (section 2.5.4), which are consistent with the International Terrestrial Reference Frame (ITRF—Altamimi et al 2011) and international conventions (section 2.5.3). Many of the IGS stations have been converted to real-time operations. 
The data are transmitted with a latency of about 1 s to support, for example, earthquake and tsunami early warning systems (sections 5.2 and 5.3), volcano monitoring (section 5.4), and GPS meteorology (section 6.1.3). The IGS infrastructure is essential for achieving consistent mm-level GPS geodesy. The global network also supports climate research (section 7), including sea level rise, changes in the cryosphere, atmospheric variations in water vapor and temperature, and environmental applications (section 8). The globally distributed stations, exclusive of stations experiencing plate boundary deformation, also provide estimates of current plate motions that can be compared to the ~3 Myr geological record (section 4.3).

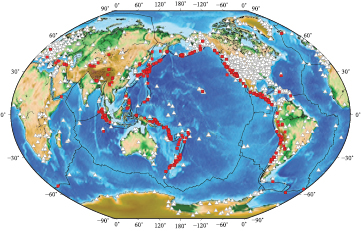

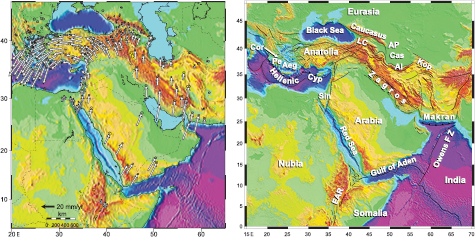

Figure 1. Thousands of continuous GPS stations (white triangles) established for global and regional geodetic applications, earthquakes greater than magnitude 5 (brown squares) since 1990, and major tectonic plate boundaries (black lines). The map is centered on the Pacific Rim, which along with the Indonesian archipelago contains the world's major subduction zones and has produced nine of the ten largest earthquakes in recorded history.

Regional-scale networks, as a natural extension and densification of the global network, now provide coverage of most plate boundaries, primarily by permanent and continuously operating reference stations, referred to as continuous GPS (cGPS) (figure 2). In the early 1990s the first cGPS networks were established in southern California (Bock et al 1997) and the concept quickly spread to other plate boundary zones, and to continental interiors to support surveying and transportation (e.g. Snay and Soler 2008). In this mode, GPS instruments are deployed in secure fixed facilities with a source of power and a communications link to a central facility, and operated autonomously (figure 2). The costs to install a station are significant, but once established maintenance is cost-effective. Initially, cGPS networks recorded data at a 15–30 s interval and data were downloaded to a central facility several times a day to once a day. In the last decade with improvements in data communications and computing power, many stations operate in real time; data are sampled at 1–10 Hz and continuously transmitted to users and to central facilities with a latency of about 1 s. As an example of a robust national network, there are about 1200 real-time stations operating in Japan for monitoring crustal deformation and to support land surveying (Sagiya et al 2000). More than 650 real-time stations are operating for crustal deformation studies and natural hazards mitigation in the Western US and Canada.

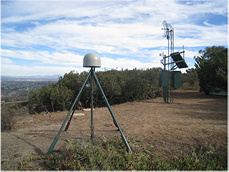

Figure 2. Monument and antenna (under the dome) of a typical cGPS station for monitoring tectonic plate boundary deformation (section 4) and atmospheric water vapor (section 6.1.3), in La Jolla, California. The small white box on the vertical leg contains a MEMS accelerometer used for seismogeodesy (section 5.2.3). See also figure 7 for a schematic of the monument. In the background are GPS equipment enclosures, solar panels, radio antenna for real-time transmission of data, and meteorological instruments. Photo courtesy of D Glen Offield.

A cost effective means of further densification is episodic or 'survey-mode' measurements, referred to as sGPS. Prior to the existence of extensive regional networks, this mode was used almost exclusively in GPS geodesy. These surveys can be as accurate as cGPS over the long term if carefully executed, and short-term noise is reduced through multiday occupations. They provide more flexibility than cGPS in establishing inexpensive monuments (e.g. on bedrock), and are particularly useful for local geologic fault crossing surveys or for repeated long-term measurements in remote locations where permanent installations are impractical. GPS surveys have also been conducted in the epicentral regions of large earthquakes in rapid response to seismic events to record coseismic (section 4.7) and postseismic (section 4.6) deformation. However, sGPS is often logistically complex and manpower intensive; it requires teams of surveyors to repeatedly visit and occupy monuments and careful execution in order to replicate to the greatest extent possible the procedures and types of equipment used during each survey. Furthermore, it is of limited use for deriving appropriate noise models for GPS analysis (section 3.3) and for recording transient deformation (section 4.8), but can add information in both cases if the survey points are near cGPS stations, and if deployed expeditiously after a seismic or volcanic event. An intermediate approach involves semi-continuous measurements where instruments operate from 1–3 months at a sub-network of stations and are then moved within a network maintaining some station overlap until the full network is surveyed (Bevis et al 1997, Blewitt et al 2009a, Beavan et al 2010). This process is then repeated to measure station displacements.

2.3. Physical models and analysis

2.3.1. Observables.

GPS geodesy exploits the signal carrier waves to record 'carrier beat phase' observations at both L1 and L2 frequencies, which in simple terms result from the correlation of the signal observed by the receiver with its replica generated by the receiver. The unmodulated carrier wave on either the L1 or L2 frequency can be expressed as

$$y = A\cos(\phi + \phi_o), \qquad \phi = \omega t - kx, \qquad (1)$$

where $\phi$ is the carrier phase, $\phi_o$ is the unknown initial phase, $A$ is the amplitude, $\omega$ is the angular frequency, and $k$ is the wave number. By definition, the signal has phase velocity

$$v_p = \frac{\omega}{k} = \lambda f = \frac{c}{n}, \qquad (2)$$

with frequency $f$, wavelength $\lambda$, index of refraction n, and the speed of light in a vacuum c. The signal on each frequency is modulated by the various codes including the P-code, C/A code (presently only on L1 and L2C, for the newest satellites), and the satellite navigation message ('the broadcast ephemeris'). The group velocity and phase velocity are related by

$$v_g = v_p - \lambda\frac{dv_p}{d\lambda}. \qquad (3)$$

Since $\lambda > 0$ and $dv_p/d\lambda > 0$ for refraction through the ionosphere and troposphere, the group velocity is always less than the phase velocity, except in propagation through a vacuum, where $dv_p/d\lambda = 0$ and $v_g = v_p = c$. It follows that the pseudorange measurements are delayed, while the carrier phase measurements are advanced.

The GPS carrier phase and pseudorange observations are essentially the radio signal time of travel at multiple frequencies from a particular satellite to receiver. The phase measurements are inherently more precise than their corresponding pseudorange measurements because of their effective wavelengths. Considering that the phase and pseudorange measurements with a geodetic GPS instrument can be made with a precision of about 1% of their wavelengths, this translates into 0.002 m in distance for phase compared to 0.3 m and 3 m for P-code and C/A code, respectively. However, the total number of full carrier wave cycles travelled by the GPS signal is unknown and is referred to as the integer-cycle phase ambiguity. The GPS carrier beat phase observations that result from the receiver cross correlation are a fraction of a cycle, or modulo $2\pi$ of the total number of cycles travelled from satellite to receiver. It is beneficial to estimate the number of integer cycles in order to achieve the highest geodetic precision, especially for real-time applications. The pseudorange measurements, although much less precise than the phase measurements, provide valuable constraints on the process of integer-cycle phase ambiguity resolution (section 2.4), and hence on the overall precision of GPS geodesy.
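The 1%-of-wavelength figures quoted above can be checked with a few lines of arithmetic. This is only a sketch of that calculation; the dictionary names are ours, and the code chipping rates (10.23 MHz for the P-code, one tenth of that for the C/A code) are those given in section 2.1.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)

# Carrier frequencies and code chipping rates (Hz)
signals = {
    "L1 carrier": 154 * 10.23e6,
    "L2 carrier": 120 * 10.23e6,
    "P-code": 10.23e6,
    "C/A code": 1.023e6,
}

# Effective wavelength and the 1% measurement precision, both in meters
wavelength = {name: C / f for name, f in signals.items()}
precision = {name: 0.01 * lam for name, lam in wavelength.items()}

for name in signals:
    print(f"{name:10s}  wavelength {wavelength[name]:10.4f} m"
          f"  1% precision {precision[name]:8.4f} m")
```

The roughly 150-fold gap between carrier and P-code precision is why the phase observations carry the geodetic signal, while the pseudoranges mainly help constrain the ambiguities.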

The basic elements of precise GPS positioning can be summarized as a quartet of idealized equations for the phase observations ($L_1$, $L_2$), in distance units, and pseudorange observations ($P_1$, $P_2$) at frequencies $f_1$ and $f_2$ by

$$\begin{aligned}
L_1 &= \rho - \frac{I}{f_1^2} + \lambda_1 N_1 \\
L_2 &= \rho - \frac{I}{f_2^2} + \lambda_2 N_2 \\
P_1 &= \rho + \frac{I}{f_1^2} \\
P_2 &= \rho + \frac{I}{f_2^2}
\end{aligned} \qquad (4)$$

where $\rho$ (5) denotes the non-dispersive signal travel distance (the 'geometric term'), $I$ is the (dispersive) ionospheric effect, and $N_1$ and $N_2$ are the integer-cycle phase ambiguities. The objective is to estimate the station position (embedded in $\rho$), while fixing the ambiguities to their integer values in the presence of ionospheric refraction (section 2.4). In reality, as discussed in the next two sections, the direct GPS signals from satellite antenna to receiver antenna are also perturbed by the non-dispersive neutral atmosphere (the troposphere) and are interfered with by indirect signals (multipath), for example, reflections off objects nearby the GPS antenna. Furthermore, the signal is perturbed by imperfect receiver and satellite clocks, introducing timing and 'clock' biases. Of course, the measurements themselves are subject to error due, for example, to thermal noise in the GPS receiver; errors can vary from one type of GPS receiver to the other. A physical and stochastic model used to invert the observations for the parameters of interest (e.g. position) is discussed in section 2.3.3. Other factors to be considered are phase wind up due to the electromagnetic nature of circularly polarized waves (Wu et al 1993), realizations of reference systems (section 2.5), Earth tides (section 2.5.1), and relativistic effects (section 2.5.5). First, we consider the precise definition of the point to be positioned, antenna phase centers, and metadata.
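To show how pseudoranges can constrain the integer ambiguities, the sketch below simulates the idealized quartet in the form assumed here (phase = ρ − I/f² + λN, pseudorange = ρ + I/f²) and recovers the wide-lane ambiguity N₁ − N₂ with the Melbourne-Wübbena combination. That combination is a standard technique that we bring in for illustration and may differ from the treatment in section 2.4. The range, ionospheric term, and ambiguity values are hypothetical, and the data are noise-free.

```python
C = 299_792_458.0                       # speed of light in vacuum (m/s)
F1, F2 = 154 * 10.23e6, 120 * 10.23e6   # L1 and L2 carrier frequencies (Hz)
LAM1, LAM2 = C / F1, C / F2             # carrier wavelengths (m)

def simulate(rho, iono, n1, n2):
    """Idealized phase (l1, l2) and pseudorange (p1, p2) observations, in m."""
    l1 = rho - iono / F1**2 + LAM1 * n1
    l2 = rho - iono / F2**2 + LAM2 * n2
    p1 = rho + iono / F1**2
    p2 = rho + iono / F2**2
    return l1, l2, p1, p2

def wide_lane_ambiguity(l1, l2, p1, p2):
    """Melbourne-Wubbena estimate of N1 - N2; geometry and ionosphere cancel."""
    lam_w = C / (F1 - F2)                           # wide-lane wavelength, ~0.86 m
    wide_phase = (F1 * l1 - F2 * l2) / (F1 - F2)    # rho + I/(f1 f2) + lam_w * (N1 - N2)
    narrow_code = (F1 * p1 + F2 * p2) / (F1 + F2)   # rho + I/(f1 f2)
    return round((wide_phase - narrow_code) / lam_w), lam_w

# Hypothetical values for illustration only
N1, N2 = 1_200_345, 935_210
obs = simulate(rho=21_500_000.0, iono=40.3 * 30e16, n1=N1, n2=N2)
n_wide, lam_w = wide_lane_ambiguity(*obs)
print(n_wide, N1 - N2, round(lam_w, 3))
```

With real observations the pseudorange noise is a sizable fraction of the 0.86 m wide-lane wavelength, so the combination is normally averaged over many epochs before rounding.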

2.3.2. Phase centers, geodetic marks, and metadata.

To achieve mm-level geodetic precision it is necessary to clearly identify the phase centers of the transmitting satellite antenna (Zhu et al 2003) and receiving ground antenna (Mader 1999), and the exact point ('geodetic mark', 'survey marker') to be positioned. Phase center variations are typically calibrated and applied as known corrections in GPS analysis. There are two types of calibrations for ground antennas. Relative calibrations are derived from GPS observations between two antennas separated by tens of meters at locations with precisely known coordinates and relatively benign multipath environments, with one type of antenna used as the master for calibrations of other types of antenna. Absolute calibrations are derived from a single robot-mounted GPS antenna collecting thousands of observations at different orientations, or by observations within an anechoic chamber (Rothacher 2001). The global GPS community, through the IGS, maintains absolute phase center correction values for all known geodetic antennas, with and without antenna covers ('radomes') (figure 2), at both L1 and L2 frequencies, and for transmitting antennas of different types of satellites (e.g. GPS Block II or GLONASS-M). For ground antennas, the corrections include offsets (in the north, east, and up directions) relative to a particular antenna's reference (mounting) point and variations (<10 mm) as a function of satellite elevation angle and azimuth in 5° increments. In practice, the corrections are imperfect and changes in antenna types will often result in spurious offsets in position. Therefore, changes in antenna type are avoided whenever possible at cGPS stations as well as during repeated field surveys (it is most important in the latter since it is easier to correct for spurious offsets in continuous position time series, see section 3.2). Antennas for cGPS and sGPS are oriented to true north to reduce azimuthal effects and to be consistent with the calibration corrections.

Geodetic applications require a precise definition of the point to be positioned and its relationship to the antenna's phase center. This is typically given as the vertical antenna 'height' although there may be horizontal offsets, as well. The antenna height could be zero if the point is defined at the antenna reference point with respect to which the antenna was calibrated, a small non-zero value if the point is referred to a physical location on an antenna adapter (e.g. 0.0083 m for an adapter specially designed and used in many cGPS networks), or it could be on the order of 1–2 m if the point is defined as a small indentation in a geodetic marker on the ground ('monument'), over which a surveyor's tripod may be mounted. In any case, there are three steps involved in mounting a GPS antenna: mechanical connection, leveling, and alignment. The GPS antenna mount needs to be level with respect to the direction of the local gravity field and centered (for example, with a plumb bob) over the mark. Typically, for a cGPS station intended for monitoring crustal deformation, a permanent and rigid monument is constructed (figure 2, see also figure 7) that is isolated from the surface and driven to depth or anchored to bedrock to reduce local surface deformations due to soil contraction, desiccation, or weathering (Williams et al 2004, Beavan 2005), but less expensive spike mounts, rock pins, masts, building mounts, concrete pillars, etc are also used. The differences and advantages of differing anchoring methods (figure 7) are discussed in section 3.3 in terms of noise characteristics. As an antenna support, cGPS-type anchored monuments have the advantage of being less vulnerable to disturbance. For sGPS, a surveyor's wooden or metal tripod is often used as a base for the antenna, but so are spike mounts and masts. 
If a surveyor's tribrach (a device for leveling and centering that sits atop a tripod and on which the antenna is mounted) is used, it needs laboratory calibration so as not to introduce a bias into the station position. Alternatively, a rotating optical plummet can be used effectively without calibration. Masts and spike mounts require essentially no calibration. Higher mounted antennas such as a surveyor's tripod or a mast have an advantage over a spike mount in avoiding low elevation-angle obstructions and being less susceptible to low-frequency multipath. A spike mount is less susceptible to operator setup error than a movable tripod or mast and can be left unattended without being seen, a safety issue that can increase survey productivity.

It is critical, in order to achieve the mm-level position precision required by GPS geodesy, to properly record and archive the appropriate 'metadata' for a cGPS or sGPS deployment. At a minimum, metadata include antenna type and serial number, receiver type, serial number and firmware version, antenna eccentricities (height and any horizontal offsets), antenna phase calibration values, and dates when changes in any of these have occurred. Under the global framework of the IGS, keeping the metadata up to date is the responsibility of the Global Archive Centers.
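One way to keep such metadata usable in processing is a dated record per equipment configuration, so that the values in effect at any observation epoch can be looked up. The sketch below is a hypothetical data structure, not an IGS format; the class, field names, station identifier, and equipment strings are placeholders, and only the 0.0083 m adapter height comes from the text above.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class StationMetadata:
    station_id: str
    effective_from: date          # date this configuration was installed
    antenna_type: str
    antenna_serial: str
    receiver_type: str
    receiver_serial: str
    firmware_version: str
    antenna_height_m: float       # vertical eccentricity
    offset_north_m: float = 0.0   # horizontal eccentricities
    offset_east_m: float = 0.0
    phase_calibration_id: str = ""  # which calibration table applies

def metadata_for(history, epoch):
    """Return the configuration in effect on the given date."""
    valid = [m for m in history if m.effective_from <= epoch]
    if not valid:
        raise ValueError("no metadata on or before the requested date")
    return max(valid, key=lambda m: m.effective_from)

# Hypothetical history with one antenna change (placeholder names throughout)
history = [
    StationMetadata("STA1", date(2010, 1, 15), "ANTENNA_MODEL_A", "A-001",
                    "RECEIVER_MODEL_X", "X-100", "1.0", 0.0083),
    StationMetadata("STA1", date(2014, 6, 1), "ANTENNA_MODEL_B", "B-042",
                    "RECEIVER_MODEL_X", "X-100", "1.2", 0.0083),
]
before = metadata_for(history, date(2012, 3, 1))
after = metadata_for(history, date(2015, 8, 1))
print(before.antenna_type, "->", after.antenna_type)
```

Recording each change as a new dated entry, rather than overwriting, preserves the dates needed to identify spurious offsets in position time series.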

2.3.3. Physical models and observation equations.

Now we present basic functional and stochastic models ('observation equations') that relate the GPS observables and the physical parameters of interest, for example station position, followed by an inversion of the observation equations to estimate the parameters. We assume that the satellite and receiver antenna phase centers and geodetic mark have been well defined and that the metadata are accurate.

GPS signal propagation in a vacuum ('the geometric term') is given by

$$\rho_i^j(t) = \left\| \mathbf{r}^j(t - \tau_i^j) - \mathbf{r}_i(t) \right\| \qquad (5)$$

a non-linear function of the satellite position vector $\mathbf{r}^j$ at the time of signal transmission $t - \tau_i^j$ and the receiver position vector $\mathbf{r}_i$ at the time of reception $t$. The receiver position is defined in a right-handed Earth-fixed, Earth-centered terrestrial reference frame (section 2.5.3) by

$$\mathbf{r}_i(t) = \left[ X_i(t),\; Y_i(t),\; Z_i(t) \right]^T \qquad (6)$$

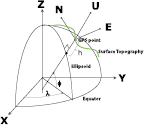

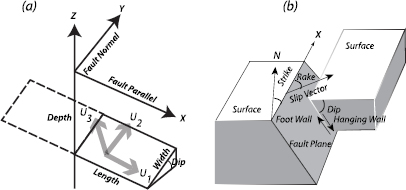

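By convention, the X- and Y-axes are in the Earth's equatorial plane with X in the direction of the point of zero longitude and the Z-axis in the direction of the Earth's pole of rotation (see section 2.5.3 for a more precise definition and figure 3). The satellite position at any epoch of time is described, in state vector representation, by the satellite's equations of motion in a geocentric inertial (celestial) reference frame (section 2.5.2) as

$$\frac{d}{dt}\mathbf{r}^j = \dot{\mathbf{r}}^j \qquad (7)$$

$$\frac{d}{dt}\dot{\mathbf{r}}^j = -\frac{GM}{r^3}\,\mathbf{r}^j + \ddot{\mathbf{r}}^j_{\mathrm{pert}} \qquad (8)$$

where $GM$ is the product of the gravitational constant and the Earth's mass, and $r = \|\mathbf{r}^j\|$ is the geocentric distance of the satellite.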

Figure 3. Geodetic coordinate systems. Analysis of GPS phase and pseudorange data is carried out in a global Earth-centered Earth-fixed reference frame in (X, Y, Z) coordinates (6). An alternate representation is geodetic latitude, longitude, and height (ϕ, λ, h) with respect to a geocentric oblate ellipsoid of revolution (one octant shown). Transformation of positions in the right-handed (X, Y, Z) frame to displacements in a left-handed local frame (N, E, U) is a function of geodetic latitude and longitude (36).
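As a minimal sketch of the coordinate representations named in the figure 3 caption, the following Python functions convert geodetic latitude, longitude, and height to Earth-centered Earth-fixed (X, Y, Z) coordinates, and rotate an (X, Y, Z) displacement into local (N, E, U) components. The GRS80 ellipsoid constants and the function names are assumptions made for illustration; the text does not specify an ellipsoid or an implementation.

```python
import math

# GRS80 ellipsoid constants (an assumption; the text does not name an ellipsoid).
A_GRS80 = 6378137.0             # semi-major axis in meters
F_GRS80 = 1.0 / 298.257222101   # flattening


def geodetic_to_ecef(lat_deg, lon_deg, h):
    """Convert geodetic latitude/longitude (degrees) and ellipsoidal height (m) to ECEF (m)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    e2 = F_GRS80 * (2.0 - F_GRS80)                          # first eccentricity squared
    n = A_GRS80 / math.sqrt(1.0 - e2 * math.sin(lat) ** 2)  # prime-vertical radius of curvature
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - e2) + h) * math.sin(lat)
    return x, y, z


def ecef_to_neu(dx, dy, dz, lat_deg, lon_deg):
    """Rotate an ECEF displacement (dX, dY, dZ) into local north, east, up components."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    sl, cl = math.sin(lat), math.cos(lat)
    so, co = math.sin(lon), math.cos(lon)
    north = -sl * co * dx - sl * so * dy + cl * dz
    east = -so * dx + co * dy
    up = cl * co * dx + cl * so * dy + sl * dz
    return north, east, up
```

For a station on the equator at zero longitude, a displacement along X maps to the local up direction, along Y to east, and along Z to north, which is a quick sanity check on the rotation.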

The station position in a terrestrial reference frame and the satellite position in an inertial frame are related through a series of rotations (section 2.5.4); in most GPS software the calculations are performed in an inertial frame. The first term on the right of (8) is the spherical part of the Earth's gravitational field. The second term represents perturbing forces (accelerations) acting on the satellite, including the non-spherical part of the Earth's gravitational field, luni-solar gravitational effects, solar radiation pressure, and other perturbations specific to the GPS satellites such as satellite maneuvers. Solving the equations of motion for each GPS satellite based on observations from a global network of GPS stations, referred to as 'orbit determination', provides estimates of the satellite's state ('the satellite ephemeris') at any epoch of time (Beutler et al 1998). Access to accurate satellite ephemerides (at the 1–2 cm level in an instantaneous satellite position) is essential in geophysical applications where the goal is mm-level positioning; the broadcast ephemeris transmitted by the GPS satellites has meter-level precision, which is not sufficient for most geodetic applications. However, for regional networks (hundreds of km), 5–10 cm errors in individual satellite positions can still produce mm-level relative coordinates. Precise ephemerides for GPS (and GLONASS) satellites are available through the IGS and are sufficiently accurate for any geodetic application. Orbit determination is of intrinsic interest as a problem involving large irregular satellites that are occasionally subject to maneuvers and that require complex modeling of solar radiation pressure effects; however, for most physical applications and for simplicity we will assume that the GPS ephemerides are given and without error (not estimated in the inversion process).

We can express the GPS inverse problem starting with a general non-linear functional model $\mathbf{l} = F(\mathbf{x})$, where the physical parameters of interest $\mathbf{x}$ are estimated from GPS phase and pseudorange observations contained in vector $\mathbf{l}$. The model for an L1 or L2 phase measurement $L_i^j$ at a particular epoch of time can be expressed in distance units by the observation equation

$$L_i^j(t) = \rho_i^j(t) + c\left[dt_i(t) - dt^j(t - \tau_i^j)\right] + ZTD_i\,M(\theta_i^j) - I_i^j + \lambda\left(N_i^j + B_i - B^j\right) + m_i^j + \varepsilon_i^j \qquad (9)$$

where $\rho_i^j$ (5) denotes the geometric (in vacuum) distance between station i and satellite j, t is the time of signal reception, $\tau_i^j$ is the time delay between transmission and reception, and c is the speed of light. The second term on the right includes the receiver clock error $dt_i$ and the satellite clock error $dt^j$; for our purposes we will simply refer to it as the clock error dt. $ZTD_i$ is the tropospheric propagation delay in the zenith direction ('zenith total delay', ZTD) and $M(\theta_i^j)$ is a function that maps the ZTD to lower elevation angles $\theta_i^j$ (see section 6.1.2 for additional horizontal troposphere delay gradient parameters). $I_i^j$ is the total effect of the ionosphere along the signal's path (section 6.2.1). $N_i^j$ denotes the integer-cycle phase ambiguity, $B_i$ and $B^j$ denote the non-integer (fractional) parts of receiver- and satellite-specific clock biases, respectively, and $\lambda$ is the wavelength at either the L1 or L2 frequency. The term $m_i^j$ denotes total signal multipath effects at the transmitting and receiving antennas; for our purposes we will neglect multipath at the satellite transmission antenna and refer to this term as $m_i$. Finally, $\varepsilon_i^j$ denotes measurement error, whose assumed first- and second-order moments are described below after linearization of the observation equations.

The observation equation for a P1 or P2 pseudorange measurement is the same as for the phase (9) except that there is no ambiguity term $\lambda N_i^j$ and the sign is reversed for the dispersive ionosphere term $I_i^j$. As mentioned previously, the uncertainties in the longer wavelength pseudorange measurements are about two orders of magnitude larger than those in the phase measurements. At each observation epoch, the number of observations is four times (L1, L2, P1, P2) the number of visible satellites. The number of estimated parameters depends on the application and the parameters of interest.

Integer cycles are counted once tracking of a satellite starts, so only the initial integer-cycle phase ambiguities $N_i^j$ need to be estimated. However, in practice phase observations may include losses of receiver phase lock and cycle slips (jumps of integer cycles) due to a variety of factors including signal obstructions, severe multipath, gaps in the data due to communication failures, satellite rising and setting, severe ionospheric disturbances, etc. One outcome is losing count of the number of integer cycles in the signal propagation, which, if not taken into account, complicates phase ambiguity resolution (section 2.4) and reduces the precision of the parameters of interest. Therefore, efficient geodetic GPS algorithms need to include automatic detection and repair of cycle slips and to account for gaps in the phase data.

Neglecting second-order ionospheric effects (Kedar et al 2003), the 'ionosphere-free' linear combination of the L1 and L2 phase observables (in units of phase) is given by

$$\phi_{LC} = \frac{f_1^2}{f_1^2 - f_2^2}\,\phi_1 - \frac{f_1 f_2}{f_1^2 - f_2^2}\,\phi_2 \approx 2.546\,\phi_1 - 1.984\,\phi_2 \qquad (10)$$

where $f_1$ = 1575.42 MHz and $f_2$ = 1227.60 MHz are the L1 and L2 carrier frequencies, so that the non-integer ambiguity term for $\phi_{LC}$ is

$$N_{LC} = 2.546\,N_1 - 1.984\,N_2 \qquad (11)$$

The variance in the ionosphere-free combination is by error propagation

$$\sigma_{LC}^2 = 2.546^2\,\sigma_1^2 + 1.984^2\,\sigma_2^2 \approx 10.4\,\sigma^2 \qquad (12)$$

assuming that the L1 and L2 variances, $\sigma_1^2$ and $\sigma_2^2$, are of equal weight ($= \sigma^2$) and uncorrelated. In most cases (except for very short baselines in network positioning described below), phase observations $\phi_1$ and $\phi_2$ are combined to form $\phi_{LC}$ since the increase in variance is negligible compared to the differential ionospheric signal delay.
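The coefficients of the ionosphere-free combination and its noise amplification follow directly from the two carrier frequencies. The sketch below computes them and checks, on made-up numbers, that a first-order ionospheric delay (proportional to 1/f²) cancels in the combination. The function names and the simplified phase model (geometry plus ionosphere only, no ambiguities or noise) are assumptions for illustration.

```python
C = 299_792_458.0   # speed of light (m/s)
F1 = 1575.42e6      # L1 carrier frequency (Hz)
F2 = 1227.60e6      # L2 carrier frequency (Hz)


def lc_coefficients(f1=F1, f2=F2):
    """Return the multipliers of phi1 and phi2 (phase in cycles) in the ionosphere-free combination."""
    denom = f1 ** 2 - f2 ** 2
    return f1 ** 2 / denom, f1 * f2 / denom


def lc_phase(phi1, phi2):
    """Ionosphere-free phase, expressed in L1 cycles."""
    a, b = lc_coefficients()
    return a * phi1 - b * phi2


def lc_variance_factor():
    """Variance amplification for equal, uncorrelated L1 and L2 phase variances."""
    a, b = lc_coefficients()
    return a ** 2 + b ** 2


# Synthetic example: 21 000 km range and a 5 m ionospheric phase advance on L1.
rho = 21_000_000.0
iono_l1 = 5.0
iono_l2 = iono_l1 * (F1 / F2) ** 2          # first-order delay scales as 1/f^2
phi1 = (rho - iono_l1) / (C / F1)           # cycles
phi2 = (rho - iono_l2) / (C / F2)           # cycles
residual_cycles = lc_phase(phi1, phi2) - rho / (C / F1)
```

With equal L1 and L2 variances the combination amplifies the variance by a factor of about 10.4, which is why the uncombined L1 and L2 observations can be preferable on very short baselines.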

Depending on the application, 3D positions (6), zenith troposphere delays $ZTD_i$, ionospheric delays $I_i^j$, and multipath effects $m_i$ (9) may be parameters of specific physical interest ('signals'). For example, the ionospheric parameters $I_i^j$ may be eliminated to first order through the linear combination of phase measurements (10), but are of interest in tsunami modeling based on gravity wave and acoustic wave disruptions of the ionosphere (section 6.2). Similarly, the troposphere parameter is of interest in short-term weather forecasting (section 6.1.3) and climate change research (section 7.5). Thermal receiver noise and multipath effects are considered to be random 'noise' in the context of precise GPS positioning, although receiver multipath $m_i$ can also be exploited as a 'signal' for environmental applications (section 8.5). The ambiguity and bias term $\lambda(N_i^j + B_i - B^j)$ and the clock error term are considered 'nuisance' parameters.

The model equations $\mathbf{l} = F(\mathbf{x})$ are linearized through a Taylor series expansion about a priori parameter values $\mathbf{x}_0$

$$\mathbf{l} = F(\mathbf{x}_0) + \left.\frac{\partial F}{\partial \mathbf{x}}\right|_{\mathbf{x}_0}(\mathbf{x} - \mathbf{x}_0) + \ldots \qquad (13)$$

that can be expressed as

$$\delta\mathbf{l} = \mathbf{A}\,\delta\mathbf{x} \qquad (14)$$

with $\delta\mathbf{l} = \mathbf{l} - F(\mathbf{x}_0)$, $\delta\mathbf{x} = \mathbf{x} - \mathbf{x}_0$, and $\mathbf{A} = \partial F/\partial\mathbf{x}\,|_{\mathbf{x}_0}$. Since phase and pseudorange observations are subjected to some error $\boldsymbol{\varepsilon}$, the observation equations with assumed first-order (mean) and second-order moments (variance) can be expressed as

$$\delta\mathbf{l} = \mathbf{A}\,\delta\mathbf{x} + \boldsymbol{\varepsilon};\quad E\{\boldsymbol{\varepsilon}\} = \mathbf{0};\quad D\{\boldsymbol{\varepsilon}\} = \mathbf{C}_{\varepsilon} = \sigma_0^2\,\mathbf{P}^{-1} \qquad (15)$$

A is called the design matrix of partial derivatives, E denotes statistical expectation, D denotes statistical dispersion, $\mathbf{C}_{\varepsilon}$ is a covariance matrix of observation errors, P is the weight matrix, and $\sigma_0^2$ is an a priori variance factor. If we assume that the observations are uncorrelated in space and time, the covariance matrix $\mathbf{C}_{\varepsilon}$ is diagonal. Linearization of the observation equation (9), here for the ionosphere-free linear combination (LC), for a particular station i and satellite j at any epoch of observation is

$$\delta L_{LC,i}^{\,j} = \frac{\partial \rho_i^j}{\partial \mathbf{r}_i}\,\delta\mathbf{r}_i + c\,\delta dt + M(\theta_i^j)\,\delta ZTD_i + \lambda_1\,\delta\!\left(N_{LC,i}^{\,j} + B_i - B^j\right) + m_{LC} + \varepsilon_{LC} \qquad (16)$$

The $\delta$ symbol denotes incremental adjustment to an estimated parameter relative to its a priori value in the linearization of observation equations (9). For example, $\partial\rho_i^j/\partial\mathbf{r}_i$ is the partial derivative for the position parameters such that

$$\frac{\partial \rho_i^j}{\partial \mathbf{r}_i} = \frac{(\mathbf{r}_i - \mathbf{r}^j)^T}{\left\|\mathbf{r}_i - \mathbf{r}^j\right\|} \qquad (17)$$

where $\mathbf{r}_i$ and $\mathbf{r}^j$ are the station and satellite positions, respectively. The subscript LC for the error term $\varepsilon_{LC}$ denotes that the error refers to the ionosphere-free observable (12). Note that multipath is dispersive (and correlated in time) (section 8.4) and $m_{LC}$ denotes the magnified effect.
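The position partial derivative used in the linearization is the unit vector pointing from the satellite to the station. A short sketch, with hypothetical function names and made-up coordinates, evaluates this row of the design matrix; a central finite difference of the geometric range is a simple way to confirm it.

```python
import math


def geometric_range(r_station, r_sat):
    """Euclidean distance between station and satellite positions (same frame, meters)."""
    return math.sqrt(sum((s - q) ** 2 for s, q in zip(r_station, r_sat)))


def range_partials(r_station, r_sat):
    """Partial derivatives of the range with respect to the station coordinates (X, Y, Z)."""
    rho = geometric_range(r_station, r_sat)
    return [(s - q) / rho for s, q in zip(r_station, r_sat)]


def finite_difference(r_station, r_sat, axis, step=1.0):
    """Central-difference check of one partial derivative; step is in meters."""
    plus, minus = list(r_station), list(r_station)
    plus[axis] += step
    minus[axis] -= step
    return (geometric_range(plus, r_sat) - geometric_range(minus, r_sat)) / (2.0 * step)


# Made-up Earth-fixed coordinates in meters (illustrative only).
station = [-2_400_000.0, -4_700_000.0, 3_500_000.0]
satellite = [15_000_000.0, -10_000_000.0, 18_000_000.0]
design_row = range_partials(station, satellite)
```

This sketch ignores the small dependence of the satellite position on the signal travel time, which the full observation model accounts for.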

Here we describe a weighted least squares solution (used several times in this review) for the inversion of (15), whereby the weighted Euclidean norm (L2-norm) of the residual vector $\hat{\boldsymbol{\varepsilon}}$ is minimized such that

$$\hat{\boldsymbol{\varepsilon}}^T\,\mathbf{P}\,\hat{\boldsymbol{\varepsilon}} = \min \qquad (18)$$

with the weighted least squares solution $\delta\hat{\mathbf{x}}$ and the estimated covariance matrix $\hat{\mathbf{C}}_{\hat{x}}$ given, respectively, by

$$\delta\hat{\mathbf{x}} = \left(\mathbf{A}^T\mathbf{P}\mathbf{A}\right)^{-1}\mathbf{A}^T\mathbf{P}\,\delta\mathbf{l};\quad \hat{\mathbf{C}}_{\hat{x}} = \hat{\sigma}_0^2\left(\mathbf{A}^T\mathbf{P}\mathbf{A}\right)^{-1} \qquad (19)$$

where $\delta\mathbf{l}$ and $\delta\mathbf{x}$ are the linearized observation and parameter vectors, A is the design matrix, and P is the weight matrix. The hat denotes an estimated quantity. The vector $\hat{\boldsymbol{\varepsilon}} = \delta\mathbf{l} - \mathbf{A}\,\delta\hat{\mathbf{x}}$ contains the post-fit residuals. The a posteriori variance factor $\hat{\sigma}_0^2 = \hat{\boldsymbol{\varepsilon}}^T\mathbf{P}\,\hat{\boldsymbol{\varepsilon}}/(n - u)$ is often called the 'a posteriori variance of unit weight', 'chi-squared per degrees of freedom', or 'goodness of fit', where the degrees of freedom is $n - u$; $n$ is the number of observations and $u$ the number of parameters. In most cases, there is some a priori value for a subset of the parameters in vector $\mathbf{x}$ (e.g. the position), which can be introduced into the above model with its diagonal covariance matrix $\mathbf{C}_{x_0}$ such that, taking the a priori values as the linearization point and setting the entries of $\mathbf{C}_{x_0}^{-1}$ to zero for unconstrained parameters,

$$\delta\hat{\mathbf{x}} = \left(\mathbf{A}^T\mathbf{P}\mathbf{A} + \sigma_0^2\,\mathbf{C}_{x_0}^{-1}\right)^{-1}\mathbf{A}^T\mathbf{P}\,\delta\mathbf{l}$$
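The weighted least squares inversion described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used by any of the GPS software packages: the function name and the toy straight-line model are hypothetical, and the normal equations are solved directly rather than through a numerically more robust factorization.

```python
import numpy as np


def weighted_least_squares(A, l, P):
    """Solve the linear model l = A x + e with weight matrix P.

    Returns the estimate, its covariance scaled by the a posteriori
    variance factor, the post-fit residuals, and the variance factor.
    """
    A = np.asarray(A, dtype=float)
    l = np.asarray(l, dtype=float)
    P = np.asarray(P, dtype=float)
    normal = A.T @ P @ A                          # normal matrix
    x_hat = np.linalg.solve(normal, A.T @ P @ l)  # least squares estimate
    residuals = l - A @ x_hat                     # post-fit residuals
    dof = A.shape[0] - A.shape[1]                 # observations minus parameters
    s0_sq = float(residuals @ P @ residuals) / dof
    cov_x = s0_sq * np.linalg.inv(normal)
    return x_hat, cov_x, residuals, s0_sq


# Toy example: fit an intercept and a slope to four equally weighted observations.
A = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
l = [2.1, 4.9, 8.1, 10.9]
x_hat, cov_x, residuals, s0_sq = weighted_least_squares(A, l, np.eye(4))
```

In a real GPS inversion the columns of A would hold the partial derivatives with respect to position, clock, troposphere, and ambiguity parameters, and P would reflect the phase and pseudorange uncertainties.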

2.4. Positioning approaches

There are two basic approaches to GPS analysis. The earliest one is network ('relative') positioning (Dong and Bock 1989, Blewitt 1989), developed to position stations with respect to at least one fixed reference station within a local or regional network. Network positioning is also used to estimate satellite orbits and Earth orientation parameters (EOP) from a global network of reference stations. With the significant increase in the number of networks and the number of stations within networks, relative positioning became computationally cumbersome. Precise point positioning (PPP) (Zumberge et al 1997) was introduced as a way to individually and very efficiently estimate local and regional station positions directly with respect to a global reference network, the same one used to estimate the satellite orbits. The longest lived and most widely used software packages for precise GPS positioning and orbit determination covering both approaches include Bernese (at the Astronomical Institute, University of Berne: Beutler et al 2001, Hugentobler et al 2005), GAMIT ('GPS at MIT', Herring et al 2008), and GIPSY-OASIS ('GNSS-Inferred Positioning System and Orbit Analysis Simulation Program' at NASA's Jet Propulsion Laboratory: Zumberge et al 1997, Bertiger et al 2010). Other packages include NAPEOS ('NAvigation Package for Earth Orbiting Satellites' at the European Space Agency: Springer 2009), PAGES ('Program for the Adjustment of GPS Ephemerides' at the US National Geodetic Survey: Eckl et al 2001), PANDA ('Position and Navigation Data Analyst' at Wuhan University: Liu and Ge 2003), and EPOS ('Earth Parameter and Orbit Software' at GeoForschungsZentrum, Potsdam: Angermann et al 1997).
GPS analysis by these and other software packages has evolved with improved physical models and processing efficiencies, and has benefited significantly from the expansion of the global network under the aegis of the IGS (section 2.2), which provides increasingly accurate GNSS orbit estimates (currently GPS and GLONASS) and more stable reference frames.

The simplest network for network positioning consists of two stations, a 'baseline vector' in geodetic parlance, one with fixed coordinates and one with an unknown position. In practice, networks at local to regional scales may consist of hundreds of stations, e.g. a network designed to span a tectonic plate boundary. In this method, the observation equations (9) for the two stations that determine the baseline are differenced so that the unknown parameters are the baseline vector components, not the absolute positions. In precise point positioning (PPP), an unknown station is directly positioned with respect to the same terrestrial frame, but now realized through known precise satellite ephemerides and satellite clock parameters estimated from the same global tracking network that defines the reference frame (Kouba and Heroux 2001). Network positioning and point positioning approaches can be considered equivalent in terms of the underlying physics. In both methods, positions are estimated with respect to the International Terrestrial Reference Frame, the latest version being ITRF2008 (section 2.5.3) (Altamimi et al 2011). The main advantage of PPP is the speed of computations in the inversion process (18) and (19). The computational cost of network positioning grows approximately as the cube of the number of stations, and becomes unwieldy for large networks. One approach to improve its efficiency is to divide the larger network into subnetworks with overlapping stations and then combine the subnetworks through a least squares network adjustment (Zhang 1996) or even to analyze the larger network baseline by baseline, in which case PPP and network positioning are nearly equivalent in terms of computational time. To improve accuracy it is useful to resolve integer-cycle phase ambiguities $N_i^j$ (9) to their correct integer values (Counselman and Gourevitch 1981, Blewitt 1989, Dong and Bock 1989, Teunissen 1998 and references therein). The original PPP formulation did not include ambiguity resolution, whereas ambiguity resolution was always part of the network positioning approach. In section 2.4.2, we present a way to resolve phase ambiguities within PPP analysis at regional scales (up to about 4000 km).

2.4.1. Network positioning.

In network positioning 'common-mode' errors are cancelled by differencing the phases collected between stations so that the satellite clock bias terms ($dt^j$ and $B^j$) drop out since they are common to both stations, and by differencing between satellites so that the station clock bias terms ($dt_i$ and $B_i$) drop out since they are common to both satellites, thereby revealing the integer-cycle phase ambiguities $N_i^j$. This 'double differencing' is performed at each observation epoch for all stations in a network of receiving stations and all visible satellites. Estimating the satellite and receiver clock biases on an epoch by epoch basis is, assuming that the clock biases are uncorrelated in time, equivalent to double differencing (Schaffrin and Grafarend 1986). Likewise, 'common-mode' errors due to tropospheric and ionospheric refraction are reduced. At the very shortest distances (up to several kilometers), the satellite/receiver paths sample the same portion of the atmosphere and, thus, atmospheric effects are very similar and often assumed identical. This assumption degrades as the distance between stations increases. For the troposphere (section 6.1), the correlation length is on the order of tens of kilometers; for the ionosphere (section 6.2) the effect is approximately proportional to the distance. It should be noted that for short distances (<2–3 km) the increase in noise level when forming the ionosphere-free combination (10) is greater than the reduction in the ionospheric effect $I_i^j$, and so the L1 and L2 observations can be directly inverted. In this case, rapid ambiguity resolution is robust even with a single epoch of L1 observations (e.g. instantaneous positioning, Bock et al 2000). This type of solution is useful, for example, for arrays of closely spaced GPS stations that cross a geologic fault, to quantify the degree of fault coupling (creep, section 4.5). However, these are special cases and for most applications ionosphere-free observables are used in the inversion (16) for the parameters of interest.
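The cancellation of clock terms by double differencing can be illustrated with synthetic numbers. The sketch below uses a deliberately simplified phase model (geometry, clock errors, and integer ambiguities only, with no atmosphere, multipath, or noise); all values, station labels, and function names are made up for the example.

```python
C = 299_792_458.0            # speed of light (m/s)
LAMBDA_1 = C / 1575.42e6     # L1 carrier wavelength (m)


def phase_in_meters(rho, dt_receiver, dt_satellite, n_cycles):
    """Simplified phase model: geometry + clock terms + integer ambiguity."""
    return rho + C * (dt_receiver - dt_satellite) + LAMBDA_1 * n_cycles


def double_difference(values, sta_a, sta_b, sat_1, sat_2):
    """Difference between stations, then between satellites; keys are (station, satellite)."""
    single_1 = values[(sta_a, sat_1)] - values[(sta_b, sat_1)]  # satellite 1 clock cancels
    single_2 = values[(sta_a, sat_2)] - values[(sta_b, sat_2)]  # satellite 2 clock cancels
    return single_1 - single_2                                  # receiver clocks cancel


# Synthetic ranges (m), clock errors (s), and ambiguities (cycles).
ranges = {("A", 1): 20_200_123.4, ("B", 1): 20_200_987.6,
          ("A", 2): 22_500_456.7, ("B", 2): 22_499_321.0}
receiver_clock = {"A": 1.2e-4, "B": -3.4e-5}
satellite_clock = {1: 5.6e-6, 2: -7.8e-6}
ambiguities = {("A", 1): 12, ("B", 1): -5, ("A", 2): 40, ("B", 2): 7}

phases = {key: phase_in_meters(ranges[key], receiver_clock[key[0]],
                               satellite_clock[key[1]], ambiguities[key])
          for key in ranges}

# After removing the double-differenced geometry, only the integer ambiguity remains.
dd_ambiguity = (double_difference(phases, "A", "B", 1, 2)
                - double_difference(ranges, "A", "B", 1, 2)) / LAMBDA_1
```

Here the clock errors amount to tens of kilometers in range, yet the double difference recovers the integer combination (12 + 5) - (40 - 7) = -16 cycles to within floating-point precision.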

Although it is possible to eliminate first-order dispersive ionospheric effects $I_i^j$ (9) by (10), in fact it is the ionosphere that is the primary limitation to successful ambiguity resolution. This is because the phase ambiguity term resulting from the ionosphere-free linear combination has a non-integer value (10). One solution is to use the pseudorange observables to extract the integer-cycle phase ambiguities $N_1$ and $N_2$ by first estimating $N_1 - N_2$, the so-called 'wide-lane' or 'Melbourne–Wübbena' combination with an effective wavelength of 86.2 cm, compared to the narrower wavelength L1 (~19 cm) and L2 (~24 cm) phase observations (Hatch 1991, Melbourne 1985, Wübbena 1985), where

$$N_1 - N_2 = \phi_1 - \phi_2 - \frac{f_1 - f_2}{f_1 + f_2}\left(\frac{P_1}{\lambda_1} + \frac{P_2}{\lambda_2}\right) \qquad (22)$$

with $\phi_1$ and $\phi_2$ the L1 and L2 phases in cycles, $P_1$ and $P_2$ the pseudoranges in distance units, and $f_1$, $f_2$ and $\lambda_1$, $\lambda_2$ the carrier frequencies and wavelengths.
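The wide-lane and narrow-lane wavelengths quoted in the text, and the way the Melbourne–Wübbena combination isolates the wide-lane ambiguity, can be checked numerically. The following sketch assumes a noise-free observation model with geometry, a first-order ionospheric term, and integer ambiguities only; the function name and the synthetic values are hypothetical.

```python
C = 299_792_458.0                     # speed of light (m/s)
F1, F2 = 1575.42e6, 1227.60e6         # L1 and L2 carrier frequencies (Hz)
LAMBDA_1, LAMBDA_2 = C / F1, C / F2   # carrier wavelengths (m)
LAMBDA_WL = C / (F1 - F2)             # wide-lane wavelength (m)
LAMBDA_NL = C / (F1 + F2)             # narrow-lane wavelength (m)


def melbourne_wubbena(phi1, phi2, p1, p2):
    """Wide-lane ambiguity N1 - N2 from phases (cycles) and pseudoranges (meters)."""
    return phi1 - phi2 - (F1 - F2) / (F1 + F2) * (p1 / LAMBDA_1 + p2 / LAMBDA_2)


# Synthetic, noise-free observations (made-up values).
rho = 21_000_000.0                    # geometric range (m)
iono_1 = 3.0                          # first-order ionospheric term on L1 (m)
iono_2 = iono_1 * (F1 / F2) ** 2      # scales as 1/f^2
n1, n2 = 10, 3                        # integer ambiguities (cycles)

phi1 = (rho - iono_1) / LAMBDA_1 + n1   # phase is advanced by the ionosphere
phi2 = (rho - iono_2) / LAMBDA_2 + n2
p1 = rho + iono_1                       # pseudorange is delayed
p2 = rho + iono_2

n_wl = melbourne_wubbena(phi1, phi2, p1, p2)
```

Both the geometry and the first-order ionosphere cancel in this combination, so with real data its scatter is governed mainly by pseudorange noise and multipath relative to the wide-lane wavelength of about 86 cm.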

Once the $N_1 - N_2$ ambiguities are resolved then one can try to resolve the 'narrow lane' $N_1$ ambiguities (now with an effective wavelength of 10.7 cm). Usually, the wide-lane ambiguity can be resolved, even for networks of global extent, by inverting multiple data epochs at static stations, as long as the pseudorange errors are a fraction of the wide-lane wavelength, as is the case for modern GPS receivers. This is more complicated for real-time (single epoch) observations and dynamic platforms. The main sources of error are the magnitude of the dispersive ionospheric refraction and receiver multipath effects, which are magnified in the ionosphere-free combination. Another approach to resolving the wide-lane ambiguities, appropriate to network positioning, is to apply a realistic a priori stochastic constraint (a 'pseudo' observation) on the ionosphere term $I_i^j$ as a function of the inter-station distance (Schaffrin and Bock 1988).

Intrinsic to ambiguity resolution is examining the estimated real-valued doubly-differenced integer-cycle ambiguities and picking out the corresponding correct integer values through some deterministic or stochastic criteria. The simplest approach is to round the real-valued ambiguity to its closest integer value if the uncertainty in the real value is within a certain tolerance (e.g. 0.1 of a cycle). Another approach is to attempt to resolve ambiguities in sequence of increasing baseline length, under the assumption that shorter baselines are subject to fewer errors due to orbital error and atmospheric refraction (Abbot and Counselman 1989, Dong and Bock 1989). However, this approach was suggested when orbital error dominated the GPS analysis error budget. In current practice with the availability of IGS orbits, local conditions (multipath, atmospheric refraction) are dominant up to ~1000 km, at which point shorter periods of mutual visibility become the issue. An efficient approach is the 'least-squares ambiguity decorrelation adjustment' (LAMBDA) method (Teunissen 1995). It first decorrelates the doubly-differenced phase ambiguities based on an integer approximation of the conditional least-squares transformation that reduces the ambiguity search space bounding all possible integer candidates. This is a multi-dimensional problem according to the number of ambiguities; in three dimensions the transformation can be viewed as changing a very elongated ellipsoidal search space into one that is more spheroidal. However, decorrelation is never complete. Consider that $\mathbf{Z}$ is the ambiguity transformation and operates on the portion of the design matrix A (13) that corresponds to the vector a of real-valued ambiguities, and that the remainder of A contains all the other parameters (e.g. station positions). Then

$$\mathbf{z} = \mathbf{Z}^T\mathbf{a};\quad \hat{\mathbf{z}} = \mathbf{Z}^T\hat{\mathbf{a}};\quad \mathbf{C}_{\hat{z}} = \mathbf{Z}^T\mathbf{C}_{\hat{a}}\,\mathbf{Z}$$

and the equivalent minimization problem becomes

$$\min_{\mathbf{z}}\;(\hat{\mathbf{z}} - \mathbf{z})^T\,\mathbf{C}_{\hat{z}}^{-1}\,(\hat{\mathbf{z}} - \mathbf{z}),\quad \mathbf{z}\ \text{integer}$$

The search space is then

$$\sum_{k}\frac{\left(\hat{z}_{k|K} - z_k\right)^2}{\sigma^2_{\hat{z}_{k|K}}} \le \chi^2$$

where $\chi^2$ is a user-defined constant and $\sigma^2_{\hat{z}_{k|K}}$ is the variance, where the vertical line denotes 'given' (here, given the previously resolved ambiguities $K$). The ambiguities are then resolved according to the sequential bounds of the transformed ambiguity space, starting with the transformed ambiguities of lowest uncertainty. Once the ambiguities are resolved to integers, they can be used to estimate the remaining parameters of interest (Teunissen 1998). The above transformed ambiguity approach can be applied to the L1, L2, or wide-lane ambiguities (22), and the process could be supplemented by taking into account the properly weighted pseudorange measurements. In the most general case, the search space, whose dimension is the number of, say, wide-lane ambiguities, is determined by the user-defined threshold $\chi^2$, the determinant of the portion of the normal matrix $\mathbf{A}^T\mathbf{P}\mathbf{A}$ (19) containing the phase ambiguities, the carrier-phase and pseudorange uncertainties $\sigma_\phi$ and $\sigma_P$, respectively, the number of epochs n, and the L1 and L2 carrier-phase wavelengths $\lambda_1$ and $\lambda_2$. This shows that for static GPS solutions, the integer ambiguity resolution should improve with the number of observation epochs and the wavelength, which is longer (~86 cm) for the wide-lane ambiguities (Geng and Bock 2013).
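The simplest criterion mentioned at the start of this discussion, rounding a real-valued ambiguity to the nearest integer when its uncertainty is within a tolerance, can be sketched as follows. The function name and the convention of returning `None` for an unresolved ambiguity are assumptions for illustration; this is not the LAMBDA method, which additionally decorrelates the ambiguities and searches over integer candidates.

```python
import math


def round_ambiguity(estimate, sigma, tolerance=0.1):
    """Fix a real-valued ambiguity (cycles) to the nearest integer if its
    uncertainty (cycles) is within the tolerance; otherwise leave it unresolved."""
    if sigma > tolerance:
        return None                       # too uncertain to fix
    return math.floor(estimate + 0.5)     # round half up to the nearest integer


# Illustrative values: (estimate, uncertainty) pairs in cycles.
candidates = [(3.04, 0.05), (-1.96, 0.05), (7.48, 0.30)]
fixed = [round_ambiguity(value, sigma) for value, sigma in candidates]
```

Practical implementations usually also test how close the estimate lies to the nearest integer before fixing it; that extra check is omitted here to match the criterion as stated in the text.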

2.4.2. Precise point positioning.

Precise point positioning (PPP) (Zumberge et al 1997) relies on pre-computed true-of-observation-time satellite positions (5) and satellite clock parameters (9) estimated through a network analysis of globally distributed reference stations, as is done and distributed by the IGS and its analysis centers (Kouba and Heroux 2001). These are the same IGS stations whose ITRF coordinates and velocities are used to realize the international terrestrial reference system (section 2.5.1), and so their 'true-of-date coordinates' at a particular epoch of time are known with mm-level accuracy. As its name implies, PPP calculates 'absolute' positions at any location on the globe with respect to the ITRF (section 2.5.3), which is accessible through the given satellite orbit and clock parameters. The parameters estimated in the PPP inversion are then the station's position, the zenith troposphere delays at that location (i = 1), the receiver clock parameter, and the non-integer bias term for each satellite j (9). The ionosphere parameters are eliminated to first order by a linear combination of the L1 and L2 phase observations (10). It is important that the physical models (e.g. Earth tides, antenna phase center corrections) used in the PPP inversion be the same as those used in the global network analysis. This is accomplished through standards adopted by the IGS.
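The first-order elimination of the ionosphere by a dual-frequency combination can be illustrated numerically. The sketch below is a minimal example, assuming phase observations already expressed in meters, no ambiguities or other error terms, and a first-order ionospheric phase advance that scales as 1/f²; the function names and numerical values are hypothetical.

```python
# Ionosphere-free combination of two carrier-phase observations (in meters).
# Assumptions: nominal GPS frequencies, first-order ionosphere only,
# ambiguities and all other error sources ignored.
F_L1 = 1575.42e6  # Hz
F_L2 = 1227.60e6  # Hz

def simulate_phase(range_m, iono_l1_m, freq_hz):
    """Geometric range minus the ionospheric phase advance, which scales as 1/f^2."""
    return range_m - iono_l1_m * (F_L1 / freq_hz) ** 2

def iono_free(obs_l1_m, obs_l2_m):
    """Weighted difference that cancels any term proportional to 1/f^2."""
    denom = F_L1 ** 2 - F_L2 ** 2
    return (F_L1 ** 2 * obs_l1_m - F_L2 ** 2 * obs_l2_m) / denom

true_range = 21_500_000.0   # hypothetical satellite-receiver range, m
iono_l1 = 5.0               # hypothetical ionospheric delay on L1, m

l1 = simulate_phase(true_range, iono_l1, F_L1)
l2 = simulate_phase(true_range, iono_l1, F_L2)
lc = iono_free(l1, l2)

print(f"L1 error: {l1 - true_range:+.3f} m")
print(f"L2 error: {l2 - true_range:+.3f} m")
print(f"LC error: {lc - true_range:+.3e} m")
```

The combination removes the simulated ionospheric term to within floating-point precision, at the cost (not shown here) of amplified observation noise.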

In order to extend the PPP method to allow for ambiguity resolution (AR) (PPP-AR, Ge et al 2008, Laurichesse et al 2009, Geng et al 2012) within a limited area of interest (up to a scale of about 4000 km), another network solution is performed. This solution estimates the weighted averages of all satellite bias terms (9), called fractional cycle biases (FCBs), so that these parameters can also be fixed, prior to the site-by-site PPP-AR position calculations within the area of interest. The reference stations for this network solution are chosen to be outside the region of interest so that, for example, coseismic motions will not affect FCB parameter estimation, an approach that is especially suited for real-time earthquake monitoring (section 5.2). After resolving the wide-lane ambiguities (22) as part of the network solution, the linearized narrow-lane ionosphere-free carrier phase observation equations for a particular station i and satellite j at each epoch of observation are

where the ambiguity term now denotes the narrow-lane ambiguity (Geng et al 2013). The increment symbol denotes an incremental adjustment to an estimated parameter relative to its a priori value in the linearization of the observation equations (9), and the coefficient of the position adjustment is the partial derivative for the position parameter (17). The subscript LC on the error term denotes that the error refers to the ionosphere-free linear combination. In the inversion of (27) the estimated parameters are real-valued. Again, we have assumed that the precise satellite orbits and satellite clock parameters are available from the IGS, or another external source, and held fixed. The station coordinates are also held fixed in the network solution to their true-of-date values with respect to the ITRF, derived from time series analyses of years of daily positions (section 3.2); alternatively, the stations themselves may be IGS reference stations so their true-of-date coordinates are known by convention (e.g. ITRF2008). The receiver bias is eliminated by differencing the observation equations (27) between satellites. In the inversion, the fractional part of the satellite phase bias is estimated for each station in the reference network, as well as the zenith troposphere delays, the clock parameter, and the narrow-lane ambiguities. A mean value for the satellite phase bias for each satellite j, called a fractional cycle bias (FCB), is then estimated over the r reference stations by

Increasing the number of stations in the reference network will improve the reliability and accuracy of the FCB estimates.
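The averaging step can be sketched as follows. This is a simplified illustration, not the estimator used in the cited work: it assumes an unweighted mean, biases expressed in cycles, and a fractional part wrapped to [-0.5, 0.5); the function names are hypothetical. Because fractional parts wrap around, the sketch aligns every station's value to the first station before averaging.

```python
import math

def frac(x):
    """Fractional part of x in cycles, wrapped to [-0.5, 0.5)."""
    return x - math.floor(x + 0.5)

def mean_fcb(station_biases_cycles):
    """Unweighted mean fractional cycle bias for one satellite.

    Each station's fractional part is first expressed relative to the
    first station, so values near +0.5 and -0.5 do not cancel each other.
    """
    ref = frac(station_biases_cycles[0])
    offsets = [frac(b - ref) for b in station_biases_cycles]
    return frac(ref + sum(offsets) / len(offsets))

# Hypothetical real-valued bias estimates from r = 4 reference stations.
biases = [3.48, -1.53, 7.51, 0.49]
fcb = mean_fcb(biases)
naive = sum(frac(b) for b in biases) / len(biases)
print(f"wrap-aware mean FCB: {fcb:.4f} cycles, naive mean: {naive:.4f} cycles")
```

With more stations the scatter of the per-station fractional parts averages down, which is consistent with the statement that a larger reference network improves the FCB estimates.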

We can now individually perform PPP-AR inversions for each unknown station position in the area of focus using, importantly, the same model (27) and inputs as the prior network inversion. As before, we hold fixed the precise satellite orbits and satellite clock parameters obtained from the IGS, and the receiver bias is eliminated by differencing between satellites. The satellite bias for each satellite is assigned its FCB value. As a result, the integer-valued phase ambiguities (27) have been decoupled from the satellite and receiver phase biases. Ambiguity resolution for a single station can then be attempted. Upon successful fixing (or partial fixing) of the ambiguities to integers, e.g. using the LAMBDA method, the precise point positioning with ambiguity resolution (PPP-AR) solution for an individual station (client) is achieved, and the estimated coordinates are 'absolute' with respect to the ITRF (section 2.5.3). The point positioning method is particularly advantageous, compared with network positioning, for the case of estimating coseismic motions (section 5.2). Regional network positions may be contaminated when all stations are displaced during an earthquake. In the case of great earthquakes, since the zone of coseismic deformation may extend to distances of thousands of kilometers from the earthquake's epicenter, the solution that provides the FCB corrections may be biased. One could then revert to PPP without ambiguity resolution and take advantage of any collocated strong motion accelerometer data (next section) to improve the precision of the coseismic station displacements.
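The effect of applying the FCBs at the client can be illustrated with a deliberately simple fixing rule. The sketch below assumes the receiver bias has already been removed, uses plain rounding with an acceptance tolerance as a stand-in for a proper integer search such as LAMBDA, and leaves ambiguities that fail the test unfixed (partial fixing). The satellite identifiers, values, and tolerance are hypothetical.

```python
import math

def fix_ambiguities(float_ambs, fcbs, tol=0.15):
    """Subtract each satellite's FCB and round to the nearest integer.

    Returns {satellite: integer} for ambiguities within `tol` cycles of an
    integer after correction, and {satellite: None} otherwise.
    Simple rounding is used here only for illustration.
    """
    fixed = {}
    for sat, amb in float_ambs.items():
        corrected = amb - fcbs[sat]
        nearest = math.floor(corrected + 0.5)
        fixed[sat] = nearest if abs(corrected - nearest) <= tol else None
    return fixed

# Hypothetical real-valued narrow-lane ambiguity estimates (cycles) and FCBs.
float_ambs = {"G01": 12.31, "G07": -4.78, "G12": 8.02}
fcbs = {"G01": 0.29, "G07": 0.24, "G12": -0.40}

result = fix_ambiguities(float_ambs, fcbs)
print(result)
```

In this example two ambiguities are fixed and one is left real-valued, which mimics a partially fixed solution.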

Up to this point we have assumed that the inversion of GPS data (15) and (25) for parameters of interest is performed using a linearized weighted least squares algorithm (19). In practice, the inversion is often extended to a weighted least-squares algorithm with a priori constraints, in which realistic uncertainties are assigned to a priori estimates of particular parameters (15), e.g. station positions and satellite orbital elements may be tightly constrained in GPS meteorology (section 6.1.3). Kalman filters or similar formulations may be used to take into account temporal correlations in the estimated parameters. In the above PPP-AR example, troposphere delays and receiver clock parameters may be parameterized by piecewise continuous functions with assumed stochastic processes, e.g. first order Gauss–Markov (46), to account for temporal correlations in the phase and pseudorange observables.
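As an illustration of such a stochastic parameterization, the sketch below propagates the mean and variance of a parameter modeled as a first-order Gauss–Markov process between epochs. It uses the standard discrete-time form with an assumed correlation time and steady-state standard deviation; these values and the function names are hypothetical and are not taken from equation (46).

```python
import math

def gauss_markov_step(tau_s, dt_s, sigma):
    """Discrete first-order Gauss-Markov transition factor and process noise.

    tau_s: correlation time (s), dt_s: epoch spacing (s),
    sigma: steady-state standard deviation of the process.
    """
    phi = math.exp(-dt_s / tau_s)
    q = sigma ** 2 * (1.0 - phi ** 2)
    return phi, q

def propagate(mean, var, tau_s, dt_s, sigma):
    """Time update of the mean and variance over one epoch."""
    phi, q = gauss_markov_step(tau_s, dt_s, sigma)
    return phi * mean, phi ** 2 * var + q

# Hypothetical zenith-delay-like parameter: 1 h correlation time, 30 s epochs.
TAU_S, DT_S, SIGMA = 3600.0, 30.0, 0.02
mean, var = 0.05, 0.0
for _ in range(2000):
    mean, var = propagate(mean, var, TAU_S, DT_S, SIGMA)

print(f"variance after 2000 epochs: {var:.3e} (steady state {SIGMA ** 2:.3e})")
```

Without observations the variance relaxes toward the steady-state value and the mean decays toward zero; a long correlation time approaches a random walk, and a short one approaches white noise.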

2.4.3. Addition of accelerometer observations.

Precise GPS measurements are often supplemented with data from other sensors, e.g. accelerometers for monitoring of seismic displacements and velocities (section 5.2.3), or inertial navigation units for precise trajectories for airborne lidar measurements of active fault zones (Nissen et al 2012, Brooks et al 2013). Here we present a supplement to the GPS observation equations (27) for the addition of accelerometer observations, suitable for the inversion of positions and velocities with a Kalman filter discussed in detail in section 5.2.3. We modify the linearized observation equations (27) to explicitly introduce notation for time epoch k

The notation i here is somewhat redundant since there is only one station to position; it is used to denote station dependence. In addition to the positions, the parameter set includes seismic velocities. The linear accelerometer observation equation (31), one for each accelerometer channel (local north, east, and up), includes the observed accelerometer measurements, the true accelerations, the acceleration biases, and the accelerometer random error. The biases can be estimated as a stochastic process to accommodate slowly time-varying changes. Hence, no pre-event mean needs to be eliminated from the acceleration data before starting the Kalman filter. Accelerometer errors due to instrument rotation and tilt ('baseline' errors in seismology parlance) that are manifested in doubly-integrating accelerations to displacements (section 5.2.2) are minimized since these errors are absorbed by the estimated bias terms. The state transition matrix for the Kalman filter takes the form of

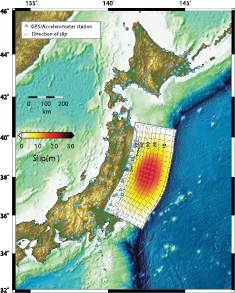

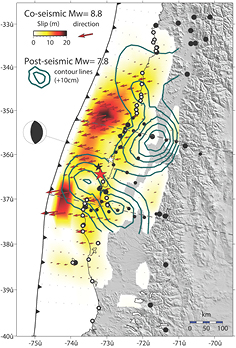

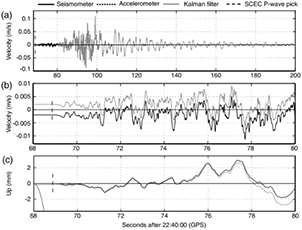

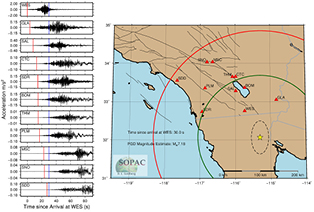

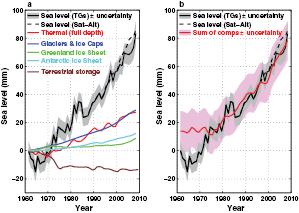

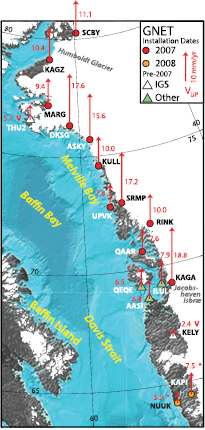

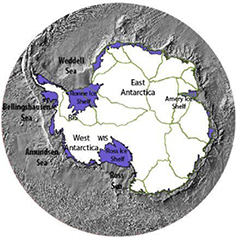

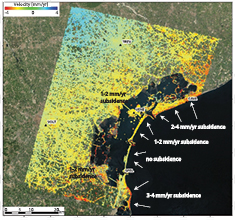

where the time increment is the sampling interval of the accelerometer data. The accelerometer data are applied as tight constraints on the position variation between observation epochs when, as is usually the case, the sampling rate is 100–250 Hz for accelerometers but only 1–10 Hz for GPS. This approach is suitable for a 'tightly-coupled' Kalman filter inversion since it combines the accelerometer and GPS data at the observation level (Geng et al 2013, Yi et al 2013). It is distinguished from a 'loosely-coupled' Kalman filter, where the accelerometer measurements are included after a station displacement estimate has been achieved, either through PPP or network positioning (Bock et al 2011). The tightly-coupled approach is expected to improve cycle-slip repair (section 2.3.3) for GPS carrier-phase data and rapid ambiguity resolution after GPS outages (Grejner-Brzezinska et al 1998, Lee et al 2005, Geng et al 2013a).
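The way high-rate accelerations constrain the position between sparse GPS epochs can be sketched with a much reduced filter. The example below is a one-component illustration under strong assumptions: a two-element state (displacement, velocity), a constant-acceleration time update driven by the accelerometer sample, a GPS displacement used as the measurement, and no bias, troposphere, clock, or ambiguity states. It is closer in spirit to the loosely-coupled case and does not reproduce the matrices of the tightly-coupled filter described above; all names and noise values are hypothetical.

```python
def predict(state, cov, accel, tau, accel_var):
    """Time update over one accelerometer sample of length tau (s)."""
    d, v = state
    (p11, p12), (p21, p22) = cov
    d_new = d + tau * v + 0.5 * tau ** 2 * accel
    v_new = v + tau * accel
    # F P F^T with F = [[1, tau], [0, 1]]
    f11 = p11 + tau * (p12 + p21) + tau ** 2 * p22
    f12 = p12 + tau * p22
    f21 = p21 + tau * p22
    f22 = p22
    # Process noise from accelerometer noise mapped through G = [tau^2/2, tau]
    g1, g2 = 0.5 * tau ** 2, tau
    cov_new = ((f11 + accel_var * g1 * g1, f12 + accel_var * g1 * g2),
               (f21 + accel_var * g2 * g1, f22 + accel_var * g2 * g2))
    return (d_new, v_new), cov_new

def update(state, cov, gps_disp, gps_var):
    """Measurement update with a GPS displacement (H = [1, 0])."""
    d, v = state
    (p11, p12), (p21, p22) = cov
    s = p11 + gps_var                  # innovation variance
    k1, k2 = p11 / s, p21 / s          # Kalman gain
    innov = gps_disp - d
    state_new = (d + k1 * innov, v + k2 * innov)
    # (I - K H) P
    cov_new = ((p11 - k1 * p11, p12 - k1 * p12),
               (p21 - k2 * p11, p22 - k2 * p12))
    return state_new, cov_new

TAU = 0.01          # 100 Hz accelerometer sampling interval, s
GPS_EVERY = 100     # one GPS epoch per 100 accelerometer samples (1 Hz)
TRUE_ACCEL = 0.5    # constant true acceleration of the synthetic motion, m/s^2

state = (0.0, 0.0)
cov = ((1e-4, 0.0), (0.0, 1e-4))
for k in range(1, 201):                # 2 s of noise-free synthetic data
    state, cov = predict(state, cov, TRUE_ACCEL, TAU, accel_var=1e-4)
    if k % GPS_EVERY == 0:
        t = k * TAU
        state, cov = update(state, cov, 0.5 * TRUE_ACCEL * t ** 2, gps_var=1e-4)

final_disp, final_vel = state
print(f"displacement {final_disp:.4f} m, velocity {final_vel:.4f} m/s")
```

Between GPS epochs the displacement uncertainty grows only through the assumed accelerometer noise, and each GPS update pulls it back down, which is the sense in which the two data types complement each other.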