Abstract

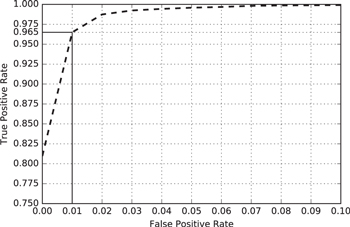

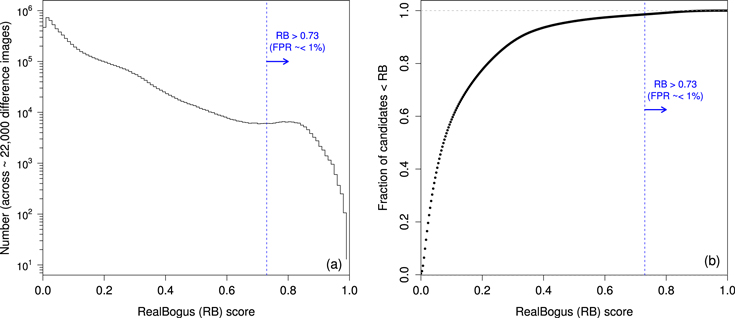

We describe the near real-time transient-source discovery engine for the intermediate Palomar Transient Factory (iPTF), currently in operations at the Infrared Processing and Analysis Center (IPAC), Caltech. We coin this system the IPAC/iPTF Discovery Engine (or IDE). We review the algorithms used for PSF-matching, image subtraction, detection, photometry, and machine-learned (ML) vetting of extracted transient candidates. We also review the performance of our ML classifier. For a limiting signal-to-noise ratio of 4 in relatively unconfused regions, bogus candidates from processing artifacts and imperfect image subtractions outnumber real transients by ≃10:1. This can be considerably higher for image data with inaccurate astrometric and/or PSF-matching solutions. Despite this occasionally high contamination rate, the ML classifier is able to identify real transients with an efficiency (or completeness) of ≃97% for a maximum tolerable false-positive rate of 1% when classifying raw candidates. All subtraction-image metrics, source features, ML probability-based real-bogus scores, contextual metadata from other surveys, and possible associations with known Solar System objects are stored in a relational database for retrieval by the various science working groups. We review our efforts in mitigating false-positives and our experience in optimizing the overall system in response to the multitude of science projects underway with iPTF.

Export citation and abstract BibTeX RIS

1. Introduction

The Palomar Transient Factory (PTF; Rau et al. 2009) and its successor survey currently underway, the intermediate Palomar Transient Factory (iPTF; Kulkarni 2013) have been advancing our knowledge of the transient, variable, and dynamic sky at optical wavelengths since 2009 March. From new classes of supernovae (Maguire et al. 2014; White et al. 2015), identifying gamma-ray burst optical afterglows (Singer et al. 2015) and counterparts to gravitational wave triggers (Kasliwal et al. 2016), exotic stellar outbursts (Miller et al. 2011; Tang et al. 2014), Milky Way tomography (Sesar et al. 2013) to near-Earth asteroids (Waszczak et al. 2016) and comets (Waszczak et al. 2013), iPTF continues to deliver,8

serving as a testbed for the development of future time-domain surveys. iPTF uses a 92-megapixel camera mosaicked into eleven functional 2048 × 4096 CCDs covering 7.26 deg2 on the Palomar 48-inch Samuel Oschin Schmidt telescope. The single exposures reach a depth of (Mould) R ≃ 21 mag (5σ) in 60 s. The pixel scale is ≈1'' and the image quality is ≈2 2 (median FWHM), implying the Point Spread Function (PSF) is better than critically sampled slightly more than 50% of the time. Further details of the hardware, survey design, and on-sky performance are described in Law et al. (2009, 2010) and Ofek et al. (2012). An overview of the image pre-processing and photometry pipelines, and archival system is described in Laher et al. (2014).

2 (median FWHM), implying the Point Spread Function (PSF) is better than critically sampled slightly more than 50% of the time. Further details of the hardware, survey design, and on-sky performance are described in Law et al. (2009, 2010) and Ofek et al. (2012). An overview of the image pre-processing and photometry pipelines, and archival system is described in Laher et al. (2014).

The near real-time discovery of transients from iPTF imaging data is currently performed using an image differencing pipeline at the National Energy Research Scientific Computing Center (NERSC; Cao et al. 2016). New incoming images are astrometrically and instrumentally calibrated, then aligned, PSF-matched, and differenced with deeper reference images supplied by the Infrared Processing and Analysis Center (IPAC, Caltech; Laher et al. 2014). Transient candidates are extracted from the differenced images then vetted using a classification engine (Bloom et al. 2012; Rusu et al. 2014). The NERSC infrastructure has contributed immensely to the success of PTF and iPTF.

We have implemented an enhanced version of the discovery pipeline to complement the pipeline at NERSC. In 2017, the iPTF project will be replaced by the Zwicky Transient Facility (ZTF) using a new camera on the same telescope (Bellm 2014; Smith et al. 2014). The ZTF camera will have a field of view of ∼47 square degrees, enabling a full scan of the Northern visible sky every night, at a rate ∼15 times faster than iPTF to similar depths. The massive high-rate data stream and volume expected from ZTF will require advancements in algorithms and data-management practices despite the (inevitable) growth in hardware technology. This will pave the way to the Large Synoptic Survey Telescope (LSST; Ivezić et al. 2014) that is expected to yield at least 100× as many astrophysical transients per image exposure than ZTF. In anticipation of this data deluge, we have embarked on a new efficient discovery pipeline and infrastructure at IPAC. Our design philosophy is flexibility, i.e., being able to operate in a range of complex astrophysical environments (including the galactic plane), robustness to instrumental glitches, adaptability to a wide range of atmospheric seeing and transparency, minimal tuning (unless warranted by instrumental changes), optimality (in the signal-to-noise sense), reliability in extracted candidates to moderately low S/N levels, and fast delivery of vetted candidates to enable follow-up in near real-time.

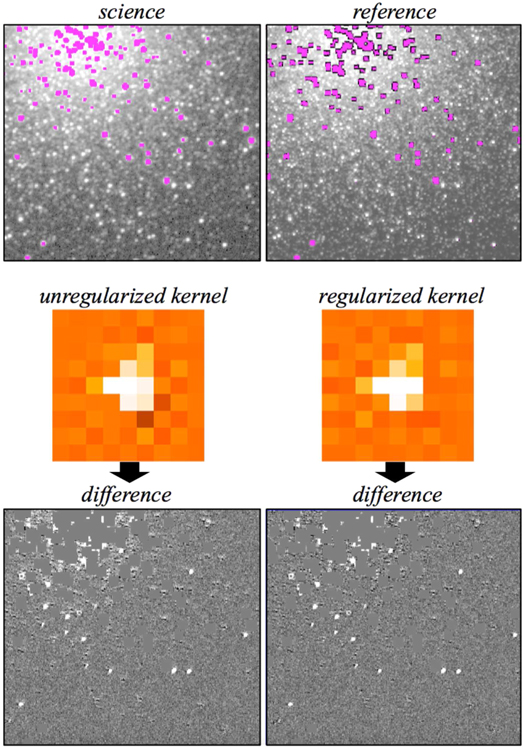

Searches for astrophysical transients (by virtue of changes in flux and/or position) have traditionally been conducted using either of two approaches. The first involves differencing of astrometrically aligned, PSF-matched images from two epochs: the science or target image containing the potential transient sources, and a deeper reference or template image serving as a static representation of the sky, for example, defined from an average of images from multiple historical epochs. The difference image is then thresholded to find and measure excess signals, i.e., the transient candidates. This approach was (and in some cases continues to be) used by numerous synoptic surveys, e.g., OGLE (Wyrzykowski et al. 2014), ROTSE (Akerlof et al. 2003), La Silla-QUEST (Hadjiyska et al. 2012), Pan-STARRS (Kaiser et al. 2010), and PTF (Law et al. 2009). Although simple in theory, a challenging aspect of discovery via image differencing is the prior matching of PSFs between the input images. This has lead to an intensive, ongoing research effort (e.g., Alard & Lupton 1998; Alard 2000; Woźniak 2000; Bramich 2008; Yuan & Akerlof 2008; Becker et al. 2012; Bramich et al. 2016; Zackay et al. 2016). The ultimate goal is the elimination of systematic instrumental residuals, e.g., induced by non-optimal calibrations and/or PSF-matching upstream. These would otherwise contaminate lists of extracted transient candidates, i.e., the false positives that would need to be dealt with later (see below). In practice, one strives to minimize their occurence in difference images such that in a global sense, the resulting pixel fluctuations and photometric uncertainties of bona fide flux transients approach expectations from Poisson noise and/or detector read-noise.

The second approach involves positionally matching source catalogs extracted from images at different epochs and searching for large flux differences between the epochs, e.g., as used by the Catalina Real-time Transient Survey (CRTS; Drake et al. 2009). This method avoids systematics from color-correlated source-position misalignments due to differential chromatic refraction, an effect that can be severe for some facilities. However, this method requires a relatively large flux-difference threshold to ensure reliability. This is at the expense of a higher missed detection rate (incompleteness) at low flux levels, particularly in regions with a complex background and/or high source-density (e.g., the galactic plane) where positional-matching is a challenge. On the other hand, assuming optimally calibrated and instrumentally matched inputs, image differencing excels in regions where source confusion is high and/or where complex, fast-varying backgrounds are present (e.g., near or within galaxies). Due to its adaptability to a wide range of astrophysical environments, the PTF project adopted image differencing as its primary means for discovery.

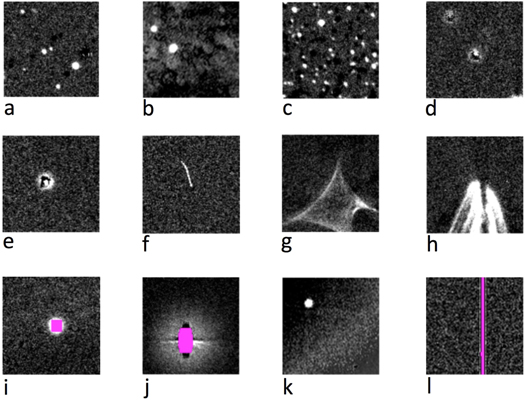

Following the extraction of transient candidates from differenced images, a somewhat daunting problem is deciding which are bogus (i.e., spurious) or real and worthy of further study. The existing iPTF discovery pipeline at NERSC accomplishes this using a supervised machine-learned (ML) classifier (Bloom et al. 2012; Brink et al. 2013). Here, a pre-labelled training set of previously discovered real transients are first fit to a two-class (real or bogus) non-parametric model described by a number of selected source features (or metrics). This model is then used to predict the class (real or bogus) of future candidates according to some probability threshold. The probabilities are also referred to as RealBogus (or quality) scores.

The iPTF discovery pipeline at NERSC typically yields a few to ten real "interesting" transients per night (excluding Solar-System objects and periodic or reoccuring variables in regions with a high stellar density). For ZTF, we expect at least 100 such transients per night. Currently however, real iPTF transients can be outnumbered by spurious candidates (false positives) by more than two orders of magnitude, despite efforts to minimize their incidence through careful pre-calibration. The problem gets worse if one is interested in finding the rare gems down to low S/N levels (e.g., Masci 2012). Depending on the science goals, the vetted candidates need to be delivered in a timely manner to the respective science working groups for follow-up. At NERSC, this currently takes ∼30 minutes since observation. The goal is to get this below ∼15 minutes. Large numbers of false-positives can strain any machine-learned vetting process and affect its reliability (Brink et al. 2013). It is crucial that the vetting process be efficient and reliable.

We have developed an automated image-differencing, transient-extraction and vetting system at IPAC; hereafter, the IPAC/iPTF Discovery Engine (or IDE). This infrastructure is currently in use for iPTF and is expected to be a foundation for ZTF in future. We have 6+ years of PTF science data in hand (ongoing with iPTF) and an experienced team at NERSC that aided in developing and refining all aspects of a robust discovery engine—from instrumental calibration to vetted transient candidates. Guided by previous implementations of the image subtraction problem, this paper reviews our algorithms, optimization strategies, experiences, and liens. We also describe our probabilistic (real–bogus) classification scheme for vetting transient candidates, Quality Assurance (QA) metrics, and database (DB) schema.

We note that two of the core pipeline steps in IDE: (i) image-differencing (that includes pre-conditioning of image inputs), and (ii) extraction of raw transient candidates therefrom, are both implemented in a stand-alone software module called PTFIDE.9 In this paper, we use the acronym PTFIDE when referring to these specific processing steps, otherwise, we use IDE when referring to the overall processing system. The latter includes all pre-calibration steps (prior to PTFIDE), machine-learned vetting and archival steps (post-PTFIDE). Furthermore, when referring to astrophysical transients, we use the term "transient" in a generic sense, i.e., all types of flux-excesses that can be detected in difference images (in both the positive and negative sense, relative to a reference image template): moving objects, periodic or aperiodic variable sources, or short-lived (fast) events. The goal of IDE is to deliver reliable transient candidates to the various science working groups for further follow-up. From hereon, these science working groups will be referred to as "science marshals," or simply marshals.

This paper is organized as follows. In Section 2 we give an overview of IDE and provide references for more information on each subsystem, both in this paper and elsewhere. Section 3 gives a broad overview of the image differencing and extraction module PTFIDE and its dependencies: input parameters, reference-image building, and output products. Section 4 expands on the specific processing steps in PTFIDE: gain and background matching, astrometric refinement, reference-image resampling, and PSF-matching. This includes a summary of all image-based and transient-candidate source metrics, and their use in deriving simple initial quality scores. Section 5 reviews the DB schema for storing all difference image-based and source-based metrics. The machine-learned vetting infrastructure, which includes training, tuning, and its overall performance is described in Section 6. Lessons learned during the course of development and testing are given in Section 7. Future and potential enhancements are discussed in Section 8 and conclusions are given in Section 9.

2. Overview of the Near Real-time Discovery Engine

The raw camera-image files are first sent from the Palomar 48-inch Samuel Oschin Schmidt telescope to the San Diego Supercomputing Center via a ≈100 Mbit s−1 microwave link and then pushed to Caltech and IPAC over the internet (bandwidth is ≳1 Gbit s−1). At IPAC, the camera-image files are ingested into an archive and associated metadata are stored in a relational database for fast retrieval and processing soon thereafter (see below).

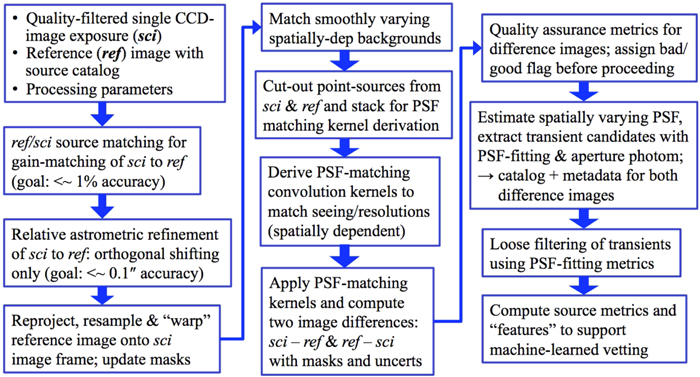

Figure 1 gives an overview of the near real-time discovery pipeline. An executive pipeline wrapper controls the various steps: preprocessing which performs basic instrumental and astrometric calibration per CCD image (light purple boxes); PTFIDE—the image-differencing and transient extraction module (red boxes); then returning to the pipeline executive for archiving, DB-loading, and machine-learned vetting (light purple boxes). The preprocessing steps are from a stripped down version of the PTF/iPTF frame-processing pipeline. This executes asynchronously and independently of IDE following ingestion of an entire night's worth of image data. The purpose of this pipeline is to provide accurately calibrated images and source catalogs for future public distribution. This pipeline and the IDE preprocessing steps borrowed therefrom (light purple boxes in Figure 1) are described in detail in Laher et al. (2014). Below we summarize the major processing steps. The steps specific to PTFIDE (red boxes) are expanded in Sections 3–6. Operational details and tools used by the various science marshals (green boxes) will be discussed in future papers. In particular, the streak-detection functionality that is designed to detect moving objects in difference images, i.e., that streak in individual exposures is described in Waszczak et al. (2016).

Figure 1. Processing flow in the near real-time IPAC/iPTF Discovery Engine (IDE). The color-coding separates the various modular steps: preprocessing, archival, and machine-learned vetting (light purple); core image-differencing and transient extraction module: PTFIDE (red); external science applications and follow-up marshals (green). See Section 2 for details.

Download figure:

Standard image High-resolution imageThe 92-megapixel raw camera-image files (one per exposure) are processed by the real-time pipeline soon after they are ingested, check-summed, and registered in the database at IPAC. The ingest process also loads a jobs database table that is automatically queried by the pipeline executive to initiate the camera-splitting pipeline (Laher et al. 2014). This pipeline splits the camera-images into twelve 17 MB CCD image files (with overscan regions included), and noting that one of the CCDs is defective. An initial astrometric solution is derived and attached to their FITS10 headers. This astrometric solution is not the final (and best) calibration attached to the CCD images prior to image-differencing with PTFIDE. It is used to support source-catalog overlays, quick-look image visualizations, and quality assurance (QA) from the archive. The individual raw CCD frames in FITS format are copied to a local sandbox directory and associated metadata (including quality metrics and image usability indicators) are stored in a database to facilitate retrieval for the next processing steps.

A number of preprocessing and instrumental calibration steps are then applied to the raw CCD image. These include a dynamic (floating) bias correction and a static bias correction, a flat-field (pixel-to-pixel responsivity) correction, and cropping to remove overscan regions. The static bias and flat-field calibration maps are retrieved from an archive. These are generally the latest (closest-in-time) products available for the night being processed, i.e., that were made by combining data from a prior night. For the flat-field calibration in particular, quality metrics are used to check that the responsivity pattern falls within the range expected for a specific CCD and filter. If not, a pristine superflat is used. This preprocessing also initiates and populates a 16-bit mask image to record bad hardware pixels for the specific CCD frame, badly calibrated pixels, and saturated pixels. This mask is further augmented below to record image artifacts and object detections.

At this stage, we have a bias-corrected, flattened CCD image and accompanying mask image. Sources are then extracted from the CCD image using SExtractor (Bertin & Arnouts 1996; Bertin 2006a) primarily to support astrometric calibration—the most important calibration step in the real-time pipeline since its accuracy is crucial to attaining good quality difference images (Section 4.2). The SExtractor module is executed twice. The first run is to compute an accurate value of the overall image seeing (point-source FWHM) from the mode of a filtered distribution of individual source FWHM values. This estimate is then used to support source-detection in the second SExtractor run via a point-source matched filter. The first SExtractor run also folds in the object detections into the image mask, or rather the contiguous pixels contributing to each object above the specified threshold. The createtrackimage module is also executed to detect satellite and aircraft tracks in the CCD image and record their locations in the image mask. These occur with a frequency of typically several times per night and the same track can cross multiple CCDs. Metrics for each track are also computed (e.g., length and median intensity) and stored in a database table. For details on track identification and characterization, see Laher et al. (2014). The second SExtractor run generates a source catalog for input into the astrometric calibration step.

Astrometric calibration is initially performed using SCAMP (Bertin 2006b, 2014). SCAMP is executed using one of two possible astrometric reference catalogs as input: if the CCD image overlaps entirely with a field from the Sloan Digital Sky Survey (SDSS), the SDSS-DR9 Catalog (Ahn et al. 2012) is used; otherwise, the UCAC4 Catalog (Zacharias et al. 2013) is used. If SCAMP fails to find an astrometric solution using either of these catalogs, it is rerun with the USNO-B1 Catalog (Monet et al. 2003). In addition to solving for the standard World Coordinate System (WCS) first-order terms (for a gnomonic sky-projection; Calabretta & Greisen 2002), SCAMP simultaneously solves for field of view distortion using the PV polynomial convention on a per-image basis. The solution implicitly captures both the fixed camera-distortion and any variable atmospheric refraction effects at the time of exposure. The WCS solution and PV distortion coefficients are written to the CCD image FITS header. To enable other downstream (as well as generic analysis) software to map from pixel to sky coordinates and vice-versa, the PV coefficients are converted to the SIP representation (Shupe et al. 2005) using the pv2sip module (Shupe et al. 2012). The associated SIP coefficients are also written to the FITS header.

The astrometric (and distortion) solution from SCAMP is then validated. The first validation step coarsely checks that the standard first-order WCS terms (pointing, rotation, and scale) are within their expected ranges according to specific prior values. The second validation step involves re-extracting sources from the astrometrically calibrated CCD image (again using SExtractor) and matching them to a filtered subset of sources from the 2MASS Point Source Catalog (PSC; Skrutskie et al. 2006). A matching radius of 2'' is used and a minimum of 20 2MASS matches must be present. If the number of matches exceeds this minimum, the axial root-mean-squared (rms) position differences are root-sum-squared (RSS'd) and compared against a threshold that is dependent on galactic latitude. This threshold (t) lies in the range  corresponding to galactic latitudes

corresponding to galactic latitudes  . The threshold is interpolated from a look-up table of predetermined values according to the galactic latitude of the input image. The reason for a latitude-dependent threshold is due to the less reliable rms estimates in position differences following source matching when the source density is high. We are less tolerant of larger rms estimates in this regime due to the higher probability of false matches.

. The threshold is interpolated from a look-up table of predetermined values according to the galactic latitude of the input image. The reason for a latitude-dependent threshold is due to the less reliable rms estimates in position differences following source matching when the source density is high. We are less tolerant of larger rms estimates in this regime due to the higher probability of false matches.

If the above validation checks on the astrometry are not satisfied, another attempt is made at the astrometric calibration, this time by executing the Astrometry.net module (Lang et al. 2010). This module uses the 2MASS PSC as the astrometric-reference catalog. Astrometry.net also solves for distortion on a per-image basis, however, its representation is only in the SIP format. To ensure proper execution of other downstream pipeline modules that depend exclusively on the PV representation, the SIP coefficients are converted to PV equivalents using the sip2pv module (Shupe et al. 2012) and written to the FITS header. The solution from Astrometry.net is validated in the same manner as above using the 2MASS PSC. If the acceptability criteria are still not satisfied, a bit-flag is set in a database table for use downstream. Metrics to assess the astrometric performance on each image are computed and also stored in the database to facilitate future analysis and trending (for details, see Laher et al. 2014).

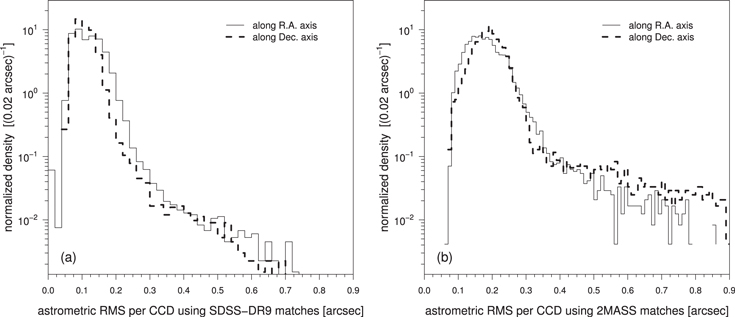

Figure 2(a) quantifies the astrometric performance of the real-time pipeline for 68,310 iPTF CCD images acquired from 2015 January 1 to 2015 May 1 that used the SDSS-DR9 Catalog in their SCAMP solution. This catalog covers ≃14,555 deg2 at galactic latitudes of typically  and has an overall astrometric accuracy (rms per axis) of ≃50 milli-arcseconds (mas) with respect to earlier UCAC releases (Pier et al. 2003). The median rms per iPTF CCD per axis is typically 115 mas with respect to SDSS-DR9. The astrometric performance outside the SDSS-DR9 footprint (calibrated using either UCAC4 or USNO-B1; see above) is similar, except however for exposures observed in regions with a high source-density (e.g., the galactic plane) where systematics are more prevalent. These systematics are currently being addressed since iPTF includes a number galactic-plane science programs. Figure 2(b) shows the rms distributions for a subset of 24,168 CCD images from the same SDSS-DR9 overlap region with respect to the 2MASS PSC. Here, only images containing >200 2MASS matches each were used. The median astrometric accuracy with respect to 2MASS degrades to ≃190 mas per axis. This larger rms is somewhat expected since the 2MASS PSC has an accuracy of typically 150–200 mas (Skrutskie et al. 2006).

and has an overall astrometric accuracy (rms per axis) of ≃50 milli-arcseconds (mas) with respect to earlier UCAC releases (Pier et al. 2003). The median rms per iPTF CCD per axis is typically 115 mas with respect to SDSS-DR9. The astrometric performance outside the SDSS-DR9 footprint (calibrated using either UCAC4 or USNO-B1; see above) is similar, except however for exposures observed in regions with a high source-density (e.g., the galactic plane) where systematics are more prevalent. These systematics are currently being addressed since iPTF includes a number galactic-plane science programs. Figure 2(b) shows the rms distributions for a subset of 24,168 CCD images from the same SDSS-DR9 overlap region with respect to the 2MASS PSC. Here, only images containing >200 2MASS matches each were used. The median astrometric accuracy with respect to 2MASS degrades to ≃190 mas per axis. This larger rms is somewhat expected since the 2MASS PSC has an accuracy of typically 150–200 mas (Skrutskie et al. 2006).

Figure 2. Distributions of the astrometric rms per CCD image along each axis with respect to (a) the SDSS-DR9 Catalog using 68,310 images and (b) the 2MASS PSC using a subset of 24,168 images containing sufficient matches. See the text for details.

Download figure:

Standard image High-resolution imagePreprocessing in the real-time pipeline also includes a step to detect and mask artifacts induced by bright-source reflections in the telescope optics, primarily ghosts and halos. The ghosts are due to bright sources lying off the telescope's optical axis, while halos are more coincident with the offending source. These features are located by first searching for bright (parent) stars from the Tycho-2 Catalog (Høg et al. 2000) with  mag. The positions of ghosts are then isolated using a pre-determined geometric mapping from the parent stars to expected ghost positions. Circular areas are flagged in the CCD bit-mask image to indicate probable ghosts and halos. Given the ghost and halo sizes vary with the brightness of the parent star, a conservative maximally sized masking area is used. The positions of ghosts, halos and their parent stars are stored in the database to facilitate future analysis. Lastly, the preprocessing phase computes a number of QA metrics for the image pixels and accompanying mask, a summary of which can be found in Laher et al. (2014). These are also loaded into the database.

mag. The positions of ghosts are then isolated using a pre-determined geometric mapping from the parent stars to expected ghost positions. Circular areas are flagged in the CCD bit-mask image to indicate probable ghosts and halos. Given the ghost and halo sizes vary with the brightness of the parent star, a conservative maximally sized masking area is used. The positions of ghosts, halos and their parent stars are stored in the database to facilitate future analysis. Lastly, the preprocessing phase computes a number of QA metrics for the image pixels and accompanying mask, a summary of which can be found in Laher et al. (2014). These are also loaded into the database.

There is no absolute photometric calibration in the preprocessing phase of the real-time pipeline to assign image-specific photometric zeropoints. Instead, the raw pixel signals are later throughput (gain)-matched to a reference image template during PTFIDE processing using sources extracted therefrom (Section 4.2). This reference image has an associated photometric zeropoint and therefore serves as the generic zeropoint for all real-time products that are matched to it, including the difference image products downstream. For details, see Sections 3.3 and 4.9.2. For an overview on the performance of the initial photometric calibration of the CCD images (on which the reference and difference-image products ultimately depend), see Ofek et al. (2012).

Figure 3 shows the typical durations of the primary steps in the real-time pipeline: from acquisition of a camera exposure to vetted candidates, ready to be examined by the science marshals. The median total time lag since exposure acquisition (Figure 3(b)) is ≃16 minutes and the 95th percentile is ≲22 minutes. When broken down into the various steps, the bulk of the lag is in the transfer of image data from the telescope to IPAC (≃9 minutes). This includes database ingestion and archiving, which amount to no more than several seconds per camera exposure. The preprocessing, PTFIDE and final archival steps amount to no more than ≃7 minutes, although there is a long tail in the PTFIDE runtime which we attribute to the extraction and processing of transient candidates from "bad" difference-images, i.e., containing an excess of residual artifacts (Section 6.2). The timing metrics shown in Figure 3 are those inferred at the time of writing using 1340 camera-image files and all eleven CCD images therein. The overall lag is expected to decrease in the near future, in particular in the transfer of image-data from the telescope to IPAC.

Figure 3. (a) Distributions of the elapsed time for various processing steps in the (near-)real-time discovery engine per CCD image. (b) Total elapsed time from acquisition of a CCD image exposure to vetted candidates extracted therefrom. Metrics were derived using 1340 camera-image exposures.

Download figure:

Standard image High-resolution imageAt the time of writing (pertaining to iPTF operations), the IDE pipeline executes on a Linux cluster of 23 machines consisting of 232 64-bit physical CPU cores in total: 11 machines have 8 Intel® Xeon® cores running at 3.0 GHz each and the remaining 12 machines have 12 similar cores running at 2.4 GHz each. All the machines, file and database servers are connected by a 10 Gbit network. Given that the 12-core machines can admit two threads per core, this cluster can in principle allow for 376 concurrent processes. However, since much of the processing involves a considerable amount of disk I/O, we achieve close to maximum throughput with only one thread per physical core, and therefore we usually execute at most 232 simultaneous threads. As raw camera-image files are received during the night, multiple instances of the camera-splitting pipeline are first run across all idle processor cores (until filled) to generate the individual raw CCD-images. These images then enter the processing queue and the level of core-parallelism now occurs at the CCD-image level through all the remaining pipeline steps (Figure 1).

The IDE pipeline was designed to be flexible enough to also process archival (preprocessed) image data. This mode facilitates pipeline tuning, iterative training of machined-learned classifiers in response to changing detector properties and/or science goals, but it also supports archival research in general, i.e., ad-hoc discovery projects using different pipeline parameters and thresholds. This offline execution mode only runs the PTFIDE steps (red boxes in Figure 1) using preprocessed image data that were previously instrumentally calibrated and archived by the regular PTF/iPTF frame-processing pipeline (Laher et al. 2014). This is because the preprocessed intermediate products from the initial phase of the IDE pipeline (light purple boxes in Figure 1) are not stored in a long-term archive.

To summarize, we have given a general overview of the near real-time IDE pipeline, with particular emphasis on the preprocessing steps needed to generate instrumentally and astrometrically calibrated CCD-images for input into the image-differencing and transient extraction module (PTFIDE). The primary outputs from the preprocessing step are a calibrated science image exposure, an accompanying bit-mask image, and metrics that quantify the astrometric performance and quality of the image-pixel data. The details on how these metrics and products are used in PTFIDE are discussed in Section 4.

3. PTFIDE Module Overview and Preliminaries

This section gives a broad overview of the PTFIDE software,11 dependencies, design assumptions, input data and formats, tunable parameters, and outputs—both primary products for archival and ancillary products for debug and analysis. PTFIDE is a standalone Unix command-line tool written in Perl. It calls a number of software executables written in C, C++ and Fortran. For a summary of the dependencies, see Section 3.1. The software can be built and configured to run under most Linux or Unix-like operating systems.

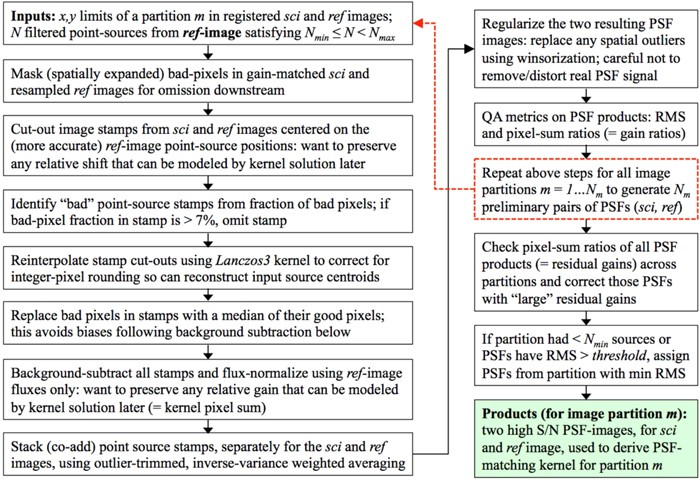

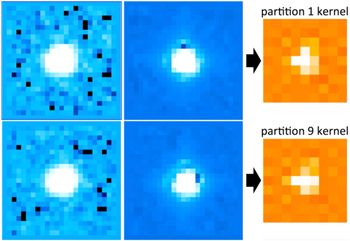

Figure 4 summarizes the main processing steps in PTFIDE, from preparing the inputs, to extracted transient candidates and metrics ready for loading into a relational database. A summary of all input files, parameters, and their default values is given in Section 3.2. One of the most important inputs is the reference image and its accompanying source catalog. Requirements regarding its construction are given in Section 3.3. Output products, formats, and their level of importance are summarized in Section 3.4. The details of each computational step in Figure 4 are expanded in Section 4.

Figure 4. Processing flow in the PTFIDE (image differencing and extraction) module. These steps are contained in the first red box of the real-time pipeline flowchart in Figure 1. See Section 4 for details.

Download figure:

Standard image High-resolution image3.1. Software Design Philosophy, Dependencies, and Parallelization

To expedite the delivery of science quality products following the commencement of iPTF, some of the processing steps in PTFIDE leverage existing astronomical software tools. This is mostly heritage software that has been well tested by the astronomical community and refined over time. Table 1 summarizes the external (third-party) software components used in PTFIDE and other dependencies.

Table 1. External (Third-party) Software used by PTFIDE

| Software or Library | Versiona | Purpose |

|---|---|---|

| Perl |

|

Core language for scripting and performing arithmetic operations |

| PDL |

|

Perl module for vectorized image processing; built with bad-value and GSL support |

| GSL |

|

GNU Scientific Library (numerical library) |

| Astro-WCS-LibWCS |

|

Perl module to support World Coordinate System (WCS) transformations |

| Ptfutils, Pars | 1.0 | In-house developed Perl modules specific to PTF data processing |

| xy2xytrans | 2.0 | For fast image-to-image pixel position transformations |

| libtwoplane | 1.0 | Library to support xy2xytrans module |

| wcstools |

|

Contains WCS library to support multiple modules listed here |

| cfitsio |

|

FITS file-manipulation library to support multiple modules listed here |

| SExtractor | 2.8.6 | For initial source extraction to support internal source-matching steps |

| SWarp | 2.19.1 | For image resampling and interpolation using WCS |

| DAOPhot | II, 1/15/2004 | Source detection, aperture photometry, and PSF-estimation |

| Allstar | II, 2/7/2001 | PSF-fit photometry and support for PSF-estimation (included in DAOPhot package). |

Note.

aVersion number shown is that in use at the time of writing.Download table as: ASCIITypeset image

One of the design goals was robustness against missing or corrupted input data with appropriate error handling and reporting upon pipeline termination. Depending on the error, any missing (or out-of-range) data or associated metadata are replaced with default values in an attempt to salvage as many products as possible. Warnings are issued and logged if these occur. Furthermore, different pipeline exit codes are assigned according to the different anomalies (fatal and benign) encountered in processing. These status codes are stored as a bit-string in a database table to enable follow-up or to avoid querying unusable (or non-optimal) science products in future. Another design consideration was the ability to generate as many intermediate products and write as much information as possible from each processing step (Section 3.4). This was to facilitate offline debugging and tuning since many of the steps have complex interdependencies. This debug mode is controlled by a command-line switch and is typically turned off in operations to minimize runtime.

The base language in PTFIDE is Perl. This code executes both the external software modules and performs its own image-processing computations through use of the Perl Data Language (PDL; Glazebrook & Economou 1997). PDL is an object-oriented extension to Perl5 that is freely available as an add-on module. PDL is optimized for computations on large multidimensional data sets by making use of the hyper-threading capabilities of modern processor technologies. That is, PDL has its own threading engine that uses constructs from linear algebra to process large arrays as efficiently as possible using parallel computations. This is crucial since most of the steps in PTFIDE are CPU-bound. This low-level parallelism occurs on the individual processor cores where our basic processing unit is a single CCD-image. A higher level of parallelism is achieved by using all of the 232 CPU cores in our Linux cluster (described in Section 2). Here we typically execute 232 simultaneous threads (one CCD-image per core at any time). This gives us close to maximum throughput.

3.2. Primary Inputs and Parameter Summary

PTFIDE is driven by the Perl script ptfide.pl. The inputs can be broadly separated into the following: an instrumentally calibrated CCD-image exposure (the science image); an accompanying bit-mask (pixel-status) image; a spatially overlapping reference image; an accompanying source catalog for the reference image; configuration files for the various external software modules; processing parameters, thresholds, and control switches.

All image files are in FITS format (defined in Section 2). Input parameters and thresholds may be supplied on either the ptfide.pl command-line or in a configuration file, while image FITS-file names, other configuration files, and switches can only be supplied on the command-line. Table 2 summarizes the inputs to ptfide.pl with a brief explanation for each. The default parameter values are those currently used for iPTF. More details on some of the parameters can be found in Section 4.

Table 2. Inputs to PTFIDE (Script ptfide.pl)

| Inputa | Defaultb | Purposec |

|---|---|---|

| -cfgide | ⋯ | Optional input configuraton file listing all numerical parameters and thresholds defined below; these override those on the command-line, if any |

| -scilst | ⋯ | Input filename listing science image FITS file(s) |

| -msklst | ⋯ | Input filename listing mask image FITS file(s) accompanying -scilist |

| -ref | ⋯ | Input FITS filename of reference image (co-add) |

| -catref | ⋯ | Input reference image source catalog file from SExtractor; -cn specifies required columns |

| -cn | 2, 3, 4, 42, 10, 57, 60, 63, 72, 78, 27, 48, 14, 15, 45 | List of integers defining locations of required columns in the -catref input file |

| -catfilt | 0.5, 100, 19.0, 1.3 | Thresholds for filtering reference source catalog: min/max tolerable values for CLASS_STAR, ISOAREAF_IMAGE, MAG_APER, and ratio AWIN_WORLD/BWIN_WORLD |

| -od | ⋯ | Directory name for output products (including any debug output) |

| -cfgswp | ⋯ | Input configuration file for SWarp module |

| -cfgsex | ⋯ | Input configuration file for SExtractor to support position/gain matching |

| -cfgsexpsf | ⋯ | Input configuration file for SExtractor to support association with PSF extractions |

| -cfgcol | ⋯ | Input SExtractor column name configuration file to support position/gain matching |

| -cfgcolpsf | ⋯ | Input SExtractor column name configuration file to support association with PSF extractions |

| -cfgfil | ⋯ | Input filename for SExtractor convolution kernel filter |

| -cfgnnw | ⋯ | Input SExtractor neural network configuration file for star/galaxy classification |

| -cfgdao | ⋯ | Input generic DAOPhot parameter file |

| -cfgpht | ⋯ | Input DAOPhot photometry parameter file |

| -tmaxpsf | 2000.0 | Threshold [#bckgnd sigma] above background in reference image for maximum usable pixel value when creating PSF |

| -tdetpsf | 50.0 | DAOPhot findthreshold [#bckgnd sigma] for PSF creation from reference image |

| -tmaxdao | 3000.0 | Threshold [#bckgnd sigma] above zero-background in difference image for maximum usable pixel value for source extraction |

| -tdetdao | 3.5 | DAOPhot findthreshold [#bckgnd sigma] for source extraction on difference image |

| -tchi | 8.0 | Threshold on chi metric from Allstar program below which extractions on difference image are retained; larger  more non-PSF-like profiles are retained more non-PSF-like profiles are retained |

| -tshp | 4.0 | Threshold on sharp metric from Allstar program where extractions on difference image with –tshp  +tshp are retained; values of sharp ≃ 0 +tshp are retained; values of sharp ≃ 0  sources are more PSF-like sources are more PSF-like |

| -tsnr | 4.0 | Threshold on flux signal-to-noise ratio in PSF-fit photometry above which difference image extractions are retained |

| -fatbits | 8, 9, 10, 12 | Fatal bits to mask as encoded in input mask images (-msklist input); set to 1 for no masking |

| -satbit | 8 | Saturation bit# in mask images for determining saturation level in science images |

| -expnbad | 3 | Mask an additional (expnbad × expnbad) - 1 pixels around each input masked science and reference image pixel; provides more complete blanketing |

| -eg | 1.5 | Native electronic gain of detector [e-/ADU]; used for pixel-uncertainty estimation |

| -sxt | 2.0 | SExtractor detection threshold [#sigma] to support position/gain matching |

| -rad | 3.0 | Match radius [pixels] for associating reference and science frame extractions for position refinement and gain matching |

| -nmin | 200 | Minimum number of reference-to-science image source matches above which to proceed with position refinement and gain matching |

| -dgt | 1.5 | Minimum relative gain factor [%] above which to proceed with relative gain correction |

| -dpt | 0.07 | Minimum offset [pixels] above which to proceed with position refinements (dX or dY) |

| -dgsnt | 5.0 | Minimum S/N ratio in gain factor above which to proceed with relative gain correction |

| -dpsnt | 5.0 | Minimum S/N ratio in deltas above which to proceed with position corrections (dX or dY) |

| -gridXY | 4,8 | Number of image partitions per axis to support differential SVB computation |

| -tpix | 2.0 | Threshold t [#sigma] for replacing pixel values > mode + t∗sigma in an image partition to support differential SVB computation |

| -tmode | 500.0 | Threshold t [%] for replacing all pixels of a partition with global mode if its local mode is ![$\gt (1+t[ \% ]/100)$](https://content.cld.iop.org/journals/1538-3873/129/971/014002/revision1/paspaa443cieqn14.gif) ∗ global mode; to support differential SVB computation ∗ global mode; to support differential SVB computation |

| -tsig | 100 | Threshold t [%] for replacing all pixels of a partition with local mode if its robust sigma is  t[%]/100) ∗ "median of all partition sigmas"; to support differential SVB computation t[%]/100) ∗ "median of all partition sigmas"; to support differential SVB computation |

| -rfac | 16 | Image-pixel sampling factor to speed up filtering for differential SVB computation |

| -szker | 41 | Median-filter size for downsampled image to support differential SVB computation [pixels] |

| -ker | LANCZOS3 | Interpolation kernel type for SWarp module |

| -zpskey | IMAGEZPT | Keyword name for photometric zeropoint in science image FITS headers |

| -zprkey | IMAGEZPT | Keyword name for photometric zeropoint in reference image FITS header |

| -pmeth | 2 | Method to derive PSF-matching kernel between sci and ref images: 1  old Alard & Lupton (1998) method (now deprecated); 2 old Alard & Lupton (1998) method (now deprecated); 2  Pixelated Convolution Kernel (PiCK) method Pixelated Convolution Kernel (PiCK) method |

| -conv | auto | For -pmeth 2: image to convolve; can be sci, ref, or auto. The auto option uses the sci and ref FWHM values to select the image to convolve |

| -kersz | 9 | For -pmeth 2: linear size of PSF-matching kernel stamps [pixels] |

| -kerXY | 3,3 | For -pmeth 2: number of image partitions along X, Y to represent spatially dependent kernel |

| -psfsz | 25 | For -pmeth 2 if -rpick was set: linear size of PSF stamps created from point source cutouts |

| -apr | 9.0 | For -pmeth 2 if -rpick was set: source aperture radius [pixels] to compute flux for normalizing PSFs and background level outside this |

| -nmins | 20 | For -pmeth 2 if -rpick was set: minimum number of sources in an image partition above which PSF-creation is attempted |

| -nmaxs | 150 | For -pmeth 2 if -rpick was set: use n brightest sources per image partition for PSF-creation |

| -rpickthres | 4.0, 5.0, 0.0004, 40, 0.045 | For -pmeth 2 if -rpick was set: list of parameter thresholds for creating PSFs: N-sigma threshold for stack-outlier rejection; N-sigma threshold for spatial outlier-detection and winsorisation; maximum tolerable RSS of spatial RMSs of PSF products for (re)assigning partition inputs for kernel derivation; minimum distance to edge to avoid when selecting sources from sci and ref images; threshold td for  where R = ratio of PSF pixel sums and PSFs with where R = ratio of PSF pixel sums and PSFs with  are rescaled to the median{R} of all image partitions are rescaled to the median{R} of all image partitions |

| -nbreftb | 65 | For -pmeth 2: number of pixel rows to force as bad at top and bottom of internal images used for kernel derivation to account for edge effects in resampled reference image |

| -nbreflr | 35 | For -pmeth 2: number of pixel columns to force as bad at left and right of internal images used for kernel derivation to account for edge effects in resampled reference image |

| -bckwin | 31 | For -pmeth 2: linear window size for median filtering of [downsampled] reference image when computing spatially varying background; note: downsampling factor is fixed at 16× per axis |

| -tsat | 0.65 | For -pmeth 2: factor threshold to perform more conservative tagging of resampled ref image pixels satisfying  where where  and saturate is from resampled ref image header. This assumes the ref image was made using the mkcoadd.pl co-addition software and saturate is from resampled ref image header. This assumes the ref image was made using the mkcoadd.pl co-addition software |

| -goodcuts | 5.3, 1.2, 5.0, 0.02, 22, 4, 0.8, 35, 14.3, 7.0, 2, 0.07, 0.2 | For -pmeth 2: list of parameter thresholds for performing simple 1D cuts on source metrics for assigning goodcand flag in output extraction tables: chi, sharp, snrpsf, magfromlim, nneg, nbad, magdiff, mindtoedge, magnear, dnear, elong,  , kpr , kpr |

| -baddiff | 80, 15, 15, 3, 140, 0.2, 0.7, 0.15, 0.15, 1.5, 1.5 | List of parameter thresholds for performing simple 1D cuts on difference-image metrics for assigning good flag in output QA file: diffpctbad, dmedchi, davgchi, diffsigpixmin, diffsigpixmax, dmedksum, medkpr, ncandscimrefratio, ncandrefmsciratio, dinpseeing, dconvseeing |

| -uglydiff | 80, 15, 15, 140, 0.2, 0.7, 0.35 | List of parameter thresholds for performing simple 1D cuts on difference-image metrics to decide if should proceed with source extraction on difference images: diffpctbad, dmedchi, davgchi, diffsigpixmax, dmedksum, medkpr, maxminksum |

| -qas | 41, 2008, 41, 4056 | Coordinate range of rectangular region in image for computing QA metrics in difference images if -qa switch was set; format is: xmin, xmax, ymin, ymax where pixel numbering is unit based and

|

| -apnum | 3 | Internal aperture number for which DAOPhot aperture photometry information should be propagated to output PSF-fit photometry table |

| -forceparams | R.A., decl., 43 | List of parameters to support "forced sub-image mode" if -forced switch was set; parameters are: R.A. [deg], decl. [deg], linsize [pixels] |

| -kerlst | ⋯ | Input filename listing FITS image cubes storing prior-derived, spatially dependent PSF-matching kernels for each science image to support "forced sub-image mode." |

| -phtcalsci | ⋯ | Switch to perform absolute photometric calibration of input science image after gain-matching to ref-image by computing a ZP using the calibrated MAG_AUTO values in the ref-image SExtractor catalog; this ZP will allow big-aperture (and PSF-fit) absolute photometry on the input science image before further gain refinements in the PSF-matching step downstream |

| -phtcaldif | ⋯ | Switch to perform absolute photometric calibration on science image after possible gain refinement and before image-differencing with ref-image by computing a ZP using the calibrated MAG_AUTO values in the ref-image SExtractor catalog; this ZP will allow big-aperture (and PSF-fit) absolute photometry on the science and difference images |

| -wmode | ⋯ | Switch to compute image modes (instead of medians) for the differential SVB correction |

| -rpick | ⋯ | Switch to use robust version of the PiCK method (-pmeth 2) when deriving PSF-matching kernel, i.e., via the construction of image PSFs using point-source cutouts |

| -psffit | ⋯ | Switch to perform PSF-fit photometry on difference images with prior PSF estimation off [possibly convolved] reference image |

| -apphot | ⋯ | Switch to perform fixed-aperture photometry on difference images using DAOPhot |

| -dontextract | ⋯ | Switch to only estimate spatially varying PSF; no extraction or photometry is performed |

| -forced | ⋯ | Switch to execute in "forced sub-image mode" where only "sci minus ref" difference image stamps (and ancillary files) centered on input R.A., decl. (-forceparams inputs) are made |

| -outstp | ⋯ | Switch to generate image cutouts of candidates from "sci minus ref" difference images |

| -pg | ⋯ | Switch to compute sci-to-ref relative astrometric and gain corrections, and apply if significant |

| -pcln | ⋯ | Switch to pre-clean (remove) output products directory specified by -od |

| -qa | ⋯ | Switch to generate QA metrics on difference images before and after PSF-matching within image slice defined by -qas string; results are written to standard output and an ASCII file |

| -d | ⋯ | Switch to write debug information to standard output, ASCII files and FITS images |

| -v | ⋯ | Switch to increase verbosity to standard output. |

Notes.

aThis same name (with prefix "–") is used in the ptfide.pl command-line specification. bDefault values, where shown, are optimal for the iPTF real-time pipeline. Command-line switches are "off" by default. cSome of these are further discussed in Section 4.3.3. Reference Image Construction and Requirements

The purpose of a reference image is to provide a static representation of the sky, or more specifically, a historical snapshot as defined by the state of the sky recorded in previous image exposures. This image provides a benchmark against which future exposures can be compared (i.e., differenced) to assist with transient discovery, both temporally (for flux changes) and/or spatially (for motion changes). The reference images also provide "absolute anchors" for assigning a photometric calibration to the incoming real-time science images and difference images derived therefrom. They are also used to check and refine astrometric solutions prior to differencing. Details are given below.

The reference images are co-adds (stack averages; see below) of several to fifty high-quality CCD-images selected from the image archive. Therefore, they have a higher S/N than the individual exposures. Besides supporting transient discovery, they can also benefit other science applications that require deeper photometry. So far in iPTF, the goal has been to construct reference images that are optimal for single-exposure image differencing and transient discovery. These do not necessarily achieve the highest possible depths (S/N) by using all available (good quality) images. This may be performed at a later date on completion of the survey and with different input image selection criteria.

Reference images are generated by a separate pipeline in iPTF operations that executes asynchronously and is independent of the real-time pipeline. This pipeline is only triggered when enough good quality images are available for a given field, CCD, and filter in the archive. The generation process is iterative in that reference images are remade and refined if an existing product is identified to be of low quality or unusable, provided better quality image-data are available. The input-image selection criteria for reference image generation were outlined in Laher et al. (2014). Given their importance, we repeat them below and expand on some of the details. These were derived from analyses of the distributions of numerous image metrics in 2013 June. The goal was to cover as much of the iPTF-visible sky as possible according to the available depth-of-coverage across all visited fields at the time.

- 1.The image must have been astrometrically and photometrically calibrated in an absolute sense and passed all automated quality checks prior to archiving (Laher et al. 2014).

- 2.The astrometric calibration (including full distortion solution) must have passed all validation steps (Section 2). This includes a separate check on the higher-order terms of the distortion polynomial.

- 3.The spatially binned photometric zeropoint values (provided by the ZP Variations Map or ZPVM from photometric calibration) must lie within ±0.15 mag. Furthermore, the source color-term coefficients derived from photometric calibration (as described in Ofek et al. 2012) must lie between the overall observed 1st and 99th percentiles.

- 4.The seeing (inferred from the mode of the point-source FWHM distribution) is

.

. - 5.The 5-σ limiting magnitude, estimated using both theoretical and empirically derived inputs is

mag.

mag. - 6.The number of sources extracted from the image (via SExtractor) is

. This reinforces the previous criterion and ensures the transparency was not too low or image noise not too excessive.

. This reinforces the previous criterion and ensures the transparency was not too low or image noise not too excessive. - 7.The minimum number of input images that must satisfy the above criteria before proceeding with reference image generation is Nmin = 5.

If  , the image limiting magnitudes Rlim are then sorted in descending order (faintest to brightest). Next, co-add limiting magnitudes mclim are predicted cumulatively and incrementally per-image for this list of candidate images. The resulting values of mclim are then compared to a predefined set of six target magnitude limits desired for the final co-add; e.g., for the iPTF R filter, these are defined:

, the image limiting magnitudes Rlim are then sorted in descending order (faintest to brightest). Next, co-add limiting magnitudes mclim are predicted cumulatively and incrementally per-image for this list of candidate images. The resulting values of mclim are then compared to a predefined set of six target magnitude limits desired for the final co-add; e.g., for the iPTF R filter, these are defined:

where  , Rmedlim is the typical (median) 5-σ limiting magnitude of a single R-band exposure (Law et al. 2009), and Nmin = 5. The faintest target limit

, Rmedlim is the typical (median) 5-σ limiting magnitude of a single R-band exposure (Law et al. 2009), and Nmin = 5. The faintest target limit  is then identified as the faintest mtlim that just falls below the co-add limit predicted from the entire image-list:

is then identified as the faintest mtlim that just falls below the co-add limit predicted from the entire image-list:  . The number of images N to co-add is then the smallest possible N whose cumulative mclim comes closest to

. The number of images N to co-add is then the smallest possible N whose cumulative mclim comes closest to  , i.e.,

, i.e.,

where 50 is the maximum number of images allowed at this stage.

The requirement of an upper cutoff in the input image FWHM (3 6; criterion #4 above) is an important consideration since it influences the quality (effective point-source FWHM) of the resulting reference image and image subtractions derived therefrom. It is desirable to generate a reference image whose effective FWHM is smaller than that generally expected in the science (target) images. This ensures the higher S/N reference image is preferentially convolved (smoothed) to match the science image PSF prior to subtraction in PTFIDE. Not only will this minimize the relative fraction of correlated pixel-noise in the difference images (i.e., since noise will be dominated by the science image), it ensures robustness and minimizes the potential for error when using an automatic method to decide on which image to convolve. This is because the decision metrics themselves are inherently noisy and one cannot be confident that the correct image will always be selected. For the interested reader, Huckvale et al. (2014) present an analysis on ways to select the best reference image and convolution direction for optimal image subtraction in the presence of variable seeing. The median FWHM of the iPTF science images is ≈2

6; criterion #4 above) is an important consideration since it influences the quality (effective point-source FWHM) of the resulting reference image and image subtractions derived therefrom. It is desirable to generate a reference image whose effective FWHM is smaller than that generally expected in the science (target) images. This ensures the higher S/N reference image is preferentially convolved (smoothed) to match the science image PSF prior to subtraction in PTFIDE. Not only will this minimize the relative fraction of correlated pixel-noise in the difference images (i.e., since noise will be dominated by the science image), it ensures robustness and minimizes the potential for error when using an automatic method to decide on which image to convolve. This is because the decision metrics themselves are inherently noisy and one cannot be confident that the correct image will always be selected. For the interested reader, Huckvale et al. (2014) present an analysis on ways to select the best reference image and convolution direction for optimal image subtraction in the presence of variable seeing. The median FWHM of the iPTF science images is ≈2 2. Therefore, it is inevitable that some cases will require the science image to be convolved when matching PSFs. This is not detrimental since PTFIDE can automatically select the image to convolve, with some margin for error (see below). The desire to have a lower cutoff for the input image FWHM when constructing reference images is mentioned here as a future improvement, specifically to optimize image subtraction. As mentioned, the requirement of FWHM

2. Therefore, it is inevitable that some cases will require the science image to be convolved when matching PSFs. This is not detrimental since PTFIDE can automatically select the image to convolve, with some margin for error (see below). The desire to have a lower cutoff for the input image FWHM when constructing reference images is mentioned here as a future improvement, specifically to optimize image subtraction. As mentioned, the requirement of FWHM  was driven by data availability (after accounting for all other selection criteria) and a need to generate reference images for a large fraction of the iPTF survey fields in short order.

was driven by data availability (after accounting for all other selection criteria) and a need to generate reference images for a large fraction of the iPTF survey fields in short order.

Before co-addition to create a reference image, the input list of high-quality overlapping CCD-images (for a given field and filter) are astrometrically refined as an ensemble. This is performed in a relative image-to-image sense using SCAMP with inputs provided by SExtractor. Their distortion solutions (in the PV format) are also refined self-consistently. This improves the astrometric solutions of the input images as well as the overall astrometry in final co-adds.

Following astrometric refinement, the images are fed to an in-house developed co-addition tool (mkcoadd.pl) specifically written for iPTF. This software first determines the WCS geometry of the output co-add footprint using WCS metadata from all the input images. The co-add pixel scale is set to the native value determined for the center of the focal plane: 1 01/pixel, and the footprint X, Y dimensions are fixed at 2500 pixels × 4600 pixels throughout. These dimensions can accomodate for slight offsets in the reconstructed image pointing within a field. Retaining the native pixel scale for co-add images ensures they more-or-less remain (marginally) critically sampled in median seeing conditions. A future consideration would be to use half the native pixel scale to take advantage of the natural dithering offered by random offsets in telescope pointing across image epochs. This dithering would benefit input lists that are dominated by undersampled images (i.e., acquired in better than median seeing) so that the effective PSF can be better sampled when all images are combined.

01/pixel, and the footprint X, Y dimensions are fixed at 2500 pixels × 4600 pixels throughout. These dimensions can accomodate for slight offsets in the reconstructed image pointing within a field. Retaining the native pixel scale for co-add images ensures they more-or-less remain (marginally) critically sampled in median seeing conditions. A future consideration would be to use half the native pixel scale to take advantage of the natural dithering offered by random offsets in telescope pointing across image epochs. This dithering would benefit input lists that are dominated by undersampled images (i.e., acquired in better than median seeing) so that the effective PSF can be better sampled when all images are combined.

Bad and saturated pixels are internally set to NaN in each CCD-image using their accompanying masks. This facilitates easier omission and tracking of all bad pixels downstream. Respective image-median levels are then subtracted. This stabilizes (or homogenizes) the images against temporally varying backgrounds before they are combined (see below). These backgrounds are not always astrophysical, for example, there is contamination from scattered moonlight and internal scattering from other bright objects whose line-of-sight may not directly fall on the focal plane. The individual image background levels are stored for later use. Each image is then de-warped (distortion-corrected) and interpolated onto the output co-add grid using its astrometric and distortion solution. This is accomplished using the SWarp software (Bertin et al. 2002). For an image observed at epoch t, the pixel values  at distortion-corrected positions i, j are interpolated and resampled using a 2D Lanczos kernel of window size three:

at distortion-corrected positions i, j are interpolated and resampled using a 2D Lanczos kernel of window size three:

where  and

and  , and the signal at pixel position x, y in the output grid is given by

, and the signal at pixel position x, y in the output grid is given by

This generates a new set of images for epochs t = 1, 2, 3...N that have been corrected for distortion, all sharing the same WCS geometry, i.e., that of the final co-add footprint.

The choice of a Lanczos kernel (Equation (1)), particularly with window size three, is motivated by three reasons. First, it is close to optimal for PSFs that are sampled close to or above the Nyquist rate, i.e., its sinc-like properties can reconstruct well-sampled signals to good accuracy. By "optimal," we mean in the context of conserving information content. Second, its sinc-like nature also ensures that uncorrelated input noise remains close to uncorrelated on output. Third, its relatively compact support minimizes aliasing and the spreading of bad and saturated pixels on output. Given the ≈1'' pixel size, one small downside is that localized ringing can occur when the PSF is severely undersampled, i.e., when the seeing falls below ≈1 6.

6.

Since the epochal images will have been observed at different atmospheric transparencies, their photometric throughput (or effective photon-to-DN gain factors) will be different. Throughput-matching the images to a common photometric gain or zeropoint (ZP) value is therefore necessary before combining them. This can be done in a relative sense (by computing source-flux ratios across images and rescaling pixel values therein) or in an absolute sense using the image-ZP values derived from photometric calibration upstream. We have chosen to use the absolute ZP values to compute the gain-factors. This is accomplished by throughput-matching all images to a common target zero point of ZPc. This value becomes the final co-add (reference image) ZP, where currently, all archived PTF reference images have ZPc = 27 magnitudes. The gain-corrected pixel values in a resampled image at epoch t with specific zero point ZPt are given by

The resampled and throughput-matched epochal images with pixel signals  are then combined using a lightly-trimmed weighted-average. Outlier-trimming is performed on the individual pixel stacks (along the t dimension) by first computing robust measures of the location and spread: respectively the median (p50) and

are then combined using a lightly-trimmed weighted-average. Outlier-trimming is performed on the individual pixel stacks (along the t dimension) by first computing robust measures of the location and spread: respectively the median (p50) and ![$\sigma \simeq 0.5[{p}_{84}-{p}_{16}]$](https://content.cld.iop.org/journals/1538-3873/129/971/014002/revision1/paspaa443cieqn38.gif) , where the px are percentiles. Pixels that satisfy

, where the px are percentiles. Pixels that satisfy  are rejected from their temporal-stack at position x, y prior to combining the remaining pixels using a weighted-average (see below). Our choice of a relatively loose trimming threshold (9σ) is driven by our goal to remove the largest outliers only (e.g., cosmic rays and unmasked satellite trails), therefore preserving as much information as possible.

are rejected from their temporal-stack at position x, y prior to combining the remaining pixels using a weighted-average (see below). Our choice of a relatively loose trimming threshold (9σ) is driven by our goal to remove the largest outliers only (e.g., cosmic rays and unmasked satellite trails), therefore preserving as much information as possible.

The pixels in a stack are weighted using an inverse power of the seeing (FWHMt) in the images they originated from, i.e.,

where FWHM0 is a constant fiducial value currently set to the modal value of 2'' and is unimportant since it cancels following normalization in the final weighted average. α is a parameter that controls the overall importance of the weighting. This weighting is purely motivated by empirical and practical considerations as an attempt to handle the time-dependent seeing in a qualitative sense, i.e., in that relatively more weight is given to images acquired in better seeing. There is no theoretical justification that satisfies some optimality criterion like maximal S/N, however, it is interesting to note that α = 2 corresponds to the case where  , where Np is the effective number of noise pixels12

for a Gaussian-like PSF and

, where Np is the effective number of noise pixels12

for a Gaussian-like PSF and  is the flux-variance that would result from PSF-fit photometry on the image (see also Masci & Fowler 2009). Therefore when α = 2, the weighting is effectively inverse-variance weighting of the images according to the expected point-source flux uncertainties from PSF-fitting. Besides being optimal for PSF-fitting (simultaneously over the entire image stack), and particularly when the input noise is Gaussian, we found through simulation and analysis of on-sky data that α = 2 can lead to significantly distorted PSFs and slight degradations in the co-add pixel S/N. This is due to the undersampled nature of the PSF when the seeing is better than average in iPTF exposures. We found that values of

is the flux-variance that would result from PSF-fit photometry on the image (see also Masci & Fowler 2009). Therefore when α = 2, the weighting is effectively inverse-variance weighting of the images according to the expected point-source flux uncertainties from PSF-fitting. Besides being optimal for PSF-fitting (simultaneously over the entire image stack), and particularly when the input noise is Gaussian, we found through simulation and analysis of on-sky data that α = 2 can lead to significantly distorted PSFs and slight degradations in the co-add pixel S/N. This is due to the undersampled nature of the PSF when the seeing is better than average in iPTF exposures. We found that values of  for the range of seeing encountered (and a forced cutoff of FWHM

for the range of seeing encountered (and a forced cutoff of FWHM  see above) work best. As a compromise, we assumed α = 1 throughout. This choice is similar to that adopted by Jiang et al. (2014) for combining SDSS image data. These authors also included inverse-variance weights in their weighting scheme, with pixel variances computed from the background rms in each input image.

see above) work best. As a compromise, we assumed α = 1 throughout. This choice is similar to that adopted by Jiang et al. (2014) for combining SDSS image data. These authors also included inverse-variance weights in their weighting scheme, with pixel variances computed from the background rms in each input image.

The Nr remaining pixels in a stack following outlier rejection are combined using a weighted average to produce the co-added pixel signal,

where  and wt are given by Equations (3) and (4) respectively. The Bct are the individual image background levels that were initially subtracted from each image (see above) then rescaled using the same throughput-match factors in Equation (3). A median of all these levels is computed and used as a fiducial background for the final co-add. We also generate an image of the uncertainties in the weighted averages S(x, y). For co-add pixel x, y, this can be written:

and wt are given by Equations (3) and (4) respectively. The Bct are the individual image background levels that were initially subtracted from each image (see above) then rescaled using the same throughput-match factors in Equation (3). A median of all these levels is computed and used as a fiducial background for the final co-add. We also generate an image of the uncertainties in the weighted averages S(x, y). For co-add pixel x, y, this can be written:  where

where  and σt is the uncertainty (e.g., a prior) for the input pixel signal at x, y, t. We assume that the noise is spatially and temporally uncorrelated across images. Instead of using explicit priors for σt (e.g., from a pixel-noise model), we approximate σt using an unbiased and unweighted estimate of the population standard-deviation in the stack of

and σt is the uncertainty (e.g., a prior) for the input pixel signal at x, y, t. We assume that the noise is spatially and temporally uncorrelated across images. Instead of using explicit priors for σt (e.g., from a pixel-noise model), we approximate σt using an unbiased and unweighted estimate of the population standard-deviation in the stack of  values. The uncertainty in S(x, y) (Equation (5)) then becomes

values. The uncertainty in S(x, y) (Equation (5)) then becomes

The effective  scaling is implicitly represented by the fractional term involving wt. An image of the pixel depth-of-coverage, Nr(x, y), is also generated.

scaling is implicitly represented by the fractional term involving wt. An image of the pixel depth-of-coverage, Nr(x, y), is also generated.

The astrometric solution in the reference image is validated against the 2MASS PSC using a procedure similar to that described in Section 2. Sources are extracted and measured from the reference image using both aperture (SExtractor) and PSF-fit photometry (DAOPhot). Ancillary products for the PSF-fit catalog include a DS9-region file and estimates of the spatially variable PSF represented in both DAOPhot's look-up-table format and as a grid of FITS-image stamps. QA metrics for the image and catalog products are also generated. The product files are archived and their paths/filenames and associated metrics stored in a relational database.

Each reference image product is uniquely identified according to survey field, CCD, filter, pipeline number, version, and archive status flag. The pipeline number supports variants of the reference image pipeline tailored for different science applications, for example, a specific time range, number of input images, and/or different filtering criteria than the default used to support real-time processing. As mentioned, the reference image library is periodically updated as low-quality or unusable products are identified from analyses of outputs from the real-time pipeline, provided enough good quality images are available (see above).

The reference image and its SExtractor catalog for a given survey field, CCD, and filter are two of the primary products used in PTFIDE (Section 4). As mentioned, these provide an absolute anchor for assigning a photometric ZP to all the new incoming, spatially coincident science images and subtractions derived therefrom. The ZP value in the FITS header of a reference image is the target fiducial value ZPc onto which selected input images were gain-matched prior to co-addition (Equation (3)). The absolute accuracy of ZPc is therefore determined by the accuracy of the input image ZPt values. These were initially derived from photometric calibration in the frame-processing pipeline using the SDSS-DR9 catalog (Ofek et al. 2012; Laher et al. 2014). The input instrumental magnitudes used to perform this calibration are Kron-like aperture measurements from SExtractor, also referred to as mag_auto. At the time of writing, these are the only instrumental magnitudes in iPTF products that can be tied to an absolute photometric system via the image ZPt values. The individual (spatially averaged) image ZPt values are accurate to 2%–4% (absolute rms; Ofek et al. 2012). These could be less accurate on sub-image scales due to possible residual spatial variations in the instrumental response. The ZPt-inherent gain-match errors will propagate into the reference image pixel values following image rescaling (Equation (3)). These errors will only be captured by the empirical uncertainty estimates in Equation (6) (with its implicit  scaling) assuming no systematics in the ZPt derivations upstream. A future goal is to calibrate the ZPt values to better than 1%, preferably using PSF-fit photometry.

scaling) assuming no systematics in the ZPt derivations upstream. A future goal is to calibrate the ZPt values to better than 1%, preferably using PSF-fit photometry.

3.4. Summary of Output Products

PTFIDE output products are files that are generically named: InputImgFilename_type.ext where InputImgFilename is the root filename assigned to the CCD image following pre-calibration upstream (Section 2) and type.ext is a mnemonic for the type of PTFIDE product generated. The extension (ext) can be either fits (for FITS-formatted image), tbl for ASCII table in the standard IPAC format, psf for PSF file in DAOPhot's look-up-table format, reg for DS9 region-overlay file, log for logfile, or txt for other ASCII files.

Table 3 lists the primary PTFIDE products generated per CCD image. By "primary," these represent the products that are later used for real-time transient discovery and/or general archival science applications, for example, light-curve generation using forced-photometry on the difference images. The image, PSF, QA, and log files are copied to long-term storage and their paths/filenames registered in relational database tables. The table (tbl) files contain the extracted transient candidates and associated metrics (Section 4.9.5), one for the positive and another for the negative difference image. The metadata for each transient are later stored in database tables (see Section 5). The metrics in the _diffqa.txt QA files (Section 4.8.2) are stored in a separate database table. The generation of positive (sci—ref) and negative (ref—sci) difference images may seem somewhat redundant since one is simply the negative of the other. The purpose of having a negative difference is to enable detection of transients that dissapear below the reference image baseline level, for example, variable stars that are observed in their "low" state relative to their time-averaged (reference image) flux. Our source detection software is designed to detect positive signals only and therefore it is necessary to negate the positive difference image and extract any new transients (or excursions in variable flux) that happened to be below the reference level at that epoch.

Table 3. Primary Outputs from PTFIDE

| Output file suffixa | Format | Descriptionb |

|---|---|---|

| _pmtchscimref.fits | FITS imagec | Final PSF-matched "science minus reference" difference image |

| _pmtchscimrefpsffit.tbl | IPAC table | Table of extracted transient candidates with PSF-fit and aperture photometry, and source metrics corresponding to the _pmtchscimref.fits difference image |

| _pmtchscimrefpsffit.reg | ASCII | DS9 region/source-overlay file for all transient candidates in _pmtchscimrefpsffit.tbl |

| _pmtchrefmsci.fits | FITS imagec | Final PSF-matched "reference minus science" difference image |

| _pmtchrefmscipsffit.tbl | IPAC table | Table of extracted transient candidates with PSF-fit and aperture photometry, and source metrics corresponding to the _pmtchrefmsci.fits difference image |

| _pmtchrefmscipsffit.reg | ASCII | DS9 region/source-overlay file for all transient candidates in _pmtchrefmscipsffit.tbl |

| _pmtchdiffunc.fits | FITS imagec | Image storing 1-σ pixel uncertainties corresponding to the _pmtchscimref.fits and _pmtchrefmsci.fits difference images |

| _pmtchkerncube.fits | FITS cube | Image stamps of spatially dependent PSF-matching convolution kernels with metadata in header. Each plane of cube stores kernel image for a specific partition in input science image |