Abstract

DNA Nanotechnology is being applied to multiple research fields. The functionality of DNA nanostructures is significantly enhanced by decorating them with nanoscale moieties including: proteins, metallic nanoparticles, quantum dots, and chromophores. Decoration is a complex process and developing protocols for reliable attachment routinely requires extensive trial and error. Additionally, the granular nature of scientific communication makes it difficult to discern general principles in DNA nanostructure decoration. This tutorial is a guidebook designed to minimize experimental bottlenecks and avoid dead-ends for those wishing to decorate DNA nanostructures. We supplement the reference material on available technical tools and procedures with a conceptual framework required to make efficient and effective decisions in the lab. Together these resources should aid both the novice and the expert to develop and execute a rapid, reliable decoration protocols.

Export citation and abstract BibTeX RIS

Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Glossary

| DNA nanostructure | A two-dimensional (2D) or three-dimensional (3D) structure fabricated from DNA using sequence recognition and assembly. This includes tiles, origami, bricks, etc. |

| Decoration | Placement of a functional element onto a DNA nanostructure at a specific location |

| Functional element | A nanoscale object that performs a physical, chemical, biological, and/or informational function, e.g. a protein, peptide, metal nanoparticle, quantum dot, chromophore etc. |

| Addressability | The ability to site-specifically decorate DNA labeled elements at locations on a nanostructure |

| Process steps | Separate actions, or unit processes, that are ultimately used in combination to complete the fabrication of a structure |

| Decoration phases | Categories of process steps including design, assembly, purification, and verification |

| Decoration workflow | A complete set of process steps designed to create the desired product |

| Decoration precision | The standard deviation about the mean functional element position relative to the DNA nanostructure |

| Decoration accuracy | The agreement between a designed inter-element distance and the measured mean separation |

| DNA origami | A structure self-assembled from viral-derived scaffolds (approximately 7000–8000 nt) and synthetic 'staple' strands (30–60 nt) |

| DNA oligomer | Single-stranded DNA (ssDNA) with a short, defined, sequence typically shorter than 1000 nt |

| Domain | A defined sequence of nucleotides that comprises part, or all, of a DNA strand |

| Sticky end | Unpaired single-stranded DNA (ssDNA) connected to either a functional element or nanostructure, intended for later addressability, often a single domain on a larger strand |

| Linker modification | Chemical moiety on a DNA oligomer that will bind to a functional element and/or another chemical modification |

| Spacer sequence | DNA nucleotides between the linker moiety and functional element or DNA nanostructure included to increase the distance, or flexibility, of the connection between them |

| Structural yield | Fraction of successfully assembled nanostructure relative to the reagent DNA or relative to the population of characterized products. For origami, the reagent DNA can be either the scaffold or staple strands |

| Migratory yield | Structural yield evaluated as the fraction of product with the expected mobility |

| Imaging yield | Structural yield evaluated as the fraction of product visibly recognizable as being of the 'correct' shape |

| Oligomer inclusion yield | Structural yield evaluated as the fraction of oligomers included in the structure, or included at the expected position within it |

| Site-Occupancy yield | Fraction of sites on a structure which successfully bind a functional element |

| Functional yield | Fraction of structures which perform the desired function |

| Practitioner | Individual who performs the process steps and conducts the project |

| Note | Notes will highlight considerations or pitfalls |

1. DNA nanostructure decoration

This tutorial assumes that the reader is familiar with the basics of DNA nanofabrication [1] and has read our previous tutorial on DNA origami design [2], or other introductory documents [3–5]. While this guide may be applied to the decoration of any DNA nanostructure, we focus on DNA origami due to its ubiquity.

The DNA nanofabrication toolkit provides many options for decorating nucleic acid nanostructures with functional elements at precise, predetermined locations. This is arguably the most important capability it brings to bottom up self-assembly for two reasons: First, DNA bioconjugation methods are sufficiently mature for even novices to readily label a wide variety of functional nanoparticles, proteins, and macromolecules with DNA. Second, since the sequence of the DNA label can be defined, the labeled object may be targeted to bind to any complementary sequence location on a DNA nanostructure, dramatically enhancing the functionality of the 2D and 3D superstructure. A practitioner may decorate a DNA nanostructure with virtually any nanoscale element at almost any spacing or orientation. This flexibility and control are especially valuable for applications where precise spacing is crucial such as interfacing with biochemical systems [6], controlling plasmonic interactions [7, 8], or complex sensing triggered by multiple conformational changes [9, 10].

We approach the topic of DNA decoration holistically, beginning in section 1 with broad definitions of decoration and an explanation of our rationale for dividing the decoration process into phases. In section 2 we articulate the importance of a holistic approach, i.e. systems engineering. Finally, we elucidate specific techniques for each decoration phase in sections 3 through 6.

Note: This document is meant to serve as a tutorial and as a reference material. By intent, it covers nuances left uncovered in research reports and reviews, but communicates neither the novelty of the former, nor the in-depth attribution of accomplishments of the latter.

As for any reference, different details and nuances will be useful to different practitioners. We encourage those readers with specific questions or interests to navigate directly to the relevant section.

Section 1: decoration and its applications

Section 2: the importance of systems engineering to decoration

Section 3: the design of decoration including addressing, accuracy, and precision

Section 4: decorated nanostructure formation, from basic thermodynamics to common protocols

Section 5: purification of decorated nanostructures and challenges thereof

Section 6: verification of decorated nanostructures and uncertainty thereof

1.1. An example decoration workflow

As this tutorial's scope encompasses the breadth of conceptual tools and experimental techniques required to plan a DNA nanostructure decoration workflow it is infeasible to provide protocols for all, or even a portion of the techniques we discuss.

However, it is worth briefly describing how a decoration workflow might proceed in lab to provide a sense of the time investment required and to explain why we advocate for a holistic approach in planning.

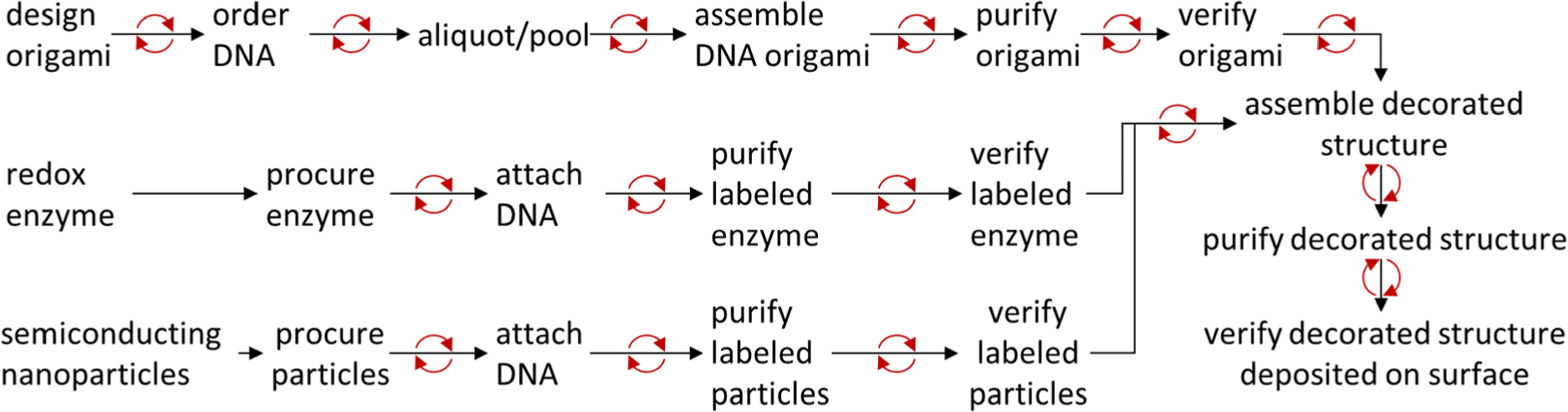

Figure 1 illustrates the steps required for the following counter-factual example (example inspired by [11]). Assume the existence of some redox active enzyme that can be labeled with DNA without impairing function. Semiconducting particles with appropriate band gaps can, when in proximity to the enzymes, optically pump them to provide energy. To create the enzyme's product from its substrate and light, one might desire a surface with densely packed, but regularly spaced enzyme-nanoparticle clusters, over which one could flow the enzyme's substrate during exposure to light.

Figure 1. Example workflow, where red circular arrows indicate steps at which iterative troubleshooting may be necessary.

Download figure:

Standard image High-resolution imageDesigning of an origami that can cluster the enzyme and nanoparticles, choosing the labeling chemistries, and making plans for purification and verification will typically take from a month to a few months. Procuring the DNA, enzymes and nanoparticles often take slightly less than a month.

The DNA strands are pooled together into aliquots to accommodate variations of a structure with different decoration spacings or locations by grouping the strands based on which shared structure variants they will create. This usually takes a few days.

After this step a decoration project is a hierarchy of assembly steps, purification steps, and verification steps, each taking between a few hours and a few days. For example, to assemble origami one would mix buffer, salt, viral scaffold strand, structural staple pools, and the modified strands which address the decoration, and perform an overnight thermal anneal. The product origami would be purified from the reagents, e.g. by filter centrifugation, and the assembly would be verified, e.g. by atomic force microscopy. Analogous steps would be performed to label the enzymes and nanoparticles.

The labeled nanoparticles, enzymes, and origami would then be combined, annealed, purified, and deposited on a surface for verification and performance testing.

If every step works as intended, this could take as little as two to three months in total. Unfortunately, troubleshooting is unavoidable, and one only rarely knows why steps fail.

To isolate a failure mode, one works iteratively backwards from the failure point. For example, if a decorated structure fails to assemble one might confirm that the particles or enzymes really are labeled with the correct strand. It is not unusual to work as far back as re-pooling DNA strands or re-evaluating an entire nanostructure design.

Troubleshooting time can have a factorial dependence on project complexity and represents the bulk of the costs for any decoration project. Optimizing troubleshooting through careful holistic planning is crucial.

1.2. Decoration process

Given the broad range of possibilities (type of nanofabrication, functional element type, spacing, etc), there is considerable variation in decoration workflows. We divide these workflows into phases and the phases into process steps. Process steps include designing a DNA nanostructure, fabricating it, purifying it from its constituent oligomers, etc. The number of steps increases with each additional functional element.

We define phases of related steps as: design, assembly, purification, and verification. In design, the nanostructure is drafted and the functional elements, linker chemistries, and sticky end DNA sequences are chosen. In assembly the reagents are combined and annealed. In purification, the desired structure or decorated structure is separated from reagents and defective products. In verification, the product is characterized and evaluated to determine whether it is within tolerance of the initial design. Notably, in many projects assembly and purification are performed iteratively, while in others verification occurs in-line with purification.

A workflow is a full collection of steps, through all phases, which can result in viable product. We will discuss workflows in more detail in section 1.2 : Systems Engineering.

Note: The steps of decoration and the phases we divide them into must often be duplicated for each unique species of functional element attached to a nanostructure. In practice, some of these steps for separate functional elements may be combined or repeated, as choices made for a new decoration workflow are rarely independent.

The fabrication of a DNA nanostructure is relatively straightforward thanks to design tool advances in recent years [12–17]. However, the techniques for decorating DNA nanostructures are not yet mature. There are several major challenges which can hinder implementation. For example, there are limited purification options for decorated structures given their size [18]. The typical origami nanostructure is 4.5 × 106 g·mol−1 (4.5 MDa), which is too large for most traditional analytical chemistry-based purification but too small for micro- or macro-scale solutions. Yield for DNA nanostructure is difficult to define or measure, making feasibility calculations for new applications difficult. Finally, our understanding of the lifetime of the final product is limited, especially in the context of functionality. These challenges are made more difficult by limitations in the accuracy and throughput of structure metrology and have, to-date, restricted the degree of complexity of DNA nanofabrication and decoration, which in turn limits its accessible application space.

It is therefore best to begin a decoration project with a careful evaluation of the step-by-step strategy one intends to adopt. In this section we describe the key challenges and possible solutions in detail.

1.2.1. Key challenges: purification

For both decorated and undecorated nanostructures, the need for purification stems from constituent multivalent binding interactions. All DNA nanofabrication methods involve oligomer strands with multiple sequence domains, for example origami staples include domains binding different scaffold domains, while many nanoparticle (NP) or quantum dot (QD) systems are covered in multiple copies of a sticky end oligomer. In any system with multivalent binding, it is thermodynamically possible to create many defective products in which the binding positions are satisfied without reaching the designed state, i.e. multiple bound particles where there should be only one.

In the initial fabrication of DNA origami prior to any decoration, purification implies removal of excess unbound DNA oligomers. This is needed for successful downstream decoration as without it, the excess oligomers will compete with the sticky ends on the structure for their complement on the functional element, significantly reducing yield. Luckily, the substantial mass difference between DNA oligomers and assembled structures allow for convenient purification. However, each subsequent decoration step results in a smaller relative change in mass, e.g. that of a structure and functional element versus an un-decorated nanostructure. Additionally, as functional elements are added, purification technique choices are further constrained by compatibility with previously added elements.

In section 3.4 we will discuss commonly used functional elements as well as their specific advantages and disadvantages in the contexts of purification, assembly, and verification. In section 5 we provide a more in-depth technical description of common purification techniques, their strengths and limitations, and common problems in their implementation.

1.2.2. Key challenges: yield

A practitioner should be warned that there is no unambiguous definition of yield, whether of decorated or undecorated DNA nanostructures. In this work, we choose to divide measurements of yield into structural yield and functional yield. As outlined in the glossary, the structural yield is the fraction of structures obtained from raw material after assembly or purification. The functional yield is the fraction of those structures performing their function correctly. Obtaining the former is necessary but not sufficient to determine the latter. Functional yield is application specific, non-trivial to measure, and difficult to predict. Assumptions made regarding anticipated functional yield, how the practitioner intends to measure that yield, and how much yield is necessary for application must therefore be evaluated critically and conservatively. To further complicate the matter, it may also be necessary to determine the yield of particular defective structures. For example, a drug delivery vehicle lacking a targeting moiety could lead to harmful side effects.

A typical approach to obtain functional yield is to normalize some ensemble measurement of function by a measured structural yield. We organize evaluations of structural yield into classes as follows:

- Migratory yield: fraction of product with the expected mobility Techniques: gel electrophoresis, gradient centrifugation, size exclusion chromatography

- Imaging yield: fraction of product visibly recognizable as being of the 'correct' shape Techniques: atomic force microscopy, electron microscopy

- Oligomer inclusion yield: fraction of oligomers included in the structure, or in their expected position Techniques: super-resolution microscopy, radio- or fluorescently labeled oligomers via gel electrophoresis

There is currently no consensus for whether functional yield should be reported relative to the reagents consumed, the initial assembly structural yield, or the post-purification structural yield. Practitioners are therefore well served by paying careful attention to how functional yield is normalized as well as the class of structural yield used.

As structural yield can be measured at multiple points in a workflow, between which loss of product can occur, care should be taken when comparing reported functional yields.

Each structural yield class has separate, specific benefits and drawbacks. Migratory yield is the simplest to measure, but the most poorly correlated with function, and the least precise of the three. Imaging yield provides more detailed information, but is slow, and is subject to significant user uncertainty in evaluating a 'correct' structure. Oligomer inclusion yield can provide exquisite detail but requires assumptions to link the measured signal to the presence or absence of specific staples. This is discussed in more detail in section 6.5, but a good example of how these assumptions can fail is how a nanostructure which is only 10% folded would not have sufficient reference positions to measure oligomer inclusion by DNA PAINT.

The structural yield is influenced by the design, assembly, and purification phases. The anneal rate and strand stoichiometry can, for some designs, significantly improve structural yield, especially when the assembly may be less favorable thermodynamically [19–21]. Similarly, whether one or many repeated iterations of the purification phase are necessary, their <100% efficacy will reduce the structural yield.

If a functional element is destroyed in assembly, purification, or verification it cannot perform its function. Maximizing functional yield therefore constrains workflows via the compatibilities of the functional elements. Commonly, this limits the choice of temperature range, salt concentration, etc. For particularly sensitive systems, conditions that increase structural yield may reduce functional yield.

One example of the complexities inherent to optimizing yield is the standard use of excess, typically of 10× to 100×, of reagent staples, functional elements, or both to drive the assembly. Unless the excess starting material is recovered, it is wasted, reducing the overall yield relative to the amount of reactant molecules used. This can quickly result in monetarily infeasible experiments if the excess starting material includes an expensive chemically modified oligomer.

Finally, we will note that site-occupancy yield—which is the fraction of functional element sites which successfully bind a functional element—is a related but orthogonal concept to structural yield. It is most closely related to imaging yield but is distinct in that it is readily quantifiable with significantly less uncertainty associated with analyst judgement.

1.2.3. Key challenges: lifetime

Best practices for optimizing the storage lifetime of DNA nanostructures are not yet well studied and we believe future tutorials on decorated DNA nanostructures will have to cover loss of functionality over time. Studies exploring this concern mostly do so in the context of lab-scale fabrication, using nuclease digestion [22–25] as a proxy for stability, although there are also comprehensive studies of double-stranded DNA, dsDNA, stability [26] which have limited cross applicability to DNA nanostructure.

A structure's lifetime can be affected by degradation of the functional element or of the structure itself. There are many mechanisms which can degrade DNA, ranging from oxidation to de-hybridization, aggregation, and enzymatic digestion.

The most common lifetime preservation protocol is storage at refrigerated or freezing temperatures in the presence of Ethylenediaminetetraacetic acid, EDTA, which chelates ions that might otherwise activate nucleases. However, freeze/thaw cycles degrade both DNA oligomers and nanostructures. Typical lab-scale administrative controls for freeze/thaw damage [27] include pooling small separate aliquots of stock DNA and modular experiment design to minimize the number of times oligomers must be thawed. Otherwise, current storage approaches come directly from those used for the functional element in question, such as storage of dye labelled structures in dark conditions, or annealed structures at temperatures well below their Tm, at which they de-hybridize.

1.3. Applications of DNA nanostructure decoration

The DNA nanostructure decoration workflow is driven by application and by the functional elements needed for those applications. To date, the DNA nanotechnology community has dedicated more effort to developing new tools rather than cataloging those tools into a well-organized toolbox. As comprehensive reviews of specific strategies exist [3, 28, 29], the following examples are meant to be representative and instructive rather than exhaustive.

1.3.1. Nano-photonic systems

Metal NPs were one of the first functional elements to be labeled with DNA molecules [30]. The nanometer scale of these objects provides attractive optical properties, and many are commercially available. Interactions between metal NPs placed precisely into a predetermined 2D or 3D pattern can further modify those properties. Due to their suitable functionalization chemistries, gold and silver NPs are the most common for photonic applications [31]. DNA decoration allows fine tuning of chiral and plasmonic properties and has led to the creation of nano-antennas, waveguides, plasmonic and thermal sensors etc [32]. DNA nanostructures have also been used to position nucleation sites for metal deposition where the structure acts as a molding tool for nanoparticle growth [33].

As these systems typically use very dense functional elements, i.e. metal nanoparticles, they are often paired with purification methods which operate on changes in density such as rate zonal centrifugation and density sensitive verification methods, e.g. electron microscopy.

1.3.2. Stand-alone biomimetic systems and theranostics

Therapeutic and biosensing systems are popular applications for decorated DNA nanostructures, and include drug delivery, biosensors, cancer therapy, bioimaging, etc [34–36]. These applications take advantage of DNA's inherent biocompatibility and use decoration to impart specific functions [37, 38]. Whether the nanostructure is targeted to specific cells, or is designed to avoid uptake, it must have appropriate targeting or stealth elements in addition to its active ingredients, for example, a conformational change which releases a payload, often linked to the targeting functional element [36]. It is typical to find at least two levels of functionality in these systems.

Layers of functionality, particularly those which work together like the conformational change and targeting elements, significantly increase both the importance and difficulty of optimizing the decoration workflow [39]. The optimization is further constrained by function in a biological environment, which is physiological temperature (37 °C), physiological salt concentration (on the order of 1 mmol·l−1 Mg [40]), and exposure to sources of enzymatic and oxidative degradation. In addition, it is likely regulatory agencies will expect standardized quantification of purity and yield, which presents a verification challenge.

1.3.3. Interfacial systems

We define interfacial systems broadly and include systems where the nanostructure is interacting with elements much larger than itself, like lipid vesicles, electrodes, or microfluidics. While there are limited similarities between DNA nanostructures interfaced with lithographically patterned sensors [41], lipid membranes [42], colloidal systems [43], complex enzymatic cascades [44], ion channels, common hurdles include chemical compatibility and the need for simultaneous characterization at multiple length scales. Multidisciplinary expertise is essential to surmounting these challenges.

Interfacing DNA nanostructures with semiconductor devices is difficult because of the mutual incompatibility between their processing conditions. The high temperatures, reactive plasmas, and aggressive chemistries used in semiconductor manufacturing destroy nucleic acids, while the aqueous solutions of mobile ions needed for DNA nanostructure fabrication can compromise semiconductor systems. DNA nanostructures have typically either been used as sacrificial patterning materials [45] or been applied after the fabrication of a device is complete [41].

2. Systems engineering

As the complexity of decorated systems increases, such as a multicomponent mRNA nanofactory [46] containing multiple enzymes and a tethered substrate working in concert, it becomes more difficult for a single practitioner to balance all possible workflow choices. However, even when a project is sufficiently simple to have a single practitioner, the workflow optimization space is vast. For this, we turn to systems engineering for insight.

Most practitioners reading this document will likely be intuitively familiar with the systems engineering approach. Time and money are finite: the purpose of systems engineering is to avoid mistakes associated with workflow complexity that can consume these precious resources by proceeding methodically through the phases of problem specification, design, development, production, application, and validation.

Note: systematically reviewing the relevant constraints on decoration choices, and how each choice will constrain others, can be the difference between a rapid, successful implementation and a prolonged residence in development hell.

There is rarely a simple answer to tradeoff questions for any complex project, and ones involving decoration are no exception. However, even the small step of writing out one's priorities to guide effort tradeoff decisions can improve consistency and avoid costly mistakes.

2.1. DNA nanostructure decoration workflow

The defining systems engineering feature of a decoration project workflow is its interconnectedness. Any choice within a decoration phase will constrain other choices both in that phase and in others. As there are a wide array of such choices for each phase, the optimization space can become dizzyingly large. We advise the reader to consider the system holistically, and to take an iterative approach, considering first the constraints from the application, then the branching choices starting with those which most constrain the others.

Evaluating the whole system and the constraints that propagate between choices in advance allows the practitioner to cluster sets of compatible choices into feasible workflows. This facilitates comparison between them, resulting in more informed choices. To cluster choices in decoration steps, it is helpful to visually represent the phases of decoration and their relationship to an application, shown in figure 2. Figure 3 gives more specific examples of how choices in each phase can constrain choices in neighboring phases.

Figure 2. The decoration phases (white) are constrained primarily by functional requirements (red) of the application, and secondarily by choices made in other phases (orange).

Download figure:

Standard image High-resolution imageFigure 3. DNA decoration workflow, consisting of decoration phases (white) constrained primarily by functional requirements (red), and secondarily by choices made in other phases (orange).

Download figure:

Standard image High-resolution imageTo cluster steps into potentially feasible workflows, we begin with the chosen application to identify the primary constraints on each phase of decoration. Starting with the most restricted phase, we then identify feasible process step choices. For each choice, we then identify the constraints it might impose on the choices in other phases. These are then separately propagated into the next most constrained phase. This can be imagined as following potential paths in a Galton box [47] or timeline splitting in a science fiction novel. A branch of choices is discarded if it cannot create a feasible workflow—i.e. it cannot be propagated by feasible steps all the way from the most constrained phase to the least constrained phase.

This process is less daunting than it sounds, as many choices are linked by physical properties that play an obvious role in multiple phases. For example, functional elements with a high density provide mass contrast that affects both purification and verification techniques. Similarly, many choices which complicate design also complicate assembly conditions, though they may simplify verification or purification.

For example, a choice that increases mass contrast between the desired structure and the raw material will make both purification (via density gradient centrifugation) and verification, (via electron microscopy) easier. Purification (via density gradient centrifugation) would also be easier for multiple small structures, each with <5 functional elements, than it would for a single large structure with >5 elements, however, a structure built from 5 smaller structures would complicate both the design and assembly phases. As one propagates choices and constraints on each step, these couplings will result in sets of compatible interdependent choices.

Note: without a holistic approach, it is surprisingly easy to 'paint oneself into a corner.'

If one makes choices as they occur in-lab, incompatibilities at the final purification or verification could waste months of effort. While a holistic approach cannot guarantee single iteration cycles, it can minimize lost effort.

2. A decoration case study

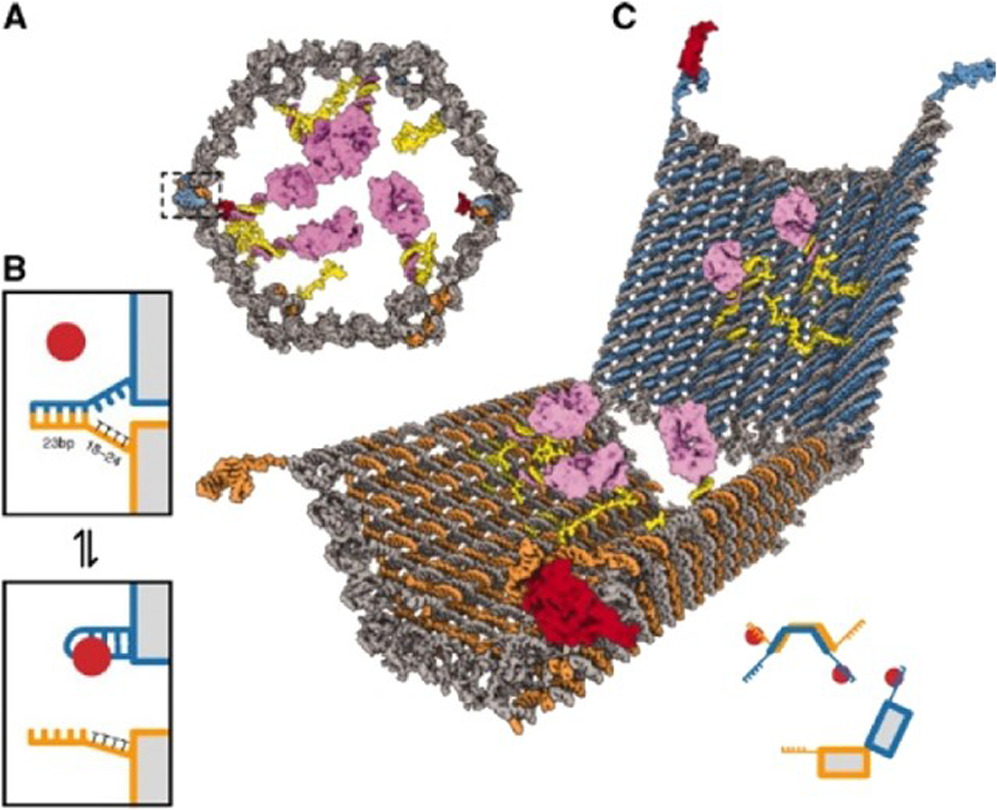

Figure 4 is recreated from the work of Douglas et al and is an archetypal example of DNA origami decoration. At first glance, it may appear to be straightforward as the system comprises an open barrel origami, two aptamer lock motifs, and a choice of two types of payload. However, these features posed interesting system design choices that required modification to existing protocols or development of new ones.

Figure 4. (a), (c)—opened and closed 3D models of the barrel structure, (b)—the aptamer-based lock. Reproduced from Douglas et al [36] with permission from AAAS.

Download figure:

Standard image High-resolution imageIn particular, including the sensitive aptamer-based lock required accommodations in the design, assembly, and verification phases.

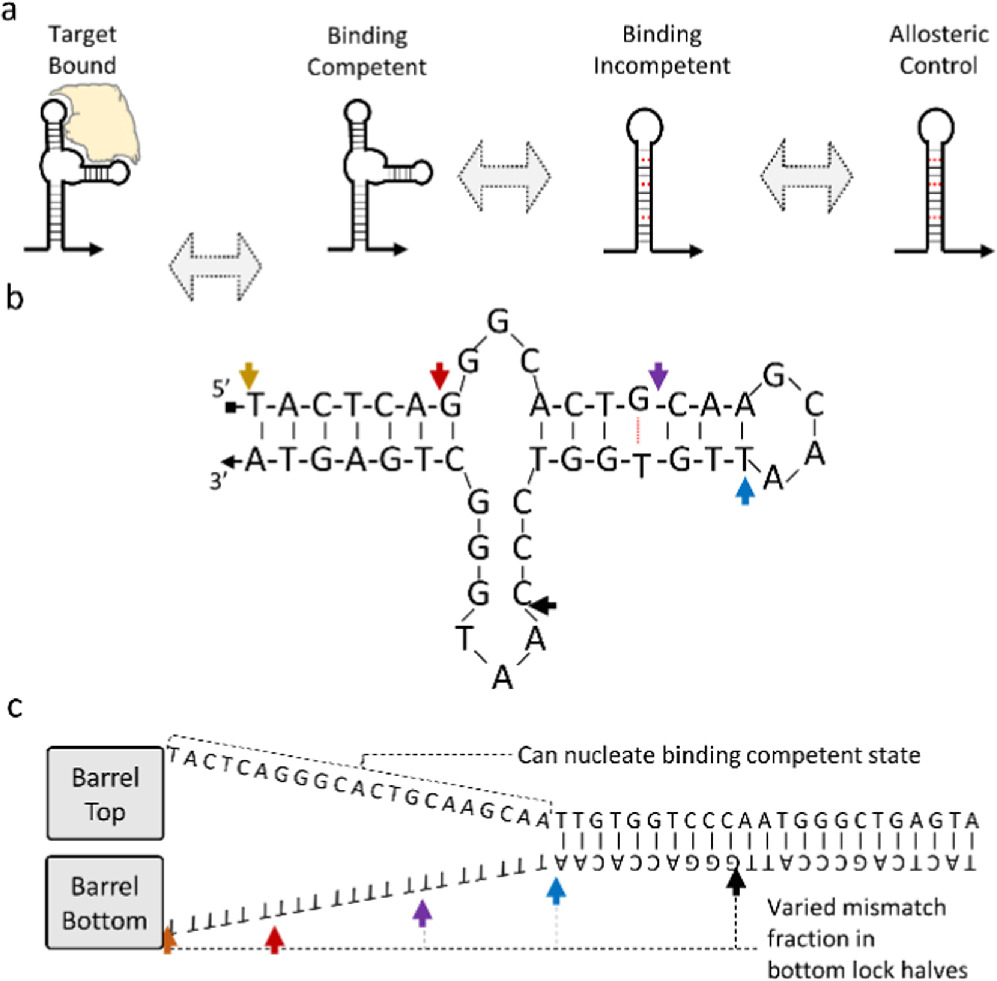

As shown in figure 5(a), aptamers are short sequences of DNA that form some secondary structure which positions the charges and hydrophobic regions of the nucleotides to bind some non-DNA target molecule. By forcing some of an aptamers' ssDNA bases to engage in hybridization that competes with the binding state, one can create two metastable states whose equilibrium is controlled via analyte concentration and Le Chatelier's principle—where increasing reagent concentration will push a reaction to create more product in response.

Figure 5. (a)—Schematic of how an aptamer sequence might be modified to sample binding competent and binding incompetent states, (b)—the aptamer originally reported by Green et al [48] (c)—competing dsDNA state, where the colored arrows in (b) and (c) represent the five different lock designs with varying levels of mis-matched polyT.

Download figure:

Standard image High-resolution imageAn aptamer can lock or move a DNA nanostructure. figure 5(b) shows the sequence of the aptamer used by Douglas et al and adapted from Green et al [48]. The arrows in figure 5(b) correspond to the five different versions of their lock, with the competing state design shown in figure 5(c). Each of the five lock designs varied the fraction of the aptamer sequence capable of nucleating the binding competent state.

This design, and most aptamer lock designs, are constrained in their energetics. The free energy of the dsDNA hybridization in the competing state must be more negative than the binding-competent state but have a higher free energy than the target bound state. Otherwise, the system would not be metastable, and would always remain locked or unlocked.

Douglas et. al manually explored the energetics to find a metastable state and avoid designs which would be fused closed and those which would spontaneously open. In this case, the aptamer locks, which could pop open, were also too weak to form the initial closed state in high yield.

This presents an informative systems engineering challenge. Douglas et al chose to address this problem with a threefold approach: first, by introducing guide strands to hold the structure closed at assembly; second, by removing the 'walls' of the barrel to allow payload loading without actuating the locks, and third, by developing a custom verification assay to measure individual opening and closing events.

These choices further altered the workflow, as Douglas et al introduced steps to remove the guide strands after the payload loading, and as they performed modeling estimates to assess whether the payload would have sufficient time to diffuse through the open barrel walls.

These workflow choices are why we chose this work as a case study. It emphasizes that the best workflow choice is the one which will work in the practitioners' own lab. Douglas et al's custom microbead assay for counting single barrel opening events, their use of live cells and flow cytometry, their choice of Fab' antibody fragment labeling chemistry, and their selection of purification for labeled AuNPs and Fab' fragments all point to the utilization of existing strengths in expertise and equipment.

One could imagine several counterfactual workflows which may, or may not, have succeeded.

- Design of a lock system reinforced with many more locks in parallel, requiring additional design work, and more intense verification to quantify the lock behavior in each design. It also would have increased the concentration of platelet-derived growth factor required to open the barrel.

- Design of a lock system using a stronger aptamer binding the same target, or a similar cell-surface protein target. This assumes one exists or assumes the resources could be dedicated to find one.

- Design of the nanostructure to act as a weaker entropic spring, or one with a neutral state closer to that of the locked barrel. This would reduce the energy required to hold the barrel closed but would likely reduce opening efficacy.

- Purification via some newly developed technique which separates the open and closed barrels. This would reduce the need for the guide strands and accommodate slightly weaker locks. However, even if possible, this choice would likely be dramatically less efficient than the guide strand approach.

Ultimately, any serious decoration project forces the practitioner to choose between a variety of such potential workflows. Navigating this space effectively is often underemphasized in the scientific literature, likely because including discarded workflow choices would be a tedious inclusion into supplementary documents.

One could imagine the same project utilizing very different workflow choices under different conditions. A collaboration with less flow cytometry expertise but more experimental and theoretical thermodynamics might have forgone a custom single barrel opening event assay for in-depth modeling and ensemble measurements. Similarly, a collaboration with significant expertise in synthesizing and testing libraries of DNA sequences for SELEX identification of aptamers might have developed a brute force approach to lock optimization. Ultimately, these counterfactual cases would have provided the scientific community with slightly different proofs-of-concept and would have had different probabilities of success.

The utility of thinking of project workflows in terms of phases, and of phases as groups of individual steps, is that it facilitates finding choices most aligned with the available resources and expertise.

2.3. DNA decoration decision making

Identifying and optimizing workflows, rather than individual phase-to-phase or step-to-step choices, allows prioritization for the success of the entire project. For example, both questions below could be asked of the same system.

'Should I perform (5+) multi-element decorations in a single pot anneal with a single purification, or should I divide my design into multiple single-element sub-structures with multiple purifications and combine them in an additional assembly step?'

And

'Should I pick the workflow that requires significant effort in developing purification, or the one requires significant effort in optimizing design and assembly conditions?'

Abstracting the former question into the latter enables evaluation based on available equipment and expertise. We liken this to balancing the weight of effort across the project, shown in figure 6, where the colored groups of phases represent possible effort tradeoffs. While it can take years of experience to develop an intuitive feel for how the weight of a project workflow is balanced, explicitly articulated planning is a more practical road to success.

Figure 6. Relationships in figures 1 and 2, emphasizing common tradeoffs in time-spent between tasks (white) as constrained by the functional requirements (red), where the diamond in the tradeoffs column is colored to emphasize which tasks are being balanced. The tradeoffs presented here parallel the example discussed above.

Download figure:

Standard image High-resolution imageEach of the following sections of this tutorial will provide a primer to an individual decoration phase describing the possible steps in that phase and what the constraints on those steps imply. The assembly section also includes the thermodynamics of sticky end decoration, and the verification section primer includes a short discussion of uncertainty and potential sources thereof.

3. Design

The first step of any decoration workflow is nanostructure design. The primary feature for this design process, and cornerstone of DNA nanostructure decoration, is addressability, i.e. the use of defined DNA sequences to place labeled elements at precise, predetermined locations on the structure. Locations may be singular, for example on an origami, or arrayed, in a tiling system with a lattice of identical locations. Tiling of non-identical units can be used to produce many unique locations, though at the cost of additional design complexity [49]. We note that this approach is limited: a system comprising thousands of oligomers folding into a single structure is likely to have sequences which, while technically unique, lack sufficient sequence orthogonality. The crosstalk between these strands will either reduce the effective concentration of the strands, potentially causing strand vacancies, or cause unintended strands to hybridize to incorrect parts of the structure.

This section will discuss designing an addressable position and a complementary functional element. Design choices include style of addressability, structure location, number of connections, element type, and element/oligomer connection chemistry. The related topic of thermodynamics of decoration will be addressed in the assembly section 4.1.

The spacing between functional elements is often critical to structure function. However, the sensitivity of function to spacing depends on the physical phenomenona at play and can vary dramatically. For example, distance variability will play a different role for a structure using Förster resonance energy transfer, FRET, than for one organizing ligands to bind to separate pockets on a biomolecule.

It is important to consider the accuracy and precision of programmed inter-element spacings. We define decoration accuracy as the agreement between a designed inter-element distance and the measured mean separation in an ensemble of nanostructures. The decoration precision would then be the standard deviation about this mean.

While these definitions are typical, they convolute structural fluctuations and inter-structure variability. Structural fluctuations vary the inter-element distance on the same structure over time. Inter-structure variability represents the distribution of mean inter-element distances between individual structures in a sample. To our knowledge few studies have deconvoluted these sources of variability, likely due to the difficulty of measuring inter-element distances over time for a statistically relevant number of individual structures. Recent modeling tools and metrology for dynamics structures appear promising approaches to adapt to this purpose [50].

It is not clear what the best achievable decoration precision might be, nor are there clear protocols on how to achieve it. However, recent studies can provide a general expectation for precision, shown in table 1. As they are also typically ensemble measurements, they could not capture fluctuations in inter-element distance over time or variations due to sample polydispersity. While the measurements are sufficiently different to make comparison difficult, they imply that interparticle spacing precision on the order of a nanometer is readily achievable.

Table 1. Example measurements of decoration precision in the literature. The quoted precisions are based on interparticle spacing measurements, not based on some absolute coordinate system, or relative to the nanostructure itself.

| Measured decoration precision | Method | References |

|---|---|---|

| ≈ 1 nm | Single interparticle spacing, via fluorescence lifetime | [7] |

| ≈ 3–6 nm | Single inter-particle spacing via AFM | [51] |

| <2 nm | Pitch measurement via AFM | [52] |

| ≈ 1–2 nm | Pairwise interparticle spacing via XRD | [53] |

Given the state of the art, we will discuss possible sources of inaccuracy and imprecision generally before we consider design in more detail.

3.1. Decoration placement

The most common tool for creating addressable locations on a nanostructure is the use of sticky ends, which are additional lengths of unpaired single-stranded DNA, ssDNA, which exit a structure. The functional element is labeled with ssDNA of complementary sequence to the sticky end on the structure. A key feature of sticky ends is that they do not benefit from the natural stoichiometric controls and cooperativity typical of origami - the target strand is not guaranteed to strand displace a partially complementary sequence incorrectly bound to the sticky end.

Best practice for sticky end driven decoration accounts for incorrect binding in two ways. First, the labeled element is only added after an initial purification to remove excess copies of the sticky end strand which could prevent binding. Second, both the sticky end strand on the nanostructure and the sticky end strand attached to the functional element are purified, typically via polyacrylamide gel electrophoresis, PAGE, to remove off-target sequences. Other approaches include using a high number of duplicate sticky ends on both structure and particle to ensure binding even if several positions do not bind appropriately. An alternative approach is direct labeling, in which the functional element is labeled with a strand that directly hybridizes to the nanostructure in the initial anneal without a facilitating sticky end. This technique is limited by both reduced flexibility and the thermostability of the functional element. However, direct label binding may result in higher yield during scale-up as it combines steps for excess staple purification and for excess functional element purification.

Note: direct labeling is incompatible with nanoparticles that require an excess of the linker modified strand, e.g. thiol/gold nanoparticle connections

Direct labeling is also incompatible with any anchor chemistry which cannot survive thermal or chemical anneal conditions.

Sticky ends are ubiquitous due to their modularity at the lab level: the same sequences are often used for multiple projects, reducing the upfront cost of expensive labeled oligomers. This also allows the location and types of functional elements to be easily exchanged within in a lab's library of raw materials. Section 3.5 will discuss sequence design for sticky ends, and the energetics of sticky ending binding will be discussed in section 4.1.

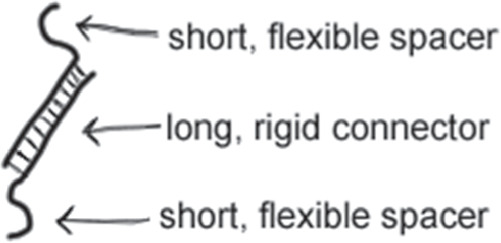

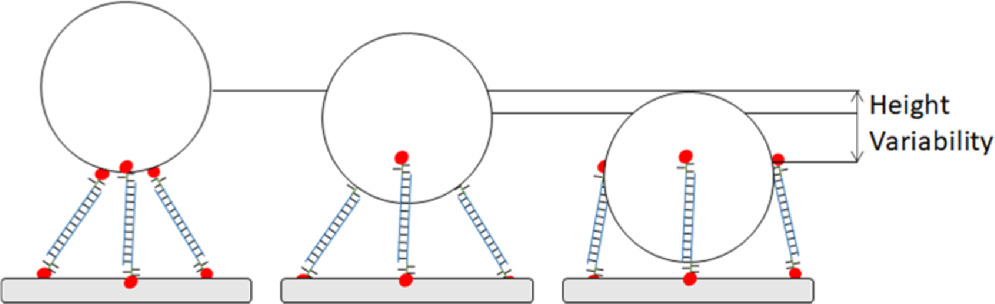

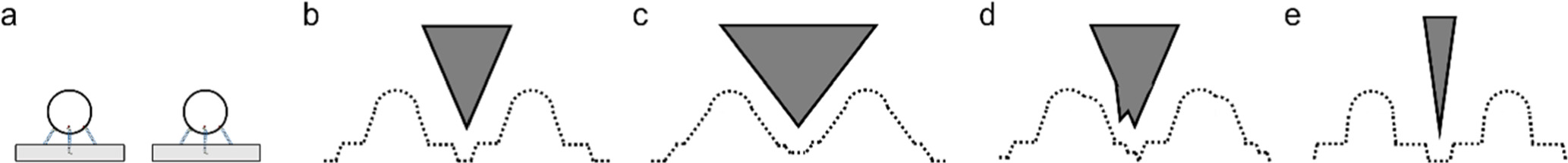

Sticky ends traditionally have spacer nucleotides between both the nanostructure and sticky end, and between the sticky end and the functional element, shown in figure 7. Generally, the longer the sticky end is, the more thermodynamically stable it will be, as discussed in section 4.1. Longer spacers will improve yield by minimizing steric and charge repulsion effects but, as shown in figure 8(b), longer and more flexible sticky ends will also have more freedom to move around the anchor point where the sticky end exits the origami.

Figure 7. Anatomy of a sticky end connection.

Download figure:

Standard image High-resolution imageFigure 8. Schematics of (a) label connection at 5' or 3' position and subsequent binding, the blue line indicates the sticky end sequence and the red dot indicates linker chemical moiety, (b) particle motion and position as a function of the number of sticky ends, (c) common three sticky-end binding positions, (d) multivalent binding of particles to multiple structures, (e) desired binding, with each binding site occupied by one particle and undesired binding, with one particle occupying multiple binding positions.

Download figure:

Standard image High-resolution imageThe length of a sticky end will also play an important role in synthesis. Phosphoramidite synthesis yield is given by equation (1). This is more critical for sticky end strands than for typical staples or tile strands because the sticky ends must be purified, to remove off-sequence products. As material is lost in the purification process, and as chemical linkers are often necessary to connect to the functional element, these strands tend to contribute disproportionately to the cost of the structure.

Note: phosphoramidite synthesis can have a per base yield, or coupling efficiency, as high as 99.5%, this can result, for strands of 20 nt, in at least 10% of sequences having at least one error.

- Off sequence sticky ends or complementary labels can prevent binding, or otherwise hinder assembly. To ensure binding to the correct location, BOTH structure sticky end AND functional element label complement must be purified either by the manufacturer or the practitioner.

- Most manufacturers offer purification of synthetic DNA oligomers. The cost, yield, and purity of product will vary by manufacturer and purification technique.

Another relevant design factor in creating sticky ends is the anti-parallel hybridization of DNA. As shown in figure 8(a), whether the sticky end is attached in the 5' or 3' direction on the functional element and nanostructure can significantly change their binding geometry. As with the addition of spacers, there is some tradeoff between the site occupancy of functional elements and the closeness of binding, likely due to steric hindrance [52].

The number of sticky ends used to connect a nanostructure and functional element can change the position of an element relative to the structure, how much mobility it has around its average position, variation in that position over many copies of a structure, and the thermodynamics of the assembly. This is shown in figure 8(b), while a schematic of a typical sticky end labeling is shown in figure 8(c).

When using multiple binding sites, polymerization is possible, especially for NPs labeled with an excess of sticky end complements. In these cases, it is possible for two or more structures to bind the same particle or vice versa, as shown in figures 8(d) and (e). This is controlled by introducing the structure and element when one or the other is in a large excess. Unfortunately, this necessitates either lower structural yield relative to input reagent or purification and recovery of the excess reagent. As clear rules have yet to be determined for predicting polymerization in these systems, some level of optimization is anticipated for most decoration projects.

While we will describe measuring yield of a desired structure in section 6, it is worth discussing design features that can contribute to improved yield. Relatively few studies have been published systematically examining the site occupancy of functional elements, likely due in part to the expansive design space [51, 52]. Relevant factors include inter-element distance, number of sticky ends on the element, size of the element, number of sticky ends on each anchor patch, and the shape of the nanostructure surface.

Work from Ko et al indicates that for biotin anchor positions binding streptavidin-coated functional elements, that three anchor positions per particle was sufficient for greater than 90% site-occupancy yield. This site occupancy dropped to below 75% for very densely spaced particles. Takabayashi et al [52] used 15 nucleotide sticky ends and tested the role of inter-element distance and number of binding sites. They examined 1, 2, and 4 binding sites, and found a yield of > 97% average site occupancy. They were able to mitigate yield loss due to reduced inter-element distance by interspersing two different sets of sticky end sequences, indicating that single particles bound to multiple binding sites was the primary mode of failure. Their theoretical analysis indicated an asymptote in site occupancy as a function of number of anchors per binding site and suggested 5 anchor positions as a reasonable maximum.

While the ideal arrangement of sticky ends for any given application must still be optimized, 3–5 anchor positions is a common place to start.

3.1.1. Sticky end positioning

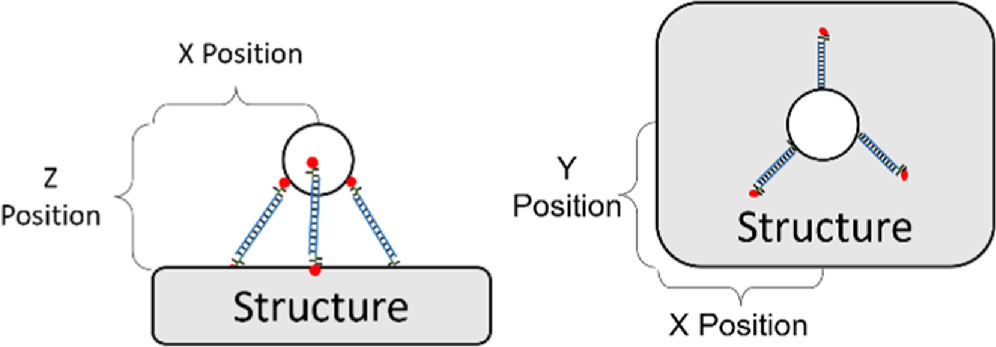

As shown in figure 9, the first step in accounting for the exact X, Y, and Z position of an element follows the simple geometric rules, and depends on the number and types of linkers. Though this is complicated by variability in the internal crossover angle, see figure 12

Figure 9. Relationship between particle X, Y, and Z position relative to the positions of the sticky-ends on the structure.

Download figure:

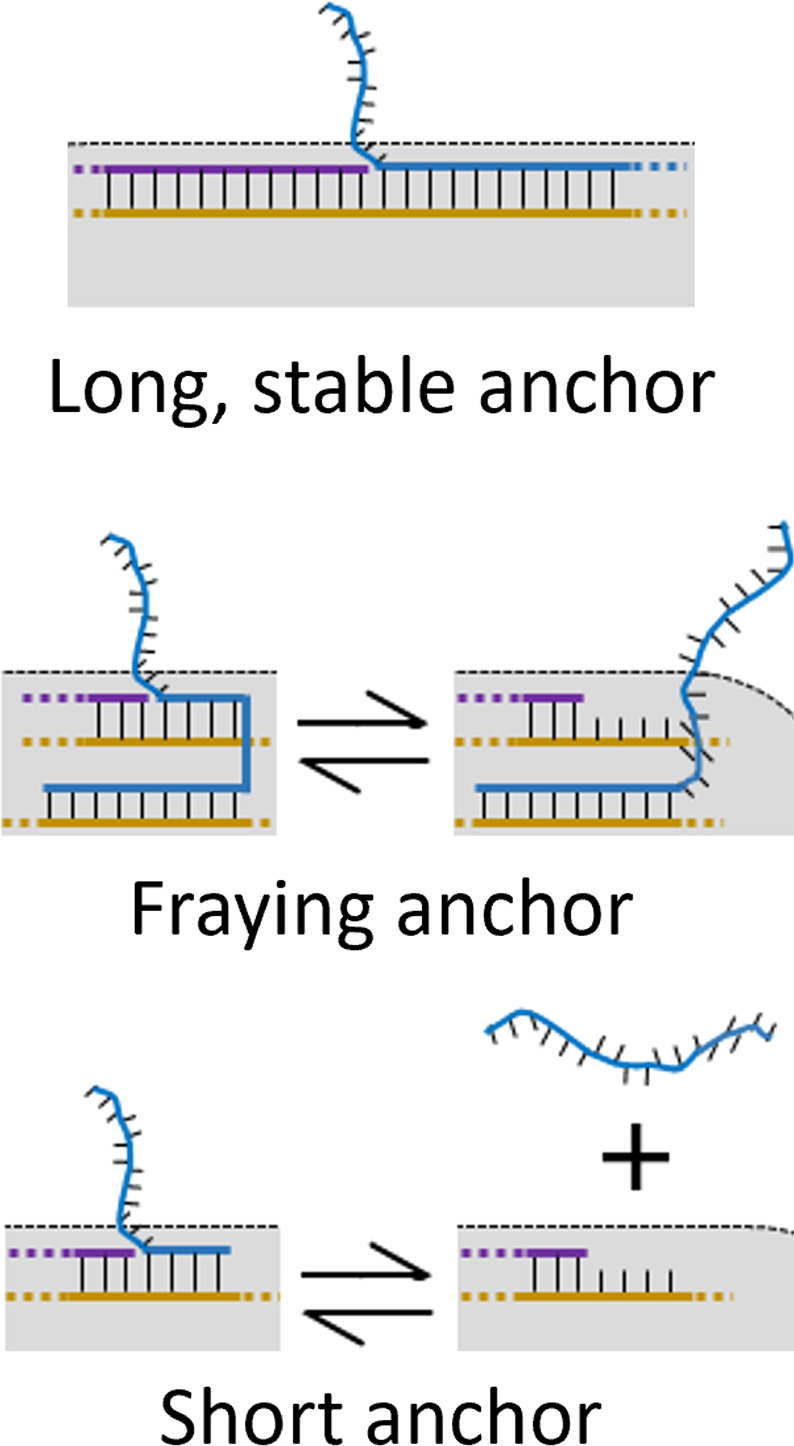

Standard image High-resolution imageIn theory, a practitioner can place a sticky end at any base position of a DNA nanostructure, within the resolution determined by the 0.34 nm base pair spacing along the double helix. However, the location of a sticky end is further constrained by choices made in the design of the nanostructure. The first constraint involves the stability of the sticky end's connection to the rest of the structure. While, to our knowledge, there have been no studies on stability as a function of the length of the anchor to the structure, or associated temperature dependence, it is a generally accepted rule that the sticky end should have at least 5–7 nucleotides, nt, of base pairing uninterrupted by a crossover. This is illustrated in figure 10.

Figure 10. Schematic of how anchor length can result in sticky-end variability. Reproduced from Majikes et al [2].

Download figure:

Standard image High-resolution imageThe second constraint arises from the rotation of the helix. The sticky end will exit the structure at the physical location tangential to the minor groove position for the last nucleotide in the structure, which will rotate around the helix in 3D [54]. Care should be taken to confirm that a sticky end will exit the structure in the desired direction and not point into the structure or exit in the wrong direction. Many computer-aided design (CAD) tools have visual indicators for the rotational position of the minor groove of the helix. These functionalities vary between CAD tools but are a useful feature with which to familiarize oneself.

The process or determining the orientation at a particular base is illustrated in figure 11. One may calculate the exit direction for the sticky end via the right-hand rule combined with the direction of the staple, the direction of the nearest crossover, and the helicity of dsDNA. This can be done by pointing the right-hand-thumb in the 5'→ 3' direction and the index finger initially in the direction of the crossover. As the hand moves along the dsDNA, the fingers should curl at ≈ 0.598 rad per nucleotide (34.3 ° per nucleotide) in the 3' direction. This is approximately 3π/2 rad (270°) for every 8 nucleotides.

Figure 11. Using the right-hand rule to estimate the direction of an arbitrary base relative to the plane of a structure. Reprinted with permission from Majikes et al [2]. Copyright (2021) American Chemical Society.

Download figure:

Standard image High-resolution imageOther constraints include the skew of the crossover junctions, and deformation from internal or external stresses. The presence of neighboring sticky ends may also present topological limits to the length of neighboring staples. Additionally, the shape of a 2D structure can be different in solution as compared to on a surface [55].

While these constraints limit the exact positioning of a sticky end on the structure, they do not necessarily constrain the relative distance between sticky ends, or sets of sticky ends, in the same structure. This, and the lack of explicit studies on the limits of decoration precision make engineering for a predetermined positioning on the first try non-trivial. Often an iterative 'assemble-measure-and-modify' approach is taken, in which it is assumed that the initial predictions of functional element locations will be inaccurate.

3.2. Decoration accuracy

Few studies have reported designed versus measured intended inter-element distances, so there is not, to our knowledge, best practice for achieving a desired spacing in a completed structure. We can, therefore, only describe useful design concepts and give general recommendations. For systems where a highly accurate initial guess is required, we recommend iterative design, and rough structure simulation (finite element [17] or coarse grained [14, 15, 56]) followed by molecular dynamics simulations [57]. For particularly demanding applications, planning alternative versions of the binding locations and strand sequences in advance may be prudent.

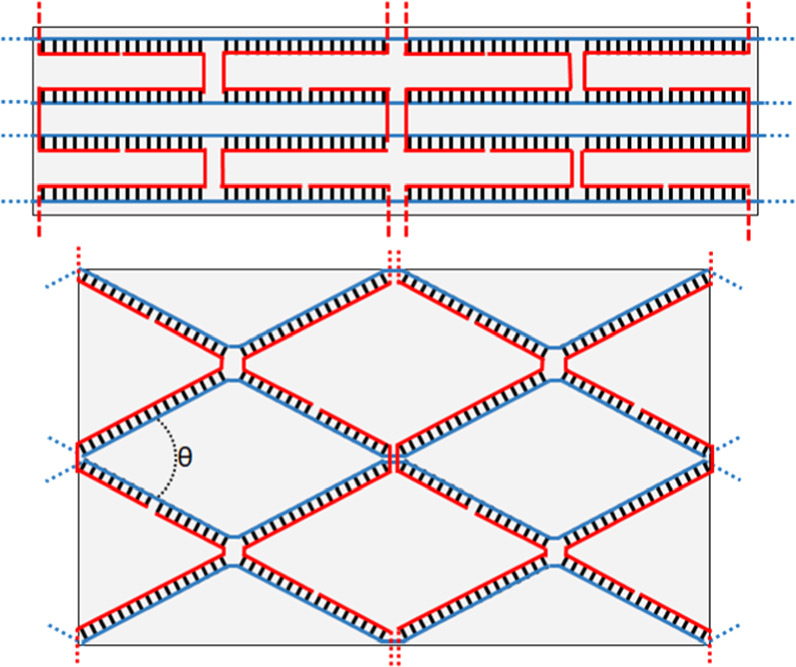

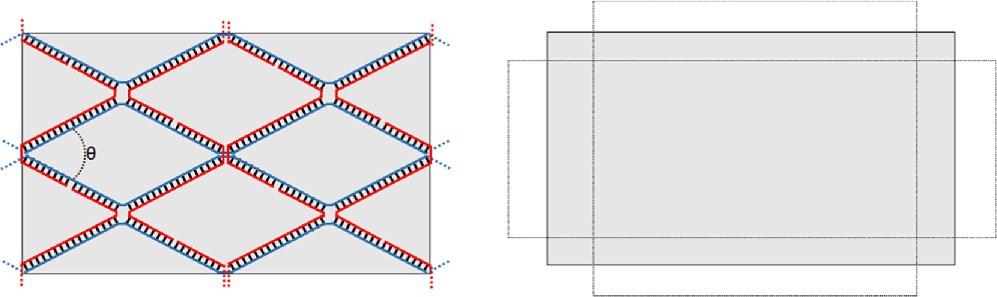

The primary source of decoration inaccuracy is in predicting the exact dimensions of a DNA nanostructure prior to fabrication. As shown in figure 12 the idealized shape of a structure as seen in CAD tools fails to account for the structure and angle of crossover junctions. Beyond what is shown in figure 12, Holliday junctions, the biological analogue of the crossover junction, have a small skew, which would be out-of-page in figure 9, and may flex in that direction to minimize charge density [58]. An in-plane angle of π/3 rad (60°) is a commonly accepted value in biological contexts [58, 59]. This angle is likely to be lower in DNA nanostructures, and may vary between designs, particularly for 2D and 3D structures, and quantification of these angles in origami or other DNA nanostructures has been a subject of few publications, typically in the context of mechanical properties [60], ion dependent reconfiguration [61, 62], novel characterization [63–65], and bistable physical isomorphs [66, 67].

Figure 12. Idealized versus relaxed crossover patterns and estimated relaxed dimensions. Note that the relaxed angle in this figure appears exaggerated as the line form of representation has a lower aspect ratio than real DNA.

Download figure:

Standard image High-resolution imageIt is worth noting that both the schematics in figure 12, and in most CAD tools, can visually imply larger spacings than occur practically. This is because the aesthetics of traditional line representations of dsDNA under-represent the width of the helix. A more accurate, but more cluttered, representation in figure 12 would have helix widths consuming approximately half of the empty space in the lattice.

Other sources of systematic inaccuracies in a designed inter-element distance may include an inaccurate mean functional element size, and charge repulsion for large or highly-charged functional elements.

Given the lack of in-depth studies on this topic, practitioners often take an iterative 'assemble-measure-and-modify' approach, in which it is assumed that the initial predictions of function element locations will be inaccurate.

3.3. Decoration precision

3.3.1. Inter-structure variability

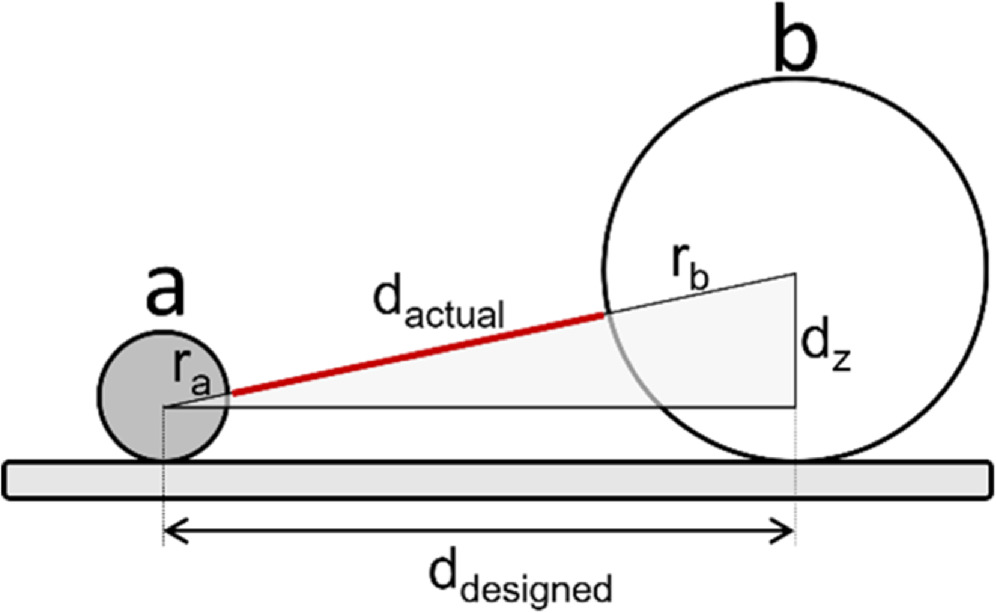

For particles, the largest source of inter-particle spacing variation comes from variation in particle size. As shown in figure 13, the distribution of particle sizes, referred to as polydispersity, maps intuitively onto the inter-particle distances. It is for this reason that we highly recommend either characterizing all NP size distributions prior to decoration or purchasing NPs that include size distribution quantification in their quality control documentation.

Figure 13. Interparticle distance as a function or variability in radius where d4 < d2 < d1 < d3.

Download figure:

Standard image High-resolution imageGenerally, equation (2)–(4) provide the shortest distance between two particles bound to an origami with the assumption of a Gaussian particle radius distribution, neglecting binding height variability, and other factors, shown in figure 14. Note that dhyp is the distance between particle centers and is equal to the dactual+ra

+rb

. To address the more common log-normal NP radius distribution we would recommend Monte Carlo simulation. We discuss uncertainty propagation more generally in section 6, though this is a useful and specific example. We will use the notation where  refers to uncertainty on some value

refers to uncertainty on some value  represented by the standard deviation of the distribution of multiple measurements of

represented by the standard deviation of the distribution of multiple measurements of  In this case

In this case  would likely come from the shape of the structure and the crossover junction angle, while

would likely come from the shape of the structure and the crossover junction angle, while  would be from the polydispersity of the particle a and b ensembles.

would be from the polydispersity of the particle a and b ensembles.

Figure 14. True interparticle distance as a function of ideal distance and difference in radius.

Download figure:

Standard image High-resolution imageIn this description ddesigned is the designed distance between particle attachment points, dhyp is the distance between particle centers, and dactual is the distance between particle surfaces. If this model is accurate, we can recast the uncertainty in the true interparticle distance as a function of the particle radii and uncertainty in their radii.

![${\sigma }_{\mathrm{actual}}=\sqrt{{{d}_{\mathrm{hyp}}}^{2}\left[{\left({2d}_{\mathrm{designed}}^{2}\tfrac{{\sigma }_{\mathrm{designed}}}{{d}_{\mathrm{designed}}}\right)}^{2}+{\left(2{d}_{z}^{2}\tfrac{{\sigma }_{z}}{{d}_{z}}\right)}^{2}\right]{+\sigma }_{a}^{2}+{\sigma }_{b}^{2}}$](https://content.cld.iop.org/journals/0957-4484/35/27/273001/revision3/nanoad2ac5ieqn6.gif)

For sensitive applications, such as FRET lifetime engineering [7], or for more complex distributions of particle radius, we suggest Monte Carlo simulation of distances.

The National Institute of Standards and Technology provides an online Monte Carlo simulation tool, the NIST uncertainty machine [68]. Documents for best practice in expression of uncertainty include the international Guide to the expression of Uncertainty in Measurement, or GUM [69].

A less easily quantifiable source of variability is degenerate binding positions for particles with a random distribution of sticky-ends, or other anchor modifications, across their surface. The three bound particles in figure 15 all would be expected to have roughly the same free energy of binding if one neglects electrostatic repulsion and the loss of nanoparticle translational entropy from left to right. One would therefore expect a distribution of those possible binding positions in any fabricated sample.

Variability between nanostructures in a single sample can also be ascribed to assembly errors, either in the binding of the functional elements, or in the structural DNA maintaining distance between those elements.

3.3.2. Structural fluctuations

Motions within individual structures over time are a final source in inter-element distance variation. There are three major sources: slack in functional element binding, fluctuation in crossover shape, and overall structural flexibility.

As we discussed in section 3.1, small spacers are often left between a structure or functional element and its linking modifications or sticky ends. These spacers can improve site occupancy [50, 59], however, they also allow functional elements to float around the center of the binding site, shown in figure 8(b).

Figure 15. Height variability due to locations of binding strands on nanoparticle.

Download figure:

Standard image High-resolution imageThe angles made between strands participating in a crossover junction are not completely static, figure 16. It has been shown in the literature that they can be bistable and jump between equivalent parallelograms [67]. While these transitions are rare unless they are explicitly designed in, it is reasonable to expect that the angle of the crossover junctions to fluctuate slightly in solution, and that these fluctuations cause slight variations in the distance between functional elements. Like the exact value of the crossover junction angle, this is likely to vary between structures, particularly between 2D and 3D designs.

Figure 16. Variation in the angle of the crossover shifting the aspect ratio of a structure, as the average θ changes, the overall shape of the structure can change. Note that the relaxed angle in this figure appears exaggerated as the line form of representation has a lower aspect ratio than real DNA.

Download figure:

Standard image High-resolution imageFinally, structural bending will play a role in distances between functional elements, particularly for 2D structures. In the direction of the helices, 2D structures are highly rigid. However, the crossover junctions may act as hinges in the direction perpendicular to the helices. 2D structures therefore have high and low persistence lengths in the direction of, and perpendicular to, their helices [60, 70]. Most studies measuring interparticle distance have either used the highly rigid 3D six helix bundle or placed particles on the same helix of a 2D origami. As such, there is limited quantification of this phenomena, although bending has been used in conformational sensors [71].

3.4. Types of functional elements

A comprehensive list of functional elements that have been, or could be, attached to DNA nanostructures is beyond the scope of this tutorial, though we suggest the following review [28]. Similarly, there are reviews which cover specific families of functional elements in more detail, e.g. proteins [72] and aptamers [73]. Here we list common functional elements, linker moieties, and chemical modifications to DNA. Table 2 details common functional elements that have been published frequently.

Table 2. Common nanoscale functional elements, typical linker chemistry, and compatibilities. Many of those listed here can be implemented using commercially available functional elements and linkers.

| Element | Function | Common linkers | Notes | |

|---|---|---|---|---|

| Peptide | ||||

| Molecules | 2–50 amino acids | Binding biomolecules | Click chemistry | Can thermally anneal |

| <103 g·mol−1 | Binding small molecules | NHS ester | Can chemically anneal | |

| <1 nm | Can spin-filter purify | |||

| Typically, non-toxic | ||||

| Protein | ||||

| >50 amino acids | Binding biomolecules | Click chemistry | Toxicity depends on protein | |

| >5 × 104 g·mol−1 or more | Binding small molecules | NHS ester | Denature both chemically & thermally | |

| >3–10 nm | Enzymatic catalysis | |||

| Biological interface | ||||

| Covalent modifications (Fluorophores, Quenchers) | Fluorescence | Usually as part phosphonamidite synthesis, or post synthesis NHS ester addition | Can thermally anneal | |

| Hundreds of g.mol−1 <1 nm | Toxicity depends on modification | |||

| Aptamers (15–90) nucleotides, nt (6 × 103 to 3 × 104) g·mol−1 ≈ 3 nm | Binding biomolecules | None needed | Can thermally anneal | |

| Binding small molecules | Toxicity depends on aptamer, generally low immunogenicity | |||

| Lipids bilayers or vesicles of varying size | Separate chemical | Cholesterol | May non-specifically interact, particularly | |

| environments | with hydrophobic surfaces and molecules | |||

| Nanoparticles | Gold nanoparticles (AuNPs) | Quenching fluorophores | Thiol | High mass contrast (good for EM, gel purification, gradient ultra-centrifugation) |

| Plasmonic coupling | Alkyne | Toxicity depends on stabilizing ligands | ||

| Seeding metal growth | Poly-T | Thermostability depends on stabilizing ligands | ||

| Quantum dots (QDs) | Fluorescent | Depends on outer shell material | High mass contrast | |

| Typically toxic | ||||

| Semiconducting nanoparticles | Tuned bandgap (Usually, to absorb light and transfer energy to some other element) | Silane | High mass contrast | |

| Can be toxic | ||||

| Iron oxide nanoparticles | Magnetic separations | Multi-layer ligand addition | High polydispersity | |

Table 3 lists common linker moieties. It should be reiterated that it is far from exhaustive. The protein bioconjugation literature is deep, with a wide variety of strategies for covalent linkages [74]. We refrain from discussing them in detail as many require development of more specific skills than those new to DNA nanotechnology might be expected to have. The aptamers discussed in table 2 are sequences of nucleic acid that form a 3D structure which binds some target and can be used as a linker without requiring a chemical modification.

Table 3. Common commercially available linker moieties, their approximate size, as well as their typical constraints and uses. Other moieties such as silanes, acrydites, amines, etc are often also available, but to our knowledge are more commonly used in the biochemical community.

| Name | Chemical structure | Size | Notes | Commonly used for |

|---|---|---|---|---|

| Thiol | −S | <1 nm | Forms di-thiols | Gold |

| Non-covalent protein binding | Biotin (Streptavidin Binding) | ≈ 5 nm | Streptavidin is tetravalent | Proteins and large particles |

| Thermally denatures | ||||

| Digoxigenin (Antibody Binding) | ≈ 10 nm | Most antibodies are divalent | Proteins and sandwich assays | |

| Thermally denatures | ||||

| Covalent bonding | Click Chemistry (copper catalyzed alkane and azide cycloaddition) | <1 nm | Requires protection for proteins (typically an intercalator for metal ion catalyst) | Any appropriately labeled molecule or particle |

| NHS ester and amino groups | <1 nm | Reagent NHS esters are stable 4 to 8 h. in water | ||

| Non-specific surface binders | Cholesterol, Alkyne, etc | <1 nm | Lipids, proteins, gold surfaces |

Table 4 shows common modified backbones that might be used in a structural context. Numerous other commercially available modified bases exist to support biochemical assays for applications ranging from fluorescent reporting to nuclease resistance and photo crosslinking. See the cited review for a more thorough description of modified bases relevant to biological applications [39].

Table 4. Common modified DNA backbones for structural applications.

| Name | Chemical structure | Description |

|---|---|---|

| Deoxyribonucleic acid (DNA) |

| Standard DNA, bases attached to a sugar-phosphate backbone |

| Peptide nucleic acid (PNA) |

| Nucleic Acid in which the sugar-phosphate backbone is replaced with a peptide backbone. They are more nuclease resistant, and have less charge |

| Bridged nucleic acid (BNA) |

| A DNA backbone where the 2 and 4 carbons are connected by a bridge where X can be a variety of atoms |

| This prevents the base from rotating, which in turn reduces the loss in entropy on hybridization, stabilizing the dsDNA state | ||

| Locked nucleic acid (LNA) |

| A class of BNA in which the bridging moiety comprises a carbon and an oxygen |

| Abasic DNA |

| A nucleotide without the nucleobase, preventing base pairing |

| Often used as a spacing motif |

3.5. Sticky end sequence design

The thermodynamics of DNA hybridization will be discussed in section 4.1, however the choice of DNA sequences in the context of design merits discussion. For DNA origami, the staple strand sequences are determined by where they bind to the scaffold. Little to no manual sequence design is necessary for origami staple strands. Sticky ends are often the only sequences that a practitioner will design.

As the number of sticky ends, or strands generally, in a system increases, so to does the probability of unintended complimentary interactions. For small numbers of sticky ends less than 30 nt in length, random sequences are often sufficient. However, as decorated DNA nanostructures increase in complexity, more rigorous design will be required.

For single digit numbers of sticky ends, this often involves manual checks for stable hairpins and for stable, but undesirable, complementarity between supposedly orthogonal sticky ends. Hairpins on a sticky end can dramatically reduce hybridization kinetics. Unintended stable complementarity between strands can reduce their effective concentration or result in polymerization. In both cases, the kinetics of assembly are slowed [75].

Numerous webtools exist to evaluate hairpins and partial strand complements [76–80]. While most strands will have some hairpin interaction with themselves, or partial complementarity with other strands, many of these interactions will be unstable at or above room temperature.

One trick for manual sequence design and optimization is to use only three of the four nucleobases on each strand, e.g. one sticky end would only be comprised of the letters A, G, and C while its complement would only be comprised of T, C, and G. In a strand with only A, G, and C, the A bases cannot contribute to hairpin stability as they have no T bases to pair with. Similarly, two orthogonal strands which are only comprised of T, C, and G can only have partial complements between their C and G bases. However, this does have the disadvantage of reducing the number of possible sequences.

To our knowledge, there have been no studies on the optimization of large numbers of sequences in the decoration context. However, there is significant literature on sequence design for tiles and junctions [81], algorithmic assembly [82], strand displacement [75, 83, 84], and data storage. This includes tools to minimize unintended complementarity in silico, which could presumably be easily adapted to sticky end design [81, 85, 86].

4. Decoration assembly

Assembly of DNA nanostructures inherently involves ssDNA to dsDNA hybridization reactions. These reactions are mostly performed across an energy gradient, typically via thermal anneals.

We begin with the thermodynamics of plain DNA hybridization before addressing the thermodynamic considerations of sticky end binding. The energetic contributions associated with the topology changes in DNA nanostructure folding are still an open challenge and are beyond the scope of this tutorial.

4.1. Plain DNA hybridization and melting thermodynamics

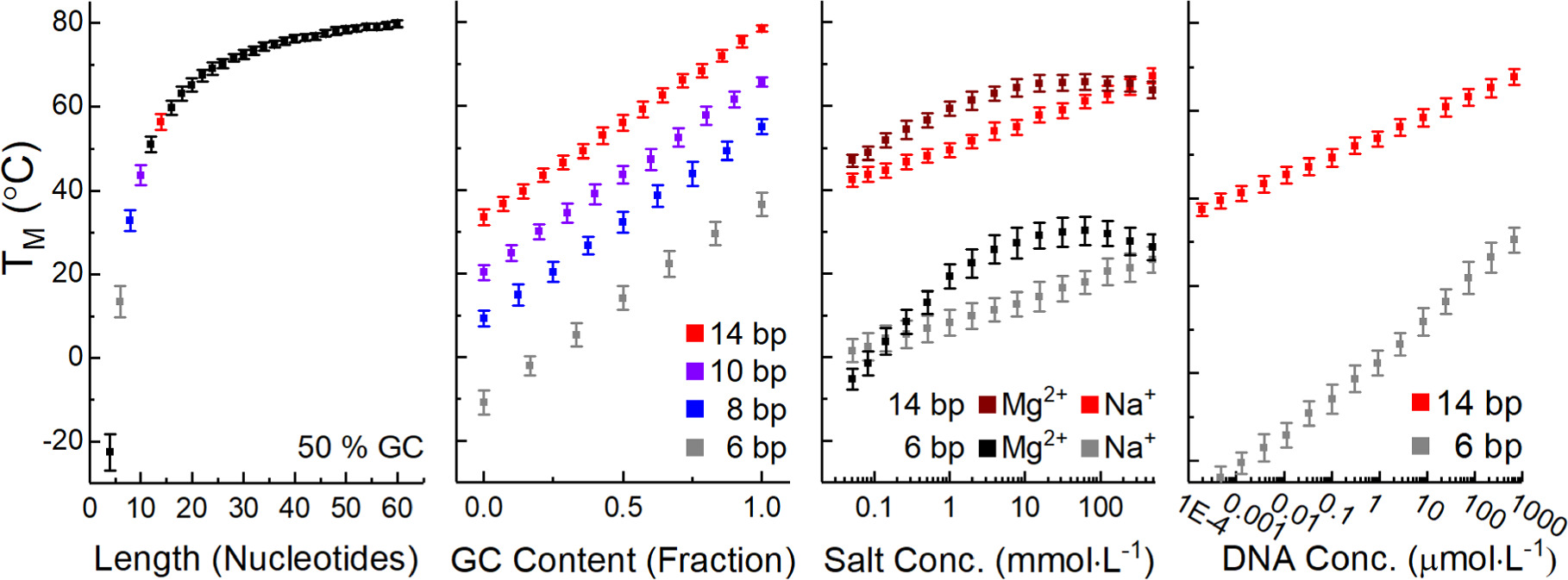

Control over the melting temperature and sharpness of the melting transition are two useful but relatively underutilized design degrees of freedom. These parameters are determined by the energetics of hybridization, which can be modeled to provide a melting temperature, Tm, defined as the point at which half of the strands are released. It is also possible to determine, more or less accurately, the width of the melt curve. The degree of accuracy depends on how similar the system is to ssDNA ↔ dsDNA hybridization.

In some cases, one need only know the approximate stability of some sequence at room temperature that singly connects an element to a nanostructure. In others, a practitioner might model the energetic contributions to optimize the sticky end sequences: modifying sequence length may allow creation of structures with minute shifts in element location or may remove undesirable cross-hybridization with other strands or may simply shorten strands and reduce costs. In other projects, such modeling is critical, e.g. when engineering multiple meta-stable binding states, the population of which is controlled by temperature, pH, or analyte concentration.

For many applications, existing predictive webtools [76–78], or even empirical equations relating Tm and GC content may be sufficient. For those, it is enough to remember trends in length, GC content, concentration, and salt content, depicted in figure 17, and that unimolecular reactions like hairpins are more stable. For those practitioners who will need to model the hybridization in their own system we introduce the relevant nuances below.

Figure 17. Nearest neighbor model predicted Tm as a function of oligomer length, fractional GC content, and salt concentration. Unless otherwise indicated in the x axis all predictions in this figure used a dsDNA concentration of 40 μmol·l−1, a GC content of 50%, and 1 mol·l−1 NaCl. Uncertainty bars represent a single standard deviation across the Tm of 50 randomly generated sequences. The error bars represent a single standard deviation across the Tm of those 50 sequences. The data point colors are set to be consistent between subplots.

Download figure:

Standard image High-resolution imageIn B-form DNA the major factors controlling hybridization stability and melting temperature, Tm, are sequence, length, salt concentration, and strand concentration. The main driver of hybridization is the hydrophobic π–π stacking between bases up and down the helix, with minor contributions from hydrogen bonding between base pairs and electrostatic repulsion. The shorter a strand is, the more the unfavorable interaction between the terminal bases and water contributes relative to favorable interactions between bases within the helix. Similarly, as guanine and cytosine bases have more favorable base stacking, a higher GC content will result in a more stable helix. Salts release the water molecules bound to the phosphate backbone and shield its negative charge, entropically increasing stability. Finally, increasing strand concentration shifts equilibrium to hybridization at higher temperatures, as one would expect via Le Chatelier's principle—where increasing reactant concentration will push a reaction to create more product in response.

Typical thermodynamic prediction uses the van't Hoff relation by identifying the form of the equilibrium constant and the predicted ΔH and ΔS for a sequence under specific conditions, then solve for the fraction, or concentration, of ssDNA. Prediction of ΔH and ΔS can be done through the nearest neighbor, NN, model. Here we use the NN parameterization presented by Santa Lucia et al [87]. The conditions under which these models have been parameterized do not perfectly translate to the those present for DNA nanostructures, but they do provide a useful foundation from which to build.

To find a predicted ΔH and ΔS, proceed through the sequence in neighboring sets of bases and add up the energetic contributions for the terminal bases and each set of neighbors. For ATGCAT, one would add ΔH and ΔS pairs for A+T, T+G, G+C, C+T, and A+T, then add the appropriate energetic penalties for termination in A and T. The summed ΔH and ΔS would then be adjusted for salt concentration [88] or other DNA motifs. This model assumes ΔH and ΔS are temperature independent, i.e. they neglect change in heat capacity ΔCp. While this is an oversimplification [89], it is sufficient for our purposes as the ΔCp primarily increases the asymmetry of the melt curve and does not significantly shift the Tm.

The van't Hoff relation is given in equation (5), where [ ] brackets indicate concentration relative to the standard concentration (1 mol·l−1), T is the temperature, and R is the gas constant.

For unimolecular reactions the equilibrium constant is given in equation (6) where [ssDNA] is the concentration of denatured ssDNA molecules and [dsDNA] is the concentration of hybridized dsDNA. As most melt curve measurements do not give absolute concentrations, this is often rewritten in terms of the fraction of DNA that has melted, fss

. In such cases ![$\left[{ssDNA}\right]={f}_{{ss}}* \,\mathrm{conc}$](https://content.cld.iop.org/journals/0957-4484/35/27/273001/revision3/nanoad2ac5ieqn7.gif) where the term conc describes the total DNA concentration. It should be noted that this term is still relative to 1 mol·l−1, and is thus unitless, which ensures that

where the term conc describes the total DNA concentration. It should be noted that this term is still relative to 1 mol·l−1, and is thus unitless, which ensures that ![$\left[K\right]$](https://content.cld.iop.org/journals/0957-4484/35/27/273001/revision3/nanoad2ac5ieqn8.gif) is unitless regardless of reaction form.

is unitless regardless of reaction form.

For unimolecular reactions, the absolute concentration terms cancel to give equation (6)