Physics for health

Published March 2024

Copyright © 2024 The Editors. Published by IOP Publishing Ltd.

Pages 5-1 to 5-127

Abstract

Chapter 5 presents an introduction and sections on: accelerators for health; bionics and robotics; physics for health science; physics research against pandemics; further diagnostics and therapies.

Original content from this work may be used under the terms of the Creative Commons Attribution NonCommercial 4.0 International license. Any further distribution of this work must maintain attribution to the editor(s) and the title of the work, publisher and DOI and you may not use the material for commercial purposes.

All rights reserved. Users may distribute and copy the work for non-commercial purposes provided they give appropriate credit to the editor(s) (with a link to the formal publication through the relevant DOI) and provide a link to the licence.

Permission to make use of IOP Publishing content other than as set out above may be sought at permissions@ioppublishing.org.

The editors have asserted their right to be identified as the editors of this work in accordance with sections 77 and 78 of the Copyright, Designs and Patents Act 1988.

5.1. Introduction

Ralph W Assmann1,4, Giulio Cerullo2 and Felix Ritort3

1Deutsches Elektronen-Synchrotron (DESY), Hamburg, Germany

2Politecnico di Milano, Milan, Italy

3Universitat de Barcelona, Barcelona, Spain

4Present affiliation: GSI Helmholtzzentrum für Schwerionenforschung, Darmstadt, Germany

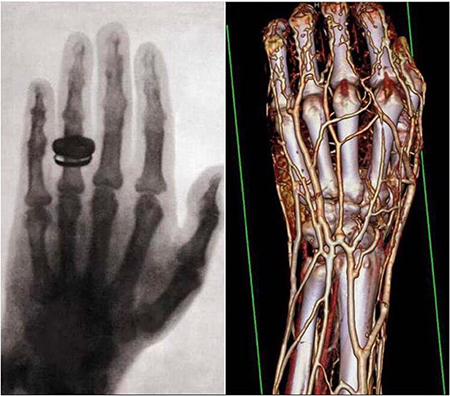

The fundamental research on the physics of elementary particles and nature's fundamental forces has led to numerous spin-offs and has tremendously helped human well-being and health. Prime examples include the electron-based generation of x-rays for medical imaging, the use of electrical shocks for treatment of heart arrhythmia, the exploitation of particles' spin momenta for spin tomography (NMR) of patients, and the application of particle beams for cancer treatment. Tens of thousands of lives are saved every year through the use of these and other physical principles. A strong industry has developed in many countries, employing hundreds of thousands of physicists, engineers, and technicians. This industry designs, produces, and deploys technology that is based on advances in fundamental physics.

Major research centers have been established and provide cutting-edge beams of particles and photons for medical and biological research, enabling major advances in the understanding of structural biology, medical processes, viruses, bacteria, and possible therapies. Those research infrastructures serve tens of thousands of users every year and help them in their research. Modern hospitals are equipped with a large range of high technology machines that employ physics principles for performing high-resolution medical imaging and powerful patient treatment. Professors and students at universities use even more powerful machines for conducting basic research in increasingly interdisciplinary fields like biophysics and robotics. New professions have developed involving physicists and reaching out to other domains. We mention the rapidly growing professions of radiologists, health physicists, and biophysicists.

While physics spin-offs for health are being heavily exploited, physicists in fundamental research keep advancing their knowledge of and insights into the biochemical mechanisms at the origin of diseases. New possibilities and ideas keep emerging, creating unique added value for society from fundamental physics research. This chapter does not aim to provide a full overview of the benefits of physics for health. Instead, the authors concentrate on some of the hot topics in physics- and health-related research. The focus is on new developments, possible new opportunities, and the path to new applications in health.

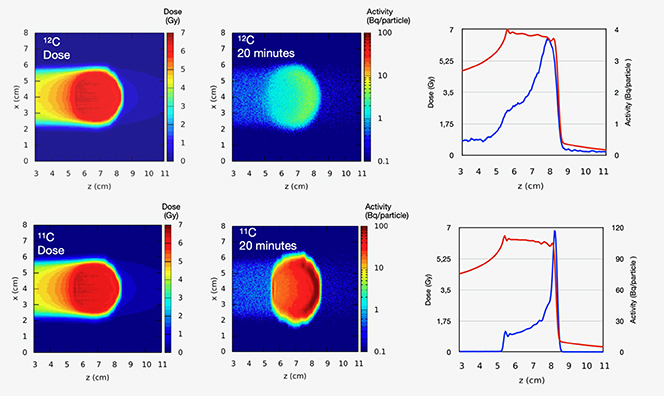

Angeles Faus-Golfe and Andreas Peters describe the role of particle accelerators and the use of their beams for irradiating and destroying cancer cells. State-of-the-art machines and possibilities for new irradiation principles (i.e., the FLASH effect) are introduced. As physics knowledge and technology advance, tumors can be irradiated more and more precisely, damage to neighboring tissue can be reduced, and irradiation times can be shortened.

Darwin Caldwell looks at the promise and physics-based development of robotic systems in the macroscopic world, where they are complementing human activities in a number of tasks from diagnosis to therapy. Friedrich Simmel looks at the molecular and cell-scale world and explains how nanorobotics, biomolecular robotics, and synthetic biology are emerging as additional tools for human health (e.g., as nano-carriers of medication that is delivered precisely).

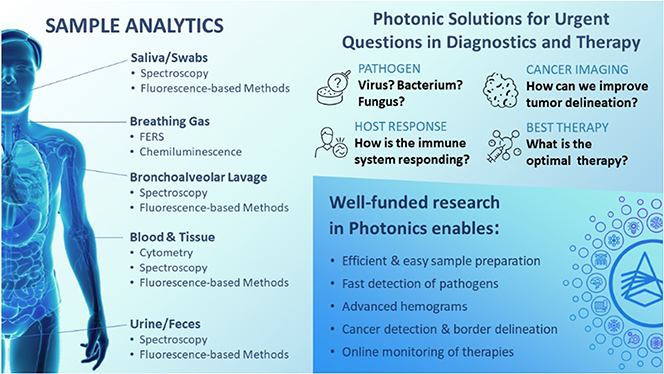

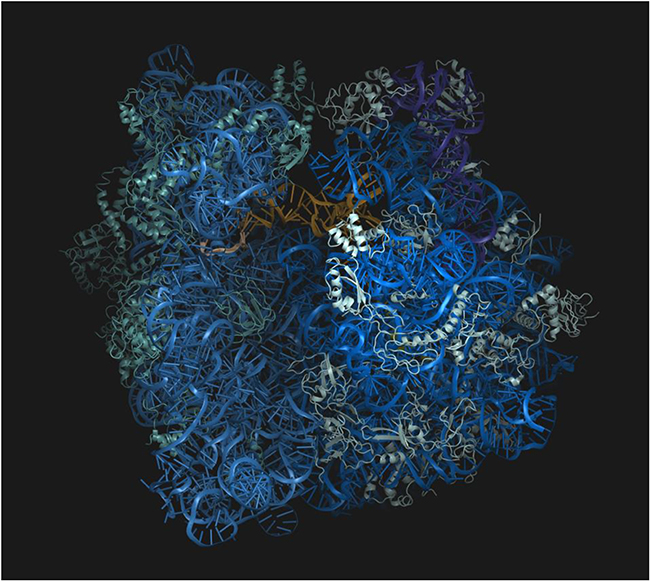

Henry Chapman and Jürgen Popp describe the benefits of light for health. Jürgen Popp considers the use of lasers, which have advanced tremendously in recent years in terms of power stability and wavelength tunability. Modern lasers play several crucial roles in cell imaging, disease diagnosis, and precision surgery. Henry Chapman considers the use of free-electron lasers for understanding features and processes in structural biology. He shows that the advance of these electron accelerator-based machines has allowed tremendous progress in determining the structures of biomolecules and understanding their function.

Aleksandra Walczak, Chiara Poletto, Thierry Mora, and Marta Sales describe physics research against pandemics, a multi-disciplinary problem at the intersection of immunology, evolutionary biology, and network science. Pandemics are also multi-scale problems at the spatial and temporal levels: from the small pathogen to the large organism, and from the infective process at cellular scale (hours) to its propagation community-wide (months). Simple mathematical models such as SIR (susceptible-infected-recovered) have been a source of inspiration for physicists who model key quantities of an epidemic outbreak, such as the effective reproductive number R, in situations where a disease has already spread. A prominent example is the recent COVID-19 pandemic, which has been more than a health and economic crisis. It illustrates our vulnerability and shows that interdisciplinary and multilateral science plays a crucial role in addressing a global challenge such as this.
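
As a minimal sketch of the SIR model named above, the following Python snippet integrates the standard SIR equations with a simple forward-Euler step and evaluates the effective reproductive number as R = (beta / gamma) · S / N. The function names, the parameter values (beta = 0.5 per day, gamma = 0.2 per day, a population of one million) and the choice of integrator are illustrative assumptions and are not taken from the chapter.

```python
def simulate_sir(beta, gamma, population, initial_infected, days, dt=0.1):
    """Integrate the SIR equations with forward-Euler steps.

    beta  : transmission rate per day (assumed value, for illustration)
    gamma : recovery rate per day (assumed value, for illustration)
    Returns a list of (time, S, I, R) tuples.
    """
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    trajectory = [(0.0, s, i, r)]
    n_steps = int(round(days / dt))
    for step in range(1, n_steps + 1):
        # dS/dt = -beta*S*I/N, dI/dt = beta*S*I/N - gamma*I, dR/dt = gamma*I
        new_infections = beta * s * i / population * dt
        new_recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        trajectory.append((step * dt, s, i, r))
    return trajectory


def effective_reproduction_number(beta, gamma, susceptible, population):
    """R = R0 * S/N, with the basic reproduction number R0 = beta/gamma."""
    return (beta / gamma) * (susceptible / population)


# Hypothetical outbreak: R0 = 2.5 in a population of one million.
BETA, GAMMA, N = 0.5, 0.2, 1_000_000
trajectory = simulate_sir(BETA, GAMMA, N, initial_infected=10, days=200)

for t, s, i, r in trajectory[::400]:  # print every 40 days
    r_eff = effective_reproduction_number(BETA, GAMMA, s, N)
    print(f"day {t:5.0f}: infected = {i:10.0f}, R_eff = {r_eff:.2f}")
```

The outbreak grows while R is above 1 and recedes once the susceptible fraction has dropped far enough that R falls below 1, which is why R, rather than R0, is the quantity to monitor once a disease has already spread.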

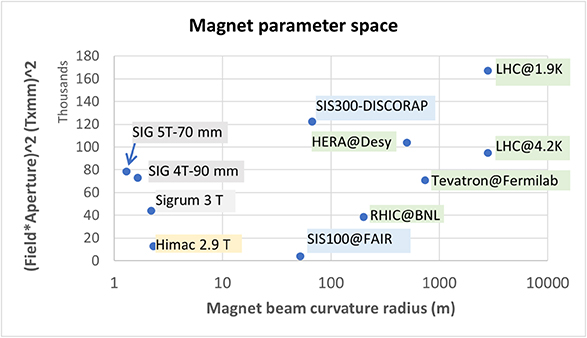

Promise and progress in further diagnostics and therapies are also considered. Lucio Rossi explains the progress in magnetic field strength as it can be achieved with superconducting magnets, while Marco Durante discusses the progress in charged particle therapy for medical physics.

5.2. Accelerators for health

Angeles Faus-Golfe1 and Andreas Peters2

1Laboratoire de Physique des 2 Infinis Irène Joliot-Curie, IN2P3-CNRS, Université Paris-Saclay, Orsay, France

2HIT GmbH at University Hospital Heidelberg, Germany

Energetic particles, high-energy photons (x-rays and gamma rays), electrons, protons, neutrons, and various atomic nuclei and more exotic species are indispensable tools in improving human health.

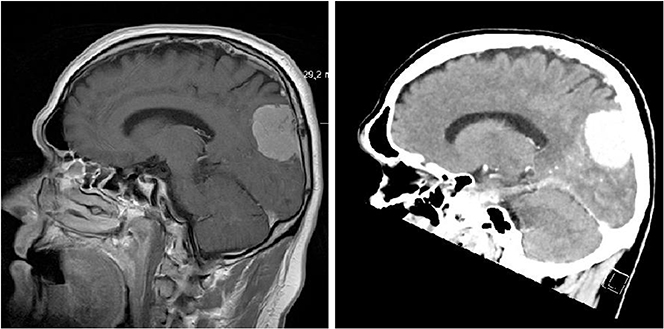

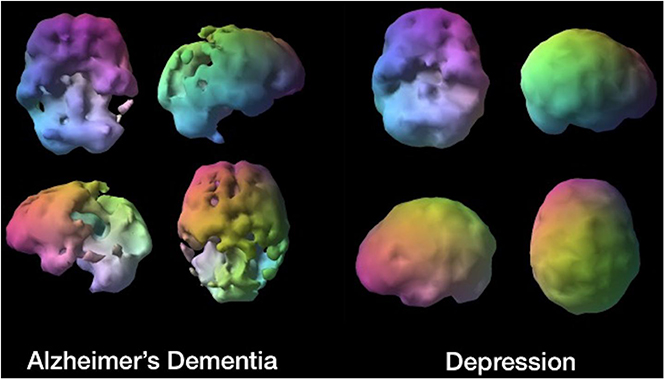

The potential of accelerator-reliant therapy and diagnostic techniques has increased considerably over the past decades. These techniques play an increasingly important role in identifying and curing diseases, such as cancer, that are otherwise difficult to treat. They also help to understand how major organs such as the brain function, and thus to determine the underlying causes of diseases of growing societal significance such as dementia.

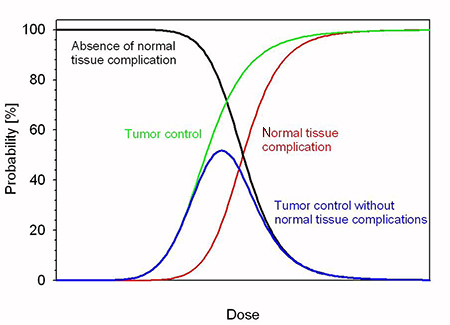

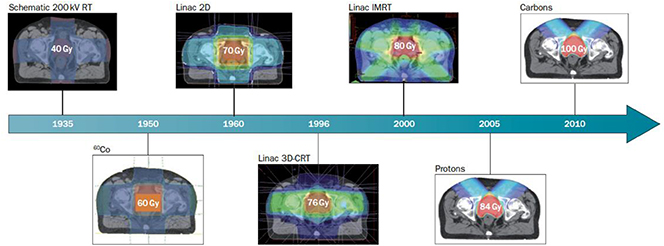

5.2.1. Motivation to use and expand x-rays and particle therapy

X-ray irradiation is now the most common form of radiotherapy (RT) for cancer treatment. While x-ray therapy is a mature technology, there is room for improvement. The current challenges relate to the accurate delivery of x-rays to tumours, which involves sophisticated techniques that combine imaging and therapy. A particular challenge is to achieve better definition and efficiency in 4D reconstruction (3D over time) that distinguishes volumes of functional biological significance. Further technical improvements are being made to reduce the risk that a treatment differs from the prescription and to move towards 'personalised treatment planning'. Some of these techniques are state of the art and are being implemented in the routine operation of such facilities: image-guided radiation therapy (IGRT), control of the dose administered to the patient (in vivo dosimetry), and adaptive RT, which takes into account morphological changes in the patient. An example is the so-called MR linac, which provides magnetic resonance (MR) imaging and RT treatment at the same time. Finally, reducing accelerator costs and increasing reliability and availability in challenging environments are also important research challenges for expanding this kind of RT in low- and middle-income countries (LMICs).

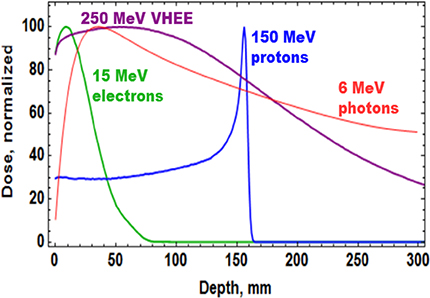

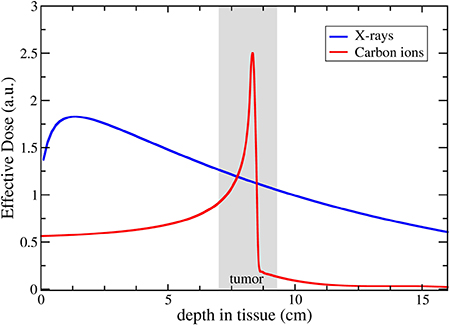

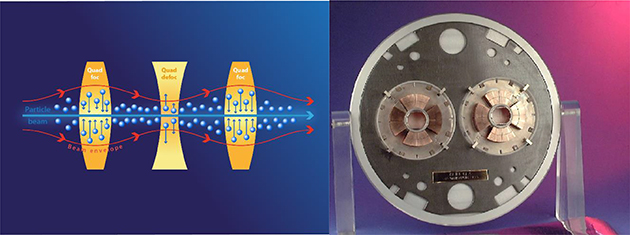

Low-energy electrons have been used to treat cancer for more than five decades, but mostly for superficial tumours given their very limited penetration depth. However, this limitation can be overcome if the electron energy is increased to between 50 and 200 MeV (i.e., very high-energy electrons, VHEE; figure 5.1). With the recent developments of high-gradient normal conducting (NC) radio frequency (RF) linac technology (figure 5.2) (CLIC Project n.d.) or even novel acceleration techniques such as the laser-plasma accelerator (LPA) (figure 5.3), VHEE offer a very promising option for anticancer RT. Theoretically, VHEE beams offer several benefits. The ballistic and dosimetric properties of VHEE provide small-diameter beams that could be scanned and focused easily, enabling finer resolution for intensity-modulated treatments than is possible with photon beams. Electron accelerators are more compact and cheaper than proton therapy accelerators. Finally, VHEE beams can be operated at very high dose rates with fast electromagnetic scanning, providing uniform dose distribution throughout the target and allowing for previously unforeseen RT modalities, in particular FLASH-RT.

Figure 5.1. Dose profile for various particle beams in water (beam widths r = 0.5 cm).

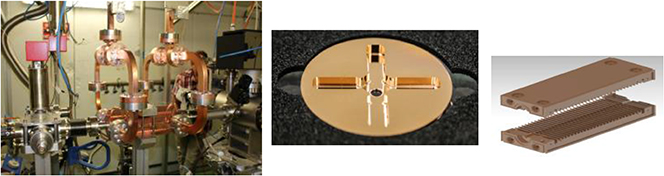

Figure 5.2. CLIC RF X-band cavity prototype (12 GHz, 100 MV m−1).

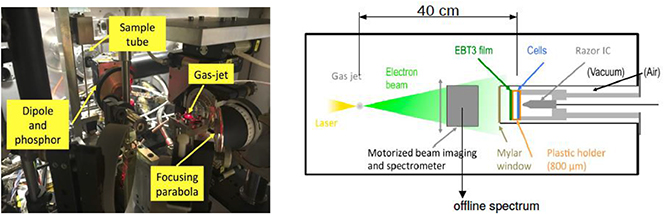

Figure 5.3. Setup of Salle Noire for cell irradiation at the LOA-IPP laser-driven wakefield electron accelerator.

FLASH-RT is a paradigm-shifting method for delivering ultra-high doses within an extremely short irradiation time (tenths of a second). The technique has recently been shown to preserve normal tissue in various species and organs while still maintaining anti-tumour efficacy equivalent to conventional RT at the same dose level, in part due to decreased production of toxic reactive oxygen species. The 'FLASH effect' has been shown to take place with electron, photon, and more recently proton beams. However, the potential advantage of electron beams lies in the intrinsically higher dose that can potentially be reached compared to protons and photons, especially over large areas as would be needed for large tumours. Most of the preclinical data demonstrating the increased therapeutic index of FLASH were obtained with single-fraction or hypo-fractionated RT regimens using 4–6 MeV electron beams, which do not allow treatment of deep-seated tumours and produce a large lateral penumbra (figure 5.4). This problem can be solved by increasing the electron energy to values higher than 50 MeV (VHEE), where the penetration depth is larger.

Figure 5.4. FLASH preservation of the neurogenic niche in juvenile mice (courtesy of C Limoli).

Many challenges, both technological and biological, have to be addressed and overcome for the ultimate goal of using VHEE and VHEE-FLASH as an innovative modality for effective cancer treatment with minimal damage to healthy tissues.

From the accelerator technology point of view, the major challenge for VHEE-RT is the demonstration of a suitable high-gradient acceleration system, whether conventional (such as X-band) or not, with the stability, reliability, and repeatability required for operation in a medical environment. For VHEE-FLASH in particular, the challenge is the delivery of a very high dose rate, possibly over a large area, while providing uniform dose distribution throughout the target (Faus-Golfe 2020).

All this calls for a large beam test activity in order to experimentally characterize VHEE beams and their ability to produce the FLASH effect, and to provide a test bed for the associated technologies. It is also important to compare the properties of the electron beams depending on the way they are produced (RF linac or LPA technologies). Preliminary VHEE experimental studies have been carried out at the NC RF accelerator facilities NLCTA at SLAC and CLEAR at CERN, giving very promising results for the use of VHEE beams. Furthermore, some experimental tests have been carried out with laser-plasma sources at ELBE-DRACO at HZDR, at LOA at IPP, and at the SCAPA facility at the University of Strathclyde. For FLASH in particular, experimental studies have been carried out at low energies with the Kinetron linac at the Institut Curie in Orsay and the eRT6-Oriatron linac at CHUV, and at very high energies at CLEAR at CERN.

Proton and ion beam therapy has growing potential in dealing with difficult-to-treat tumours, for example, because of the risk of damaging neighbouring sensitive tissues such as the brainstem or visual nerves in the case of head tumour treatments. Also, some treatments may benefit from the use of particles that deliver doses with greater radiobiological effectiveness (RBE) and higher local precision, notably carbon, and in the near future also helium ions.

Recent investigations using ultra-short, ultra-high dose rate irradiation (called FLASH) with electron beams showed growth retardation of tumours with the same effect as in conventional therapy, but with minimized impact on the surrounding tissue. FLASH with proton and ion beams is expected to offer additional healthy tissue sparing because the beam stops in the tumour, but the research on this topic is not yet complete, and experiments and evaluations are ongoing. Healthy tissue sparing with FLASH would enable a dose increase as well as a significant reduction of treatment time without additional adverse effects. These new key findings may considerably influence accelerator development for particle therapy in the near future.

In x-ray radiotherapy the integration of imaging devices for image-guided radiation therapy is standard. In-room CTs and, more recently, the clinical adoption of the MR linac, which integrates magnetic resonance imaging (MRI), illustrate these necessary advances for better positioning of the patients and online observation of the tumours. Whereas CTs are also being introduced in particle therapy facilities, the combination of MRI and hadron beams is delicate because the high magnetic fields of these diagnostic devices deflect the proton or ion beam. In addition, the stray fields may affect the treatment systems. Investigations on this topic have started (see below).

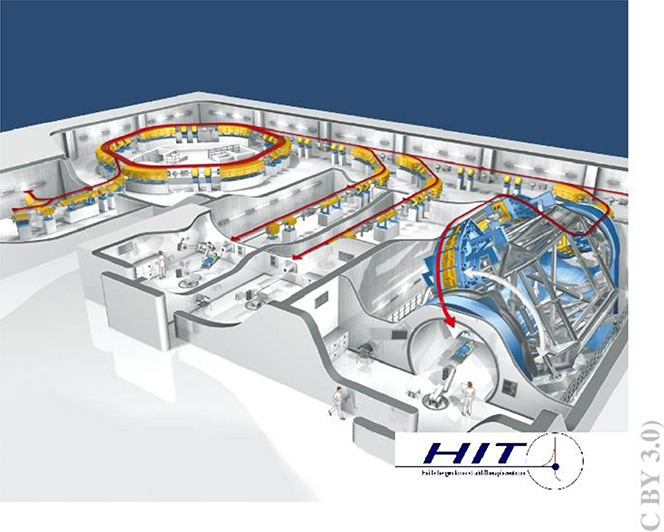

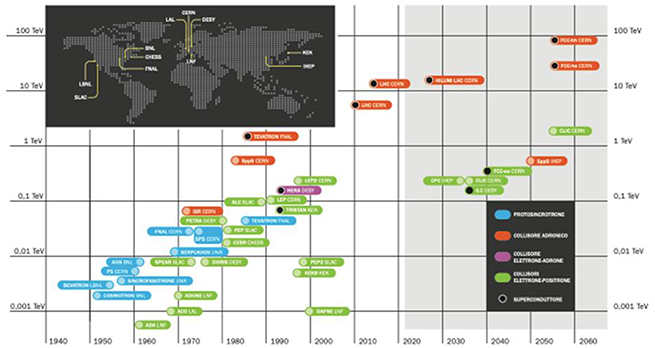

In the last three decades about 25 proton and 4 hadron therapy facilities were built in Europe (figure 5.6). But only a few more are currently being planned or under construction, among them only one hadron facility project (SEEIIST 1). Thus, new efforts are required to make these techniques smaller, cheaper (in investment and operating costs), and easier to maintain. These efforts are discussed in a separate section below, which shows the potential for the next three decades.

Figure 5.6. Particle therapy facilities in Europe; see https://www.ptcog.ch/index.php/facilities-world-map.

1 SEEIIST: The South East European International Institute for Sustainable Technologies, http://seeiist.eu/.

5.2.2. Further developments in the next decades

5.2.2.1. Introduction of helium for regular treatment

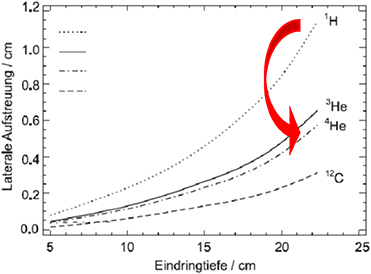

For protons and 3He/4He similar radio-biological properties have been determined, but the lateral scattering is reduced by nearly 50% in the case of helium ions versus protons (figure 5.7). In recent years, helium ions again became of interest for clinical cases where neither protons nor carbon ions are ideally suited, especially for treating paediatric tumours. Currently, patient irradiations with scanned 4He ions at the Heidelberg Ion beam Therapy Center (HIT) in Germany are used only in 'treatment attempts' ('individuelle Heilversuche') and will go into regular operation by mid 2024. Other ion therapy facilities in Europe (e.g., CNAO in Italy and MedAustron in Austria) have also started technical upgrades to produce helium ion beams in the near future.

Figure 5.7. Lateral scattering of different light ions, adapted from Fiedler (2008).

Recent studies have shown that 3He ions can be a viable alternative to 4He, as they can produce comparable dose profiles, demanding slightly higher kinetic energy per nucleon, but less total kinetic energy. This results in 20% less magnetic rigidity needed for the same penetration depth, which may be of importance for the design of future compact therapy accelerators like superconducting synchrotrons or energy-variable cyclotrons.
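
The rigidity argument can be checked with a short calculation. The sketch below computes the magnetic rigidity Bρ = p/q of fully stripped 3He and 4He ions from their kinetic energy. To pick energies that give the same range, it assumes a simple Bragg-Kleeman-type scaling, range ∝ (A/Z²)·(E/A)^p with p ≈ 1.77; this scaling, the exponent, the reference energy of 220 MeV per nucleon and all function names are illustrative assumptions and are not taken from the chapter or the studies it refers to.

```python
import math

# Conversion between momentum and rigidity for unit charge:
# B*rho [T m] = p [MeV/c] / 299.792458
MEV_PER_C_PER_TESLA_METRE = 299.792458

# Nuclear rest energies in MeV (fully stripped ions)
HE4_REST_ENERGY_MEV = 3727.379
HE3_REST_ENERGY_MEV = 2808.391


def magnetic_rigidity(kinetic_energy_mev, rest_energy_mev, charge_number):
    """Return B*rho in T m for an ion with the given total kinetic energy."""
    pc = math.sqrt(kinetic_energy_mev**2
                   + 2.0 * kinetic_energy_mev * rest_energy_mev)
    return pc / (MEV_PER_C_PER_TESLA_METRE * charge_number)


def equal_range_energy_per_nucleon(ref_energy_per_nucleon, ref_mass_number,
                                   new_mass_number, exponent=1.77):
    """Energy per nucleon giving the same range for an isotope of equal Z.

    ASSUMPTION: range ~ (A / Z^2) * (E/A)^exponent, a rough power-law
    scaling used here only for illustration.
    """
    return ref_energy_per_nucleon * (
        ref_mass_number / new_mass_number) ** (1.0 / exponent)


# Hypothetical reference: 4He at 220 MeV per nucleon.
e4_per_nucleon = 220.0
e3_per_nucleon = equal_range_energy_per_nucleon(e4_per_nucleon, 4, 3)

rigidity_he4 = magnetic_rigidity(4 * e4_per_nucleon, HE4_REST_ENERGY_MEV, 2)
rigidity_he3 = magnetic_rigidity(3 * e3_per_nucleon, HE3_REST_ENERGY_MEV, 2)

print(f"3He energy per nucleon for equal range: {e3_per_nucleon:.0f} MeV")
print(f"total kinetic energy: 3He {3 * e3_per_nucleon:.0f} MeV, "
      f"4He {4 * e4_per_nucleon:.0f} MeV")
print(f"B*rho: 3He {rigidity_he3:.2f} T m, 4He {rigidity_he4:.2f} T m")
print(f"rigidity ratio 3He/4He: {rigidity_he3 / rigidity_he4:.2f}")
```

Under these assumptions the 3He beam needs a somewhat higher energy per nucleon but less total kinetic energy, and its rigidity comes out roughly one fifth lower than that of 4He, in line with the figure quoted above. A realistic comparison would use tabulated range-energy data instead of the power-law scaling.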

5.2.2.2. Image-guided hadron therapy using MRI

Observing the patient's position during treatment has increasingly become a standard procedure. 3D camera systems make it possible to control the location of the patient within tenths of millimetres by observing the exterior of the body. But organs affected by tumours can move dramatically within the abdomen, which is not visible from outside. To observe the soft tissues, an MRI scanner is the preferred diagnostic tool. However, the moderate to high magnetic fields used in MRI deflect the charged particle beam. In addition, conventional MRI scanners are not constructed with an inlet that allows radiation to be applied in parallel to the diagnostic procedure. Nevertheless, studies like ARTEMIS at HIT in Heidelberg have been started to investigate possible arrangements of open, low-field MRIs (figure 5.8). Deflection and/or distortion of the beam can still be observed, but algorithms will be developed to cancel out this influence of the MRI's magnetic field. A second goal of such studies is to optimize the design of the measurement coils to leave space for the beam entrance fields. A further aspect should not be underestimated: the impact of the magnetic (stray) fields on the QA devices and the online monitoring detectors used during treatment. All these have to be examined and possibly adapted to be magnetic field compatible; at worst, new or alternative detector principles have to be applied. Many technical solutions have to be found in the coming years before MRI scanners can be introduced in regular particle beam therapy.
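
To give a feeling for the size of the beam deflection, the following sketch estimates how far a charged particle beam is displaced sideways when it crosses a uniform magnetic field perpendicular to its path. The field strength of 0.25 T, the path length of 0.3 m, the uniform-field geometry and the function name are illustrative assumptions, not parameters of the ARTEMIS setup; the rigidity of 1.84 T m corresponds approximately to a 150 MeV proton, and energy loss in the patient is ignored.

```python
import math


def lateral_deflection(rigidity_tm, field_t, path_length_m):
    """Deflection of a beam crossing a uniform transverse magnetic field.

    rigidity_tm   : magnetic rigidity B*rho of the beam in T m
    field_t       : magnetic flux density in T (assumed uniform)
    path_length_m : length of the field region along the entry direction

    Returns (bending radius in m, deflection angle in rad,
             lateral offset at the exit of the field region in m).
    """
    radius = rigidity_tm / field_t
    if path_length_m >= radius:
        raise ValueError("beam does not leave the field region on this side")
    angle = math.asin(path_length_m / radius)
    offset = radius * (1.0 - math.cos(angle))
    return radius, angle, offset


# ASSUMED example values: ~150 MeV proton (B*rho ~ 1.84 T m),
# open low-field MRI with 0.25 T, 0.3 m of field along the beam path.
radius, angle, offset = lateral_deflection(1.84, 0.25, 0.3)
print(f"bending radius: {radius:.2f} m")
print(f"deflection angle: {math.degrees(angle):.2f} degrees")
print(f"lateral offset: {offset * 1000:.1f} mm")
```

Even in this low-field case the offset is of the order of millimetres, which is large compared with the sub-millimetre positioning accuracy mentioned above, and it depends on the beam energy. This is the kind of effect that correction algorithms in treatment planning and beam steering would have to take into account.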

Figure 5.8. Setup of an MRI scanner from Esaote at HIT's experimental place.

5.2.2.3. The future of compact accelerator concepts

To enhance the coverage of particle therapy in Europe and worldwide and to increase the number of patients who can profit from this special treatment, the investment costs of such facilities should be reduced as much as possible, which requires smaller and simpler machines to reduce manufacturing and operating effort. The size of the accelerator in particular has an important influence on the building costs. Beam losses determine how much concrete is needed for radiation shielding and should therefore be minimized by design. In addition, the operating and maintenance team should be small, but adequate and well-trained, and supported by a modern control system that uses AI algorithms to predict maintenance needs pre-emptively and thus guarantees the highest availability.

Energy-variable cyclotrons

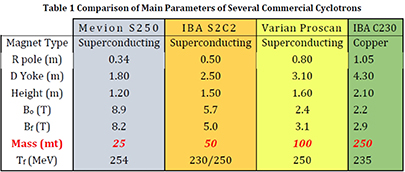

Most proton therapy facilities use cyclotrons to produce the beam, as these accelerators are compact, have only a few tuning parameters, and are thus simple to operate. Over the last three decades their size and weight have shrunk dramatically, by factors of 3–10, while the magnetic fields have been increased by up to a factor of 4 using superconductivity (figure 5.9). These improvements show the continuing potential of cyclotrons, while parallel developments like proton linacs are still larger and technically much more complex. But the current generation of cyclotrons still consists of fixed-energy machines, which require a degrader for energy variation. This causes two main drawbacks: (a) high local beam losses and (b) relatively low currents for mid-depth and superficial tumours, which may be a problem for FLASH-based treatment procedures. To overcome these disadvantages, first studies of energy-variable cyclotrons have been undertaken and published. These show how cyclotron systems could be advanced further to lighter, high-field superconducting arrangements that are iron-free and thus able to vary the magnetic field and energy in reasonable times. In addition, the currents produced in such a setting do not depend on the energy because no loss mechanism is involved anymore. The resulting machines would be 'FLASH'-ready and could additionally be extended to deliver 3He/4He beams combined with proton therapy in one cyclotron setup. More simulation studies and prototype constructions are needed to reach these goals in the next 5–10 years.

Figure 5.9. Comparison of main parameters of several commercial cyclotrons; see 'Compact, low-cost, lightweight, superconducting, ironless cyclotrons for hadron radiotherapy', PSFC/RR-19-5.

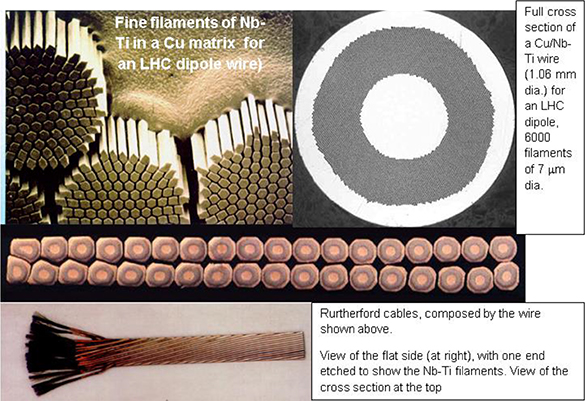

Superconducting fast-ramped synchrotrons

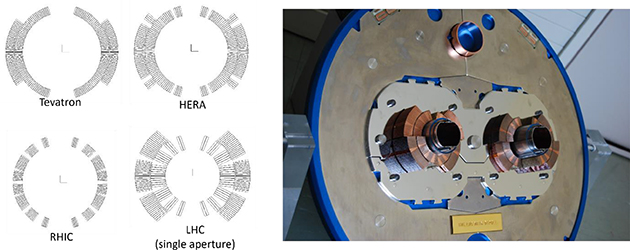

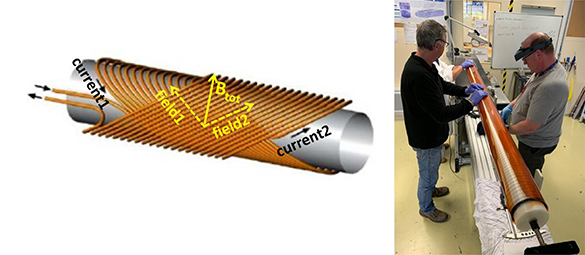

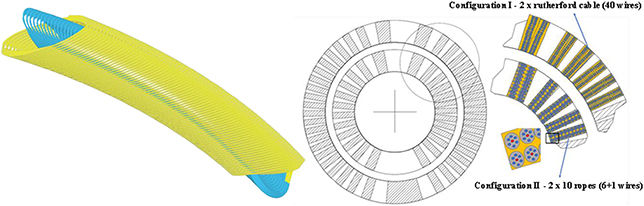

Wherever the flexibility of different ions, from protons and helium to carbon and oxygen ions, is required in order to study different dose distributions and linear energy transfers (LETs), a flexible accelerator concept covering a wide range of magnetic rigidities is needed. Today small synchrotrons with circumferences of 60–80 m and iron-dominated normal-conducting dipoles and quadrupoles are built for such purposes. Together with the injector linac and a variety of ion sources on one side and all the high-energy beam transport lines on the other, such a facility requires a large space compared with the treatment rooms. As a result, the building costs are high and such facilities have been set up only in combination with large university hospitals. To shrink the size of these accelerator arrangements, the main idea is to equip the synchrotron with superconducting magnets. This is a big challenge, because curved 3–4 T magnets ramped at 1–2 T s−1 are needed for efficient operation. Studies at Toshiba in Japan and at CERN (NIMMS, HITRIplus 2) are underway to prepare prototype magnets.

In addition, modern control systems providing the multiple energy extraction method, which uses several post-accelerations in the same synchrotron cycle, have to be developed to enhance the duty cycle of such machines. A new approach using time-sensitive networking (TSN), the next generation of (real-time) ethernet in industry, will be implemented at HIT (partly within HITRIplus) in the coming years.

However, an open question still exists: Can synchrotrons be used later on for FLASH therapy, as the particle filling is limited by space charge restrictions? In addition, the necessary high dose rates would demand short extraction times (or fast extraction methods) with rapid refilling of the synchrotron and short cycle times (see above). These are all big challenges.

The first proton and ion linac-based facilities

After several years of R&D at international laboratories (CERN, TERA, ENEA, INFN, ANL), the first linac-based proton therapy facilities are under construction and commissioning in the UK (STFC Daresbury) and in Italy. With the use of high-frequency copper structures, designed to achieve a relatively compact solution and high repetition rate operation, linacs will allow the production of beams with fast energy variation (without the need for mechanically moved beam energy degraders), as well as small-emittance beams that are potentially suited for the further development of mini-beam dose-delivery techniques. The shielding around the linac can also be reduced compared to other installations, allowing a more flexible solution that could be installed in existing buildings and other space-restricted settings. Also, dedicated designs for He and C ions are being studied, which would require less power than presently operating synchrotrons and allow for flexible pulsed operation. High-gradient (HG) RF technology as well as high-efficiency klystrons are key developments for the future and further spread of this approach. Furthermore, linac technology is attractive as a booster option to increase the output energy of existing cyclotron-based facilities.

Towards a VHEE RT facility

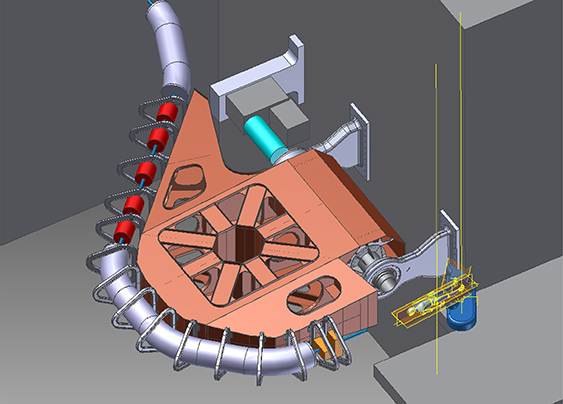

NC RF linacs are the technology used for most VHEE research. The main advantages of linacs are their flexibility and compactness. In the energy range of interest for VHEE applications, different linac designs offer the desired performance and compactness with different degrees of technological maturity. S-band technology is the most mature; HG compact linacs of this type are already available from various industrial partners. C-band and X-band RF linacs are less mature and are mainly constructed in laboratories, with industry helping with machining. Recently, considerable effort has been devoted to their industrialization. The machines for VHEE that are available now or will be in the near future are the eRT6-Oriatron at Centre Hospitalier Universitaire Vaudois (CHUV) in Lausanne; ElectronFlash at Institut Curie (IC) in Orsay; CLARA at Daresbury; AWA at ANL; and CLEAR at CERN (all based on NC RF linacs). A VHEE-FLASH facility based on a CLIC X-band 100 MeV linac is being designed in collaboration with CHUV to treat large, deep-seated tumours in FLASH conditions. The facility is compact enough to fit on a typical hospital campus (figure 5.5). Another proposal along these lines is the upgraded PHASER proposal at SLAC. Finally, ELBE at HZDR and the near-future PITZ at DESY are based on SCRF linac technology.

Figure 5.5. FLASH facility cartoon for CHUV—Lausanne.

Recent advances in high-gradient RF structures, where more than 100 MeV m−1 is now achievable in the lab environment, are transforming the landscape for VHEE RT. VHEE RT requires beam energies between 50 and 200 MeV, improved dose conformity, and scaling to higher dose rates; in the case of FLASH-RT, up to 50 Gy s−1 is needed. Novel high-gradient technologies could enable ultra-compact structures with higher repetition rates and higher currents. A global R&D effort is being made by major accelerator laboratories and industry partners, focused on two aspects: material origin and purity, surface treatments, and manufacturing technology on the one hand, and the consistency and reproducibility of the test results on the other. Promising R&D directions for the next decade include the distributed coupling accelerator developed at SLAC and the use of cryogenic copper, which is transforming linac design and offering a new frontier in beam brightness, efficiency, and cost. Another approach for the next generation of compact, efficient, and high-performance VHEE accelerators is the use of higher-frequency millimetre waves (∼100 GHz) and higher repetition rates using THz sources.
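
The link between accelerating gradient and machine size can be illustrated with a back-of-the-envelope estimate. The sketch below computes the active accelerating length needed to reach a target electron energy, assuming on-crest acceleration so that an electron gains one MeV per MV of accelerating voltage. The function name and the 25 MV m−1 comparison value are illustrative assumptions; real linacs are longer than this estimate because of fill factor, focusing, and diagnostics.

```python
def active_length_m(target_energy_mev, gradient_mv_per_m,
                    injection_energy_mev=0.0):
    """Active accelerating length for electrons, ignoring fill factor.

    ASSUMPTION: on-crest acceleration, so the energy gain in MeV per metre
    equals the accelerating gradient in MV per metre.
    """
    if gradient_mv_per_m <= 0:
        raise ValueError("gradient must be positive")
    return (target_energy_mev - injection_energy_mev) / gradient_mv_per_m


# VHEE energy range from the text: 50 to 200 MeV.
# 100 MV/m is the high-gradient figure quoted above; 25 MV/m is an
# assumed lower-gradient value used only for comparison.
for gradient in (25.0, 100.0):
    for energy in (50.0, 100.0, 200.0):
        length = active_length_m(energy, gradient)
        print(f"{energy:5.0f} MeV at {gradient:5.0f} MV/m: "
              f"{length:4.1f} m of active structure")
```

At 100 MV m−1 the full VHEE range can be covered with about 2 m of active structure, which is what makes a hospital-scale installation plausible.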

An important R&D effort has to be made in the next decade to apply these technologies in the medical industry. If successful, this could be a further step in the quest for compact and efficient VHEE RT in the range of hundreds of MeV. Achieving these aims requires a synergistic and multidisciplinary research effort based on accelerator technology as well as physical and radiobiological comparisons, to see how well VHEE can meet the current assumptions and become a clinical reality (Very High Energy 2020).

Therapy facilities based on laser plasma acceleration

As high-performance lasers have advanced greatly in recent years in terms of power and repetition rate, their use for particle therapy may be possible in the future. The current limit of about 100 MeV for the highest proton energies driven by ultra-intense lasers using Target Normal Sheath Acceleration (TNSA) represents a major milestone on the way to the needed energies. But the broad energy spread of the accelerated protons is still not suitable for treatment modalities. The energies reached for laser-accelerated ions are still an order of magnitude lower and thus far from the necessary values. The very short dose peaks may be attractive for FLASH therapy, but then the repetition rate of petawatt lasers would have to reach 100 Hz or more, which is not the case today. In addition, the target configuration has to withstand this high load on a long-term basis, as a therapy facility runs several thousand hours a year. Furthermore, the reliability of a laser-based proton or ion accelerator must reach 98% or more to be of practical use in a medical facility. Nevertheless, this technique should be explored intensively in the next decade to identify its long-term potential.

Concerning VHEE, there is an intense R&D effort to apply LPA in the next generation of VHEE-RT facilities. The major challenge for the LPA technique is the beam quality, reproducibility, and reliability needed for RT applications. This R&D is being carried out in facilities such as DRACO at ELBE at Helmholtz-Zentrum Dresden-Rossendorf (HZDR) and the Laboratoire d'Optique Appliquée (LOA) at Institut Polytechnique de Paris (IPP), where a new beamline dedicated to VHEE medical applications, known as IDRA, is being constructed. The new beamline will provide stable experimental conditions for radiobiology and dosimetry R&D (Very High Energy 2020).

A wide international R&D programme will be needed in the next decade in order to convert these 'dream' facilities into reality; in particular, we highlight the role of the EU network EUPRAXIA (http://www.eupraxia-project.eu/).

5.3. Bionics and robotics

5.3.1. Bioinspired micro- and nanorobotics

Friedrich Simmel1

1Technische Universität München, Garching, Germany

5.3.1.1. General overview

Robotic systems transform the way we work and live, and will continue to do so in the future. At the macroscopic scale, robots greatly speed up and enhance manufacturing processes, provide assistance in diverse areas such as healthcare or environmental remediation, and perform robustly in environments that are inaccessible, too harsh, or too dangerous for humans. In the lab they can, in principle, perform large numbers of experiments in parallel, reproducibly and without getting tired. This supports, for example, the search for new chemical compounds in combinatorial approaches, and can also generate the large datasets required by data-hungry machine learning techniques.

Robotic systems are 'reprogrammable multifunctional manipulators' and typically comprise sensors and actuators connected to and coordinated by an information-processing unit. Sensors provide information about the environment, which is evaluated by a computer and then used to decide on the necessary actions—which often means mechanical motion of some sort. At a macroscopic scale, a wide range of sensors, electromechanical components, and powerful—potentially networked—electronic computers are available to realize robotic systems, which are essentially all powered by electricity. Is it possible to realize robotic functions also at the micro- or even nanoscale, where we have to work with molecular components, and on-board electronics is not available?

In fact, over the past years researchers have begun to work on the development of 'molecular robotic systems', in which sensors, computers, and actuators are integrated within molecular-scale systems. Among the many possible applications for molecular-scale machinery and robots are, most prominently, the generation of nanomedical robots that autonomously detect and cure diseases at the earliest stages, and the generation of molecular assembly lines that will enable the programmable synthesis of chemical compounds.

Biology as a guide for molecular and cell-scale robotics

Biology has inspired the development of robotic systems at the macroscale in manifold ways—many robot body plans are derived from those of animals (humanoid, dog, insect-like robots, etc), and the movements and actions of these robots resemble those of their living counterparts. Roboticists are concerned with 'motion planning', 'robotic cognition', etc, and therefore ask similar questions as neuroscientists. The field of swarm robotics is inspired by the observation of social behavior in biology.

But also at the cellular and molecular scale we can find inspiration for robotics—cells, like robots, have sensors and actuators; they store and process information, they move, manufacture, interact with other cells, they can even self-replicate (which robots cannot, so far). To name but a few, specific examples for biological functions that are of direct relevance for molecular robotics are protein expression, molecular motors, bacterial swimming and swarming, chemotaxis, cell shape changes and the cytoskeleton, cell-cell communication, the immune system, muscle function, etc.

What's important is that biology is a very different 'technology' than electromechanics and electronic computers—biological systems are self-organized chemical systems far from thermal equilibrium. If we want to build bioinspired robots at this small scale, we will have to apply other principles than those developed for macroscale robotics.

From molecular machines to robots

Over the past decades, one of the major topics in biophysics (and in supramolecular chemistry as well) was the study of molecular machines and motors. Research in this field has clarified how machines operate at the nanoscale and how they differ from macroscopic machines. The small size of the machines changes everything—these machines operate in a storm of Brownian motion, in which the forces they are able to generate are small compared to the thermal forces. Motion sometimes is achieved via a ratchet mechanism that utilizes and rectifies thermal motion (which is only possible out of equilibrium); in some cases it also involves a power stroke, in which a chemical reaction (ATP hydrolysis) effects a conformational change in the machine or motor, biasing its movement in one direction.

Research on biological molecular machines informs molecular robotics in several ways. First, these machines have taught us how they work (at least in principle), and have given examples for what they can achieve (e.g., transport molecules from point A to B, synthesize molecules, exert forces, pump ions/molecules across membranes, etc). They also have indicated speeds (μm s−1) and forces (piconewton) that can be achieved with molecular machinery. Their spatial organization often plays a role in their function (e.g., huge numbers of myosin molecules acting together in muscle, ATP synthase or flagellar motors embedded in membranes, etc) and thus needs to be controlled.
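
To put the piconewton scale into perspective, the work done by a motor over one step can be compared with the thermal energy k_B T. The following is a minimal sketch; the force and step values passed in are assumed, illustrative inputs (only the piconewton order of magnitude comes from the text above), and the function names are hypothetical.

```python
# Illustrative comparison of motor work with thermal energy.
# Force and step size are assumed, order-of-magnitude inputs.

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def thermal_energy_pn_nm(temperature_k: float = 300.0) -> float:
    """Return k_B*T in pN*nm (1 pN*nm = 1e-21 J)."""
    return K_B * temperature_k / 1e-21


def work_in_kt(force_pn: float, step_nm: float, temperature_k: float = 300.0) -> float:
    """Work done by a constant force over one step, in units of k_B*T."""
    return force_pn * step_nm / thermal_energy_pn_nm(temperature_k)


if __name__ == "__main__":
    print(f"k_B*T at 300 K: {thermal_energy_pn_nm():.2f} pN*nm")
    # Two assumed (force, step) pairs: one below and one above the thermal scale.
    for force_pn, step_nm in [(1.0, 1.0), (5.0, 8.0)]:
        ratio = work_in_kt(force_pn, step_nm)
        print(f"{force_pn} pN over {step_nm} nm = {ratio:.1f} k_B*T")
```

With these assumed numbers, a 1 pN force acting over 1 nm delivers less than one k_B T, which illustrates why motion at this scale is dominated by thermal fluctuations unless each step is coupled to an energy input of several k_B T.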

Even if the construction of powerful synthetic molecular machines will not succeed in the near future, experimental work in biology and biophysics has provided protocols that allow the extraction, purification, and chemical or genetic modification of biological machines and their operation in a non-biological context. Many researchers have already started to harness biological motors such as kinesin and myosin in an artificial context (e.g., for molecular transport, biosensing, agent-based computing, as active components of synthetic cell-like structures or synthetic muscles). Other molecular machines such as ATP synthase have been used to power biochemical reactions within synthetic cells.

But then, not every machine or motor should be called a robot. What we expect from a molecular robot is the programmable execution of multiple and more complex tasks (as opposed to non-programmable, repeated execution of always the same task), potentially with some sort of decision-making or context-dependence. This likely will require the combination of multiple molecular components into a consistent system that can be continuously operated or driven out of equilibrium. This requirement also defines one of the major challenges for the field, namely systems integration of molecular machines and other components to perform useful tasks, which also comes with challenges for energy supply and interfacing with the environment or non-biological components.

DNA-based robots

DNA molecules turn out to be ideal to experimentally explore ideas in nanoscale biomolecular robotics. DNA intrinsically is an information-encoding molecule, based on which a wide variety of schemes for DNA-based molecular computing have already been developed. Further, DNA nanotechnology—notably the so-called 'DNA origami' technique—has enabled the sequence-programmable self-assembly of almost arbitrarily shaped molecular objects. Various chemical and physical mechanisms have been employed to switch DNA-based molecular objects between different conformations, and to realize linear and rotary molecular motors. Thus, in principle, all the major functional components of a robotic system—sensors, actuators, and computers—can be realized with DNA alone.

As mentioned, in order to realize robot-like systems, these separate functions have to be integrated into consistent multifunctional systems. However, only a few experimental examples have convincingly demonstrated such integration so far. In one example by Nadrian Seeman and coworkers, a 'molecular assembly line' was shown to be capable of programmable assembly of metallic nanoparticles by a molecular walker. The walker could collect nanoparticles from three assembly stations, which were controlled to either present a nanoparticle or not. This resulted in a total of 2³ = 8 different assemblies that could be 'programmably' realized with the system. In the context of nanomedicine, origami-based molecular containers have been realized that open up only when certain conditions (e.g., the presence of certain molecules on the surface of cells) are met. The containers can then present previously hidden molecules that trigger signaling cascades in the cells, or release drugs. This also exemplifies the two main fields of application that have been envisioned for DNA robots: programmable molecular synthesis with molecular 'assembly lines', and the delivery of drugs by nanomedical robots.
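
The counting behind the assembly line can be sketched in a few lines: three stations that each either present a nanoparticle or not give 2 × 2 × 2 possible cargo patterns. The station labels and the function name below are hypothetical and serve only as an illustration.

```python
from itertools import product

# Hypothetical labels for the three assembly stations.
STATIONS = ("station_1", "station_2", "station_3")


def enumerate_assemblies(stations=STATIONS):
    """List every cargo combination for stations that are each either on or off."""
    outcomes = []
    for pattern in product((False, True), repeat=len(stations)):
        # Keep the names of the stations that present a nanoparticle.
        collected = tuple(name for name, on in zip(stations, pattern) if on)
        outcomes.append(collected)
    return outcomes


if __name__ == "__main__":
    assemblies = enumerate_assemblies()
    print(len(assemblies), "possible assemblies")
    for cargo in assemblies:
        print(cargo if cargo else "(no cargo)")
```

The number of outcomes doubles with every additional on/off station, so the 'program' of such a system is simply the on/off pattern of its stations.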

While these prototypes are extremely promising, many challenges remain, and some of these probably also pertain to nanorobots realized with molecules other than DNA. First, the information-processing capabilities of individual molecular structures are quite limited: essentially, they are based on switching between a few distinct states (i.e., states of similar energy that are separated by a 'high enough' activation barrier), which means that their computational power should be similar to that of finite state machines. Second, current instantiations of DNA robots are quite slow (movements with speeds of nm s−1 rather than μm s−1), and do not allow fast operation or response to changes in the environment. Third, molecular robots are, of course, small, which poses a problem in the many instances where we might want to integrate them into larger systems, let them move across larger length scales, and operate many of them in parallel.
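
What 'computational power similar to that of finite state machines' means can be illustrated with a toy model. The sketch below describes a hypothetical container with two molecular locks that opens only after both keys have been seen; the state names, input names, and transition table are assumptions made for illustration and do not describe a specific experiment.

```python
# Hypothetical finite-state description of a two-lock molecular container.
# Each entry maps (current state, molecular input) to the next state.
TRANSITIONS = {
    ("closed", "key_a"): "lock_a_open",
    ("closed", "key_b"): "lock_b_open",
    ("lock_a_open", "key_b"): "open",
    ("lock_b_open", "key_a"): "open",
}


def run(inputs, state="closed"):
    """Feed a sequence of molecular inputs to the machine and return the final state."""
    for symbol in inputs:
        # Inputs with no matching transition leave the state unchanged.
        state = TRANSITIONS.get((state, symbol), state)
    return state


if __name__ == "__main__":
    print(run(["key_a"]))           # only one lock released
    print(run(["key_a", "key_b"]))  # both locks released
```

The whole 'program' is the transition table. There is no memory beyond the current state, which is the limitation the text refers to.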

Active systems

One of the major visions in bioinspired nano- and microrobotics is the realization of autonomous behaviors, which, as a subtask, involves autonomous motion. In this context, over the past decade there has been huge interest in the realization and study of active matter systems, which include self-propelling colloidal particles, and active biopolymer gels actuated by ATP-consuming molecular motors. In contrast to other, more conventional approaches based on manipulation by external magnetic or electric fields, such systems promise to move 'by themselves' and also display interesting behaviors such as chemotaxis or swarming.

As before, autonomously moving particles or compartments alone will not make a robot, and again the challenge will be to integrate such active behavior with other functions. For instance, it would be desirable to find ways to control and program active behavior: the output of a sensor module could be used to control a physicochemical parameter that is important for movement. Active particles that move in chemical gradients need to be asymmetric, and potentially this asymmetry (or some symmetry-breaking event) could be influenced by a decision-making molecular circuit. Another challenge will be to find the 'right chemistry' that allows active processes and other robotic modules to operate under realistic environmental conditions (e.g., inside a living organism).

Collective dynamics and swarms

If single nanorobots are unavoidably slow and rather dumb, maybe a large collection of such units can do better? In order to overcome their limitations, a conceivable strategy is to couple large numbers of robots via some physical or chemical interaction and let them move, compute, and operate collectively. First steps in this direction have been taken by emulating population-based decision-making processes such as the 'quorum sensing' phenomenon known from bacteria, or swarm-like movement of microswimmers. Ideally, for a robotic system one would like to be able to couple such collective behaviors to well-defined environmental inputs and functional outputs (e.g., if there is light, self-organize into a swarm, move towards the light source, and release or collect molecules).

There are various challenges associated with these ideas. How can one program the behavior of a swarm? As swarming depends on the interactions between the constituting particles/robots and their density, one could think of changing these control parameters in response to an external input. 'Programming' the behavior of such dynamical systems would mean to choose between different types of behaviors that are realized in different regions of their phase space. Potentially, the behavior of a whole collection of particles could be influenced by a single or a few particles (leader particles) and programming the swarm would amount to programming or selecting these leaders.
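
The role of density as a control parameter can be illustrated with a toy quorum-sensing model. The sketch assumes a well-mixed volume in which every robot emits a signal molecule at a constant rate and the signal decays with first-order kinetics; all parameter names, default values, and the threshold are hypothetical.

```python
def signal_concentration(n_robots, volume, emission_rate, decay_rate):
    """Steady-state signal level for constant emission and first-order decay.

    Solves dc/dt = n * emission_rate / volume - decay_rate * c = 0 for c.
    """
    return n_robots * emission_rate / (volume * decay_rate)


def quorum_reached(n_robots, volume, emission_rate=1.0, decay_rate=1.0, threshold=50.0):
    """Return True when the shared signal exceeds the (assumed) switching threshold."""
    return signal_concentration(n_robots, volume, emission_rate, decay_rate) >= threshold


if __name__ == "__main__":
    # Same number of robots, different volumes: only the dense population switches.
    for volume in (1.0, 4.0):
        print(volume, quorum_reached(n_robots=100, volume=volume))
```

In this picture, 'programming' the collective amounts to moving the population across the threshold, either by changing its density or by letting an external input modify the emission rate or the threshold.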

Cells as robots

As mentioned above, biological cells really behave a little like microscale robots. Biology has tackled the 'systems integration' challenge and realized out-of-equilibrium systems, in which various functionalities play together, behave in a context-dependent manner, and which are controlled by genetic programs. From a robotics perspective we can therefore ask whether we can (i) build synthetic systems that imitate cells but perform novel functions and thus act as cell-scale soft robots, or (ii) engineer extant cells to become more like robots?

Essentially the same approaches to engineer biological systems are pursued in synthetic biology, and they come with the same challenges. Regarding the first ('bottom-up') approach, putting together all the necessary parts to generate a synthetic living system is yet another systems engineering challenge. In order to realize synthetic cells, metabolic processes need to be compartmentalized and coupled to information processing, potentially growth, movement, division, etc, which has not succeeded so far (cf the separate EPS challenge section 4.4). The second approach circumvents the challenge of realizing a consistent multifunctional molecular system, but engineering of extant cells is difficult due to the sheer complexity of these systems. Engineered modules put additional load on a cell (whose exclusive goal is to self-sustain, maybe grow and divide), which compromises its fitness, and they also often suffer from unexpected interactions with other cellular components (also known as the 'circuit-chassis problem' in synthetic biology).

Power supply

A major issue that has to be tackled for all of the approaches described above is power supply. How are we going to drive the systems continuously to generate robotic behaviors? Cells come with their own metabolism, which means that cell-based robots would simply have to be fed in the same way as cell or tissue cultures. In the absence of a metabolism, however, molecular robotic systems driven by more complex chemical fuels such as ATP or nucleic acids probably will need to be supplied with these fuels using fluidics. When operation inside of a biological organism is desired, biologically available high-energy molecules might be used as fuels.

In the context of nano- and microrobotics, external driving with magnetic or electric fields, light irradiation, or heating is heavily investigated. Here one challenge is to convert these globally applied inputs into local actions, potentially by some local amplification mechanism (e.g., plasmonic field enhancement) and/or by combining actuation with molecular recognition or computation that leads to action only when certain conditions are met. So far, in most cases externally supplied energy was used for mechanical actuation (e.g., motion or opening/closing of containers), but only rarely to power complex behaviors or information processing.

In some cases it is not yet clear whether the energy balance will work out—depending on the mechanism employed and the efficiency of the robots, it may not be possible to deliver enough power to small systems to enable movement or more complex behaviors in the presence of overwhelming Brownian motion and other small-size effects. For instance, rotational diffusion of nanoparticles can be too fast to allow for directional movement, energy dissipation in aqueous environments may be too fast to heat up nanoscale volumes, etc.
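
The statement about rotational diffusion can be made quantitative with the Stokes-Einstein-Debye relation for a sphere, D_r = k_B T / (8 π η r³). The sketch below is only an estimate: the viscosity (roughly that of water), the temperature, the example radii, and the use of 1/D_r as the characteristic reorientation time are all assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K


def rotational_diffusion(radius_m, temperature_k=300.0, viscosity_pa_s=1.0e-3):
    """Stokes-Einstein-Debye rotational diffusion coefficient of a sphere, in 1/s."""
    return K_B * temperature_k / (8.0 * math.pi * viscosity_pa_s * radius_m ** 3)


def reorientation_time(radius_m, temperature_k=300.0, viscosity_pa_s=1.0e-3):
    """Characteristic time 1/D_r after which the particle has lost its heading."""
    return 1.0 / rotational_diffusion(radius_m, temperature_k, viscosity_pa_s)


if __name__ == "__main__":
    for radius_m in (10e-9, 100e-9, 1e-6):
        tau = reorientation_time(radius_m)
        print(f"radius {radius_m:.0e} m: heading persists for about {tau:.2e} s")
```

Because the time scales with r³, a particle of 10 nm radius loses its orientation a million times faster than one of 1 μm radius, which is why directed self-propulsion becomes so difficult for the smallest systems.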

Hybrid systems

In light of the limitations in our ability to generate autonomous biomolecular robots with similar capabilities as macroscopic robots, a realistic and potentially powerful approach will be to focus on hybrid systems, in which non-autonomous molecular systems are combined with already established robotic (electromechanical) systems. Such an approach would combine the advantages of the different technologies involved—bionanotechnology and synthetic biology on the one hand, and electronic computing and electromechanical actuation on the other. For instance, it will be very hard if not impossible to outcompete electronic computers using the limited capabilities of molecular systems alone. On the other hand, biomolecular systems and/or cells are at the right scale and 'speak the right language' to interact with other molecular/biological systems.

It is obvious that one of the major challenges for this approach is interfacing—finding effective ways to transduce biological into electronic signals, and vice versa. In one direction this coincides with the well-known challenges involved in biosensing, for which a biological signal (the presence of biomolecules) needs to be converted into electrons or photons (which also can be further converted into electrons). In the robotics context, an additional requirement will be the speed of the sensing event, which has to be quick enough to allow responding to changes in the environment in which the robot operates.

In the other signaling direction, efforts have been made to control molecular machines with light, electric, or magnetic fields, which enables potentially fast and computer-controlled external manipulation of these systems. Also various attempts have been made to influence the behavior of cells using external stimuli, notably in areas such as neuroelectronics or optogenetics. Similar approaches might be adopted to control bio-based micro- and nanorobots.

Once a bidirectional biointerface has been successfully established, one can imagine hybrid robotic systems in which computer-controlled signals direct the behavior of the biomolecular part of the robot, and sensory information is fed back to the computer, enabling the implementation of feedback or more complex control mechanisms. In this approach, micro- and nanorobots will be all sensors and actuators, with a brain outsourced to an external electronic computer.

Applications envisioned for micro- and nanorobotics

Micro- and nanorobots will be used when a direct physical interaction with the molecular or cellular world is required. As already mentioned above, the main application envisioned for such robotic systems will be in nanomedicine. One instantiation of nanomedical robots is the advanced delivery vehicle that can sense its environment, release drugs on demand, or stimulate cell-signaling events. Such vehicles may potentially be equipped with simple information-processing capabilities that can integrate more complex sensory information (e.g., to evaluate the presence of a certain tissue, cell type, and thus location in the body), and which may also be used to evaluate diagnostic rules based on this information (such as 'if condition X is met, bind to receptor Y, release compound Z', etc). Given the limited capabilities of small-scale systems, it is not clear how programmable such robotic devices will be. It is quite conceivable, however, to come up with modular approaches, in which the same basic chassis is modified with different sensors and actuators, depending on the specific application. Autonomous robots will have to find their location by themselves, which for some applications may be achieved by circulation and targeted localization in the organism. Alternatively, hybrid approaches are conceivable that allow for active control from the outside (e.g., by magnetic or laser manipulation, depending, of course, on the penetration depth of these stimuli in living tissue). There are many additional challenges for such devices, which are similar to those for conventional drugs (e.g., degradation, allergenicity, dose, circulation time, etc).

Apart from nanomedical robots, for which the first examples are already emerging, a wide range of applications can be envisioned in biomaterials and hybrid robotics. Hybrid robots could use a biomolecular front end that acts as sensor for (bio)chemicals and actuator that allows the release or presentation of molecules (here the overall robot would not be microscale). Potentially, surfaces or soft bulk materials (such as gels) could be modified with robotic devices, resulting in novel materials that can be programmed and change their properties in response to environmental signals in manifold ways (resulting in materials that are smarter than 'smart materials').

One of the most fascinating applications would be programmable, molecular assembly lines. Rather than aiming for a universal assembler, a more modest and achievable goal is the programmable assembly of a finite number of possible assembly outcomes starting from a defined set of components—similar to the DNA-based molecular assembly line mentioned above. This is not unlike a macroscopic assembly line, which is optimized for the production of one defined product with optional variations. Notably, biological processes such as RNA or protein synthesis already look a little like programmable assembly: RNA polymerase and ribosomes read off instructions from a molecular tape (DNA and mRNA, respectively), and use them to assemble other macromolecules. Maybe similar systems can be conceived that allow sequence-programmable synthesis of non-biological products.

In any case, molecular assembly means control over chemical reactions, which also means that we cannot ignore basic chemical rules and simply synthesize anything we want. Another issue is the scale of the process: in order to synthesize appreciable quantities of molecules or molecular assemblies, large numbers of assembly robots will have to be embedded into the active medium of a synthesis machine (similar, maybe, to a DNA or peptide synthesizer), where they can synthesize larger quantities in parallel.

5.3.1.2. Challenges and opportunities

In the sections above, a wide range of challenges and opportunities associated with the development of future bioinspired micro- and nanorobots were mentioned, which are summarized more concisely here. A variety of challenges relate to the way robotic functions will be implemented in the first place:

- Some robotic functions will be realizable with nanoscale systems (composed of supramolecular assemblies, DNA structures, biological motors); others will require larger cell-like systems into which multiple functions are integrated. It will be important to understand the size dependence of these functions and clarify what can be achieved at which length scale, and with which components. Systems integration—combining the components into consistent and functioning systems—will be the major challenge for bioinspired small-scale robotics.

- Autonomous behavior versus external control. How complex do robotic systems need to be to act autonomously—and is autonomy required? For many applications, external control will be a more feasible and powerful approach, which also benefits from established macroscale technologies.

- Hybrid robots that combine the advantages of biological systems and traditional robotics seem promising—a major challenge is the realization of efficient interfaces for signal transduction and actuation between biological and conventional robotics.

- Robotic systems should perform useful tasks, which means they need to be operated under realistic conditions. Depending on the field of application, various practical issues will have to be tackled: operation inside a living organism and operation on the surface of a sensor chip come with very different requirements.

Many physical challenges arise from the question of whether we can create something like a robot at such small scales at all:

- Can small robots sense and respond to small numbers of molecules or photons? There are biological examples of extremely sensitive sensors that require special molecular architectures and amplification processes, which may guide the design of microrobotic sensors. A related question that has been studied extensively in biology is chemotaxis, where cells sense the presence of a concentration gradient.

- What forces can be usefully applied by small robots? Biological molecular motors are known to generate piconewton forces, and the same magnitude is also expected from artificial motors. However, synthetic molecular motors have not yet been used to perform any useful task.

- How fast can a nano- or microrobot move or act? Do we need to achieve directional movement or will diffusion be sufficient? Of course, this again will depend on the application. Interestingly, in biology we do not find molecular motors for transport of molecules in bacteria (diffusion is sufficient), but in the much larger eukaryotic cells. Further, the smallest bacteria tend to be non-motile—apparently motility only pays off above a certain size. There are various further issues such as the dominance of Brownian effects at the nanoscale.

- How much energy is required to perform robotic functions—and how will it be supplied? Should nano- or microrobots be autonomously driven by chemical reactions, or actuated externally via physical stimuli?

- What is the computational power of small robots? How much information can be stored, how fast can it be processed? As the computing power of small systems necessarily is very limited, nanorobotics means 'robotics without a brain'. Nanorobots will tend to have 'embodied intelligence', in which input-output relationships between sensor and actuator functions are custom-made and hardwired rather than freely programmable.

- Can one realize collective behaviors of large numbers of nanorobots—and how can one 'program' such systems?
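
The question raised above of whether diffusion is sufficient or directed movement is needed can be explored with a simple estimate. The sketch compares the time for the one-dimensional root-mean-square displacement to reach a distance L, t = L²/(2D), with the time L/v needed by a motor. The diffusion coefficient of 10 μm² s−1 is an assumed, illustrative value, and the motor speed of 1 μm s−1 reflects the order of magnitude quoted earlier in this section.

```python
def diffusion_time(distance_um, diffusion_um2_per_s):
    """Time for the 1D root-mean-square displacement to reach the distance: L^2 / (2 D)."""
    return distance_um ** 2 / (2.0 * diffusion_um2_per_s)


def transport_time(distance_um, speed_um_per_s):
    """Time for directed transport at constant speed: L / v."""
    return distance_um / speed_um_per_s


def crossover_length(diffusion_um2_per_s, speed_um_per_s):
    """Distance above which directed transport is faster than diffusion: 2 D / v."""
    return 2.0 * diffusion_um2_per_s / speed_um_per_s


if __name__ == "__main__":
    D = 10.0  # assumed diffusion coefficient in um^2/s
    v = 1.0   # motor speed in um/s (order of magnitude from the text)
    for distance_um in (1.0, 20.0, 100.0):
        print(distance_um, diffusion_time(distance_um, D), transport_time(distance_um, v))
    print("crossover length in um:", crossover_length(D, v))
```

Under these assumptions diffusion wins over a bacterium-sized distance of about 1 μm, while directed transport wins over distances of tens of micrometres and beyond. This is consistent with motor-driven transport being found in the larger eukaryotic cells.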

Ultimately, the development of micro- and nanorobotics will lead to advancements in many application areas:

- Nanomedical robots operating in living organisms will be able to deliver potent drugs at the right spot, and in a context-dependent manner. They can also act as sentinels, permanently monitoring, recording, and reporting the presence of disease indicators.

- Small robots will extend the capabilities and enhance the sensory spectrum of larger robots. They will constitute the molecular interface of hybrid robotic systems, which enables bidirectional communication with biological systems. Such systems can be integrated, for example, with mobile robotic units that perform environmental sensing and monitoring.

- Robotic components extend the functionality of 'smart' materials and surfaces. Embedding robotic functions into materials will enhance their ability to adjust to their environment and change shape and mechanical properties in response to environmental cues. Conceivably, such materials will have something akin to a metabolism that supports these functions and enables continuous operation. Surfaces coated with nanorobotic components will have enhanced sensor properties; they may be able to actively transport matter, take up or release molecules to the surroundings, and change their structure and physicochemical properties.

- Not the least, work on bioinspired small robotic systems will elucidate physical limits of sensors and actuators, and will clarify what it takes to display intelligent (or seemingly intelligent) behavior; it will also result in methodologies that allow programming the behavior of dynamical, out-of-equilibrium systems. Ultimately, the realization of bioinspired robots may also contribute to a better understanding of complex biological phenomena and behaviors.

5.3.2. Robotics in healthcare

Darwin Caldwell1

1Instituto Italiano di Tecnologia, Genoa, Italy

5.3.2.1. Increasing demands for healthcare

The provision of appropriate healthcare is unquestionably a major worldwide societal challenge that impacts all nations and peoples. The growth in medical robots, driven by advances in technologies such as actuation, sensors, control theory, materials, AI, computation, and medical imaging, and supported by increased doctor/patient acceptance, may be a solution to a number of these convergent problems, which include:

- Aging society and the increasing burden of dementia. This has been recognized in developed countries for many years, but is now becoming increasingly common globally. According to the OECD around 2% of the global population is currently over 80, and this is expected to reach 4% by 2050. In Europe, people over 80 already represent around 5% of the population and are expected to reach 11% by 2050 (OECD 2020).

- Increased healthcare spending and costs. According to the OECD, in almost every country spending on healthcare consistently outstrips GDP growth. It has risen from 6% in 2010 to a predicted 9% of GDP by 2030 and will continue to increase to 14% by 2060 (https://www.oecd.org/health/healthcarecostsunsustainableinadvancedeconomieswithoutreform.htm) (Moses et al 2013). The per capita health spending across OECD countries grew in real terms by an average of 4.1% annually over the 10-year period between 1997 and 2007. By comparison, average economic growth over this period was 2.6%, resulting in an increasing share of the economy being devoted to health in most countries (OECD 2009).

- Shortage of workers in medical and social care professions. A relative decrease in the proportion of healthcare workers is increasing the demands on the active workforce, which is itself ageing in many countries. Data from the World Health Organization (WHO) indicates that the average age of nurses is over 40 in several European countries. Furthermore, the WHO states that the global health worker shortfall is already over 4 million (WHO 2008).

- The rapidly growing population and the need to provide basic medical needs in developing countries.

- Changing family structures. Family sizes are much smaller than in the past and the percentage of elderly people living alone is rapidly increasing.

- Increased acceptance of technology (Abou Allaban et al 2020a). Although there is among many groups (particularly elderly users) an ambivalence about the use of robot technology and a preference for human touch, there is little hostility, and among younger people who are more familiar with computers, AI, smart technology, and advanced communications, there is a growing acceptance of the benefits of robotic and related technologies (Wu et al 2014). This increasing acceptance of, and indeed in some instances reliance on, robots has been accelerated by COVID-19 (Zemmar et al 2020).

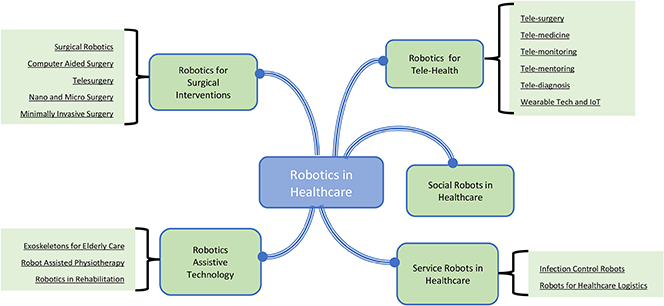

Against all of these demands and counter demands there is a widespread belief that medical and healthcare robotics is essential to transform all aspects of medicine—from surgical intervention to targeted therapy, rehabilitation, and hospital automation (figure 5.10).

Figure 5.10. Uses of robotics in healthcare.

5.3.2.2. Robotics and sustainable healthcare (Yang et al 2018)

As the demands on health systems grow, it is perhaps inevitable that we should turn to technology and particularly robotics, both to provide the extra capacity/productivity that will be needed by aging and rapidly increasing populations, and also to continue to enhance and improve the quality of life provided by the healthcare systems. Although robots and robotic systems represent a significant investment cost, experience in other sectors, such as manufacturing, has demonstrated that the use of robotic technology can also offer significant savings and increases in efficiency/productivity, while also contributing to the establishment of high-quality, sustainable, and affordable healthcare systems. Important application domains that could benefit include medical training, rehabilitation, prosthetics, surgery, diagnosis, and physical and social assistance to disabled and elderly people (Stahl et al 2016, Wang et al 2021).

5.3.2.3. Robotics for medical interventions (Mattos et al 2016)

Surgical robotics

Robotic surgery involves using a computer-controlled motorised manipulator/arm to which small instruments are attached. Using artificial sensing, this arm can be programmed to move and position tools to carry out surgical tasks. The surgeon may or may not play a direct role during the procedure. The history of surgical robotics dates back almost 40 years and arose from a convergence of several key advances in technology (Satava 2002).

Minimally invasive surgery (MIS): Driven by the potential to create smaller incisions, with lower risks of infection, reduced pain, less blood loss, shorter hospital stays, faster recovery, and better cosmesis, the first laparoscopic cholecystectomy was performed in the mid-1980s (Antoniou et al 2015). The benefits of this approach over conventional open surgery quickly became apparent to surgeons, patients, and healthcare providers. However, although the potential benefits were clear, there were also several technical and human-factors problems. These included:

- Poor visual access and depth perception, due to the quality of the 2D cameras and displays.

- Difficult hand-eye coordination due to the fulcrum effect of using long instruments inserted through a cannula. This produced motion reversals, and a scaled and limited range of motion that was dependent on the insertion depth of the tooling.

- Little or no haptic feedback and a reduced number of degrees of freedom and dexterity.

- Camera instability and loss of spatial awareness within the body cavity (the 'which way is up' problem).

- Increased transmission of physiologic tremors from the surgeon through the long rigid instruments.

As a consequence, when performing MIS a surgeon must master a new and different set of technical and surgical skills compared to performing a conventional procedure.
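The motion reversal and depth-dependent scaling of the fulcrum effect listed above can be illustrated by treating the instrument as a rigid lever pivoting at the incision. The following is a minimal sketch under that small-angle assumption; the function name and the example lengths are illustrative and are not taken from any particular instrument.

```python
def tip_displacement(hand_dx_mm, inside_mm, outside_mm):
    """Sideways tip motion of a rigid instrument pivoting at the incision.

    Assumes a simple lever (small angles, fixed pivot): the two ends move in
    opposite directions, scaled by the ratio of the lever arms.
    """
    if outside_mm <= 0:
        raise ValueError("outside_mm must be positive")
    return -hand_dx_mm * inside_mm / outside_mm


# Hypothetical 300 mm instrument; the hand moves 10 mm to the right.
shallow = tip_displacement(10.0, inside_mm=100.0, outside_mm=200.0)
deep = tip_displacement(10.0, inside_mm=200.0, outside_mm=100.0)
print(shallow, deep)  # -5.0 -20.0
```

The negative sign is the motion reversal, and the change from 5 mm to 20 mm for the same hand movement is the scaling that depends on insertion depth.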

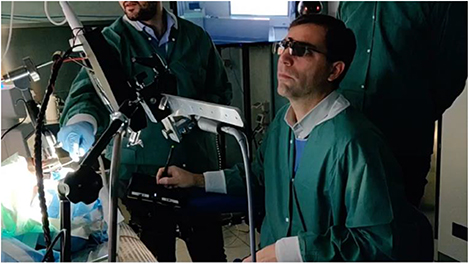

VR, telepresence, and telesurgery: Around the time of the first developments in MIS, NASA was studying options for providing medical care to astronauts. Their research teams were particularly interested in using emerging concepts in virtual reality (VR), haptics, and telepresence. Teams within the US Army (Satava 2002) became aware of this work and were interested in the possibility of decreasing battlefield mortality by bringing the surgeon and operating theatre closer to the wounded soldier (figure 5.11).

Figure 5.11. VR/AR, advanced user interfaces and telecommunications, and robot technology combine to create an enhanced surgical experience.

Robots in surgery: Although the first MIS was only performed in 1987, the history of surgical robotics does in fact slightly pre-date this: Kwoh et al performed neurosurgical biopsies in 1985 (Kwoh et al 1988), and in 1988 Davies et al performed a transurethral resection of the prostate (Davies 2000). While these and other surgical robots were being developed, clinicians working with the various robotics teams realised that surgical robots and concepts in telepresence/telesurgery had the potential to overcome limitations inherent in MIS by:

- Using software to eliminate the fulcrum effect and restore proper hand-eye coordination. At the same time the software made movement and force scaling possible so that large movements or grasp forces at the surgeon's console could be transformed into micro motions and delicate actions inside the patient.

- Increasing dexterity using instruments with flexible wrists (designed to at least partially mimic human wrist action) to give increased degrees of freedom which greatly improves the ability to manipulate tissues.

- Using software filtering to compensate for any surgeon induced tremor, making increasingly more delicate operations possible.

- Improving surgeon comfort by designing dedicated ergonomic consoles/workstations that eliminate the need to twist, turn, or maintain awkward positions for extended periods.
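Two of the software functions in the list above, motion scaling and tremor filtering, can be sketched in a few lines. This is an illustration only: the scale factor, the first-order low-pass filter, and the synthetic 10 Hz tremor signal are all assumptions chosen for the example, and the source does not state which filters real surgical systems use.

```python
import math


def scale_motion(console_delta_mm, scale=0.2):
    """Map a hand movement at the console to a smaller tool movement."""
    return console_delta_mm * scale


def low_pass(samples, alpha=0.02):
    """First-order low-pass (exponential smoothing) over a list of samples.

    Smaller alpha means heavier smoothing. Illustrative stand-in only.
    """
    if not samples:
        return []
    state = samples[0]
    filtered = []
    for x in samples:
        state += alpha * (x - state)
        filtered.append(state)
    return filtered


def sine_wave(amplitude_mm, freq_hz, sample_rate_hz, n_samples):
    """Synthetic oscillation used here as a stand-in for hand tremor."""
    return [
        amplitude_mm * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(n_samples)
    ]


# Assumed values: 0.5 mm tremor at 10 Hz, sampled at 1 kHz for one second.
tremor = sine_wave(0.5, 10.0, 1000.0, 1000)
smoothed = low_pass(tremor)
raw_peak = max(abs(v) for v in tremor[500:])
residual_peak = max(abs(v) for v in smoothed[500:])
print(residual_peak < raw_peak)  # True
```

A filter of this kind also delays the surgeon's intended motion, so in practice the choice of cut-off is a trade-off between tremor suppression and responsiveness.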

Computer-assisted surgery (Buettner et al 2020)

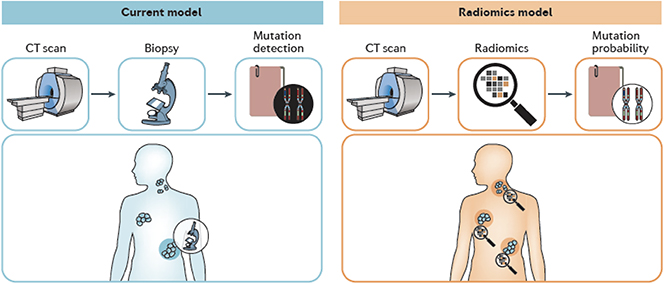

Computer-assisted surgery (CAS) is also on occasion known as image-guided surgery (IGS), computer-aided surgery, computer-assisted intervention, 3D computer surgery, or surgical navigation. It is a broad term for a surgical concept and set of methods in which the motions of the surgical instruments being manipulated by the clinician are tracked and then integrated with intraoperative and/or preoperative images of the patient. These pre/intraoperative images can be produced by a combination of x-ray, computer, and/or other equipment (e.g., medical ultrasound; ionizing techniques such as fluoroscopy, x-ray, and computed tomography (CT); fixed C-arms; or MRI scanners). This information is used either directly or indirectly to safely and precisely navigate to and treat a condition (tumour, vascular malformation, lesion, etc), as demanded by the treatment. This increases the efficiency and accuracy of the procedure, and reduces the risk to nearby critical tissues and organs.

Although CAS can be used in traditional open surgery, the need for and use of CAS, as with robotic surgery, has been driven by advances in MIS, where the minimal access that provides the medical benefits also restricts visualisation of the site and can make it difficult to understand the exact spatial location of the tooling within the body. The key difference between CAS and robotic surgery is the lack of a robot: in CAS the clinician manipulates the instruments directly.

CAS and IGS systems can use different tracking techniques, including mechanical, optical (cameras), ultrasonic, and electromagnetic systems, or some combination of these, to capture, register, and relay the patient's position/anatomy, the surgeon's precise movements relative to the patient, and the motion of the tooling/instruments to a computer, which displays images of the instruments' exact position inside the body on a monitor (2D or 3D). It is also possible to relay these images to virtual and augmented reality (VR/AR) headsets. This imaging and display is usually (and ideally) performed in real time, although there can be delays of seconds depending on the modality and application. The ability to track the location of the instruments within the body is often likened to GPS.
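At its core, relating a tracked instrument position to the patient's images is a coordinate transformation. The sketch below assumes that registration has already been solved and can be expressed as a rigid transform (here a rotation about one axis plus a translation). The numbers and function names are invented for illustration and do not describe any specific navigation system.

```python
import math


def make_rigid_transform(yaw_deg, translation_mm):
    """Build a 4x4 homogeneous transform: rotation about z, then translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation_mm
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def to_image_frame(transform, point_mm):
    """Map a point from tracker coordinates into image coordinates."""
    x, y, z = point_mm
    p = (x, y, z, 1.0)
    return tuple(
        sum(transform[row][k] * p[k] for k in range(4)) for row in range(3)
    )


# Hypothetical registration: 90 degree rotation and a (10, 0, 5) mm offset.
registration = make_rigid_transform(90.0, (10.0, 0.0, 5.0))
tip_in_tracker = (1.0, 0.0, 0.0)
tip_in_image = to_image_frame(registration, tip_in_tracker)
print([round(v, 3) for v in tip_in_image])  # [10.0, 1.0, 5.0]
```

In a real system the transform would be estimated from fiducials or surface matching and updated as the patient moves; the sketch covers only the final mapping step.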

CAS and IGS have become the standard of care in providing navigational assistance during many medical procedures involving the brain, spine, pelvis/hip, knee, lung, breast, liver, and prostate, and in otorhinolaryngological, orthopaedic, and cardiovascular procedures. The clinical advantages include decreased intraoperative complications, increased surgeon confidence, improved preoperative planning, more complete surgical dissections, and safer junior physician training/mentoring.

Tele and remote surgery (Choi et al 2018)

Telesurgery allows a surgeon to operate on (or be virtually present with) a remote patient by using a robotic surgical system at the patient's site. This involves teleoperation technologies with real-time, bidirectional information flow: surgical commands must be sent in real time to the remote robot, and everything that is happening at the surgical/patient site must be immediately perceived by the surgeon through visual, auditory, and on occasion haptic feedback. Separation of the surgeon and the patient is already common with most current surgical robots (e.g., the da Vinci Surgical System), where the surgeon sits at an operating console a few metres away from the robot and patient and is linked to it by a dedicated wired connection. Telesurgery, on the other hand, refers to medium- and long-distance teleoperation, with distances of kilometres or even thousands of kilometres between the surgeon and the robot/patient. The connection may be wired (conventional broadband or a dedicated link), wireless, or some combination of the two.

Potential benefits of telesurgery include:

- Eliminating the need for long-distance travel, along with associated costs and risks. This will provide for urgent emergency interventions and expert surgical care in underserved regions such as remote/rural areas, underdeveloped countries, in space, at sea, and on the battlefield.

- Surgical training: Telementoring can enhance the training of novice surgeons and bring new skills to more experienced medics. This could revolutionize surgical education.

- Surgical collaboration: Real-time collaboration between surgeons at different medical centers using shared, simultaneously perceived, high-definition visual feedback.

- Surgical data: Telesurgery focuses on moving data instead of the patient or the surgeon. This data is extremely rich, and includes sensory information, records of the surgical workflow, actions, and decision-making processes. This data can be used to assess surgical quality, or develop AI to enable autonomous surgical supervision, assistance, or even fully robotic surgery.

- Robotic precision: The use of robotic systems increases surgical precision and quality, removing physiologic tremor, reducing adjacent tissue damage, and permitting previously impossibly delicate surgery.

This large separation between the surgeon and surgical robot brings significant medical advantages but also creates many technical challenges, most of which are directly linked to the need to transfer massive amounts of data between both ends of the system with minimal delay and in a safe, secure, and reliable way. Fortunately, advances in data communication, fibre optic broadband, and particularly the increasingly widespread availability of 5G mean that the long-held goal of truly remote telesurgery is now increasingly possible (Acemoglu et al 2020a). This will be explored in the later section on general requirements in Robotics in Tele-Healthcare.
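Data transfer over these distances can never be truly instantaneous, because signal propagation alone sets a lower bound on the delay. As a rough, hedged estimate, the sketch below computes the minimum round-trip time over an optical fibre of a given length. The refractive index of 1.47 is an assumed typical value for silica fibre, and switching, encoding, and processing delays, which add to this figure in practice, are ignored.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
FIBRE_REFRACTIVE_INDEX = 1.47  # assumed typical value for silica fibre


def round_trip_ms(distance_km, refractive_index=FIBRE_REFRACTIVE_INDEX):
    """Minimum there-and-back propagation time over a fibre link, in ms."""
    speed_m_per_s = SPEED_OF_LIGHT_M_PER_S / refractive_index
    one_way_s = distance_km * 1000.0 / speed_m_per_s
    return 2.0 * one_way_s * 1000.0


# Example link lengths, chosen only for illustration.
for km in (10, 1000, 10000):
    print(km, "km:", round(round_trip_ms(km), 2), "ms")
```

Under these assumptions a 1000 km link already costs roughly 10 ms per round trip before any equipment delay is added.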

Micro- and nanomedical robots (Nelson et al 2010, Li et al 2017, Soto et al 2020)

Current medical and surgical robots draw most of their design inspiration from conventional industrial robotics, with modifications to suit the particular requirements of operations in, on, and near tissue. Miniaturization of these robotic platforms, and indeed creation of completely novel, versatile micro- and nanoscale robots, will allow access to remote and hard to reach parts of the body, with the potential to advance medical treatment and diagnosis of patients, through cellular level procedures, and localized diagnosis and treatment. This will result in increased precision and efficiency. These advances will have benefits across domains such as therapy, surgery, diagnosis, and medical imaging.

Medical micro/nanorobots are untethered, small-scale structures capable of performing a pre-programmed task using conventional (electric) and unconventional (chemical, biological) energy sources and actuators to create mechanical actions. Due to their size (sub-mm), they face distinct challenges when compared to large (macroscale) robots: viscous forces dominate over inertial forces, and motion and locomotion are governed by low Reynolds numbers and Brownian motion. Thus, the design requirements, parameters, and operation of these robots are almost unique in the mechanical world, although these features are common in biological systems. Many micro/nanorobots are made of biocompatible materials that can degrade and even disappear upon the completion of their mission.
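The dominance of viscous over inertial forces can be made concrete with the Reynolds number, Re = ρvL/μ. The sketch below compares an assumed 10 µm robot moving at 10 µm/s with a metre-scale object moving at 1 m/s, both in water. The sizes and speeds are illustrative choices and the water properties are approximate.

```python
def reynolds_number(density_kg_m3, speed_m_s, length_m, viscosity_pa_s):
    """Re = rho * v * L / mu: ratio of inertial to viscous forces."""
    return density_kg_m3 * speed_m_s * length_m / viscosity_pa_s


WATER_DENSITY = 1000.0    # kg/m^3, approximate
WATER_VISCOSITY = 1.0e-3  # Pa*s, approximate at room temperature

# Assumed 10 micrometre robot moving at 10 micrometres per second.
micro = reynolds_number(WATER_DENSITY, 10e-6, 10e-6, WATER_VISCOSITY)
# Assumed metre-scale object moving at 1 m/s, for comparison.
macro = reynolds_number(WATER_DENSITY, 1.0, 1.0, WATER_VISCOSITY)
print(f"{micro:.0e} {macro:.0e}")  # 1e-04 1e+06
```

The two regimes differ by about ten orders of magnitude, which is why propulsion strategies that rely on inertia at the macroscale do not carry over to micro/nanorobots.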

Despite the challenges caused by the miniature scale, the potential benefits are significant in areas such as targeted delivery, precision surgery, sensing of biological targets, and detoxification.

Targeted drug delivery—Motile micro/nanorobots have the potential to 'swim' directly to a very specific target site within any part of the body and deliver a precise dosage of a therapeutic payload deep into diseased tissue. This highly directable approach will retain the therapeutic efficacy of the drug/payload while reducing side effects and damage to other tissue.

Surgery—micro/nanorobots could navigate through complex biological media or narrow capillaries to reach regions of the body not accessible by catheters or invasive surgery. At these sites they can be programmed or teleoperated to perform the required intervention (e.g., take biopsy samples or perform simple surgery). The use of tiny robotic surgeons could help reduce invasive surgical procedures, thus reducing patient discomfort and post-operative recovery time.

Medical diagnosis—isolating pathogens or measuring physical properties of tissue in real-time could be a further function performed by micro/nanorobots. This would provide a much more precise diagnosis of disease and/or vital signals. The integration of micro/nanorobots with medical imaging modalities would provide accurate positioning inside the body.

The use of micro- and nanorobots in precision medicine still faces technical, regulatory, and market challenges before their widespread use in clinical settings. Nevertheless, recent translations from proof of concept to in vivo studies demonstrate their potential in the medium to longer term.

Medical capsule robots (MCRs) are smart medical systems that enter the human body through natural orifices (often they are swallowed) or small incisions. They are an already operational form of micro/nanorobot that uses task-specific sensors, data processing, actuation, and wireless communication to perform imaging and drug delivery operations by interacting with the surrounding biological environment. MCRs face a number of challenges such as size (for non-invasive entry they must be less than 1 cm in diameter), power consumption (they must carry on-board batteries), and fail-safe operation (they operate deep within the body). MCR design and development focuses on miniaturization of the electronics and mechanical structure, packaging, and software (Beccani et al 2016).

5.3.2.4. Robotics in tele-health (De Michieli et al 2020)

Tele-healthcare involves the remote (tens of km to thousands of km) connection of the different subjects involved in the healthcare process. This includes the patients, the clinicians, and others. Tele-health systems allow different kinds of interactions: (i) clinician to clinician, (ii) clinician to patient, and (iii) patient to mobile health technology. Each interaction has a different purpose and different requirements.

A common scenario where tele-health can provide an important benefit is when the patient or medic is not able to travel to the clinic, due to distance, cost, poor transportation links, or other external factors. The COVID-19 pandemic has made this scenario even more common (Chang et al 2020, Leochico 2020, Prvu Bettger 2020), yet through the application of tele-medicine technology, medical treatment, assessment, monitoring, or rehabilitation can continue to be provided without the need to come in person to the clinic. This offers important advantages in terms of infection prevention.