From Particle Physics to Medical Applications

Published June 2017

Copyright © IOP Publishing Ltd 2017

Pages 1-1 to 1-21

Abstract

CERN is the world's largest particle physics research laboratory. Since it was established in 1954, it has made an outstanding contribution to our understanding of the fundamental particles and their interactions, and also to the technologies needed to analyse their properties and behaviour. The experimental challenges have pushed the performance of particle accelerators and detectors to the limits of our technical capabilities, and these groundbreaking technologies can also have a significant impact in applications beyond particle physics. In particular, the detectors developed for particle physics have led to improved techniques for medical imaging, while accelerator technologies lie at the heart of the irradiation methods that are widely used for treating cancer. Indeed, many important diagnostic and therapeutic techniques used by healthcare professionals are based either on basic physics principles or the technologies developed to carry out physics research. Ever since the discovery of x-rays by Roentgen in 1895, physics has been instrumental in the development of technologies in the biomedical domain, including the use of ionizing radiation for medical imaging and therapy. Some key examples that are explored in detail in this book include scanners based on positron emission tomography, as well as radiation therapy for cancer treatment. Even the collaborative model of particle physics is proving to be effective in catalysing multidisciplinary research for medical applications, ensuring that pioneering physics research is exploited for the benefit of all.

Introduction: technologies for particle physics

Since its foundation in September 1954, CERN has become the world's largest particle physics laboratory. More than 12 000 scientists now work at the facility, both from CERN's 22 member states and from other countries around the world. The combined creativity of so many people with different nationalities, backgrounds and areas of expertise is crucial for CERN to achieve its mission, which is to reveal the nature of the particles that constitute matter and their interactions. Particle physics seeks to explain the formation and structure of the Universe at its most fundamental level, and how it has evolved since the very beginning.

Alongside its scientific objectives, CERN is an important source of technological innovation and expertise. Advanced particle accelerators, cutting-edge particle detectors and sophisticated computing techniques are the hallmarks of particle physics research, and highly specialized instruments and breakthrough technologies are needed to push their performance beyond the available industrial know-how. It is therefore necessary for researchers at CERN to innovate and invent, developing tools and techniques that will enable them to carry out their scientific mission. And while these technological innovations are crucial building blocks for research in particle physics, they can also be exploited in many other ways that provide a direct benefit to society—including important medical applications, such as imaging and radiation therapy.

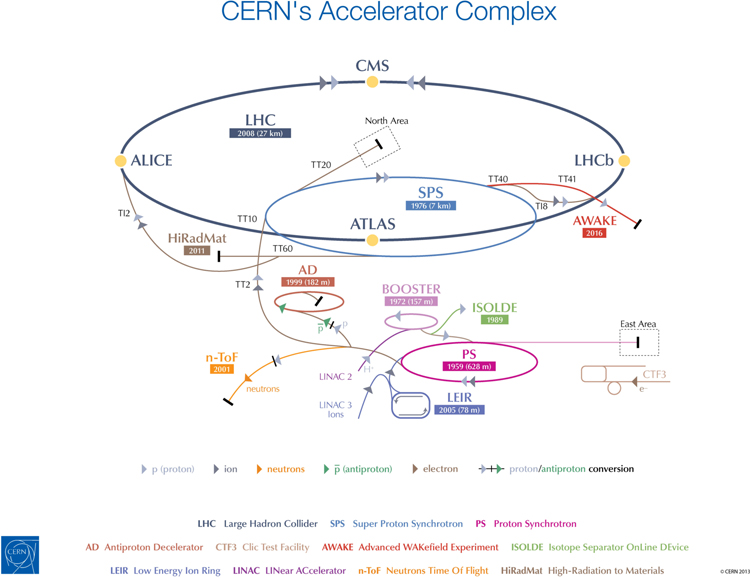

In recent years, the key driver for that technological development has been CERN's Large Hadron Collider (LHC), the flagship machine within the CERN accelerator complex (figure 1). One of the most complex experimental facilities ever constructed, the LHC achieves the highest energies of any particle accelerator in the world. It was built at CERN between 1998 and 2008 through a collaboration between scientists and engineers from more than 100 countries, and is now the largest example of a type of machine that was first built in the 1930s. As both our technical capabilities and our understanding of physics have advanced, the size of particle accelerators has progressively increased to open up the way to new studies and discoveries [1].

Figure 1. The accelerator complex at CERN is a succession of machines that accelerate particles to increasingly high energies. Each machine boosts the energy of a beam of particles before injecting it into the next machine in the sequence. © CERN 2013.

Today, the LHC is used to study the outcomes of collisions between beams of protons accelerated to velocities approaching the speed of light, enabling scientists to confirm the existence of particles predicted by theory and also to discover new ones. These collisions generate a myriad of sub-atomic particles, which are detected and analysed by four separate experiments installed in huge underground caverns at the four collision points on the LHC ring (figure 2). Each of these experiments, which are run by large collaborations of scientists who come from research institutes all over the world, relies on cutting-edge particle detectors and enormous computing resources.

Figure 2. An aerial view of the LHC, showing a section of the accelerator and the four major experiments: ATLAS, CMS, ALICE and LHCb. © CERN 2017.

What distinguishes each experiment from the others is the technology deployed in its detector. The two largest experiments, ATLAS and Compact Muon Solenoid (CMS), have a broad remit to investigate all current topics in particle physics. These two independent detectors exploit different technologies, which is crucial for crosschecking and confirming any new discoveries. By contrast, the other two experiments, A Large Ion Collider Experiment (ALICE) and Large Hadron Collider beauty (LHCb), have detectors that are optimized for specific purposes.

On 4 July 2012, both the ATLAS and CMS collaborations announced that they had observed a new particle consistent with the Higgs boson, the particle that was the final missing piece of what particle physicists refer to as the standard model. This was almost 50 years after the particle had first been predicted in theoretical calculations by Peter Higgs, Robert Brout and François Englert. Shortly afterwards, in October 2013, the Nobel Prize for Physics was awarded jointly to François Englert and Peter Higgs 'for the theoretical discovery of a mechanism that contributes to our understanding of the origin of mass of subatomic particles'.

The work leading to the discovery—what The Economist lauded as 'science's great leap forward' [2]—represented the culmination of decades of effort in accelerator and detector design. Without thousands of people working over many years to conceive, design and build ever more sophisticated tools to investigate the fundamental building blocks of nature, the Nobel Committee could not have made such an award. It is interesting to note that it was not until the discovery of the Higgs boson that François Englert and Peter Higgs met for the first time at CERN (figure 3), although sadly Robert Brout had passed away in 2011.

Figure 3. The Nobel Prize for Physics in 2013 was awarded jointly to François Englert (left) and Peter Higgs (right) 'for the theoretical discovery of a mechanism that contributes to our understanding of the origin of mass of subatomic particles'. © CERN 2013.

While this long-awaited breakthrough was only made possible by the LHC and its experiments, particle physicists believe it is only the beginning of what will be achieved with this machine. The hope is that the LHC will now produce and detect other particles, ones that the standard model does not predict and that might account for phenomena that are not yet understood, such as 'dark matter'. And looking beyond the LHC's achievements in particle physics, the technological advances needed to realize the LHC and its experiments are now driving numerous other developments in many other research fields and applications, including medical imaging and radiation therapy.

Medical applications of particle physics

It is clear that physics, and in particular particle physics, has made a major contribution to the development of instrumentation for biomedical research, diagnosis and therapy. This is particularly, although not exclusively, true for medical imaging and radiotherapy. Indeed, the curative role of ionizing radiation in the treatment of cancers has been exploited ever since the pioneering work of physicists and medical doctors led to the discovery of x-rays and other types of radiation at the end of the 19th century.

Accelerators have been used for cancer therapy since 1953, when the first linear accelerator to be purpose-built for medical applications started to treat patients at the Hammersmith Hospital in London [3]. Meanwhile, the technologies developed for particle detectors have played a major role in the evolution of medical imaging, as epitomized by the development of positron emission tomography (PET), which exploits many of the tools originally developed to detect subatomic particles. Data handling and simulation tools developed by physicists have also found use in the biomedical field, for example in establishing personalized treatment plans for cancer patients.

Beyond the actual technologies, the collaborative spirit at the heart of particle physics can also make a major contribution to ensuring that breakthrough technologies can be exploited to improve medical diagnosis and therapy. Particle physics collaborations, such as those at CERN, have brought together thousands of scientists from every corner of the world to work on the largest and most complex experiments. This mode of working has become second nature for particle physicists, who have learned to work collectively towards a common goal and who rely on consensus to take decisions. This can serve as a model for emerging multidisciplinary ventures in medical applications, since to transfer technological innovations to healthcare it is not only essential to identify promising technologies, but also to understand their relevance for the medical community.

In other words, for us to maximize the societal benefit from particle physics, it will be crucial for physicists and medical doctors to bring their research activities together. This can be achieved through multidisciplinary networks and collaborations, where scientists with different specialties all bring their contributions to establish a common roadmap. Physicists, engineers and computer scientists can share their knowledge and technologies, providing the medical community with first-hand information on the latest technical progress. At the same time, medical doctors and biologists can present their needs and vision for the medical tools of the future, triggering innovative ideas and driving technical developments in high-priority areas. In this book, we will see how this collaborative approach is an essential tool for developing more effective technologies for medical diagnosis and treatment.

Particle detectors for medical imaging

Medical imaging has radically transformed medicine, changing the way doctors can detect, diagnose and treat a variety of diseases. Technological breakthroughs have made imaging faster, more precise and less invasive, thanks to a wealth of new instrumentation and techniques. These innovations have often been driven by the latest developments in particle detectors, including new scintillators and pixel detectors, improved reconstruction algorithms, high-performance electronics and a vast increase in computing power.

CERN and its collaborators have made seminal contributions to these technological advances. Indeed, physicists working at CERN have been investigating medical applications for particle detectors since the 1970s. Two key pioneers were Georges Charpak, who in 1968 revolutionized particle physics with his invention of the multiwire proportional chamber (MWPC), and David Townsend, who made major contributions to the development of PET imaging for medical applications.

Charpak and the multiwire proportional chamber

Charpak's innovative design for the MWPC enabled physicists to detect thousands of ionizing particles per second for the first time, unlike previous techniques, such as the bubble chamber, which could only record a few photographs per second. Its design also made it much easier to track the path of individual particles, where previously a series of proportional counters had to be deployed to follow particles as they moved through large areas.

Similar to the Geiger counter, an MWPC uses high-tension wires running through a chamber filled with gas. If an electrically charged particle passes through the chamber, it ionizes atoms of gas along its path. The resulting ions and electrons are accelerated by the electric field generated between the wires and the walls, causing a cascade of ionization in the gas. The ions collected on each wire generate an electric current that is proportional to the energy of the original particle, which makes it possible to count particles and determine their energy and trajectory.

Charpak's first MWPC measured 10 × 10 cm² and had wires positioned a millimetre apart. Each of these wires was able to detect the pulses produced by a nearby ionizing particle in an independent way, increasing the data collection rate by a factor of 1000 over previous techniques. By exploiting thousands of wires, each acting as an independent detector, these wire chambers enabled physicists to observe the particle's path with high precision, typically better than 1 mm. And since they are capable of detecting thousands of particles per second, wire chambers can even be used to study very rare processes in particle physics [4].

The MWPC rapidly became a standard tool in particle physics, and it enabled several major discoveries, including the first observation of the Z and W bosons at CERN in 1983, the top quark at Fermilab near Chicago, and the charm quark at the Stanford Linear Accelerator Center and Brookhaven [5]. Even today, experiments at the LHC exploit chambers that are derived more or less directly from Charpak's original design. Although faster detectors exist, such as those made from silicon, experimentalists still use MWPCs because they can equip very large detection planes and withstand a high flux of particles.

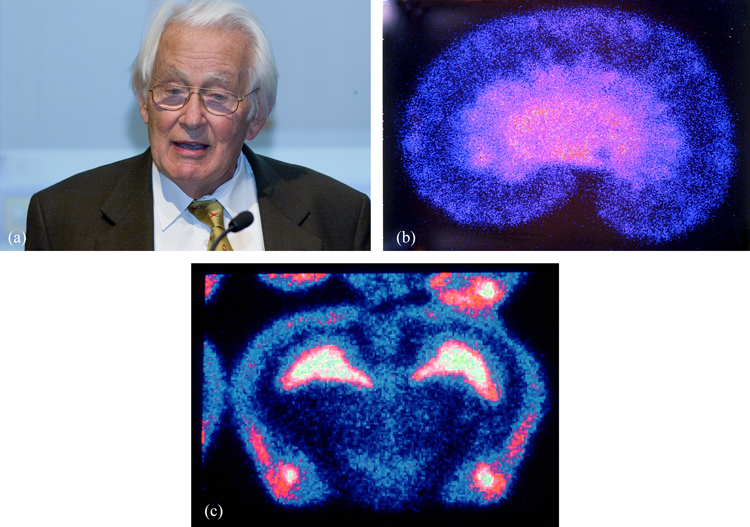

Charpak devoted considerable effort to ensuring that these detectors could be exploited in medical radiology (figure 4). Here the trend is for digital read-out to replace photographic film, since this improves both sensitivity and spatial resolution. Increasing the recording speed also enables faster scanning and lower body doses when using medical diagnostic tools based on radiation or particle beams.

Figure 4. (a) Georges Charpak invented the multiwire proportional chamber to track particle trajectories, and later applied the same technique to medical imaging. © CERN 2005. These images of (b) a rat kidney and (c) a rat brain were produced using Charpak's multiwire detector, called the parallel plate avalanche chamber (PPAC). © CERN 1993–2017.

Townsend and PET imaging

Charpak's multiwire chambers also played an important role in the development of PET imaging, a functional technique that can be used to observe metabolic processes in the body. To record a PET image, a positron-emitting radionuclide tracer is first introduced into the body by attaching it to a biologically active molecule. Positrons emitted by the tracer travel a short distance in the body (about 1 mm) before annihilating with a nearby electron, in the process producing two gamma rays travelling in opposite directions. PET scanners detect these gamma rays, and from the recorded data an image can be reconstructed using computational methods.

The PET technique was not invented at CERN, but some early and essential work that contributed to the development of three-dimensional PET was carried out at the laboratory in the 1970s. David Townsend was working with another CERN physicist, Alan Jeavons, who had built some small wire chambers known as high-density avalanche chambers (HIDACs) for another application. Jeavons and Townsend thought the HIDACs could be useful for collecting PET data, and Townsend developed software to enable an image to be reconstructed from the raw data. This work yielded the first PET image of a small mouse, which was taken at the Cantonal Hospital in Geneva in 1977 (figure 5). A few years later, in 1980–82, Townsend worked together with Rolf Clackdoyle and CERN mathematician Benno Schorr to further extend the mathematics used for image reconstruction.

Figure 5. David Townsend (a) produced the first PET image of a mouse (b) in collaboration with the University Hospital of Geneva. The PET data were obtained with high-density avalanche chambers developed at CERN. © CERN 2005.

A rotating PET scanner, an early prototype of modern multi-ring instruments, was also developed at CERN from 1989 to 1990, while Townsend was working in collaboration with CTI PET Systems in Knoxville, USA. It was evaluated clinically at the Cantonal Hospital in 1991, which led to the idea of combining PET with another imaging approach called computed tomography (CT). Over the next nine years Townsend worked with electrical engineer Ronald Nutt, then president of CTI PET Systems, to develop a combined PET–CT scanner, which in 2000 was named the medical invention of the year by Time magazine.

This combined PET–CT approach has had a huge impact on clinical practice, since the PET technique is most valuable when used alongside the anatomical imaging provided by CT scanning [6]. Modern PET scanners, which in most cases are now equipped with integrated high-end multi-detector CT scanners, have become an essential imaging tool for a number of medical applications. Around 90% of PET scans in standard medical care are used to image cancer metastases, while other applications include neuroimaging, cardiology and drug development.

Following the individual efforts of pioneers such as Charpak and Townsend, CERN embraced the first collaborative endeavours in medical imaging to emerge in the 1990s. These included the Crystal Clear and Medipix collaborations, which began exploring the possible medical applications of detector technologies developed for the LHC (scintillating crystals and hybrid silicon pixel detectors, respectively). The Crystal Clear project developed a number of PET scanners with variable geometry, which were suitable for both small and large animals.

Today, scientists are developing more precise time-of-flight (TOF) PET, which will improve the image quality by providing more accurate and faster localization of positron annihilation events in the patient. TOF PET works by recording the time difference between the detection of the two photons that are produced in the positron–electron annihilation, and then using this to infer the most likely location of the annihilation event along the line joining the two detectors. Current clinical TOF-PET systems have a timing resolution of around 350–400 ps, which locates events to within about 6 cm. Recent research has improved the timing resolution to 100 ps, and experts in the field are now chasing the holy grail of achieving PET with a timing resolution below 10 ps, which would deliver a further tenfold reduction in the uncertainty of the location.
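The link between timing resolution and spatial localization quoted above can be checked with a short calculation. The sketch below assumes the standard time-of-flight relation Δx = c·Δt/2 (the factor of two arises because the two photons travel in opposite directions); the function names are illustrative only and are not taken from any PET software.

```python
# Minimal sketch of TOF-PET localization, assuming dx = c * dt / 2.
# Function names are hypothetical, chosen for illustration.

C_MM_PER_PS = 0.299792458  # speed of light in millimetres per picosecond


def tof_position_uncertainty_mm(timing_resolution_ps: float) -> float:
    """Localization uncertainty along the line of response for a given timing resolution."""
    return C_MM_PER_PS * timing_resolution_ps / 2.0


def tof_offset_mm(t1_ps: float, t2_ps: float) -> float:
    """Offset of the annihilation point from the midpoint between two detectors.

    t1_ps and t2_ps are the photon arrival times at detectors 1 and 2.
    A positive result means the event lies closer to detector 1
    (its photon arrived earlier).
    """
    return C_MM_PER_PS * (t2_ps - t1_ps) / 2.0


if __name__ == "__main__":
    for dt in (400.0, 350.0, 100.0, 10.0):
        dx = tof_position_uncertainty_mm(dt)
        print(f"timing resolution {dt:5.0f} ps -> localization ~{dx:6.2f} mm")
```

Under this assumption, 400 ps corresponds to roughly 6 cm, 100 ps to about 1.5 cm and 10 ps to about 1.5 mm, which is consistent with the figures given in the text.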

New developments in mathematical and computational modelling have also continued to improve image-reconstruction software, which in turn provides more accurate PET quantification. The end goal is to achieve precise real-time imaging to enable early and accurate diagnosis and treatment.

Hadron therapy

The idea of hadron therapy dates back to 1946, when Robert Wilson, physicist and founder of Fermilab, was the first to propose that high-energy beams of charged particles could be used to treat cancer [7]. His idea was taken up by John Lawrence, an expert in nuclear medicine, and his older brother Ernest Lawrence, who won the 1939 Nobel Prize for Physics for his invention of the cyclotron—and who gave his name to both the Lawrence Livermore National Laboratory and the Lawrence Berkeley National Laboratory. The first patients were treated with protons by the Lawrence brothers and their collaborators at Berkeley in September 1954, the same month and year that CERN was founded [8].

Particle therapy is believed to have an advantage over even the most modern x-ray delivery methods, because the dose can be targeted at the tumour while largely sparing the surrounding healthy tissue. Unlike photons, charged particles deposit only a small amount of their energy as they travel through the body, allowing them to penetrate deep inside it. They release most of their energy just before they come to a stop, in a sharp maximum known as the Bragg peak, and their penetration depth can be controlled by altering the energy at which the particles enter the body. This means that the radiation dose can be localized precisely within the tumour site, and the dose profile can be shaped to match the geometry of the tumour by using narrowly focused and scanned pencil beams of variable penetration depth. In contrast, x-rays deposit their energy in a more continuous way, with healthy tissue receiving some of the dose both before and after the radiation reaches the tumour, and most of the radiation is delivered within a few centimetres of the patient's skin.
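To illustrate how the beam energy sets the penetration depth, the sketch below uses the empirical Bragg-Kleeman rule, R ≈ αE^p, for protons in water. This rule and the parameter values (α ≈ 0.0022 cm MeV⁻ᵖ, p ≈ 1.77) are not taken from this chapter; they are commonly quoted approximations and are assumed here purely for illustration. Clinical treatment planning relies on measured range data and far more detailed models.

```python
# Rough sketch: proton range in water from the Bragg-Kleeman rule R = alpha * E**p.
# ALPHA_CM and P_EXP are assumed, approximate literature values, not from the source text.

ALPHA_CM = 0.0022  # cm per MeV**p (assumed value for protons in water)
P_EXP = 1.77       # dimensionless exponent (assumed)


def proton_range_cm(energy_mev: float) -> float:
    """Approximate depth in water at which a proton of the given energy stops."""
    return ALPHA_CM * energy_mev ** P_EXP


def energy_for_range_mev(depth_cm: float) -> float:
    """Invert the rule: approximate proton energy needed to stop at a given depth."""
    return (depth_cm / ALPHA_CM) ** (1.0 / P_EXP)


if __name__ == "__main__":
    for energy in (60.0, 150.0, 250.0):
        print(f"{energy:5.0f} MeV protons stop after ~{proton_range_cm(energy):5.1f} cm of water")
    print(f"a tumour at 15 cm depth needs ~{energy_for_range_mev(15.0):.0f} MeV protons")
```

With these assumed parameters, the 60 to 250 MeV proton energies quoted later in this chapter for the PIMMS design correspond to ranges of roughly 3 cm to just under 40 cm in water, which covers tumours at essentially any depth in the body.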

Charged particles can also control tumours more effectively than x-rays, due to their higher relative biological effectiveness—which means that they cause more damage to the tumour cells per unit of energy deposited in the tissue. This is due to the higher density of ionization events that charged particles generate as they interact with human tissue, which also means that heavier ions, such as carbon, can be even more effective than protons for treating radio-resistant tumours.

Since the first patient was treated at Berkeley, a number of research institutes have demonstrated the effectiveness of proton therapy for treating cancer. These include the Uppsala accelerator in Sweden, which treated the first patient in Europe, the Harvard Cyclotron Laboratory in Boston, USA, and three Russian facilities in Moscow, Dubna and St Petersburg. Research studies were also carried out at the Chiba and Tsukuba accelerators in Japan, Clatterbridge Hospital in the UK, and the Paul Scherrer Institute in Switzerland. Following these successful studies, the first dedicated proton therapy centre was built at the Loma Linda University Medical Centre in California, with the help of Fermilab. The first patient was treated in 1990, and since then this first hospital-based facility has treated more than 17 500 cancer patients. Soon after, in 1994, the first clinical treatment to use carbon ions got underway in Chiba, Japan, and this employed a heavy-ion accelerator that had been purpose-built for medical applications [9].

The PIMMS collaboration

This growing interest in hadron therapy was reflected in activities at CERN. Prompted by Ugo Amaldi of the TERA Foundation, a non-profit institution established in 1992 to develop hadron therapy, and Meinhard Regler of the MedAustron therapy centre in Austria, CERN initiated a study to review the available technologies and determine what further developments would be needed to meet the requirements of this emerging treatment modality. The outcome was the Proton Ion Medical Machine Study (PIMMS), a collaboration carried out under the technical leadership of CERN and coordinated from 1996 to 2000 by Phil Bryant. MedAustron and TERA were key partners in the project, and were later joined by Onkologie 2000 of the Czech Republic, while the study group also worked closely with Gerhard Kraft from the GSI Helmholtz Centre for Heavy Ion Research in Darmstadt, Germany.

The remit for the PIMMS project was to design a light-ion hadron therapy centre that would be fully optimized for medical applications, without making any compromises to take account of financial or space limitations. The primary aim was to identify and design a facility capable of actively scanning the tumour with proton and carbon-ion beams, enabling conformal treatment of complex-shaped tumours in three dimensions with sub-millimetre accuracy. The PIMMS design favoured a synchrotron facility, which is better at accelerating heavier carbon ions than a cyclotron and also provides the pulse-to-pulse energy variation needed for active scanning.

The outcome of the four-year study was a design that combined many innovative features, allowing it to produce an extracted pencil beam of particles that is very uniform in time and yet has an energy that can be varied and a shape that can be easily adjusted. The general structure was detailed in two reports published in 2000 [10], and includes a number of different elements (figure 6). In the design, beams of protons and carbon ions are first generated by two separate ion sources and accelerated to 400 keV u⁻¹. The two beams are transported to a linear accelerator that further accelerates them to energies of 7 MeV u⁻¹, at which point the beams are injected into the synchrotron. With a diameter of about 25 m, the synchrotron accelerates the beams to a preset energy, ranging from 60 to 250 MeV for protons and from 120 to 400 MeV u⁻¹ for carbon ions. The PIMMS study envisaged three rooms for proton therapy (two of which would be equipped with rotating gantries) and two rooms for the irradiation of deep tumours with carbon ions. One of these features a rotating cabin referred to as the Riesenrad gantry.
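The energies in this acceleration chain are quoted per atomic mass unit (u), so the total kinetic energy of an ion scales with its mass number. The sketch below converts those figures into total kinetic energy for carbon-12 and into speed as a fraction of the speed of light, using the relativistic relation γ = 1 + T/(mc²). The value of the atomic mass unit (931.494 MeV/c²) and the treatment of a carbon ion as exactly 12 u are assumptions made for illustration and are not part of the PIMMS reports.

```python
# Sketch: what "MeV per u" means for ion speed and total kinetic energy.
# AMU_MEV and the 12 u carbon mass are simplifying assumptions for illustration.
import math

AMU_MEV = 931.494  # rest energy of one atomic mass unit, in MeV (assumed constant)


def beta_from_energy_per_u(kinetic_mev_per_u: float) -> float:
    """Speed as a fraction of c for an ion with the given kinetic energy per u."""
    gamma = 1.0 + kinetic_mev_per_u / AMU_MEV
    return math.sqrt(1.0 - 1.0 / gamma ** 2)


def total_kinetic_energy_mev(kinetic_mev_per_u: float, mass_number: int) -> float:
    """Total kinetic energy of an ion, approximating its mass as mass_number * u."""
    return kinetic_mev_per_u * mass_number


if __name__ == "__main__":
    stages = [("ion source", 0.4), ("linac exit", 7.0), ("synchrotron, maximum", 400.0)]
    for name, energy_per_u in stages:
        beta = beta_from_energy_per_u(energy_per_u)
        total = total_kinetic_energy_mev(energy_per_u, 12)
        print(f"{name:22s} {energy_per_u:6.1f} MeV/u  beta ~{beta:.3f}  carbon-12 total ~{total:7.1f} MeV")
```

Under these assumptions, carbon ions leave the linear accelerator at about 12% of the speed of light and reach about 71% at the top synchrotron energy, where each ion carries a total of roughly 4.8 GeV.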

Figure 6. The PIMMS design study was co-ordinated by CERN from 1996 to 2000. It combines a number of different elements, including linear accelerators for both protons and carbon ions, the main synchrotron accelerator and five treatment rooms using different beams and configurations. © CERN 2017.

Once the study reported its results in 2000, the PIMMS design was further refined by TERA and then implemented at two treatment centres: CNAO in Pavia, Italy, which opened in 2011, and the MedAustron Ion Beam Therapy Centre in Wiener Neustadt, Austria, which treated its first patient in 2016 (figure 7). Beyond the initial design study, CERN also provided expertise in accelerators and magnets, in particular for MedAustron, and trained key personnel. In both cases, networks of national and international collaborations were crucial to the successful completion of the projects.

Figure 7. The accelerators at (a) the CNAO facility in Pavia, Italy and (b) the MedAustron Ion-Beam Therapy Centre in Austria. Both centres based their design on the outcomes of the PIMMS design study. Courtesy of CNAO and MedAustron.

While the PIMMS study was being pursued, GSI Darmstadt was working on a pilot project aimed at treating cancer patients with heavy ions. This project exploited the centre's heavy-ion synchrotron, and in collaboration with the Heidelberg University Hospital, the German Cancer Research Centre and the Helmholtz Centre in Dresden-Rossendorf, the project developed the advanced technologies of raster scanning and in-beam PET. In December 1997 the first two patients were treated at the experimental facility, and by 2000 nearly 150 patients had been successfully treated with carbon ions [11]. Based on the successes of the pilot project, the Heidelberg Ion Therapy Centre (HIT) was proposed and approved in 2001 [12].

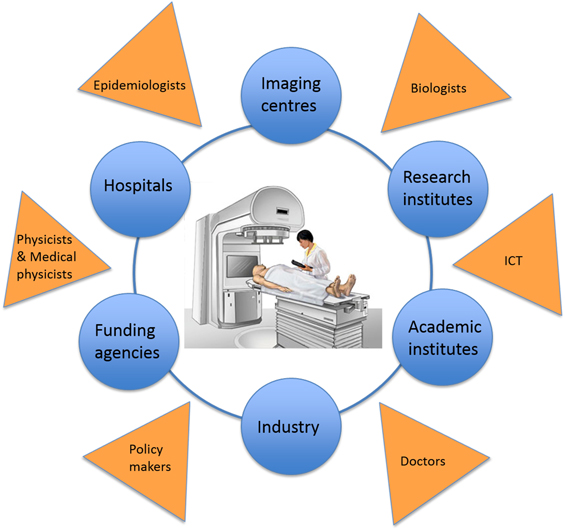

ENLIGHT: collaboration is key

By 2001, a number of projects were planning to build ion-therapy treatment centres in Austria, France, Germany, Italy and Sweden. There was therefore a clear opportunity to create a network that would allow the different project groups to work together, and to collaborate with other partners who could offer expertise or who might be interested in developing their own projects. After all, harnessing the full potential of particle therapy requires the knowledge and skills provided by physicists, physicians, radiobiologists, engineers and information technology experts, as well as collaboration between academic, research and industrial partners (figure 8).

Figure 8. ENLIGHT is a multidisciplinary network that brings together physicists, physicians, radiobiologists, engineers and information technology experts, and one of its key purposes is to enable collaboration between academic, research and industrial partners in particle therapy.

The result was the European Network for Light Ion Therapy (ENLIGHT), which held its inaugural meeting at CERN in 2002. It was established not only to co-ordinate the various European projects, but also to promote international discussions aimed at evaluating the effectiveness of hadron therapy for cancer treatment. Funded by the European Commission for three years, the network was a collaboration between clinical centres and research institutions involved in advancing and implementing hadron therapy in Europe. About 70 specialists from different disciplines, including radiation biology, oncology, and imaging and medical physics, attended this first gathering, a considerable achievement at a time when 'multidisciplinary' was not yet a buzzword [13].

When the European Commission funding for ENLIGHT came to an end in 2006, CERN hosted a brainstorming session that brought together key stakeholders from around 20 countries. The participants believed strongly that the collaboration enabled by ENLIGHT was a key ingredient for future progress, and that it should be maintained and broadened even further. They also agreed that the goals of the network could best be met by two complementary aspects: targeted research in areas needed for effective hadron therapy, and networking to establish and implement common standards and protocols for treating patients. As a result, the primary mandate for the project's co-ordinator was to develop strategies for securing the funding that would be needed to continue these two fundamental objectives of the initiative [14, 15].

Various programmes were identified and, under the umbrella of ENLIGHT, four projects won further European funding: PARTNER, ENVISION [16] and ENTERVISION, all co-ordinated by CERN, and ULICE, co-ordinated by CNAO. These projects were all directed towards developing, establishing and optimizing new techniques for cancer treatment, while also following the model of international cooperation that had been so successful within the particle physics community.

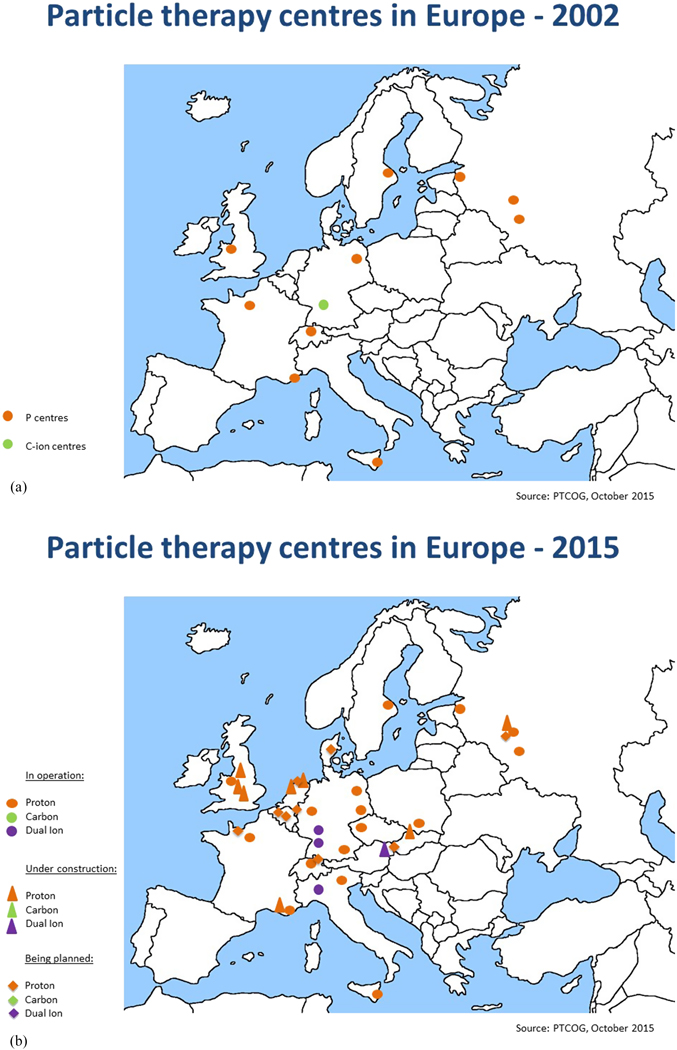

It is clear that the focus of R&D for hadron therapy has shifted since ENLIGHT was first established, if only for the simple reason that the number of clinical centres has increased dramatically—particularly for protons (figure 9). In Europe alone there are currently around 20 centres at various stages from approved to operational, and the number is set to double by 2020. The same trend is reflected globally, with around 50 centres currently planned or operational, and this is set to increase to 100 by 2020 (figure 10). To date, some 150 000 patients have been treated with protons and more than 20 000 have been treated with carbon ions.

Figure 9. The distribution and number of hadron therapy centres in Europe in 2002, when the ENLIGHT network was launched, and in 2015, illustrating the increasing interest in particle therapy (http://www.ptcog.ch/index.php/).

Figure 10. Particle therapy facilities in operation worldwide and under construction in 2015 show a similar trend globally to the situation in Europe (PTCOG, 2015) (http://www.ptcog.ch/index.php/).

It is important to note that while technological developments are still needed in order to ensure optimized and more cost-effective treatment, proton therapy is now solidly in the hands of industry. The advent of single-room facilities is also set to bring proton therapy to smaller hospitals and clinical centres, albeit with some restrictions.

From a clinical standpoint, the major challenge for ENLIGHT in the coming years will be to catalyse collaborative efforts to define a roadmap for randomized trials, and to study in detail the issue of relative biological effectiveness, which is currently based on experimental results rather than being a well-defined physical quantity. Key technological developments will include improved imaging techniques for quality assurance, and the design of compact accelerators and gantries for ions that are heavier than protons. Information technologies will also take centre stage, as data sharing, data analytics and decision support systems become increasingly important. Meanwhile, training and education will be a major focus for the network, as the growing number of facilities will require more and more specialized personnel. The aim here will be to train professionals who are highly skilled in their specialty, but who are also familiar with the multidisciplinary aspects of hadron therapy.

Over its 15 years of life, ENLIGHT has shown a remarkable ability to reinvent itself, while maintaining its cornerstones of multidisciplinarity, integration and openness, and its attention to the needs of future generations. The network is well placed to face the evolving challenges of a frontier discipline such as hadron therapy, and it continues to play a central role in the development and diffusion of the technique and in meeting the needs of the growing community.

Medical imaging for radiotherapy

While hadron therapy is becoming an increasingly important tool for cancer treatment, continuing advances in imaging and computational methods are crucial to improve the outcomes from traditional radiotherapy. The primary aim here is to deliver the radiation dose to the entire tumour volume while minimizing or eliminating the dose received by healthy tissues and organs. In other words, a specified dose has to be deposited in the tumour, and the dose absorbed by healthy tissues must be limited within given constraints. To this end, the past 20 years have seen the development and deployment of sophisticated new treatment techniques designed to target and deliver radiation to the tumour volume precisely.

To achieve the best results, clinicians use treatment-planning software to map the position of the tumour and determine the optimal dose profile. An important input to these systems is an accurate anatomical model of the tumour and its surroundings, which is provided by a high-resolution CT image. The software then helps the clinicians to choose key parameters for the irradiation sessions, such as the geometry and the intensity of the x-ray beams. Any geometrical uncertainties can reduce the amount of the dose that is delivered to the tumour site, so minimizing these uncertainties remains a key priority for optimizing the effectiveness of radiotherapy.

During each step in the treatment, it is therefore crucial to pinpoint the position of the tumour with as much precision as possible. However, this can be difficult to achieve, because the tumour moves and changes shape over the course of the irradiation sessions. Breathing is the main cause, but patient movement and the shrinkage of the tumour as the treatment progresses also contribute. Various medical imaging techniques are exploited at each stage of the treatment to cope with this problem. However, given the complexity of the breathing motion and the absence of an imaging technique that can track the position of the tumour reliably, this movement still causes significant geometrical uncertainty during irradiation.

Several techniques are available to control the movements that affect tumour position, and clinicians must choose which technique is best for each individual patient. For example, recent studies have indicated that increasing the radiation dose can improve outcomes for patients who are being treated for lung cancer, but that it is dangerous to increase the dose without reliable motion management. Any movements due to breathing are particularly difficult to manage, and they require time-consuming techniques that demand dedicated equipment and strict quality assurance. For these techniques to be effective, it is also desirable to verify the correlation between any surrogate signal and the movement of the tumour prior to and during treatment.

Part of the planning process involves calculating the exact range of each treatment beam needed to irradiate the entire tumour, as well as the beam energy needed to achieve this range. However, noise and distortions in the CT image, as well as uncertainty in the composition and density of each patient's tissues, compromise the accuracy of these calculations—as do limitations in the algorithms used to determine the rate at which the beams deliver their dose within different tissues.
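The link between beam energy, range and tissue density can be illustrated with a rough sketch. It assumes a proton beam and the empirical Bragg-Kleeman rule for water, R = αE^p, with commonly quoted fit values for α and p. These values, the function names and the idea of scaling depth by a single relative stopping power are illustrative assumptions, not the algorithms used in clinical treatment-planning systems.

```python
# Bragg-Kleeman rule for protons in water: R = ALPHA * E**P
# ALPHA and P are commonly quoted fit values, used here as assumptions.
ALPHA = 0.0022  # cm / MeV**P
P = 1.77        # dimensionless exponent


def range_cm(energy_mev):
    """Approximate range in water (cm) of a proton beam of given energy (MeV)."""
    return ALPHA * energy_mev ** P


def energy_mev(water_range_cm):
    """Inverse relation: beam energy (MeV) needed for a given range in water."""
    return (water_range_cm / ALPHA) ** (1.0 / P)


def energy_for_depth(depth_cm, relative_stopping_power=1.0):
    """Energy needed to reach a geometric depth in tissue.

    The tissue is described by one hypothetical number: its stopping
    power relative to water, as would be inferred from the CT image.
    """
    return energy_mev(depth_cm * relative_stopping_power)


def actual_depth(planned_depth_cm, assumed_rsp, true_rsp):
    """Depth actually reached if the tissue differs from the planning model."""
    return planned_depth_cm * assumed_rsp / true_rsp


if __name__ == "__main__":
    print("range of a 150 MeV beam in water (cm):", range_cm(150.0))
    print("energy for 15 cm of water (MeV):", energy_for_depth(15.0))
    # A 3% error in the assumed stopping power moves the end of range
    print("depth reached with 3% denser tissue (cm):",
          actual_depth(15.0, assumed_rsp=1.0, true_rsp=1.03))
```

In this simplified picture, an error of a few per cent in the tissue model inferred from the CT image translates directly into a shift of a few per cent in the depth at which the beam stops, which is why the quality of the image matters so much for the calculation.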

New horizons in CT imaging

Today, the most common tool for patient positioning and verification is cone-beam CT (CBCT) imaging, a version of computed tomography where the x-rays are divergent, forming a cone. New and improved CBCT algorithms are developed every year, but current clinical reconstructions are all essentially based on the Feldkamp–Davis–Kress (FDK) algorithm, which is named after its developers L A Feldkamp, L C Davis and J W Kress, who were all research staff at the Ford Motor Company in Michigan, USA, when the algorithm was first published in 1984 [17].

The FDK approach is a single-pass algorithm that enables high-speed image reconstruction, which is one of the factors that has contributed to its popularity. However, raw speed is not the only important criterion. Alternative algorithms exist that can match the image quality of FDK, but require fewer projections as input and so can reduce the radiation dose to the patient. In addition, iterative methods have emerged recently that could make it possible to compensate for the motion of the tumour.

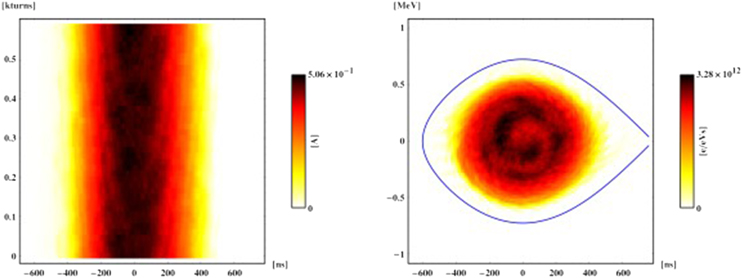

One such approach is phase-space tomography [18], a hybrid algorithm that was originally pioneered at CERN to image particle beams circulating in an accelerator, and in particular to obtain specific information about the distribution of the particle bunch (see box). This algorithm combines particle-tracking techniques with iterative tomography to produce an extraordinarily detailed two-dimensional picture of the bunch, based on the one-dimensional electric current profiles that are generated as the charged particles travel around the accelerator (figure 11).

Compensating for movement in accelerators

The energy distribution of the particles in a bunch is not known, but phase-space tomography allows it to be reconstituted from the bunch shape, which can be measured. In effect, this is equivalent to extending conventional tomography to enable it to deal with non-rigid bodies.

The technique exploits the concept of longitudinal phase space, an abstraction taken from classical mechanics in which each point in a plane represents the position and energy of a particle. Since one of the two dimensions in this plane is energetic rather than spatial, conventional tomography cannot be used. However, the ensemble of particles in the plane effectively rotates, allowing different projections onto its other axis, and this corresponds to a physical spatial position that can be measured at successive times.

Each projection is acquired completely as a single snapshot on one turn of the machine, but only one projection can be measured at a given time. The downside is that the motion is nonlinear. Nevertheless, by tracking test particles to understand how the geometry of phase space deforms as it rotates, it is possible to translate all the discrete time slices back to the same instant and tomographically combine them into a single image. The analysis of the motion is entirely decoupled from the tomographical part of the code, allowing the arbitrarily complex motion to be treated independently of the mathematics of the tomography.
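The ideas in this box can be sketched with a toy model. The code below is not the CERN tomography code: it assumes a simple pendulum-like kick-drift map for the motion in longitudinal phase space, with a hypothetical kick strength `K` and an arbitrary Gaussian bunch. It shows how a measurable profile is a projection of the rotating ensemble, and how tracking test particles lets a later time slice be translated back to the starting instant.

```python
import math
import random

K = 0.05  # hypothetical kick strength per turn (sets the rotation rate)


def turn(phi, de):
    """One machine turn: drift in phase, then a nonlinear (sinusoidal) kick."""
    phi = phi + K * de
    de = de - K * math.sin(phi)
    return phi, de


def turn_back(phi, de):
    """Exact inverse of turn(): undo the kick, then undo the drift."""
    de = de + K * math.sin(phi)
    phi = phi - K * de
    return phi, de


def track(points, n_turns, step=turn):
    """Apply the one-turn map n_turns times to every test particle."""
    for _ in range(n_turns):
        points = [step(phi, de) for phi, de in points]
    return points


def profile(points, n_bins=20, lo=-math.pi, hi=math.pi):
    """Project the ensemble onto the phase axis: the measurable bunch shape."""
    counts = [0] * n_bins
    for phi, _ in points:
        b = int((phi - lo) / (hi - lo) * n_bins)
        if 0 <= b < n_bins:
            counts[b] += 1
    return counts


def make_bunch(n=2000, seed=1):
    """Off-centre Gaussian blob, so that the rotation shows up in the profiles."""
    rng = random.Random(seed)
    return [(rng.gauss(1.0, 0.2), rng.gauss(0.0, 0.2)) for _ in range(n)]


if __name__ == "__main__":
    bunch = make_bunch()
    later = track(bunch, 30)                       # ensemble 30 turns later
    restored = track(later, 30, step=turn_back)    # mapped back to turn 0
    print("profile at turn 0: ", profile(bunch))
    print("profile at turn 30:", profile(later))
    print("largest back-tracking error:",
          max(abs(a[0] - b[0]) + abs(a[1] - b[1])
              for a, b in zip(bunch, restored)))
```

Because the tracking is kept separate from the projection step, the map in `turn` could be replaced by any other invertible description of the motion without touching `profile`, which mirrors the decoupling described above.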

Figure 11. This early example from 1999 shows how phase-space tomography can transform one-dimensional profile data for a particle bunch in a circular accelerator (left) into a two-dimensional image of particle density in the longitudinal plane (right). The resultant particle distribution is consistent with all the measured profiles and the physics of synchrotron motion, and reveals an internal bunch structure that is caused by the nonlinearity of the motion. This measurement was made at the CERN Proton Synchrotron Booster.

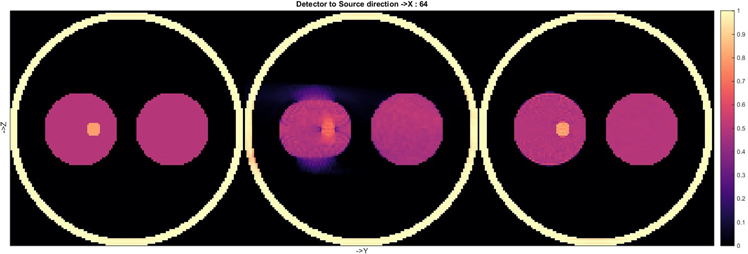

The success of phase-space tomography in accelerators inspired a study at CERN that aims to use the same technique to improve motion compensation in medical imaging. To test the approach, a proof of principle was created using a simple thorax-like phantom, which was mimicked in computer simulations by a collection of spheres. Three reconstructions made with an iterative algorithm, the simultaneous algebraic reconstruction technique (SART), are shown in figure 12.

Figure 12. Three reconstructions of the same phantom using SART. The third reconstruction (far right) shows that the image is effectively 'frozen' at the starting point of the cyclic motion, demonstrating the success of the motion compensation algorithm. The density (colour) scale is in arbitrary units.

All elements of the phantom were completely static for the first reconstruction. The second shows the expected degradation when the data were generated with the left 'lung' moving (without deformation) during the simulated CBCT scan. In this case the motion was assumed to be sinusoidal, with an amplitude of 1.25 times the radius of the 'tumour', and it was only in the vertical direction for the reconstructed plane shown. The third image was produced from the same dynamic dataset as the second one, using the same SART algorithm, but the geometry of the reconstructed image was modified as a function of time over the period in which the data were recorded. This image shows that the technique is capable of 'freezing' the image at the starting point of the cyclic motion, thus compensating for the known movement. The entire dataset was used in each reconstruction, and it is a feature of the CERN algorithm that the motion is not constrained to be cyclic, which means that any type of movement can be compensated for.
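A heavily simplified sketch may help to show what modifying the geometry means in practice. It is a toy under stated assumptions, not the CERN or TIGRE implementation: the image is a 6 × 6 grid, the only views are row sums and column sums, the whole image (not just one 'lung') moves vertically, and the sinusoidal displacement is rounded to whole pixels. All function names are hypothetical. The update in `sart` uses the standard SART normalisation, applied one view at a time.

```python
import math

N = 6  # toy image is N x N pixels, stored row-major in a flat list


def phantom():
    """A 2 x 2 bright 'tumour' (radius of about 1 pixel) in an empty field."""
    img = [0.0] * (N * N)
    for r in (2, 3):
        for c in (2, 3):
            img[r * N + c] = 1.0
    return img


def shifted(img, s):
    """Rigidly move the image s pixels down (no wrap-around)."""
    out = [0.0] * (N * N)
    for r in range(N):
        if 0 <= r - s < N:
            out[r * N:(r + 1) * N] = img[(r - s) * N:(r - s + 1) * N]
    return out


def rays(kind, s=0):
    """Pixel indices crossed by each ray of a view.

    'rows': ray i sums image row i - s, where s is the assumed shift.
    'cols': ray j sums image column j (unaffected by vertical motion).
    """
    if kind == "cols":
        return [[r * N + j for r in range(N)] for j in range(N)]
    return [[(i - s) * N + c for c in range(N)] if 0 <= i - s < N else []
            for i in range(N)]


def project(img, view):
    return [sum(img[p] for p in ray) for ray in view]


def acquire(n_steps=8, period=8, amplitude=1.25):
    """Simulate a scan: alternate row and column views while the object moves."""
    truth, data = phantom(), []
    for t in range(n_steps):
        s = round(amplitude * math.sin(2 * math.pi * t / period))
        kind = "rows" if t % 2 == 0 else "cols"
        data.append((kind, s, project(shifted(truth, s), rays(kind))))
    return data


def sart(data, compensate, n_sweeps=5, relax=1.0):
    """SART, one view per update; only the ray geometry knows about motion."""
    x = [0.0] * (N * N)
    for _ in range(n_sweeps):
        for kind, s, b in data:
            view = rays(kind, s if compensate else 0)
            update, hits = [0.0] * (N * N), [0] * (N * N)
            for ray, b_i in zip(view, b):
                if not ray:
                    continue  # ray misses the reconstruction grid
                err = (b_i - sum(x[p] for p in ray)) / len(ray)
                for p in ray:
                    update[p] += err
                    hits[p] += 1
            for p in range(N * N):
                if hits[p]:
                    x[p] += relax * update[p] / hits[p]
    return x


def residual(x, data, compensate):
    """Norm of the mismatch between the data and the reprojected image."""
    total = 0.0
    for kind, s, b in data:
        view = rays(kind, s if compensate else 0)
        total += sum((b_i - p_i) ** 2
                     for b_i, p_i in zip(b, project(x, view)))
    return math.sqrt(total)


if __name__ == "__main__":
    data = acquire()
    for flag in (False, True):
        x = sart(data, compensate=flag)
        print("compensated" if flag else "uncompensated",
              "residual:", residual(x, data, flag))
```

The update rule in `sart` is identical in both cases, and only the `rays` geometry changes with the known displacement. Without compensation, row views recorded at different displacements contradict one another, so the residual cannot fall below a positive floor. With compensation, all views describe the same reference image and the residual falls to rounding error. With only two view directions this toy cannot recover the true image uniquely, so it illustrates the principle rather than image quality.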

Furthermore, because the mathematics of the reconstruction remains untouched and only the specific geometry is modified, the same approach can in principle be applied to any iterative algorithm. Indeed, motion compensation based on these ideas is being added to the algorithms available from an open-source software repository, the Tomographic Iterative GPU-based Reconstruction (TIGRE) toolbox [19, 20], which offers state-of-the-art CT reconstruction code. The algorithms available through TIGRE provide higher quality images than those used in current medical practice, and also minimize the radiation dose to the patient. The ability to reconstruct full and accurate three-dimensional images using a reduced radiation dose would be an important advance for CBCT, and the hope is that TIGRE will act as a platform to help bridge the gap between academics and clinicians—and so enable the technology to be adopted more widely.

The development of phase-space tomography is a great example of how collaboration can benefit both particle physicists and the medical profession. The idea behind it was originally inspired by medical imaging, it proved a success in accelerator physics, and now it is delivering new benefits in the domain from which it came. Most importantly, these new advances, which have in part been enabled by ongoing research at CERN, could help to improve outcomes for patients being treated for cancer.

Conclusions

Advances from basic research have been at the heart of medical progress for centuries. Today, particle accelerators and detectors have become fundamental tools in the treatment and diagnosis of cancer and other serious diseases. Hadron therapy is an increasingly important tool for medical doctors and oncologists, while all radiotherapy techniques require a complex combination of technologies, including multi-modality imaging, computational methods and treatment planning, as well as the irradiation treatment itself. CERN continues to play a pivotal role in advancing these techniques, through both ongoing research and knowledge transfer—which has the mission of maximizing the impact of CERN's science, technology and expertise on society.

Major challenges for future technologies and research will be the emergence of new diseases related to an ageing population, the need for a more patient-specific approach to cancer treatment and public pressure to control rising health costs while still delivering better outcomes for patients. Scientists working at the frontiers of particle physics have much to contribute to these goals, and the culture of collaboration at the heart of organizations such as CERN will ensure that breakthrough technologies find their way into the medical clinics of the future.

References

- [1] Heuer R-D 2012 The future of the Large Hadron Collider and CERN Phil. Trans. R. Soc. A 370 986–94

- [2] Editorial 2012 The Higgs boson: science's great leap forward The Economist (July 2012) www.economist.com/node/21558254

- [3] Bewley D K 1985 The 8 MeV linear accelerator at the MRC Cyclotron Unit, Hammersmith hospital, London Br. J. Radiol. 58 213–7

- [4] Charpak G 1992 Electronic imaging of ionizing radiation with limited avalanches in gases (Nobel lecture) www.nobelprize.org/nobel_prizes/physics/laureates/1992/charpak-lecture.pdf

- [5] Giomataris I 2010 Georges Charpak: a true man of science CERN Courier (30 November 2010) http://cerncourier.com/cws/article/cern/44361

- [6] Bressan B 2005 PET and CT: a perfect fit CERN Courier (7 June 2005) http://cerncourier.com/cws/article/cern/29359

- [7] Wilson R R 1946 Radiological use of fast protons Radiology 47 487–91

- [8] Lawrence J H et al 1958 Pituitary irradiation with high-energy proton beams: a preliminary report Cancer Res. 18 121–34

- [9] Hirao Y et al 1992 Heavy ion synchrotron for medical use: HIMAC project at NIRS-Japan Nucl. Phys. A 538 541–50

- [10] Badano L et al 1999 Proton-ion medical machine study (PIMMS) 1 CERN CERN-PS-99-010-DI https://cds.cern.ch/record/385378?ln=en; Bryant P J et al 2000 Proton-ion medical machine study (PIMMS) 2 CERN CERN-PS-2000-007-DR https://cds.cern.ch/record/449577/

- [11] Kraft et al 1998 First patient treatment at GSI with heavy ions Proc. Joint Accelerator Conf. http://accelconf.web.cern.ch/AccelConf/98/PAPERS/FRX02A.PDF

- [12] Debus J, Gross K D and Pavlovic M (ed) 1998 Proposal for a Dedicated Ion Beam Facility for Cancer Therapy (Darmstadt: GSI)

- [13] 2002 Cancer therapy initiative is launched at CERN CERN Courier (24 April 2002) http://cerncourier.com/cws/article/cern/28632

- [14] Bressan B and Dosanjh M 2006 ENLIGHT++ extends cancer therapy research network CERN Courier (7 June 2006) http://cerncourier.com/cws/article/cern/29639

- [15] Dosanjh M et al 2007 Status of hadron therapy in Europe and the role of ENLIGHT Nucl. Instrum. Methods Phys. Res. A 571 191–4

- [16] ENVISION and CERN Video Productions 2013 Innovative medical imaging tools for particle therapy CERN-MOVIE-2013-122-001 http://cds.cern.ch/record/1611721?ln=en; ENLIGHT and CERN video 2015 Virtual particle therapy centre http://cds.cern.ch/record/2002120

- [17] Feldkamp L A, Davis L C and Kress J W 1984 Practical cone-beam algorithm J. Opt. Soc. Am. A 1 612–9

- [18] Hancock S and Lindroos M 2000 Longitudinal phase space tomography with space charge Phys. Rev. ST Accel. Beams 3 124202

- [19] TIGRE: tomographic iterative GPU-based reconstruction toolbox https://github.com/CERN/TIGRE

- [20] Biguri A et al 2016 TIGRE: a MATLAB-GPU toolbox for CBCT image reconstruction Biomed. Phys. Eng. Express 2 055010