Abstract

Many developing nations, particularly in Africa, struggle with deadly cancer-related diseases. Among women in particular, the incidence of breast cancer is rising daily because of lack of awareness and delayed diagnosis. Cancer can be treated effectively only if it is correctly identified and diagnosed in its very early stages of development. Computer-aided diagnosis and medical image analysis techniques can accelerate and automate the classification of cancer. This research applies transfer learning from the Residual Network 18 (ResNet18) and Residual Network 34 (ResNet34) architectures to detect breast cancer. The study examined how breast cancer can be identified in breast mammography images using transfer learning from ResNet18 and ResNet34, and developed a demo app for radiologists using the trained models with the best validation accuracy. A dataset of 1,200 breast x-ray mammography images from the archives of the National Radiological Society (NRS) was used in the study. The dataset was categorised as implant cancer negative, implant cancer positive, cancer negative and cancer positive in order to increase the consistency of x-ray mammography image classification and produce better features. In classifying the images, the study obtained average validation accuracies for distinguishing benign from malignant cases of 86.7% for ResNet34 and 92% for ResNet18. A prototype web application showcasing ResNet18 performance has been created. The results show how transfer learning can improve the accuracy of breast cancer detection, providing invaluable assistance to medical professionals, particularly in an African setting.


Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Introduction

Breast cancer

In 2023, 1,958,310 new cancer cases and 609,820 cancer deaths are projected to occur in the United States [1]. According to the World Health Organisation, cancer was the second-leading cause of mortality in 2018, with 9.6 million deaths [2]. In particular, the prevalence of female breast cancer is substantially higher in developing nations than in industrialised ones. For instance, 1.38 million people are diagnosed with breast cancer each year in Pakistan, and one-third of them die [3]. In 2020, 9.6 million people worldwide died from cancer, and 1.7 million survived [2]. Early diagnosis of breast cancer, through methods such as screening tests, self-awareness, and medical consultations, can lower mortality rates and improve treatment outcomes [26]. Radiologists diagnose breast cancer using techniques such as mammography and biopsy; mammography images are critical for spotting early signs of cancer and improving survival rates [4].

Earlier studies in science and technology aimed to identify breast cancer by examining and analysing cancer cells, specifically by determining whether they were benign or malignant using nuclei analysis and cell classification techniques [14–16]. Effective algorithms have been developed in medical image processing to recognise and categorise regions of interest in breast cancer diagnosis [17]. However, a highly accurate framework or system is still required to identify and categorise breast cancer reliably [18]. Because performance degradation can affect outcomes, achieving both efficiency and accuracy in medical diagnostics is difficult [19]. An automated system that can suggest the type of disease is essential in resource-constrained locations, such as tribal territories or areas where access to skilled medical personnel and high-end equipment is limited [20]. Such a system would allow doctors to start the right kind of care quickly. Improvements in medical diagnosis have led to the extensive use of computer-aided diagnosis (CAD), particularly in breast cancer detection. Artificial intelligence, including machine learning and deep learning, has significantly increased the accuracy and effectiveness of CAD systems [5].

Deep learning

Deep learning is a promising method for detecting breast cancer. Deep learning models can be trained on medical images [8] and learn sophisticated features that distinguish between benign and malignant tumors. Two well-known deep learning architectures, ResNet18 and ResNet34, have been applied to breast cancer diagnosis [8]. These models are built from convolutional layers that extract features from images; the features are then passed through fully connected layers to classify the images as benign or malignant [34]. The authors of [34] used transfer learning to fine-tune ResNet18 on the INbreast dataset to classify breast cancer images, and their model reached an accuracy of 95%. ResNet34 was used in [20] to classify breast cancer images from the Digital Database for Screening Mammography (DDSM) dataset, and in [36] to classify images from the Mammographic Image Analysis Society (MIAS) dataset.
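To make the convolution-then-fully-connected pipeline described above concrete, the following is a toy sketch in plain Python. It is not the ResNet18 or ResNet34 used in the study: the kernel, weights, bias and the mapping of a bright dense region to "malignant" are all hypothetical, and a real network stacks many learned layers with batch normalisation. Like ResNet, though, it pools each feature map down to one number before the fully connected layer.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, which deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def relu(feature_map):
    return [[max(0.0, v) for v in row] for row in feature_map]


def global_average_pool(feature_map):
    values = [v for row in feature_map for v in row]
    return sum(values) / len(values)


def classify(image, kernels, fc_weights, fc_bias):
    """Toy forward pass: conv -> ReLU -> global average pooling -> one linear unit."""
    features = [global_average_pool(relu(conv2d(image, k))) for k in kernels]
    score = sum(w * f for w, f in zip(fc_weights, features)) + fc_bias
    return "malignant" if score > 0 else "benign"


# Hypothetical 4x4 patches and a single 2x2 "bright region" detector kernel.
bright_spot = [[1.0, 1.0], [1.0, 1.0]]
dark_patch = [[0.0] * 4 for _ in range(4)]
dense_patch = [[0.0, 0.0, 0.0, 0.0],
               [0.0, 1.0, 1.0, 0.0],
               [0.0, 1.0, 1.0, 0.0],
               [0.0, 0.0, 0.0, 0.0]]
print(classify(dark_patch, [bright_spot], [1.0], -0.5))   # benign
print(classify(dense_patch, [bright_spot], [1.0], -0.5))  # malignant
```

In a trained network the kernels and fully connected weights are learned from labelled images rather than set by hand.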

Convolutional neural network

A Convolutional Neural Network (CNN) is a powerful classifier that excels at feature extraction and image classification, making it well suited to classifying breast cancer [7]. A CNN improves the detection of malignant cells by learning features from images through its hidden layers [54]. Given a large dataset, a CNN can distinguish between benign and malignant breast lesions by matching patterns in the images [19, 20]. Integrating CNNs into CAD systems has greatly increased breast cancer detection accuracy [20, 23]. For instance, [42] found that a CNN-based CAD system could detect breast cancer in mammography images with a sensitivity of 96% and a specificity of 93%. Another study [13] found that a CNN-based CAD system could detect breast cancer in histological images with a sensitivity of 92% and a specificity of 95%.

Finally, [19] found that a CNN-based CAD system could detect breast cancer in ultrasound images with a sensitivity of 91% and a specificity of 94%. Deep transfer learning with pre-trained ResNet18 and ResNet34 models is essential [21]. The pre-trained ResNet18 and ResNet34 models are used to extract relevant features from breast x-ray mammography images; these models are known for their remarkable performance in computer vision tasks [21].
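The sensitivity and specificity values quoted above for CNN-based CAD systems are simple ratios over confusion-matrix counts. The minimal sketch below computes them; the counts in the usage lines are hypothetical, chosen so that they reproduce the 96% sensitivity and 93% specificity reported for mammography in [42].

```python
def sensitivity(tp, fn):
    """True-positive rate: share of cancer cases that the system flags as cancer."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True-negative rate: share of cancer-free cases that the system clears."""
    return tn / (tn + fp)


# Hypothetical screening run: 100 cancer cases and 100 cancer-free cases.
tp, fn = 96, 4
tn, fp = 93, 7
print(f"sensitivity = {sensitivity(tp, fn):.2f}")  # sensitivity = 0.96
print(f"specificity = {specificity(tn, fp):.2f}")  # specificity = 0.93
```

Sensitivity is the same quantity as the recall used later in the evaluation of the ResNet models.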

Deep transfer learning with ResNet18 and ResNet34

For classifier adaptation, the feature extractor of the pre-trained ResNet model is usually kept unchanged because it has learned general image features that are likely to be useful for the new task [44]. The final classifier, however, which was originally trained to classify images into a large number of categories, is adapted to the specific task of breast cancer detection. Transfer learning is the machine learning technique in which a model trained for one task is used as the basis for a model for another task, as shown in figure 1 [40]. To accomplish this, the first model's weights are frozen, wholly or in part, and the new or unfrozen layers are trained for the second task. Transfer learning is advantageous when only a small amount of data is available for the second task [38, 40]. By reusing the first model's weights, as shown in figure 1, the second model can be trained more quickly and effectively, and its performance on the second task can also improve. The first model learns general features that are useful for both tasks, and the second model can exploit these features to learn more quickly and accurately [32, 40]. There are two basic methods for transferring knowledge:

- I. Fine-tuning: All weights of the initial model except those of the final few layers are frozen, and these final layers are then fine-tuned on the second task [33].

- II. Feature extraction: The weights of the initial model are wholly frozen, and the features extracted by the first model are passed as input to the second model [34].
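The two strategies differ only in which layers receive weight updates. The plain-Python sketch below models this with a trainable flag per layer; the layer names loosely follow torchvision's ResNet modules (`conv1`, `layer1` to `layer4`), with a hypothetical `head` standing in for the replaced final classifier. In PyTorch the same effect is obtained by setting `requires_grad = False` on the frozen parameters, and fastai's `Learner` exposes `freeze()`, `freeze_to()` and `unfreeze()` for this purpose; the paper does not say exactly which layers were left trainable.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    trainable: bool = True


def build_resnet_like():
    """Hypothetical stand-in for a pre-trained ResNet: stem, four residual stages, new head."""
    return [Layer(n) for n in ["conv1", "layer1", "layer2", "layer3", "layer4", "head"]]


def feature_extraction(model):
    """Strategy II: freeze every pre-trained layer; only the new head is trained."""
    for layer in model:
        layer.trainable = layer.name == "head"
    return model


def fine_tuning(model, n_trainable=2):
    """Strategy I: freeze all but the last n_trainable layers, which are tuned on the new task."""
    cutoff = len(model) - n_trainable
    for i, layer in enumerate(model):
        layer.trainable = i >= cutoff
    return model


def trainable_names(model):
    return [layer.name for layer in model if layer.trainable]


print(trainable_names(feature_extraction(build_resnet_like())))  # ['head']
print(trainable_names(fine_tuning(build_resnet_like(), 2)))      # ['layer4', 'head']
```

Only the flagged layers would be handed to the optimiser; frozen layers keep their ImageNet weights.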

The fine-tuning strategy can be used to enable the pre-trained model to absorb knowledge from the given datasets [35, 39].

ResNet18 and ResNet34

Figure 1 gives a basic illustration of the transfer learning process: the convolutional layers trained on ImageNet are transferred to the convolutional layers of the proposed models, which therefore require less data.

Task 1 shows a CNN model trained on more general data (ImageNet), where Data 1 is the ImageNet dataset, Model 1 is the convolutional layers, Head 1 is the fully connected layers, and Prediction 1 is the predicted labels [42]. The knowledge learned in Task 1 (Model 1) with ResNet18, Inception V3, and ResNet34 is reused in order to boost performance on a related task in Model 2 of Task 2, which shows ResNet18 and ResNet34. Here, Data 2 is a given medical image dataset such as breast mammography images, Model 2 is the convolutional layers, New Head is the fully connected layers, and Prediction 2 is the predicted labels.

In-depth research on ResNet18 showed that it outperformed earlier architectures on image classification tasks [27], with a top-1 error rate of 30.24% on ImageNet. Since then, many image classification benchmarks have used ResNet18 as their baseline model. Researchers have assessed its performance on many datasets, including the Canadian Institute for Advanced Research datasets CIFAR-10 and CIFAR-100 and the Street View House Numbers (SVHN) dataset [27–29]. ResNet18 frequently outperforms shallower architectures while remaining computationally efficient, achieving consistently competitive results [27–29]. Research on ResNet18's transfer learning capabilities has also shown that it adapts well to new datasets with few labelled examples [30, 31]. By fine-tuning the pre-trained ResNet18 model on specific tasks, researchers have achieved impressive accuracy on a variety of domain-specific datasets, demonstrating the model's ability to generalise well across image classification tasks [39–41].

ResNet34, which has a somewhat deeper architecture than ResNet18, has also been evaluated thoroughly for image classification. On the ImageNet dataset, ResNet34 outperformed ResNet18, obtaining a top-1 error rate of 26.70% [27]. Like ResNet18, ResNet34 has also been evaluated on other datasets, including CIFAR-10, CIFAR-100, and Pascal Visual Object Classes [27–29]. ResNet34 routinely outperforms shallower models in accuracy and in its ability to capture more complex patterns [27–29]. Transfer learning experiments with ResNet34 have also produced encouraging outcomes: by fine-tuning pre-trained ResNet34 models on particular datasets, researchers have achieved excellent classification accuracy in a variety of domains, including medical imaging, satellite imagery, and natural scene understanding [9, 29].
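The top-1 error rates quoted above (30.24% for ResNet18 and 26.70% for ResNet34) count a prediction as wrong when the true class is not the single highest-scoring one; the top-5 metric listed in table 2 relaxes this to the five highest-scoring classes. The minimal sketch below computes top-k error from per-class scores; the scores and labels are hypothetical.

```python
def top_k_error(scores, labels, k=1):
    """Fraction of samples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label not in ranked[:k]:
            misses += 1
    return misses / len(labels)


# Hypothetical scores for three images over four classes.
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true class 1 is ranked first
    [0.5, 0.1, 0.3, 0.1],  # true class 2 is ranked second
    [0.3, 0.2, 0.1, 0.4],  # true class 2 is ranked last
]
labels = [1, 2, 2]
print(top_k_error(scores, labels, k=1))  # 0.6666666666666666
print(top_k_error(scores, labels, k=2))  # 0.3333333333333333
```

Top-k accuracy is simply one minus top-k error, so a top-1 error of 30.24% corresponds to a top-1 accuracy of 69.76%.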

Residual blocks

Residual blocks in ResNets overcome the vanishing gradient problem through skip connections and identity mapping [10, 37]. Skip connections allow the network to bypass particular layers, while identity mapping passes the input straight to the output. After the network has been compressed, many informational aspects are explored [46]. A typical residual block used in the ResNet18 and ResNet34 CNN models is shown in figure 2.
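The skip connection can be written as y = ReLU(F(x) + x), where F is the stack of weight layers inside the block. The plain-Python sketch below uses two small dense layers as a stand-in for the two 3 × 3 convolutions of a ResNet18/ResNet34 basic block and omits batch normalisation, so the weights and sizes are hypothetical. It shows the key property: when F contributes nothing, a non-negative input passes through unchanged, and the identity path also gives gradients a direct route backwards through the block.

```python
def dense(x, weights):
    """Bias-free dense layer; weights is a list of rows (one row per output)."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(x):
    return [max(0.0, v) for v in x]


def residual_block(x, w1, w2):
    """Basic residual block: y = ReLU(F(x) + x) with F(x) = W2 * ReLU(W1 * x)."""
    fx = dense(relu(dense(x, w1)), w2)
    return relu([f + v for f, v in zip(fx, x)])  # skip connection adds the input back


x = [1.0, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(residual_block(x, zeros, zeros))        # [1.0, 2.0]: F(x) = 0, so y = x
print(residual_block(x, identity, identity))  # [2.0, 4.0]: F(x) = x, so y = 2x
```

Because the block only has to learn the residual F(x) rather than the whole mapping, stacking more blocks does not force the network to relearn the identity.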

Breast cancer image classification

Breast cancer image classification can use either an imaging-based or a patient-based method. The imaging-based technique trains and tests on images taken from a particular cancer patient [11], so it is restricted by the data available from a single patient. The patient-based method trains and tests on the imaging data of many patients [22]; although it can be applied more generally, it requires a larger dataset.
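One practical consequence of the patient-based method is that, when a patient contributes several images (for example, different mammographic views), all of that patient's images should fall on the same side of the train/validation split; otherwise near-duplicate images can inflate the validation score. The paper does not say whether patient identifiers were available, so the sketch below, with its hypothetical `(patient_id, image_path)` records and file names, only illustrates the idea.

```python
import random


def patient_level_split(records, valid_fraction=0.2, seed=42):
    """Split (patient_id, image_path) records so that each patient appears in only one subset."""
    patients = sorted({patient_id for patient_id, _ in records})
    random.Random(seed).shuffle(patients)
    n_valid = max(1, round(len(patients) * valid_fraction))
    valid_ids = set(patients[:n_valid])
    train = [r for r in records if r[0] not in valid_ids]
    valid = [r for r in records if r[0] in valid_ids]
    return train, valid


# Hypothetical records: 10 patients with two mammographic views each.
records = [(f"p{i:02d}", f"p{i:02d}_{view}.png") for i in range(10) for view in ("CC", "MLO")]
train, valid = patient_level_split(records)
print(len(train), len(valid))  # 16 4
```

Splitting by patient rather than by image gives a more honest estimate of how the model would perform on patients it has never seen.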

The patient-based approach can be used to divide breast cancer images into four categories: cancer negative, cancer positive, implant cancer negative, and implant cancer positive. In the more advanced approach, breast cancer images are divided into benign and malignant. Benign breast tumors are not malignant and do not spread to other parts of the body; they are often small and grow slowly [6]. Benign breast tumors take a variety of forms, the most prevalent being the fibroadenoma, which is made up of glandular and fibrous tissue [24, 25].

Cysts, which are fluid-filled sacs, may be caused by breast damage or hormonal changes. Malignant (cancerous) breast tumors can metastasize to other organs of the body; they typically grow more quickly and are larger than benign tumors [9]. Typical forms of malignant breast tumor include ductal carcinoma, the most prevalent, which begins in the milk ducts and spreads from there to nearby tissue. Breast cancer that begins in the lobules, the milk-producing glands of the breast, is known as invasive lobular carcinoma. Metastatic breast cancer has spread to other organs, such as the bones, liver, or lungs [8, 9, 20].

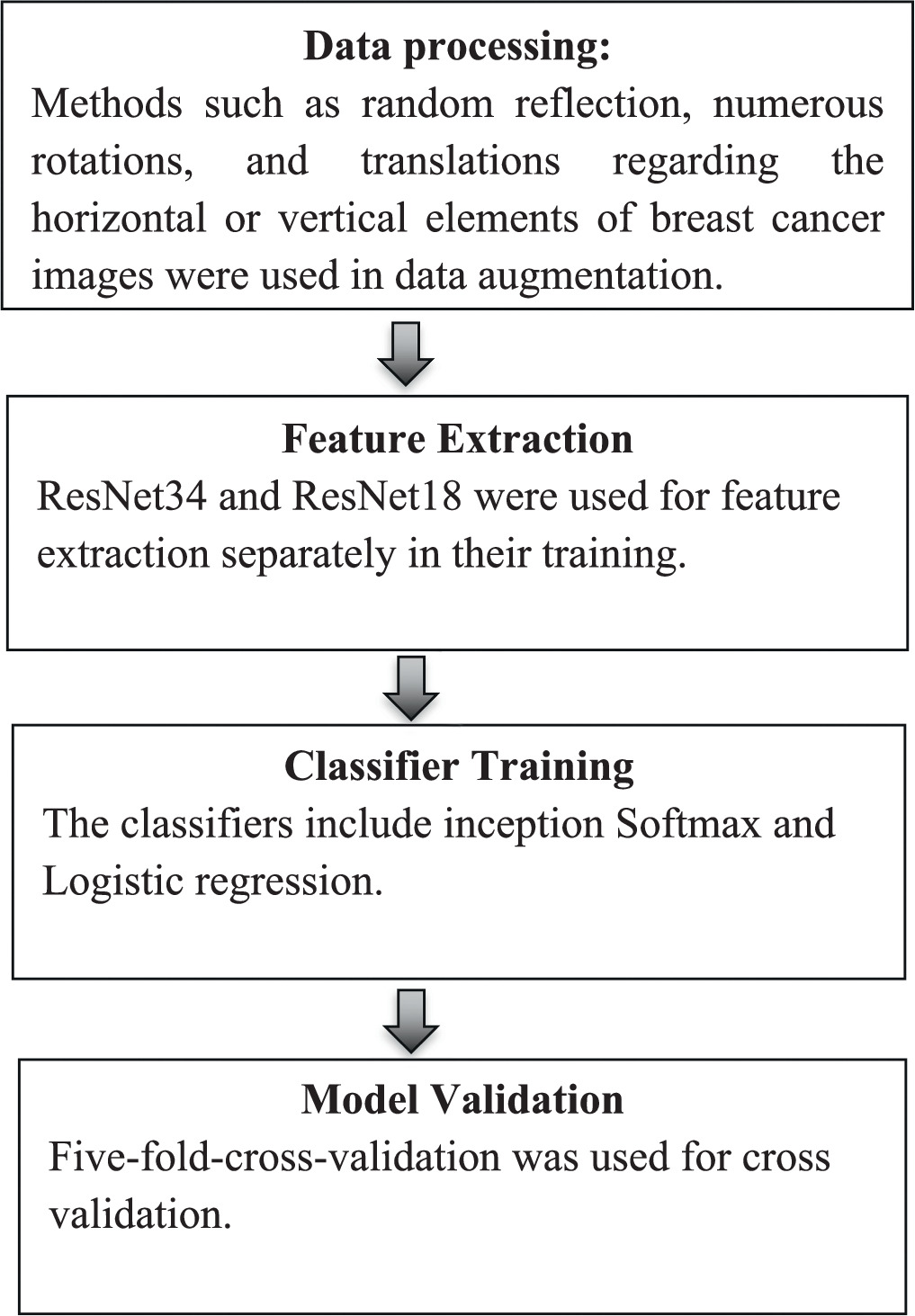

Inception V3 and the Softmax and logistic regression classifiers may also be needed to improve training. Inception V3 extracts features from the input images, and the Softmax and logistic regression classifiers use these features for classification: Softmax handles multi-class classification and logistic regression handles binary classification. As illustrated in figure 3, this combination allows the study's model to detect breast cancer accurately by leveraging transfer learning.

Figure 3. Flowchart of research methodology.

Inception V3

Inception V3 is a deep CNN architecture designed by Google, and it serves here as the feature extractor. It is pre-trained on a large dataset (e.g., ImageNet) and has learned to extract high-level features from images. In transfer learning, the weights of the Inception V3 model can be fine-tuned on a dataset specific to breast cancer detection, leveraging the features learned from ImageNet [53]. This makes it possible to benefit from the generalization power of the pre-trained model while adapting it to a specific task.

SoftMax

Softmax is a function used in classification tasks to convert the raw scores (logits) produced by the previous layers (e.g., Inception V3) into probabilities. Each output neuron in the Softmax layer represents the probability of a particular class; here, the classes represent different types or stages of breast cancer. Because the Softmax output probabilities sum to one, it is suitable for multi-class classification tasks [54].
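A minimal sketch of the Softmax step in plain Python. The four class names are taken from the dataset categories listed under Methodology; the logits are hypothetical stand-ins for the scores produced by the network's last layer.

```python
import math

CLASSES = ["cancer_negative", "cancer_positive",
           "implant_cancer_negative", "implant_cancer_positive"]


def softmax(logits):
    """Map raw scores to probabilities that are positive and sum to one."""
    m = max(logits)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 0.5, -1.0, 0.1]  # hypothetical outputs of the last layer
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]
print([round(p, 3) for p in probs], predicted)  # predicted is 'cancer_negative'
```

The predicted class is simply the one with the highest probability, which is always the one with the highest logit.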

Logistic regression

Logistic regression is a statistical method for binary classification tasks; here it serves as a binary classifier to distinguish between benign and malignant tumors. Unlike Softmax, which handles multiple classes, logistic regression outputs a probability score between 0 and 1 for the positive class (e.g., cancer_positive) [55], and the probability of the negative class (e.g., cancer_negative) is its complement, one minus that score. Logistic regression is a simpler model than deep neural networks such as Inception V3, but it can still be effective for certain classification tasks, especially those with binary outcomes.
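A minimal sketch of logistic regression for the benign/malignant decision. The feature values, weights and bias are hypothetical; in practice the features would come from the pre-trained network and the weights would be learned. Mathematically, this is the two-class special case of Softmax: a Softmax over the scores z and 0 gives the same probability for the positive class.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function, mapping any real z into [0, 1]."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(features, weights, bias):
    """Logistic regression: P(cancer_positive) = sigmoid(w . x + b); the negative class gets the rest."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    p_positive = sigmoid(z)
    return {"cancer_positive": p_positive, "cancer_negative": 1.0 - p_positive}


# Hypothetical features (e.g. pooled CNN outputs) and parameters.
print(predict_proba([0.8, 0.3], weights=[2.0, -1.0], bias=-0.5))
```

A case is labelled cancer_positive when its probability exceeds a chosen threshold, commonly 0.5.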

Methodology

Figure 3 outlines the steps involved in the research methodology.

Data collection and input

A dataset of 1,200 breast x-ray mammography images from the National Radiological Society's (NRS) archives [56] was used in the study. The dataset was divided into four categories:

- implant_cancer_negative,

- implant_cancer_positive,

- cancer_negative,

- cancer_positive.

The dataset displayed in figure 4 was split into a training set (80%) and a validation set (20%) in order to ensure an objective assessment.
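The paper does not state whether the 80/20 split was stratified. Because the categories are not equally represented (see Research limitations), a stratified split, which holds out about 20% of each category, keeps the validation set's class mix close to the training set's; a plain random split, such as fastai's `RandomSplitter(valid_pct=0.2)` performs, does not guarantee this. The sketch below is one way to stratify in plain Python; the equal class counts in the usage lines are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, valid_fraction=0.2, seed=42):
    """Return (train_idx, valid_idx), holding out about valid_fraction of every class."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train_idx, valid_idx = [], []
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        n_valid = round(len(idxs) * valid_fraction)
        valid_idx.extend(idxs[:n_valid])
        train_idx.extend(idxs[n_valid:])
    return sorted(train_idx), sorted(valid_idx)


categories = ["implant_cancer_negative", "implant_cancer_positive",
              "cancer_negative", "cancer_positive"]
labels = [c for c in categories for _ in range(300)]  # hypothetical: 300 images per category
train_idx, valid_idx = stratified_split(labels)
print(len(train_idx), len(valid_idx))  # 960 240
```

Fixing the seed makes the split reproducible, so later experiments are evaluated on the same validation images.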

Pre-processing

Image pre-processing was applied to all input images from the NRS to increase the consistency of classification results and produce better features. Because CNN training repeatedly passes over the data, a large-scale image dataset was necessary to avoid the risk of over-fitting. Each image in the NRS dataset, originally 6000 × 4000 pixels, was resized to 128 × 128 pixels, which sped up processing.
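A minimal sketch of the resizing step, using nearest-neighbour sampling on a grayscale image stored as a list of rows. The paper does not name the interpolation method, so nearest-neighbour is an assumption chosen for simplicity; image libraries usually offer smoother filters such as bilinear. Mapping a 6000 × 4000 image (3:2 aspect ratio) directly onto 128 × 128 squashes it; cropping or padding first, which fastai's `Resize` supports through its crop, pad and squish methods, avoids that distortion.

```python
def resize_nearest(image, out_h=128, out_w=128):
    """Nearest-neighbour resize: each output pixel copies the input pixel it maps back onto."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(i * in_h) // out_h][(j * in_w) // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


# Small hypothetical example: a 4 x 6 image shrunk to 2 x 3.
image = [[10 * r + c for c in range(6)] for r in range(4)]
print(resize_nearest(image, out_h=2, out_w=3))  # [[0, 2, 4], [20, 22, 24]]
```

Shrinking each image by a factor of several thousand in pixel count is what makes training on 1,200 images fast.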

Data augmentation

A data augmentation technique was applied to the dataset to expand the training data for the convolutional neural networks, improve system performance, and balance the dataset.
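The specific transformations are listed under Results: random reflection, rotations, and horizontal or vertical translations. The plain-Python sketch below applies those three kinds of transformation to a square grayscale image stored as a list of rows. It is an illustration under assumptions: rotations are restricted to multiples of 90 degrees (arbitrary angles need interpolation), the maximum shift of 2 pixels and the 50% flip probabilities are hypothetical. Augmentation of this kind is usually applied only to training images, with a fresh random draw each epoch.

```python
import random


def hflip(img):
    return [row[::-1] for row in img]


def vflip(img):
    return img[::-1]


def rot90(img):
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def translate(img, dy, dx, fill=0):
    """Shift the image down by dy and right by dx pixels; uncovered pixels get `fill`."""
    h, w = len(img), len(img[0])
    return [
        [img[i - dy][j - dx] if 0 <= i - dy < h and 0 <= j - dx < w else fill
         for j in range(w)]
        for i in range(h)
    ]


def augment(img, rng, max_shift=2):
    """Random reflection, 90-degree rotation and translation."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    for _ in range(rng.randrange(4)):
        img = rot90(img)
    return translate(img, rng.randint(-max_shift, max_shift), rng.randint(-max_shift, max_shift))


patch = [[1, 2], [3, 4]]
print(rot90(patch))            # [[3, 1], [4, 2]]
print(translate(patch, 0, 1))  # [[0, 1], [0, 3]]
augmented = augment([[r * 4 + c for c in range(4)] for r in range(4)], random.Random(0))
```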

Training and evaluation

To train the deep transfer learning model, the authors used the fastai library, which provides high-level abstractions for training deep learning models [12]. The pre-trained ResNet18 model acted as the feature extractor, while the last layers were fine-tuned for the specific breast cancer detection task. During training, stochastic gradient descent (SGD) optimisation with a learning rate schedule was used to update the model's parameters. Training iterated through the training set for 100 epochs, allowing the model to capture the underlying patterns in the data and converge. The model's performance was evaluated using accuracy, the confusion matrix, precision, recall, and the harmonic mean of precision and recall (F1 score).
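The paper does not specify the learning rate schedule. As one plausible example, the sketch below implements a one-cycle schedule (cosine warm-up to a peak rate, then cosine decay) alongside a plain SGD update. Its defaults (25% warm-up, start at max_lr/25, end at max_lr/1e5) mirror those of fastai's `fit_one_cycle`, but the peak rate of 1e-3 is hypothetical, and the step count assumes 960 training images (80% of 1,200) with the batch size of 64 reported under Results.

```python
import math


def one_cycle_lr(step, total_steps, max_lr=1e-3, warmup_frac=0.25, div=25.0, final_div=1e5):
    """Learning rate at a given step: cosine warm-up to max_lr, then cosine decay."""
    warmup_steps = max(1, int(total_steps * warmup_frac))
    start_lr, end_lr = max_lr / div, max_lr / final_div

    def cos_interp(a, b, t):
        # Moves smoothly from a (t = 0) to b (t = 1).
        return b + (a - b) * (1 + math.cos(math.pi * t)) / 2

    if step < warmup_steps:
        return cos_interp(start_lr, max_lr, step / warmup_steps)
    t = (step - warmup_steps) / max(1, total_steps - 1 - warmup_steps)
    return cos_interp(max_lr, end_lr, min(t, 1.0))


def sgd_step(params, grads, lr):
    """Plain stochastic gradient descent: move each parameter against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]


# 100 epochs of 15 mini-batches each (960 training images / 64 per batch).
total = 100 * 15
for step in (0, total // 4, total - 1):
    print(step, one_cycle_lr(step, total))
```

The small initial rate protects the pre-trained weights early on, and the long decay lets the model settle into a minimum by the final epoch.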

Results and discussion

Data augmentation

Figure 5 shows images obtained after data augmentation with random reflections, rotations, and horizontal or vertical translations of the breast cancer images.

Figure 5. Data appearance after augmentation.

Pre-training deep neural network models: ResNet18, ResNet34

Proposed model performance on image dataset

Table 1 shows the evaluation metrics of the ResNet18 and ResNet34 architectures, trained for 100 epochs with a batch size of 64. With the transfer learning technique, ResNet34 reached a validation accuracy of 86.7% (validation error rate 13.3%) and ResNet18 a validation accuracy of 92% (validation error rate 8%) after training. These results suggest the potential of the models as objective decision-support tools for radiologists and oncologists.

Table 1. The evaluation metrics of the models.

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| ResNet18 | 92.0% | 0.952 | 0.953 | 0.912 |
| ResNet34 | 86.7% | 0.932 | 0.932 | 0.897 |

The confusion matrices in figure 6 show the number of cases that were correctly classified as cancer (true positives, TP), incorrectly classified as not having cancer (false negatives, FN), incorrectly classified as having cancer (false positives, FP), and correctly classified as not having cancer (true negatives, TN). Precision is the number of true positives divided by the sum of true positives and false positives, TP/(TP + FP). Recall is the number of true positives divided by the sum of true positives and false negatives, TP/(TP + FN). The F1 score is the harmonic mean of precision and recall, so it takes both into account. As table 1 shows, ResNet18 achieved a precision of 0.952, a recall of 0.953 and an F1 score of 0.912 after training, while ResNet34 achieved a precision of 0.932, a recall of 0.932 and an F1 score of 0.897.
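The definitions above translate directly into code. The counts below are hypothetical and are not read from figure 6. For the four-category setting, the same formulas can be applied to each class in turn (treating it as positive and the rest as negative) and then averaged, although the paper does not state which averaging it used.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, error rate, precision, recall and F1 from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of precision and recall
    return {"accuracy": accuracy, "error_rate": 1 - accuracy,
            "precision": precision, "recall": recall, "f1": f1}


# Hypothetical validation counts for 240 images.
m = classification_metrics(tp=110, fp=10, fn=5, tn=115)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

F1 can equivalently be written as 2TP/(2TP + FP + FN), which is a convenient cross-check: here it gives 220/235.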

Figure 6. Confusion Matrix of ResNet18 and ResNet34 Architecture.

ResNet18 and ResNet34 are compared with other models on the basis of transfer learning. ResNet18 was first taken through the whole process, from data processing and augmentation through feature extraction, training and performance evaluation to model deployment, and ResNet34 was then taken through the same process. Both models used the feature extraction and classification processes shown in tables 2, 3 and 4.

Table 2. Layers for feature extraction and model classification.

| Task | Model | Layers for Feature Extraction | Classification Model | Metrics | Performance Illustration |
|---|---|---|---|---|---|
| Image Classification | ResNet18 | Conv1-conv5 | Dense (linear) | Top-1 Accuracy (%) | ResNet18 versus ResNet34 on ImageNet: [Image showing comparative accuracy curves] |
| Image Classification | ResNet34 | Conv1-conv5 | Dense (linear) | Top-5 Accuracy (%) | ResNet18 versus ResNet34 on CIFAR-10: [Image showing comparative accuracy bars] |

Table 3. The evaluation metrics of the ResNet18 model.

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| ResNet18 | 92.0% | 0.952 | 0.953 | 0.912 |

Table 4. The evaluation metrics of the ResNet34 model.

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| ResNet34 | 86.7% | 0.932 | 0.932 | 0.897 |

Table 2 specifies the layers used for feature extraction and the models used for classification, and tables 3 and 4 give the corresponding evaluation metrics.

Research limitations

Dataset Size and Diversity: The dataset is small and not sufficiently diverse in terms of patient demographics and imaging modalities. This may limit how well the model's performance generalises to different populations and contexts.

Imbalance in Class Distribution: The dataset used in the research is imbalanced, with more samples of one class (i.e., healthy patients) than of the other (i.e., patients with cancer). This imbalance can affect the model's performance and bias its results.

Conclusion

The study developed a deep transfer learning model for breast cancer detection using ResNet18 and ResNet34, and compared the method with other methods, as shown in table 5. After 100 epochs of training, the models achieved strong performance metrics, including accuracy, precision, recall, and F1 score, demonstrating their capacity to identify breast cancer cases effectively. The findings are similar to those of earlier research demonstrating the efficiency of deep transfer learning for breast cancer detection. The study successfully applied transfer learning from pre-trained ResNet18 and ResNet34 architectures to breast cancer detection in x-ray mammography images. The application of the two residual network models, ResNet18 and ResNet34, with the fastai framework to breast cancer detection is a novel contribution of this work. The successful adoption of this methodology holds considerable promise for supporting healthcare workers, particularly in African settings where access to specialist medical expertise may be limited. Figures A1, A2 and A3 in the appendix show the code used to develop the web demo in figure A4 with ResNet18 and ResNet34; the demo can also be tried at the link below.

Table 5. Comparison of experimental results of different models.

| Model | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| ResNet18 | 92.0% | 0.952 | 0.953 | 0.912 |
| ResNet34 | 86.7% | 0.932 | 0.932 | 0.897 |
| ResNet18 [45] | 89.4% | 0.921 | 0.918 | 0.919 |
| ResNet34 [46] | 90.1% | 0.927 | 0.924 | 0.925 |
| ResNet18 [47] | 91.2% | 0.945 | 0.938 | 0.941 |
| ResNet34 [48] | 93.1% | 0.958 | 0.954 | 0.956 |
| ResNet18 [49] | 88.7% | 0.915 | 0.910 | 0.912 |
| ResNet34 [50] | 91.4% | 0.938 | 0.933 | 0.935 |

https://huggingface.co/spaces/Addai/Breast_cancer_detection_with_deep_transfer_learning

Recommendations

Further study and validation are needed to broaden the applicability of this approach, including comprehensive testing on varied datasets and in real-world clinical situations. Incorporating interpretability approaches could also make the model's decision-making process more transparent, supporting its acceptance and reliability among healthcare professionals. The findings contribute to the growing body of knowledge on deep transfer learning for breast cancer diagnosis and pave the way for future breakthroughs in this field. Sophisticated technology and machine learning algorithms can transform healthcare delivery and make a substantial impact on breast cancer detection and treatment.

Acknowledgments

We would like to express our sincere gratitude to the reviewers for their invaluable time, expertise, and constructive feedback in reviewing the manuscript. Their thoughtful insights have greatly contributed to the improvement and refinement of the work, enhancing its quality and scholarly merit. We truly appreciate their dedication to the peer-review process, which has been instrumental in shaping the final version of the manuscript. The authors would also like to express their sincere gratitude to the individuals who contributed significantly to the preparation and completion of this research paper. In particular, we wish to acknowledge Mr Osei Samuel Nyarko, Miss Amaniampong Emmanuella Konadu, Miss Mercy Agyei, and Mr Isaac Amoah, all from Kwame Nkrumah University of Science and Technology, for their hard work and dedication. These students played crucial roles at various stages of this project, and their commitment significantly contributed to its success.

Data availability statement

All data that support the findings of this study are included within the article (and any supplementary files).

Ethical statement

The authors used a dataset of 1,200 scanned x-ray images of various patients' breasts, obtained from the National Radiological Society's archives. The society's archive is available at: 'https://ishrad.org/historical-archive/original-publications/history-of-national-radiological-societies-and-organisations' [56].

Appendix

Figure A1. Code demonstrating the development of the web demo using ResNet18.

Figure A2. Code of the ResNet34 model after training for 100 epochs.

Figure A3. Code of the ResNet18 model after training for 100 epochs.

Figure A4. Confusion Matrix of ResNet18 Architecture.
