Abstract

Objective. The primary purpose of this study was to investigate the electrophysiological mechanism underlying different modalities of sensory feedback and multi-sensory integration in typical prosthesis control tasks. Approach. We recruited 15 subjects and developed a closed-loop setup for three prosthesis control tasks covering typical activities in practical prosthesis use, i.e. prosthetic finger position control (PFPC), equivalent grasping force control (GFC) and box and block control (BABC). All three tasks were conducted under tactile feedback (TF), visual feedback (VF) and tactile-visual feedback (TVF), respectively, with simultaneous electroencephalography (EEG) recording to assess the EEG response underlying different types of feedback. Behavioral and psychophysical assessments were also administered in each feedback condition. Results. EEG results showed that VF played a predominant role in the GFC and BABC tasks. This was reflected by a significantly lower somatosensory alpha event-related desynchronization (ERD) in TVF than in TF and no significant difference in visual alpha ERD between TVF and VF. In the PFPC task, there was no significant difference in somatosensory alpha ERD between TF and TVF, while a significantly lower visual alpha ERD was found in TVF than in VF, indicating that TF was essential in situations related to proprioceptive position perception. Tactile-visual integration was found when TF and VF were congruently implemented, showing an obvious activation over the premotor cortex in the three tasks. Behavioral and psychophysical results were consistent with EEG evaluations. Significance. Our findings could provide neural evidence for multi-sensory integration and the functional roles of TF and VF in a practical setting of prosthesis control, offering a multi-dimensional insight into the functional mechanisms of sensory feedback.


1. Introduction

Dexterity and manipulation abilities of the human hands are enabled by the complex biological structure, the sophisticated sensory system and the bidirectional sensorimotor control loop [1]. The sensory pathway continuously encodes the hand configuration (proprioception) and interactions with the environment (exteroception). Then the encoded information is transferred to the spinal cord and brain circuitries. In general, humans plan and execute movements under the guidance of vision, proprioception and tactile input [2, 3]. For example, complex grasping movements are achieved by integrating estimates of hand positions or contact forces with object information (including object size, shape, stiffness and so on) from multiple sensory modalities, weighting each of them by its relative reliability [4–7].

Acquired or congenital amputation has a profound impact on the quality of life. Amputees are often equipped with myoelectric prostheses to restore the missing functions [8]. During the contraction of residual muscles, surface electromyogram signals are recorded by EMG electrodes. The collected signals are processed by the prosthesis controller to command the prosthesis to perform a desired action (e.g. hand closing/opening) [9]. This type of myoelectric control has been developed for decades as an intuitive approach to control hand prostheses. However, the absence of sensory feedback impedes the functionality and efficiency of dexterous hand prostheses, which is likely to play an important role in prosthesis rejection rates [10–12].

In analogy with the sensory capabilities of human hands, an ideal prosthetic hand should deliver sensory information about prosthetic hand positions (proprioceptive feedback) as well as its contact states with target objects in the human-centered environments (tactile feedback (TF)). Several methods with invasive [13–15] and non-invasive [16, 17] techniques have been explored for restoring sensory feedback, wherein hand apertures and grasping forces are the most common encoded measurements transmitted to the user. D'Anna et al implemented remapped proprioceptive feedback by delivering prosthesis joint angle information through intra-neural stimulation [18]. Cipriani et al transmitted grasping force information by modulating the frequency of a single pager-type vibration motor [19]. Using a different method, Raspopovic et al showed that participants were able to distinguish three different force levels and effectively modulate the grasping force of the prosthesis with the feedback of median and ulnar nerves stimulation [13]. Such artificial feedback is deemed to improve user experience [10, 20] and manipulation performance of myoelectric prostheses [21].

Apart from the artificial feedback, vision is considered an essential sensory modality during the interaction with the environment. Studies have compared the individual effectiveness of visual feedback and TF for motor control. Some studies clearly suggested that TF was beneficial [22, 23]. For example, electrotactile feedback was reported to significantly improve force control even in the presence of abundant visual cues [24]. Similarly, a longitudinal study reported that amputees were consistently better at performing a delicate grasping task when vibrotactile feedback was provided [25]. However, in some cases, TF alone did not bring more improvement than vision [19, 26, 27]. Nunzio et al reported that there was no significant difference in performance (accuracy and precision) across target force levels when using vibrotactile compared to visual feedback (VF) [28]. A similar result was reported by Dosen et al, where electrotactile feedback using multichannel electrodes led to a performance in grasping force control (GFC) similar to that of VF [29]. Focusing on a more diverse set of prosthesis control tasks, Marasco et al revealed that integrating the bionic modalities of touch, kinesthesia and visuomotor control enabled amputees to perform tasks more similarly to able-bodied subjects [30]. Taken together, the results about the functional roles of tactile and VF have so far been inconclusive across different situations. The evaluations have mainly relied on behavioral performance. How the encoded sensory information is reflected in the brain circuits and what electrophysiological mechanism underlies sensory feedback remain elusive. Extensive studies should be conducted to provide neural evidence for the integrated allocation of different feedback sources and the functional mechanism of sensory feedback. Such studies would yield a multi-dimensional understanding of the functional roles of different modalities of sensory feedback, drawing not only on behavioral performance but also on electrophysiological evaluation. Their findings will also guide the design and configuration of sensory feedback in different motor tasks within the framework of a closed-loop prosthesis control system.

In the current study, we developed a closed-loop setup for three typical prosthesis control tasks, i.e. prosthetic finger position control (PFPC, corresponding to remapped proprioception sense), equivalent GFC (corresponding to remapped tactile sense projecting proportional myoelectric signal) and box and block control (BABC, corresponding to restored dexterity). All the three tasks were conducted under TF, VF and tactile-visual feedback (TVF), respectively, with a simultaneous recording of the electroencephalogram (EEG) to assess the cortical activities underlying sensory feedback. We performed a comprehensive comparison of EEG responses and task performance among different types of feedback. This explorative study aimed to further elaborate on the functional roles of tactile and VF in typical prosthesis control tasks from a view of EEG evaluation. Additionally, we also focused on how tactile and visual information was integrated during task accomplishment. The results would provide a new framework for understanding the electrophysiological mechanism of sensory feedback and were expected to guide the design of sensory feedback for myoelectric forearm prostheses.

2. Materials and methods

2.1. Subjects

Fifteen able-bodied subjects (11 males and 4 females, all right-handed subjects, mean age ± SD = 23 ± 3) participated in this study. Each subject was informed of the experimental procedures and signed a written informed consent. The study was in accordance with the Declaration of Helsinki and approved by the Ethics Committee of Shanghai Jiao Tong University.

2.2. EEG recording

EEG signals were simultaneously recorded during the three tasks. Sixty-four channels of EEG were acquired at a sampling frequency of 1200 Hz using a g.HIamp system (g.tec, Austria) and a quick cap with active electrodes. Electrodes were arranged according to the extended 10–20 system. The left earlobe was used as the reference while the forehead (AFC electrode) served as the ground. The signals were band-pass filtered at 0.5–100 Hz, and a 50 Hz notch filter was used to diminish power line interference. Impedances of all electrodes were kept below 5 kΩ. In the three tasks, subjects started and stopped a trial by pressing two separate buttons with their left hands. The recorded sensor signals (from pressure and flex sensors) and button states were processed and encoded in a microcontroller unit (MCU, Arduino). Specifically, the sensor data were mapped to the corresponding feedback values in each task, while the button state was mapped to the capital letter 'S' (trial start) or 'E' (trial end). The MCU then sent the letter and feedback values to MATLAB via USB communication. The control program in MATLAB then drove the electrical stimulator automatically. Once a letter was read by MATLAB, a trigger was written to the parallel port address so that the EEG recording was synchronized via parallel port communication. The main objective of the three tasks was to investigate the functional roles of tactile and VF in the context of prosthetic finger position control, force control and object transfer, especially using the method of electrophysiological evaluation.

2.3. Prosthetic finger position control

Sensing the hand posture (proprioception) is critical for grasping. Here, a substitution approach is used to perceive the joint angle of prosthetic fingers. Finger position information was provided using sensory substitution based on electrotactile stimulation. The stimulation was produced by a pulse stimulator (STG4008, GmbH, Germany), outputting a multi-pulse train of biphasic, rectangular current pulses on the fingertip of the right index via an independent stimulation electrode (Shanghai Global Biotech Inc. China, 10 mm in diameter). Subjects were fitted with two electromyography (EMG) electrodes and a proportional EMG controller (SJQ18-T, Danyang Prostheses Factory, CO., LTD, China), allowing control of a prosthetic hand (SJQ18 hand, Danyang Prostheses Factory, CO., LTD., China) opening and closing using EMG signals. The two EMG electrodes were placed on a set of antagonistic muscles of the right forearm which were responsible for the wrist flexion and extension. Two pressure sensors (FlexiForce Sensor A101, Weike Electronics Co., Ltd China) and a flex sensor (MCU-103, Risym Co., Ltd China) were integrated within prosthetic digits (thumb and index) and the metacarpophalangeal joint, respectively. The prosthetic hand was fixed on the table using a custom-molded socket. The averaged measurement of the two pressure sensors was used to quantify the contact force between the prosthetic fingers and objects. The pinch distance between thumb and index fingers was set by a normalized value of the flex sensor characterizing the geometrical relationship between the pinch distance and the bending angle of the flex sensor. Subjects were required to wear a wrist strap placed on the skin between the stimulation electrode and EMG electrodes to reduce the artifact caused by electrical stimulation.

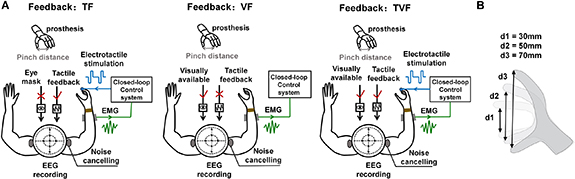

The pinch distance of the prosthesis ranged from 0 mm (fully closed) to 100 mm (fully open). The pinch distances of the three target positions were 30 mm, 50 mm and 70 mm (figure 1(B)). Subjects were instructed to control the prosthetic hand to reproduce a target position under one of the three types of feedback (TF, VF and TVF), presented in random order. As shown in figure 1(A), subjects were visually isolated in TF. The position information was conveyed solely through sensory substitution using electrotactile stimulation. The position information delivered from the prosthetic hand (pinch distances from 0 mm to 100 mm) was encoded using a simple linear encoding scheme. The measured pinch distance modulated the stimulation frequency, while the pulse width and current amplitude were fixed at 200 µs and 3 mA, respectively. These two parameters have been used in our previous work [31, 32]. Eight subjects from the previous study were recruited again in this study. Although the remaining seven subjects had not experienced such a test before, a short pilot was run to confirm the feasibility of the chosen parameters (200 µs, 3 mA and 1 Hz). All the subjects reported that they could sense the stimulation under these parameters and that no uncomfortable sensation was evoked. Frequency modulation resulted in changes of the perceived sensation density. It has been demonstrated that subjects were more sensitive to changes in stimulation frequency than to changes in pulse width [32]. Here, frequency modulation was therefore used for stimulation encoding to achieve more effective discrimination of the applied stimulation and a better match between the perceived sensation and the feedback information. The stimulation parameter was computed as follows

$$f_{p} = f_{p,\min} + \left(f_{p,\max} - f_{p,\min}\right)\frac{pd}{pd_{\max}}$$

where $f_{p}$ was the stimulation frequency of the remapped proprioceptive feedback; $f_{p,\min}$ was the minimum frequency that could elicit a perceived sensation; $f_{p,\max}$ was the maximum value allowing to differentiate the change of stimulation frequencies (with the step of 10 Hz); $pd$ was the measured value of pinch distance; and $pd_{\max}$ was the maximum value of pinch distance (100 mm). Individual-specific $f_{p,\min}$ and $f_{p,\max}$ for each subject were detected before the experiment. Here, the overall average frequency range was 1–150 Hz. All the subjects underwent a brief learning session (about 20 min) to help map the stimulation frequency to the pinch distance. They were familiarized with the evoked sensations at the three chosen positions, then controlled the prosthetic hand back to the same position by contracting corresponding muscles. In VF, there was no electrotactile stimulation, but vision of the spatial position of the prosthetic hand was enabled. Subjects actively controlled the prosthetic hand by visually judging the spatial distance between the thumb and index. During the training, verbal correction from the experimenter was provided. In TVF, both vision and electrotactile stimulation were provided to the subjects. Subjects were required to wear noise-canceling earplugs in TF, VF and TVF to avoid acoustical effects.
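The linear frequency encoding of pinch distance can be illustrated with a minimal Python sketch. It assumes that the frequency rises linearly from the minimum perceivable frequency at 0 mm to the maximum discriminable frequency at 100 mm; the direction of the mapping, the function name `pinch_to_frequency` and the default limits (taken from the reported average range of 1–150 Hz) are assumptions for illustration, not the authors' implementation.

```python
def pinch_to_frequency(pd_mm, f_min_hz=1.0, f_max_hz=150.0, pd_max_mm=100.0):
    """Linearly map a pinch distance (mm) to a stimulation frequency (Hz).

    Assumption: 0 mm maps to f_min_hz and pd_max_mm maps to f_max_hz.
    In practice f_min_hz and f_max_hz would be subject-specific.
    """
    # Clamp to the valid aperture range so that sensor noise cannot push
    # the frequency outside [f_min_hz, f_max_hz].
    pd_clamped = min(max(pd_mm, 0.0), pd_max_mm)
    return f_min_hz + (f_max_hz - f_min_hz) * pd_clamped / pd_max_mm


# Frequencies for the three target pinch distances used in the task.
for target_mm in (30.0, 50.0, 70.0):
    print(target_mm, round(pinch_to_frequency(target_mm), 1))
```

With these illustrative limits, the three target positions map to clearly separated frequencies, which is the property the encoding relies on.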

Figure 1. Overview of the PFPC task. (A) Experimental setup. The prosthetic hand was driven by EMG signals acquired from a set of antagonistic muscles on the right forearm of subjects and classified into hand opening and closing movements. Subjects were instructed to control the prosthetic hand to three target positions under TF, VF and TVF while EEG signals were simultaneously recorded. In TF, subjects were visually isolated and the position information was conveyed using sensory substitution via electrotactile stimulation. In VF, there was no electrotactile stimulation, and only a view of the prosthetic hand was enabled. In TVF, electrotactile stimulation and the view of the hand were both provided to subjects. To reduce the artifact due to electrical stimulation, a wrist strap was placed between the stimulation electrode and EMG electrodes. Subjects were required to wear noise-canceling earplugs during the task. (B) The three target positions in the PFPC task, corresponding to three levels of pinch distances.

After training, subjects quickly expressed confidence in interpreting the evoked sensation/spatial distance, as well as a readiness to initiate the task. To ensure a standardized setup across the trials and subjects, the initial position of the prosthetic hand was fully closed (minimum pinch distance). To remind subjects of target positions, the target pinch distance was visually shown before each VF trial, while electrotactile stimulation with a frequency matching the target pinch distance was applied to subjects before each TF trial. During the task, subjects controlled the prosthetic finger position by adjusting the muscle contraction until they felt the target position was reproduced. The reproduced pinch distance and EEG signals were simultaneously recorded. There were 30 trials (10 trials for each position) for each type of feedback. The order of the three target positions was randomized within each feedback. The order of the three types of feedback was randomized for each subject.

2.4. Equivalent GFC

Grasping forces were generated during the contact with objects. In a typical myoelectric prosthesis, the generated myoelectric signal is associated with the hand closing velocity and the resulting contact force, i.e. a stronger contraction leads to faster closing and a stronger grasp [33]. Importantly, the myoelectric signal anticipates the prosthesis outputs (velocity and force) as an input for the prosthesis control. The user could therefore make predictions on the force before touching the object and adjust the myoelectric command during prosthesis closing by relying on the feedback on the EMG. It has been demonstrated that adding the EMG feedback increased grasping performance compared to force feedback only [34]. The following study further indicated that as a fully independent feedback source, the online EMG feedback was superior to the classic force feedback in a realistic grasping task [33]. As such, in the GFC task, subjects were not required to control a real prosthetic grasping with different forces, but to control the EMG signals by modulating muscle contractions. The generated EMG signals were calibrated to an equivalent grasping force. The strategy was to feedback directly the calibrated EMG value, instead of the state of the prosthesis (e.g. grasping force). A customized EMG acquisition system with embedded amplification, filtering, and rectification circuits was developed, collecting EMG signal with the sampling rate of 1000 Hz. The preprocessing procedures, including filtering (bandpass filtering from 20 to 450 Hz) and rectification, were sequentially implemented via hardware circuits to remove artifacts and stabilize the baseline [35]. The processed EMG signals were then additionally filtered with a 50 Hz notch filter and a 0.1–5 Hz 2nd order Butterworth bandpass filter. The EMG electrode was placed on the extensor carpi ulnaris of the right forearm. 
The root mean square (RMS) value of the filtered EMG was calculated with a 200 ms analysis window and a 50 ms slide increment, representing the equivalent grasping force. To normalize the acquired RMS, the maximum activation was measured as the strongest contraction that subjects could maintain for 2 s without fatigue. The maximum voluntary contraction (MVC) was defined as the average of five measurements. Finally, the myoelectric control was calibrated by linearly mapping [0%, 80%] of MVC to [0%, 100%] of the maximum equivalent target force. The level of muscle activation was therefore calibrated to the equivalent grasping force.
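The windowed RMS and the MVC calibration described above can be sketched as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, the EMG is taken to be already filtered and sampled at 1000 Hz, and the output is clamped to [0, 100]%, which the text does not specify.

```python
import math


def sliding_rms(samples, fs_hz=1000, window_ms=200, step_ms=50):
    """RMS of a filtered EMG signal over a sliding analysis window."""
    win = int(fs_hz * window_ms / 1000)    # 200 samples at 1000 Hz
    step = int(fs_hz * step_ms / 1000)     # 50 samples at 1000 Hz
    values = []
    for start in range(0, len(samples) - win + 1, step):
        segment = samples[start:start + win]
        values.append(math.sqrt(sum(v * v for v in segment) / win))
    return values


def rms_to_force_percent(rms, mvc_rms, mvc_fraction=0.8):
    """Linearly map [0, 80%] of MVC to [0, 100%] of the maximum target force.

    Assumption: contractions above 80% of MVC saturate at 100%.
    """
    ratio = rms / (mvc_fraction * mvc_rms)
    return 100.0 * min(max(ratio, 0.0), 1.0)


# A constant unit-amplitude signal of 400 samples yields five windows.
print(len(sliding_rms([1.0] * 400)))
# 40% of MVC corresponds to half of the maximum equivalent target force.
print(rms_to_force_percent(0.4, mvc_rms=1.0))
```

Mapping only up to 80% of MVC means the strongest target level can be reached without a maximal contraction, which is consistent with the stated aim of avoiding fatigue.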

Subjects were instructed to perform the task by contracting the wrist extensor muscle to reproduce one of the three equivalent target forces (20 , 50

, 50 and 80

and 80 maximum equivalent target force) under TF, VF and TVF, respectively. In TF, the experimental setup was the same as that in the PFPC task. The generated equivalent grasping force was encoded using a linear frequency modulation scheme to map the perceived stimulation density with the equivalent grasping force. The electrotactile stimulation parameter was described here

maximum equivalent target force) under TF, VF and TVF, respectively. In TF, the experimental setup was the same as that in the PFPC task. The generated equivalent grasping force was encoded using a linear frequency modulation scheme to map the perceived stimulation density with the equivalent grasping force. The electrotactile stimulation parameter was described here

where  was the stimulation frequency of the TF; the settings for

was the stimulation frequency of the TF; the settings for  and

and  were similar to those for

were similar to those for  and

and  ; gf was the recorded grasping force which was quantified by the calibrated RMS value; and

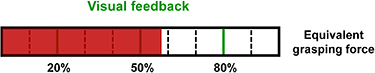

; gf was the recorded grasping force which was quantified by the calibrated RMS value; and  was the maximum target force. The pulse shape, current amplitude and pulse width were the same as those in the PFPC task. Prior to the task, individual-specific MVC was determined for each subject. Before starting with the data acquisition, the principles of equivalent GFC, including the proportionality between the muscle contraction and the equivalent grasping force, as well as the TF interpretation (i.e. mapping between tactile sensation and equivalent target force) were explained to subjects both verbally and with practical training until they were confident in these operations. Three frequency codes corresponding to the three target forces were presented randomly to subjects. Then they were asked to reproduce the equivalent target force by contracting muscles according to the evoked tactile sensation. In VF, only visual information shown on the PC screen was provided. The three equivalent target forces were marked as green vertical lines. The red bar depicted the measured equivalent grasping force in real-time, as shown in figure 2. In TVF, both electrotactile stimulation and visual information were provided. The experimental protocol for the GFC task was similar to the PFPC task. There were 30 trials per feedback, with 10 trials per target force. The three types of feedback were randomly presented across subjects. The order of the three equivalent target forces were randomized within each feedback. During the task, subjects performed the trials at a comfortable pace, usually with a few seconds between trials to avoid muscle fatigue. The reproduced grasping force and simultaneous EEG signals were recorded for analyses.

was the maximum target force. The pulse shape, current amplitude and pulse width were the same as those in the PFPC task. Prior to the task, individual-specific MVC was determined for each subject. Before starting with the data acquisition, the principles of equivalent GFC, including the proportionality between the muscle contraction and the equivalent grasping force, as well as the TF interpretation (i.e. mapping between tactile sensation and equivalent target force) were explained to subjects both verbally and with practical training until they were confident in these operations. Three frequency codes corresponding to the three target forces were presented randomly to subjects. Then they were asked to reproduce the equivalent target force by contracting muscles according to the evoked tactile sensation. In VF, only visual information shown on the PC screen was provided. The three equivalent target forces were marked as green vertical lines. The red bar depicted the measured equivalent grasping force in real-time, as shown in figure 2. In TVF, both electrotactile stimulation and visual information were provided. The experimental protocol for the GFC task was similar to the PFPC task. There were 30 trials per feedback, with 10 trials per target force. The three types of feedback were randomly presented across subjects. The order of the three equivalent target forces were randomized within each feedback. During the task, subjects performed the trials at a comfortable pace, usually with a few seconds between trials to avoid muscle fatigue. The reproduced grasping force and simultaneous EEG signals were recorded for analyses.

Figure 2. The GFC task in VF. The generated equivalent grasping force was displayed with a moving red bar on the screen. The three target levels of grasping force were marked as green vertical lines. Other levels of grasping force were indicated with dashed lines.

2.5. Box and blocks control

The box and block task is a typical method for testing the manual dexterity of prosthetic hands [36]. As shown in figure 3, the prosthetic hand was attached to the right forearm of subjects through a custom bracket. The setup consisted of ten wooden blocks (3 × 3 × 3 cm³) and a wooden box (50 cm × 25 cm) which was physically separated in the middle. Subjects were required to control the prosthesis to transfer the wooden blocks from one side of the box to the other.

Figure 3. Overview of the BABC task. The prosthetic hand was attached to the right forearm of subjects through a custom bracket and was controlled by contracting corresponding wrist extensor/flexor muscles. Subjects were instructed to control the prosthesis to transfer the wooden blocks from one side of the box to the other in the conditions of TF, VF and TVF, respectively. Meanwhile, EEG signals were simultaneously recorded. In TF, electrotactile stimulation was used to provide a touch hint while vision was blocked. In VF, only vision was available. In TVF, both electrotactile stimulation and vision were provided to subjects. During the task, subjects were acoustically isolated and wore the metal wrist strap between EMG and stimulation electrodes to reduce the artifact caused by electrical stimulation.

During the task, subjects needed to open and close the prosthetic hand by contracting wrist extensor/flexor muscles, while moving the forearm horizontally. In TF, subjects were required to wear an eye mask to block visual input. Because the blocks had to be transferred with the prosthesis, subjects were trained to become familiar with the block-moving procedure and the relative positions of the blocks and the box. The block was placed exactly at the starting position of the prosthetic hand. Once the trial started, the subjects simply moved the prosthetic hand down and then grasped the block. 'Touch' events were delivered to subjects via electrotactile stimulation without any visual input. A 'touch' was detected when the grasping force crossed the threshold (0 N). This event was simultaneously passed to the subject by delivering a 500 ms electrotactile stimulation on the fingertip of the right index. The parameters of the rectangular pulses were set as follows: current of 3 mA, pulse width of 200 µs and frequency of 30 Hz. In VF, subjects were required to transfer blocks relying only on vision. In TVF, the 'touch' hint and vision were both available to subjects. The successful transfer of ten wooden blocks was considered a complete trial. If a block was dropped during the task, the experimenter guided the subject's prosthesis back to the starting position, and the grasping and moving procedures were restarted. The three types of feedback were randomly assigned to subjects. Each type of feedback included 10 trials. The EEG signals and time spent on transferring ten blocks were recorded for analyses.

2.6. Data analysis

2.6.1. EEG preprocessing

EEG data were corrected before analyses. The signals were first inspected visually, and trials with artifacts such as large drifts (amplitude change greater than 200 µV during the idle state) and electrode spikes (peak responses with amplitudes exceeding the millivolt order) were removed. After that, independent component analysis was applied to the remaining trials to remove blink and movement artifacts. The average number of trials removed for artifacts was 19.8 out of 210 trials (9.4%), and the average number of independent components removed per subject was 5.4.

2.6.2. Task-related brain response localization

The EEG data were segmented into 7 s epochs comprising [−4 −0.5] s prior to trial onset (baseline) and [0.5 4] s post trial onset (task state). Time-frequency decomposition of each trial was carried out to construct the spatial-spectral-temporal structure corresponding to each type of feedback. We used R² to describe the distribution of the EEG discriminative information between task state and baseline in the spatial-spectral-temporal domain. We defined the baseline and task state as two class labels and assumed a linear relationship between EEG features and class labels. The R² index was then defined as the squared Pearson correlation coefficient between EEG features and class labels [37]. It was calculated as:

$$R^2 = \frac{\operatorname{cov}(x, y)^2}{\operatorname{var}(x)\,\operatorname{var}(y)}$$

where $x_k = x_k(ch, f, t)$ was the spatial estimation of the $k$th epoch and the $ch$th channel with frequency = $f$ and time = $t$. $y_k \in \{y_b, y_s\}$, representing that $x_k$ belonged to class b and s, respectively. When the $k$th epoch was from the baseline, the corresponding class was $y_k = y_b$. When the $k$th epoch was in the task state, the class was $y_k = y_s$. Therefore, the bivariate sequence of $(x_k, y_k)$ was established. We could get the contrast imaging between the EEG features of baseline and task state in the spatial-spectral-temporal domain:

$$R^2(ch, f, t) = \frac{\operatorname{cov}\big(x_k(ch, f, t),\, y_k\big)^2}{\operatorname{var}\big(x_k(ch, f, t)\big)\,\operatorname{var}(y_k)}$$

The distribution of R2 in the spatial-spectral-temporal domain indicated the contribution of different frequency components and electrode channels to the distinction between the task state and baseline, i.e. the related channel and frequency band for the brain activation during the task.

Accordingly, the R2 was calculated in the spatial-spectral-temporal domain, i.e. channels 1–64, 2–45 Hz, [−4 −0.5] s for baseline and [0.5 4] s for task state. Here, R2 values were averaged along the 3.5 s epochs during the baseline and task states. With the R2 value distributions, we could better identify the channels and frequency bands activated under the different types of feedback.
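As an illustration of the R2 index described above, the following is a minimal sketch rather than the analysis code used in the study. It computes the squared Pearson correlation between a feature array and binary class labels for every channel and frequency at once. The function name `r_squared`, the array layout (epochs on the first axis), the label coding (−1 for baseline, +1 for task state) and the synthetic data are all assumptions made for the example; the squared correlation does not depend on which two distinct label values are chosen.

```python
import numpy as np


def r_squared(features, labels):
    """Squared Pearson correlation between features and binary class labels.

    features: array of shape (n_epochs, ...), e.g. (n_epochs, n_channels, n_freqs)
    labels:   array of shape (n_epochs,) holding two distinct numeric values
    Returns an array with the trailing shape of `features`.
    A feature that is constant across epochs yields a division by zero.
    """
    x = np.asarray(features, dtype=float)
    y = np.asarray(labels, dtype=float)
    xc = x - x.mean(axis=0)          # centre each feature over epochs
    yc = y - y.mean()                # centre the labels
    # Sum over the epoch axis of (label deviation) * (feature deviation).
    cross = np.tensordot(yc, xc, axes=(0, 0))
    denom = (xc ** 2).sum(axis=0) * (yc ** 2).sum()
    return cross ** 2 / denom


# Synthetic example (hypothetical data): 20 baseline and 20 task epochs,
# 4 channels, 3 frequency bins, with a power drop at channel 2, bin 1.
rng = np.random.default_rng(0)
labels = np.repeat([-1.0, 1.0], 20)          # assumed coding: -1 baseline, +1 task
features = rng.normal(size=(40, 4, 3))
features[labels == 1.0, 2, 1] -= 3.0
r2 = r_squared(features, labels)
peak = np.unravel_index(r2.argmax(), r2.shape)
print("largest R2 at (channel, frequency bin):", peak)
```

Averaging the resulting map within a frequency band, as done for the topographical plots, would then be a mean over the frequency axis.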

2.6.3. Event-related desynchronization/synchronization (ERD/ERS)

Event-related desynchronization/synchronization (ERD/ERS) reflected the suppression/enhancement of cortical rhythm power with respect to a baseline reference. In this study, the alpha band (8–13 Hz) was investigated. The baseline was [−1.5 −0.5] s prior to the trial onset, while the task state was [0.5 4] s after the trial onset. Data were bandpass filtered (8–13 Hz) to calculate ERD/ERS. A 0.5 s sliding window with a step of 0.004 s was used to segment the data. The amplitudes of the samples within each window were squared and averaged. The ERD/ERS was obtained by subtracting the baseline power from the windowed power and dividing the difference by the baseline power.
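The ERD/ERS computation described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the input is assumed to be already band-pass filtered to 8–13 Hz, the sampling rate of 250 Hz is inferred from the 0.004 s step (one sample per step) and is not stated in the text, and the function name `erd_ers` and the synthetic sinusoid are hypothetical. Negative values indicate power suppression (ERD) and positive values indicate enhancement (ERS).

```python
import numpy as np


def erd_ers(alpha, fs, onset, baseline=(-1.5, -0.5), win_s=0.5):
    """Relative alpha power change with respect to a pre-onset baseline.

    alpha:    1-D signal already band-pass filtered to the alpha band
    fs:       sampling rate in Hz
    onset:    sample index of trial onset
    baseline: (start, end) of the reference interval in seconds re. onset
    Returns (rel, starts); rel[i] belongs to the window starting at starts[i].
    """
    alpha = np.asarray(alpha, dtype=float)
    power = alpha ** 2
    win = int(round(win_s * fs))
    # Mean of the squared samples in each window, sliding one sample at a time.
    windowed = np.convolve(power, np.ones(win) / win, mode="valid")
    b0 = onset + int(round(baseline[0] * fs))
    b1 = onset + int(round(baseline[1] * fs))
    ref = power[b0:b1].mean()                 # baseline reference power
    rel = (windowed - ref) / ref              # (task - baseline) / baseline
    return rel, np.arange(windowed.size)


# Synthetic trial (hypothetical): a 10 Hz rhythm whose amplitude halves at onset.
fs = 250                                      # assumed sampling rate
n = np.arange(8 * fs)
t = n / fs - 4.0                              # time axis from -4 s to 4 s
alpha = np.where(t < 0, 2.0, 1.0) * np.sin(2 * np.pi * 10 * t)
onset = 4 * fs
rel, starts = erd_ers(alpha, fs, onset)
task_mean = rel[starts >= onset + int(0.5 * fs)].mean()
print("mean relative power change in task state:", round(float(task_mean), 3))
```

Halving the amplitude quarters the power, so the synthetic trial yields a relative change of about −0.75 in the task state.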

2.6.4. Behavioral and psychophysical measurements

For the PFPC and GFC tasks, the outcome measure was the mean absolute error (MAE), defined as the averaged absolute difference between the reproduced and target pinch distances/grasping forces. The time taken to successfully transfer ten blocks was adopted as the outcome measure for the BABC task. To evaluate subjects' psychophysical assessment of the feedback, a modified version of the NASA task-load index (TLX) questionnaire was administered for TF, VF, and TVF after each task, respectively. The questionnaire not only included common TLX questions to evaluate physical and mental task load [36], but also involved questions regarding the benefit of feedback and the perceived prosthesis embodiment during the task execution. Specifically, subjects answered the question 'Did you have the feeling that the prosthesis was part of your body' to assess the prosthesis embodiment, and feedback benefit was indicated using the question 'To what extent could you benefit from the feedback'. Subjects were asked to report how much they agreed with each statement on a scale ranging from 0 (not at all) to 100 (completely) for each question.
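For concreteness, the MAE outcome measure can be written as a short function. This is a minimal sketch; the function name and the pinch-distance values below are hypothetical and are not data from the study.

```python
import numpy as np


def mean_absolute_error(reproduced, target):
    """Average absolute difference between reproduced and target values."""
    reproduced = np.asarray(reproduced, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean(np.abs(reproduced - target)))


# Hypothetical pinch distances in mm (illustrative values only).
targets = [20.0, 40.0, 60.0]
reproduced = [21.0, 38.0, 63.0]
mae = mean_absolute_error(reproduced, targets)
print("MAE (mm):", mae)
```

The same function applies to grasping forces in the GFC task, with forces in place of distances.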

2.6.5. Statistical analysis

The Kolmogorov-Smirnov test was performed to assess the normality of various datasets. The test showed that all the datasets were normally distributed. A one-way analysis of variance (ANOVA) was applied to compare the overall task performance/EEG response (premotor cortex) across the three types of feedback in each task. In the PFPC and GFC tasks, a one-way ANOVA was also applied to compare the difference across the three levels of pinch distances/grasping forces. Bonferroni correction was used for the one-way ANOVA analyses whenever it applied. For the analyses of EEG response on somatosensory/visual cortex, a paired-samples t-test was conducted to compare the difference in the evoked alpha ERD between TF/VF and TVF. All computations were performed using the software package IBM SPSS Statistics Version 22.

3. Results

3.1. Task performance

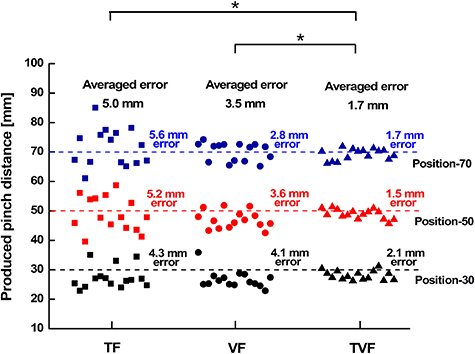

We first characterized the acuity of the remapped proprioceptive sense in the PFPC task. The precisions of reproduced pinch distances for the three target positions under TF, VF, and TVF were shown in figure 4. The performance was the best under TVF with an overall smallest MAE ± SD (standard deviation) = 1.7 ± 0.2 mm, showing significant differences compared to TF (p = 0.01, overall MAE ± SD = 5.0 ± 0.5 mm) and VF (p = 0.03, overall MAE ± SD = 3.5 ± 0.5 mm). There was no significant difference in the reproduced precision between TF and VF (p > 0.05). The addition of visual information significantly improved the precision of reproduced positions compared to the tactile modality alone, and vice versa.

Figure 4. PFPC precisions for three target positions in the conditions of TF, VF and TVF, respectively. The precision was quantified by the mean absolute error between the reproduced and target pinch distances. The asterisk indicated a significant difference with a level of p < 0.05.

Figure 5 reported the precision of reproduced grasping force in the GFC task. Interestingly, there was no significant difference (p > 0.05) in overall MAE of reproduced grasping force between VF (overall MAE ± SD = 1.38 ± 0.8) and TVF (overall MAE ± SD = 1.36 ± 0.8). With or without tactile information, subjects achieved similar precision in generating target forces as long as they could see the explicit visual indication of the grasping force. When the visual information was removed, the reproduced forces were distributed more dispersedly around target forces in TF (overall MAE ± SD = 4.78 ± 3.0), showing larger MAE than TVF (p = 0.02) and VF (p = 0.03). This indicated that VF was more effective than TF in the GFC task.

Figure 5. GFC precisions for three target grasping forces in the conditions of TF, VF and TVF, respectively. The precision was quantified by the mean absolute error between the reproduced equivalent grasping force and the target grasping force. The asterisk indicated a significant difference with a level of p < 0.05.

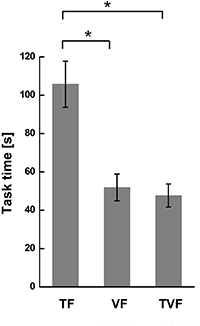

Task time was calculated to characterize the performance of the BABC task, as shown in figure 6. The performance under TF, VF and TVF exhibited a similar trend to that in the GFC task. Namely, TF resulted in the longest task time, showing significant differences from VF (p = 0.01) and TVF (p = 0.008), whereas no significant difference in task time was found between VF and TVF (p > 0.05).

Figure 6. Task time in the BABC task in the conditions of TF, VF and TVF, respectively. The asterisk indicated a significant difference with a level of p < 0.05.

3.2. Psycho-physiological self-assessments

As shown in figure 7, the mean NASA-TLX score for the PFPC task was significantly higher than for the other two tasks (p < 0.05), indicating a higher task complexity for the PFPC task. Interestingly, there were significant differences in the NASA-TLX scores between TF and TVF in the GFC (p = 0.03) and BABC (p = 0.04) tasks. This suggested that visual information in TVF significantly decreased the mental workload for these two tasks. However, no significant difference in the NASA-TLX score was found between TF and TVF in the PFPC task (p > 0.05). The addition of visual information did not significantly reduce the mental workload because of the high complexity of the PFPC task.

Figure 7. Results of NASA-TLX score under TF, VF and TVF in the three tasks, respectively. The solid dot represented the NASA-TLX score for each subject. The asterisk indicated a significant difference with a level of p < 0.05.

As shown in figure 8(A), there were significant differences (p < 0.05) in the prosthesis embodiment between TVF and VF. TF resulted in significantly stronger prosthesis embodiment compared to VF (p < 0.05). As shown in figure 8(B), the benefit score in VF was significantly higher than that in TF (p < 0.05), but no significant difference was found between VF and TVF (p > 0.05) in the GFC and BABC tasks. This suggested that VF contributed more to the accomplishment of these two tasks than TF. By contrast, the benefit score was significantly higher for TVF than VF (p = 0.02) in the PFPC task, and there was no significant difference between TF and VF. This suggested that TF was clearly beneficial to the accomplishment of the PFPC task.

Figure 8. Results of embodiment score (A) and benefit score (B) under TF, VF and TVF in the three tasks, respectively. The mean was reported as a horizontal line, and the box represented the interquartile. The whiskers encompassed all data samples (no outliers removed).

3.3. R2 distribution across feedback

The R2 index was used to localize the EEG channels and frequency bands that contributed to the evoked brain activity during the tasks. Figure 9 showed the grand-averaged R2 distribution across the spatial-spectral domain for each type of feedback, averaged among all tasks and subjects. The R2 contrast values were all concentrated in the alpha band (8–13 Hz) for VF, TF and TVF (see figure 9(A)). Therefore, R2 values were averaged within the alpha band, and corresponding topographical R2 contrast images were obtained, as shown in figure 9(B). In the TF condition, the contrast information was concentrated at electrodes over the contralateral somatosensory cortex. In the VF condition, the R2 distribution was focused over the occipital cortex. Interestingly, superadditive response regions of R2 distribution appeared in the TVF condition, with additional activity over premotor regions which could be related to multisensory integration.

Figure 9. Grand-averaged R2 distributions among all subjects and tasks. (A) The R2 value distributions across spatial-spectral domain in TF, VF and TVF, respectively. (B) The topographical plots of R2 value averaged within the alpha band (8–13 Hz) in TF, VF and TVF, respectively.

3.4. Task-related alpha ERD/ERS

Based on the brain response localizations under different types of feedback, we chose channels CP3, CP5, P3 and P5 for the calculation of somatosensory alpha ERD/ERS, channels of P1, PZ, P2, PO3, POZ and PO4 for the calculation of visual alpha ERD/ERS, and channels F1, FZ, F2, FC1, FCZ and FC2 to calculate the alpha ERD/ERS over premotor regions.

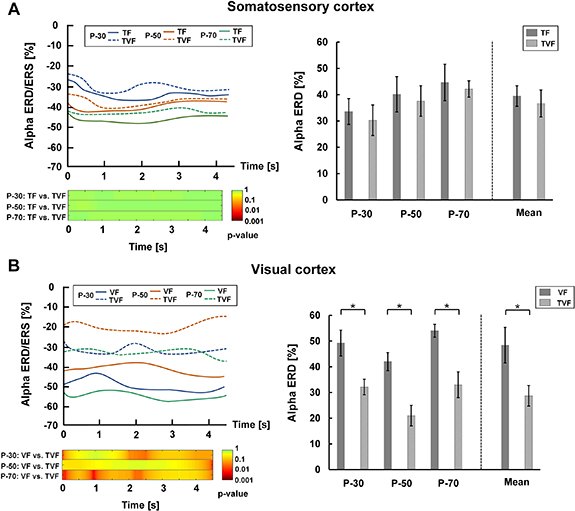

For the PFPC task, alpha ERD/ERS over the somatosensory cortex was shown in figure 10(A). There was a power reduction compared to the baseline during the epoched task period for the three target positions under TF and TVF (see the left plot of figure 10(A)). Statistical analysis showed that there was no significant difference in the mean somatosensory alpha ERD between TF and TVF (p > 0.05) (see the right plot of figure 10(A)). This indicated that the somatosensory cortex was involved during proprioceptive position reproduction in both the TF and the TVF condition. Figure 10(B) showed alpha ERD/ERS over the visual cortex. There was an obvious visual alpha ERD during the task under VF and TVF, and the visual alpha ERD in VF was stronger than that in TVF for each target position (left plot of figure 10(B)). The mean visual alpha ERD was significantly stronger in VF than in TVF (p < 0.05) (right plot of figure 10(B)). This suggested that the recruitment of the visual cortex significantly decreased when TF was added.

Figure 10. Alpha ERD/ERS over the somatosensory and visual cortices in the PFPC task. (A) Left plot. The upper subplot represented the changes of somatosensory alpha ERD/ERS during task state for the three target positions under the conditions of TF and TVF. The lower subplot reported the post hoc comparisons between TF and TVF for the statistical tests (p-value). Right plot. Mean somatosensory alpha ERD for the three target positions in TF and TVF. (B) Left plot. The upper subplot represented changes of visual alpha ERD/ERS during task state for the three target positions under the conditions of VF and TVF. The lower subplot reported the post hoc comparisons between VF and TVF for the statistical tests (p-value). Right plot. Mean visual alpha ERD for the three target positions in VF and TVF.

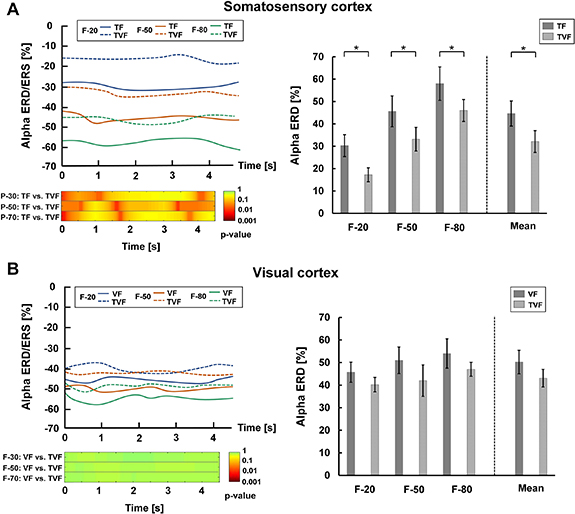

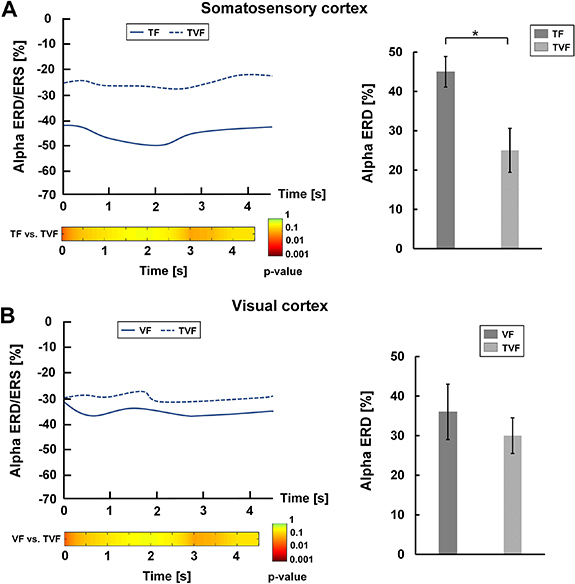

For the GFC task, alpha ERD/ERS over the somatosensory cortex was shown in figure 11(A). Obvious differences in power reduction were observed between TF and TVF during the task period for each target grasping force (left plot of figure 11(A)). The mean somatosensory alpha ERD was significantly stronger in the TF condition compared to the TVF condition (p < 0.05) (right plot of figure 11(A)). This suggested that there was weaker activation of the somatosensory cortex when additional visual information was provided. Figure 11(B) showed alpha ERD/ERS over the visual cortex. The power reduction under VF and TVF was similar over the epoched duration (left plot of figure 11(B)). No significant difference in the mean visual alpha ERD was found between VF and TVF (p > 0.05) (right plot of figure 11(B)), suggesting that the visual cortex in TVF remained activated to the same extent as that in VF. The results in the BABC task were similar to those in the GFC task, as shown in figure 12.

Figure 11. Alpha ERD/ERS over the somatosensory and visual cortices in the GFC task. (A) Left plot. The upper subplot represented changes of somatosensory alpha ERD/ERS during task state for the three target grasping forces under the conditions of TF and TVF. The lower subplot reported the post hoc comparisons between TF and TVF for the statistical tests (p-value). Right plot. Mean somatosensory alpha ERD for the three target grasping forces in TF and TVF. (B) Left plot. The upper subplot represented changes of visual alpha ERD/ERS during task state for the three target grasping forces under the conditions of VF and TVF. The lower subplot reported the post hoc comparisons between VF and TVF for the statistical tests (p-value). Right plot. Mean visual alpha ERD for the three target grasping forces in VF and TVF.

Figure 12. Alpha ERD/ERS over the somatosensory and visual cortices in the BABC task. (A) Left plot. The upper subplot represented changes of somatosensory alpha ERD/ERS during task state under the conditions of TF and TVF. The lower subplot reported the post hoc comparisons between TF and TVF for the statistical tests (p-value). Right plot. Mean somatosensory alpha ERD in TF and TVF. (B) Left plot. The upper subplot represented changes of visual alpha ERD/ERS during task state under conditions of VF and TVF. The lower subplot reported the post hoc comparisons between VF and TVF for the statistical tests (p-value). Right plot. Mean visual alpha ERD in VF and TVF.

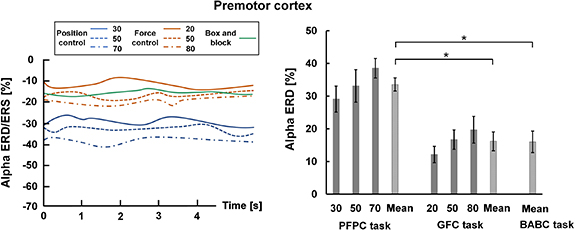

Alpha ERD/ERS over the premotor cortex was shown in figure 13. During the task period, EEG signals in the three tasks all exhibited alpha power reduction over the premotor cortex (the left plot of figure 13). Specifically, the mean premotor alpha ERD was significantly stronger in the PFPC task compared to the GFC and BABC tasks (p < 0.05) (the right plot of figure 13). This supported the view that the PFPC task involved more premotor cortical activation for integrating tactile and visual information into a cross-modal representation of task-related perceptions than the GFC and BABC tasks.

Figure 13. Alpha ERD/ERS over the premotor cortex in the three tasks. Left plot. Changes of alpha ERD/ERS over the premotor cortex during task state for the three tasks. Right plot. Average alpha ERD over the premotor cortex for the three tasks.

4. Discussion

4.1. Functional roles of tactile and VF

Many behavioral studies have shown the general principle that the brain could compute the sensory weights of different feedback sources according to their relative reliability for interpreting the actual state [4, 38]. In the current study, the functional roles of tactile and VF in three typical prosthesis control tasks were characterized using an electrophysiological method of EEG evaluation, in combination with behavioral and psychophysiological evaluation. In the PFPC task, behavioral results showed that tactile and visual information both contributed to the task accomplishment. Similar results have also been reported, in which TF improved task performance in situations where vision was not sufficient during hand aperture control [21, 39]. This behavioral principle was further supported by the EEG results in our study. Significantly stronger visual alpha ERD was observed under the condition of VF when compared to TVF. The somatosensory alpha ERD in TF was also, on average, stronger than in TVF, but this difference was not significant (figure 10). Therefore, activations of somatosensory and visual cortices under the condition of multimodal feedback (TVF) tended to be weaker compared to the unimodal conditions (TF and VF). This was probably because subjects had to attend to the synchronized tactile and visual information together rather than to tactile or visual information alone. This indicated that VF and TF were both beneficial to the task. Subjects interpreted the two modalities of sensory information separately, and then integrated them into a synthetic judgment of the current prosthesis position. The contribution of tactile and visual feedback (TF and VF) was further confirmed by the significantly higher benefit score in the psychophysiological questionnaires when additional tactile/visual information (TVF) was provided, as shown in figure 8(B).
Subjects reported that it was not easy to judge the precise prosthesis position with tactile or visual information alone. The minimum distinguishable frequency difference at their index fingers limited their ability to identify small changes of prosthesis position encoded by continuous frequency modulation with a step of 1 Hz. The resolution of vision was theoretically superior to tactile frequency perception [40], but the visual precision in judging spatial distance was also affected by the dynamic change of prosthesis positions. Therefore, subjects utilized the encoded tactile information to roughly locate hand positions, while direct visual information was used to check the position in real time and make minor corrections.

However, the results in the GFC and BABC tasks differed from those of the PFPC task. Behavioral performance in both VF and TVF was significantly better than in TF (figures 5 and 6). The somatosensory alpha ERD in TF was significantly higher than that in TVF for both GFC and BABC tasks (figures 11(A) and 12(A)), but there was no significant difference in the visual alpha ERD between VF and TVF (figures 11(B) and 12(B)). The addition of visual information significantly affected the distribution of cortical activation, showing a transition of activation from the somatosensory cortex to the visual cortex. This might be because subjects shifted their attention from tactile information to visual information. It further confirmed that visual information was predominant when both tactile and visual information were provided in these two tasks. Due to its high resolution and sensitivity, VF could often dominate over artificial TF [41], which is what happened in the GFC and BABC tasks. The results of the psychophysiological evaluation were consistent with the behavioral and EEG results. In the GFC and BABC tasks, benefit scores in VF and TVF were both significantly higher when compared to TF (figure 8(B)). Matching a fluctuating bar to a static line indicating the target force made the GFC task an obvious case in which VF was all that was needed to obtain maximum performance. Similarly, subjects could visually determine whether the prosthesis had grasped the block when transferring blocks in the BABC task. The nature of this task was such that contact feedback was simply not required for successful execution, as also shown for the box and block test in the work of Markovic et al [36].

Interestingly, we found that the behavioral results in the PFPC task were not statistically consistent with the EEG results. The task performances in TF and VF were significantly worse than that in TVF. However, there was no significant difference in somatosensory ERD between TF and TVF. This discrepancy in EEG response might be attributed to the formation of prosthesis embodiment during the PFPC task. Studies have reported the importance of tactile afferents to the sense of body ownership [42]. This was also supported by the psychophysiological results in the current study (as shown in figure 8(A)), in which TF showed significantly higher embodiment scores when compared to VF in the PFPC task. When both tactile and visual information were provided (TVF), the multi-modality information was integrated to form a coherent percept of the prosthesis, facilitating the generation of prosthesis embodiment, especially in such a proprioceptive position control task. Therefore, the recruitment of the somatosensory cortex during the PFPC task might not have contributed fully to the task performance, but might have been partly associated with the formation of prosthesis embodiment.

4.2. Tactile-visual integration

Psychological studies have shown that multisensory information can powerfully interact to form a coherent perception [43–45]. For example, the brain relies on multisensory information to build a unified embodiment of our own body and its interactions with the environment. Somatosensory information is often combined with information of other sensory modalities, such as vision, and with cognitive mechanisms (e.g. previous experience, learning, memory) to produce a percept that can be interpreted and acted upon quickly. Neuroimaging studies showed the involvement of the premotor cortex and intraparietal sulcus in tactile-visual integration processing [46], supporting observations previously reported in monkeys [47, 48]. The premotor cortex has also been found to subserve sensorimotor transformations for the guidance and control of actions [49]. Therefore, the premotor region has clear multimodal properties including motor, somatosensory and visual integrations, and contributes to transforming multisensory information into a motor format [50]. Here, in the context of prosthesis control tasks, we observed additional activation over the premotor cortex in TVF (figure 9). This suggested that the premotor cortex was involved in the tactile-visual integration when tactile and VF were both provided. Specifically, consistent results for multi-sensory integration were found between EEG and psychophysiological evaluation. The averaged premotor alpha ERD in the PFPC task was significantly higher compared to the GFC and BABC tasks (figure 13), reflecting greater integration between the tactile and visual information in the PFPC task. A similar trend was also observed in the results of the NASA-TLX questionnaires, showing significantly higher NASA-TLX scores in the PFPC task compared to the other two tasks (figure 7). Subjects reported higher physical and mental workload when performing the PFPC task due to the higher task complexity.
Therefore, more tactile-visual integration was needed to provide multi-dimensional sensory information for better task performance.

4.3. Implication

The three tasks (PFPC, GFC and BABC tasks) tested in this study represented typical activities in the practical prosthesis application, including proprioceptive position senses and interactions with the environment. To the best of our knowledge, this is the first study to simultaneously investigate the task performance and brain responses underlying different types of sensory feedback (TF, VF and TVF) in a series of motor tasks. Previous studies mostly focused on behavioral performance without concurrently assessing task-related changes in cortical activities [9]. Moreover, the functional roles of different types of feedback were revealed from a multi-dimensional view, including EEG, behavioral and psychophysiological assessments. The benefit of feedback depended on the type of task. TF contributed to the performance of the PFPC task but was not essentially beneficial in the GFC and BABC tasks. The integration of tactile and visual information was needed in the PFPC task to make a more precise judgment of the prosthesis position, because vision was not precise enough in the estimation of spatial distances. Contrary to the PFPC task, subjects could easily evaluate the reproduced grasping force/block state with explicit VF in the GFC and BABC tasks, and the tactile information would be redundant in these situations. Therefore, when multi-modal sensory information was provided, the functional role of each type of feedback depended on its relative reliability in the task. The effectiveness of TF was emphasized in tasks related to proprioceptive position perception. In such tasks, visual information was not enough to ensure good task performance and prosthesis embodiment. For the tasks that involved interaction with the external environment (such as object grasping), VF would play a predominant role. Besides, even though TF marginally increased the physical and mental workload, it contributed substantially to the prosthesis embodiment.

However, the simplified experimental design was a potential limitation of the current study. For example, we did not use a real prosthesis in the GFC task. The control of grasping force between the prosthetic hand and the object was simplified to the control of muscle contraction, wherein the bidirectional muscle contraction during grasping was simplified to the contraction of the extensor carpi ulnaris. In addition, the tactile sensation corresponding to the target position/force was provided to subjects in the PFPC and GFC tasks, whereas such a cue is not realistic in the application of prosthesis control. As such, the control of prosthesis position and grasping force was actually simplified to a sensation matching task, in which subjects needed to reproduce the position/equivalent force corresponding to the given target sensation. Meanwhile, only three target positions/forces were set in the PFPC and GFC tasks, limiting the range of prosthesis activities covered. Taken together, further investigations should be conducted in the future to extend the experimental design to more practical prosthesis application situations. Moreover, amputee subjects were not recruited in this study. Instead, we selected the fingertips of able-bodied subjects as the interface of electrotactile feedback. There are some similarities between amputees and able-bodied subjects regarding sensory feedback. For amputees, by transferring the information from sensors in the fingers of a prosthetic hand to specific locations on the skin of the stump, the fingers of the phantom hand could be stimulated, which is called phantom finger sensation [9, 17]. By analogy, the phantom finger sensation of amputees corresponded to the real finger sensation of able-bodied subjects. Choosing the fingertip as the feedback area could closely approximate the sensations evoked in amputees by sensory feedback.
In our previous work, we have also shown that the sensory mechanisms of the phantom finger area were similar to those of normal fingers in terms of EEG response to electrotactile stimulation [51]. From this perspective, choosing the fingertip as the feedback area could also provide a reference for investigating the brain response of amputees in the closed-loop prosthesis control task. Nevertheless, amputees are the actual users of prostheses, and more amputees should be recruited in the future to further validate the current results.

5. Conclusion

In conclusion, this study provided new insight into the functional roles of TF and VF in three typical prosthesis control tasks (PFPC task, GFC task and BABC task) based on EEG evaluations, accompanied by behavioral and psychophysical assessments. Results showed that TF was essential in realizing the tasks related to proprioceptive position perception (PFPC task). VF played a predominant role in the tasks that involved interaction with the external environment (GFC and BABC tasks). Task-related alpha ERD over the premotor cortex indicated tactile-visual integration processes when tactile and VF were congruently implemented. Our findings have the potential to advance the understanding of the electrophysiological mechanisms of sensory feedback and to improve the design of sensory feedback for myoelectric forearm prostheses.

Acknowledgments

We thank all the subjects for their participation in the study. This work is supported in part by the China National Key R&D Program (Grant No. 2020YFC207800), the National Natural Science Foundation of China (Grant Nos. 91948302, 51805320), the Science and Technology Commission of Shanghai Municipality (Grant No. 18JC1410400) and Shanghai Pujiang Program (Grant No. 20PJ1408000).

Data availability statement

The data generated and/or analyzed during the current study are not publicly available for legal/ethical reasons but are available from the corresponding author on reasonable request.