Abstract

The journey of a prosthetic user is characterized by the opportunities and the limitations of a device that should enable activities of daily living (ADL). In particular, experiencing a bionic hand as a functional (and, advantageously, embodied) limb is the premise for promoting regular use of the device and mitigating the risk of its abandonment. To achieve this result, different aspects need to be considered to make the artificial limb an effective solution for accomplishing ADL. From this perspective, this review aims at presenting the current issues and envisioning the upcoming breakthroughs in upper limb prosthetic devices. We first define the sources of input and feedback involved in system control (at user-level and device-level), alongside the related algorithms used in signal analysis. Moreover, the paper focuses on the user-centered design challenges and strategies that guide the implementation of novel solutions in this area in terms of technology acceptance, embodiment, and, in general, human-machine integration based on co-adaptive processes. We provide readers (belonging to the target communities of researchers, designers, developers, clinicians, industrial stakeholders, and end-users) with an overview of the state of the art and the potential innovations in bionic hand features, hopefully promoting interdisciplinary efforts for solving current issues of upper limb prostheses. The integration of different perspectives should be the premise for a transdisciplinary intertwining leading to a truly holistic comprehension and improvement of bionic hand design. Overall, this paper aims to move the boundaries of prosthetic innovation beyond the development of a tool and toward the engineering of human-centered artificial limbs.


1. Introduction

Over the past 20 years, poly-articulated upper limb prostheses (ULPs) have undergone several technological and scientific developments to satisfy the different needs of the upper limb amputee community. Nonetheless, in a recent study, Salminger et al (2020) observed overall ULP abandonment rates of about 44% in a population of mainly (92%) myoelectric prosthesis users. They also highlighted that, despite the past decade of developments, technological limitations still prevent the restoration of the full functionality of a missing limb, leading to a substantially increased rate of prosthesis abandonment. The main cause of this ineffectiveness resides in a design process that is insufficiently tailored to the patient (Salminger et al 2020).

According to the American Orthotic and Prosthetic Association (Aopa 2016), partial amputations, i.e. finger amputations, represent the majority of upper-limb losses (75.6%), while trans-radial and trans-humeral amputations constitute a percentage oscillating between 5% and 6%. Despite this, the level of impairment caused by trans-radial and trans-humeral amputations is greater than for partial amputations.

Rather than retracing the full evolution of ULPs, we refer the reader to the reviews of Trent et al (2019) and Ribeiro et al (2019). The work of Trent et al (2019) classifies upper-limb prosthesis architectures by the type of actuation adopted, e.g. passive, body-powered or active. Ribeiro et al (2019), on the other hand, investigate the most relevant control signals used for the man-machine interface.

This work focuses on trans-radial and trans-humeral devices, excluding partial amputations, and it details the latest and most technologically advanced solutions, namely poly-articulated myoelectric prostheses. Moreover, this review aims at presenting and analyzing the key elements of state-of-the-art ULPs in a user-centered and human-in-the-loop fashion, and at providing guidelines for the development of such prostheses and the related control algorithms, with the goal of achieving solutions capable of promoting system use and overcoming the elevated abandonment rates observed so far. Overall, the reader could take advantage of this review as an analytical collection of solutions constituting a premise to provide the user with a seamless control experience.

2. Upper limb prosthetics classification: a twofold perspective

A ULP system can be observed from two main points of view: its mechatronics, namely the combination of the mechanical and electronic components necessary for its operation, and the control strategies and algorithms implemented to orchestrate its functions. Research groups have therefore attempted to solve the prosthesis abandonment problem by addressing different technological and scientific challenges, focusing either on mechatronic design or on control strategies aimed at improving the human-machine interaction and, in some cases, introducing feedback sources, as detailed in the next sections.

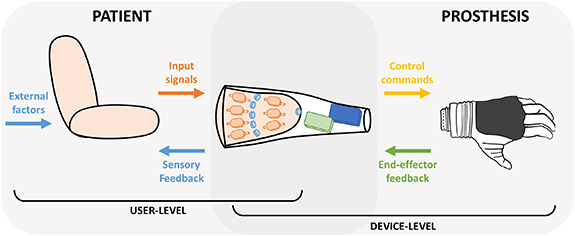

ULP control can be divided into two synergistically interacting sub-systems: the user-level and the device-level, as depicted in figure 1. The user-level includes the patient and the most proximal device component interacting with the user (i.e. the socket), while the device-level extends from the socket to the ULP device. These two sub-systems overlap at the socket level, which is involved in a bidirectional flow of information. On one hand, it receives inputs from the user (i.e. movement intentions) and translates them into movement commands for the device; on the other hand, it receives information (both from the device and the environment) and communicates it to the user through sensory feedback (figure 1). Importantly, the socket itself severely limits user comfort and, together with the weight of the prosthesis, contributes substantially to prosthesis abandonment.

Figure 1. Graphical representation of a ULP system and its elements. The user level (left panel) includes: input data sent from subject to the prosthesis (input signals), artificial sensory feedback information delivered from the prosthesis to the user (sensory feedback), and external sources of interaction (external factors), such as actuation coming from the unimpaired limb or environmental/accidental sources of feedback such as vision and sound. The device-level (right panel) includes the control commands used to drive the prosthesis and the feedback information collected by the end-effector. The user-device interface is characterized by a bidirectional exchange of information (overlap of the two panels).

Even if the state of the art in prosthetic research also encompasses studies based on psychological processes, commercial ULP systems have focused on restoring functional capabilities by capitalizing on the device-level only, that is, on mechatronics, and several solutions can be found on the market for trans-radial amputations. Commercially available systems merge basic functionalities and aesthetic requirements, targeting the clinical needs associated with a given kind of amputation rather than each patient's specific needs.

Commercial solutions range from tri-digital hands, e.g. VaryPlus Speed and SensorHand Speed by Ottobock (Ottobock 2020c) and Motion Control Hand by Fillauer (Fillauer 2021); through under-actuated polyarticulated hands, e.g. Michelangelo by Ottobock (Ottobock 2020b); to fully actuated polyarticulated hands, e.g. BeBionic by Ottobock (Ottobock 2020a), i-Limb by Ossur (Ossur 2020b), Vincent Hand by Vincent Systems (Systems 2020), TASKA hand by Taska Prosthetics (Taska 2022), BrainRobotics Hand by BrainRobotics (Brainrobotics 2022) and Ability Hand by Psyonic (Psyonic 2022).

In the last decades, many research groups have focused on the mechatronic development of ULP devices, entrusting the intelligence of the device to carefully designed embedded mechanics and developing the concept of under-actuation. Examples include the Vanderbilt Multigrasp Hand (Bennett et al 2014), the MIA Hand (Controzzi et al 2016), the SoftHand Pro (Godfrey et al 2018), the KIT Hand (Weiner et al 2018), and the Hannes Hand (Laffranchi et al 2020).

On the other hand, there is a family of very dexterous devices, not yet market-ready, that mimic the complexity of the human hand, implementing a fully-actuated multi-degrees of freedom mechatronics, e.g. the University of Bologna Hand (Meattini et al 2019) or the Shadow Hand (Company 2020).

High-level amputations, such as trans-humeral ones, require prosthetic elbows, such as the Dynamic Arm (Ottobock 2022a), the Dynamic Arm Plus (Ottobock 2022b), and the ErgoArm (Ottobock 2022c) from Ottobock; the Espire Elbow (Classic, Classic Plus, Pro and Hybrid) from Steeper Inc. (Steeper 2022); and the Fillauer Motion E2 Elbow (Fillauer 2022a) and the Utah Arm 3 (Fillauer 2022b) from Fillauer. In the research context, full robotic arms include the DLR hand system (Grebenstein et al 2011), the APL modular prosthetic limb (Johannes et al 2011), the LUKE Arm (Bionics 2022), the Rehabilitation Institute of Chicago arm (Lenzi et al 2016), and the Edinburgh Modular Arm System (Gow et al 2001).

However, this great variety of products has not reduced the elevated abandonment rates, which indicates that patients' needs remain unmet from a mechatronic perspective. In particular, structural and supporting parts lack adjustability to the user's size and allow limited kinematic and motion possibilities, while more advanced systems offer limited operational time (Harte et al 2017). This leads to limited satisfaction and feeling of security. Moreover, these systems generally present poor personal and social acceptance because of limited anthropomorphism, high weight and acoustic disturbances during use (Harte et al 2017). This suggests that ULP development should not focus only on the device level: improvements at the user level could play a key role in truly meeting user requirements and consequently obtaining device acceptance. Motivated by this, in this review we analyze the approaches that could potentially address user needs in terms of device controllability, robustness and hence embodiment and user experience. To this end, it is fundamental to focus not only on the restoration of functionality but also on the recovery of sensory information, which is essential to control the device effectively. The described approaches range from improvements in decoding user intentions, hence analyzing all possible input sources and their related control strategies, to the inclusion of additional sources of feedback capable of restoring sensory information. These approaches tackle the issues related to poor device control caused by the lack of intuitiveness and sensory feedback.

Therefore, in this review we present current and emerging methods in ULP development, detailing various sources of input and feedback signals, as well as control strategies. We also highlight current challenges and open issues in the field, specifically focusing on the importance of user experience and involvement in the design and development process. This is fundamental to promote patient-tailored approaches leading to the development of truly personalized devices, which are currently lacking. We finally provide an overview of the most promising approaches that, if followed, may one day provide upper limb amputees with a true substitute for their missing arm.

3. Input and feedback signals for prosthetic control

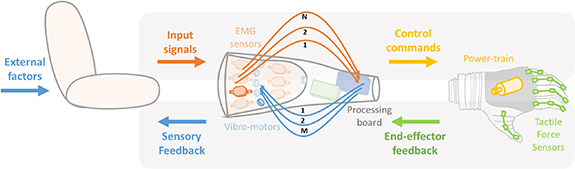

Prosthetic control is regulated by a flow of signals, as depicted in figure 2. Input signals run from the user to the device; they are often of a biological or electrophysiological nature, in which case they are called biosignals. Signals flowing in the opposite direction convey information from the device to the user and are therefore defined as sensory feedback signals. Moreover, some external factors provide the user with additional sources of feedback (i.e. incidental feedback), such as visual or auditory information that can be used to estimate the prosthesis state (Wilke et al 2019, Sensinger and Dosen 2020, Gonzalez et al 2021).

Figure 2. Graphical representation of information flow of a possible ULP architecture. Input flow (top panel): from user (input signals, e.g. from EMG sensors) to prosthesis (control commands, e.g. through power train). Feedback flow (bottom panel): from prosthesis (end-effector feedback, e.g. from tactile force sensors) to user (sensory feedback, e.g. through vibrotactile motors).

Input signals include all the sources of information that can be taken from the amputee and translated into motor commands for driving the prosthesis (e.g. electromyography, EMG), see section 3.1. Sensory feedback information, instead, encompasses different prosthetic sensing solutions, acquired either from the prosthetic device or from the environment (see section 3.2), that can be translated into sensory stimuli for the amputee (e.g. vibrotactile stimulation, see section 3.3). All types of signals can be classified according to their level of invasiveness, with consequent advantages and drawbacks.
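As a purely illustrative aid, the two flows of figure 2 can be sketched as one control cycle in Python. This is a minimal sketch and not an implementation taken from any cited system: the function names, the threshold rule, the force values and the normalization are all assumptions introduced here.

```python
def decode_intent(emg_level, threshold=0.3):
    """Input flow: user signal -> control command.
    The fixed threshold is a hypothetical decoding rule."""
    return "close" if emg_level > threshold else "hold"


def read_grip_force(command, object_present):
    """Stand-in for an end-effector tactile force sensor (arbitrary units).
    A real device would read hardware here; this value is made up."""
    return 1.0 if command == "close" and object_present else 0.0


def encode_feedback(force, max_force=2.0):
    """Feedback flow: sensed force -> vibrotactile intensity clipped to [0, 1]."""
    return min(max(force / max_force, 0.0), 1.0)


def control_cycle(emg_level, object_present):
    """Run one pass of the loop: decode, actuate/sense, feed back."""
    command = decode_intent(emg_level)
    force = read_grip_force(command, object_present)
    return command, encode_feedback(force)


strong_contraction = control_cycle(0.8, object_present=True)
weak_contraction = control_cycle(0.1, object_present=True)
```

In this toy setting a strong contraction on a grasped object produces a `close` command together with a non-zero vibration intensity, whereas a weak contraction produces neither. Incidental feedback such as vision or sound is deliberately left outside the loop, since it does not pass through the device.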

3.1. Input signals

In recent years, many research activities have focused on the extraction of useful information from the biological signals in order to suitably control ULPs. Traditionally, the surface EMG (sEMG) is the most widespread signal for prosthesis control but its use still faces many drawbacks (Kyranou et al 2018). In the following, we describe various methods to employ EMG as input signal for ULP control and we also explain how other input sources can be exploited to obtain more dexterous prosthetic behavior, overcoming the limitations of current ULP systems.

Figure 3 collects input signals for ULP control that will be described in the following subsections, ranging from those used by commercial systems, up to those currently under investigation.

Figure 3. Input sources for ULP.

3.1.1. Biosignals

The term biosignal indicates every possible signal that can be detected and measured from biological beings, humans in our case. Usually, the term is used for signals of electric nature (e.g. EMG), but actually every signal collected from the activity of different tissues or organs of the human body can be considered a biosignal.

We here adopt this latter definition to group input sources that are described next. Given its large use both in research and commercial ULP devices, EMG deserves a dedicated subsection, while other biosignals are grouped together. We also dedicate a whole subsection to brain-derived signals, which are especially used in brain-machine and brain-computer interfaces (BMIs, BCIs), but that are also showing potential use for ULP applications. Table 1 summarizes biosignals for ULP control that will be described in the following subsections.

Table 1. Biosignals used as input sources in prosthetic applications.

| Biosignal | Measured property | Sensors' placement | PROs | CONs | Sensor fusion | Examples |
|---|---|---|---|---|---|---|
| Electromyography (EMG): surface EMG | Muscle electric potentials | On the skin over targeted muscles; 2–32, up to 192 sensors | Non-invasive, long-term use, a large number of people | Sweating, electrode shift, muscle fatigue, electromagnetic noise | NIRS, IMU, FMG, SMG, MMG | (Merletti et al 2010) up to 27 gestures |
| Electromyography (EMG): invasive EMG | Muscle electric potentials | Underneath the skin, on or inside targeted muscles; 4–8 sensors | High signal/noise ratio, directly on the nerve, no shift with respect to the source | Invasive, infections | | (Cipriani et al 2014, Ortiz-Catalan et al 2020) |
| Force-myography (FMG) | Change of muscle morphology measured on the skin surface | Over targeted muscle, over related tendons; 8, up to 126 sensors | Physiologic, small size, high signal/noise ratio, flexible | Muscle fatigue, sensor shift, pre-load force, small spatial resolution, crosstalk | EMG | (Xiao and Menon 2019) up to 8 gestures |
| Mechano-myography (MMG) | Muscle fiber oscillations using microphones or accelerometers | Over targeted muscle; 6–20 sensors | Low cost, no pre-amplification, no precise positioning, no skin impedance or sweat influence | Ambient acoustic noise, adjacent muscle crosstalk, sensor displacement | EMG, IMU | (Wilson and Vaidyanathan 2017, Guo et al 2017a, Castillo et al 2020) up to 5 gestures |
| Sono-myography (SMG) | Change of muscle morphology | Over targeted muscle, over related tendons; transducers of different shapes | Deep and superficial muscles, some models are cheap and energy-efficient | Probe shift, tissue impedance, no wireless, some models expensive and bulky | EMG | (Dhawan et al 2019) up to 15 gestures |
| Near-Infrared Spectroscopy (NIRS) | Tissue oxygenation through the amount of scattered light | Over targeted muscle; 2–4 sensors | Deep and superficial muscles, high spatial resolution, no electronic interference | Ambient light, muscle fatigue, tissue heating | EMG, IMU | (Paleari et al 2017) up to 9 gestures |
| Electrical Impedance Tomography (EIT) | Tissue impedance | Over targeted muscle, over related tendons; 8, up to 64 sensors | No need for precise positioning | Low time resolution, sweating, electromagnetic noise, high consumption | — | (Zhang et al 2016, Wu et al 2018) up to 8 gestures |
| Capacitance sensing | Tissue capacitance | Over targeted muscle, over related tendons; 3 receiver sensors | Non-invasive, low cost, deep and superficial muscles | Sweating, electromagnetic noise, displacement, ambient temperature | — | (Cheng et al 2013, Truong et al 2018) up to 2 gestures |
| Magneto-myography | Magnetic fields generated by muscle | Over/inside targeted muscle; 7 sensors | Not sensitive to sensor shift and sweat | Magnetic interference, can be invasive, movement artifacts | — | (Zuo et al 2020) concept |
| Peripheral neural interfaces (PNIs) | Electrical activity of the nerves | Microelectrode arrays placed on different fascicles within the median and ulnar nerves | Intuitive, direct maps of complex movements, high accuracy, robust | Invasive, difficult to separate EMG and PNI components, recording channels very close to each other | — | (Nguyen et al 2020) up to 15 DoFs |
| Intracortical neural signals | Intracortical neural signals from the brain, action potentials of individual neurons | 16–192 high-density channel electrodes inserted into the motor cortex tissue | Accurate and capable of collecting the most information-rich data, high spatial resolution | Very invasive, influenced by tissue reactions | — | (Hochberg et al 2006, Hochberg et al 2012, Collinger et al 2013, Wodlinger et al 2014) 7–10 DoFs |
| Electrocorticography (ECoG) | Electrical activity of the brain's surface | 32–128 high-density channels on sensorimotor regions | Less attenuated than EEG, good spatial resolution and wide frequency content | Surgical procedure and inability to measure single-cell activity | — | (Wang et al 2013, Fifer et al 2013, Bleichner et al 2016, Hotson et al 2016) 4 gesture recognition and wrist movements |
| Electroencephalography (EEG) | Electrical activity of the brain | 6–32 channel headsets | Non-invasive, low cost, portable, stable, and very easy to use | Signal attenuated by the dura, the skull, and the scalp, loss of important information | — | (Mcfarland et al 2010, Yang et al 2012, Edelman et al 2019, Fuentes-Gonzalez et al 2021) single Degree of Freedom (DoF) |
| Functional near-infrared spectroscopy (fNIRS) | Activity-related brain oxygenation: a near-infrared LED and a photodetector measure the amount of IR light absorbed by the hemoglobin in the brain | 10–200 channels of optodes | Non-invasive, simultaneous detection of information under the skin, low cost | Few centimeters of penetration into cortical tissue, limited accuracy, and cumbersome system | — | (Syed et al 2020) 3 DoFs, trans-humeral amputees |

3.1.1.1. EMG

While cosmetics, electronic components and computational efforts have undergone a significant improvement, the control strategies currently used in prosthetic applications have not changed since their first appearance in the 1960s (Schmidl 1965). The EMG has been one of the major sources to control ULPs (Merletti and Farina 2016). These signals carry information about neuromuscular activity, and they are used to retrieve human intention. EMG is indeed a technique for studying the activation of the skeletal muscles through the recording of electrical potentials produced by muscle contraction (Hudgins et al 1993). The theory behind the sEMG electrodes is that they form a chemical equilibrium between the detecting surface of the electrode and the skin of the body through electrolytic conduction, so that the current can flow into the electrode.

Multiple methods have been used to obtain the intended gesture from the processed EMG signals, all of which exploit the fact that amputees can still generate different and repeatable muscular patterns related to each forearm movement with the residual muscles of the stump. Low-density EMG is commonly used in prosthetic applications, both in research and in commercial contexts. Notably, EMG signals can also be collected with invasive methods, while sEMG can be further classified according to the resolution and density of the sensors. In the following, we provide an overview of the different types of EMG-based biosignals.

3.1.1.1.1. sEMG

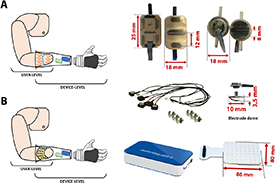

The sEMG can be classified according to the number of electrodes used (figure 4). Low-density EMG generally refers to the use of a small number (<10) of bipolar EMG sensors, which can be either wet, i.e. containing an electrolytic substance that serves as an interface between skin and electrodes, or dry (Jamal 2012). Conversely, high-density EMG is typically composed of wet monopolar sensors spread on a planar patch, around 1 cm apart, with the ground reference generally placed on the wrist or on the elbow (Drost et al 2006). Importantly, sEMG electrodes also differ in their electronic configuration, as they can be either preamplified or not (Zheng et al 2021). Merletti and Muceli (2019) provided a guide to best practices for acquiring and manipulating EMG data according to the different aims, from signal analysis to motion prediction.

Figure 4. sEMG electrodes. (A) Bipolar dry sensors, Ottobock and IIT/INAIL (Marinelli et al 2021) respectively. (B) High-density wet sensors (OT Bioelettronica).

Prosthetic control with low-density EMG is generally obtained by using two bipolar electrodes placed on antagonist muscles. This configuration allows the control of the prosthetic system in a robust and simple way (Hudgins et al 1993). However, the detection of complex and simultaneous movements of the phantom limb can be improved by using an array of EMG electrodes placed on the skin of the residual forearm (Coapt 2017, Dellacasa Bellingegni et al 2017, Ottobock 2019, Marinelli et al 2020). sEMG has become the most widespread source of information about voluntary movement in prosthetic applications (Schmidl 1965) because of the direct correlation between EMG activity and the subject's intentions.
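To make the two-electrode scheme concrete, the following Python sketch shows one possible form of such a controller: each channel is rectified and smoothed into an envelope, and the stronger of the two antagonist channels drives the hand once it exceeds a threshold. The window length, the threshold, the "stronger channel wins" rule and the sample values are assumptions of this sketch and are not taken from the cited works or from any commercial device.

```python
from collections import deque


def envelope(samples, window=5):
    """Rectify a raw EMG trace and smooth it with a causal moving average."""
    buf = deque(maxlen=window)
    out = []
    for s in samples:
        buf.append(abs(s))  # full-wave rectification
        out.append(sum(buf) / len(buf))
    return out


def two_site_command(flexor_env, extensor_env, threshold=0.2):
    """Map two envelope values to a hand command.
    Hypothetical rule: stay at rest below the threshold,
    otherwise follow the more active of the two muscles."""
    if max(flexor_env, extensor_env) < threshold:
        return "rest"
    return "close" if flexor_env >= extensor_env else "open"


# Made-up traces: the flexor site contracts, the extensor site stays quiet.
flexor = [0.0, 0.5, -0.6, 0.7, -0.5, 0.6]
extensor = [0.0, 0.05, -0.04, 0.06, -0.05, 0.03]
commands = [
    two_site_command(f, e)
    for f, e in zip(envelope(flexor), envelope(extensor))
]
```

With these traces the controller stays at rest for the first sample and then issues `close` for the remaining ones. The sketch also makes the limitation of the approach visible: two channels yield only a handful of distinguishable commands, which is why electrode arrays are used when more complex or simultaneous movements have to be decoded.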

Unlike low-density sEMG, high-density sEMG (HD-sEMG) is based on a higher number of electrodes placed on a small portion of the body. Recently, a growing number of researchers have focused on these electrodes with the aim of increasing the amount of collected data, although at the cost of a greater computational burden. HD-sEMG sensors have been used to discriminate muscular patterns related to different gestures. Their signals can be handled in various ways to retrieve unique and repeatable information, as described in section 4. These sensors have to be positioned according to the distribution of the underlying muscle fibers, and this configuration provides a low resolution map of the synergistic activation of the muscles during movement production (Winters 1990, Sartori et al 2018). For example, from the contraction of the muscles under the acquisition grids, it is possible to extract two-dimensional images in which the EMG amplitude is mapped to a color scale. These maps can then be processed by complex algorithms, such as those used for object detection in robotic navigation (Chen et al 2020). The main limitation of HD-sEMG, which currently confines its application to laboratory scenarios, is the skin-electrode contact, since it requires conductive gel to reduce the interface impedance. The wet contact is mainly needed to reduce artifacts in the EMG signals, since they are generally acquired in monopolar configuration. Another disadvantage of this technique is that the computation is time-consuming.
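The amplitude maps mentioned above can be illustrated with a short Python sketch. It assumes that amplitude is summarized as the root mean square (RMS) of each channel over a time window and that the map is normalized to its peak before being rendered on a color scale; both choices, as well as the grid layout and sample values, are assumptions made here for illustration.

```python
import math


def rms(window):
    """Root mean square amplitude of one channel over a time window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def amplitude_map(grid_windows):
    """Turn a grid of per-electrode sample windows into a 2D amplitude image.
    grid_windows[r][c] holds the samples of the electrode at row r, column c.
    Values are scaled to [0, 1] so they can be mapped to a color scale."""
    raw = [[rms(w) for w in row] for row in grid_windows]
    peak = max(max(row) for row in raw)
    if peak == 0:
        return raw  # silent grid: nothing to normalize
    return [[v / peak for v in row] for row in raw]


# Made-up 2 x 2 grid; real HD-sEMG grids contain many more electrodes.
grid = [
    [[1.0, -1.0, 1.0, -1.0], [0.5, -0.5, 0.5, -0.5]],
    [[0.0, 0.0, 0.0, 0.0], [2.0, -2.0, 2.0, -2.0]],
]
image = amplitude_map(grid)
```

Each entry of `image` is one pixel of the map, so a sequence of such images over consecutive windows is what an image-based algorithm would receive as input. The per-window, per-channel computation also hints at why the computational load grows quickly with the number of electrodes.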

Overall, the main drawback of sEMG-based approaches is the influence that skin impedance, sweat, and electrode shift have on the stability of the input signals (De Luca 1997). Additionally, muscle crosstalk and the difficulty of reaching deep muscles further limit the quality of the collected signal. In the context of ULP, the use of sEMG can be further complicated by the fact that the amputation strongly affects muscle strength and organization, and therefore signal quality, as discussed in section 6.4.

3.1.1.1.2. Invasive EMG and surgical procedures

The invasive approach has been used for many years to explore the activity related to the production of movement (Adrian and Bronk 1929) and it is still investigated by many groups. However, the main drawbacks of this approach are the surgery it requires and the technological barriers still faced by the available equipment. On the other hand, invasive electromyography (iEMG) allows the measurement of single motor unit action potentials, enabling a higher selectivity and a better accuracy of the input signal and overcoming the limitations imposed by sEMG. There are several examples of iEMG, which vary in the type of electrodes and level of invasiveness, as detailed hereafter.

EMG can be invasively detected by inserting electrodes inside the muscles (Merletti and Farina 2009). This invasive technique exploits two types of percutaneous electrodes: needles and fine wires (Jamal 2012, Rubin 2019). Needle electrodes are the most used. They are concentric: the bare hollow needle contains an insulated fine wire in its cannula, and the wire is exposed at the beveled tip, which is the active recording site. Wire electrodes are typically made of non-oxidizing, stiff, insulated materials; they can be implanted more easily and are usually less painful than needle electrodes.

Since both these sensors are percutaneous, i.e. they pass through the skin and leave an open passage between the internal structures of the body and the external world, the risk of infection is high. For this reason, and because of the intrinsic discomfort caused by the percutaneous wire, which can easily break, their usage is limited to laboratory research (Hargrove et al 2007, Cloutier and Yang 2013a). A detailed description of invasive electrodes, both to record biological signals and to deliver electrical stimulation, can be found in Raspopovic et al (2021a).

In the last decades, growing attention has been paid to the development of intramuscular electrodes that could be implanted under the skin of the subject to achieve the advantages of invasive sensors while avoiding the risks and inconvenience of percutaneous instruments. For example, Weir et al (2008) developed the implantable myoelectric sensor (IMES), a system able to receive and process signals from up to 32 implanted sensors via wireless telemetry. A transcutaneous magnetic link between the implanted electrodes and the external coil allows reverse telemetry, which transfers data from the sensors to the controller that commands the prosthesis, and forward telemetry, which supplies power and configuration settings to the electrodes. These sensors are designed for permanent long-term implantation without any kind of servicing requirement and have been tested on animals. Four months after the implantation of IMESs in the legs of three cats, the sensors were still functioning (Weir et al 2008). Intramuscular electrodes have been used in prosthetic applications to decode 12 different hand gestures from 4 healthy subjects (Cipriani et al 2014). Moreover, it has been shown that this invasive approach enhances the simultaneous control of multi-DoF systems (Smith et al 2014).

Recently, the group of Ortiz-Catalan showed an invasive procedure for ULP control. They positioned EMG electrodes under the skin of amputated subjects and sutured them directly on the external surface of the muscles (Ortiz-Catalan et al 2020). More precisely, sensors were sewn onto the epimysium of the two heads of the biceps muscle and the long and lateral heads of the triceps muscle. These invasive electrodes were used in combination with an osseointegrated prosthesis, i.e. a system obtained following a very invasive surgical procedure that anchors the prosthesis to the bone of the remaining limb (Ortiz-Catalan et al 2020). In the context of ULP, osseointegration is offered for trans-humeral amputees, and the prosthesis is anchored to the humerus with two mechanical elements: the fixture, a titanium screw placed inside a hole made in the bone that becomes osseointegrated, and the abutment, placed within the fixture and extending percutaneously outside of the body, to which the prosthesis is connected. This technique was tested on four osseointegrated patients.

This latter example indicates that surgical approaches can also be taken to improve the quality of the collected EMG. A promising surgical technique that is performed in cases of high-level amputation is targeted muscle reinnervation (TMR). This method was developed by the group of Kuiken in the early 2000s and consists in transferring residual arm nerves to alternative muscle sites. Following reinnervation, these target muscles are able to produce EMG that can be collected and used to control prosthetic arms (Kuiken et al 2009). This strategy works on the condition that each reinnervated muscle produces an EMG signal in response to only one transferred nerve, with the consequence that the native nerves innervating the target muscle have to be cut during the surgical procedure to avoid unwanted EMG signals (Kuiken et al 2017). In the last 15 years, TMR has allowed intuitive control of ULP to several subjects with high-level amputation for whom standard ULP devices allowed a poor restoration of motor functions (Kuiken et al 2017). Importantly, given that it is performed on complex amputations, this technique is strongly tailored to each patient's physical and clinical status (Cheesborough et al 2015, Mereu et al 2021).

Recently, a new surgical method for improving EMG-based control has emerged: the regenerative peripheral nerve interface (RPNI) (Vu et al 2020a). Just as with TMR, its goal is to turn a muscle into a biological amplifier of the motor command, in order to improve the quality of the EMG signal recorded, processed and used to drive the prosthesis. To this end, the RPNI exploits the regeneration capabilities of nerves and muscles by implanting a transected nerve into a free muscle graft. Following regeneration, revascularization and reinnervation by the transected nerve, the muscle graft effectively becomes a stable peripheral nerve bioamplifier, able to produce high-amplitude EMG signals (Urbanchek et al 2012). The potential of this novel interface has been tested by Vu et al (2020b): they used EMG signals collected by intramuscular bipolar electrodes implanted into RPNIs obtained in amputated individuals, who could successfully perform real-time control of an artificial hand. Remarkably, subjects were able to control the device with a high level of accuracy even 300 days post-implantation, without recalibration of the control algorithm.

Another surgical technique, not directly related to EMG signals but worth mentioning, is cineplasty, an old method revived in recent years with a more modern approach. This method was first introduced by Vanghetti in 1899 and then replicated by Sauerbruch ten years later (Tropea et al 2017). It consisted of the direct mechanical linking of residual muscles and/or residual tendons of the affected limb to the prosthesis through external cables (i.e. Bowden cables). Heckathorne and Childress (2001) implemented an evolution of this surgical solution for the control of a one-DoF ULP by exploiting exteriorized tendons directly linked to a force sensor.

3.1.1.2. Other biosignals

The limitations imposed by the use of EMG (either invasive or non-invasive) have led researchers to study new approaches, aiming at increasing the robustness and accuracy of the algorithms. Some may soon be used in commercial prosthetic systems, while others represent promising research scenarios that are still far from real-life applications. We here describe some of these peripheral signals, both non-invasive and invasive.

For example, forcemyography (FMG) has been widely investigated in the past 20 years (Xiao and Menon 2019) (table 1). This approach is based on force sensors able to record muscle stiffness around the forearm during different movements. The muscle deformation of the stump can be measured with various types of sensors, such as: force sensing resistors (Prakash et al 2020), optical fiber transducers (Fujiwara et al 2018), capacitance-based deformation sensors (Truong et al 2018), Hall-effect based deformation sensors (Kenney et al 1999), barometric sensors (Shull et al 2019), thin arrays of adhesive stretchable deformation sensors (Jiang et al 2019), or high density myo-pneumatic sensors for topographic maps of pressures and residual kinetic images of the stump (Phillips and Craelius 2005, Radmand et al 2016). The accuracy of the sensors may limit the robustness of FMG-based control. Therefore, FMG is often fused with other input sources, such as inertial measurement units (IMUs) (Ferigo et al 2017) or EMG (Nowak et al 2020). FMG is indeed complementary to EMG due to its capability to capture information about extrinsic hand muscles that lie in several layers underneath the skin and are therefore difficult to detect with EMG sensors. Moreover, unlike EMG-based control strategies, FMG is not influenced by electrode shifting.

Another technique is mechanomyography (MMG), which measures the lateral oscillations, detected as low-frequency vibrations (in the range of 1–100 Hz), generated by deformation in the muscle fibers actively involved in the contraction (table 1). This approach can be considered the mechanical counterpart of EMG and it is also known as acousticmyography, phonomyography or vibromyography, depending on the type of sensor used. It can be based on different types of sensors, such as: low mass accelerometers (Farina et al 2008, Youn and Kim 2010), microphones (Castillo et al 2020, Meagher et al 2020), piezoelectric contact sensors (Orizio et al 2008, Tanaka et al 2011), force sensing resistors (Esposito et al 2018), and laser distance sensors (Scalise et al 2013). With respect to EMG, this technique shows some advantages: it is low cost, it does not require pre-amplification or precise positioning, and signals are not influenced by skin impedance or sweat. However, it is very susceptible to environmental noise and motion. Artifact removal can be implemented with the integration of an IMU, as proposed by Wilson and Vaidyanathan (2017) and Woodward et al (2017). MMG has also been used in combination with EMG signals (Guo et al 2017a), achieving better control performance and robustness.
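As a rough illustration of how the 1–100 Hz MMG band mentioned above might be isolated from a raw sensor trace, the following minimal sketch cascades a one-pole high-pass and a one-pole low-pass filter. The function names, the filter order and the 1 kHz sampling rate used in the usage note are illustrative assumptions and do not come from the cited studies, which typically rely on higher-order filters.

```python
import math

def band_pass(samples, fs, low_hz=1.0, high_hz=100.0):
    """Cascade a one-pole high-pass (low_hz) and a one-pole low-pass (high_hz).

    Hypothetical helper: the 1-100 Hz band comes from the text, the
    first-order filter design is an assumption made for brevity.
    """
    dt = 1.0 / fs
    rc_hp = 1.0 / (2.0 * math.pi * low_hz)
    rc_lp = 1.0 / (2.0 * math.pi * high_hz)
    a_hp = rc_hp / (rc_hp + dt)  # high-pass coefficient
    a_lp = dt / (rc_lp + dt)     # low-pass coefficient

    out = []
    prev_x = samples[0] if samples else 0.0
    hp = 0.0
    lp = 0.0
    for x in samples:
        hp = a_hp * (hp + x - prev_x)  # removes DC offset and slow drift
        prev_x = x
        lp = lp + a_lp * (hp - lp)     # attenuates content above high_hz
        out.append(lp)
    return out

def rms(values):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))

def sine(freq_hz, fs, seconds):
    """Unit-amplitude test tone used only to exercise the filter."""
    n = int(fs * seconds)
    return [math.sin(2.0 * math.pi * freq_hz * i / fs) for i in range(n)]
```

With an assumed sampling rate of 1 kHz, a 20 Hz tone passes almost unchanged while a 300 Hz tone is clearly attenuated, and a constant offset is removed entirely. A practical system would still need the IMU-based motion artifact handling described above.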

Sonomyography (SMG) measures muscle volume changes and thickness using reflected ultrasound waves (table 1). Wave amplitude depends on the acoustic impedance of the tissue, and it can be detected using ultrasound transducers. Currently, no portable prosthetic systems based on SMG have been developed, but the results obtained using this technique are very promising. For example, Dhawan et al (2019) were able to detect 11 different movements in real time by placing the sensor on the stump of a trans-radial amputee, obtaining better results than with EMG signals alone. This non-invasive approach allows faster user training and the detection of both superficial and deep muscles, but even a small shift of the sensor can change the cross-section view and lead to the failure of the control algorithm. SMG signals have been used in combination with EMG signals, leading to improved performance with respect to EMG alone (Xia et al 2019, Engdahl et al 2020a).

Near-infrared spectroscopy (NIRS) is a non-invasive technique measuring the level of oxygenation of active muscles under contraction (table 1). The detection unit consists of a near-infrared LED emitter and a photodetector, placed on the skin surface. The emitted infrared (IR) light is partly absorbed by the tissue, mostly by hemoglobin, and partly scattered back to the skin surface and detected by the photodetector. NIRS thus detects changes in the amount of IR light scattered back due to muscle contraction (Schneider et al 2003). This technique has a high spatial resolution and is immune to electronic interference. However, tissue heating may take place after prolonged use. Recently, Paleari et al (2017) developed a wireless NIRS unit for hand gesture recognition, indicating the potential of this technique for ULP control. NIRS has indeed been used in this context in conjunction with EMG (Guo et al 2017b) and IMU (Zhao et al 2019).

Electrical impedance tomography (EIT) measures the internal electrical impedance of the tissues in the cross-section plane covered by a set of surface electrodes (table 1), whose number may range from 8 to 64 (Padilha Leitzke and Zangl 2020). The measurement is executed by injecting a sinusoidal electrical current (amplitudes ranging from 10 µA to 10 mA and frequencies from 10 kHz to 1 MHz (Grushko et al 2020)) and by recording the voltages collected by the surface electrodes. The detected changes in phase and amplitude represent the changes in the distribution of internal conductivity within the affected area, identifying patterns of movement. Wearable systems for ULP control have been developed, such as the ones proposed by Zhang et al (2016), capable of recognizing hand gestures, and by Wu et al (2018), who also tested an EIT-based hand prosthesis control system on healthy people, achieving an accuracy of 98.5% with a grouping of three gestures and an accuracy of 94.4% with two sets of five gestures. This non-invasive method does not require precise positioning of the electrodes; it only needs the changes in impedance to be large enough. On the other hand, the currently available systems have slow measurement and long processing times, leading to high power consumption. Moreover, the technique is affected by the issues typical of surface electrodes, namely skin contact conditions, electromagnetic interference, etc.

Capacitance sensing measures capacitance variations between two or more conductors (table 1). A capacitance exists when the two conductors are separated by a given distance d. In the ULP context, electrodes may be placed on the prosthetic fingers, which work as capacitor plates. When a user performs a gesture, the skin deformation causes a change in the distance d between the conductors. This technique was used for hand gesture prediction by Cheng et al (2013) and Truong et al (2018), using wearable systems. It is low cost, non-invasive, and capable of detecting deep and complex signals, but it shares the standard disadvantages affecting surface electrodes, and it is susceptible to ambient temperature changes.
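The dependence of the measured capacitance on the distance d can be made concrete with the ideal parallel-plate relation C = ε0·εr·A/d. The sketch below is a simplification under stated assumptions: real wearable electrodes are not ideal parallel plates, and the plate area and distances used in the usage note are hypothetical values chosen only for illustration.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity in F/m

def plate_capacitance(area_m2, distance_m, relative_permittivity=1.0):
    """Ideal parallel-plate capacitance C = eps0 * eps_r * A / d (in farads)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return EPSILON_0 * relative_permittivity * area_m2 / distance_m

def relative_change(area_m2, d_rest_m, d_now_m, relative_permittivity=1.0):
    """Fractional capacitance change when the gap moves from d_rest to d_now."""
    c_rest = plate_capacitance(area_m2, d_rest_m, relative_permittivity)
    c_now = plate_capacitance(area_m2, d_now_m, relative_permittivity)
    return (c_now - c_rest) / c_rest
```

For an assumed 1 cm² electrode, halving the gap from 2 mm to 1 mm doubles the capacitance, so `relative_change(1e-4, 2e-3, 1e-3)` is 1.0 within rounding. Because C scales with 1/d, small deformations at small gaps produce the largest signal, which is consistent with the sensitivity to skin deformation described above.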

Magnetomyography is a promising approach aimed at measuring the magnetic fields produced by electrical currents propagating through muscles during contraction (table 1). This technique foresees the placement of magnetometers on the muscle, either non-invasively or beneath the skin, following a surgical procedure. The magnetometers convert the magnetic fields into measurable quantities, such as currents or voltages that can be used for the control of the prosthesis. Small implantable magnetometers have been proposed in Zuo et al (2020), but they still need to be clinically tested. This technique is less sensitive to sensors' shift or sweat but may be strongly influenced by the environmental magnetic noise and the magnetic field of the Earth.

Peripheral neural interfaces measure the electrical activity of peripheral motor nerves with an invasive approach (table 1). There are three types of electrodes: extraneural, like CUFF or flat interface nerve electrodes (FINEs), which embrace the nerve; intraneural, which run longitudinally (longitudinal intrafascicular electrodes (LIFEs)) or transversally (transverse intrafascicular multichannel electrodes (TIMEs) or Utah slanted electrode array (USEA)) through the nerve; and regenerative, such as SIEVE or Microchannel, attached between the two extremities of a severed nerve (del Valle and Navarro 2013, Raspopovic et al 2021b). Nguyen et al (2020) enabled an amputee to control a 15-DoF prosthesis by using 4 implanted LIFE arrays, 2 in the median nerve and 2 in the ulnar one. The negative aspects of this method reside in its profound invasiveness and in the acquisition of noisy signals.

3.1.1.3. Brain signals

The first neuroprosthetic application on humans was reported by the group of Donoghue, who demonstrated that tetraplegic individuals implanted with arrays of microelectrodes over the motor cortex were able to remotely control the movement of a cursor on a screen (Hochberg et al 2006). This clinical trial was soon followed by another from the same group reporting the control of reaching and grasping actions of a robotic arm (Hochberg et al 2012). The group of Schwartz also showed similar results of an individual with tetraplegia successfully controlling a seven DoF robotic arm (Collinger et al 2013). In all these examples, intracortical brain signals were used, i.e. action potentials of individual neurons were detected with an array of electrodes inserted into the brain, usually in the motor cortex (table 1).

Less invasive measurements of cortical currents using electrocorticography (ECoG) have been widely used for neuroprosthetic control in the lab. ECoG detects the electrical activity of the brain with strips of electrodes laid on the brain's surface, usually in the motor cortex area. ECoG signals have been used for hand gesture recognition (Bleichner et al 2016), for the control of a virtual prosthesis (Wang et al 2013) and of a robotic limb (Fifer et al 2013), and also with a detached prosthesis with active digits (Hotson et al 2016).

ECoG provides an ideal trade-off between the invasiveness of intracortical recordings and the poor spatial resolution of electroencephalography (EEG) (Thakor et al 2014). However, whether non-invasively collected signals convey enough motor information to control a neuroprosthetic hand is still debated (Fukuma et al 2016).

EEG measures the electrical activity of the brain with an external helmet made of electrodes (table 1). In a ULP application, a motor imagery task is typically used, and the subject only needs to think about the movement. EEG signals corresponding to the intention of the movement are therefore used to drive the end-effector. Recently, McDermott et al (2021) were able to extract relevant brain states from EEG recordings in real time and indicated such states as prospective therapeutic targets for motor neurorehabilitation. Similarly, the group of Wolpaw showed that paralyzed patients could use EEG signals to control a cursor in three-dimensional space (McFarland et al 2010), suggesting that non-invasive EEG-based BCIs can be exploited for control of robotic devices or neuroprostheses. EEG-based neuroimaging is indeed emerging as a useful tool for robotic device control, as demonstrated by Edelman et al (2019).

Another promising technique is functional near-infrared spectroscopy (fNIRS), which detects activity-related brain oxygenation. The instrumentation is the same as that used for NIRS, i.e. a near-infrared LED and a photodetector are used to measure the amount of back-scattered light and, therefore, the amount of IR light absorbed by the hemoglobin in the brain, which increases during brain activity. Syed et al (2020) used these hemodynamic brain responses to control a three-DoF ULP for trans-humeral amputees, correctly classifying eight out of ten movements in real time.

These examples demonstrate that groundwork for brain control of motor prosthetics has been laid. However, it has been limited to the lab and mostly addresses paralyzed patients. Nevertheless, there is a growing interest in brain-derived measures for prosthetic applications and different recording techniques have been investigated for ULP control.

3.1.2. Other techniques under investigation

Besides the detection of physiological changes in the residual muscles of the stump or in the brain during movements, described above, there are many other input sources and techniques that are capable, or potentially capable, of controlling an upper limb prosthesis. Some of these are mainly used in research: since they lack usability, they do not find a real application in the everyday life of amputees, or they are conceived for patients who cannot exploit other more convenient and intuitive sources (e.g. tetraplegic people). Others have so far only been proposed as proofs of concept.

The most studied approach is based on the use of IMUs. IMU sensors are cheap and small, and can therefore be easily embedded in the prosthesis. They can increase the amount of data useful to successfully discriminate between different gestures of the ULP during distinct phases of the reaching movement. These devices exploit accelerometers, gyroscopes and magnetometers to estimate the current altitude, position and orientation of the prosthesis. These sensors deliver information through quaternions and they are often used together with EMG to improve the classifier robustness (Georgi et al 2015). Zhang et al (2011) showed the possibility of manipulating objects and performing complex tasks using both IMU and EMG sensors. As a matter of fact, the accelerometers can capture information that sEMG sensors cannot easily detect, such as hand withdrawal or rotation (Chen et al 2007). It has been shown that coupling an IMU sensor with EMG is more advantageous than increasing the number of EMG sensors (Fougner et al 2011). Similar results have been achieved by Krasoulis et al (2017), who combined EMG and IMU to feed pattern recognition systems (see section 4.3.1). They demonstrated that this combination could significantly improve the real-time completion rates compared to traditional methods based exclusively on sEMG signals. Moreover, the data coming from IMUs can be used alone to control a single module, usually the wrist or the elbow (Merad et al 2018), or to realize other types of control for rehabilitation purposes, such as shadow control, in which the control policy consists in replicating the movement captured by the IMU sensors (Rapetti et al 2020). These devices have also been placed on the feet to directly control a ULP through precise foot movements (Resnik et al 2014). The adoption of IMU sensors is especially promising in sensor fusion approaches, as discussed in section 3.1.3. Besides EMG signals, IMU data have also been combined with NIRS (Zhao et al 2019) and MMG (Wilson and Vaidyanathan 2017, Woodward et al 2017).
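Since IMUs commonly report orientation as quaternions, the following minimal sketch shows how a unit quaternion can be used to rotate a vector, for example to express a sensor-frame axis in a reference frame. It assumes the Hamilton convention with components ordered (w, x, y, z); actual IMU firmware may use a different ordering or convention, and the function names are hypothetical.

```python
import math

def quat_from_axis_angle(axis, angle_rad):
    """Unit quaternion (w, x, y, z) for a rotation of angle_rad about axis."""
    norm = math.sqrt(sum(a * a for a in axis))
    s = math.sin(angle_rad / 2.0) / norm
    return (math.cos(angle_rad / 2.0), axis[0] * s, axis[1] * s, axis[2] * s)

def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def rotate(q, v):
    """Rotate 3-vector v by unit quaternion q (equivalent to q * v * q^-1)."""
    w, x, y, z = q
    u = (x, y, z)
    t = cross(u, v)
    tt = cross(u, t)
    # v' = v + 2w (u x v) + 2 u x (u x v), valid for unit quaternions only
    return tuple(v[i] + 2.0 * w * t[i] + 2.0 * tt[i] for i in range(3))
```

As a check, rotating the x axis by 90° about the z axis with `rotate(quat_from_axis_angle((0, 0, 1), math.pi / 2), (1, 0, 0))` yields the y axis up to floating-point rounding. Quaternions are preferred over Euler angles in this setting because they avoid gimbal lock and are cheap to compose.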

Table 2 summarizes the use of IMU and other input sources investigated for the control of ULP, many of which are described in (Grushko et al 2020).

Table 2. Alternative input sources investigated for the control of multi-DoF prosthetic devices.

| Input source | Measured property | Sensors' placement | PROs | CONs | Sensor fusion | Examples |
|---|---|---|---|---|---|---|
| IMU | Specific force, angular rate, orientation of the body | Up to 8 IMU sensors located on feet | Non-invasive, simple, low cost | Problems during walking, not intuitive, unnatural | EMG, NIRS, MMG | (Resnik et al 2014) DEKA Arm control |
| Myokinetic control | Change of muscle morphology through magnetic fields | Permanent magnet markers implanted over targeted muscles and external three-axis magnetic field sensors placed in the socket | Intuitive control, force and position feedback | Magnetic interferences, misalignments between socket and initial position, invasive | — | (Tarantino et al 2017, Clemente et al 2019) |
| Voice | Throat vibration | Piezoelectric sensor on the throat | Ease of use, sequence of movements | External noise, input sound level, unintuitive control | EMG | (Mainardi and Davalli 2007) |
| Voice | Voice commands | Microphone near mouth | | | IMU | (Alkhafaf et al 2020) |
| Tongue | Pressures made by the tongue | Board of coils on the palate and activation unit on the tip of the tongue | Mobile, wireless, invisible | Unintuitive control, uncomfortable | EMG | (Johansen et al 2016, Johansen et al 2021) |
| Feet | Pressures made by the feet | Insole made of force sensing resistors | Simple, low cost | Unintuitive control, problem during walking, need of accurate calibration | IMU, EMG | (Carrozza et al 2007) |
| Optical myography (OMG) | Skin surface deformations caused by underlying muscle contraction | Single low-resolution camera and marker-based tracking methods | Simple, low cost | No space for camera in the socket, low robustness | — | (Nissler et al 2016, Wu et al 2019) |

3.1.3. Integrative sources

An integrative input source is not the main source of commands for the prosthesis; rather, it is used to facilitate control, which usually depends on EMG signals. Integrative input sources work in parallel with the main ones, complementing their information through so-called data-fusion or sensor-fusion methods (see also section 4.3.3). Table 3 summarizes the integrative sources found in the literature that have been used for ULP control.

Table 3. Integrative sources of information used to improve prosthesis control.

| Integrative input source | Instruments and measured information | Application | PROs | CONs | Fusion | Examples |
|---|---|---|---|---|---|---|
| Computer vision | Two cameras used to collect images and estimate depth | Estimation of size, distance and grasp type for a semi-autonomous control of the prosthesis | Ease of use, fixing of errors without looking at the prosthesis, automatic help in controlling the prosthesis | Expensive, cumbersome and uncomfortable | EMG, IMU | (Markovic et al 2014) Stereovision (depth?) |
| Computer vision | Depth estimated by the color intensity of the pixel collected by the camera | | | | | (Mouchoux et al 2021) Depth and color camera RGB |
| Eye movements | 4 superficial electrodes for the measuring of the corneo-retinal standing potentials between the front and the back of the human eye | Estimation of the position/length/width/orientation of a final target and preparation of the preshape and direction of the hand | Ease of use, automatic help in controlling the prosthesis | Distinction with random eye movements, cumbersome and uncomfortable | EMG | (Hao et al 2013) Electro-oculography |
| Eye movements | Camera mounted on a pair of glasses measuring the reflection of infrared (IR) light from the eyeball | | | | | (Krausz et al 2020) Eye tracking glasses |
| Optical sensor | LED-based optical sensor mounted on fingertips | Slip detection and eventual automatic suppression | Accurate, robust, simple, low cost and power consumption | Poor detection with transparent surfaces | EMG | (Sani and Meek 2011) LED motion detection sensor |
| Optical sensor | Miniature reflective optic sensor that combines an infrared LED and a phototransistor in the same package | | | | | (Nakagawa-Silva et al 2018) Reflective optic sensor |
| IMU | Accelerometers, gyroscopes, magnetometers | Decreased number of sensors, better controllability, artifact detection | Non-invasive, simple, low cost, motion artifact deletion | Prone to error accumulation over time | EMG, NIRS, MMG | (Krasoulis et al 2017, Krasoulis et al 2019b) up to 6 gestures |
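To make the idea of fusing a main input with an integrative one more tangible, the sketch below shows feature-level fusion in its simplest form: EMG and IMU feature vectors are concatenated and passed to a nearest-centroid classifier. This is only an illustrative stand-in, not the method of any cited study; the feature values, gesture labels and function names are hypothetical, and real systems would normalize features and use the pattern recognition methods discussed in section 4.3.

```python
import math

def fuse(emg_features, imu_features):
    """Feature-level fusion: concatenate the two feature vectors."""
    return list(emg_features) + list(imu_features)

def train_centroids(samples):
    """Compute one mean feature vector per label.

    samples: iterable of (feature_vector, label) pairs.
    """
    sums, counts = {}, {}
    for vec, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(vec)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(centroids, vec):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vec))

# Hypothetical training data: both gestures share the same EMG features and
# differ only in an IMU-derived feature (e.g. a normalized rotation rate).
training = [
    (fuse([0.8, 0.2], [0.0]), "grasp"),
    (fuse([0.8, 0.2], [1.0]), "rotate"),
]
centroids = train_centroids(training)
```

In this toy setting, `classify(centroids, fuse([0.8, 0.2], [0.9]))` returns `"rotate"` even though the EMG features alone could not separate the two gestures, which mirrors the observation that IMUs capture information that sEMG sensors cannot easily detect.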

3.2. Prosthetic sensing

Natural movements occur with a bidirectional flow of neural information, i.e. motor commands in one direction and sensory feedback in the other. In prosthetic applications, while many efforts have been spent to provide signals carrying motor intentions, a less explored path is the integration of the sense of touch into the prosthesis (Clemente et al 2015). This lack is largely responsible for the missing perception of the prosthesis as part of one's own body and also precludes closed-loop control of the prosthesis.

More recently, the scientific community has started exploring different methods to equip prosthetic devices with perception of tactile and pressure information (Schmitz et al 2008, Tee et al 2012, Hammock et al 2013, Lucarotti et al 2013, Taunyazov et al 2021), although often resulting in very complex, unreliable, or impractically cumbersome solutions. The few solutions tested on real prosthetic setups had a negative impact on their anthropomorphism and dexterity.

To integrate touch sensors into robotic and prosthetic devices (figure 2, end-effector feedback) (Lucarotti et al 2013, Iskarous and Thakor 2019, Dimante et al 2020), different technologies have been investigated and employed (Ciancio et al 2016), namely capacitive (Jamali et al 2015, Maiolino et al 2013), resistive (Beccai et al 2005, Tee et al 2012, Zainuddin et al 2015), piezoelectric (screen printed piezoelectric polymer, PVDF) (Alameh et al 2018), and magnetic sensors (Ahmadi et al 2011). Other examples include technologies based on electrical impedance (Zainuddin et al 2015, Wu et al 2018), pressure and electrical impedance (Lin et al 2009), optical fibers (Bragg fiber (Massari et al 2019)), micro-electro-mechanical systems (texture sensing (Mazzoni et al 2020)) combined with spiking based on Izhikevich neuron model (Gunasekaran et al 2019) and optoelectronic (Alfadhel and Kosel 2015).

Examples of the application of these sensors into prosthetic devices include the E-dermis (piezoelectric sensors integrated on the Bebionic's fingertips) (Osborn et al 2018), E-skin (integrating different types of sensors) (Iskarous and Thakor 2019), and BioTac (impedance sensor integrated on the Shadow Hand (Robot 2022)) (Fishel and Loeb 2012).

Among commercial devices, the SensorHand Speed made by Ottobock is the only one including tactile sensors based on resistive technology (Ottobock 2021).

Therefore, tactile sensation is the first step toward novel and more efficient control strategies that do make use of feedback information (Raspopovic et al 2014). To this end, artificial intelligence can be exploited to detect the grasp of different objects from sensor data (Alameh et al 2020).

3.3. Sensory feedback

Sensory feedback patterns are designed to enrich the perceived responsiveness of the device and the subjective experience of its use as a limb (Antfolk et al 2013b, Svensson et al 2017, Raspopovic et al 2021a). Such a result derives from the elicitation of physiological and psychological reactions that promote embodiment processes (described in section 5.1). Furthermore, such stimulations (haptic feedback in many cutting-edge devices) are designed as a fundamental component of bidirectional human-machine interfaces empowering prosthetic control (Navaraj et al 2019). Establishing such a closed-loop can trigger learning processes even for artificial sensations (Cuberovic et al 2019), pointing at somatosensory plasticity processes. These phenomena provide the user with an engaging guidance within a natural interaction, facilitating the execution of prosthetic maneuvers during calibration, training, and daily use. Importantly, such an enhanced practice will ease the production of consistent biosignals that will progressively become easier to interpret as user commands.

However, current commercial prostheses generally do not incorporate explicit haptic feedback, although incidental feedback, such as visual and sound cues, can be exploited by the user to estimate the prosthesis state (Wilke et al 2019). For example, acoustic feedback can provide guidance on how to reach a target during a rehabilitation session; in this way, the rehabilitation step can be more interactive and engaging if appropriately designed (never obnoxious, possibly plausible). Overall, the next sub-sections discuss the design of sensory feedback in prosthetics, distinguishing invasive and non-invasive stimulation modalities.

3.3.1. Non-invasive methods

Non-invasive feedback restoration for upper limb amputees is a hot topic in the research community, and yet it has not achieved broad clinical application (Sensinger and Dosen 2020). Many solutions have been proposed, but the main problem lies in their poor robustness. Ribeiro et al (2019) highlighted the most widespread types of non-invasive feedback, described in table 4.

Table 4. Non-invasive methods for sensory feedback in ULP.

| Feedback sense | Modality | Instruments and feedback information | Application | PROs | CONs | Examples |
|---|---|---|---|---|---|---|
| Touch (cutaneous stimulation) | Vibrational | Eccentric rotating motors, proprioception, force | Array over the forearm or over the arm | Non-invasive, robustness control, brief training period, intuitive, cheap, small | Non-physiological, need calibration, coupled intensity and rotation frequency, position displacement | (Bark et al 2014, Markovic et al 2019) up to 3 DoFs or different force levels |
| Touch (cutaneous stimulation) | Mechanotactile | Linear actuator, pressure sensation, spatial touch sensation | Detected areas to reproduce real touch sensation, array over the arm | Non-invasive, intuitive, brief training period, decoupled intensity and frequency | Need spatial and intensity calibration, bulky, position displacement | (Antfolk et al 2013a, Svensson et al 2017, Tchimino et al 2021) different pressure levels, touch sensation |
| Touch (cutaneous stimulation) | Electrical | Transcutaneous stimulation using bipolar electrodes, pressure, slip, proprioception | Array over the forearm or arm | No electrode displacement, low power consuming, high sensor skin contact, intensity or frequency modulation | Noise during acquisition, long calibration, not localized sensation | (Jorgovanovic et al 2014, Xu et al 2015, Garenfeld et al 2020) touch location, pressure, proprioception |
| Sound (acoustic) | — | Acoustic speaker, proprioceptive movements | Laptop speaker to guide the training acquisition and improve the pattern recognition strategy | Low cost, no calibration, intuitive | — | (Gigli et al 2020) multiple arm positions |
| Vision (visual) | — | Camera on board, external camera | Head-mounted displays, laptop displays, virtual reality, augmented reality | Increase perceptual experience, engagement, intuitive, promote training | Bulky, not portable, uncomfortable | (Clemente et al 2016, Markovic et al 2017, Sharma et al 2018, Hazubski et al 2020, Sun et al 2021b) trajectory, force |

The most investigated feedback relies on the sense of touch and therefore consists of cutaneous stimulation. This can be performed with different modalities, namely vibrational, mechanotactile or electrical stimulation.

Vibrational feedback is generally implemented with the addition of eccentric rotating motors placed in contact with the skin surface of the stump (Ribeiro et al 2019). This method is generally employed to augment the robustness of the control system by providing the user with additional information regarding the position of the prosthetic device, but it lacks intuitiveness, as the association between the perceived sensation and the corresponding information has to be learned by the user. For example, in Bark et al (2014), the motors were placed in four distinct areas of the stump to guide the user through the desired trajectory while grasping an object, and the results showed a significant decrease in the root mean square angle error of their limb during the learning process. More recently, Markovic et al (2019) proposed a joint-oriented feedback criterion consisting of three vibromotors placed on the arm to provide information on which joint is currently activated by the user, thus restoring proprioceptive sensation. The experiment was performed by 12 able-bodied subjects and 2 amputees controlling a 3-DoF prosthesis, and it was found that the myoelectric multi-amplitude control outperformed the pattern recognition method when the feedback was applied.

Unlike vibrational feedback, mechanotactile feedback is based on the application of linear actuators on the skin and provides a pressure sensation. Antfolk et al (2013a) exploited this technique and proposed a multisite mechanotactile system to investigate the localization and discrimination threshold of pressure stimuli on the residual limbs of trans-radial amputees. They demonstrated that subjects were able to discriminate between different locations of sensation and to differentiate between three different levels of pressure. This study demonstrated that it is possible to transfer tactile input from an artificial hand to the forearm skin after a brief training period. Recently, Svensson et al (2017) used it to translate the interaction between a virtual reality (VR) environment and a virtual hand into user sensation. The authors showed that by placing the tactile actuators in correspondence with the areas of the skin involved in object manipulation, subjects were able to feel a real touch sensation that increased their sense of body ownership. For example, pressure applied to the prosthetic fingers was perceived as a tactile sensation on the skin (Svensson et al 2017).

Electrical feedback is based on transcutaneous stimulation. The elicited sensations range from perception of pressure (Jorgovanovic et al 2014) to slip sensations (Xu et al 2015), depending on the electrical parameters (i.e. current amplitude, pulse frequency, pulse width). One advantage of this approach with respect to the vibrotactile and mechanotactile ones is the lack of moving components, which avoids problems of electrode displacement and thus improves the sensor-skin contact. Nevertheless, it is important to take into account that the noise introduced by the electrical stimulation can corrupt the acquisition of muscular activity, causing errors if the ULP is myoelectrically controlled. Moreover, the perceptions are not strictly confined to the zone under the stimulating device, but can spread over a wider region if the stimulated area lies above a nerve.

Another sensory modality exploited for feedback delivery is the acoustic one. Gigli et al (2020) recently tested a novel acquisition protocol with additional acoustic feedback in 18 able-bodied participants to improve myoelectric control. The protocol consisted in dynamically acquiring EMG data in multiple arm positions while returning an acoustic signal to urge the participants to hover with the arm in specific regions of their peri-personal space. The results showed that the interaction between user and prosthesis during the data acquisition step was able to significantly improve myoelectric control. Auditory feedback has also been employed to convey artificial proprioceptive and exteroceptive information. Lundborg et al (1999) and Gonzalez et al (2012) employed auditory feedback by encoding the movement of different fingers into different sounds. These studies demonstrated that the inclusion of auditory feedback reduces the mental effort and increases the human-machine interaction; furthermore, better temporal performance and better grasping performance were obtained.

In recent years, there have been some examples exploiting vision to deliver sensory feedback. Indeed, visual stimulation can be provided as explicit feedback through screens during game-like exercises, helping the prosthetic user to learn how to control the device (e.g. adjusting trajectory or grasping force) (Markovic et al 2018). However, adding sensory information to the prosthetic user's perceptual experience in real contexts requires solutions like augmented reality (AR, occurring when computer-generated items overlay a real setting) or mixed reality (MR, a term that represents different combinations of real and virtual items) (Milgram and Kishino 1994, Speicher et al 2019). AR and MR environments, implemented through wearable solutions like head-mounted displays, can support the actual control of a prosthetic device through visual feedback that does not occlude the real context (Clemente et al 2016, Markovic et al 2017, Hazubski et al 2020). However, they can also be used for prosthetic use training (Anderson and Bischof 2014, Sharma et al 2018); in such a case, VR (a fully computer-generated setting) can offer visual feedback too (Lamounier et al 2010, Sun et al 2021b), especially within game-based frameworks (Nissler et al 2019) for engaging the users and motivating their activity.

3.3.2. Invasive methods

There are different technologies that can be employed to provide a sensation directly to the nerve (Cutrone and Micera 2019, Raspopovic et al 2021a). The most used employ intrafascicular electrodes, such as TIME and wire and thin-film LIFE, which can both record muscle activity (e.g. iEMG) and stimulate nerves. Other solutions are characterized by the fact that the electrodes are placed around the nerves, such as cuff electrodes and FINEs.

The first example of a ULP with sensory stimulation dates back to 1979 and was based on remapping the pressure signals acquired by prosthesis sensors to an amplitude-frequency modulation. This consisted of a series of pulses delivered with a pulse rate proportional to the increment of the pinch force and provided through dry electrodes placed on the skin over the median nerve, as described in Shannon (1979). Later, the group of Micera employed thin-film intrafascicular electrodes longitudinally implanted in peripheral nerves (tf-LIFE4) to deliver electrical stimulation. With this method, they were able to elicit sensations of the missing hand in the fascicular projection territories of the corresponding nerves and to modulate the sensation by varying the pulse width and pulse frequency (Benvenuto et al 2010). Importantly, this method avoids muscle crosstalk, which is fundamental for guaranteeing myoelectric control. More recently, new bioinspired paradigms have been suggested to better induce natural sensations (Raspopovic et al 2021a). In particular, the study of Oddo et al (2016) showed that it is possible to restore textural features recorded by an artificial fingertip. This device embedded a neuromorphic real-time mechano-neuro-transductor, which emulated the firing dynamics of SA1 cutaneous afferents. The emulated firing rate was converted into a temporal pattern of electrical spikes that were delivered to the human median nerve via percutaneous microstimulation in one trans-radial amputee.
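As a rough illustration of the rate-coding principle attributed above to Shannon (1979), the following Python sketch maps an increase in pinch force to a proportional pulse rate and emits pulse times accordingly. The gain, baseline, saturation rate and function names are hypothetical choices made for this example and are not taken from the original system.

```python
def pulse_rate_hz(force_n, baseline_force_n=0.0, gain_hz_per_n=5.0, max_rate_hz=100.0):
    """Pulse rate proportional to the force increment above a baseline.

    All numeric defaults are illustrative assumptions, not published values.
    """
    increment = max(0.0, force_n - baseline_force_n)
    return min(max_rate_hz, gain_hz_per_n * increment)


def pulse_times_s(force_samples_n, dt_s, **kwargs):
    """Emit one pulse each time the integrated pulse rate reaches a whole cycle."""
    phase = 0.0
    times = []
    for k, force in enumerate(force_samples_n):
        phase += pulse_rate_hz(force, **kwargs) * dt_s
        if phase >= 1.0:
            times.append(k * dt_s)
            phase -= 1.0
    return times
```

With a constant force the pulses are evenly spaced; a stronger pinch shortens the interval between pulses until the assumed saturation rate is reached.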

Valle et al (2018) suggested a 'hybrid' encoding strategy based on simultaneous biomimetic frequency and amplitude modulation. This kind of stimulation was perceived as more natural than the classical stimulation protocol, enabling better performance in tasks requiring fine identification of the applied force. This paradigm was tested and validated during a virtual egg test (Valle et al 2018), where the subject needed to modulate the force applied to move sensorized blocks. This encoding strategy not only improved gross manual dexterity in functional tasks but also improved prosthesis embodiment, reducing abnormal phantom limb perceptions.

Similarly, Osborn et al (2018) implemented a neuromorphic feedback paradigm based on the Izhikevich neuron model to generate the current spike train to inject directly in the median and ulnar nerves, using beryllium copper (BeCu) probes. Their prosthesis features a neuromorphic multilayered artificial skin to perceive touch and pain. Their transcutaneous electrical nerve stimulation makes it possible to elicit innocuous and noxious tactile perceptions in the phantom hand. The multilayered electronic dermis (e-dermis) produces receptor-like spiking neural activity that makes it possible to discriminate object curvature, including sharpness, and to convey more natural sensations spanning a range of tactile stimuli for prosthetic hands. The authors were able not only to restore finger touch discrimination and object recognition, but also to provide a pain sensation when the prosthesis touched sharp objects. In particular, they found that pain sensation is generated by a stimulation of 15–20 Hz.
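To make the role of the Izhikevich neuron model more concrete, the sketch below integrates the standard two-variable Izhikevich equations with a forward-Euler step and returns the spike times produced by a constant input. The parameter values are the commonly quoted 'regular spiking' set and are an assumption here; they are not the values used by Osborn et al (2018), and the way a pressure reading would be scaled into the input current is left out because the source does not specify it.

```python
def izhikevich_spike_times(current, duration_ms=1000.0, dt=0.5,
                           a=0.02, b=0.2, c=-65.0, d=8.0):
    """Spike times (ms) of an Izhikevich neuron driven by a constant input.

    a, b, c, d are the usual regular-spiking values (assumed for illustration).
    """
    v = c          # membrane potential (mV)
    u = b * v      # recovery variable
    spikes = []
    for k in range(int(duration_ms / dt)):
        # Forward-Euler update of the two model equations
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
        if v >= 30.0:          # spike: record it and reset the state
            spikes.append(k * dt)
            v = c
            u += d
    return spikes


def mean_rate_hz(spikes, duration_ms=1000.0):
    """Average firing rate over the simulated window."""
    return 1000.0 * len(spikes) / duration_ms
```

In this sketch a larger input current yields a denser spike train, which is the property a neuromorphic encoder relies on when turning sensor output into a stimulation pattern.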

Tan et al (2014) suggested that a simple electronic cuff placed around nerves in the upper arm can directly activate the neural pathways responsible for hand sensations. This neural interface enabled the restoration of different sensations at many locations on the neuroprosthetic hand. Different stimulation patterns could transform the typical 'tingling sensation' of electrical stimulation into multiple different natural sensations, enabling the amputees to perform fine motor tasks and improving the embodiment.

George et al (2019) described a biomimetic method to restore both force and haptic sensation. The sensory feedback was implemented to restore the force sensation and promote object recognition: USEA electrodes were used to deliver stimulation proportional to the variation of contact force exchanged between the prosthesis and the object during manipulation. The haptic sensation, instead, was based on the distribution of stimulation delivered during contact with the object with a fixed frequency and amplitude. This encoding scheme is based on biphasic, charge-balanced electrical pulses with phase durations of 200 or 320 µs. The biomimetic model describes the instantaneous firing rate of the afferent population using the contact stimulus position, velocity, and acceleration, simulating the response of all tactile fibers to any spatiotemporal deformation of the skin and hand. This strategy allows the amputee to augment the active exploration experience and to discriminate object size and stiffness.
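The notion of a biphasic, charge-balanced pulse can be illustrated with a short Python sketch that builds a sampled symmetric waveform and checks that its net injected charge is zero. Only the 200 and 320 µs phase durations come from the text above; the amplitude, the cathodic-first ordering, the interphase gap and the sampling step are assumptions made for this example.

```python
def biphasic_pulse(amplitude_ua, phase_us=200, interphase_us=100, dt_us=10):
    """Sampled symmetric biphasic pulse (values in microamperes).

    Cathodic phase, optional zero-current gap, then an anodic phase of equal
    amplitude and duration, so the two phases carry equal and opposite charge.
    """
    n_phase = phase_us // dt_us
    n_gap = interphase_us // dt_us
    return [-amplitude_ua] * n_phase + [0.0] * n_gap + [amplitude_ua] * n_phase


def net_charge_pc(samples_ua, dt_us):
    """Net charge in picocoulombs (1 uA x 1 us = 1 pC)."""
    return sum(samples_ua) * dt_us


def phase_charge_nc(amplitude_ua, phase_us):
    """Charge delivered by a single phase, in nanocoulombs."""
    return amplitude_ua * phase_us / 1000.0
```

For instance, `net_charge_pc(biphasic_pulse(100.0), 10)` evaluates to zero because the second phase cancels the first; lengthening the phase from 200 to 320 µs at the same amplitude increases the charge per phase while keeping the pulse balanced.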

Liu et al (2021b) have shown that primary afferents encode different stimulus features in distinct yet overlapping ways: scanning speed and contact force are encoded primarily in firing rates, whereas texture is encoded in the spatial distribution of the activated fibers and in precisely timed spiking sequences. When multiple aspects of tactile stimuli vary at the same time, these different neural codes allow for information to be multiplexed in the responses of single neurons and populations of neurons. Exploiting this sensory architecture with invasive methods may lead to the development of prosthetic devices able to truly evoke natural sensations.

Another promising approach is targeted sensory reinnervation (TSR), i.e. the sensory version of TMR, which consists of coupling a pressure sensor placed on the prosthetic device to surgically redirected cutaneous sensory nerves (Marasco et al 2011). This technique strongly helps discrimination of object size and stiffness during active exploration, especially if the tactile feedback is biomimetic (George et al 2019). Recently, Marasco et al (2021) have developed a prosthetic system based on both targeted sensory and motor reinnervation. TSR was used to deliver both touch and kinesthetic feedback. The authors showed that the system was able to significantly improve device control and promote embodiment.

These results indicate that, in order to close the loop on the user and provide useful sensations (regardless of the specific feedback modality), an optimal feedback control policy is necessary (Sensinger and Dosen 2020), as discussed in section 4.4.

4. Prosthetic control strategies and algorithms

Although the focus of this section is on active prostheses, it is worth mentioning that an important portion of amputees still use body-powered prostheses (Carey et al 2015). These are cable-operated devices usually equipped with a split hook or a hand as the terminal part (Millstein et al 1986).

Ranging from standard control approaches (e.g. dual-site control (Scott and Parker 1988)) to simultaneous control of multiple degrees of freedom (e.g. pattern recognition (Hahne et al 2018)), the literature offers disparate solutions for ULP control depending on the type of input signal and the sensor density.

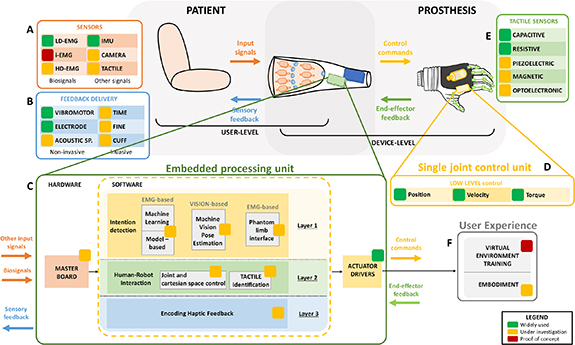

In general, prosthetic control is performed at different levels. The low level refers to motor actuation (figure 5(D)) and, more generally, to the control of the active degrees of freedom of the device; the medium level consists of the translation of movement intentions into joint references and gestures (figure 5(C)); the high-level control translates input signals collected from the user (figure 5(A)) into movement intentions (figure 5(C), yellow panel, layer 1). In the next sections, we describe these different levels of control and provide examples of the different strategies that can be used.

Figure 5. Architecture of ULP control: actuation and feedback. Input signals collected from the user (A) are processed in the embedded processing unit (C) to generate control commands for the single joint control unit (D). Feedback information coming from the prosthesis or its interaction with the environment (E) is also processed in the embedded processing unit (C) to deliver sensory feedback (B). The embedded processing unit (C) can be organized in different layers: layer 1 (intention detection, yellow panel) is the software turning the input signals (A) sampled by the master board into detected movement intentions, by means of specific control algorithms (e.g. machine learning or deep learning (DL) algorithms); layer 2 (human-robot interaction, green panel) is the software responsible for processing prosthesis position (joint and Cartesian space control) and external information (tactile identification, (E)); layer 3 (encoding haptic feedback, blue panel) is the software responsible for encoding the information processed in layer 2 into sensory feedback. The outputs of the embedded processing unit are control commands (mediated by actuator drivers) both to move the device and to provide sensory feedback. This has a direct impact on the user experience (F) in terms of learning how to use the device (training) and of user-prosthesis integration (embodiment).

4.1. Low-level control: from control commands to motor actuation

The low-level control combines the well-known strategies implemented in the automation industry to operate autonomous machines, e.g. industrial robots. We will not detail the structure and mathematical formalism of these control architectures. However, for interested readers, a more complete and detailed analysis of robot low-level control is provided by the comprehensive work of Siciliano et al (2010).

In brief, at the base of these controls, there is always an active and controllable actuator that, for upper limb prosthetic solutions, coincides most of the time with an electrical motor (either brushed or brushless), often coupled to a dedicated transmission system (e.g. a planetary gear) to reach the desired torque-speed characteristic. It is possible to present the low-level control of ULPs as the combination of three possible nested controllers: the current, the speed and the position control loops (figure 5(D)).

The current control loop takes care of reliably tracking desired current trajectories. To be implemented, it requires reliable and precise current measurement sensors. The current control also provides relatively good force/torque control of the system, since the current absorbed by the actuator is directly proportional to the generated output torque. On top of the current controller, a speed control loop is usually found to regulate the rotational speed of the motor and, thus, the speed of the actuated system. The combination of an external speed controller with an internal current controller makes it possible to safely operate the actuating unit in terms of desired speeds and torques. Sometimes, on top of or in substitution for the speed controller, systems also implement a position control loop. The position controller guarantees the tracking of desired angular trajectories. It is therefore preferable to the speed controller if the goal is to precisely track given trajectories in specific time intervals. The speed and position controllers can be implemented either before (fast shaft) or after (slow shaft) the transmission system. The decision depends on the availability of sensing devices (e.g. angular sensors such as encoders or resolvers) to measure the required physical quantities.
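The nesting of the three loops can be sketched in Python as a small simulation in which a proportional position loop produces the speed reference, a PI speed loop produces the current reference, and a PI current loop produces the motor voltage. The motor parameters, the gains and the absence of a gearbox and of actuator saturation are all assumptions chosen for illustration; they do not describe any specific prosthesis.

```python
def simulate_cascade(theta_ref=1.0, duration=0.5, dt=1e-5):
    """Cascaded position -> speed -> current control of a brushed DC motor.

    Returns the list of shaft angles (rad) at every simulation step.
    """
    # Hypothetical motor: resistance, inductance, torque/back-EMF constants,
    # rotor inertia and viscous friction (not taken from a datasheet).
    R, L = 1.0, 1e-3
    Kt = Ke = 0.01
    J, b = 1e-5, 1e-5
    # Illustrative gains, with the inner loops made faster than the outer ones.
    kp_i, ki_i = 2.0, 2000.0   # current loop (PI)
    kp_w, ki_w = 0.2, 0.2      # speed loop (PI)
    kp_pos = 20.0              # position loop (P)

    i = omega = theta = 0.0
    int_i = int_w = 0.0
    thetas = []
    for _ in range(int(round(duration / dt))):
        # Outer loop: position error -> speed reference
        omega_ref = kp_pos * (theta_ref - theta)
        # Middle loop: speed error -> current reference
        err_w = omega_ref - omega
        int_w += err_w * dt
        i_ref = kp_w * err_w + ki_w * int_w
        # Inner loop: current error -> applied voltage
        err_i = i_ref - i
        int_i += err_i * dt
        voltage = kp_i * err_i + ki_i * int_i
        # Motor dynamics (forward Euler)
        i += dt * (voltage - R * i - Ke * omega) / L
        omega += dt * (Kt * i - b * omega) / J
        theta += dt * omega
        thetas.append(theta)
    return thetas
```

Because each inner loop settles much faster than the loop around it, the outer controller can treat the inner one as an almost ideal actuator, which is the usual rationale for this nested arrangement.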

All these controllers are implemented in a negative feedback architecture and typically realized by means of PID controllers, whose proportional (P), integral (I) and derivative (D) parameters are tuned to reach the desired system response in terms of control reactivity (rise time and settling time), precision (steady-state error and overshoot) and stability. It is worth mentioning that a negative feedback architecture is typically confined to the low-level control of the prosthesis, while higher-level controllers, and especially the high-level control (see section 4.3), are often treated in an open-loop fashion, where the user directly generates the reference control signal without any feedback verification. The generated reference commands are then sent directly to the low-level controller.
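A minimal Python sketch of a discrete PID controller, together with the response metrics mentioned above, may help to fix ideas. The first-order plant, its time constant and the example gains are assumptions made for this illustration; a real motor loop would be tuned on the actual actuator.

```python
class PID:
    """Textbook discrete PID acting on the error (reference - measurement)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, reference, measurement):
        error = reference - measurement
        self.integral += error * self.dt
        # No derivative action on the very first sample
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def step_response(pid, tau=0.1, duration=1.0):
    """Closed-loop unit-step response of the assumed plant tau*dy/dt = -y + u."""
    y, ys = 0.0, []
    for _ in range(int(round(duration / pid.dt))):
        u = pid.update(1.0, y)
        y += pid.dt * (u - y) / tau
        ys.append(y)
    return ys


def response_metrics(ys, dt, target=1.0):
    """Rise time (10-90 %), 2 % settling time, overshoot, steady-state error."""
    t10 = next(k for k, y in enumerate(ys) if y >= 0.1 * target) * dt
    t90 = next(k for k, y in enumerate(ys) if y >= 0.9 * target) * dt
    outside = [k for k, y in enumerate(ys) if abs(y - target) > 0.02 * target]
    return {
        "rise_time": t90 - t10,
        "settling_time": (outside[-1] + 1) * dt if outside else 0.0,
        "overshoot": max(0.0, max(ys) - target) / target,
        "steady_state_error": abs(target - ys[-1]),
    }
```

For example, `response_metrics(step_response(PID(2.0, 20.0, 0.0, 0.001)), 0.001)` evaluates a PI setting on the assumed plant; raising the proportional gain shortens the rise time, while the integral term removes the steady-state error.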

4.2. Mid-level control: from movement intention to control commands

The mid-level techniques (figure 5(C), yellow panel, layer 1) aim to synthesize the control commands to suitably activate the electric motors of the multi-DoF ULP (actuation drivers in figure 5(C)). These signals are the input of the aforementioned low-level control.

A major classification of the mid-level control strategies for multi-DoF robots divides them into two categories: joint-space and task-space (Cartesian) controllers (Siciliano et al 2008, Corke and Khatib 2011).