Abstract

Quantum computing is gaining popularity across a wide range of scientific disciplines due to its potential to solve long-standing computational problems that are considered intractable with classical computers. One promising area where quantum computing has potential is in the speed-up of NP-hard optimisation problems that are common in industrial areas such as logistics and finance. Newcomers to the field of quantum computing who are interested in using this technology to solve optimisation problems do not have an easily accessible source of information on the current capabilities of quantum computers and algorithms. This paper aims to provide a comprehensive overview of the theory of quantum optimisation techniques and their practical application, focusing on their near-term potential for noisy intermediate scale quantum devices. The paper starts by drawing parallels between classical and quantum optimisation problems, highlighting their conceptual similarities and differences. Two main paradigms for quantum hardware are then discussed: analogue and gate-based quantum computers. While analog devices such as quantum annealers are effective for some optimisation problems, they have limitations and cannot be used for universal quantum computation. In contrast, gate-based quantum computers offer the potential for universal quantum computation, but they face challenges with hardware limitations and accurate gate implementation. The paper provides a detailed mathematical discussion with references to key works in the field, as well as a more practical discussion with relevant examples. The most popular techniques for quantum optimisation on gate-based quantum computers, the quantum approximate optimisation algorithm and the quantum alternating operator ansatz framework, are discussed in detail. However, it is still unclear whether these techniques will yield quantum advantage, even with advancements in hardware and noise reduction. 
The paper concludes with a discussion of the challenges facing quantum optimisation techniques and the need for further research and development to identify new, effective methods for achieving quantum advantage.


Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 license. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

The principles of quantum mechanics have enabled the development of a new paradigm of computing, known as quantum computing. This innovative approach leverages the principles of superposition and entanglement to offer faster and more efficient solutions to specific industrial challenges that classical computers struggle to address. For example, the ability to find prime factors of large numbers very quickly on a quantum computer has the potential to break widely used encryption algorithms that rely on the difficulty of this problem [1]. Additionally, quantum computing has implications for drug discovery [2], materials science [3], and machine learning algorithms [4], amongst other fields. While the aforementioned applications of quantum computing are promising, a significant amount of research is being carried out on the development of quantum-enhanced optimisation techniques for logistics [5–8], automotive [9], finance [10–12] and the defence sector [13].

In the classical domain, optimisation problems [14] are defined as mathematical problems that involve finding the best solution from a set of possible solutions. The goal of optimisation is to find the solution that maximises or minimises an objective function (a measure of success) subject to a set of constraints limiting the allowable solutions. Typically, classical optimisation algorithms rely on searching through the space of all possible solutions, evaluating each solution until the best one is found. This process can be time-consuming and inefficient, especially for problems with many variables or constraints. As such, the search typically proceeds according to some heuristic approach that can speed up the process considerably. A well-known example is the travelling salesman problem (TSP) [15], in which a salesman must visit a set of cities, each exactly once, and return to his starting point while minimising the total distance travelled. This is a combinatorial optimisation problem, which means that the number of possible solutions grows combinatorially with the number of cities. As a result, finding the optimal solution to the TSP for many cities becomes time-consuming and computationally expensive for classical computers. In fact, the TSP is an example of an NP-hard problem: it is at least as hard as every problem in NP, and no classical algorithm is known that solves it in polynomial time. For large instances, practical classical approaches to the TSP therefore rely on heuristics and approximation methods, which can still take a long time to reach a solution.

Quantum computers are, in principle, inherently well suited to the task of solving optimisation problems thanks to the key phenomena of superposition and entanglement [16]. Superposition refers to the ability of qubits—the basic building blocks of quantum computers—to exist in multiple states simultaneously. It is commonly claimed that superposition will enable a quantum computer to explore all possible solutions to a problem simultaneously, thereby leading to an advantage over classical computers. Such claims must be considered carefully because, in reality the probabilistic nature of measurement outcomes in quantum mechanics means that superposition alone is not enough to yield an advantage. Superposition must be combined with clever algorithm design in order for quantum computers to have high probabilities of outputting useful information. Entanglement, on the other hand, refers to the strong correlations that exist between qubits in a quantum system. A key property of this correlation is that it is non-local i.e. two qubits can be entangled even if they are separated by arbitrarily large distances. Entanglement is a fundamentally non-classical phenomenon and it is believed that this will be a key resource that enables quantum advantage. Indeed, entanglement is a key ingredient in many quantum optimisation algorithms, such as quantum annealing and variational quantum algorithms (discussed in this review), which are specifically designed to exploit the benefits of quantum mechanics for solving optimisation problems. However, the actual mechanisms by which we can best exploit entanglement in algorithms are not currently known. This is primarily because entanglement is still not well understood in these contexts. Finally, another key reason optimisation has been an early application area of quantum computing is the existence of mappings of many problem instances onto Ising problems which in turn can be mapped onto a quantum computer. 
In general, it is highly non-trivial to map a problem to a form that can be executed on a quantum computer. As such the existence of a simple mapping is itself a good reason to examine a class of problems.

One of the most exciting aspects of quantum computing is the concept of quantum advantage [17]. Although exact definitions differ somewhat, it is generally accepted that quantum advantage will have been achieved once a quantum computer can solve a practically useful problem (significantly) faster than any classical computer. The potential advantages that quantum computers offer in solving complex optimisation problems have made the topic highly popular among both the scientific community and the public. This is evident from the significant media coverage that it has received. However, there are several misconceptions surrounding this topic, particularly regarding the speed and universality of the optimisation problems that can be addressed. While it is correct that quantum computers may have the capacity to solve some problems faster than classical computers, true quantum advantage has not yet been established in practice. Additionally, an optimisation problem that can genuinely benefit from a quantum device has yet to be identified.

Approaching quantum optimisation to solve real-world industrial challenges can be a daunting task for practitioners exploring quantum computing as a potential solution. Distinguishing between facts and myths among the plethora of available articles on this subject can be challenging. Furthermore, there is currently no clear understanding of the potential benefits of algorithms or any prospective quantum advantage. In this work, we aim to help practitioners from various disciplines involving optimisation problems to understand the state of the art of using quantum computers and algorithms to solve optimisation problems. Specifically, we draw a parallel between classical and quantum optimisation problems, analysing analogies and differences, and explaining the kind of problems that will, in principle, benefit from quantum acceleration. We analyse the difference between digital and analog quantum computers and why quantum algorithms will perform differently on each. All of this information lays the foundation for understanding what a quantum computer can and cannot do to solve optimisation problems efficiently, with the ultimate benefit of setting clear community expectations. For interested readers, we provide a detailed mathematical overview of the most common quantum algorithms for optimisation, such as the quantum alternating operator ansatz (QAOA), which is useful for understanding why such algorithms may lead to quantum advantage. Each section provides an overview of some of the most relevant work in the field, directing the reader towards compelling practical case studies to inspire new work. Finally, we conclude this work by discussing the pros and cons of applying quantum computing to optimisation problems, as well as future directions.

2. Classical optimisation

The field of classical optimisation encompasses a large number of specific problems that often have useful, practical applications across a wide variety of fields. Depending on whether the variables are discrete or continuous, optimisation problems can be divided into two classes: discrete optimisation and continuous optimisation. In this work we focus almost exclusively on discrete optimisation problems. In this section we provide the reader with a general overview of the most common optimisation problems encountered by the scientific community, explaining why these can be computationally intractable using classical computing methodologies.

2.1. Discrete optimisation

Discrete optimisation problems, also known as combinatorial optimisation problems [18, 19], involve decision variables that take on discrete or categorical values, such as integers, binary values, or categories. Combinatorial optimisation problems involve searching through a finite or countable set of possible solutions, and the number of possible solutions can be so large that this class of problems becomes computationally intractable. Many combinatorial optimisation problems are known to be NP-hard, meaning that, assuming P≠NP, no algorithm can find an optimal solution in time polynomial in the problem size; for the best known exact algorithms, the required time grows exponentially with the size of the problem. This makes this class of optimisation problems incredibly challenging to solve exactly in a satisfactory amount of time. There has been a great deal of effort from the community directed towards solving these problems as accurately and as quickly as possible. This has resulted in a plethora of heuristic algorithms. Rather than exhaustively searching the space of all possible solutions for the exact solution, heuristic methods are designed to find a good-quality approximate solution. These methods are typically able to find solutions efficiently (in polynomial time), but this comes at the cost of providing a sub-optimal solution [20, 21].

In practice, the choice of algorithm depends on the size and structure of the problem instance, as well as the desired trade-off between solution quality and computation time. For small problem instances, exact methods may be preferred, as they can guarantee optimality. However, for large problem instances, exact methods may become computationally infeasible, and approximate methods may be necessary to find a good-quality solution within a reasonable amount of time. Many combinatorial optimisation problems are in fact known to be APX-hard. The APX complexity class comprises the NP optimisation problems that can be approximated in polynomial time to within a constant multiplicative factor of the optimal solution. This has allowed for the development of many very useful approximate classical algorithms for a wide variety of combinatorial optimisation problems. It is generally believed that quantum computers will not be able to exactly solve NP-hard optimisation problems efficiently. This is because the quantum versions of these problems are QMA-hard [22] (the QMA complexity class can be thought of as the quantum analogue of NP). Quantum algorithms are therefore typically approximate in nature, and the real question is whether or not quantum computers are able to yield better and/or faster approximate solutions relative to classical algorithms.

In this subsection, we will discuss some of the most important classes of discrete combinatorial optimisation problems, including the travelling salesman problem, Max-Cut, Max-Flow and the knapsack problem.

2.1.1. The travelling salesman problem.

The travelling salesman problem (TSP) is perhaps the most well-known combinatorial optimisation problem. To set up the problem, consider a salesman who must visit a number of cities, each with various distances between them. The canonical form of the problem is to find the shortest route that visits every city exactly once and returns to the starting point. The problem lends itself well to being mapped to a graph problem. Given an undirected, weighted graph $G = (V, E)$, each city is a vertex in $V$, and cities are connected by edges in $E$ with weights representing distances. Despite the simple setup of the problem, it is very challenging to solve; in fact, it is NP-hard to solve exactly. There are a large number of applications of the TSP (or variants of the problem that have extra constraints), including logistics, circuit board manufacturing and planning, to name but a few.
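The gap between exact and heuristic approaches to the TSP can be illustrated with a minimal sketch. The city coordinates below are hypothetical, distances are assumed to be Euclidean, and the nearest-neighbour rule is only one of many possible heuristics; it is not taken from the works cited here.

```python
from itertools import permutations
from math import dist, inf


def tour_length(order, coords):
    """Length of the closed tour that visits the cities in the given order."""
    n = len(order)
    return sum(dist(coords[order[i]], coords[order[(i + 1) % n]]) for i in range(n))


def brute_force_tsp(coords):
    """Exact solution: try every tour that starts at city 0 (factorial time)."""
    best_order, best_len = None, inf
    for perm in permutations(range(1, len(coords))):
        order = (0,) + perm
        length = tour_length(order, coords)
        if length < best_len:
            best_order, best_len = order, length
    return best_order, best_len


def nearest_neighbour_tsp(coords):
    """Greedy heuristic: always move to the closest unvisited city."""
    unvisited = set(range(1, len(coords)))
    order = [0]
    while unvisited:
        last = coords[order[-1]]
        nxt = min(unvisited, key=lambda c: dist(last, coords[c]))
        unvisited.remove(nxt)
        order.append(nxt)
    return tuple(order), tour_length(order, coords)


# Hypothetical instance with five cities.
cities = [(0, 0), (0, 1), (2, 1), (2, 0), (1, 2)]
exact_order, exact_len = brute_force_tsp(cities)
greedy_order, greedy_len = nearest_neighbour_tsp(cities)
print(exact_order, exact_len)
print(greedy_order, greedy_len)
```

The brute-force search examines $(n-1)!$ orderings and is only feasible for a handful of cities, whereas the greedy heuristic runs in polynomial time but carries no guarantee of optimality.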

2.2. The Max-Cut problem

The maximum cut problem is a well-known optimisation problem that requires partitioning the vertices of an undirected graph into two disjoint sets, such that the number of edges between the two sets is maximised. Given a graph $G = (V, E)$, a cut is a partition of the vertex set $V$ into two disjoint subsets, so that no vertex belongs to both. The Max-Cut problem consists of finding a cut such that the number of edges running between the two subsets is maximised. More generally, in the case of weighted graphs, the problem extends to finding a cut that maximises the total weight of the edges crossing it. An example of the Max-Cut problem is shown in figure 1.

Figure 1. An illustration of a simple 5 node Max-Cut problem. The coloring of the nodes (black and white) demonstrates one example of a partitioning into two separate sets that yields a maximum cut.

The Max-Cut problem has applications in a variety of fields, including computer vision, social network analysis, and computational biology. It is known to be NP-hard, which means that finding the optimal solution is generally believed to be computationally intractable for large instances of the problem. Therefore, many heuristic (approximate) algorithms have been proposed to solve the problem efficiently. The Max-Cut problem can be mapped to an Ising problem which makes it a good candidate for a quantum computer.
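The correspondence between Max-Cut and an Ising problem can be made concrete with a small sketch. Assigning a spin $s_i = \pm 1$ to each vertex according to the side of the cut it lies on, an edge $(i, j)$ is cut exactly when $s_i s_j = -1$, so the cut size equals $\sum_{(i,j) \in E} (1 - s_i s_j)/2$ and maximising it is equivalent to minimising the Ising energy $\sum_{(i,j) \in E} s_i s_j$. The 5-vertex graph below is a hypothetical example (not necessarily the one in figure 1), and the exhaustive search is for illustration only.

```python
from itertools import product


def cut_value(spins, edges):
    """Number of edges whose endpoints carry opposite spins (+1 / -1)."""
    return sum((1 - spins[i] * spins[j]) // 2 for i, j in edges)


def ising_energy(spins, edges):
    """Antiferromagnetic Ising energy: sum of s_i * s_j over all edges."""
    return sum(spins[i] * spins[j] for i, j in edges)


def brute_force_max_cut(n_vertices, edges):
    """Exhaustive search over all 2^n spin assignments (illustration only)."""
    best = max(product((-1, 1), repeat=n_vertices),
               key=lambda spins: cut_value(spins, edges))
    return best, cut_value(best, edges)


# Hypothetical graph: a 5-cycle with one extra chord between vertices 0 and 2.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (0, 2)]
best_spins, best_cut = brute_force_max_cut(5, edges)
print(best_spins, best_cut, ising_energy(best_spins, edges))
```

Because the cut size and the Ising energy differ only by a constant and a factor of $-1/2$, the spin configuration that maximises the cut is also a ground state of the corresponding Ising model.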

2.2.1. The Max-Flow problem.

A natural problem in transport and logistics is to attempt to model and optimise the flow of goods and services to customers. In the most basic example, one could consider the transport of goods from a depot to a destination along a network where certain routes have only limited capacities for transport. A problem of this form can be mapped to a graph problem. The goal is to maximise the flow through the graph given the constraints. A better value for the max flow corresponds to a more efficient delivery process.

Given a graph $G = (V, E)$ with edge capacities $c_{ij}$, a flow $f$ is defined as a function $f: E \to \mathbb{R}^{+}$ such that $f_{ij} \leq c_{ij}$ for all $(i, j) \in E$. Given two vertices $s$ and $t$ labelled the source and sink respectively, we constrain the flow such that it is equal when entering and exiting at all vertices except the source and sink vertices,

$$\sum_{i : (i, j) \in E} f_{ij} = \sum_{k : (j, k) \in E} f_{jk} \quad \forall\, j \in V \setminus \{s, t\}.$$

A natural consequence of this constraint is that flow is conserved. For a single source-sink pair with such a constraint, the flow may be given a value $C_f$ describing the net output of the source vertex $s$, that is,

$$C_f = \sum_{j : (s, j) \in E} f_{sj} - \sum_{i : (i, s) \in E} f_{is}.$$

The maximum flow problem concerns finding a flow $f^{*}$ such that $C_{f^{*}}$ is maximum, $C_{f^{*}} = \max_{f} C_f$. The maximum flow problem represents a canonical optimisation problem over a weighted graph. Real-world applications often require additional or different constraints on transport. One example is the vehicle routing problem [23], which requires that multiple vehicles service multiple destinations (or have different source depots). Variations of the problem include the introduction of capacity constraints on the vehicles (CVRP) or time windows for delivery (VRPTW).
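Unlike the NP-hard problems above, the basic maximum flow problem can be solved exactly in polynomial time on a classical computer. The following is a minimal sketch of one standard method, the Edmonds-Karp algorithm, which repeatedly pushes flow along a shortest augmenting path. The depot network and its capacities are hypothetical, and the source and sink are assumed to be distinct vertices.

```python
from collections import deque


def max_flow(capacity, source, sink):
    """Edmonds-Karp algorithm; assumes source != sink.

    capacity maps a directed edge (i, j) to its capacity c_ij >= 0.
    Returns the value of a maximum flow from source to sink.
    """
    # Residual capacities, with reverse edges initialised to zero.
    residual, neighbours = {}, {}
    for (i, j), c in capacity.items():
        residual[(i, j)] = residual.get((i, j), 0) + c
        residual.setdefault((j, i), 0)
        neighbours.setdefault(i, set()).add(j)
        neighbours.setdefault(j, set()).add(i)

    total = 0
    while True:
        # Breadth-first search for a path with spare residual capacity.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v in neighbours.get(u, ()):
                if v not in parent and residual[(u, v)] > 0:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return total  # no augmenting path left: the flow is maximal

        # Recover the path, find its bottleneck and update the residuals.
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        bottleneck = min(residual[edge] for edge in path)
        for u, v in path:
            residual[(u, v)] -= bottleneck
            residual[(v, u)] += bottleneck
        total += bottleneck


# Hypothetical delivery network with limited route capacities.
network = {
    ("depot", "a"): 3, ("depot", "b"): 2, ("a", "b"): 1,
    ("a", "customer"): 2, ("b", "customer"): 3,
}
print(max_flow(network, "depot", "customer"))
```

The constrained variants mentioned above, such as vehicle routing, do not in general admit such efficient exact algorithms, which is where heuristic and quantum approaches become relevant.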

2.2.2. The knapsack problem.

The knapsack problem is an NP-complete combinatorial optimisation problem that has applications in resource allocation. The knapsack problem is usually stated as follows: given a set of items, each with a given weight and value, determine a collection of items whose total weight does not exceed some maximum whilst having the maximum possible value. There are several variations on the problem; the simplest is the so-called '0-1 knapsack problem', which restricts each item to a single copy, i.e. each item has $x_i \in \{0, 1\}$ copies. This problem is stated mathematically as,

$$\max_{x} \sum_{i} v_i x_i \quad \text{subject to} \quad \sum_{i} w_i x_i \leq W, \quad x_i \in \{0, 1\},$$

where $v_i$ and $w_i$ are values and weights respectively and $W$ is the maximum total weight. The problem can be extended such that multiple copies of each item are permitted, or to include multiple weights, e.g. the monetary cost and mass of each item.
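For small instances, the 0-1 knapsack problem can be solved exactly with a classical dynamic programme. The following is a minimal sketch, assuming non-negative integer weights; its running time grows with the numerical value of the weight limit, so it is pseudo-polynomial rather than polynomial in the input size. The item values and weights are hypothetical.

```python
def knapsack_01(values, weights, max_weight):
    """Dynamic programme for the 0-1 knapsack problem (integer weights).

    Returns the best total value and a 0/1 list marking the chosen items.
    """
    n = len(values)
    # best[w] = best value achievable with total weight at most w.
    best = [0] * (max_weight + 1)
    # chosen[i][w] records whether item i was taken at capacity w.
    chosen = [[False] * (max_weight + 1) for _ in range(n)]
    for i in range(n):
        # Iterate capacities downwards so each item is used at most once.
        for w in range(max_weight, weights[i] - 1, -1):
            candidate = best[w - weights[i]] + values[i]
            if candidate > best[w]:
                best[w] = candidate
                chosen[i][w] = True

    # Backtrack through the table to recover the selection.
    x = [0] * n
    w = max_weight
    for i in reversed(range(n)):
        if chosen[i][w]:
            x[i] = 1
            w -= weights[i]
    return best[max_weight], x


# Hypothetical items: values, weights and a weight limit.
print(knapsack_01([60, 100, 120], [10, 20, 30], 50))
```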

2.3. Continuous optimisation

Continuous optimisation problems involve variables that take on continuous values, such as real numbers [24]. The objective function in a continuous optimisation problem maps a set of decision variables to a scalar value that represents the objective of the problem. The decision variables can take on any value within a specified range or domain. The goal is to find the values of the decision variables that minimize or maximize the objective function. The constraints can be expressed as equations or inequalities, and they limit the feasible region of the decision variables. Examples of continuous optimisation problems include finding the shortest path between two points in a curved space, determining the optimal allocation of resources for a production process, or optimising the design of a complex system. Despite the fact that the fields of continuous and discrete optimisation both aim to solve optimisation problems, they are in fact rather different and separate fields of study. In the remainder of this work we focus on discrete optimisation as this has been the sub-field of optimisation that has been studied the most extensively in the context of quantum computing.

3. Optimisation on analog quantum computers

Analog computing is perhaps an unfamiliar concept in the age of digital computing. Most are familiar with the digital (gate-based) model that utilises a series of discrete operations to perform a computation. Analog computing, on the other hand, involves the continuous time evolution of an initial state to a final state. The goal is to set up and evolve the analog computer in such a way that the final state is useful, e.g. it is the solution to a problem. There is a conceptual shift that has to be made when transitioning from thinking about digital to analog computing. In the digital model of computation it is possible to understand computation without considering the underlying hardware on which the computation is implemented. In the analog model this is no longer possible because the nature of the continuous evolution of the system is very much tied to the nature of the system itself, i.e. the hardware. It is not possible to formulate a general hardware-independent model of analog computation.

In the case of quantum computing, the boundary between analog and digital is not as sharp as it is in the classical realm. Despite the fact that, in the gate-based model, computation is thought of as a series of discrete gates acting on a quantum state, in reality there is still a continuous time evolution of a quantum system. This means that, in some sense, all quantum computers are analog. In both analog and digital quantum computers, the quantum state prior to measurement is some continuous state that exists in the Hilbert space of the quantum computer. However, after measurement both types of quantum computer output a bit string (a binary number), i.e. both analog and digital quantum computers produce a digital output. Despite these similarities, one of the main distinguishing features of digital quantum computers is universality. We will discuss the concept of universality in more detail in the section on digital quantum computers, but it suffices to say here that analog quantum computers are not, in general, universal. This means that the analog quantum computers that exist today are only able to tackle very specific problems. The kind of problem a given analog quantum computer is able to solve is closely related to the form of the hardware itself.

At the time of writing, D-Wave has the most mature technology (superconducting qubits) in the space of analog quantum computing; specifically, they develop quantum annealers [25, 26]. Recently, there have been considerable advances in neutral atom technology, a different approach that is able to blend the more traditional model of analog quantum computation with the gate-based model. This allows companies such as Pasqal [27] to, at least in principle, attain more generality whilst still retaining the advantages of analog computing. While we will not discuss neutral atoms in detail, we refer the interested reader to some articles on the subject [28, 29] and would also like to highlight some impressive recent work that used Rydberg atoms to solve maximum independent set (MIS) problems with up to 289 vertices [30]. In this section we will focus on the well-established adiabatic quantum computation (AQC) model of computation that is used to describe computation on certain kinds of analog quantum computers such as quantum annealers. We will first discuss AQC and then move on to quantum annealing (QA), which is closely related but subtly different. There is already a large body of literature, including many reviews, on adiabatic quantum computing [31] and quantum annealing [32–35]. We will therefore restrict ourselves to a brief introduction and discussion of the key concepts. The next section will then discuss optimisation for digital (gate-based) quantum computers, which is the main focus of this paper.

To begin an adiabatic quantum computation, a quantum system is initialized in the ground state of a simple Hamiltonian. An external field is then applied to the system and gradually changed in order to slowly transform the initial state into the ground state of a different Hamiltonian that encodes a problem of interest e.g. a combinatorial optimisation problem. The final Hamiltonian should have a ground state that corresponds to the optimal solution of the problem. The concept of AQC can be demonstrated mathematically by considering the time-dependent Hamiltonian,

$$H(t) = A(t)\, H_I + B(t)\, H_P, \tag{5}$$

where $H_I$ and $H_P$ in equation (5) are the initial and problem Hamiltonians respectively. Initially $A(0) = 1$ and $B(0) = 0$, over time this changes until the reverse is true. Provided the change is gradual enough, the adiabatic theorem guarantees that the quantum state remains in the ground state throughout the computation. Formally, whether or not the time taken to implement the change (i.e. the runtime of the computation, $t_f$) is in fact gradual enough depends on the size of the energy gap between the ground and first excited states of $H(t)$, denoted Δ. The smaller the gap, the more gradual the change has to be to guarantee adiabaticity. Typically $t_f$ is of order $O(1/\Delta^2)$, although the worst case is $O(1/\Delta^3)$. One of the major concerns is that this gap can become exponentially small as the problem size increases [36].
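The role of the energy gap can be illustrated with the smallest possible example. The sketch below assumes a single qubit, a linear interpolation $H(s) = (1 - s) H_I + s H_P$ with $s = t/t_f$, and the hypothetical choices $H_I = -X$ and $H_P = -Z$; none of these choices is taken from the works cited here. For this $2 \times 2$ case the eigenvalues are available in closed form, so no linear algebra library is required.

```python
from math import sqrt


def spectrum(s):
    """Eigenvalues of H(s) = (1 - s) * (-X) + s * (-Z) for a single qubit.

    In the computational basis H(s) = [[-s, -(1 - s)], [-(1 - s), s]],
    a real symmetric matrix with eigenvalues -r and +r,
    where r = sqrt(s^2 + (1 - s)^2).
    """
    r = sqrt(s ** 2 + (1 - s) ** 2)
    return -r, r


def gap(s):
    """Energy gap between the ground state and the first excited state."""
    ground, excited = spectrum(s)
    return excited - ground


# Scan the interpolation parameter and locate the minimum gap.
steps = 1000
min_gap, s_at_min = min((gap(k / steps), k / steps) for k in range(steps + 1))
print(min_gap, s_at_min)

# Rough runtime scale suggested by the typical O(1 / gap^2) estimate.
print(1 / min_gap ** 2)
```

Here the gap never closes, so the evolution can be fast. The concern for hard optimisation problems is that the minimum gap of the many-qubit Hamiltonian may shrink exponentially with the problem size, which the same estimate would translate into an exponentially long runtime.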

Quantum annealing is very closely related to adiabatic quantum computation. Quantum annealing is essentially an adiabatic quantum computation in which the requirement of adiabaticity is relaxed somewhat. Rather than requiring the system to remain in the ground state throughout the computation, the system is allowed to enter an excited state. This relaxation is largely for practical reasons; it is easier to realise quantum annealing in hardware as opposed to a perfectly adiabatic quantum computation. Furthermore, while AQC is universal [37], current implementations of quantum annealing are not universal. In practice, quantum annealing is actually stoquastic quantum annealing, another compromise made for the sake of practicality. Stoquastic quantum annealing is limited to stoquastic Hamiltonians, which are defined as Hamiltonians that, in a given basis, have off-diagonal elements that are real and non-positive. There is concern that stoquastic Hamiltonians are easy to simulate [31] and therefore stoquastic quantum annealers may not be able to provide a quantum advantage [38].
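The definition of a stoquastic Hamiltonian can be checked directly on a matrix representation. The following is a minimal sketch that tests the condition in whatever basis the matrix is written in; the helper name `is_stoquastic` and the numerical tolerance are our own illustrative choices.

```python
def is_stoquastic(matrix, tol=1e-12):
    """True if every off-diagonal entry is real and non-positive."""
    for i, row in enumerate(matrix):
        for j, entry in enumerate(row):
            if i == j:
                continue  # diagonal entries are unrestricted
            z = complex(entry)
            if abs(z.imag) > tol or z.real > tol:
                return False
    return True


minus_x = [[0, -1], [-1, 0]]   # transverse-field term -X
plus_x = [[0, 1], [1, 0]]      # +X has positive off-diagonal entries
pauli_y = [[0, -1j], [1j, 0]]  # Y has imaginary off-diagonal entries

print(is_stoquastic(minus_x), is_stoquastic(plus_x), is_stoquastic(pauli_y))
```

Since the property is defined with respect to a given basis, a matrix that fails this check may still be stoquastic in another basis; for instance, conjugating $+X$ by $Z$ gives $-X$.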

One of the main difficulties that arises when developing algorithms for quantum devices (analog or digital) is how to map a problem of interest to a quantum computer. In other words, how do we translate the problem into a form that can be encoded in qubits and then solved by a continuous time evolution of a quantum state? Quantum annealers have largely focused on combinatorial optimisation (CO) for two reasons. The first is that such a mapping exists for many CO problems and is relatively simple. The full details are given in the section on quadratic unconstrained binary optimisation (QUBO). However, it suffices to say here that many CO problems can be mapped to the problem of finding the ground state of an Ising Hamiltonian [39]. Such problems are well suited to quantum annealers: the problem Hamiltonian of equation (5) can simply be made an Ising Hamiltonian. The second reason is that CO problems have a huge variety of compelling real-world applications.
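The step from binary variables to an Ising model amounts to a change of variables. The sketch below assumes the QUBO cost $\sum_{i,j} Q_{ij} x_i x_j$ with $x_i \in \{0, 1\}$ and the substitution $x_i = (1 - s_i)/2$ with $s_i = \pm 1$; the opposite sign convention is equally common. The function names and the example matrix are hypothetical.

```python
from itertools import product


def qubo_to_ising(Q):
    """Convert a QUBO matrix into Ising fields h, couplings J and an offset.

    Uses x_i = (1 - s_i) / 2, so that
    sum_ij Q_ij x_i x_j = offset + sum_i h_i s_i + sum_{i<j} J_ij s_i s_j.
    """
    n = len(Q)
    h = [0.0] * n
    J = {}
    offset = 0.0
    for i in range(n):
        # Diagonal term: Q_ii x_i^2 = Q_ii x_i = Q_ii (1 - s_i) / 2.
        offset += Q[i][i] / 2
        h[i] -= Q[i][i] / 2
        for j in range(i + 1, n):
            # Off-diagonal pair: (Q_ij + Q_ji) (1 - s_i - s_j + s_i s_j) / 4.
            coupling = (Q[i][j] + Q[j][i]) / 4
            J[(i, j)] = coupling
            offset += coupling
            h[i] -= coupling
            h[j] -= coupling
    return h, J, offset


def qubo_energy(Q, x):
    n = len(Q)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def ising_energy(h, J, offset, s):
    fields = sum(h_i * s_i for h_i, s_i in zip(h, s))
    couplings = sum(J_ij * s[i] * s[j] for (i, j), J_ij in J.items())
    return offset + fields + couplings


# Hypothetical 3-variable QUBO; check that both forms agree on every input.
Q = [[-1, 2, 0], [0, -1, 2], [0, 0, -1]]
h, J, offset = qubo_to_ising(Q)
for x in product((0, 1), repeat=3):
    s = [1 - 2 * x_i for x_i in x]
    print(x, qubo_energy(Q, x), ising_energy(h, J, offset, s))
```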

The current state of the field of quantum annealing is difficult to summarise. Despite the fact that the field is relatively young, the literature is already large and is growing quickly. It is fair to say that there is considerable debate over whether or not quantum annealers will ever achieve quantum advantage. Proponents of the QA approach are optimistic that once machines reach sufficient sizes, there is hope for an advantage in specific instances of optimisation problems [40]. However, at the time of writing, there has been no demonstration of quantum advantage using a quantum annealer. Sceptics claim that problems such as the exponential decrease in the energy gap Δ mean that advantage will never be realised on these devices. In fact, there is a thriving cottage industry of attempting to demonstrate that quantum annealers will not be able to provide an advantage [41]. There are also many approaches that take inspiration from quantum computing to improve classical methods. For example, Fujitsu has developed a so-called quantum-inspired digital annealer. This is a purpose-built silicon chip that is designed specifically for combinatorial optimisation problems and is purportedly better at solving these problems than a classical CPU but is also easier to scale up than an actual quantum annealer [42]. In summary, the field faces considerable challenges and considerable scepticism. In spite of this, there is still a large group of scientists that are optimistic and this optimism is not entirely unfounded. Regardless of the eventual outcome, the field has generated a body of scientific work that is highly interesting and will likely continue to do so for many years to come.

4. Optimisation on digital quantum computers

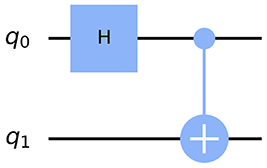

Compared to analog quantum computers, digital quantum computers (DQCs) are, at least in some senses, more similar to conventional classical computers. Analogous to classical logic gates, digital quantum computers implement a set of quantum logic gates (DQCs are often referred to as gate-based quantum computers). Each quantum gate corresponds to a unitary operator acting on a small number of qubits (typically 1–3 qubits), and gates can be combined to build up a quantum circuit. A given quantum circuit is a representation of a computation that can be implemented on a quantum computer. A simple example of a quantum circuit to prepare a Bell state is shown in figure 2. The quantum circuit picture is a useful abstraction largely because it is a simple and clear way of representing the evolution of a quantum state. It is often easier to understand what is happening during a quantum computation when looking at a quantum circuit compared to a long sequence of tensor products of operators. While there are many similarities between the classical and quantum gate models, there are also some key differences. For example, the fact that quantum gates correspond to unitary operators means that quantum gates must be reversible. Classical logic gates have no such restrictions.

Figure 2. A simple example of a quantum circuit that prepares a Bell state, drawn using Qiskit. There are two qubits denoted q0 and q1, H is the Hadamard gate and the second gate is a CNOT (2-qubit) gate.
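To make the correspondence between gates and unitary operators concrete, the Bell-state circuit can be reproduced with explicit matrices. The following is a minimal NumPy sketch rather than the Qiskit code used to draw the figure; the basis ordering |q0 q1⟩ and the function name `bell_state` are our own assumptions for illustration.

```python
import numpy as np

# Single-qubit Hadamard gate and identity
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
I2 = np.eye(2)

# CNOT with q0 as control and q1 as target, written in the
# (assumed) basis ordering |q0 q1> = |00>, |01>, |10>, |11>
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])

def bell_state():
    """Apply H on q0 followed by CNOT to the state |00>."""
    psi = np.array([1, 0, 0, 0], dtype=complex)  # |00>
    psi = np.kron(H, I2) @ psi                   # Hadamard on q0 only
    psi = CNOT @ psi                             # entangle q0 and q1
    return psi

psi = bell_state()
probs = np.abs(psi) ** 2  # measurement probabilities for 00, 01, 10, 11
```

Both gates are unitary, so the circuit can be undone by applying the conjugate transposes in reverse order, which illustrates the reversibility noted above.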

One of the most important concepts in the gate-based model of computing is that of universality. Formally, a set of logic gates (quantum or classical) is universal if it is able to reproduce the action of all other logic gates. For classical logic gates, the NAND gate (or the NOR gate) alone forms a universal gate set. A complication arises in the case of quantum gates because there are uncountably many possible unitary operations that could be applied to a quantum state (consider a rotation gate with an angle that may take any real value). A quantum gate set is therefore considered universal if it is able to approximate any unitary operation to arbitrary accuracy. The Solovay–Kitaev theorem [43] ensures that this approximation can be done efficiently to within a given error ε (the number of gates grows polylogarithmically in 1/ε). Broadly speaking, the benefit of universality is generality. A universal digital quantum computer can, at least in principle, be applied to solve any problem of interest. Analog quantum computers on the other hand are considerably more limited. The form of the hardware determines the exact type of problem that can be solved on the hardware. Analog quantum computers pay the price of loss of generality but hope to gain a potential advantage in certain very specific situations. Note that an interesting consequence of universality is that a universal DQC is able to perform any computation that can be performed in a more restricted framework, i.e. it is possible to simulate a quantum annealer using a universal DQC. A further advantage of the digital approach to quantum computation is the potential for error correction. Encoding information digitally permits the possibility of error correction, which is believed to be crucial for achieving fault-tolerant quantum computing. It remains to be seen which of the analog and digital approaches (if either) will reach quantum advantage first.

The universality of digital quantum computers has enabled the development of quantum algorithms across a large number of application areas including simulation of many-body quantum systems, machine learning and optimisation problems. In this work, we will focus on quantum algorithms for optimisation problems, specifically combinatorial optimisation problems. Combinatorial optimisation problems are often NP-hard to solve exactly meaning the most common approaches are heuristic methods that solve these problems approximately. There have been decades of work developing ever-improved approximate classical algorithms to solve combinatorial optimisation problems. This work has largely been driven by the fact that these problems are so widely applicable across a huge number of industries. By comparison to classical algorithms, quantum algorithms for combinatorial optimisation are still very much in their infancy. There are very few quantum algorithms for optimisation in existence and, at the time of writing, it is still very much an open question whether or not any of them will prove to be useful.

Despite the fact that no advantage has been established yet, there are at least theoretical grounds to think that combinatorial optimisation problems may be a suitable candidate for quantum advantage. The outcome of both classical and quantum computations is a bit string that may correspond to a solution of a given problem. However, unlike a classical computer, while a calculation is in progress on a quantum computer, the quantum state exists in a $2^N$-dimensional Hilbert space (where N is the number of qubits). Importantly, the quantum state can be in a superposition of many states in this Hilbert space. The hope is that a well-designed quantum algorithm can extract a low-lying state (a good quality solution) from such a superposition more efficiently than classical algorithms which do not have the ability to exploit superposition. The difficulty arises because, even though a given initial superposition of states may contain the ground state (the optimal solution to the optimisation problem), if you measure the superposition, you will obtain one of the states at random. Specifically, for an equal superposition of n states, each state will be measured with probability $1/n$. This means that superposition alone gives no natural advantage over a classical computer. Tackling this problem is the real challenge of quantum algorithm design. Fundamentally a quantum algorithm seeks to boost the probability of measuring some desired state that corresponds to a solution to a problem. In general, this is a very challenging task. Examples of algorithms that achieve this successfully include amplitude amplification [44] which is able to take an initial superposition of states and greatly increase the probability of measuring some desired state.
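The boost in measurement probability can be illustrated with a small state-vector simulation. The sketch below implements Grover-type amplitude amplification for a single marked state, starting from an equal superposition; the function name, the choice of 8 states and the marked index are illustrative assumptions, and the oracle is simulated by directly flipping the sign of the marked amplitude.

```python
import numpy as np

def amplitude_amplification(n_states, marked, n_iter):
    """Return measurement probabilities after n_iter amplification rounds."""
    psi = np.full(n_states, 1 / np.sqrt(n_states))  # equal superposition
    s = psi.copy()                                  # reference state for the reflection
    for _ in range(n_iter):
        psi[marked] *= -1                  # oracle: flip the sign of the marked amplitude
        psi = 2 * s * (s @ psi) - psi      # reflect about the initial superposition
    return psi ** 2                        # amplitudes stay real in this example

n_states, marked = 8, 5
p_before = amplitude_amplification(n_states, marked, n_iter=0)[marked]

# Standard choice of roughly (pi / 4) * sqrt(n) rounds for one marked state
n_iter = int(np.floor(np.pi / 4 * np.sqrt(n_states)))
p_after = amplitude_amplification(n_states, marked, n_iter)[marked]
```

Before amplification the marked state is measured with probability 1/8; after the two rounds used here it dominates the distribution. In an optimisation setting the difficulty is that no such oracle for the optimal solution is available in advance.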

One of the key limitations faced in the field of quantum optimisation (and more broadly in quantum computing) is the limited availability of hardware and the noisy nature of the hardware that is available. We are currently in the so-called noisy intermediate-scale quantum (NISQ) era. The combination of small numbers of qubits and noise means that only small problem sizes can be attempted on real hardware, using algorithms with low circuit depth. These small problems are typically efficiently solvable by classical means and therefore do not stand to truly benefit from quantum computers. While machines with increasing numbers of qubits are being developed at a fairly consistent pace, the issue of reducing noise is more challenging. Circuit depth is a key limitation of current hardware that makes anything but the simplest algorithms (or simplest versions of a given algorithm) impossible to implement. Without reduction in noise on the scale of several orders of magnitude, the only real hope is error correction which itself drastically increases the overhead in terms of numbers of qubits. In the NISQ era, the challenge is to develop algorithms that have some degree of resilience to noise and to identify problems that, even for small sizes, may still benefit from quantum hardware. With this in mind, many of the current approaches are hybrid quantum–classical. A suitable, difficult part of a given problem is identified and ported to a quantum computer while the part of the problem that can be efficiently solved classically remains on a conventional computer. Variational quantum algorithms fall into this category, utilising classical optimisers to find optimal parameters for quantum circuits.

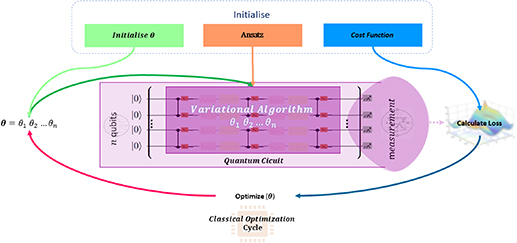

There is a vast literature on variational quantum algorithms (VQAs) [45–47]; in this work we will comment on just a few specific algorithms. VQAs are popular as they are a class of algorithms that are thought to be particularly well suited to NISQ devices. However, there is a 'hidden' cost associated with VQAs that is not always discussed or factored into complexity theory discussions. VQAs rely on optimisation of a set of variational parameters which are typically a set of angles θi for single qubit rotation gates. The optimisation of these parameters is performed by a classical optimisation algorithm (see figure 3 for a schematic of a VQA). The θi cost landscape generally has many local minima and often has flat regions (referred to as barren plateaus) that prevent further optimisation of parameters. In general, the problem of finding the global minimum is NP-hard. The cost landscape can be made increasingly favourable by overparameterising the VQA, i.e. adding more θi [48, 49]. However, the number of parameters (and therefore quantum gates) required to do this for some of the most common ansatzes increases exponentially, making this approach impractical [50]. In this work we will discuss the specific merits (or otherwise) of a given quantum algorithm. However, when the algorithm is a variational one, it is important to bear this classical overhead in mind.

Figure 3. A schematic of a variational quantum algorithm. Variational parameters θ are optimised using a classical computer.

When using VQAs in practice, as the size of the problem and therefore the number of variational parameters increases, the classical optimisation problem can become prohibitively difficult. This issue is particularly pertinent when discussing quantum algorithms for optimisation. Often the optimisation problems that we hope to solve using a quantum computer are NP-hard. A cynic may notice that, in the VQA paradigm, you must first solve one NP-hard problem classically (the optimisation of the variational parameters) in order to then solve the NP-hard optimisation problem. In other words, despite using a quantum computer, you still have to tackle an NP-hard problem classically. This viewpoint has a degree of merit but there are reasons to be optimistic nonetheless. Good classical optimisation algorithms exist that can efficiently provide approximate solutions. The hope is that these approximate parameter values are good enough for the VQA to still yield good solutions.
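The hybrid loop can be sketched with the smallest possible example: a single variational angle θ, a 'quantum' cost evaluation that we simulate exactly, and a classical gradient-descent update. Everything here is an illustrative assumption (the one-qubit ansatz RY(θ)|0⟩, the observable Z, the learning rate and the function names); on hardware, each call to `expectation_z` would instead be estimated from repeated measurements.

```python
import numpy as np

def expectation_z(theta):
    """<Z> for the one-qubit state RY(theta)|0>, simulated exactly."""
    state = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    z = np.array([[1, 0], [0, -1]])
    return float(state @ z @ state)

def parameter_shift_gradient(theta):
    """Gradient of <Z> from two shifted cost evaluations."""
    return 0.5 * (expectation_z(theta + np.pi / 2) - expectation_z(theta - np.pi / 2))

def run_vqa(theta0=0.3, learning_rate=0.4, n_steps=100):
    """Classical outer loop: plain gradient descent on the variational angle."""
    theta = theta0
    for _ in range(n_steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, expectation_z(theta)

theta_opt, cost_opt = run_vqa()
```

This one-parameter landscape has a single minimum, so the classical optimiser converges easily. The difficulties described above (local minima and barren plateaus) only appear as the number of parameters grows.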

In the following sections, we will review the major quantum algorithms for quantum optimisation problems. We begin each section by giving an introduction to the conceptual and mathematical description of each algorithm. For the interested reader, we then detail a number of case studies. The case studies are far from exhaustive but are selected because they either show useful examples of the algorithm in action (simulated or on real hardware) or they propose interesting modifications to the core algorithm.

4.1. Quantum approximate optimisation algorithm

The quantum approximate optimisation algorithm (QAO algorithm) was introduced by Farhi et al [51] in 2014 and is a hybrid quantum–classical variational algorithm. Note that we refer to the quantum approximate optimisation algorithm as the QAO algorithm (rather than QAOA) in order to distinguish it more easily from the quantum alternating operator ansatz which we discuss later. In the QAO algorithm, a problem-specific cost function C is mapped to a Hamiltonian $H_C$ with an associated parameterised unitary $U_C(\gamma) = e^{-i\gamma H_C}$. In particular, the possible solutions to the problem are each associated with an eigenstate of the Hamiltonian such that it is diagonal in the computational basis,

$$H_C |x\rangle = C(x) |x\rangle.$$

The unitary $U_C(\gamma)$ corresponds to time evolution under the cost Hamiltonian $H_C$ where the time parameter has been replaced by a variational parameter γ. In addition, a mixer Hamiltonian $H_M$ is introduced with associated parameterised unitary $U_M(\mu) = e^{-i\mu H_M}$. A single block of the circuit, as shown in figure 4, corresponds to the application of the cost and mixer unitaries on an initial state $|\psi_0\rangle$,

$$|\psi(\gamma, \mu)\rangle = U_M(\mu)\, U_C(\gamma)\, |\psi_0\rangle.$$

Figure 4. Schematic of the circuit for the QAO algorithm. The circuit shown has depth p = 1; for higher depths, the block of cost and mixer unitaries is repeated p times.

Choosing a depth hyperparameter $p \in \mathbb{N}$ so that the circuit consists of p blocks $U_M(\mu_k)\, U_C(\gamma_k)$, $k = 1, \dots, p$, associated with 2p parameters, we can now implement the overall circuit as follows,

$$|\psi(\boldsymbol{\gamma}, \boldsymbol{\mu})\rangle = U_M(\mu_p)\, U_C(\gamma_p) \cdots U_M(\mu_1)\, U_C(\gamma_1)\, |\psi_0\rangle.$$

The cost is then evaluated as an expectation value,

$$C(\boldsymbol{\gamma}, \boldsymbol{\mu}) = \langle \psi(\boldsymbol{\gamma}, \boldsymbol{\mu}) |\, H_C \,| \psi(\boldsymbol{\gamma}, \boldsymbol{\mu}) \rangle,$$

where a classical optimiser can be used to iteratively update the parameters $(\boldsymbol{\gamma}, \boldsymbol{\mu})$ in order to extremise the cost. The original formulation of the QAO algorithm utilised the so-called transverse-field mixing Hamiltonian which is a sum of local operators. In particular $H_M = \sum_i X_i$ where $X_i$ is the Pauli X-operator (bit flip) acting on the ith qubit. Conceptually, the QAO algorithm can be thought of as time evolution of a quantum state combined with the power of 'hopping' between states. The time evolution is generated by the cost Hamiltonian and the 'hopping' is facilitated by the mixer unitary. Specifically, the mixer unitary is required to ensure that the dynamics of the system are non-trivial. If the system were evolved using only the cost Hamiltonian, the energy associated with the cost Hamiltonian (the cost) would be conserved, i.e. no choice of γ would alter the cost and therefore it would not be possible to perform an optimisation. By choosing a mixing Hamiltonian that does not commute with the cost Hamiltonian, the cost is no longer conserved and a minimum can be found by varying γ, µ.
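As an illustration of the structure described above, the following is a minimal state-vector sketch of the QAO algorithm, not a hardware implementation. Several choices are our own assumptions: the problem instance (Max-Cut on a 4-vertex ring, written as a cost to be minimised), the initial state (an equal superposition), the function names, and the use of a coarse grid search in place of a proper classical optimiser.

```python
import numpy as np
from itertools import product

def cost_diagonal(n_qubits, edges):
    """Diagonal of H_C with C(x) = sum over edges of s_i * s_j, s = 1 - 2x.
    Each cut edge contributes -1, so minimising C maximises the cut."""
    diag = np.zeros(2 ** n_qubits)
    for idx, bits in enumerate(product([0, 1], repeat=n_qubits)):
        spins = 1 - 2 * np.array(bits)
        diag[idx] = sum(spins[i] * spins[j] for i, j in edges)
    return diag

def mixer_unitary(n_qubits, mu):
    """exp(-i * mu * sum_i X_i), a tensor product of single-qubit rotations."""
    single = np.array([[np.cos(mu), -1j * np.sin(mu)],
                       [-1j * np.sin(mu), np.cos(mu)]])
    full = np.array([[1.0 + 0j]])
    for _ in range(n_qubits):
        full = np.kron(full, single)
    return full

def expected_cost(diag, gammas, mus):
    """Apply p blocks of cost and mixer unitaries, then return <H_C>."""
    n_qubits = int(round(np.log2(len(diag))))
    psi = np.full(len(diag), 1 / np.sqrt(len(diag)), dtype=complex)
    for gamma, mu in zip(gammas, mus):
        psi = np.exp(-1j * gamma * diag) * psi     # cost unitary (diagonal)
        psi = mixer_unitary(n_qubits, mu) @ psi    # mixer unitary
    return float(np.sum(np.abs(psi) ** 2 * diag))

edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
diag = cost_diagonal(4, edges)

# Depth p = 1: crude grid search standing in for the classical optimiser
grid = np.linspace(0, np.pi, 41)
best_cost, best_gamma, best_mu = min(
    (expected_cost(diag, [g], [m]), g, m) for g in grid for m in grid
)
```

Setting µ = 0 in this sketch leaves the expected cost at its initial value for every γ, which is the conservation argument made above; only with a non-commuting mixer does the optimisation have anything to act on.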

As we have already discussed, the boundary between analog and digital quantum computation is not necessarily a sharp one. It is therefore not necessarily straightforward to place algorithms such as QAO entirely in one category or the other. The vast majority of research regarding the QAO algorithm is done in the context of DQCs but, there are examples where this is not the case. For example, Ebadi et al utilised Rydberg atoms and parameterised pulse durations and phases in order to implement a scheme that strongly resembles the QAO algorithm but in an analog context [30].

Throughout the paper we will refer to a quantity called the approximation ratio that is often used to evaluate approximate methods such as the QAO algorithm. The approximation ratio r is defined in terms of the maximum and minimum (optimal) cost, $C_{\max}$ and $C_{\min}$ respectively,

$$r = \frac{C_{\mathrm{QAO}} - C_{\max}}{C_{\min} - C_{\max}}.$$

As the cost found by the QAO algorithm, $C_{\mathrm{QAO}}$, approaches the optimal value $C_{\min}$, the approximation ratio tends towards 1.
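For concreteness, the approximation ratio can be computed as below. This sketch assumes the normalised form r = (C − C_max)/(C_min − C_max) for a minimisation problem, which equals 1 at the optimal cost and 0 at the worst cost; the function and argument names are hypothetical.

```python
def approximation_ratio(cost, c_min, c_max):
    """Approximation ratio for a minimisation problem (assumed normalised form)."""
    if c_max == c_min:
        raise ValueError("c_max and c_min must differ")
    return (cost - c_max) / (c_min - c_max)

# Example: costs range from -4 (optimal) to 4 (worst)
r_optimal = approximation_ratio(-4.0, c_min=-4.0, c_max=4.0)
r_partial = approximation_ratio(-2.0, c_min=-4.0, c_max=4.0)
```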

4.1.1. Quadratic unconstrained binary optimisation.

Quadratic unconstrained binary optimisation (QUBO) is an NP-hard combinatorial optimisation problem. It is primarily of interest because there are many real world problems for which embeddings into QUBO have been formulated [39]. Problems include Max-Cut, graph colouring and a number of machine learning problems to name but a few. Note that despite the utility of QUBO, it is limited by the fact that it cannot be applied to constrained combinatorial optimisation problems. The QUBO problem is formulated by considering a binary vector of length n, $\mathbf{x} \in \{0, 1\}^n$, and an upper triangular matrix $Q \in \mathbb{R}^{n \times n}$. The elements $Q_{ij}$ are weights for each pair of indices $i, j \in \{1, \dots, n\}$ in $\mathbf{x}$. Consider the function $f_Q : \{0, 1\}^n \to \mathbb{R}$,

$$f_Q(\mathbf{x}) = \sum_{i=1}^{n} \sum_{j=i}^{n} Q_{ij} x_i x_j, \tag{11}$$

where $x_i$ is the ith element of $\mathbf{x}$. Solving a QUBO problem means finding a binary vector $\mathbf{x}^*$ that minimises $f_Q$,

$$\mathbf{x}^* = \underset{\mathbf{x} \in \{0, 1\}^n}{\arg\min}\; f_Q(\mathbf{x}). \tag{12}$$
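The definition can be made concrete with a brute-force solver that evaluates the objective for every binary vector. This is a sketch for illustration only, assuming an upper triangular weight matrix; the 3-variable matrix `Q` and the function names are made up for the example, and the exhaustive loop over all 2^n vectors is only feasible for very small n.

```python
import numpy as np
from itertools import product

def qubo_value(Q, x):
    """f_Q(x) = sum_{i <= j} Q_ij x_i x_j for an upper triangular Q."""
    x = np.asarray(x)
    return float(x @ Q @ x)

def brute_force_qubo(Q):
    """Exhaustively search all 2^n binary vectors for the minimiser of f_Q."""
    n = Q.shape[0]
    best_x, best_val = None, np.inf
    for bits in product([0, 1], repeat=n):
        val = qubo_value(Q, bits)
        if val < best_val:
            best_x, best_val = np.array(bits), val
    return best_x, best_val

# Illustrative instance: each variable is rewarded for being 1,
# but neighbouring variables are penalised for both being 1
Q = np.array([[-1.0, 2.0, 0.0],
              [0.0, -1.0, 2.0],
              [0.0, 0.0, -1.0]])
x_star, f_star = brute_force_qubo(Q)
```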

The difficulty of solving such a problem is readily apparent as the number of binary vectors grows exponentially ($2^n$) with the size n. No polynomial-time classical algorithm is known for general QUBO, and hard instances are believed to require exponential time to solve exactly. As such, classical methods to solve these problems are typically heuristics that provide approximate solutions. The hope is that heuristic quantum algorithms such as the QAO algorithm may be able to find better approximate solutions to QUBO problems than existing classical methods.

QUBO and Ising problems are closely linked and in fact a QUBO problem can be linearly transformed into a classical Ising problem (one with no transverse field). Ising problems are good candidates for quantum algorithms because there is a simple mapping that enables them to be executed on a quantum computer. With the goal of linking QUBO to an Ising problem and then mapping this to a quantum computer, we will rewrite the objective function in equation (11) as a QUBO Hamiltonian,

$$H_{\mathrm{QUBO}} = \sum_{i=1}^{n} \sum_{j=i}^{n} Q_{ij} x_i x_j = \sum_{i<j} Q_{ij} x_i x_j + \sum_{i} Q_{ii} x_i, \tag{13}$$

where the second term in equation (13) has been simplified using the fact that, for binary variables, $x_i^2 = x_i$. The classical Ising Hamiltonian can be written in a similar form,

$$H_{\mathrm{Ising}} = \sum_{i<j} J_{ij} s_i s_j + \sum_{i} h_i s_i. \tag{14}$$

In the Ising problem $s_i \in \{-1, +1\}$ are spin variables, $J_{ij}$ are couplings and $h_i$ are local fields. The similarities between equations (13) and (14) are readily apparent. A simple linear transformation of the form $x_i = (1 + s_i)/2$ relates the two problems, up to an additive constant that does not change the minimiser. Without loss of generality we therefore proceed to map the Ising problem to a quantum computer by promoting $s_i$ to $Z_i$,

$$H_C = \sum_{i<j} J_{ij} Z_i Z_j + \sum_{i} h_i Z_i. \tag{15}$$

Note that this promotion of spin variables to Pauli Z matrices is sensible because the eigenvalues of Z are ±1. The Hamiltonian $H_C$ in equation (15) can now be used as the cost Hamiltonian in the QAO algorithm thereby allowing it to be applied to a large number of combinatorial optimisation problems.
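The change of variables can be checked numerically. The sketch below assumes the convention x_i = (1 + s_i)/2 and an Ising energy of the form Σ_{i<j} J_ij s_i s_j + Σ_i h_i s_i; under this substitution a general QUBO instance produces couplings J_ij, local field terms h_i and a constant offset. The function names and the random test instance are our own.

```python
import numpy as np
from itertools import product

def qubo_to_ising(Q):
    """Map an upper triangular QUBO matrix to Ising parameters (J, h, offset)
    such that f_Q(x) = sum_{i<j} J_ij s_i s_j + sum_i h_i s_i + offset
    with x_i = (1 + s_i) / 2."""
    Q = np.asarray(Q, dtype=float)
    off_diag = np.triu(Q, k=1)
    J = off_diag / 4
    # Each off-diagonal weight Q_ij contributes Q_ij / 4 to both h_i and h_j
    h = np.diag(Q) / 2 + (off_diag.sum(axis=0) + off_diag.sum(axis=1)) / 4
    offset = np.diag(Q).sum() / 2 + off_diag.sum() / 4
    return J, h, offset

def ising_energy(J, h, s):
    """Ising energy for spins s in {-1, +1}; J is strictly upper triangular."""
    s = np.asarray(s)
    return float(s @ J @ s + h @ s)

# Compare both formulations on every assignment of a random 4-variable instance
rng = np.random.default_rng(0)
Q = np.triu(rng.normal(size=(4, 4)))
J, h, offset = qubo_to_ising(Q)
max_error = max(
    abs(np.array(x) @ Q @ np.array(x)
        - (ising_energy(J, h, 2 * np.array(x) - 1) + offset))
    for x in product([0, 1], repeat=4)
)
```

Because the offset is the same for every assignment, the minimiser of the Ising energy maps directly back to the minimiser of the QUBO objective.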

4.1.2. Case studies.

The assessment of whether or not the QAO algorithm is a compelling candidate for quantum supremacy [52] or even advantage has been the subject of considerable research and debate. The quantum approximate optimisation algorithm is a metaheuristic, which is a procedure for generating a heuristic (approximate) algorithm. This makes it very difficult to derive any rigorous scaling behaviour outside of specific examples. It is therefore imperative that the QAO algorithm is tested as extensively as possible using simulations and, more importantly, actual hardware. In this section we will discuss a number of relevant case studies that utilise the QAO algorithm in order to give an overview of the current state of the field.

Initially the QAO algorithm (depth p = 1) was the best known approximate algorithm for MAX-3-LIN-2 [53] but a better classical algorithm was found soon after [54]. Several studies have been rather pessimistic with regard to the outlook for the QAO algorithm applied to problems such as Max-Cut. For example, Guerreschi and Matsuura [55] explored time to solution for the QAO algorithm on the Max-Cut problem on 3-regular graphs. Here the QAO algorithm was simulated under 'realistic' conditions including noise and circuit decomposition and applied to problems of up to 20 vertices. Their time to solution also included the cost associated with executing multiple shots for each circuit. It was demonstrated that, under these conditions, for a given time to solution the QAO algorithm (p = 4, 8) was only able to solve a graph 20 times smaller than the best classical solver. By extrapolation under a few assumptions, it was concluded that the QAO algorithm would require hundreds or even thousands of qubits to meet the threshold for quantum advantage on realistic near-term hardware. There have been a number of other studies comparing the QAO algorithm in certain instances to classical algorithms [56, 57]. For example, a paper by Marwaha [58] demonstrated that the QAO algorithm (p = 2) was outperformed by local classical algorithms for Max-Cut problems on high-girth regular graphs. Specifically, for all D-regular graphs with $D \geq 2$ and girth above 5, 2-local classical Max-Cut algorithms were found to outperform the QAO algorithm (p = 2).

Despite the pessimism of the papers that have been discussed so far, there is still hope that the QAO algorithm might be able to outperform classical algorithms at higher depths than have currently been explored. Whilst Guerreschi and Matsuura's work [55] does not bode well for the QAO algorithm on current generation devices that, at the time of writing, have at most 127 qubits (IBM Eagle), their work does suggest that the QAO algorithm might offer an advantage over classical methods once a sufficient number of qubits is reached. This claim requires an extrapolation of their data and the authors are careful to make it clear that such an extrapolation is highly uncertain due to the possibility of small-size effects in their results. An earlier paper studied the QAO algorithm for Max-Cut problems and found that the approximation ratio improved as the QAO algorithm depth p increased [59]. The authors compared to classical methods and found that for values of p > 4, the QAO algorithm (simulated) attained a better approximation ratio than the classical algorithm for problem sizes of 6–17 vertices. This appears to offer some hope that the QAO algorithm will be useful at larger values of p. However, as Guerreschi and Matsuura point out in their paper, this study does not compare the actual time to solution of the quantum and classical methods and therefore neglects the potentially very costly process of optimising the variational parameters. Indeed, Guerreschi and Matsuura take a worst case approach and extrapolate their data using an exponential due to the increasing cost of finding variational parameters as problem size increases.

As we have already described, the problem of finding optimal values of the QAO algorithm variational parameters becomes increasingly difficult as the depth p increases (this is a problem shared by all variational quantum algorithms). As such, there have been several papers that have attempted to address this problem by exploring improved strategies for setting parameters [60–65]. For example, Zhou et al [66] employed a heuristic strategy for the initialisation of the 2p variational parameters applied to the Max-Cut problem to reduce the number of classical optimisation runs to $O(\mathrm{poly}(p))$. This is in comparison to random initialisation which is identified as requiring $2^{O(p)}$ optimisation runs (see the worst case exponential scaling adopted by Guerreschi and Matsuura described above). Specifically, they develop an optimisation heuristic for a p + 1 depth circuit based on known optimisations for depth p. Using simulations it was shown that the optimisation curves for the values of the p-level variational parameters changed only slightly for p + 1. Hence, it is possible to use results from optimisation over a p-depth circuit as an appropriate initialisation for the variational parameters in a p + 1 depth circuit.
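The idea of reusing depth-p parameters to initialise a depth p + 1 circuit can be sketched as follows. Note that this is a simple illustration using plain linear interpolation of the parameter schedule onto a finer grid; it is an assumption on our part and is not necessarily the exact interpolation rule used in [66]. The function name is hypothetical.

```python
import numpy as np

def extend_schedule(params):
    """Build an initial guess for depth p + 1 from optimised depth-p values
    by linearly interpolating the schedule onto a grid with one more point."""
    params = np.asarray(params, dtype=float)
    p = len(params)
    if p == 1:
        return np.repeat(params, 2)        # nothing to interpolate between
    old_grid = np.linspace(0.0, 1.0, p)
    new_grid = np.linspace(0.0, 1.0, p + 1)
    return np.interp(new_grid, old_grid, params)

# Hypothetical optimised depth-2 parameters used to seed a depth-3 circuit
gammas_p2 = [0.1, 0.3]
gammas_p3_init = extend_schedule(gammas_p2)
```

The extended schedule would then be passed to the classical optimiser as a starting point, in place of a random initialisation.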

Streif and Leib [67] developed a method of determining optimal parameters for the QAO algorithm that aimed to reduce or even remove the need for a classical optimisation loop. They considered applications of the QAO algorithm with constant p to the problem of Max-Cut on 3-regular graphs and 2D spin glasses. They observed that the optimal parameter values tended to cluster around particular points, with decreasing variance for larger graph sizes. The optimal parameters were found to be dependent on the topological features of the problem's graph rather than the specific problem instance or system size. They use tensor network theory to develop 'tree-QAOA' in which the circuit is reduced to a tensor network, associated with a tree subgraph for which contraction can be performed classically. This removes the need for calls to the quantum processing unit (QPU) when determining the variational parameters. Their numerical results for this method on Max-Cut for 3-regular graphs show results comparable to using the Adam classical stochastic gradient descent optimiser.

There have been a number of papers that have proposed modifications to the core QAO algorithm in order to improve performance and/or in an attempt to make the method more amenable to execution on near term devices [68–70]. Guerreschi took a divide and conquer approach to QUBO problems using the QAO algorithm and was able to achieve an average reduction of 42% in estimates of required qubits for 3-regular graphs of up to 600 vertices [71]. Zhou et al developed an approach to solving large Max-Cut problems with fewer qubits than the standard approach [72]. Their strategy is to partition the graph into smaller instances that are optimised with the QAO algorithm in parallel, followed by a merging procedure into a global solution also using the QAO algorithm. Their procedure, dubbed 'QAOA-in-QAOA' (QAOA²), potentially offers a solution to tackling large graph optimisation problems with limited qubit devices. Perhaps surprisingly, they demonstrate that their method is able to perform on par with or even outperform the classical Goemans-Williamson algorithm [73] on graphs with 2000 vertices. Their work appears to suggest that the real power of the QAO algorithm may come when tackling large, dense graphs. However, their results are from simulations only and therefore more work is needed to determine the utility of the method on actual hardware.

While many studies of the QAO algorithm omit noise, there is a growing body of work examining the effects of noise on the QAO algorithm [74–76]. For example, França et al developed a framework for studying quantum optimisation algorithms in the context of noise [77]. The presence of noise in a quantum computation drives the quantum state towards a maximally mixed state which corresponds to a Gibbs state with β = 0 (infinite temperature). They utilise the fact that there are polynomial time classical algorithms for sampling Gibbs states for certain values of β. As a quantum computation progresses in the presence of noise it will eventually cross over from the space of Gibbs states that cannot be efficiently sampled with a classical algorithm to the space of states that can be efficiently sampled. The circuit depth at which this happens will depend on a number of factors such as the degree of noise and the number of 2-qubit gates. Their framework can therefore be used to estimate circuit depths for which there can be no quantum advantage on current hardware. They present such a bound for the QAO algorithm. A recent paper built on this work by examining the QAO algorithm in the context of various SWAP strategies [78]. Note that SWAP gates are required due to the limited connectivity of qubits in most modern QPUs. Two-qubit gates can only be applied to connected qubits; if a circuit requires 2-qubit gates to be applied to non-connected qubits, then a number of SWAP gates must be employed to facilitate this. This leads to the gate count of the actual circuit that is executed on hardware being larger than the gate count of the high level circuit. This work therefore provides more realistic estimates of how noise will impact the QAO algorithm on real quantum hardware. They demonstrate that, at least for dense problems, gate error rates would need to be significantly below fault tolerance thresholds to achieve any advantage.
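The drift towards the maximally mixed state can be illustrated with a deliberately crude noise model. The sketch below assumes a global depolarising channel of strength `error_rate` applied after every circuit layer; this is our own simplification for illustration and is not the model analysed in [77] or [78]. Under this assumption the ideal state survives with probability (1 − error_rate)^n_layers, and the remainder of the state contributes the cost of the maximally mixed state, which is the mean of the cost over all bit strings.

```python
import numpy as np

def noisy_expected_cost(ideal_cost, cost_diagonal, error_rate, n_layers):
    """Expected cost under a global depolarising channel applied after each layer."""
    survival = (1.0 - error_rate) ** n_layers       # weight of the ideal state
    mixed_cost = float(np.mean(cost_diagonal))      # cost of the maximally mixed state
    return survival * ideal_cost + (1.0 - survival) * mixed_cost

# Hypothetical numbers: ideal cost -2 on a problem whose costs average to 0
toy_diagonal = [-4.0, 0.0, 0.0, 4.0]
shallow = noisy_expected_cost(-2.0, toy_diagonal, error_rate=0.05, n_layers=5)
deep = noisy_expected_cost(-2.0, toy_diagonal, error_rate=0.05, n_layers=20)
```

In this toy model, any SWAP gates that increase the number of layers reduce the surviving signal exponentially, which is the qualitative effect discussed above.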

Finally, it is important to discuss the prospects of the QAO algorithm on current or near term hardware [78–80]. One important study that tested the QAO algorithm on real hardware was performed by Harrigan et al using Google's Sycamore QPU [81]. They studied both planar and non-planar graph problems using up to 23 qubits. The planar problems had the advantage of mapping nicely to the topology of the QPU whereas the non-planar problems required compilation using SWAP gates. They found that both planar and non-planar problem instances performed comparably during noiseless simulations. However, when tests were done on quantum hardware, the extra circuit depth incurred by compilation for non-planar problems meant that performance quickly degraded as problem size increased. By contrast, the planar graph problems were found to have performance that was roughly constant regardless of problem size. This is a useful result given that most modern QPUs have limited connectivity, suggesting that only relatively simple problems can be trivially mapped to them. This casts some doubt over whether or not the QAO algorithm will be useful for more complex, interesting problems in the near-term. Further tests investigated the relationship between the QAO algorithm depth p and performance. Interestingly, performance was found to consistently increase as depth increased during the noiseless simulations. However, during the tests on the real device, the extra circuit depth associated with increasing p meant that noise quickly degraded performance. Despite this, the noiseless result still provides empirical evidence to back up an often-made claim about the QAO algorithm, namely that poor performance is due to the limited depth p of the algorithm.

A different study by Streif et al [82] also provides some evidence to support the claim that increasing p can lead to improved performance. They studied the binary paint shop problem (BPSP) using the QAO algorithm. BPSP is a particularly interesting test case because there is no known classical algorithm that can, in polynomial time, approximate the optimal solution to within a constant factor for all problem instances. They ran numerical studies up to a problem size of 100 (corresponding to 100 qubits) and experiments on an IonQ QPU for problem sizes of up to 11 qubits. They study the probability of obtaining an approximate solution that is within a factor α of the optimal solution. For the hardest problem instances and at fixed p, they found that the number of samples required by the QAO algorithm increases exponentially with problem size in order to maintain a constant-factor α approximation. However, allowing p to increase polynomially with system size enables a constant-factor approximation to be maintained efficiently. This is tentative evidence that the QAO algorithm can achieve better performance for BPSP than the best known classical algorithms, provided p is allowed to increase with problem size. However, the authors make it clear that their tests were not performed on all possible problem instances, meaning no general conclusions can be drawn.
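The link between success probability and sample count can be made concrete with a standard probability bound. If a single measurement returns an α-approximate solution with probability q, then N independent samples contain at least one such solution with probability 1 − (1 − q)^N. The sketch below assumes a purely illustrative decay q = 2^(−n/4) with problem size n; this functional form is an assumption chosen for demonstration and is not taken from [82].

```python
import math

def samples_needed(q, confidence=0.99):
    """Smallest N such that 1 - (1 - q)**N >= confidence."""
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - q))

# Illustrative only: per-sample success probability decaying as 2**(-n/4)
for n in (8, 16, 24, 32):
    q = 2.0 ** (-n / 4)
    print(f"n = {n:2d}  q = {q:.5f}  samples = {samples_needed(q)}")
```

When q shrinks exponentially with n, the required number of samples grows roughly as 1/q, which is the exponential sampling cost described above for fixed p.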

4.2. Constrained optimisation and the quantum alternating operator ansatz

One of the limitations of the QAO algorithm is that, in its original form, it can only be applied to unconstrained optimisation problems. However, many interesting combinatorial optimisation problems have constraints that must be satisfied. Hence, there is considerable motivation to move beyond unconstrained problems. One approach to account for constraints is to add penalty terms to the cost Hamiltonian that introduce large cost penalties for solutions that violate constraints [83, 84]. This is often called a soft constraint approach and it allows a constrained problem to be treated as unconstrained but significantly lowers the probability of getting infeasible (constraint violating) solutions. Solutions that violate constraints must then be pruned post facto. For unconstrained problems, the total search space of the problem is equal to the space of feasible (constraint satisfying) solutions. However, constraints reduce the size of the space of feasible solutions relative to the total search space. Soft constraint approaches may therefore become inefficient as they search parts of solution space that do not satisfy the constraints and are therefore infeasible. This is particularly problematic in cases where the infeasible solution space is much larger than the feasible solution space.
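The soft constraint approach can be sketched on a toy problem small enough to enumerate classically. The example below assumes a hypothetical constraint that exactly k of n binary variables are set to one, enforced through a quadratic penalty with weight `lam`; the weights, the constraint and all names are illustrative assumptions rather than a formulation from the cited works.

```python
from itertools import product
from math import comb

def penalised_cost(x, weights, k, lam):
    """Linear objective plus a quadratic penalty for violating sum(x) == k."""
    objective = sum(w * xi for w, xi in zip(weights, x))
    return objective + lam * (sum(x) - k) ** 2

weights = [-3.0, -2.0, -1.0, -4.0]  # hypothetical costs (negative = reward)
k = 2                               # hypothetical constraint: choose exactly 2
n = len(weights)

def best_bitstring(lam):
    """Brute-force minimiser of the penalised cost over all 2**n bitstrings."""
    return min(product((0, 1), repeat=n),
               key=lambda x: penalised_cost(x, weights, k, lam))

too_soft = best_bitstring(lam=0.5)   # penalty too weak: optimum is infeasible
strong = best_bitstring(lam=10.0)    # penalty dominates: optimum is feasible

# Fraction of the full search space that satisfies the constraint
feasible_fraction = comb(n, k) / 2 ** n

print(too_soft, sum(too_soft) == k)
print(strong, sum(strong) == k)
print(feasible_fraction)
```

In this sketch a weak penalty leaves an infeasible bitstring as the global minimum, whereas a strong penalty restores a feasible optimum. Only 6 of the 16 bitstrings are feasible here, and for a Hamming-weight constraint this fraction shrinks further as n grows.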

An alternative approach to introducing penalty terms is to construct the mixer unitary in such a way that only feasible states are explored. The quantum alternating operator ansatz (QAOA) was proposed by Hadfield et al [85, 86] as a generalisation of the QAO algorithm. The goal of this generalisation was, at least in part, to address the issue of optimisation problems with hard constraints. Rather than the cost and mixer unitaries taking the form of time evolution, they are able to take any form. The authors suggest certain principles that should be adhered to when designing mixer unitaries:

- The feasibility of the state is preserved, that is, $U_M(\beta)$ maps feasible states to feasible states.

- For any two feasible basis states $|x\rangle$ and $|y\rangle$, there exist a parameter value $\beta^*$ and a positive integer $r$ such that $|\langle x| U_M^r(\beta^*) |y\rangle| > 0$. That is, for any two feasible solutions there is always some parameterisation that allows us to evolve one state such that we measure the other with non-zero probability. In particular, it should be possible to evolve toward a more optimal feasible solution with the correct parameterisation.

The original quantum alternating operator ansatz paper presented formulations of mixer unitaries for a large number of different problems. Since then there has been considerable further exploration of this topic [87–89]. The hope is that, by designing problem-specific mixer unitaries, it might be possible to reduce resource requirements and improve the quality of results. For example, Fuchs et al [90] formulated a generalisation of the XY-mixer. The family of XY-mixers [85, 91] preserves Hamming weight and is therefore useful for problems whose hard constraints fix the Hamming weight. Examples include the ring mixer, which utilises a Hamiltonian $H_R$,

$$H_R = \sum_{i=1}^{N} \left( X_i X_{i+1} + Y_i Y_{i+1} \right).$$

The ring mixer applies to cases where there are $N$ qubits with periodic (ring) boundary conditions, so that qubit $N+1$ is identified with qubit 1. An alternative is the complete graph mixer Hamiltonian,

$$H_K = \sum_{(i,j) \in E(K_n)} \left( X_i X_j + Y_i Y_j \right),$$

where the sum is over all pairs of qubits on a complete graph $K_n$. The generalisation of Fuchs et al enables the construction of mixers that preserve a feasible subspace specified by an arbitrary set of feasible basis states. The issue with their approach is that the exact construction of mixers scales poorly as the number of basis states increases. This is especially problematic because this number can often increase rapidly with problem size. The authors suggest that heuristic algorithms for approximately constructing mixers may resolve this issue, but this remains an open problem.
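Both mixer design principles can be checked numerically for a small XY-mixer. The following is a minimal sketch using dense matrices, assuming a four-qubit ring and a Hamiltonian of the form $\sum_{(i,j)} (X_i X_j + Y_i Y_j)$ with no overall prefactor; the normalisation, the helper names and the choice $\beta = 0.3$ are assumptions made for illustration.

```python
from functools import reduce
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

def op_on(n, placements):
    """Tensor product with the given single-qubit operators
    (dict: qubit index -> 2x2 matrix) and identity elsewhere."""
    return reduce(np.kron, [placements.get(q, I2) for q in range(n)])

def xy_mixer(n, edges):
    """H = sum over edges (i, j) of X_i X_j + Y_i Y_j."""
    H = np.zeros((2 ** n, 2 ** n), dtype=complex)
    for i, j in edges:
        H += op_on(n, {i: X, j: X}) + op_on(n, {i: Y, j: Y})
    return H

def mixer_unitary(H, beta):
    """exp(-i * beta * H) via eigendecomposition of the Hermitian H."""
    evals, evecs = np.linalg.eigh(H)
    return (evecs * np.exp(-1j * beta * evals)) @ evecs.conj().T

n = 4
ring_edges = [(i, (i + 1) % n) for i in range(n)]
H_ring = xy_mixer(n, ring_edges)

# Hamming-weight operator sum_i (1 - Z_i) / 2; a vanishing commutator
# with the mixer means Hamming weight is conserved.
weight_op = sum((np.eye(2 ** n) - op_on(n, {i: Z})) / 2 for i in range(n))
preserves_weight = np.allclose(H_ring @ weight_op, weight_op @ H_ring)

# Evolve |1000> and inspect where the probability ends up.
start, target = int("1000", 2), int("0010", 2)
U = mixer_unitary(H_ring, beta=0.3)
probs = np.abs(U[:, start]) ** 2
weight_one = [b for b in range(2 ** n) if bin(b).count("1") == 1]
prob_in_feasible = probs[weight_one].sum()
prob_target = probs[target]

print(preserves_weight, round(prob_in_feasible, 6), prob_target > 0)
```

The first check corresponds to feasibility preservation: all probability remains in the Hamming-weight-one subspace. The second corresponds to reachability: the state $|0010\rangle$, on the opposite side of the ring, is reached with non-zero probability.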

Saleem et al [92] developed a modified version of the quantum alternating operator ansatz algorithm called dynamic quantum variational ansatz (DQVA). This approach utilises a 'warm start' in which a polynomial time classical algorithm is used to find local minima that are used as inputs for the quantum algorithm. DQVA also employs dynamic switching on or off of parts of the ansatz in order to reduce resource requirements for near term devices. Their results are promising on simulators but are limited to relatively small graphs of up to 14 nodes (with edge probability between 20% and 80%) due to lack of computational resources. Note that there is a large literature on warm starting quantum optimisation algorithms that is not covered in detail here [93–95].

4.2.1. Physics inspired approaches.

One of the more interesting strategies for designing mixers in the quantum alternating operator ansatz framework is to appeal to physics for inspiration. In this section we discuss several papers that take this approach to design mixers for both physics and non-physics applications. For example, Kremenetski et al [96] applied QAOA to quantum chemistry problems. The authors utilise the full second-quantized electronic Hamiltonian as their cost function. Their mixer unitary is time evolution generated by the Hartree–Fock (HF) Hamiltonian. This mixer naturally preserves feasibility as states can only evolve into other chemically allowed states. The method is tested on P$_2$, CO$_2$, Cl$_2$ and CH$_2$ using classical simulations. The paper is a useful proof of concept of a physics-inspired mixer for a physics application of QAOA.

Another recent paper applied the quantum alternating operator ansatz to the problem of protein folding on a lattice. Building on previous quantum annealing work [97], Fingerhuth et al developed a novel encoding for a lattice protein folding problem on gate-based quantum computers [98]. The method utilises 'one-hot' encoding to encode turns on a lattice in order to simulate protein folding. They also develop several problem-specific mixer Hamiltonians that encode constraints which, for example, ensure the protein does not fold back on itself. The authors find that their results improve significantly when they initialise the system in a superposition over feasible states rather than a superposition over all states. The authors demonstrated a proof of concept of their method on a Rigetti 19Q-Acorn QPU. However, hardware constraints meant that they had to split the problem into two parts, each using only 4 qubits, and had to initialise the state as a uniform superposition. They achieved a 6.05% probability of reaching the ground state in their test on the real quantum device.
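The idea of one-hot encoded turns and a no-fold-back constraint can be sketched classically. The example below assumes a hypothetical two-dimensional square lattice in which each turn is stored in a block of four bits, one per direction; the bit ordering, the function names and the feasibility rules are illustrative assumptions and do not reproduce the encoding of [98].

```python
# Hypothetical mapping from one-hot blocks to lattice moves
DIRECTIONS = {
    (1, 0, 0, 0): (1, 0),    # right
    (0, 1, 0, 0): (-1, 0),   # left
    (0, 0, 1, 0): (0, 1),    # up
    (0, 0, 0, 1): (0, -1),   # down
}

def decode_turns(bits):
    """Split the bit sequence into 4-bit blocks and decode each one.
    Returns None if any block is not one-hot."""
    if len(bits) % 4 != 0:
        raise ValueError("expected a multiple of 4 bits")
    turns = []
    for start in range(0, len(bits), 4):
        block = tuple(bits[start:start + 4])
        if block not in DIRECTIONS:
            return None
        turns.append(DIRECTIONS[block])
    return turns

def fold_is_feasible(bits):
    """Feasible if every block is one-hot and the chain never
    revisits a lattice site (no folding back on itself)."""
    turns = decode_turns(bits)
    if turns is None:
        return False
    position = (0, 0)
    visited = {position}
    for dx, dy in turns:
        position = (position[0] + dx, position[1] + dy)
        if position in visited:
            return False
        visited.add(position)
    return True

right_then_up = [1, 0, 0, 0, 0, 0, 1, 0]
right_then_left = [1, 0, 0, 0, 0, 1, 0, 0]
not_one_hot = [1, 1, 0, 0, 0, 0, 1, 0]
print(fold_is_feasible(right_then_up),
      fold_is_feasible(right_then_left),
      fold_is_feasible(not_one_hot))
```

A problem-specific mixer of the kind described above would restrict the quantum evolution to bitstrings that pass checks of this type, rather than filtering infeasible samples after measurement.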

There has been some work exploring symmetries in the context of QAOA [99]. Recent work by Zhang et al exploits the fact that symmetries and conserved quantities are intimately linked [100]. In this case, the authors exploit gauge invariance in quantum electrodynamics (QED) to formulate a mixer Hamiltonian that naturally conserves flow in the context of network flow problems. Network flow problems satisfy the constraint that flow must originate and terminate only at sources and sinks respectively. A consequence of this constraint is conservation of flow, i.e. the amount flowing into a given node must equal the amount flowing out of it (unless it is a source or sink). The authors draw an analogy between these properties of network flow problems and lattice QED. The analogy is justified by noticing that, when Gauss' law $\nabla \cdot \vec{E} = \rho$ is written in discrete form, it is of the same form as equation (1). The authors therefore equate positive and negative charges to sources and sinks respectively. Electric charge is equivalent to the amount of goods and the electric field $\vec{E}$ represents a flow.

In order to derive their mixer Hamiltonian, Zhang et al write down a minimal gauge invariant lattice QED Hamiltonian. Making use of the analogy outlined above, the authors propose this as a QED-mixer Hamiltonian for network flow problems. The gauge symmetry of the Hamiltonian means that flow is naturally conserved without the need for extra penalty terms. Despite satisfying the constraint of flow conservation, the QED-mixer Hamiltonian can generate solutions that contain isolated closed loops of flow that are unconnected to any source or sink and are therefore not feasible solutions. The authors therefore propose a modified, restricted QED-mixer that solves this problem at the cost of increased circuit complexity.
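The distinction between flow conservation and feasibility can be illustrated with a small classical check. The sketch below assumes a hypothetical directed graph with unit flows, where the net outflow at each node plays the role of charge in a discrete Gauss's law; the graph, the function names and the loop test are illustrative and are not taken from [100].

```python
from collections import deque

def divergence(flow, node):
    """Net outflow at a node: the discrete analogue of div E."""
    out_flow = sum(f for (u, _), f in flow.items() if u == node)
    in_flow = sum(f for (_, v), f in flow.items() if v == node)
    return out_flow - in_flow

def conserves_flow(flow, charge, nodes):
    """Discrete Gauss's law: divergence equals charge at every node
    (+1 at a source, -1 at a sink, 0 elsewhere)."""
    return all(divergence(flow, node) == charge.get(node, 0) for node in nodes)

def has_isolated_loop(flow, charge):
    """True if some flow-carrying edge cannot be reached from any source."""
    sources = [node for node, q in charge.items() if q > 0]
    reached = set(sources)
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        for (u, v), f in flow.items():
            if u == node and f > 0 and v not in reached:
                reached.add(v)
                queue.append(v)
    return any(f > 0 and u not in reached for (u, _), f in flow.items())

nodes = ["s", "a", "t", "c", "d", "e"]
charge = {"s": 1, "t": -1}

# A valid path s -> a -> t, with an unused cycle c -> d -> e -> c
path_only = {("s", "a"): 1, ("a", "t"): 1,
             ("c", "d"): 0, ("d", "e"): 0, ("e", "c"): 0}
# Same path, but the disconnected cycle now carries flow
path_plus_loop = {**path_only, ("c", "d"): 1, ("d", "e"): 1, ("e", "c"): 1}

print(conserves_flow(path_only, charge, nodes), has_isolated_loop(path_only, charge))
print(conserves_flow(path_plus_loop, charge, nodes), has_isolated_loop(path_plus_loop, charge))
```

Both configurations satisfy flow conservation at every node, but the second contains a closed loop that is unconnected to the source or sink. This is the kind of infeasible solution that motivates the restricted QED-mixer.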

Zhang et al show that their mixer performs well compared to the standard X-mixer for a number of relatively simple test cases. Specifically, they study single source shortest path (SSSP) problems on triangle graphs with 2 to 4 triangles and 2-pair edge-disjoint path (EDP) problems on undirected graphs with 3 × 3, 3 × 4 and 4 × 4 grids. All test cases were performed using numerical simulations rather than actual quantum devices. Although the test cases are relatively limited in scope, the paper clearly demonstrates the potential of developing physics-inspired mixers. Considerable research effort relating to QAOA involves the development of improved mixers, and physics-inspired approaches like the ones discussed in this section offer a clear, rigorous path to improvements.

4.2.2. Case studies of constrained optimisation.

Case studies of QAOA with hard constraints on actual quantum devices have been somewhat rare due to hardware limitations in terms of both the number and the fidelity of qubits. However, as hardware continues to improve, it is increasingly possible to test QAOA on real devices. For example, Niroula et al [101] recently performed an insightful study comparing unconstrained (penalty term) QAOA, XY-QAOA and the layer variational quantum eigensolver (L-VQE) algorithm [102]. They studied the problem of extractive summarisation (ES), which is an example of a constrained optimisation problem that has real-world applications. Tests were performed using noiseless and noisy simulators as well as Honeywell's 20-qubit H1-1 trapped-ion quantum device. In their tests, both L-VQE and QAOA account for constraints by including a penalty term, whereas in XY-QAOA the constraints are encoded directly into the mixer. The XY-QAOA method also requires preparation of an initial state that is in a superposition over all feasible states. Experiments with 14 and 20 qubits were performed with 10 repetitions for all methods apart from XY-QAOA, which was only repeated three times due to its high circuit depth. Their results contain several key insights into how best to treat constraints and the impact of noise.

Across all platforms (noiseless/noisy simulator and QPU) the penalty term QAOA had a very poor probability of generating feasible solutions (worse than random in the 14 qubit tests). The authors show that this poor performance arises because introducing a penalty term leads to a trade-off between terms in the Hamiltonian. L-VQE does not suffer from the same issue in these test cases because it is expressive enough to be able to solve the problems exactly. However, this will not be the case in general for L-VQE when applied to larger problem instances, which become more difficult to optimise as the number of parameters increases. These tests also demonstrate that XY-QAOA is highly sensitive to noise. On the noiseless simulator, XY-QAOA delivers feasible solutions with 100% probability, but on the noisy simulator and quantum device the probability drops to less than 40%. The authors suggest that this is likely due to the increased circuit depth and number of two-qubit gates required for XY-QAOA relative to both of the other methods. In particular, the state preparation step for XY-QAOA is non-trivial and already introduces noise.
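The comparison with random sampling can be made concrete by computing the fraction of measured bitstrings that satisfy the constraint. The sketch below assumes a Hamming-weight constraint (exactly k ones in each n-bit string) and uses invented measurement counts; the counts, the values of n and k, and the function names are hypothetical and are not data from [101].

```python
from math import comb

def in_constraint_probability(counts, k):
    """Fraction of measured bitstrings with Hamming weight exactly k."""
    total = sum(counts.values())
    feasible = sum(c for bits, c in counts.items() if bits.count("1") == k)
    return feasible / total

def random_baseline(n, k):
    """Probability that a uniformly random n-bit string has weight k."""
    return comb(n, k) / 2 ** n

# Invented counts for a 4-qubit run with constraint k = 2
counts = {"0011": 30, "0101": 10, "0111": 40, "0000": 20}
measured = in_constraint_probability(counts, k=2)
baseline = random_baseline(n=4, k=2)
print(measured, baseline, measured > baseline)
```

A method whose measured in-constraint probability falls below this baseline is doing worse than uniform random guessing at satisfying the constraint.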

These results appear to support the idea that, at least for QAOA, it is crucial to set up the mixer unitary in such a way that it naturally encodes constraints. However, the increased cost in terms of circuit depth and number of two-qubit gates associated with this approach means that such implementations are more susceptible to noise and are therefore less likely to be useful on current generation devices. The comparison between L-VQE and XY-QAOA in this instance is inconclusive as, for the small problems studied, the two methods perform comparably.