Abstract

This paper presents the design and deployment of the Hydrogen Epoch of Reionization Array (HERA) phase II system. HERA is designed as a staged experiment targeting 21 cm emission measurements of the Epoch of Reionization. First results from the phase I array are published as of early 2022, and deployment of the phase II system is nearing completion. We describe the design of the phase II system and discuss progress on commissioning and future upgrades. As HERA is a designated Square Kilometre Array pathfinder instrument, we also show a number of "case studies" that investigate systematics seen while commissioning the phase II system, which may be of use in the design and operation of future arrays. Common pathologies are likely to manifest in similar ways across instruments, and many of these sources of contamination can be mitigated once the source is identified.

Original content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

The Hydrogen Epoch of Reionization Array (HERA, DeBoer et al. 2017) is a staged experiment targeting precision measurements of the 21 cm Hydrogen line during the formation of the first stars in the Cosmic Dawn and the subsequent Epoch of Reionization (EoR) when the intergalactic medium (IGM) was ionized. HERA targets the redshifted 21 cm hyperfine transition line of neutral hydrogen. In this paper we describe the as-built design of the HERA instrument and discuss lessons learned in the process.

Simulations suggest a wide range of possible models for the formation of the first stars and how emerging sources of radiation might heat and ionize the IGM. Observations of the redshifted neutral hydrogen (HI) emission or absorption signature trace the neutral gas regions through Cosmic Dawn and the EoR, offering a window into the early universe (Furlanetto et al. 2006; Morales & Wyithe 2010; Pritchard & Loeb 2010).

Other experiments searching for the high redshift 21 cm signal include the Precision Array for Probing the EoR (PAPER, Parsons et al. 2010), the Giant Metrewave Radio Telescope (Paciga et al. 2011), the Murchison Widefield Array (Tingay et al. 2013; Wayth et al. 2018), the LOw Frequency ARray (van Haarlem et al. 2013), the Canadian Hydrogen Intensity Mapping Experiment (CHIME, The Chime Collaboration et al. 2014), and the Large-Aperture Experiment to Detect the Dark Age (Price et al. 2018). Upcoming experiments include the Square Kilometre Array (SKA, Mellema et al. 2013), the Canadian Hydrogen Observatory and Radio-transient Detector (Vanderlinde et al. 2019), and the Hydrogen Intensity and Real-time Analysis eXperiment (Saliwanchik et al. 2020).

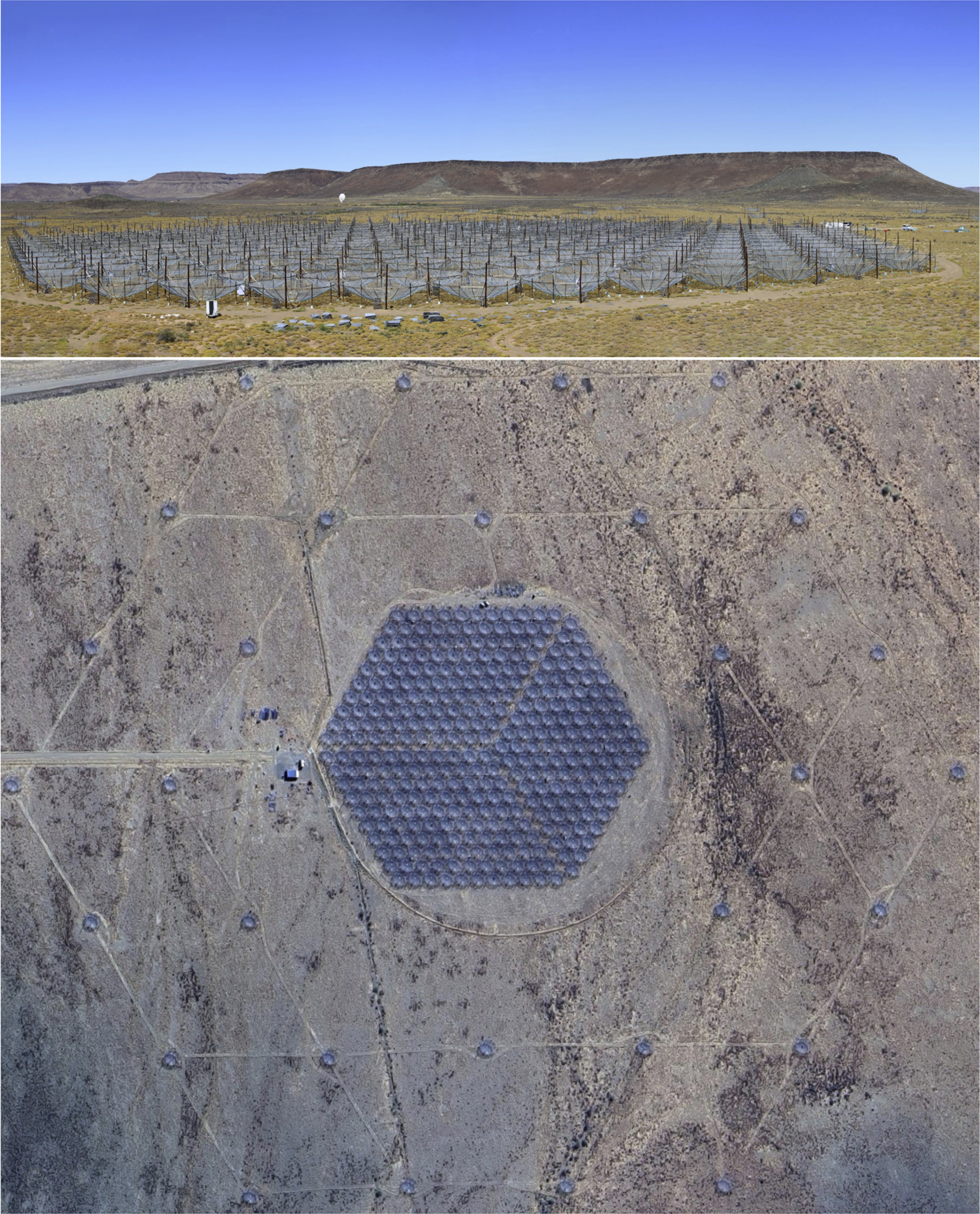

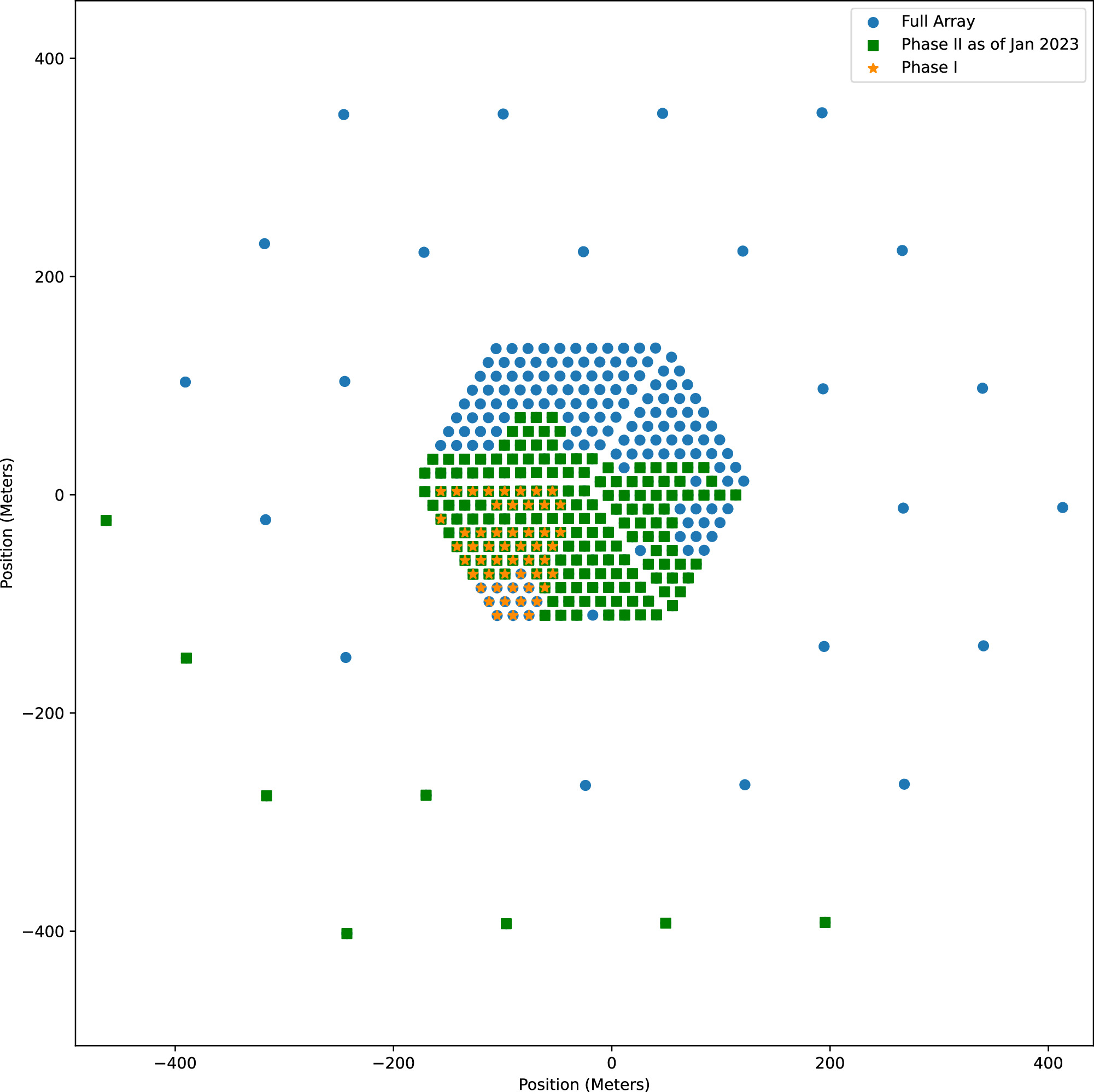

HERA is an interferometer consisting of 350 parabolic 14 m dishes, organized into a 320-antenna core and a 30-antenna outrigger configuration. The constructed core is shown in Figure 1, from a side view (top) and an overhead view (bottom). The two layers of outrigger antennas are also visible in the overhead view. This design is optimized for power spectrum detection: the redundant configuration bolsters sensitivity on the baselines corresponding to reionization and Cosmic Dawn, and the dishes provide a large collecting area. The first stage ("phase I") of HERA reused the dipole feeds, cables, Post Amplifier Modules (PAMs), ROACH2 signal processing boards with 2 × 16 input ADCs, and an xGPU correlator from its predecessor, PAPER, and refit the Front End Modules (FEMs) to be a 75 Ohm version of the future HERA FEM in order to match the impedance of the PAPER system. The phase I array consisted of a limited number of antennas. The "phase II" experiment upgrades the feed to a wideband design and replaces the digital and analog signal chains entirely. Additionally, phase II will encompass the full planned 350 antennas. This paper describes the design and operation of the phase II array.

Figure 1. (top) The fully constructed hexagonal core. A MeerKAT dish can be seen in the background. (bottom) The HERA array from an overhead view. The 3 sectors of the hexagonal array and 2 layers of outriggers can be seen. (Imagery ©2024 Airbus, CNES/Airbus, Maxar Technologies, Map Data ©2024 Google).

2. Science with HERA-350

2.1. Primary Science Justification: From Cosmic Dawn to Reionization

HERA's primary scientific goal is to understand the processes driving the evolution of the 21 cm brightness temperature of the IGM during Cosmic Dawn and reionization. The array observes redshifts associated with Cosmic Dawn and reionization by tracing 21 cm emission from neutral hydrogen. The 21 cm hyperfine transition line is produced when a neutral hydrogen atom undergoes a spin-flip transition and emits a photon at a characteristic wavelength of 21 cm. As the first astronomical objects formed, they emitted radiation that ionized the primordial IGM. By observing the fluctuations of the 21 cm emission over time, HERA provides a tool to study the processes governing the early universe. HERA-350 has been optimized for detecting and characterizing the three-dimensional power spectrum of the cosmological 21 cm signal, where two dimensions map transverse to the line-of-sight and one maps redshift to line-of-sight distance. Figure 2(a) shows a 2D slice from the Muñoz et al. (2022) simulation of the 21 cm Brightness Temperature with respect to the CMB temperature (δ TB). Panel (b) shows a single 1.5 Mpc pixel through redshift, illustrating the variation in brightness temperature for one line of sight, as well as the globally averaged signal. Panel (c) shows the expected foreground temperature for a spot away from the galactic center, which follows a power law. Panel (d) shows power spectra at a few specific redshifts within the simulated lightcone.

Figure 2. (a) A slice of the 21 cm Brightness Temperature (δ TB) EoR Lightcone from the fiducial model produced for Muñoz et al. (2022). (b) A line of sight through the slice showing the variation in δ TB for a single 1.5 Mpc pixel through redshift, and the global average. (c) Nominal foreground temperature for a patch of sky away from galactic center, described by a power law. (d) Power spectra for a few selected redshifts for the fiducial lightcone.

Figure 3 shows the results of a Markov Chain Monte Carlo pipeline for fitting models to emulated multi-redshift 21 cm power spectrum data, reproduced from Kern et al. (2017). The underlying models are based on the excursion-set formalism of Furlanetto et al. (2004) and the 21cmfast code (Mesinger et al. 2011). HERA-350 potentially delivers ≲10%-level constraints on these parameters, which are essentially unconstrained by current observations (Greig & Mesinger 2017), especially those describing the pre-reionization heating epoch (X-ray production efficiency for early star formation (fX) and spectral slope/hardness of the first X-ray sources (αX)). For more detail about the forecast, see Kern et al. (2017). Other HERA forecasts include, for example, Mason et al. (2023) and Greig & Mesinger (2017).

Figure 3. 68% and 95% credible intervals for six key Cosmic Dawn parameters. The marginalized distribution across each model parameter is shown on the diagonal. EoR parameters include: the ionizing efficiency of star-forming galaxies (ζ); mean free path of ionizing photons in the IGM (Rmfp); and minimum virial temperature (i.e., mass) of galaxies contributing to reionization. Parameters covering heating during Cosmic Dawn include: X-ray production efficiency for early star formation (fX); spectral slope/hardness of the first X-ray sources (αX); and minimum frequency of X-rays not absorbed by the ISM. The crosshairs mark the true parameter of the mock observation used in the forecast. Existing constraints on these parameters are weak (Greig & Mesinger 2017); however, HERA will nominally be able to constrain them to within ∼10% with foreground-avoidance techniques. Reproduced from Kern et al. 2017. © 2017. The American Astronomical Society. All rights reserved.

The telescope has had two phases of operation, which differed in practical terms in the redshift range measured: phase I of HERA covered 6 < z < 13 and phase II extends this range to 5 < z < 27, encompassing the redshifts expected of the Cosmic Dawn. The HERA collaboration's first upper limits on the power spectrum of 21 cm fluctuations at redshifts of approximately 8 and 10 from phase I data have already been published (The HERA Collaboration et al. 2022a, 2022b), and the most sensitive limits are mostly consistent with thermal noise over a wide range of k (wavenumber).
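The redshift ranges quoted above map directly onto observing frequencies through the rest frequency of the 21 cm line. The following is a minimal sketch of that conversion; the function names are illustrative and do not come from any HERA software.

```python
# Rest-frame frequency of the neutral hydrogen hyperfine (21 cm) line, in MHz.
NU_21CM_MHZ = 1420.405751768


def observed_freq_mhz(z):
    """Observed frequency (MHz) of 21 cm emission from redshift z."""
    return NU_21CM_MHZ / (1.0 + z)


def redshift_of(freq_mhz):
    """Redshift at which the 21 cm line is observed at freq_mhz."""
    return NU_21CM_MHZ / freq_mhz - 1.0


# Redshift ranges quoted in the text for the two phases.
for label, (z_lo, z_hi) in {"phase I": (6, 13), "phase II": (5, 27)}.items():
    print(f"{label}: z = {z_lo}-{z_hi} -> "
          f"{observed_freq_mhz(z_hi):.1f}-{observed_freq_mhz(z_lo):.1f} MHz")
```

Under this conversion the phase I range corresponds to roughly 101–203 MHz and the phase II range to roughly 51–237 MHz.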

While HERA's results are the most sensitive in the field at the time of this paper, they do not yet report a detection of the EoR. However, these results were extracted from data with a small subset of the array, only 39 of 52 working antennas, and the observations used the phase I signal chain described in DeBoer et al. (2017). Future HERA results will be published with data from the phase II signal chain described in this paper, and will use more antennas.

2.2. Secondary Science Goal: Constraints on Fundamental Physics

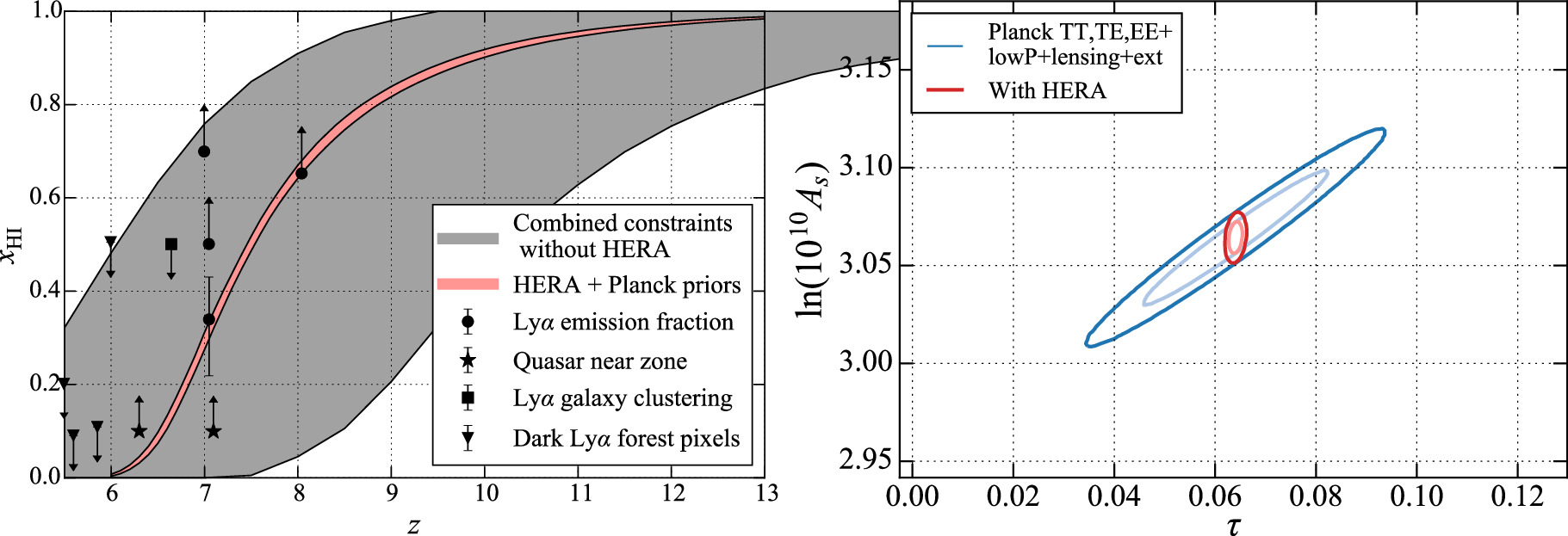

HERA's constraints on reionization history also help constrain fundamental physics. As a direct probe of reionization, HERA observations can remove the optical depth τ as a nuisance parameter in CMB studies. Though the inference of τ from the 21 cm data is model-dependent, such a measurement can still have a significant impact. As shown in Figure 4, knowing τ breaks the internal CMB degeneracy with the amplitude of primordial scalar fluctuations, As, effectively reducing errors on As by a factor of four (Liu et al. 2016a). For more details about forecasts for HERA, see Liu & Parsons (2016).

Figure 4. (left) Ionization history constraints from current high-z observational probes (black points). With Planck priors (Greig & Mesinger 2017), the inferred 95% confidence region (gray) reduces to the red region by adding HERA-350 measurements. (right) HERA-350's ionization history constraints break CMB parameter degeneracies, enabling improved constraints on As, σ8, and the sum of the neutrino masses. For more details about these parameter forecasts for HERA, see Liu & Parsons (2016).

Including HERA constraints on τ also reduces the errors on ∑mν, the sum of the neutrino masses, to ∼12 meV, providing a ∼5σ detection of the neutrino mass even if ∑mν ∼ 58 meV, the current minimum allowed value. The breaking of degeneracies provided by 21 cm measurements becomes even more useful for measuring ∑mν if future cosmological data sets demand more than the current six parameters of ΛCDM.
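The quoted significance can be checked with one line of arithmetic, assuming the forecast error is Gaussian (an assumption made here for illustration, not a statement from the forecast itself):

```latex
\frac{\sum m_\nu}{\sigma\!\left(\sum m_\nu\right)}
  \approx \frac{58~\mathrm{meV}}{12~\mathrm{meV}}
  \approx 4.8
```

which rounds to the ∼5σ figure given above.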

2.3. Secondary Science Goal: Cross Correlations with other Early Universe Probes

In addition to statistical power spectra, deep, foreground-cleaned image cubes, spanning 0.8 × 0.8 × 18 Gpc³ and 5 < z < 27 with Δz/z < 0.05, could provide additional information when combined with current and future data sets. In principle, informative cross-correlations using higher-point statistics are possible with patchy reionization from the kinetic SZ effect (McQuinn et al. 2005). Galaxy populations, characterized by spectroscopic tracers and the intensity mapping of lines such as Lyα, Ly-break, CO, or C ii, provide another cross-correlation opportunity (Beane et al. 2019; McBride & Liu 2023).

On large scales, the cross-power spectra between these emission lines and the 21 cm line are negative because the cross-correlations are driven by fluctuations in ionization. On small scales, all lines trace density fluctuations, giving rise to positive cross-correlations (Gong et al. 2012). Measuring the scale at which the correlations change sign provides robust information on both the ionization state of the IGM and the characteristic spatial scale of ionized bubbles. The former provides independent confirmation of the ionization history constraints provided by HERA alone, while the latter allows one to test—rather than assume—theoretical models of the effects of inhomogeneous recombination in the ionized IGM. Moreover, cross-correlations provide an invaluable opportunity to test one's data for residual foreground signals and other systematic errors.

Though HERA is not optimized for imaging, the HERA team is investigating foreground subtraction and imaging techniques, which will produce data sets that could be used in combination with other high redshift tracers. Foreground subtracted images can be compared with or cross-correlated against other high redshift observables such as the Lyα forest (e.g., with SPHEREx, Cox et al. 2022), CO and C ii lines (e.g., Carilli 2011; Gong et al. 2011), the kinetic Sunyaev–Zeldovich effect (e.g., with the Simons Observatory, La Plante et al. 2020), and even direct observations of high redshift galaxies (e.g., with existing surveys, Pagano & Liu 2021, with JWST, Beardsley et al. 2015, or with the Nancy Grace Roman Space Telescope, La Plante et al. 2023).

3. Approach to Power Spectrum Measurements

The HERA-350 design was optimized to maximize sensitivity to the power spectrum while minimizing the impacts of bright foregrounds.

One of the difficulties faced by EoR experiments is that of strong astronomical foregrounds, which are expected to be up to 6 orders of magnitude brighter than the EoR signal (Santos et al. 2005). These foregrounds are mainly synchrotron and free–free radiation from our own galaxy and other galaxies, which are spectrally smooth phenomena. HERA takes a delay spectrum approach to statistical power spectrum calculation, where the power spectrum is first calculated as the Fourier transform along frequency for each baseline before combining data from many baselines. Smooth spectrum emission (such as that shown in Figure 2 panel (c)) is isolated to a small number of Fourier modes, while the sharp-edged 21 cm background (such as that shown in Figure 2 panels (a) and (b)) persists to higher delay modes. HERA-350, with redundant baselines sensitive to Fourier modes of interest, is designed to take advantage of this analysis method. However, other power spectrum techniques are under investigation as well and will be discussed briefly.
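The confinement of smooth-spectrum emission to low delays can be illustrated with a toy calculation. The sketch below is not the HERA pipeline: the Blackman taper, the power-law index, the 30 ns source delay, and the function name `delay_spectrum` are all assumptions chosen for illustration.

```python
import numpy as np

C_M_PER_S = 299_792_458.0


def delay_spectrum(vis, freqs_hz):
    """Delay transform of one baseline's visibility spectrum.

    Returns (delays in seconds, power |V(tau)|^2), both ordered from
    negative to positive delay.
    """
    n = vis.size
    dnu = freqs_hz[1] - freqs_hz[0]
    taper = np.blackman(n)  # assumed taper; suppresses band-edge sidelobes
    vtau = np.fft.fftshift(np.fft.fft(vis * taper)) * dnu
    delays = np.fft.fftshift(np.fft.fftfreq(n, d=dnu))
    return delays, np.abs(vtau) ** 2


# Toy foreground: one power-law source seen by a 14.6 m baseline.
freqs = np.linspace(100e6, 200e6, 1024, endpoint=False)  # Hz
horizon_delay = 14.6 / C_M_PER_S                         # about 48.7 ns
source_delay = 30e-9                                     # s, inside the horizon
amplitude = (freqs / 150e6) ** -2.5                      # spectrally smooth
vis = amplitude * np.exp(2j * np.pi * freqs * source_delay)

delays, power = delay_spectrum(vis, freqs)
peak_delay = delays[np.argmax(power)]
high_delay = np.abs(delays) > 500e-9
leakage = power[high_delay].max() / power.max()
print(f"peak at {peak_delay * 1e9:.0f} ns, "
      f"relative power beyond 500 ns: {leakage:.1e}")
```

In this idealized case the foreground power sits at the source's geometric delay and falls by many orders of magnitude at high delay. Any spectral structure added by the instrument would broaden that footprint, which is why the signal chain is designed to be spectrally smooth.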

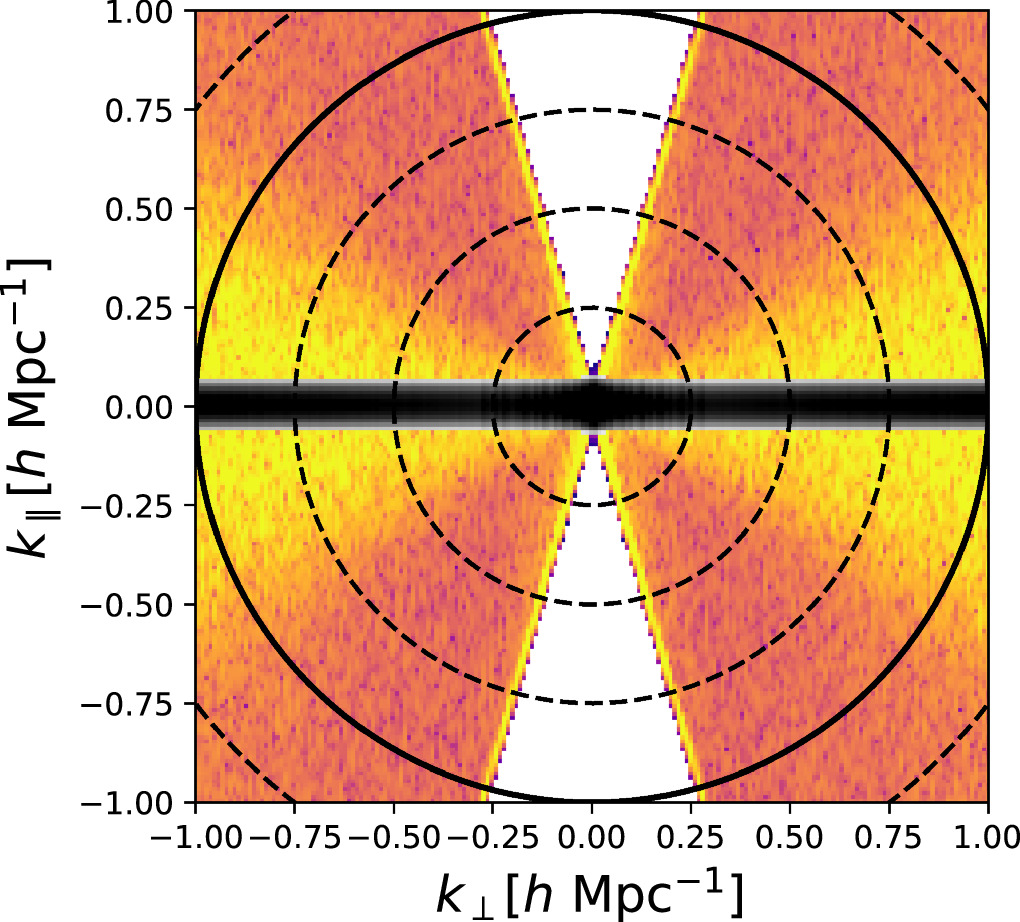

Though 21 cm observations offer a full 3D probe of the early universe, a 2D cylindrical average is a useful space to discuss how array design approaches impact sensitivity in Fourier space. Figure 5 illustrates how foregrounds and background map to line-of-sight k∥ modes, and perpendicular to line-of-sight modes k⊥. The range of k∥ is set by the instrument bandwidth as the lower bound and the spectral resolution as the higher bound, while each baseline type samples a different k⊥ mode. The longest baselines probe the finest angular scales at the upper bound, and the lower bound is determined by the shortest baselines. As HERA is a compact array with dishes touching nearly edge to edge, this bound is equivalent to the 14.6 m dish spacing.
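To give a sense of scale for these bounds, the sketch below converts a baseline length and a delay into k⊥ and k∥ using the standard conversions for a flat ΛCDM cosmology. The cosmological parameters, the choice of z = 8, the 10 MHz sub-band, and all function names are assumptions made for illustration and are not taken from this paper.

```python
import numpy as np

C_KM_S = 299_792.458          # speed of light, km/s
NU_21_HZ = 1420.405751768e6   # rest frequency of the 21 cm line, Hz
H0 = 67.7                     # km/s/Mpc (assumed)
OMEGA_M = 0.31                # assumed; flat LCDM, Omega_Lambda = 1 - OMEGA_M


def hubble(z):
    """H(z) in km/s/Mpc for a flat LCDM universe (radiation neglected)."""
    return H0 * np.sqrt(OMEGA_M * (1.0 + z) ** 3 + (1.0 - OMEGA_M))


def comoving_distance(z, n_steps=4096):
    """Line-of-sight comoving distance in Mpc, by trapezoidal integration."""
    zz = np.linspace(0.0, z, n_steps)
    integrand = C_KM_S / hubble(zz)
    return float(np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(zz)))


def k_perp(baseline_m, z):
    """Transverse wavenumber (1/Mpc) sampled by a baseline of given length."""
    wavelength_m = (C_KM_S * 1e3) * (1.0 + z) / NU_21_HZ
    return 2.0 * np.pi * (baseline_m / wavelength_m) / comoving_distance(z)


def k_par(delay_s, z):
    """Line-of-sight wavenumber (1/Mpc) corresponding to a delay mode."""
    return (2.0 * np.pi * delay_s * NU_21_HZ * hubble(z)
            / (C_KM_S * (1.0 + z) ** 2))


z = 8.0
print(f"k_perp for a 14.6 m baseline: {k_perp(14.6, z):.4f} / Mpc")
# The lowest non-zero delay mode of a 10 MHz sub-band is 1 / (10 MHz) = 100 ns.
print(f"k_par for a 100 ns delay:     {k_par(1.0 / 10e6, z):.4f} / Mpc")
```

With these assumed parameters the shortest HERA spacing samples k⊥ of order 0.005 Mpc⁻¹ at z = 8, while a 10 MHz sub-band sets a minimum k∥ several times larger.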

Figure 5. Smooth-spectrum foregrounds up to 10⁶ times brighter than the EoR signal are intrinsically confined to low-order k∥ modes (black region). The chromatic response of an interferometer scatters power in a characteristic wedge shape (colored region). HERA's foreground avoidance strategy uses smooth instrumental responses to produce a foreground-free window (white) for detecting the EoR.

Foreground avoidance takes advantage of the expected characteristics of the foregrounds and the EoR signal in k-space. Foregrounds are expected to be spectrally smooth and are therefore confined to the lower order k∥ modes. However, this natural sequestering of foregrounds in k-space is disturbed by instrumental effects. Interferometer baselines probe specific angular scales that scale inversely with baseline length; however, these scales also vary with frequency. Because of this inherent chromaticity, foregrounds spread beyond the lowest k∥ modes, forming a wedge shape in 2D k-space (see Figure 5). The extent of this wedge may also be impacted by systematics, such as calibration errors, which can spread foreground power outside of the wedge and into the EoR window (Barry et al. 2019). The wedge and other mode mixing effects are theoretically discussed in Morales et al. (2012), Parsons et al. (2012), Vedantham et al. (2012), Dillon et al. (2013), Hazelton et al. (2013), Thyagarajan et al. (2013), Liu et al. (2014a), Liu et al. (2014b), Thyagarajan et al. (2015), and Liu et al. (2016b). The leading approach in most analyses is to minimize instrumental wedge-causing systematics and then avoid including any areas which do become contaminated.
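The linear boundary of the wedge can be sketched from the standard delay-to-wavenumber conversions. The symbols b (baseline length), τ (delay), θ (zenith angle of a source), D_c(z) (comoving distance), H(z) (Hubble parameter), and ν21 (rest frequency of the line) are introduced here for illustration and follow the usual conventions of the works cited above rather than notation defined in this paper.

```latex
% Conversions for a baseline of length b observing at \nu = \nu_{21}/(1+z):
k_\perp = \frac{2\pi\, b\, \nu_{21}}{c\,(1+z)\, D_c(z)}, \qquad
k_\parallel = \frac{2\pi\, \tau\, \nu_{21}\, H(z)}{c\,(1+z)^2}.

% A source at zenith angle \theta has geometric delay \tau = b \sin\theta / c, so
k_\parallel
  = \sin\theta \; \frac{D_c(z)\, H(z)}{c\,(1+z)} \; k_\perp
  \;\le\; \frac{D_c(z)\, H(z)}{c\,(1+z)} \; k_\perp .
```

The upper bound, reached for emission at the horizon, is a line through the origin of the (k⊥, k∥) plane, so longer baselines carry foreground power to proportionally higher k∥.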

Figure 5 demonstrates the characteristic features in k-space. The foregrounds (black) are intrinsically confined to low k∥ modes; however, instrument chromaticity spreads their power into other modes, creating a characteristic wedge shape (colored region). The white area represents the foreground-free EoR window. Contours indicate the 21 cm power spectrum amplitude (P(k)), which is predicted to peak at the smallest k-modes.

The HERA approach is to discard power spectrum bins containing foregrounds (any colorized pixel in Figure 5). The array design focuses on maximizing sensitivity on the non-foreground contaminated modes with a large collecting area and a highly compact redundant configuration. The redundancy offers a particularly large sensitivity boost (Parsons et al. 2012). Additionally, noise averages down faster when coherently combining visibilities than it does when combining power spectrum measurements. This approach and a consideration of the tradeoffs is discussed in Dillon & Parsons (2016).
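The difference between coherent and incoherent averaging can be seen in a noise-only toy model. The sketch below is an illustration under simplifying assumptions (unit-variance complex Gaussian noise, 64 redundant baselines, no sky signal); practical estimators differ in detail, for example by cross-multiplying independent samples to avoid a noise bias.

```python
import numpy as np

rng = np.random.default_rng(0)
n_baselines = 64   # redundant copies of one baseline type (illustrative)
n_trials = 5000    # independent noise realizations

# Unit-variance complex Gaussian noise, one sample per baseline per trial.
noise = (rng.standard_normal((n_trials, n_baselines))
         + 1j * rng.standard_normal((n_trials, n_baselines))) / np.sqrt(2.0)

# Coherent: average the visibilities first, then square.
p_coherent = np.abs(noise.mean(axis=1)) ** 2
# Incoherent: square each visibility first, then average the powers.
p_incoherent = (np.abs(noise) ** 2).mean(axis=1)

scatter_ratio = p_incoherent.std() / p_coherent.std()
print(f"scatter ratio (incoherent / coherent): {scatter_ratio:.2f}")
print(f"sqrt(N) for comparison:                {np.sqrt(n_baselines):.2f}")
```

In this model the scatter of the coherent estimate falls as 1/N while the incoherent one falls only as 1/√N, so the ratio is close to √N. This is the sense in which a highly redundant layout boosts power spectrum sensitivity.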

The delay spectrum technique is the primary approach for HERA analysis pipelines. It has the advantage of simplicity, since it turns visibilities (the natural measurement basis of an interferometer) directly into power spectra without intermediate steps. However, there are a number of benefits to pursuing imaging based pipelines. Images are interesting in their own right for science, as well as for testing array performance and mapping foregrounds. Rotation and spectral synthesis mean the same point in UV space is measured multiple times, even with different baselines. This improves sensitivity over the delay spectrum approach as we are able to combine information from non-identical baselines. These types of analyses do not require raw visibilities to be stored after map-making, meaning the data are significantly compressed at this stage, which can help reduce data storage requirements. Imaging can also assist with recovery of Fourier modes contaminated by foregrounds. In order to obtain measurements in the contaminated regions of k-space, foreground subtraction is necessary. While a foreground model can be simulated and subtracted as a part of the delay spectrum analysis, it is more naturally part of an imaging analysis where errors in source model amplitude can be tracked to locations on the sky.

For a more in-depth discussion of the techniques and tradeoffs of various EoR analyses, see Morales et al. (2018) and Liu & Shaw (2020).

4. The HERA Telescope

HERA is located at the South African Radio Astronomy Observatory site outside the town of Carnarvon in the Northern Cape of South Africa. This is also the site of the MeerKAT telescope and the future site of the SKA Mid array (Dewdney et al. 2017). HERA was built in two phases. The first phase was built with parts recycled from the PAPER experiment previously operated at the same location. In this configuration the telescope was limited to redshifts 6 < z < 13 and could support at most 75 antennas. In the second phase a feed and signal chain upgrade extended the redshift limits to 5 < z < 27 and the digital system was upgraded to support all 350 antennas. Near-real-time analysis steps such as flagging and calibration have been improved to better support rapid detection and diagnosis of system issues, and have led to faster turn-around of subsequent data analysis. Due to bright and highly variable solar radiation in the radio band, as well as limited cooling capacity, HERA's observations are limited to the nighttime, and therefore these real-time cycles operate on a daily cadence.

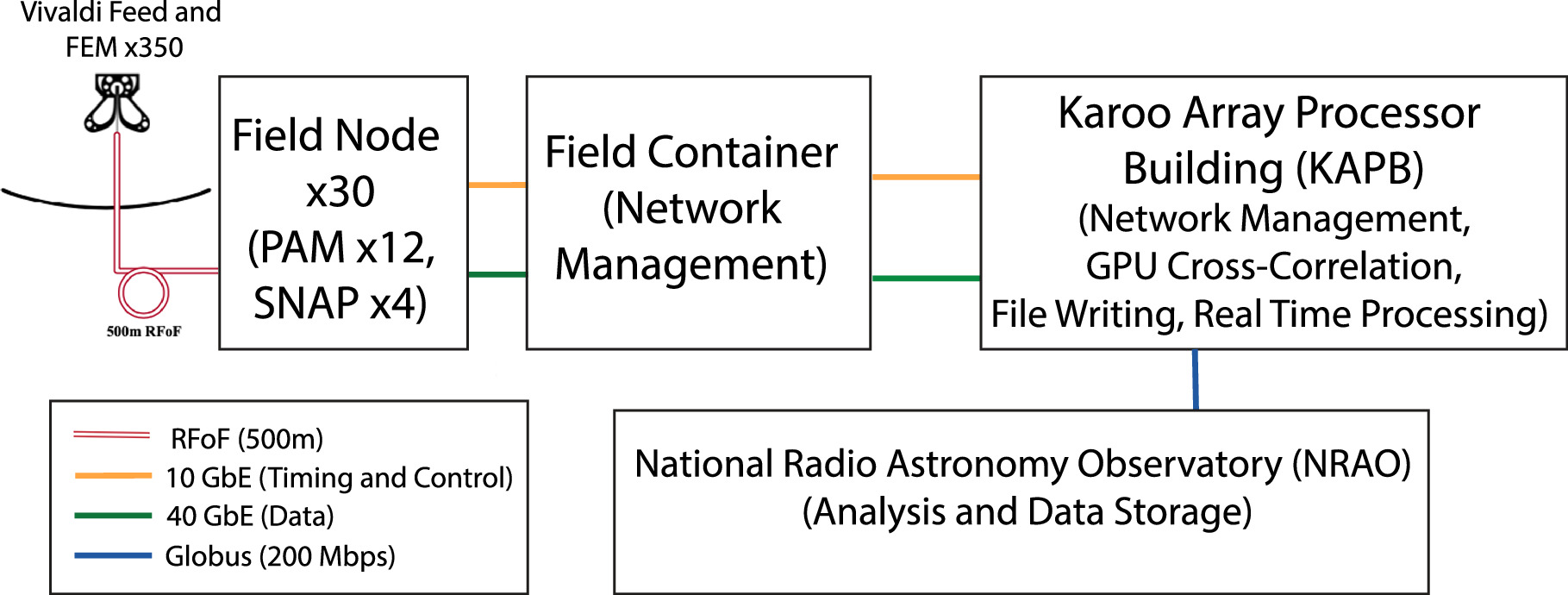

An overview of the full system is included in Figure 6, showing the array support structures and their roles. Each of the 350 array elements is a 14 m mesh dish with a suspended feed that contains an embedded amplifier module. This FEM acts as a balun, amplifier, and RF over Fiber (RFoF) converter. The main RF signal is sent via 500 m of fiber to one of 30 nodes, where it is converted back to radio frequency by a PAM and then digitized by a Smart Network ADC Processor (SNAP). The 500 m length was chosen in order to place reflections well outside of delays corresponding to cosmological modes of interest. The SNAP boards output packets of channelized spectra, which are routed to the GPU correlators for cross-correlation in the nearby Karoo Array Processor Building (KAPB), which also hosts the cluster for real-time processing (RTP) of first round flagging and calibration. Once the RTP has finished and data products are written, they are transferred off site to the National Radio Astronomy Observatory in Socorro, NM for analysis and long term storage.
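The effect of the fiber length on reflection delays can be estimated with a short calculation. This is a rough sketch only: the group index of 1.47 is a typical value for silica fiber assumed here, and the model of a signal reflected once at each end of the link is an illustrative assumption rather than a mechanism described in the paper.

```python
C_M_PER_S = 299_792_458.0
FIBER_INDEX = 1.47  # assumed group index of silica fiber; not from the paper


def round_trip_delay_s(length_m, index=FIBER_INDEX):
    """Extra delay of a signal reflected once at each end of the link."""
    return 2.0 * length_m * index / C_M_PER_S


def ripple_period_hz(delay_s):
    """Frequency period of the spectral ripple produced by a delayed copy."""
    return 1.0 / delay_s


tau = round_trip_delay_s(500.0)
print(f"reflection delay: {tau * 1e6:.2f} us")
print(f"ripple period:    {ripple_period_hz(tau) / 1e3:.0f} kHz")
```

Under these assumptions a reflection along the 500 m link appears at a delay of about 4.9 μs, roughly a hundred times the 49 ns light travel time across a 14.6 m baseline.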

Figure 6. HERA's signal path from antenna to data archive. Front end modules (FEMs) embedded in the feed convert RF signals to optical signals and send them to the Post Amplifier Modules (PAMs) over a 500 m RF over Fiber (RFoF) connection. Signals are received in one of 30 nodes distributed about the array. Smart Network ADC Processors (SNAPs) digitize, Fourier transform, and output packets over optical fiber, which are sent over a 10 km fiber bundle to the Karoo Array Processor Building (KAPB). The KAPB hosts the GPU correlator, which cross multiplies, averages to 100 ms, and sends products to a data catcher for further averaging and writing to disk. The Real-Time Processor (RTP) calibrates and flags data on a nightly cadence. Final products are transferred to the National Radio Astronomy Observatory (NRAO).

4.1. Array Layout

The HERA array layout is shown in Figure 7, with the phase I sub-array and the phase II array as of 2023 January highlighted. The array is arranged using hex packing, which allows neighboring dishes to share supports. The stationary, zenith-pointing dishes are arranged in a compact hexagonal core, with dishes nearly touching edge to edge and 14.6 m center-to-center spacing. This densely packed redundant layout maximizes sensitivity on a small number of modes, especially short baselines, as desired based on the approaches laid out in Section 3, though it also makes the array less suited to tomographic mapping. While HERA primarily targets delay spectrum based approaches, alternative imaging-based power spectrum pipelines are under development, so a few design choices were made to improve imaging capabilities: two rings of outriggers were added to give longer baselines, and the 320 core antennas were split into three sub-sections. Each sub-section is offset with respect to the others by 1/3 of a grid spacing to fill in the repeating gaps in the uv plane.
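The degree of redundancy in a hex-packed layout can be counted directly. The sketch below builds a small, idealized filled hexagon with 14.6 m spacing and groups antenna pairs by baseline vector. It deliberately ignores HERA's split core and outriggers, and the function names are illustrative.

```python
import math
from collections import Counter


def hex_axial_coords(side):
    """Axial (q, r) lattice indices of a filled hexagon with `side` antennas per edge."""
    m = side - 1
    return [(q, r) for q in range(-m, m + 1) for r in range(-m, m + 1)
            if abs(q + r) <= m]


def to_meters(q, r, spacing=14.6):
    """East/north position (m) of lattice site (q, r) on a hexagonal grid."""
    return spacing * (q + 0.5 * r), spacing * (math.sqrt(3.0) / 2.0) * r


def redundant_groups(coords):
    """Count antenna pairs per unique baseline vector (b and -b folded together)."""
    groups = Counter()
    for i, (q1, r1) in enumerate(coords):
        for q2, r2 in coords[i + 1:]:
            d = (q2 - q1, r2 - r1)
            if d < (0, 0):  # fold b and -b into one group
                d = (-d[0], -d[1])
            groups[d] += 1
    return groups


coords = hex_axial_coords(side=4)
groups = redundant_groups(coords)
n_pairs = sum(groups.values())
shortest = min(math.hypot(*to_meters(dq, dr)) for dq, dr in groups)
print(f"{len(coords)} antennas, {n_pairs} baselines, "
      f"{len(groups)} unique baseline types, shortest {shortest:.1f} m")
```

For this 37-element example, 666 antenna pairs collapse onto 63 distinct baseline vectors, and the shortest of them is measured by 30 pairs. Concentrating many measurements on few Fourier modes is what favors power spectrum sensitivity over uv coverage.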

Figure 7. The HERA array layout at a few stages of construction. The phase I sub-array, and the phase II array as of 2023 January are highlighted. The full array configuration is underplotted.

Another benefit of regularly spaced antennas is the possibility of redundant calibration (Wieringa 1992; Liu et al. 2010). We can take advantage of the fact that grids of antennas make many measurements of the same sky fringe. This situation significantly reduces the number of free parameters to be constrained by a sky model. Despite the split core configuration and outriggers, HERA's full array can still be calibrated redundantly, leaving only four free parameters per frequency and polarization (one parameter each for amplitude, overall phase, East-West tip/tilt, and North-South tip/tilt), which must be solved for with the method described in Dillon et al. (2018). In practice it has been found that sky model errors can still introduce unwanted chromaticity (Barry et al. 2016; Ewall-Wice et al. 2017; Byrne et al. 2019), but this can be partially mitigated by down-weighting long baselines (Ewall-Wice et al. 2017; Orosz et al. 2019), gain smoothing (Kern et al. 2020a), or an approach which combines redundant and non-redundant methods (Byrne et al. 2021). HERA's outriggers and split-core configuration also make it particularly suitable for calibration using spectral redundancy, which can dramatically reduce the number of free parameters after redundant baseline calibration from a few per frequency to just a handful over the whole band (Cox et al. 2023).
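The count of four free parameters can be reproduced for an idealized array by examining the log-linearized redundant-calibration equations. The sketch below is not HERA's calibration code: it uses a small filled hexagon without the split core or outriggers, and the phase sign convention and function names are assumptions made for illustration.

```python
import numpy as np


def hex_axial_coords(side):
    """Axial (q, r) lattice indices of a filled hexagon with `side` antennas per edge."""
    m = side - 1
    return [(q, r) for q in range(-m, m + 1) for r in range(-m, m + 1)
            if abs(q + r) <= m]


def logcal_matrices(coords):
    """Design matrices of the log-linearized redundant-calibration equations.

    Amplitude rows encode  ln|V_ij| = eta_i + eta_j + ln|y_b|
    Phase rows encode      arg V_ij = phi_j - phi_i + arg y_b  (convention assumed)
    with one unknown y_b per unique baseline type b.
    """
    coords = sorted(coords)  # i < j then always gives the same sign of b
    pairs, types = [], {}
    for i, (q1, r1) in enumerate(coords):
        for j in range(i + 1, len(coords)):
            d = (coords[j][0] - q1, coords[j][1] - r1)
            pairs.append((i, j, types.setdefault(d, len(types))))
    n_ant, n_types = len(coords), len(types)
    amp = np.zeros((len(pairs), n_ant + n_types))
    phs = np.zeros_like(amp)
    for row, (i, j, b) in enumerate(pairs):
        amp[row, [i, j, n_ant + b]] = 1.0
        phs[row, [i, j, n_ant + b]] = [-1.0, 1.0, 1.0]
    return amp, phs


def nullity(matrix):
    """Number of parameter combinations the equations cannot constrain."""
    return matrix.shape[1] - np.linalg.matrix_rank(matrix)


amp, phs = logcal_matrices(hex_axial_coords(side=3))
print("amplitude degeneracies:", nullity(amp))
print("phase degeneracies:    ", nullity(phs))
```

The single unconstrained amplitude combination is the overall gain scale, and the three unconstrained phase combinations are the overall phase and the two tip/tilt gradients. Together they give the four parameters that must be fixed with reference to the sky.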

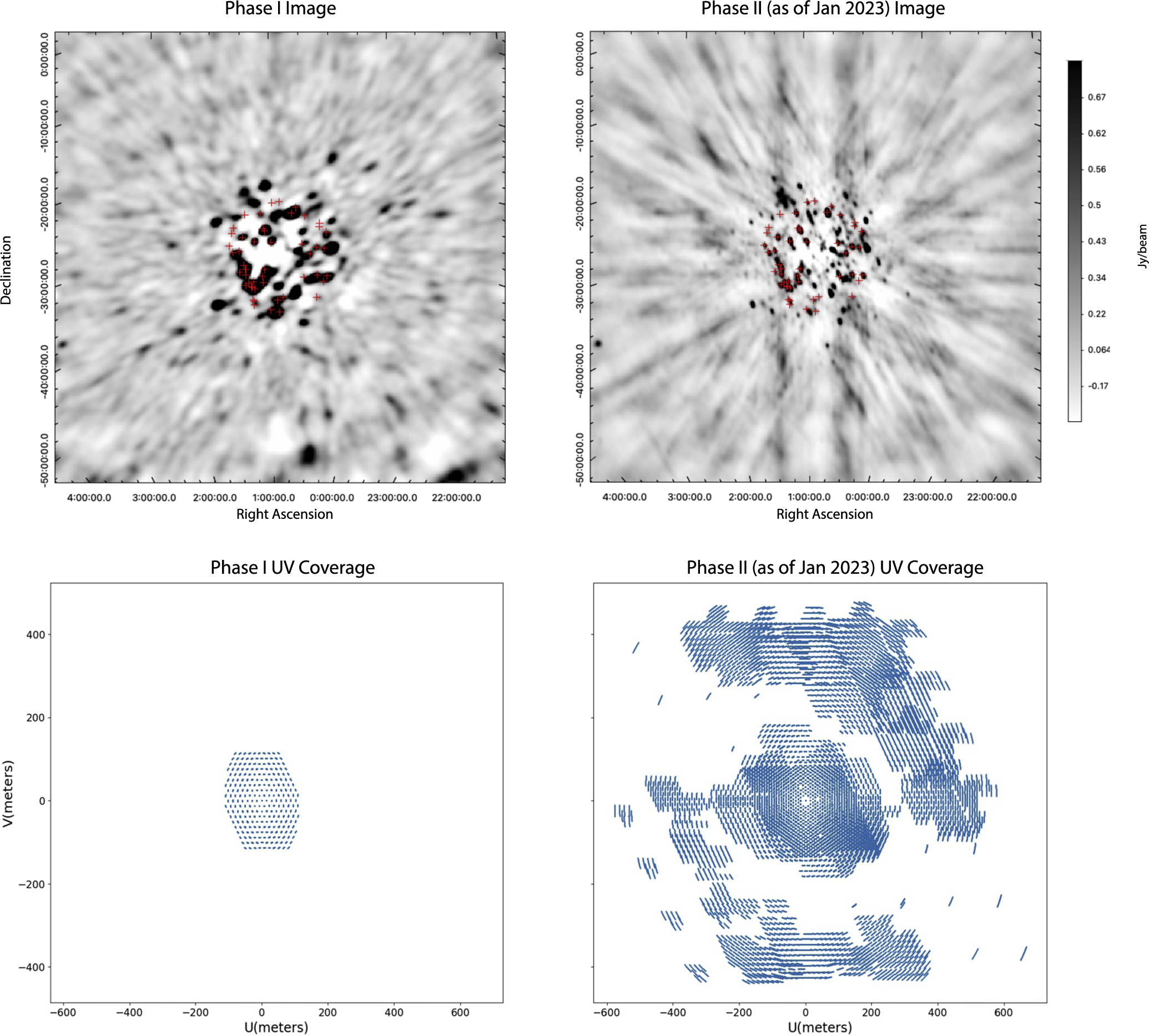

Figure 8 shows a comparison of the sky imaged with the phase I and phase II commissioning arrays, as well as the UV plane coverage for the images. It is important to note that the phase II data shown here is not from the full expected array, and contains only the antennas built as of 2023 January. The antennas used for the images are highlighted as the respective sub-arrays in Figure 7. The phase II array data used the new signal chains and contained a subset of the array with 183 antennas, as compared to 52 antennas with the old signal chains in the phase I data. A subset of the GLEAM catalog (Hurley-Walker et al. 2017) is plotted over the image to cross-reference objects. All plotted GLEAM sources have an obvious counterpart in the HERA images, although they may appear faint for a number of reasons (e.g., the HERA image is wideband and the source may have less flux at other wavelengths, or the source is not well localized). The images cover the same field and frequency range (100–200 MHz), and both use roughly 20 minutes of data. The images were made with the CASA package (CASA Team et al. 2022). The phase II array image shows markedly improved resolution, seen in the better localization of point sources as well as the increase in the number of resolved sources. This can be attributed to the much broader UV plane coverage in the phase II data, even with only a portion of the phase II antennas built out.

Figure 8. (top) Synthesis images demonstrating the HERA configuration and overall performance. (top, left) Phase I with 52 antennas and (top, right) Phase II with 183 antennas, as of 2023 January. The red crosses indicate the 50 brightest sources in peak flux at 122 MHz in the GLEAM catalog (Hurley-Walker et al. 2017) within 7° of the image center. Sources that appear bright in the image but are not among the brightest 122 MHz GLEAM catalog sources are likely blended sources. Roughly 20 minutes of data and a frequency range of 100–200 MHz were used to make the images. The phase I image shows clearly less well localized point sources, as well as fewer sources in total. The Phase II image shows improved resolution. (bottom) Corresponding UV coverage for the images in the top panel. (bottom, left) Phase I with 52 antennas and (bottom, right) Phase II with 183 antennas. The Phase II image covers a broader UV range, and contains a denser UV plane.

4.2. Dish and Construction

The HERA dish surface is a 5 mm wire mesh optimized for reflecting wavelengths within HERA's bandwidth. It is supported by radial arms made from PVC pipe radiating from a concrete hub at dish center. The radials are supported at one point by vertical spars which are also tied back to the hub. Once weighted by mesh, the spars form a faceted parabolic surface. One panel of the mesh contains a small removable door and bridge to allow for access into the dish. A detailed description of the dish construction and design can be found in DeBoer et al. (2017).

The feed, described in the next section, is suspended from 3 Aramid fiber ropes strung via telephone poles. Three antennas share a pole to balance forces and reduce the total number of required supports. On the edges of the array, perimeter poles are stayed with guy lines. The feeds are lofted via winches, and high tension springs are inserted onto the ropes as a safety measure. Originally these springs were placed near the feed, but it was noted that this caused undesired resonances in the passband of the antenna, and they were moved near the poles. An isolated view of an outrigger antenna is included in Figure 9 to more clearly show feed, rope, and pole construction. Each pole also has a winch per antenna to raise and lower feeds for maintenance, and to allow trimming of feed heights across the array. This winch is shown in Figure 10.

Figure 9. A single HERA dish. The Vivaldi feed is suspended by non-conducting, marine-grade Kevlar rope from poles on the perimeter of the dish. For servicing, the feed is lowered by hand winch and reached via a removable reflector panel.

Figure 10. The feeds are raised and lowered with a hand winch. Each pole serves 3 surrounding antennas, therefore each pole also supports 3 winches, except for edge cases such as the one in this figure. Also visible is the extension spring which allows safe adjustment of tension force. Originally these were located at the feed attachment point, but were observed to introduce notable distortions in antenna response.

4.3. Phase II Feed

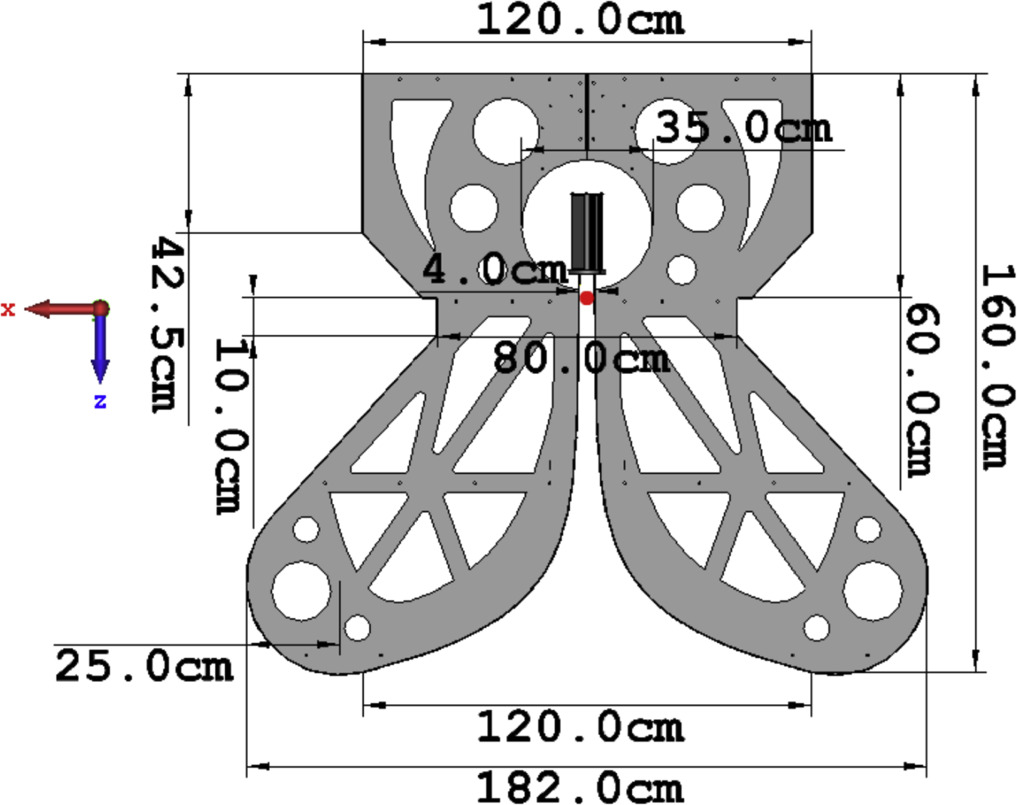

The phase I iteration of HERA reused the dipoles from the PAPER experiment suspended over the 14 m diameter zenith pointing dish. This feed had a frequency range of 100–200 MHz. Phase II replaced this feed with a Vivaldi type antenna which extends the useful science bandpass down to include the Cosmic Dawn band at 50–100 MHz (de Lera Acedo et al. 2018; Fagnoni et al. 2021), and the later stages of reionization above 200 MHz. The full range of the Vivaldi feed spans 50–250 MHz. The dimensions of the Vivaldi blade are shown in Figure 11.

Figure 11. Dimensions of a Vivaldi blade, © 2021 IEEE. Reprinted, with permission, from Fagnoni et al. 2021.

Download figure:

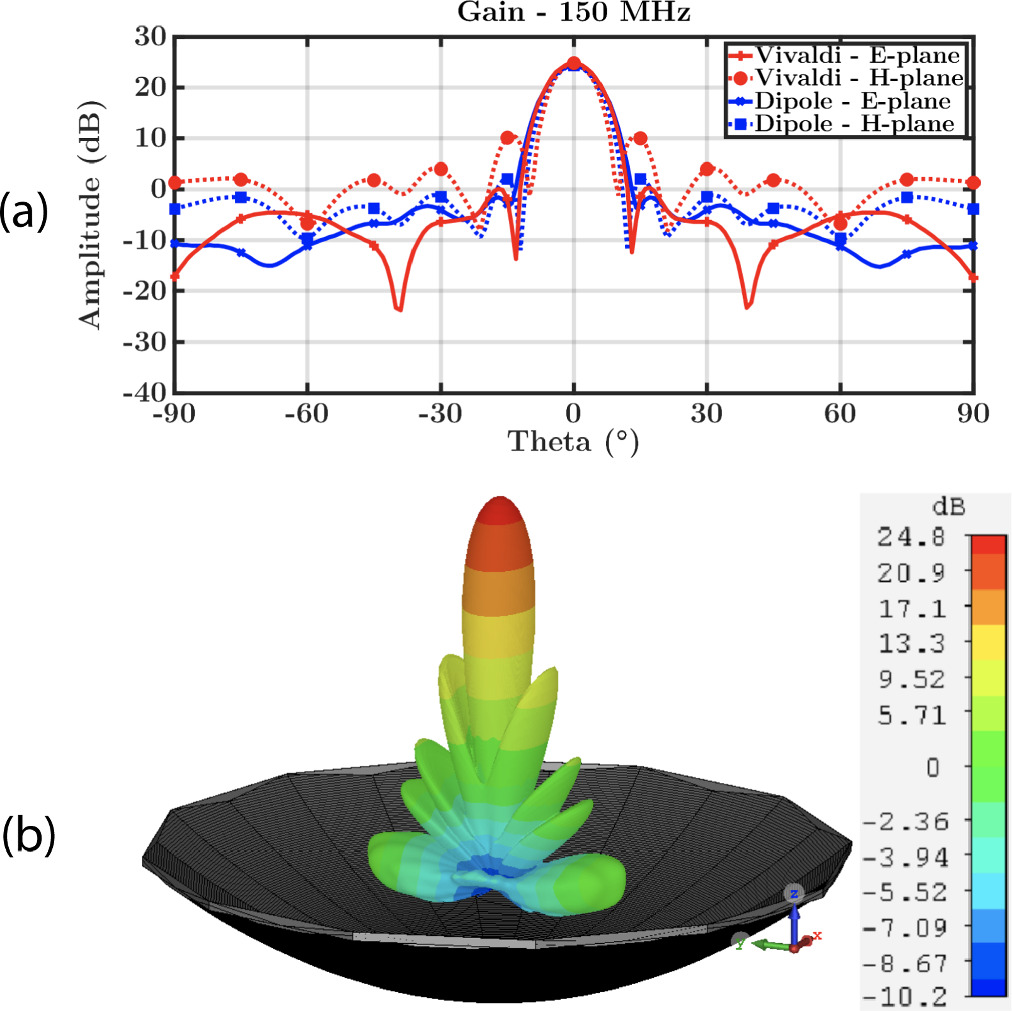

Standard image High-resolution imageThe system uses a feed that does not require a backplane, which radiates toward the dish with minimal backwards gain. The shape and matching network are optimized to match between the feed output and the receiver. The Vivaldi feed and its position in the dish were designed to minimize unwanted spectral structure. For example, reflections between dish and feed can cause ripples at frequency scales corresponding to target line-of-sight Fourier modes. The phase II feed has a lower reflection coefficient leading to lower standing waves within the dish. The gain of the vivaldi antenna and dish in the E and H-planes at 150 MHz as compared to the dipole and dish system is shown in Figure 12. By removing the sharp cutoffs at 100 and 225 MHz the bandwidth has increased substantially while the spectral variation is kept to a similar level. The 3D beam pattern of the X-polarization of the dish and feed at 150 MHz is also shown in Figure 12.

Figure 12. (a) Cut of the antenna gain in the E and H-planes from electromagnetic simulations at 150 MHz. (b) 3D beam for the X-polarization at 150 MHz. © 2021 IEEE. Reprinted, with permission, from Fagnoni et al. 2021.

Numerical electromagnetic simulations showed the gain spectrum to be very sensitive to the positioning of the feed. This was subsequently confirmed experimentally by varying feed height and observing changes in autocorrelations. Kim et al. (2022) found in simulation that feed positioning should be accurate to the 1 cm level in position and 1° in tilt to minimize foreground leakage in power spectra. This leakage is caused by calibration errors, as the feed positioning offset impacts array redundancy. Kim et al. (2023) showed that with mitigation techniques, this requirement could be relaxed to 2 cm in position and 2° in tilt. To control the feed position, a levelling jig with a laser plumb bob was devised. When placed on the dish hub, the levelling and distance lasers point at a set of targets on the feed seen in Figure 13. With this system the feed can be positioned to better than 2 cm in X and Y, and 1 cm in Z. The tilt is measured by an IMU embedded within the analog module that is placed in the feed (discussed in Section 4.4), and remains nominally below 2° with some excursions up to 3°.

Figure 13. A view of the underside of a Vivaldi feed, showing the targets for the leveling jig. This jig ensures that the feed is positioned correctly using a laser plumb bob. The cable wrapping coming from the feed center extends to the bottom of the image.

4.4. Analog Signal Path and Node

The design goal of the signal chain is to minimize spectral structure as well as minimize response to out of band signals. Phase II replaced the analog signal chains with upgraded FEMs and PAMs, and changed from 75 Ohm cables to optical fiber. The system is further described in Razavi-Ghods et al. (2017). The FEM is mounted on the Vivaldi feeds, and covered in a weather protective cover. This mounting can be seen in Figure 14, in a dish that has had its feed lowered for maintenance. The FEM takes in balanced, dual polarization signals from the feed, amplifies them and converts them to single ended 50 Ohm impedance.

Figure 14. A lowered Vivaldi feed sitting in a 14 m dish. The Front End Module (FEM) which acts as balun, LNA, and RFoF converter is the white rectangle mounted in the circular void within the top half of the feed. It is covered in a weather resistant bag to reduce environmental degradation.

In addition to filtering and amplification components, the FEM includes an integrating Dicke switching radiometer (Dicke 1946). Between the first LNA and the second stage amplifier is a switch which can select a 50 Ohm load instead of the antenna. This load circuit also has a calibrated noise source that can be enabled. By measuring sky, load, and noise parameters in the field, the downstream portion of the signal chain can be absolutely calibrated. Integration of this calibration scheme is a work in progress. Currently, the load and noise settings are used often for commissioning and array quality assurance.
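As an illustration of how the load and noise-source states could calibrate the part of the chain downstream of the switch, the sketch below applies a standard two-point solution. It assumes the measured power is linear in input temperature, P = g (T_in + T_rx), and that the load temperature and the excess temperature of the noise source are known. The function names and all numerical values are hypothetical and are not taken from the HERA system.

```python
def two_point_calibration(p_load, p_noise, t_load, t_excess):
    """Solve P = g * (T_in + T_rx) for the gain g and receiver temperature T_rx.

    p_load   : power measured with the switch on the load (arbitrary units)
    p_noise  : power measured with the load plus the noise source enabled
    t_load   : physical temperature of the load (K)
    t_excess : excess temperature added by the noise source (K)
    """
    gain = (p_noise - p_load) / t_excess
    t_rx = p_load / gain - t_load
    return gain, t_rx


def calibrate_sky(p_sky, gain, t_rx):
    """Convert a power measured on the antenna into an input temperature (K)."""
    return p_sky / gain - t_rx


# Hypothetical numbers chosen only to exercise the arithmetic.
true_gain, true_t_rx = 2.0e-3, 90.0
t_load, t_excess = 300.0, 1000.0
p_load = true_gain * (t_load + true_t_rx)
p_noise = true_gain * (t_load + t_excess + true_t_rx)
p_sky = true_gain * (290.0 + true_t_rx)

gain, t_rx = two_point_calibration(p_load, p_noise, t_load, t_excess)
t_sky = calibrate_sky(p_sky, gain, t_rx)
```

In this idealized case the solution recovers the input gain and temperatures exactly; a real measurement would also need to account for noise, drift between switch states, and impedance mismatch.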

The FEM also contains the initial 180° phase switch of a crosstalk mitigation system, based on the Walsh switching technique (Thompson et al. 2001). This system is currently being commissioned and has not been in use for previous seasons of HERA observing. In order to reduce spurious signals introduced along the analog signal chain, orthogonal Walsh functions are generated downstream on the signal processing boards and fed to the phase switch in the FEM over differential lines to enable synchronous switching of the signal chain phase. The phase of each polarization in the FEM can be controlled independently.
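The principle behind Walsh switching can be sketched in a few lines: each signal path is multiplied by its own ±1 sequence, and because the sequences are mutually orthogonal, a signal coupled in from another path averages to zero after demodulation. The example below builds the sequences from a Sylvester Hadamard matrix (rows in natural rather than sequency order). The sequence length, signal levels, and coupling coefficient are illustrative assumptions, not HERA settings.

```python
def hadamard(order):
    """Return a 2**order x 2**order Hadamard matrix (Sylvester construction)."""
    h = [[1]]
    for _ in range(order):
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


walsh = hadamard(3)  # 8 mutually orthogonal +/-1 sequences of length 8

# Two antennas with constant sky signals, each modulated by its own sequence.
sky_a, sky_b = 1.0, 2.0
seq_a, seq_b = walsh[1], walsh[2]

# Crosstalk after the phase switch couples a fraction of path B into path A.
coupling = 0.1
line_a = [sky_a * sa + coupling * sky_b * sb for sa, sb in zip(seq_a, seq_b)]

# Demodulating with seq_a and averaging over one full sequence removes
# the coupled term because seq_a and seq_b are orthogonal.
recovered_a = dot(line_a, seq_a) / len(seq_a)
```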

Several digital devices in the FEM are included to provide useful diagnostic telemetry. These include sensors for voltage, current, magnetic heading, barometric altitude, and tilt. Control signals for all of these devices are sent via an I2C bus which is extended to long distances via a differential CAN bus transceiver over twisted pair lines in Cat7 ethernet cables. Wire pairs in this cable also transport the phase switching signal.

A notable change in the phase II design is the replacement of 35 m coaxial cable for the connection between the FEM and PAM with a 500 m RFoF system. In order to minimize signal loss, the original coaxial cables were designed to be as short as possible while still allowing for multiple signals to be directed to a central node. However, the reflections in the coaxial cable introduced chromaticity at cosmologically interesting modes, and they were found to leak RF, which generated unwanted correlation between antennas. Therefore, the coaxial cables were passed over in favor of RFoF. A 500 m length was chosen in order to place reflections well outside of delays of interest. With signals propagating at optical wavelengths there is no risk of unwanted re-radiation or mutual coupling within the cable runs.
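To see why the longer run moves reflections out of the delays of interest, the following rough calculation compares the round-trip delay of a signal reflected once at each end of the two cable types. The coaxial velocity factor (0.8) and fiber group index (1.47) are typical values assumed here for illustration; they are not measured HERA parameters.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def round_trip_delay_ns(length_m, velocity_factor):
    """Extra delay of a signal that traverses the cable two additional times."""
    return 2.0 * length_m / (velocity_factor * C) * 1e9


# Assumed propagation speeds: 0.8c in coax, c/1.47 in optical fiber.
coax_delay_ns = round_trip_delay_ns(35.0, 0.8)
fiber_delay_ns = round_trip_delay_ns(500.0, 1.0 / 1.47)
```

Under these assumptions the coaxial reflection lands near 290 ns, while the fiber reflection lands near 4900 ns, more than an order of magnitude further out in delay.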

Cabling to the feed includes a pair of signal fibers, a coaxial power cable, and a Cat7 ethernet cable carrying digital monitoring and switching. Electromagnetic simulations suggested the bundle should be sheathed in a grounded cover, but that contact of sheath with feed could result in additional chromatic structure. The solution is an additional layer of weather wrapping and a carefully laced route through the antenna. Figure 13 shows wrapping for the cables extending to the bottom of the dish.

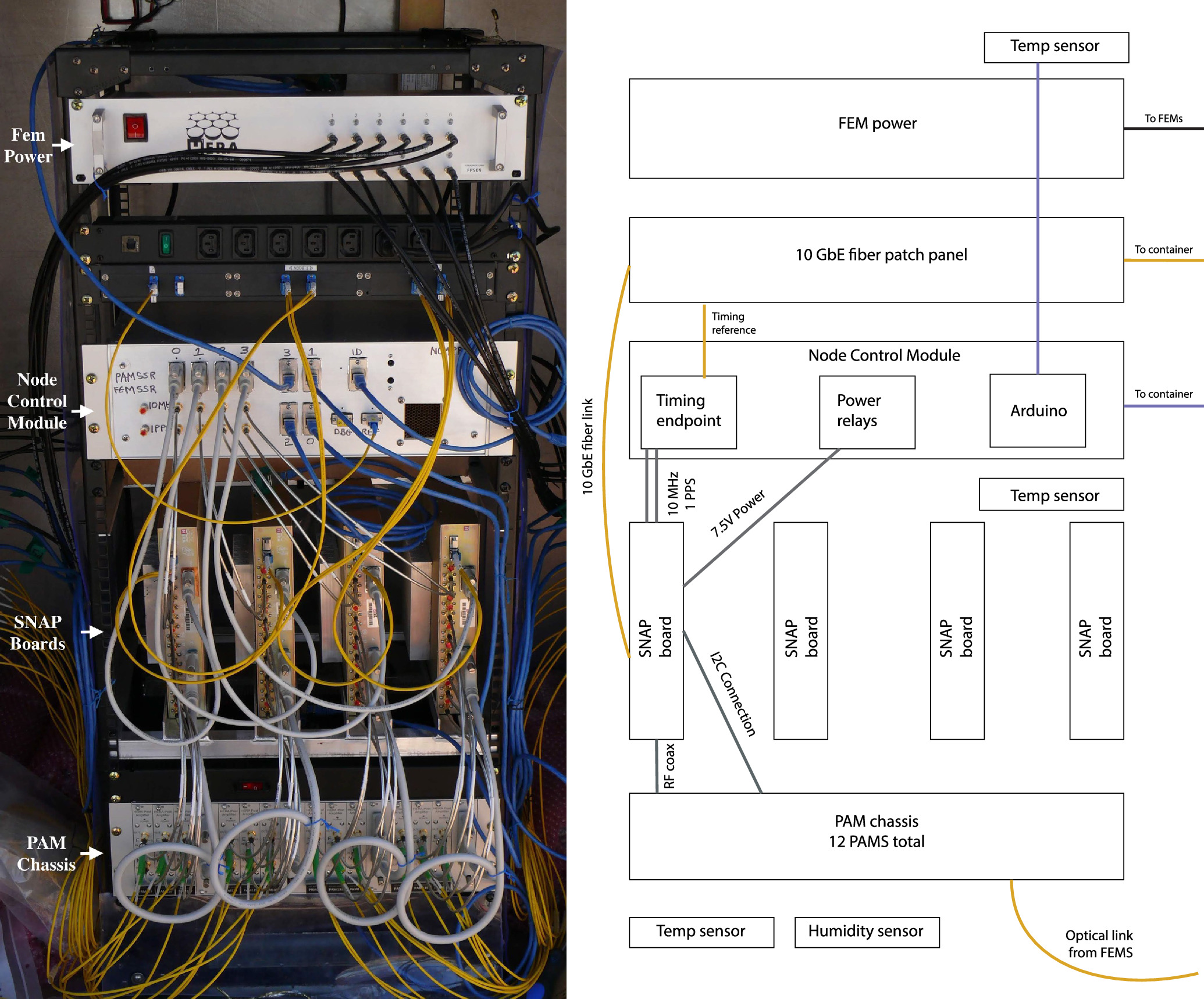

At the node, a patch panel feeds the RFoF cables through to the PAM receivers. The node serves up to 12 antennas, and contains part of the analog signal chain, signal processing, and digital control systems. Figure 15 shows a photo of the inside of the node along with a schematic view. The node structure is an RFI tight enclosure which is cooled by air forced through a buried ground loop. The PAM receiver chassis can be seen at the bottom of the node. Additional node components will be discussed in their respective sections.

Figure 15. (left) The internals of a node which supports up to 12 nearby antennas. (right) A diagram of the node. Cable colors correspond as closely as possible to those in the photograph. Bottom to top: RF over Fiber conversion in the PAMs, digitization and channelization in the SNAPs, and the node control module, which supplies power switching and timing synchronization. At the top a patch panel links 10 and 1 GbE fibers back to the central switch bank. Cool air enters from the ground loop beneath and exits at the top. Polarized signals travel in pairs. SNAPs also read out and control sensors and settings embedded in the signal chain.

Each PAM receiver converts both polarizations of one antenna back to RF. It applies gain and filtering of those signals, acting as the anti-aliasing filter for the analog to digital converter (ADC). Each PAM also has a digitally controllable attenuator, which can be set independently for each polarization, and allows for the input signals to the ADC to be levelled by up to 14 dB. The PAM sits on the same I2C network as the FEM and provides a physical connection point for the digital cable to the FEM.

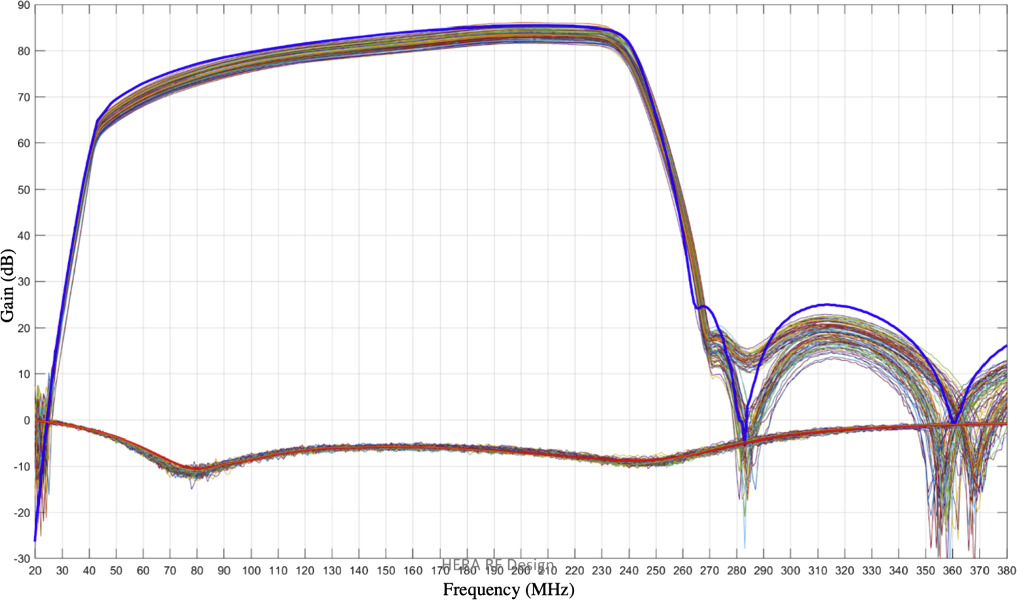

Figure 16 compares the expected gain spectrum of the analog chain with several modules measured in the lab. The S21 measurements with a two port VNA show the throughput gain of the system, with the simulated data highlighted in blue. The full gain of the analog system measures around 70 dB at the low end of the band, and 85 dB at the high end. The noise temperature is approximately 90 K in the middle of the band. To approximate the sky temperature for a "quiet" patch of sky away from the Galactic plane, we can use a power law (Furlanetto 2016):

Figure 16. 2-port VNA measurements of the FEM–PAM system, as compared with simulation values. The simulated S21 measurement is highlighted in blue, and the S11 measurement in red. 24 lab modules are measured for comparison. These modules were designed to introduce minimal extra chromaticity in the 50–250 MHz passband. The total gain of the analog system in the middle of the band is approximately 80 dB.

We recover a sky temperature of ≈290 K at 150 MHz.
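As a worked check of this value, assume the commonly used form of the power law, normalized to 180 K at 180 MHz with a spectral index of −2.6. These coefficients are an assumption here, adopted because they reproduce the stated result:

```latex
T_{\rm sky}(\nu) \approx 180\,{\rm K}\left(\frac{\nu}{180\,{\rm MHz}}\right)^{-2.6},
\qquad
T_{\rm sky}(150\,{\rm MHz}) \approx 180\,{\rm K}\times(1.2)^{2.6} \approx 289\,{\rm K}.
```

Under this assumption the sky contribution at mid-band is roughly three times the receiver noise temperature quoted above.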

The S21 response is suitably spectrally smooth within the 50–250 MHz bandwidth. The S11 measurements show the power reflected from the analog system, with the simulation data highlighted in red. The reflected power is also minimally chromatic within the HERA band.

4.5. The Correlator

The correlator system digitizes, channelizes, and cross correlates between all antennas. The system begins at digitization and ends at file writing. To minimize the number of components the correlator system is also responsible for ancillary services like RF signal chain telemetry, timing, and networking. The correlator uses the FX architecture, where inputs are channelized first and cross multiplied second, and has a scalable design based on the framework described in Parsons et al. (2008).
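The FX ordering can be illustrated with a toy correlator: each antenna's voltage stream is first transformed to a spectrum (F), and the spectra are then multiplied pairwise and accumulated per channel (X). The sketch below uses a direct DFT for clarity instead of a polyphase filter bank, and the function names and test signal are hypothetical; it is meant only to show the order of operations.

```python
import cmath
import math


def dft(samples):
    """Direct discrete Fourier transform (the F step), O(N^2) for clarity."""
    n = len(samples)
    return [sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, x in enumerate(samples))
            for k in range(n)]


def fx_correlate(voltages, n_chan):
    """Return {(i, j): per-channel visibility averaged over all spectra}."""
    n_ant = len(voltages)
    n_spec = len(voltages[0]) // n_chan
    vis = {(i, j): [0j] * n_chan
           for i in range(n_ant) for j in range(i, n_ant)}
    for s in range(n_spec):
        # F step: channelize one block of samples per antenna.
        spectra = [dft(v[s * n_chan:(s + 1) * n_chan]) for v in voltages]
        # X step: cross-multiply every antenna pair, channel by channel.
        for (i, j), acc in vis.items():
            for k in range(n_chan):
                acc[k] += spectra[i][k] * spectra[j][k].conjugate() / n_spec
    return vis


# Two antennas seeing the same tone centered on channel 2.
n_chan = 8
tone = [math.cos(2 * math.pi * 2 * t / n_chan) for t in range(2 * n_chan)]
vis = fx_correlate([tone, tone], n_chan)
```

The cross-correlation of the two identical inputs is concentrated in channel 2 (and its mirror, channel 6, because the input is real), with negligible power elsewhere.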

The system is distributed across multiple embedded and traditional compute systems. Settings and status values are synchronized via a REDIS in-memory data store. REDIS captures the current state of the correlator and related systems, but does not contain any history. REDIS is polled regularly and the current state is timestamped and stored in a database with historical capabilities, described further in Section 4.6.3.

4.5.1. F-engine

The phase II array upgrades the signal processing boards from the Roach II boards and 16 input ADCs used by PAPER to a SNAP board designed by the Collaboration for Astronomy Signal Processing and Electronics Research (CASPER) (Hickish et al. 2016) in collaboration with the NRAO. A top-down view of the SNAP is shown in Figure 17. Input signals are digitized by HMCAD1511 ADCs and read out by a Kintex 7 FPGA. The board is controlled by a microblaze softcore processor on the FPGA that is accessible via a Python programming interface. The SNAP acts as both the digitizer and the "F-engine," taking in analog signals over coaxial cable and outputting Fourier-transformed spectra over 10 GbE fiber. Each SNAP reads out 3 dual polarization antennas (six signal chains) and samples them at 500 Msps for a 250 MHz bandwidth. In order to achieve this speed, the ADCs are run in an interleaving mode. Each ADC device has four digitizer channels with two interleaved channels digitizing each signal. The SNAP is clocked from a 10 MHz reference and a 1 PPS timing signal generated by a timing endpoint within the node control module (NCM). The endpoint used relies on the White Rabbit technology (Moreira et al. 2009), developed partially by CERN. The 10 MHz reference is converted by the on-board synthesizer to a 500 MHz clock for the ADCs and a 250 MHz clock for the FPGA.

Figure 17. Top-down view of a Smart Network ADC Processor (SNAP) board in its chassis. The daughter board is connected through the Raspberry Pi/custom card interface. 12 analog inputs are available, though due to the necessary ADC processing speed, only 6 are used for HERA. SMA inputs are also available for the reference clock and PPS timing signals, which can be up-converted by the onboard synthesizer. The SNAP has 3 onboard HMCAD1511 ADCs, as well as a Kintex 7 FPGA. Three interfaces on the back of the board allow for external connections, over a ZDOK, UART connector, or Raspberry Pi interface. HERA uses the Raspberry Pi interface to mount the digital control daughter board, but does not use the other interfaces. The SNAP can be controlled via a Raspberry Pi processor, but the HERA firmware substitutes a microblaze softcore processor.

While these boards could be mounted in the node directly, to limit crosstalk and self interference each board is encased in a custom RFI tight chassis. The chassis also acts as a passive thermal control system conducting thermal energy from the FPGA into the aluminum case. Four of these modules are mounted in each node as shown in Figure 15. The SNAP outputs spectrum packets over a 10 GbE SFP+ connector, visible in the figure as yellow fiber connections.

The FPGA design contains a polyphase filter bank, Fourier transform, equalization, as well as a number of other useful functions, including an onboard correlator for rapid testing and prototyping of the system. Additionally, the Walsh patterns for phase switching are generated on the SNAP and converted to differential signals by a custom daughter card for off board communication to PAMs and FEMs.

The F-engine channelizes to 2^13 (8192) channels for a resolution of approximately 30.5 kHz. However, due to bandwidth limitations in the 10 GbE connection, only 178.5 MHz of bandwidth (6252 channels) is sent.
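The channel width follows directly from the sampling parameters given above. The short calculation below derives it from the 500 Msps sample rate and the 8192-channel transform, and also gives the width after the factor-of-four frequency average applied later in the X-engine; the variable names are illustrative.

```python
SAMPLE_RATE_HZ = 500e6   # ADC sample rate
N_CHAN = 2 ** 13         # 8192 frequency channels

bandwidth_hz = SAMPLE_RATE_HZ / 2        # Nyquist bandwidth for real sampling
fine_width_hz = bandwidth_hz / N_CHAN    # F-engine channel width
avg_width_hz = 4 * fine_width_hz         # width after averaging four channels
```

This gives about 30.5 kHz per fine channel and about 122 kHz after averaging.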

4.5.2. Networking and X-engine

Spectrum packets from a node's four SNAPs are sent over 10 GbE to one of three 10/40 GbE switches in a shielded container adjacent to the array. These switches form part of the "corner turn" data transpose which rebroadcasts antennas to multiple GPU "X-engines" for cross multiplication. From here these switches are crosslinked over a 10 km fiber bundle to the KAPB and a matching set of switches, which complete the corner turn transpose and send the reordered data to the GPU X-engines. The KAPB also hosts all other HERA on-site compute including monitoring and on-site data archiving.

Cross multiplication is performed by 32 total GPU-based X-engines, housed two to a server, with each GPU allocated a 10 GbE Network Interface Card (NIC). Each X-engine receives 1/16 of the spectra from every antenna with half the engines reading in the even time samples and the other half odd. The engine first averages by a factor of four to 122 kHz channels, cross multiplies all pairs of antennas, and every 8 seconds sends an averaged visibility to a dedicated catcher machine which saves data to disk. An overview of the networking setup is shown in Figure 18.
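One way to picture this division of labor is as a mapping from each (channel, time sample) pair to one of 32 engines: 16 frequency slices, each split by time parity. The sketch below uses a contiguous-slice layout and a toy channel count. The actual assignment used by the HERA corner turn is not specified here, so the function and its ordering are hypothetical.

```python
from collections import Counter

N_FREQ_SLICES = 16   # each engine receives 1/16 of the channels
N_TIME_PARITY = 2    # half the engines take even samples, half take odd


def xengine_for(channel, time_index, n_sent_channels):
    """Hypothetical mapping of one (channel, time sample) to an engine index."""
    per_slice = n_sent_channels // N_FREQ_SLICES
    freq_slice = channel // per_slice
    return freq_slice * N_TIME_PARITY + (time_index % N_TIME_PARITY)


# Toy example: 64 channels and 4 time samples spread over the 32 engines.
counts = Counter(xengine_for(ch, t, 64) for ch in range(64) for t in range(4))
```

Every engine receives the same share of the load, which is what allows the cross multiplication to proceed in parallel.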

Figure 18. An overview of the networking scheme. The field nodes send out packets over 10 GbE fiber. These connect to "corner turn" switches in the field container, which send antennas to the GPU X-engines. These fibers are then bundled and sent over a 10 km link to the KAPB, where a second set of switches completes the corner turn transpose. This allows the NIC and GPU in the X-box to perform parallelized cross correlation. The outputs of the correlator are averaged and sent to a dedicated catcher machine which writes raw visibility files. These are then converted to UVH5 format, uploaded to the on-site Librarian (described in Section 4.6.5), and then synced to NRAO. Yellow connections indicate a 1 GbE link, green indicates 40 GbE transport, blue is the Globus 200 Mbps link to NRAO.

4.5.3. Data Catching and Compression

Interferometers with a large number of antennas are constrained by data volume and network bandwidth concerns. The connection between the Karoo site and NRAO has an average 200 Mbps transfer bandwidth, and the on-site storage availability is approximately 1.1 Petabytes. Reducing data volume is a high priority concern, especially as HERA builds out to the full 350 antennas. Averaging data in frequency or time can reduce the data size, but can cause smearing effects that degrade instrument performance particularly on the longest baselines.

Data are written in a two step process to minimize the impact of time spent applying compression on data recording. The correlator writes a raw custom format with minimal metadata information. Once written, these are converted to an HDF5 format called UVH5, readable by the pyuvdata library (Hazelton et al. 2017), with compression using the Bitshuffle algorithm (Masui et al. 2015), originally developed for the CHIME telescope, to reduce the volume. This method is independent of time or frequency averaging, and can be applied alongside such schemes. In practice, for HERA this offers a 2:1 compression ratio.

One avenue under exploration to further reduce the data output of the telescope is baseline-dependent averaging (BDA). BDA has been investigated in the context of the SKA (Wijnholds et al. 2018) and is expected to greatly reduce visibility data sizes with minimal loss to instrument performance. As the shortest baselines in the array do not require short integration times and fine channel resolution to limit smearing and decorrelation effects, these baselines can be averaged for longer and in broader frequency chunks, reducing the data volume. In the context of EoR science with HERA, we will only target time domain BDA, as the EoR measurement is very sensitive to spectral features and we do not want to risk introducing frequency dependent structure. As the majority of HERA's baselines are relatively short, this solution has the potential to greatly reduce data volume.

The averaging time for each baseline can be set to match the amount of allowed decorrelation experienced. The decorrelation is highest for angles corresponding to high fringe rates, generally large angles with sources far from zenith (Bridle & Schwab 1999).

For example, we might allow, for a source 10° off zenith, a maximum decorrelation of 10%, and calculate the maximum allowed integration times for each baseline. Realistically, there are also a few extra limitations imposed. Sources moving across the stationary beam lead to apparent flux density changes with time. Averaging these sources over long periods of time will introduce uncertainty in the source amplitude. Practically this limitation amounts to a few minutes for our reference sky position. Additionally, the size of buffers in the GPU X-engine imposes a roughly 30 s limit on integration time. Combining this all together gives a potential compression factor of 12.
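A rough version of this calculation is sketched below. It assumes a simplified worst-case fringe rate of (b/λ) ω_E sin θ for a baseline of length b and a source at angle θ from zenith, and models the amplitude loss from averaging over a time τ as 1 − |sinc|. Both are simplifying assumptions rather than the exact expressions used for HERA, and the baseline lengths in the example are illustrative. The optional cap stands in for limits such as the X-engine buffer size.

```python
import math

OMEGA_EARTH = 7.2921e-5   # Earth's rotation rate, rad/s
C = 299_792_458.0         # speed of light, m/s


def decorrelation(baseline_m, freq_hz, offset_deg, tau_s):
    """Fractional amplitude loss from averaging a fringe over tau_s seconds."""
    fringe_rate_hz = (baseline_m * freq_hz / C) * OMEGA_EARTH * math.sin(
        math.radians(offset_deg))
    x = math.pi * fringe_rate_hz * tau_s
    return 0.0 if x == 0.0 else 1.0 - abs(math.sin(x) / x)


def max_integration_s(baseline_m, freq_hz, offset_deg=10.0,
                      max_decorr=0.10, cap_s=None):
    """Longest averaging time keeping decorrelation at or below max_decorr."""
    if baseline_m <= 0.0 or offset_deg == 0.0:
        return math.inf if cap_s is None else cap_s
    # Bracket the first crossing of max_decorr, then bisect.
    lo, hi = 0.0, 1e-3
    while decorrelation(baseline_m, freq_hz, offset_deg, hi) < max_decorr:
        lo, hi = hi, 2.0 * hi
    for _ in range(80):
        mid = 0.5 * (lo + hi)
        if decorrelation(baseline_m, freq_hz, offset_deg, mid) < max_decorr:
            lo = mid
        else:
            hi = mid
    return lo if cap_s is None else min(lo, cap_s)


# Illustrative baselines at 150 MHz: a short one and one twenty times longer.
t_short = max_integration_s(14.6, 150e6)
t_long = max_integration_s(292.0, 150e6)
t_capped = max_integration_s(14.6, 150e6, cap_s=30.0)
```

Under these assumptions the allowed time scales inversely with baseline length, so short baselines are limited by the cap long before decorrelation becomes significant.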

However, BDA does require adapting a long standing assumption of uniform integration time in many downstream data analysis steps. As a practical first step in this direction, data from the 2023 season will be split into two files: one averaged to suit core baselines, and a second at a faster cadence that includes outrigger baselines to prevent decoherence. This will allow for testing of the BDA infrastructure in expectation of a full future deployment.

4.6. Monitoring and Data Storage

The collection of systems which make up HERA adds up to hundreds of computers, switches, and sensors. Detailed monitoring and data management is essential to obtaining high quality data across 8 months of continuous observing. Applying the same logic, data quality must be assessed in near real time with a first round of analysis. Ancillary systems for tracking, cabling, configuration, and managing the data archive have all been designed with the goal of maximizing the uptime and quality of every antenna.

4.6.1. Configuration Management

At 350 dishes there are many connections to keep track of. Careful record keeping is essential to reconstruct the full state of the system at any point in HERA's lifetime. This also minimizes telescope down time due to miscabling or mislabelling. Similar systems are in place on CHIME (Hincks et al. 2015) and other large N interferometers.

Each antenna connects through nine RF interconnect points and more than 8 digital interconnects. The Configuration Management (CM) system tracks these connections and provides this information to downstream systems to map input numbers to antenna numbers and provide data in a ready-to-use form.

Real time analysis products use part connection information to display data sorted according to location by node, SNAP, etc. This is extremely useful for diagnosing systematics due to part malfunction.

In addition to tracking part connections, CM stores the location, serial number, and history of antenna parts for the purposes of inventory and identifying long term failure modes.

4.6.2. Digital Control

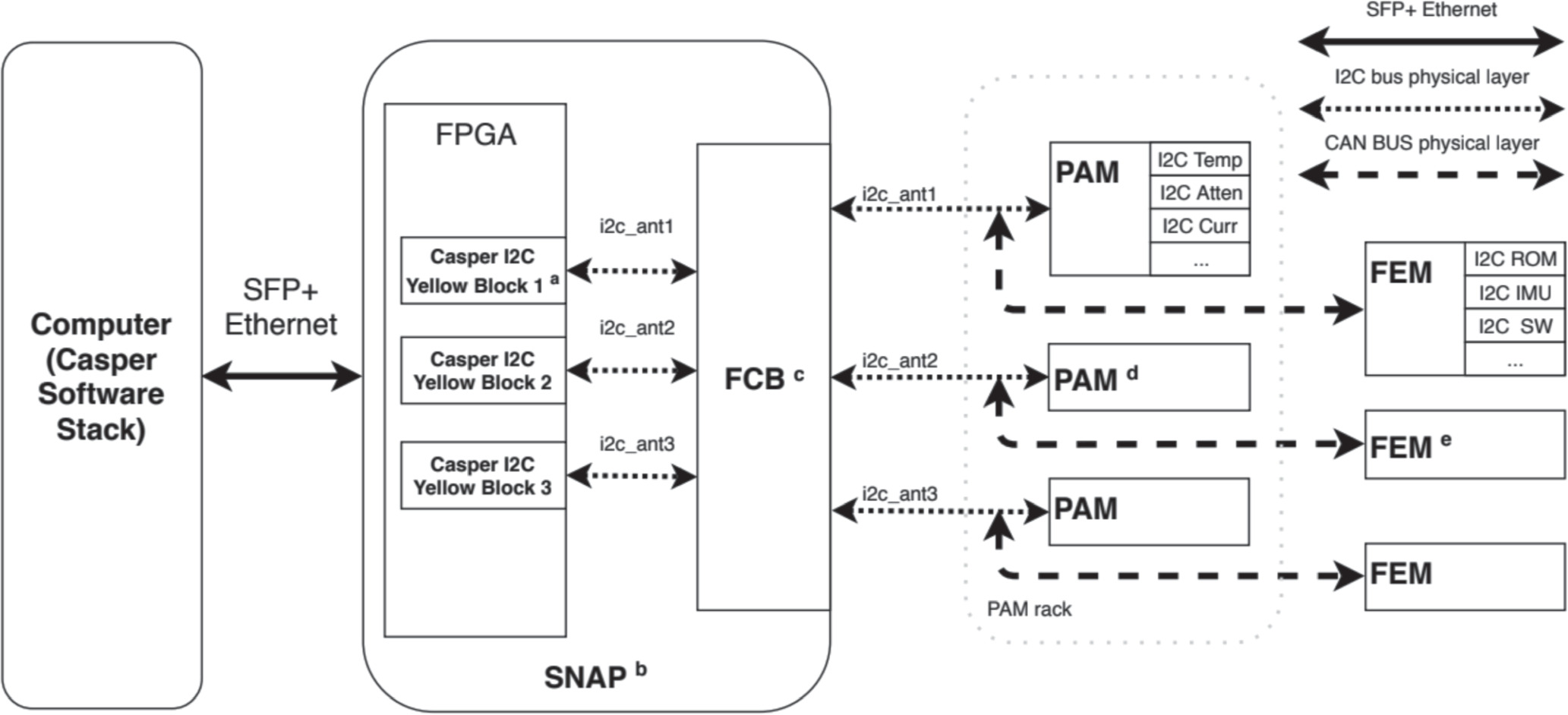

The correlator system includes digitization and cross correlation, but also ancillary elements related to the node health. Figure 19 shows the control scheme. The SNAP board provides a digital logic link to the digital components in the RF signal chain. These, plus the F-engine itself, are controlled and monitored via CASPER software in Python on a dedicated computer on the SNAP network.

Figure 19. The digital control scheme. Software running on a control computer uses the CASPER Python API to communicate with I2C blocks on the FPGA firmware. These act as the primary controller on a network which extends, via the daughter differential transceiver board (FCB), to the PAMs and FEMs. The PAM and FEM communicate over a CAN bus physical layer. This allows for commands to be passed from the computer through to the antenna front end, for controlling switches in the FEM, as well as allowing telemetry data to be communicated back to the host computer.

Environmental, power and White Rabbit timing services in a node are located in the NCM, which can be seen labeled in Figure 15. The NCM contains an Arduino microprocessor which operates sensors and power relays and a central server that sends and receives microprocessor messages and reports to a database. This system controls power to SNAPs, PAMs, and FEMs and monitors temperature and humidity within the node. Temperature sensors are placed at high, mid, and low locations within the node to monitor heat flow through the node stack.

4.6.3. System Monitoring

HERA is a large distributed set of systems which each provide logs, measurements, and system checks to a central Monitor and Control (M&C) system. Many of these systems also accept commands or configuration information, or are configured by hand in a way that must be tracked. The M&C system is designed to be able to fully reconstruct the historical state of the system, to allow the telescope team to trace failures, and to allow data analysts to trace systematics in historical data. It achieves this goal using a central database which is accessible via an application layer Python package called hera_mc. Since the system touches every other system, it must also be reliable and fault tolerant so it does not limit the uptime of the telescope.

Data stored in M&C comes from a wide variety of subsystems that include the logging from the correlator, NCM, analog components, the site weather station, Librarian, RTP, and compute state reporter (memory, uptime, ip address, raid errors).

These data are collected by either polling subsystems through their provided interface or via push from the subsystem itself using the M&C software interface. Information is stored in a PostgreSQL database, which can be read in many different ways. The hera_mc package offers a Python API built on SQLAlchemy (Bayer 2012), and version controls the database schema using the Alembic database migration tool. All items are tagged with GPS time (seconds since 6 January 1980). This time standard was chosen over the Unix epoch for robustness across leap seconds and over Julian date, which requires large floating point precision to be accurate to the second.
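The relationship between UTC and GPS seconds can be sketched with the standard library alone. GPS time counts from 00:00:00 UTC on 6 January 1980 and does not insert leap seconds, so it runs ahead of UTC by the number of leap seconds added since then. The sketch below hard-codes the 18 s offset that has applied since 1 January 2017 and is therefore only valid from that date until the next leap second. It is a stand-in for illustration and is not how hera_mc performs the conversion.

```python
from datetime import datetime, timezone

GPS_EPOCH = datetime(1980, 1, 6, tzinfo=timezone.utc)
LEAP_VALID_FROM = datetime(2017, 1, 1, tzinfo=timezone.utc)
GPS_MINUS_UTC_S = 18  # leap seconds accumulated since the GPS epoch (2017 on)


def utc_to_gps_seconds(utc):
    """GPS seconds for a timezone-aware UTC datetime on or after 2017-01-01."""
    if utc < LEAP_VALID_FROM:
        raise ValueError("fixed leap-second offset is only valid from 2017-01-01")
    return (utc - GPS_EPOCH).total_seconds() + GPS_MINUS_UTC_S


gps_start_2017 = utc_to_gps_seconds(datetime(2017, 1, 1, tzinfo=timezone.utc))
```

A production system would use a maintained leap-second table instead of a fixed offset.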

The M&C database is mirrored as "hot standby" to several off site locations including the NRAO where it can be used for analysis and to a web server for display. The database is also regularly backed up on-site. The web backup is used to update live dashboards described in Section 4.7.2.

4.6.4. On-site Real Time Processing

Thousands of observing hours with many antennas are necessary to reach HERA's desired sensitivity level. Obtaining this much data requires continuous observing through a seasonal 8 month long campaign. Storms, interference, computer failure, and cable breaks do occur. The more subtle system failures are not necessarily detected in telemetry monitoring but are obvious during data reduction as an increase in noise, a calibration error, or other flag triggers. For this reason we have increasingly moved the best understood parts of the data reduction pipeline into an on-site analysis step which runs on a nightly basis.

The Real Time Processor (RTP) system is a collection of steps including calibration and flagging executed by a customized automated pipeline infrastructure. The infrastructure system used to run the pipeline is well described in La Plante et al. (2021). The compute resources are a relatively small cluster of 8 machines with common access to recently captured data in a Network File System and indirect access to a large archive (the "Librarian" described in Section 4.6.5) via rsync. Like the other HERA systems running on-site, it runs unattended with limited remote operator oversight. This requirement places a premium on automation and redundancy. The resulting analysis is posted nightly to a web server for inspection by observers.

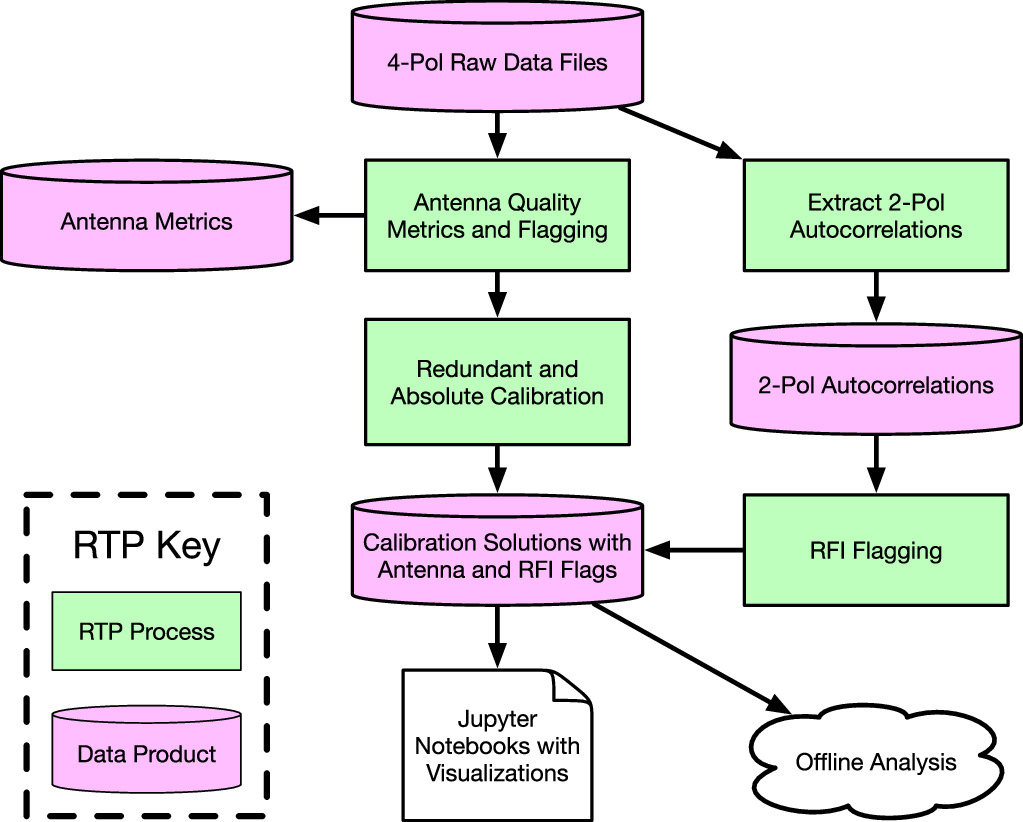

The on-site pipeline steps include flagging, calibration, filtering, and smoothing. It separates out autocorrelations into their own files, produces calibration solutions using both redundant calibration and a calibration model, and derives various quality metrics. The flow of these steps is shown in Figure 20. Summary plots for inspection are output as Jupyter Notebooks. These are discussed in Section 4.7.3.

Figure 20. A simplified view of the flow of analysis steps applied in near-real time by RTP. The key tasks are antenna and RFI flagging and calibration, all of which are useful for rapid assessment of overall data quality and array health. Further processing is done offline at NRAO.

RTP launches automatically at the end of data recording every night, starting with the raw data files written by the correlator, and to stay current must complete before the resumption of observing the next day. Figure 21 shows a typical nightly cycle of resource usage in the on-site cluster. Makeflow is used to construct a logical execution order which takes into account dependencies between tasks. A custom wrapper around Makeflow, called hera_opm (HERA online processing module), applies rules specific to HERA processing such as "time chunking" of data, which batches together analysis steps like RFI excision that require multiple time samples. Makeflow executes new tasks once previous tasks have produced any prerequisite inputs. For example, notebooks which plot an entire night's worth of calibration solutions must wait for all files to be completed. Makeflow executes tasks using Slurm (Yoo et al. 2003), which assigns jobs to compute resources according to available capacity and the requirements of the job.
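The idea of deriving an execution order from task dependencies can be illustrated with Python's standard library. The sketch below is not Makeflow or hera_opm syntax, and the task names are hypothetical; it only shows how a dependency graph yields a valid ordering in which each task runs after its inputs exist.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical task graph: each key depends on the tasks in its set.
dependencies = {
    "extract_autos": {"raw_file"},
    "ant_flagging": {"extract_autos"},
    "redundant_cal": {"ant_flagging"},
    "rfi_flagging": {"redundant_cal"},
    "summary_notebook": {"redundant_cal", "rfi_flagging"},
}

# static_order() yields every task after all of its prerequisites.
order = list(TopologicalSorter(dependencies).static_order())
```

A workflow engine applies the same principle at scale, additionally dispatching independent tasks in parallel as their inputs become available.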

Figure 21. (top) Pipeline computer loads captured by the monitor and control system illustrate a sustainable processing schedule over a few observing days. (bottom) Data volume vs. free space for a few observing days. First, for this observing season, data are captured at a rate of approximately 0.3 TiB/hour, seen here as a decline in free space and increase in data volume in the bottom panel. Once observing finishes in the morning, processing begins, seen here as sustained CPU load in the top panel. Then at 0600 the second night of observing completes and the process repeats. At this point, 18 hr had elapsed between compute cycles, so a backlog night was added at 1800 hr.

The current storage available on-site is approximately 1.1 Petabytes distributed across seven servers. Each server hosts a set of disks in the "RAID 60" configuration with three "hot spare" disks that can be used by any individual RAID 6 partition. This configuration incurs approximately a 25% penalty of data storage capacity compared to the raw disk size, but allows for at least 5 individual drive failures before data are lost.

Raw data and pipeline products are streamed for offsite backup and further analysis to the NRAO data center in Socorro, NM. Figure 22 shows an overview of the RTP on-site and off-site systems, and how they interact. In the on-site cluster, the raw data storage can be accessed by the compute nodes and the head node for processing, and is also uploaded to the on-site Librarian stores. The connection between the Karoo site and NRAO has an average 200 Mbps transfer bandwidth and a typical latency of 100–500 ms. Data are transferred to the off-site cluster at NRAO via rsync or Globus (Ananthakrishnan et al. 2015) depending on service availability. Globus uses a third party coordinator to manage parallel UDP connections to significantly increase transfer speeds over high latency connections.
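The quoted link rate can be put in the same units as the capture rate with a short calculation. The sketch below assumes the link runs continuously at its 200 Mbps average and ignores protocol overhead, and it takes the approximate 0.3 TiB/hour capture rate quoted for Figure 21; the variable names are illustrative.

```python
LINK_BITS_PER_S = 200e6     # average link rate
CAPTURE_TIB_PER_HR = 0.3    # approximate capture rate quoted for Figure 21
TIB = 2 ** 40               # bytes per TiB

# Volume the link can move in 24 hours of continuous transfer.
link_tib_per_day = LINK_BITS_PER_S / 8 * 86400 / TIB

# Hours of observing per day whose data the link could carry in real time.
sustainable_obs_hr_per_day = link_tib_per_day / CAPTURE_TIB_PER_HR
```

Under these assumptions the link moves just under 2 TiB per day, equivalent to roughly 6.5 hours of capture at that rate, which illustrates why reducing data volume is a priority.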

Figure 22. The archiving and processing architectures on and off site are functionally mirrors. The real time processing (RTP) system executes complex pipelines while the Librarian system manages and provides an interface to the data archive. The on-site pipeline calibrates and flags a night's worth of data, starting when observing finishes for the night and ending before observing starts again the next night. The Librarian manages the ∼1.1 PB of on-site storage and transfers data off to the ∼2.2 PB storage at the NRAO, using a database to track associated analysis products and to safely delete data once they have been moved off site.

In addition to reports presented to observers, the near real time returns from nightly calibration and flagging are also stored in M&C (see Section 4.6.3) for analysis of long term trends which can be tracked in a dashboard system discussed further in Section 4.7.2.

4.6.5. Data Archives

A typical night's observation generates 1800 raw files and RTP processing generates of order ten more per file. These files are stored across several disk servers, where they can be quickly found and retrieved. This set of requirements was found to need a custom solution. The Librarian (La Plante et al. 2021) was developed to meet these unique needs. Each Librarian instance consists of several independent RAID server nodes and a head node running the API server and database. One instance is located at the telescope and another at the primary archive where data are stored long term. Additional sites have been operated for local use as necessary.

Data storage requirements have increased as antennas have been added with each season. The capture rate in the 2023 season is forecast to be 0.454 TiB per hr, assuming 256 operating antennas with the current settings of 8 seconds averaging time and 2048 channels. Over an entire 2000 hr observing season, this will amount to almost 850 TB of data. The data must be synced across multiple sites for end-product users, and must be easily filtered using metadata. Additionally, since there is not enough storage on-site for the lifetime of the project, data must be regularly deleted once it is transferred off site. Deletion must be done only after verification of successful transfer. File locations and status are logged in a PSQL database while a service program provides functions for uploading, transferring, and safely deleting copies of files. The server is accessed through an application programming interface (API) which can be used by automated analysis pipelines to get and put data as well as a web-based front end for human management.

The primary functions of the Librarian are data ingest, location tracking, and transfer. On a nightly basis, the raw correlator data are output to a temporary buffer disk. They are then converted to the UVH5 file type and uploaded to the Librarian server, which creates a staging area for the upload and generates a one-use rsync command to be run by the uploader process. Also on a nightly basis, data are transferred via Globus from the on-site Librarian to the NRAO. Figure 22 shows the architecture of this transfer, as well as how it interconnects with the RTP. On the remote or on-site servers, users can search for these data to stage to their own working area. Using an SQL query through a web interface, the user can specify a number of search parameters (such as unique file name, observation ID, Julian Date range, etc.) to find all files associated with the search. They can then copy those files locally for analysis.

The telescope Librarian instance is co-located with the X-engines and RTP cluster, where it can ingest data at the highest possible rate. From there, data are moved to the primary long-term storage instance at the National Radio Astronomy Observatory (NRAO) in Socorro, NM, which also supports post-processing analysis. The processing cluster at NRAO has fourteen compute nodes with 8-core processors and 256 GB of RAM. The compute nodes have no local disk storage, but they are connected to a distributed parallel filesystem (Lustre) via a 40 Gbit InfiniBand network. Data can be staged from the archive to Lustre via the web portal or API.

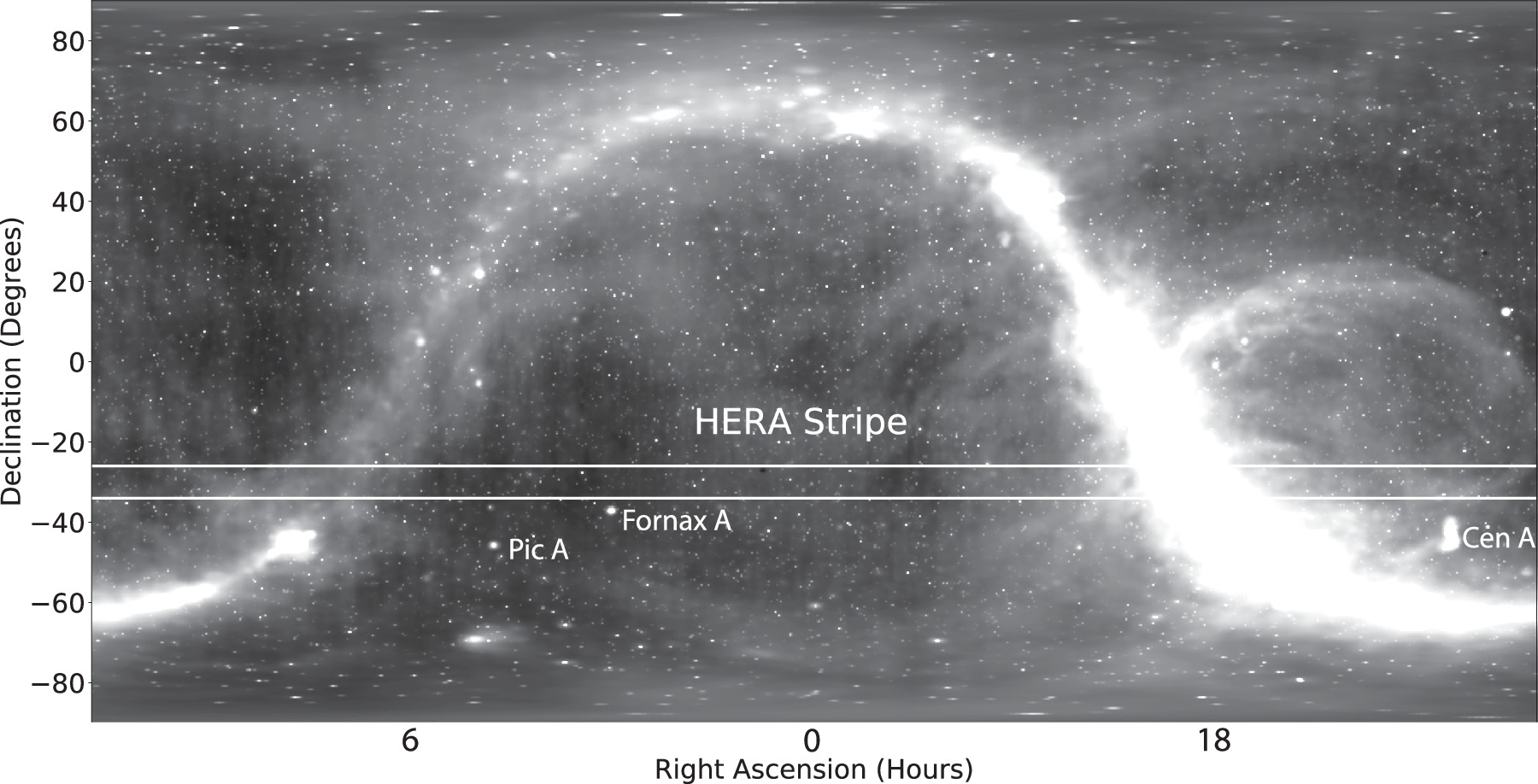

4.7. Observing

The cosmological observing program must maximize time spent on the coldest foreground regions of the sky while allowing sufficient down-time for maintenance and improvements. The array location and drift-scan design yield an observable sky region consisting of an 8°-wide stripe at −30° decl., visualized in Figure 23. This stripe crosses both the bright Galactic center and the South Galactic Pole, where diffuse foregrounds are dimmest. The South Galactic Pole is up at night in the austral spring and summer. The combination of these factors results in an observing season beginning in August and lasting until April. During this time, sensitivity is maximized by recording with the largest number of antennas for the most hours possible, which requires a continuous program of observing.

Figure 23. HERA's observing stripe, 8° wide centered at approximately −30° in decl. A few nearby bright radio sources are labeled. The background image is the radio sky including point sources and diffuse emission, combining data from the GSM (de Oliveira-Costa et al. 2008), NRAO VLA sky survey (Condon et al. 1998), and the Sydney University Molonglo Sky Survey (Mauch et al. 2003). Diffuse and point sources are not on the same scale, and are relatively scaled for visual clarity.

4.7.1. Observers

During science observing, all points of the system, from server health to data quality, are monitored by remote observers. Observing nightly across a season stretching over eight months is a continuous effort by volunteers from across the collaboration.

HERA collaboration members sign up for weeklong observing duty, with up to three observers per week. HERA observers have three primary goals. The first is to ensure that automated observing started correctly and to monitor the data capture process for disruption. The second is to make a rough judgement of data quality. This determination reflects major issues such as a known failure of a correlator component or a large number of unusual autocorrelation spectra. Lastly, observers report on whether the pipeline has run successfully and whether data are transferring to the off-site archive.

A large number of observers have volunteered to help maintain a 200+ day campaign of nightly observing. Many are students or have expertise in other areas. Observing instructions and displays have been designed to require minimal expertise. All monitoring systems are accessed through a web browser. No software installation or training in data access is required.

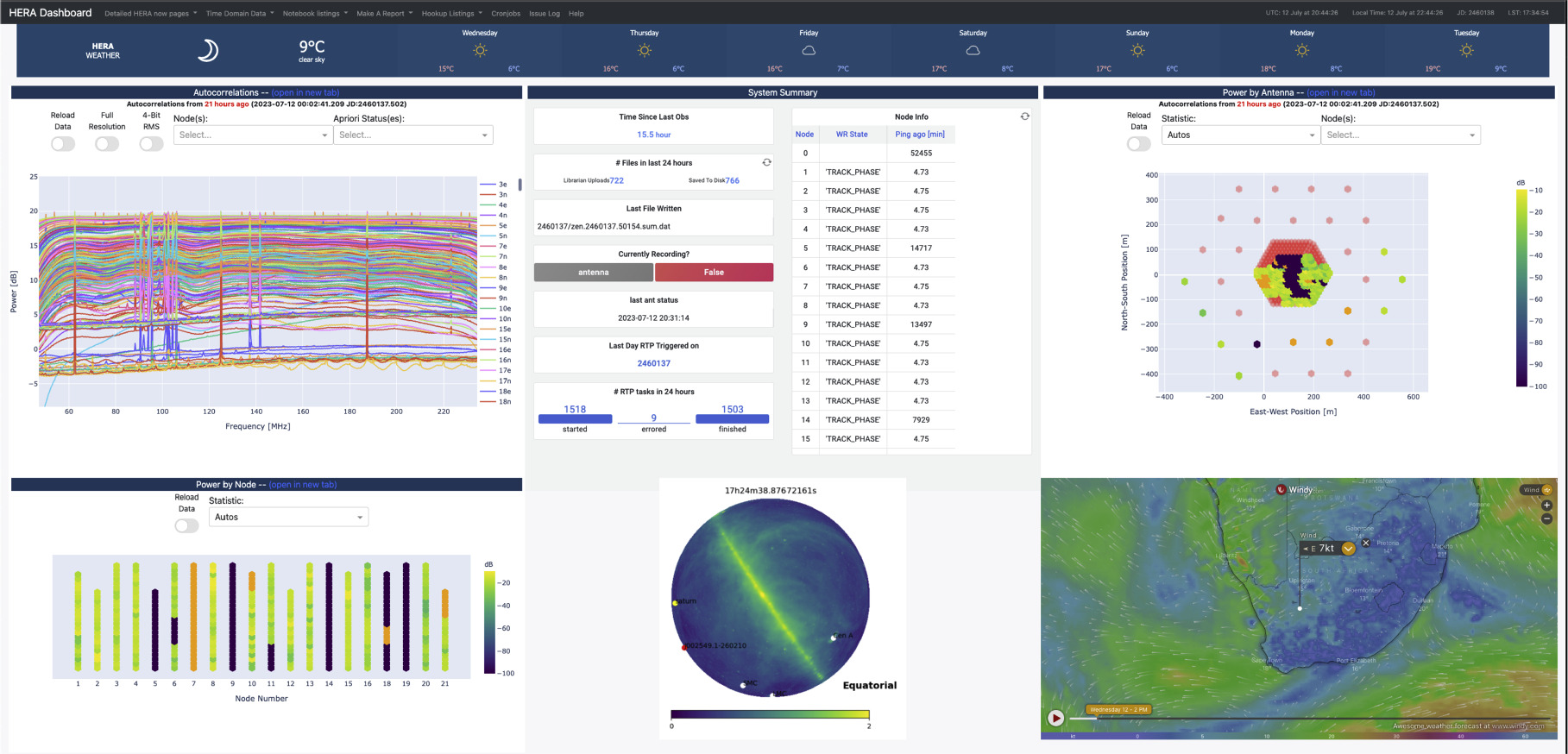

4.7.2. Dashboards

Web-based dashboards provide a current and historical view of the telescope status derived largely from the M&C database. The home page includes live autocorrelation spectra, RF power levels organized by subsystem, and other relevant information needed to make a quick assessment of the overall array health. Figure 24 shows a snapshot of the home page.

Figure 24. The home page of the dashboards hosted at heranow.reionization.org. The landing page offers a live plot of the autocorrelations from the array, a table of observing status, a median autocorrelation power plot in two views (by array position and node), major sources in the current sky above HERA, and a current weather widget. Observers can use this dashboard to make a quick assessment of the health of the array.

Additional dashboards provide a finer-grained view into subsystems. Most use Grafana, where dashboard panes are constructed around an SQL query which can be further refined by user-controlled variables like time range or node number. Figure 21, which shows RTP usage, is one example of a Grafana dashboard used by observers.

4.7.3. Notebooks and Quality Metrics

Another part of observing is inspection of nightly analysis products. Several pipeline tasks produce Jupyter notebooks which are then automatically uploaded to a public web server.

The notebooks include plots of raw data, interference detection, and calibration solutions. They also include full-day summaries of various array performance statistics. Several notebooks focus on antenna autocorrelations, which are small, well-understood data sets used to detect common system issues. Autocorrelation notebooks flag power levels that are too low (malfunctioning gain stage or broken fiber) or too high (too much gain causing saturation), as well as spectra with an abnormal passband shape, which may indicate a problem with the feed. Example cross-correlation metrics include redundancy between repeated baselines and correlation of antennas (Storer et al. 2022). These metrics are useful as automated functions to flag broken or misbehaving antennas, but they are also useful for observers to identify unusual phenomena by eye. In practice, they have been used to identify a number of array issues, such as spurious correlations between antennas or timing failure identifiable as a loss of correlation. A few of these are discussed below in Section 5. The RTP is increasingly composed of Jupyter notebooks executed with nbconvert, allowing for simultaneous analysis and visualization. They serve as self-documenting logs, enabling quick debugging and identification of novel data issues.
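The three autocorrelation failure classes named above (power too low, power too high, abnormal passband shape) can be sketched as a simple classifier. This is an illustrative example only, not the metric used in the HERA notebooks: the function name, the comparison against a reference template, and all threshold values are assumptions.

```python
import numpy as np


def classify_autocorrelation(spectrum, template, low=0.1, high=10.0, shape_tol=0.2):
    """Label one autocorrelation spectrum relative to a reference template.

    `low` and `high` bound the ratio of median powers; `shape_tol` bounds the
    median fractional deviation in bandpass shape. All three thresholds are
    hypothetical values chosen for illustration.
    """
    spectrum = np.asarray(spectrum, dtype=float)
    template = np.asarray(template, dtype=float)

    # Overall power level relative to the reference.
    ratio = np.median(spectrum) / np.median(template)
    if ratio < low:
        return "low_power"
    if ratio > high:
        return "high_power"

    # Compare bandpass shapes after dividing out the overall power level.
    shape = spectrum / np.median(spectrum)
    ref_shape = template / np.median(template)
    deviation = np.median(np.abs(shape / ref_shape - 1.0))
    if deviation > shape_tol:
        return "bad_shape"
    return "good"
```

Using medians rather than means keeps the power and shape estimates from being dominated by a few channels of narrow-band interference.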

5. Array Performance and Commissioning

HERA has operated continuously through a construction and test period spanning several years. As an array, we can operate and analyze sub-arrays of antennas as they come online. During this time, several systematics were identified in the phase II system which are worth describing in some detail because each degraded the overall performance, and fixing or mitigating the issue significantly improved the instrument. Here we largely show commissioning data, which are used to evaluate array performance but do not constitute final science-quality data products. A detailed description of the data from each season, and of the calibration, flagging, and other steps needed to obtain design sensitivity, is left to future phase II results. For a description of these steps for the phase I system, see The HERA Collaboration et al. (2022a) and The HERA Collaboration et al. (2022b).

Systematics are a general category of unexpected instrument or analysis effects that degrade the data quality. To detect the EoR, the HERA telescope and analysis must be able to separate the EoR signal from foregrounds which are far brighter than the EoR signal. Systematics that mix the bright foregrounds into regions of parameter space that HERA uses to detect the EoR, described in Section 3, are of particular concern. Examples include unmodeled gain variation and digital nonlinearity. All published deep 21 cm limits contend with one or more such behaviors through a custom analysis which filters, flags, or calibrates away the unwanted effect, usually at the cost of additional simulation analysis to understand the impact on the resulting power spectrum (e.g., Harker et al. 2010; Barry et al. 2019; The HERA Collaboration et al. 2022a, 2022b). HERA was built to a reference specification designed to avoid known systematics, but in practice subtle unexpected effects remain. HERA's early observing program has presented the opportunity to find and correct several issues. Here we detail those which have had the largest impact on sensitivity.

5.1. Common Mode

A prevalent issue, particularly in dense arrays like HERA, is the appearance of unwanted correlation between antennas (e.g., Virone et al. 2018; Kern et al. 2019; Fagnoni et al. 2020; Josaitis et al. 2022; Kwak et al. 2023). This issue takes many forms, but can generally be divided into two categories, which in this paper will be called "common mode" and "crosstalk." Common mode occurs when some unwanted signal leaks into two or more signal chains, resulting in an excess correlation with a time dependence that is independent of sky fringes. This effect can possibly be ameliorated with better signal chain isolation, a phase switching system, or by temporally filtering the non-sky-like components (Kern et al. 2019, 2020b). Meanwhile, crosstalk happens when sky signal is unintentionally communicated from one antenna to another through antenna-to-antenna mutual coupling (e.g., Josaitis et al. 2022) or leakage between parts of the RF system (The HERA Collaboration et al. 2022b).

For the HERA phase I system, mutual coupling is investigated in detail in Fagnoni et al. (2020). The paper simulates the chromatic effects of mutual coupling in the array and discusses the frequency dependent effects of the phase I system in detail. Josaitis et al. (2022) also details a semi-analytic method for mutual coupling mitigation in closely packed arrays. Similar investigations are under way for the phase II system (Pascua & Rath et al., in prep.).

In early HERA phase II observations, visibilities were observed to exhibit "common mode" correlation beyond the expected level. This was particularly strong between antennas within the same node, and consequently within the same PAM receiver rack. The correlation is easily seen when plotting the visibility matrix normalized by the autocorrelations (Storer et al. 2022). This excess varied in time much faster than the expected variation due to the sky, suggesting another source of common mode or variation in the cross-coupling path. Tellingly, it was also clearly visible even when antenna LNAs were turned off, suggesting a common source of RF power. The systematic was tracked in the lab to a gap in the aluminum cases enclosing individual PAM receiver cards. This gap allowed a pathway for common-mode signals to enter the system. It was demonstrated that it could be fixed by installing a conductive foam gasket in the gap. Once applied to the field system, excess correlation seen within a node was significantly reduced. Figure 25 illustrates the improvement with correlation matrices recorded with the antenna switch state set to the 50 ohm internal load before and after application of gaskets around the RFoF cards. Each pixel represents a cross-correlation between two antennas in the array, meaning the diagonal is the autocorrelation. The antennas are sectioned by node. Before the fix, excess correlation above the noise is visible across the array, with much larger excess occurring within nodes. After the application of gaskets within all 350 PAM receivers, excess correlation is significantly reduced across all visibilities and is now much closer to the noise level. The anomalous temporal structure is also significantly reduced.
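To illustrate how a shared signal shows up in a visibility matrix normalized by the autocorrelations, the following sketch simulates noise-only signal chains (analogous to antennas switched to their internal loads) and injects a common component into a subset of them. It is a toy model under stated assumptions, not the estimator used to produce Figure 25: the function names and simulation parameters are hypothetical, and the even/odd separation of visibilities is omitted for brevity.

```python
import numpy as np


def normalized_correlation(voltages):
    """Return |V_ij| / sqrt(V_ii V_jj) for voltages of shape (n_ant, n_samples)."""
    voltages = np.asarray(voltages)
    n_samples = voltages.shape[1]
    # Time-averaged visibility matrix, V_ij = <v_i v_j^*>.
    vis = voltages @ voltages.conj().T / n_samples
    autos = np.real(np.diag(vis))
    return np.abs(vis) / np.sqrt(np.outer(autos, autos))


def simulate_voltages(n_ant, n_samples, common_amp, shared, rng):
    """Unit-variance complex noise per antenna, plus one common signal
    (scaled by `common_amp`) added to the antennas listed in `shared`."""
    noise = (rng.standard_normal((n_ant, n_samples))
             + 1j * rng.standard_normal((n_ant, n_samples))) / np.sqrt(2)
    common = (rng.standard_normal(n_samples)
              + 1j * rng.standard_normal(n_samples)) / np.sqrt(2)
    voltages = noise.copy()
    voltages[shared] += common_amp * common
    return voltages


rng = np.random.default_rng(0)
# Antennas 0-2 stand in for signal chains sharing a leakage path.
volts = simulate_voltages(n_ant=6, n_samples=20000, common_amp=0.5,
                          shared=[0, 1, 2], rng=rng)
rho = normalized_correlation(volts)
```

In this toy model, pairs that share the common signal have an expected normalized correlation of a²/(1 + a²), which is 0.2 for a = 0.5, while unrelated pairs average down toward the noise floor of roughly 1/sqrt(n_samples). The block structure of `rho` is the analogue of the within-node excess described above.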

Figure 25. Correlation matrices illustrating the impact of improved signal chain isolation. This antenna-antenna correlation matrix is formed from visibilities normalized by autocorrelations using antennas set to their internal loads. Each pixel represents a cross-correlation between two antennas in the array, meaning the diagonal is the autocorrelation. Correlation uses even/odd separated visibilities and should be mean-zero with a noise-like variance. The matrix was averaged over approximately 1 minute of time and is scaled such that noise has a black to gray scale and anything non-noise-like is on a color scale. Before applying gaskets (left) a significant excess is seen across the array with largest values within a node. After applying gaskets (right) the structure within a node boundary is significantly reduced. Excess is still seen within the boundaries of a SNAP, indicating some remaining pathway for cross-coupling or common mode.

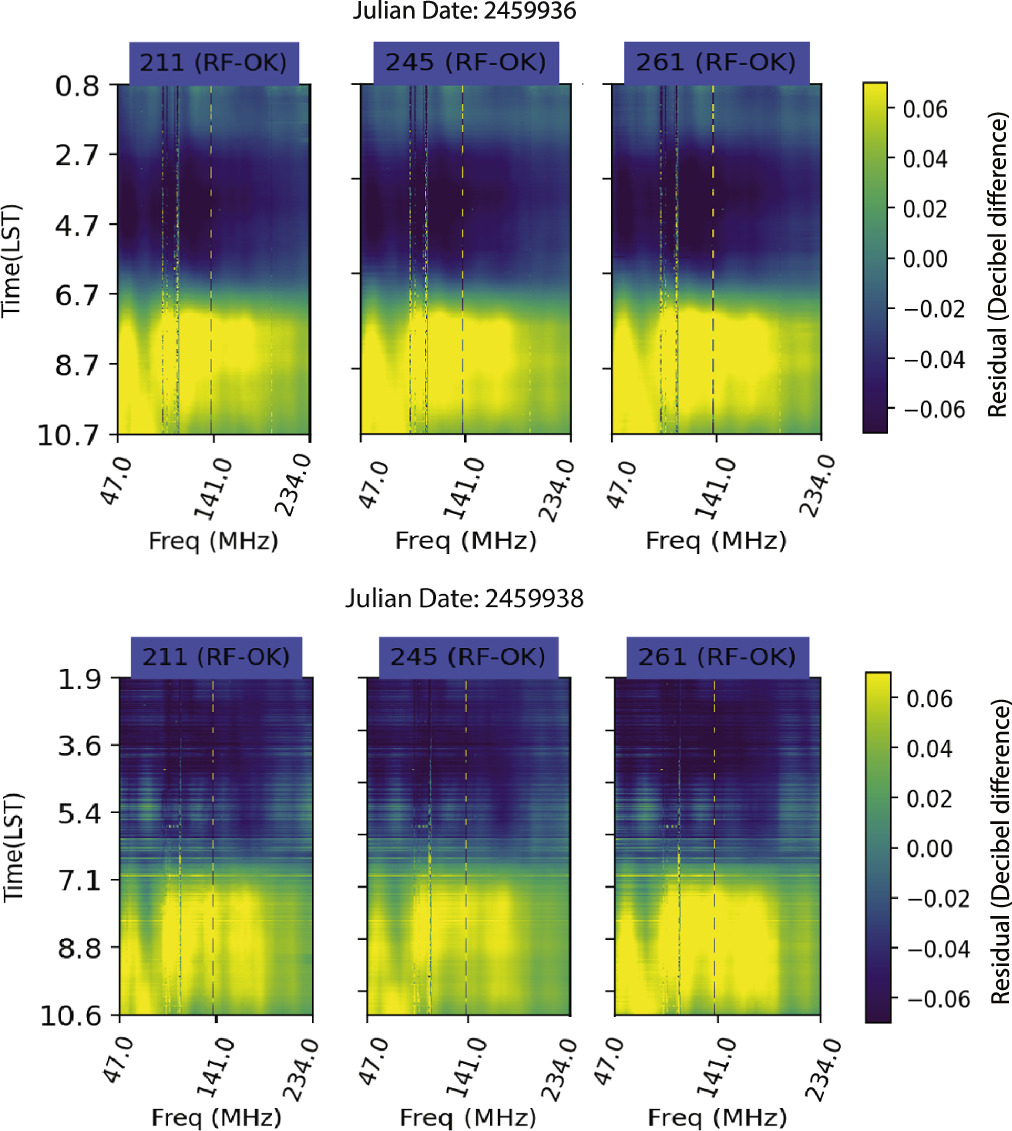

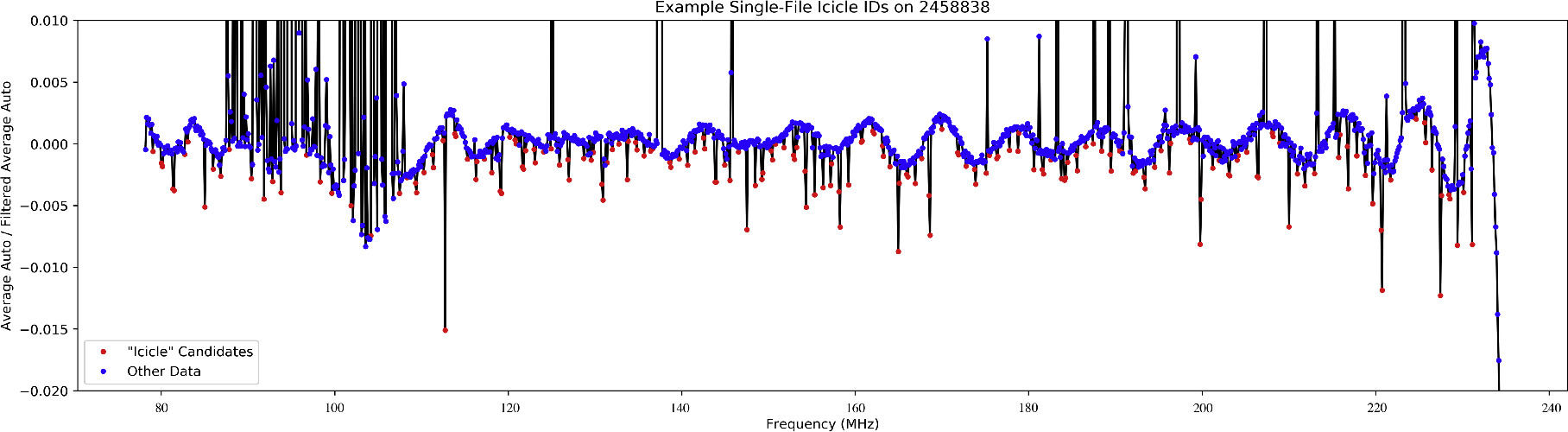

5.2. Environmental RF Interference

One of the most confounding systematics for radio instruments is radio frequency interference (RFI). Interference can come from a large variety of sources, both man-made and natural. The Karoo site was chosen for its relatively quiet RFI environment. However, with satellites, planes, meteors, and other modes of over-the-horizon propagation, no place on Earth will be entirely free of artificial RFI. Furthermore, there are sources that cannot be mitigated by moving away from them, such as natural phenomena and self-interference.

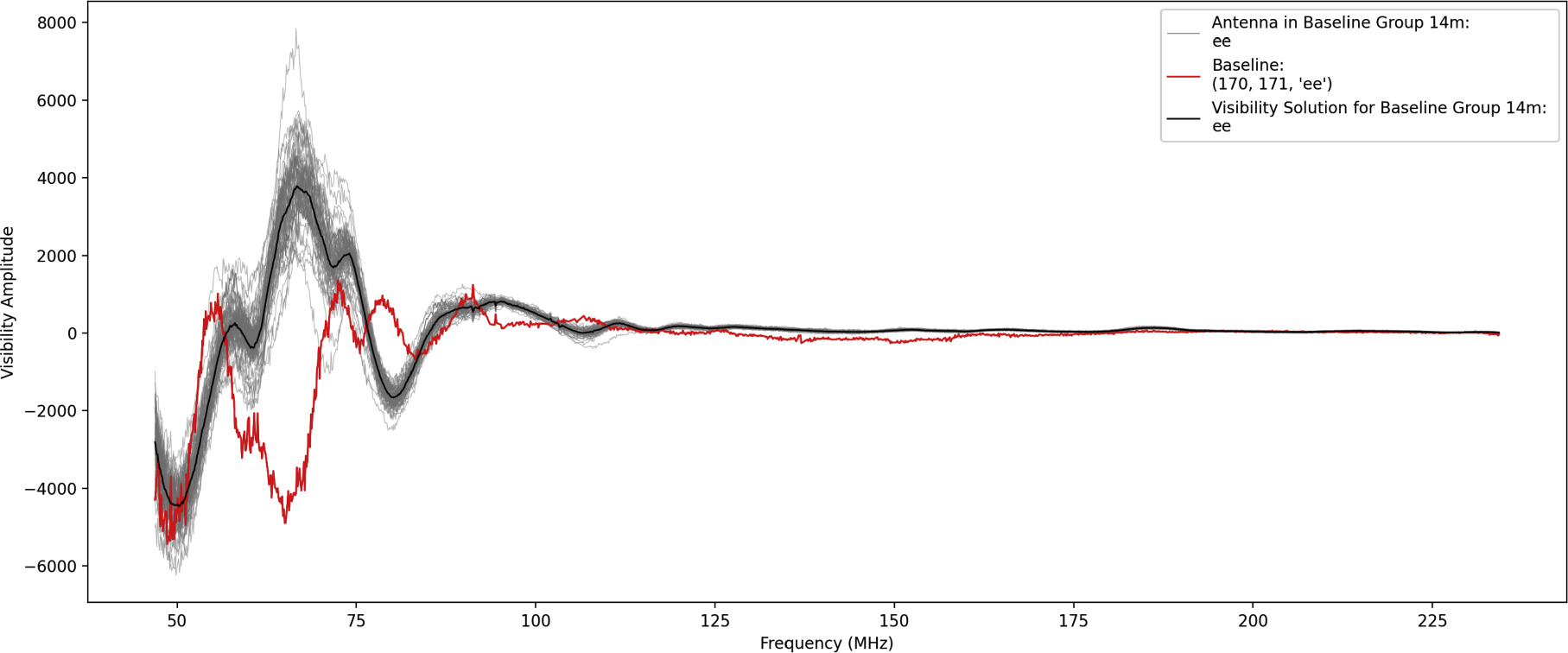

For human-made transmitters, the duty cycle can be quite high, causing the corresponding channels to be flagged in a very high percentage of the data. Fortunately, many of the most egregious transmitters are well documented and can be identified easily in the data. Typical emitters seen at HERA include the ORBCOMM satellite constellation at 137 MHz and FM radio at 88–110 MHz. At other times, intermittent transmission path changes or use patterns can cause interference to appear sporadically.
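Because such emitters occupy documented frequencies, one simple mitigation is a static channel mask. The sketch below is illustrative rather than a description of the HERA flagging pipeline: the FM range and the ORBCOMM frequency are taken from the text, while the 1 MHz half-width around 137 MHz and the function and variable names are assumptions.

```python
import numpy as np

# Band edges in MHz. The FM range and the 137 MHz ORBCOMM frequency come from
# the text; the +/- 1 MHz width around 137 MHz is an illustrative assumption.
KNOWN_EMITTERS_MHZ = {
    "FM radio": (88.0, 110.0),
    "ORBCOMM": (136.0, 138.0),
}


def static_rfi_mask(freqs_mhz, bands=None):
    """Return a boolean array that is True for channels inside a known band."""
    if bands is None:
        bands = KNOWN_EMITTERS_MHZ
    freqs_mhz = np.asarray(freqs_mhz, dtype=float)
    mask = np.zeros(freqs_mhz.shape, dtype=bool)
    for low, high in bands.values():
        mask |= (freqs_mhz >= low) & (freqs_mhz <= high)
    return mask
```

A static mask of this kind only addresses persistent, documented emitters; sporadic interference still requires data-driven detection.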