Abstract

Atomistic modelling of magnetic materials provides unprecedented detail about the underlying physical processes that govern their macroscopic properties, and allows the simulation of complex effects such as surface anisotropy, ultrafast laser-induced spin dynamics, exchange bias, and microstructural effects. Here we present the key methods used in atomistic spin models which are then applied to a range of magnetic problems. We detail the parallelization strategies used which enable the routine simulation of extended systems with full atomistic resolution.

Export citation and abstract BibTeX RIS

Content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

This article was made open access on 17 December 2014

1. Introduction

Atomistic models of magnetic materials, where the atoms are treated as possessing a local magnetic moment, originated with Ising in 1925 as the first model of the phase transition in a ferromagnet [1]. The Ising model has spin-up and spin-down only states, and is amenable to analytical treatment, at least in two dimensions. Although it is still extensively used in the study of phase transitions, it is limited in applicability to magnetic materials and cannot be used for dynamic simulations. A natural extension of the Ising model is to allow the atomic spin to vary freely in 3D space [2, 3] which yields the classical Heisenberg model, where quantum mechanical effects on the atomic spins are neglected [2]. Monte Carlo simulations of the classical Heisenberg model allowed the study of phase transitions, surface and finite size effects in simple magnetic systems. The study of dynamic phenomena however was intrinsically limited due to the use of Monte Carlo methods until the development of dynamic [4, 5] and stochastic Landau–Lifshitz–Gilbert atomistic spin models [6–8].

Today atomistic simulation of magnetic materials has become an essential tool in understanding the processes governing the complex behaviour of magnetic nanomaterials, including ultrafast laser-induced magnetization dynamics [9–11], exchange bias in core-shell nanoparticles [12–14] and multilayers [5, 15], surface anisotropy in magnetic nanoparticles [16, 17], microstructural effects [18–20], spin valves [21] and spin torque [22], temperature effects and properties [23–26] and magnetic recording media [27, 28]. A significant capability of the atomistic spin model is to bridge the gap between ab initio electronic structure calculations and micromagnetics by using a multiscale model [29–32]. Such a model is able to calculate effective parameters for larger scale micromagnetic simulations [33], such as anisotropies, and exchange constants [34]. The atomistic model is also able to interface directly with micromagnetic simulations to tackle extended systems by calculating interface properties atomistically while treating the bulk of the material with a micromagnetic discretization [35, 36]. Despite the broad applicability and importance of atomistic models, no easy-to-use and open-source software packages are presently available to researchers, unlike the mesoscopic micromagnetic approaches where several packages are currently available [37–39].

Today most magnetic modelling is performed using numerical micromagnetics in finite difference [37] and finite element [38, 39] flavours. The theoretical basis of micromagnetics is that the atomic dipoles which make up the magnetic material can be approximated as a continuous vector field where, due to the exchange interaction, the atomic dipoles in a small finite volume are perfectly aligned. Micromagnetics has proven to be an essential tool in understanding a range of complex magnetic effects [40–42] but due to the rapid pace of technological development in magnetic materials the continuum approximation at its heart precludes its application to many problems of interest at the beginning of the 21st century, such as heat assisted magnetic recording [43], ultrafast laser-induced demagnetization [44, 45], exchange bias in spin valves [46], surface and interface anisotropy [47, 48] and high anisotropy materials for ultrahigh density recording media such as FePt [49]. The common theme to these problems is a sub-nanometre spatial variation in the magnetization caused by high temperatures, atomic level ordering (anti- and ferrimagnets), or atomic surface and interface effects. To tackle these problems requires a formalism to take account of the detailed atomic structure to express its impact on the macroscopic behaviour of a nano particle, grain or complete device.

Some, but not all, of these problems can adequately be tackled by next-generation micromagnetic approaches utilizing the Landau–Lifshitz–Bloch equation [50–52], which is based on a physically robust treatment of the coupling of a macrospin to a heat bath, allowing the study of high temperature processes [53], ultrafast demagnetization [54, 55] and switching [56]. However, true atomic scale variations of the magnetization, as apparent in antiferromagnets, surfaces and interfaces, still require an atomistic approach. Additionally with the decreasing size of magnetic elements, finite size effects begin to play in increasing role in the physical properties of magnetic systems [57].

In this article we present an overview of the common computational methods utilized in atomistic spin simulations and details of their implementation in the open-source VAMPIRE software package3. Testing of the code is an essential part of ensuring the accuracy of the model and so we also detail important tests of the various parts of the model and compare them to analytic results while exploring some interesting physics of small magnetic systems.

VAMPIRE is designed specifically with these problems in mind, and can easily simulate nanoparticles, multilayer films, interfacial mixing, surface anisotropy and roughness, core-shell systems, granular media and lithographically defined patterns, all with fully atomistic resolution. In addition, truly realistic systems predicted by Molecular Dynamics simulations [19, 20, 59] can also be used giving unprecedented detail about the relationships between shape and structure and the magnetic properties of nanoparticles. In addition to these physical features VAMPIRE also utilizes the computing power of multiprocessor machines through parallelization, allowing systems of practical interest to be simulated routinely, and large-scale problems on the 100+ nm length scale to be simulated on computing clusters. Further details of the VAMPIRE code and its architecture are presented in appendix

2. The atomistic spin model

The physical basis of the atomistic spin model is the localization of unpaired electrons to atomic sites, leading to an effective local atomistic magnetic moment. The degree of localization of electrons has historically been a contentious issue in 3d metals [60], due to the magnetism originating in the outer electrons which are notionally loosely bound to the atoms. Ab initio calculations of the electron density [61] show that in reality, even in 'itinerant' ferromagnets, the spin polarization is well-localized to the atomic sites. Essentially this suggests that the bonding electrons are unpolarized, and after taking into account the bonding charge the remaining d-electrons form a well-defined effective localized moment on the atomic sites.

Magnetic systems are fundamentally quantum mechanical in nature since the electron energy levels are quantized, the exchange interaction is a purely quantum mechanical effect, and other important effects such as magnetocrystalline anisotropy arise from relativistic interactions of electronic orbitals with the lattice, which are the province of ab initio models. In addition to these properties at the electronic level, the properties of magnetic materials are heavily influenced by thermal effects which are typically difficult to incorporate into standard density functional theory approaches. Therefore models of magnetic materials should combine the quantum mechanical properties with a robust thermodynamic formalism. The simplest model of magnetism using this approach is the Ising model [1], which allows the atomic moments one of two allowed states along a fixed quantization axis. Although useful as a descriptive system, the forced quantization is equivalent to infinite anisotropy, limiting the applicability of the Ising model in relation to real materials. In the classical description the direction of the atomic moment is a continuous variable in 3D space allowing for finite anisotropies and dynamic calculations. In some sense the classical spin model is analogous to Molecular Dynamics, where the energetics of the system are determined primarily from quantum mechanics, but the time evolution and thermodynamic properties are treated classically.

2.1. The classical spin Hamiltonian

The extended Heisenberg spin model encapsulates the essential physics of a magnetic material at the atomic level, where the energetics of a system of interacting atomic moments is given by a spin Hamiltonian (which neglects non-magnetic effects such the as the Coulomb term). The spin Hamiltonian  typically has the form:

typically has the form:

denoting terms for the exchange interaction, magnetic anisotropy, and externally applied magnetic fields respectively.

The dominant term in the spin Hamiltonian is the Heisenberg exchange energy, which arises due to the symmetry of the electron wavefunction and the Pauli exclusion principle [60] which governs the orientation of electronic spins in overlapping electron orbitals. Due to its electrostatic origin, the associated energies of the exchange interaction are around 1–2 eV, which is typically up to 1000 times larger than the next largest contribution and gives rise to magnetic ordering temperatures in the range 300–1300 K. The exchange energy for a system of interacting atomic moments is given by the expression

where Jij is the exchange interaction between atomic sites i and j, Si is a unit vector denoting the local spin moment direction and Sj is the spin moment direction of neighbouring atoms. The unit vectors are taken from the actual atomic moment  and given by

and given by  . It is important to note here the significance of the sign of Jij. For ferromagnetic materials where neighbouring spins align in parallel, Jij > 0, and for antiferromagnetic materials where the spins prefer to align anti-parallel Jij < 0. Due to the strong distance dependence of the exchange interaction, the sum in equation (2) is often truncated to include nearest neighbours only. This significantly reduces the computational effort while being a good approximation for many materials of interest. In reality the exchange interaction can extend to several atomic spacings [29, 30], representing hundreds of pairwise interactions.

. It is important to note here the significance of the sign of Jij. For ferromagnetic materials where neighbouring spins align in parallel, Jij > 0, and for antiferromagnetic materials where the spins prefer to align anti-parallel Jij < 0. Due to the strong distance dependence of the exchange interaction, the sum in equation (2) is often truncated to include nearest neighbours only. This significantly reduces the computational effort while being a good approximation for many materials of interest. In reality the exchange interaction can extend to several atomic spacings [29, 30], representing hundreds of pairwise interactions.

In the simplest case the exchange interaction Jij is isotropic, meaning that the exchange energy of two spins depends only on their relative orientation, not their direction. In more complex materials, the exchange interaction forms a tensor with components:

which is capable of describing anisotropic exchange interactions, such as two-ion anisotropy [29] and the Dzyaloshinskii–Moriya interaction (off-diagonal components of the exchange tensor). In the case of tensorial exchange  , the exchange energy is given by the product:

, the exchange energy is given by the product:

Obtaining the components of the exchange tensor may be done phenomenologically, or via ab initio methods such as the relativistic torque method [62–65] or the spin-cluster expansion technique [30, 66–68]. The above expressions for the exchange energy also exclude higher-order exchange interactions such as three-spin and four-spin terms. In most materials the higher-order exchange terms are significantly smaller than the leading term and can safely be neglected.

While the exchange energy gives rise to magnetic ordering at the atomic level, the thermal stability of a magnetic material is dominated by the magnetic anisotropy, or preference for the atomic moments to align along a preferred spatial direction. There are several physical effects which give rise to anisotropy, but the most important is the magnetocrystalline anisotropy (namely the preference for spin moments to align with particular crystallographic axes) arising from the interaction of atomic electron orbitals with the local crystal environment [69, 70].

The simplest form of anisotropy is of the single-ion uniaxial type, where the magnetic moments prefer to align along a single axis,  , often called the easy axis and is an interaction confined to the local moment. Uniaxial anisotropy is most commonly found in particles with elongated shape (shape anisotropy), or where the crystal lattice is distorted along a single axis as in materials such as hexagonal Cobalt and L10 ordered FePt. The uniaxial single-ion anisotropy energy is given by the expression:

, often called the easy axis and is an interaction confined to the local moment. Uniaxial anisotropy is most commonly found in particles with elongated shape (shape anisotropy), or where the crystal lattice is distorted along a single axis as in materials such as hexagonal Cobalt and L10 ordered FePt. The uniaxial single-ion anisotropy energy is given by the expression:

where ku is the anisotropy energy per atom. Materials with a cubic crystal structure, such as iron and nickel, have a different form of anisotropy known as cubic anisotropy. Cubic anisotropy is generally much weaker than uniaxial anisotropy, and has three principal directions which energetically are easy, hard and very hard magnetization directions respectively. Cubic anisotropy is described by the expression:

where kc is the cubic anisotropy energy per atom, and Sx, Sy, and Sz are the x, y, and z components of the spin moment S respectively.

Most magnetic problems also involve interactions between the system and external applied fields, denoted as Happ. External fields can arise in many ways, for example a nearby magnetic material, or as an effective field from an electric current. In all cases the applied field energy is simply given by:

2.2. A note on magnetic units

The subject of magnetic units is controversial due to the existence of multiple competing standards and historical origins [60]. Starting from the atomic level however, the dimensionality of units is relatively transparent. Atomic moments are usually accounted for in multiples of the Bohr magneton (μB), the magnetic moment of an isolated electron, with units of J T−1. Given a number of atoms of moment μs in a volume, the moment per unit volume is naturally in units of J T m−3, which is identical to the SI unit of A m−1. However, the dimensionality (moment per unit volume) of the unit A m−1 is not as obvious as J T−1m−3, and so the latter form is used herein.

Applied magnetic fields are hence defined in Tesla, which comes naturally from the derivative of the spin Hamiltonian with respect to the local moment. The unit of Tesla for applied field is also beneficial for hysteresis loops, since the area enclosed a typical M–H loop is then given as an energy density (J m−3). A list of key magnetic parameters and variables and their units are shown in table 1.

Table 1. Table of key variables and their units.

| Variable | Symbol | Unit | |

|---|---|---|---|

| Atomic magnetic moment | μs | Joules/Tesla | (J T−1) |

| Unit cell size | a | Angstroms | (Å) |

| Exchange energy | Jij | Joules/link | (J) |

| Anisotropy energy | ku | Joules/atom | (J) |

| Applied field | H | Tesla | (T) |

| Temperature | T | Kelvin | (K) |

| Time | t | Seconds | (s) |

| Parameter | Symbol | Value | |

| Bohr magneton | μB | 9.2740 × 10−24 J T−1 | |

| Gyromagnetic ratio | γ | 1.76 × 1011 T−1 s−1 | |

| Permeability of free space | μ0 | 4π × 10−7 T2 J−1 m3 | |

| Boltzmann constant | kB | 1.3807 × 10−23 J K−1 | |

3. System parameterization and generation

Unlike micromagnetic simulations where the magnetic system can be partitioned using either a finite difference or finite element discretization, atomistic simulations generally require some a priori knowledge of atomic positions. Most simple magnetic materials such as Fe, Co or Ni form regular crystals, while more complex systems such as oxides, antiferromagnets and Heusler alloys possess correspondingly complex atomic structures. For ferromagnetic metals, the details of atomic positions are generally less important due to the strong parallel orientation of moments, and so they can often (but not always) be represented using a simple cubic discretization. In contrast, the properties of ferrimagnetic and antiferromagnetic materials are inherently tied to the atomic positions due to frustration and exchange interactions, and so simulation of these materials must incorporate details of the atomic structure.

In addition to the atomic structure of the material, it is also necessary to parameterize the terms of the spin Hamiltonian given by equation (1), principally including exchange and anisotropy values but also with other terms. There are generally two ways in which this may be done: using experimentally determined properties or with a multiscale approach using ab initio density functional theory calculations as input to the spin model.

3.1. Atomistic parameters from ab initio calculations

Ab initio density functional theory (DFT) calculations utilize the Hohenberg–Kohn–Sham theory [71, 72] which states that the total energy E of a system can be written solely in terms the electron density, ρ. Thus, if the electron density is known then the physical properties of the system can be found. In practice, the both electron density and the spin density are used as fundamental quantities in the total energy expression for spin-polarized systems [73]. In many implementations DFT-based methods only consider the outer electrons of a system, since the inner electrons play a minimal role in the bonding and also partially screen the effect of the nuclear core. These effects are approximated by a pseudopotential which determines the potential felt by the valence electrons. In all-electron methods, however, the core electron density is also relaxed. By energy minimization, DFT enables the calculation of a wide range of properties, including lattice constants, and in the case of magnetic materials localized spin moments, magnetic ground state and the effective magnetocrystalline anisotropy. Standard software packages such as VASP [74], CASTEP [75, 76] and SIESTA [77] make such calculations readily accessible. At present determining site resolved properties such as anisotropy constants and pairwise exchange interactions is more involved and requires ab initio Green's functions techniques such as the fully relativistic Korringa–Kohn–Rostoker method [78, 79] or the LMTO method [80, 81] in conjunction with the magnetic force theorem [62]. An alternative approach for the calculation of exchange parameters is the utilization of the generalized Bloch theorem for spin-spiral states in magnetic systems [82] together with a Fourier transformation of k-dependent spin-spiral energies [83, 84].

A number of studies have determined atomic magnetic properties from first principles calculations by direct mapping onto a spin model, including the principal magnetic elements Co, Ni and Fe [81], metallic alloys including FePt [29], IrMn [31], oxides [85] and spin glasses [86], and also bilayer systems such as Fe/FePt [87]. Such calculations give detailed insight into microscopic magnetic properties, including atomic moments, long-ranged exchange interactions, magnetocrystalline anisotropies (including surface and two-ion interactions) and other details not readily available from phenomenological theories. Combined with atomistic models it is possible to determine macroscopic properties such as the Curie temperature, temperature-dependent anisotropies, and magnetic ground states, often in excellent agreement with experiment. However, the computational complexity of DFT calculations also means that the systems which can be simulated with this multi scale approach are often limited to small clusters, perfect bulk systems and 2D periodic systems, while real materials of course often contain a plethora of defects disrupting the long range order. Some studies have also investigated the effects of disorder in magnetic systems combined with a spin model mapping, such as dilute magnetic semiconductors [88] and metallic alloys [89].

Magnetic properties calculated at the electronic level have a synergy with atomistic spin models, since the electronic properties can often be mapped onto a Heisenberg spin model with effective local moments. This multiscale electronic and atomistic approach avoids the continuum approximations of micromagnetics and treats magnetic materials at the natural atomic scale.

3.2. Atomistic parameters from macroscopic properties

The alternative approach to multiscale atomistic/density-functional-theory simulations is to derive the parameters from experimentally determined values. This has the advantage of speed and lower complexity, whilst foregoing microscopic details of the exchange interactions or anisotropies. Another key advantage of generic parameters is the possibility of parametric studies, where parameters are varied explicitly to determine their importance for the macroscopic properties of the system, such as has been done for studies of surface anisotropy [17] and exchange bias [13].

Unlike micromagnetic simulations, the fundamental thermodynamic approach of the atomistic model means that all parameters must be determined for zero temperature. The spin fluctuations then determine the intrinsic temperature dependence of the effective parameters which are usually put into micromagnetic simulations as parameters. Fortunately determination of the atomic moments, exchange constants and anisotropies from experimental values is relatively straightforward for most systems.

3.2.1. Atomic spin moment

The atomic spin moment μs is related to the saturation magnetization simply by:

where Ms is the saturation magnetization at 0 K in J T−1m−3, a is the unit cell size (m), and nat is the number of atoms per unit cell. We also note the usual convention of expressing atomic moments in multiples or fractions of the Bohr magneton, μB owing to their electronic origin. Taking BCC iron as an example, the zero temperature saturation magnetization is 1.75 MA m−1 [90], unit cell size of a = 2.866 Å, this gives an atomic moment of 2.22 μB/atom.

3.2.2. Exchange energy

For a generic atomistic model with z nearest neighbour interactions, the exchange constant is given by the mean-field expression:

where kB is the Boltzmann constant and Tc is the Curie temperature z is the number of nearest neighbours.  is a correction factor from the usual mean-field expression which arises due to spin waves in the 3D Heisenberg model [91]. Because of this

is a correction factor from the usual mean-field expression which arises due to spin waves in the 3D Heisenberg model [91]. Because of this  is also dependent on the crystal structure and coordination number, and so the calculated Tc will vary slightly according to the specifics of the system. For Cobalt with a Tc of 1388 K and assuming a hexagonal crystal structure with z = 12, this gives a nearest neighbour exchange interaction Jij = 6.064 × 10−21 J/link.

is also dependent on the crystal structure and coordination number, and so the calculated Tc will vary slightly according to the specifics of the system. For Cobalt with a Tc of 1388 K and assuming a hexagonal crystal structure with z = 12, this gives a nearest neighbour exchange interaction Jij = 6.064 × 10−21 J/link.

3.2.3. Anisotropy energy

The atomistic magnetocrystalline anisotropy ku is derived from the macroscopic anisotropy constant Ku by the expression:

where Ku in given in J m−3. In addition to the atomistic parameters, it is also worth noting the analogous expressions for the anisotropy field Ha for a single domain particle:

where symbols have their usual meaning. At this point it is worth mentioning that the measured anisotropy is a free energy difference. While the intrinsic ku remains (to a first approximation) temperature independent, at a non-zero temperature the free energy in the easy/hard directions is increased/decreased due to the magnetization fluctuations. Thus the macroscopic anisotropy value decreases with increasing temperature, vanishing at Tc. The thermodynamic basis of atomistic models makes them highly suitable for the investigation of such phenomena, as we show later. Applying the above, parameters for the key ferromagnetic elements are given in table 2.

3.2.4. Ferrimagnets and antiferromagnets

In the case of ferrimagnets and antiferromagnets the above methods for anisotropy and moment determination do not work due to the lack of macroscopic measurements, although the estimated exchange energies apply equally well to the Néel temperature provided no magnetic frustration (due to lattice symmetry) is present. In general, other theoretical calculations or formalisms are required to determine parameters, such as mean-field approaches [9] or density functional theory calculations [31].

Table 2. Table of derived constants for the ferromagnetic elements Fe, Co, Ni and Gd.

| Fe | Co | Ni | Gd | Unit | |

|---|---|---|---|---|---|

| Crystal structure | bcc | hcp | fcc | hcp | — |

| Unit cell size a | 2.866 | 2.507 | 3.524 | 3.636 | Å |

| Interatomic spacing rij | 2.480 | 2.507 | 2.492 | 3.636 | Å |

| Coordination number z | 8 | 12 | 12 | 12 | — |

| Curie temperature Tc | 1043 | 1388 | 631 | 293 | K |

Spin-wave MF correction [91, 92]  |

0.766 | 0.790 | 0.790 | 0.790 | — |

| Atomic spin moment μs | 2.22 | 1.72 | 0.606 | 7.63 | μB |

| Exchange energy Jij | 7.050 × 10−21 | 6.064 × 10−21 | 2.757 × 10−21 | 1.280 × 10−21 | J/link |

| Anisotropy energy [93] k | 5.65 × 10−25 | 6.69 × 10−24 | 5.47 × 10−26 | 5.93 × 10−24 | J/atom |

3.3. Atomistic system generation

In addition to determining the parameters of the spin Hamiltonian, an essential part of the atomistic model is the determination of the nuclear, or atomic, positions in the system. In the multiscale approach utilizing ab initio parameterization of the system, the spin Hamiltonian is intrinsically tied to the atomic positions. The additional detail offered by first principles calculations is highly desirable even for perfect crystals and atomically sharp interfaces, however the computational complexity of the calculations limits the ability to parameterize a spin Hamiltonian for systems of extended defects over 10 nm+ length scales, including physical effects such as vacancies, impurities, dislocations and even amorphous materials.

For systems modelled using the nearest neighbour approximation, the atomic structures are much less restricted, allowing for simulations of material defects such as interface roughness [13] and intermixing [21], magnetic multilayers [94], disordered magnetic alloys [9], surface [17] and finite size effects [57]. VAMPIRE includes extensive functionality to generate such systems, the details of which are included in appendix

4. Integration methods

Although the spin Hamiltonian describes the energetics of the magnetic system, it provides no information regarding its time evolution, thermal fluctuations, or the ability to determine the ground state for the system. In the following the commonly utilized integration methods for atomistic spin models are introduced.

4.0.1. Spin dynamics

The first understanding of spin dynamics came from ferromagnetic resonance experiments, where the time-dependent behaviour of a magnetic materials is described by the equation derived by Landau and Lifshitz [98], and in the modern form given by:

where m is a unit vector describing the direction of the sample magnetization, H is the effective applied field acting on the sample, γ is the gyromagnetic ratio and α is a phenomenological damping constant which is a property of the material. The physical origin of the Landau–Lifshitz (LL) equation arises due to two distinct physical effects. The precession of the magnetization (first term in equation (12)) arises due to the quantum mechanical interaction of an atomic spin with an applied field. The relaxation of the magnetization (second term in equation (12)) is an elementary formulation of energy transfer representing the coupling of the magnetization to a heat bath which aligns the magnetization along the field direction with a characteristic coupling strength determined by α. In the LL equation the relaxation rate of the magnetization to the field direction is a linear function of the damping parameter, which was shown by Gilbert to yield incorrect dynamics for materials with high damping [99]. Subsequently Gilbert introduced critical damping, with a maximum effective damping for α = 1, to arrive at the Landau–Lifshitz–Gilbert (LLG) equation. Although initially derived to describe the dynamics of the macroscopic magnetization of a sample, the LLG is the standard equation of motion used in numerical micromagnetics, describing the dynamics of small magnetic elements.

The same equation of motion can also be applied at the atomistic level. The precession term arises quantum mechanically for atomic spins and the relaxation term now describes direct angular momentum transfer between the spins and the heat bath, which includes contributions from the lattice [100] and the electrons [101]. A distinction between the macroscopic LLG and the atomistic LLG now appears in terms of the effects included within the damping parameter. In the macroscopic LLG, α includes all contributions, both intrinsic (such as spin–lattice and spin–electron interactions) and extrinsic (spin-spin interactions arising from demagnetization fields, surface defects [102], doping [103] and temperature [50]), while the atomistic LLG only includes the local intrinsic contributions. To distinguish the different definitions of damping we therefore introduce a microscopic damping parameter λ. Although the form of the LLG is identical for atomistic and macroscopic length scales, the microstructural detail in the atomistic model allows for calculations of the effective damping including extrinsic effects, such as rare-earth doping [103]. Including a microscopic damping λ the atomistic Landau–Lifshitz–Gilbert equation is given by

where Si is a unit vector representing the direction of the magnetic spin moment of site i, γ is the gyromagnetic ratio and  is the net magnetic field on each spin. The atomistic LLG equation describes the interaction of an atomic spin moment i with an effective magnetic field, which is obtained from the negative first derivative of the complete spin Hamiltonian, such that:

is the net magnetic field on each spin. The atomistic LLG equation describes the interaction of an atomic spin moment i with an effective magnetic field, which is obtained from the negative first derivative of the complete spin Hamiltonian, such that:

where μs is the local spin moment. The inclusion of the spin moment within the effective field is significant, in that the field is then expressed in units of Tesla, given a Hamiltonian in Joules. Given typical energies in the Hamiltonian of 10 μeV –100 meV range. This gives fields typically in the range 0.1–1000 T, given a spin moment of the same order as the Bohr magneton (μB).

4.0.2. Langevin dynamics

In its standard form the LLG equation is strictly only applicable to simulations at zero temperature. Thermal effects cause thermodynamic fluctuations of the spin moments which at sufficiently high temperatures are stronger than the exchange interaction, giving rise to the ferromagnetic-paramagnetic transition. The effects of temperature can be taken into account by using Langevin Dynamics, an approach developed by Brown [104]. The basic idea behind Langevin Dynamics is to assume that the thermal fluctuations on each atomic site can be represented by a Gaussian white noise term. As the temperature is increased, the width of the Gaussian distribution increases, thus representing stronger thermal fluctuations. The established Langevin Dynamics method is widely used for spin dynamics simulations and incorporates an effective thermal field into the LLG equation to simulate thermal effects [105–107]. The thermal fluctuations are represented by a Gaussian distribution  in three dimensions with a mean of zero. At each time step the instantaneous thermal field on each spin i is given by:

in three dimensions with a mean of zero. At each time step the instantaneous thermal field on each spin i is given by:

where kB is the Boltzmann constant, T is the system temperature, λ is the Gilbert damping parameter, γ is the absolute value of the gyromagnetic ratio, μs is the magnitude of the atomic magnetic moment, and Δt is the integration time step. The effective field for application in the LLG equation with Langevin Dynamics then reads:

Given that for each time step three Gaussian distributed random numbers are required for every spin, efficient generation of such numbers is essential. VAMPIRE makes use the Mersenne Twister [108] uniform random number generator and the Ziggurat method [109] for generating the Gaussian distribution.

It is useful at the this point to address the applicability of the atomistic LLG equation to slow and fast problems respectively. In reality the thermal and magnetic fluctuations are correlated at the atomic level, arising from the dynamic interactions between the atoms and lattice/electron system. These correlations may be important in terms of the thermal fluctuations experienced by the atomistic spins. In the conventional Langevin dynamics approach described above, the noise term is completely uncorrelated in time and space. For short timescales however, the thermal fluctuations are correlated in time, and so the noise is coloured [110]. The effect of the coloured noise is to lessen the effect of sudden temperature changes on the magnetization dynamics. However, the existence of ultrafast magnetization dynamics [11, 44], and that it is driven by a thermal rather than quantum mechanical process [111], requires that the effective correlation time is short, with an upper bound of around 1 fs. Given that the correlation time is close to the integration timestep, the applicability of the LLG to problems with timescales ≥ 1 fs is sound. There will be a point however where the LLG is no longer valid, where direct simulation of the microscopic damping mechanisms will be necessary. Progress has been made in linking molecular dynamics and spin models [100, 112, 113] which enables the simulation of spin–lattice interactions, which is particularly relevant for slow problems, such as conventional magnetic recording where switching occurs over nanosecond timescales. However, it is also essential to consider spin–electron effects [101, 114] necessary for ultrafast demagnetization processes, although the physical origins are still currently debated [115].

4.0.3. Time Integration of the LLG equation

In order to determine the time evolution of a system of spins, it is necessary to solve the stochastic LLG equation, as given by equations (13) and (16), numerically. The choice of solver is limited due to the stochastic nature of the equations. Specifically, it is necessary to ensure convergence to the Stratonovich solution. This has been considered in detail by Garcia-Palacios [105], but the essential requirement [116] is that the solver enforces the conservation of the magnitude of the spin, either implicitly or by renormalization. The most primitive integration scheme uses Euler's method, which assumes a linear change in the spin direction in a single discretized time step, Δt. An improved integration scheme, known as the Heun method [105] is commonly used, which allows the use of larger time steps because of its use of a predictor–corrector algorithm. Other more advanced integration schemes have been suggested, such as the midpoint method [117] and modified predictor–corrector midpoint schemes [103, 118]. The principal advantage of the midpoint scheme is that the length of the spin vector is preserved during the integration which allows larger time steps to be used. However for the midpoint scheme the significant increase in computational complexity outweighs the benefits of larger time steps [118]. Modified predictor–corrector schemes [103, 118] reduce the computational complexity of the midpoint scheme, but with a loss of accuracy, particularly in the time-dependent dynamics [103]. For valid integration of the stochastic LLG equation it is also necessary for the numerical scheme to converge to the Stratonovich solution [105, 119]. Although proven for the midpoint and Heun numerical schemes, the validity of the predictor–corrector midpoint schemes for the stochastic LLG have yet to be confirmed. On balance the Heun scheme, despite its relative simplicity, is sufficiently computationally efficient that it is still the most widely used integration scheme for stochastic magnetization dynamics, and so we proceed to describe its implementation in detail.

In the Heun method, the first (predictor) step calculates the new spin direction,  , for a given effective field

, for a given effective field  by performing a standard Euler integration step, given by:

by performing a standard Euler integration step, given by:

where

The Heun scheme does not preserve the spin length and so it is essential to renormalize the spin unit vector length Si after both the predictor and corrector steps to ensure numerical stability and convergence to the Stratanovich solution. After the first step the effective field must be re-evaluated as the intermediate spin positions have now changed. It should be noted that the random thermal field does not change between steps. The second (corrector) step then uses the predicted spin position and revised effective field  to calculate the final spin position, resulting in a complete integration step given by:

to calculate the final spin position, resulting in a complete integration step given by:

where

The predictor step of the integration is performed on every spin in the system before proceeding to evaluate the corrector step for every spin. This is then repeated many times so that the time evolution of the system can be simulated. Although the Heun scheme was derived specifically for a stochastic equation with multiplicative noise, in the absence of the noise term the Heun method reduces to a standard second order Runge–Kutta method [120]. In order to test the implementation of the Heun integration scheme, it is possible to compare the calculated result with the analytical solution for the LLG. For the simple case of a single spin initially along the x-axis in an applied field along the z-axis, the time evolution [121] is given by:

The expected and simulated time evolution for a single spin with H = 10 T, Δt = 1 × 10−15 s and λ = 0.1,0.05 is plotted in figure 1. Superficially the simulated and expected time evolution agree very well, with errors around 10−6. The error gives a characteristic trace the size and shape of which is indicative of a correct implementation of the Heun integration scheme.

Figure 1. Time evolution of a single isolated spin in an applied field of 10 T and time step of 1 fs. Magnetization traces (a) and (c) show relaxation of the magnetization to the z-direction and precession of the x component (the y-component is omitted for clarity) for damping constants λ = 0.1 and λ = 0.05 respectively. The points are the result of direction integration of the LLG and the lines are the analytical solution plotted according to equation (21). Panels (b) and (d) show the corresponding error traces (difference between the expected and calculated spin components) for the two damping constants for (a) and (c) respectively. For λ = 0.1 the error is below 10−6, while for lower damping the numerical error increases significantly due to the increased number of precessions, highlighting the damping dependence of the integration time step.

Download figure:

Standard image High-resolution imageIdeally one would like to use the largest time step possible so as to simulate systems for the longest time. For micromagnetic simulations at zero temperature, the minimum time step is a well defined quantity since the largest field (usually the exchange term) essentially defines the precession frequency. However, for atomistic simulations using the stochastic LLG equation with Langevin dynamics, the effective field becomes temperature dependent. The consequence of this is that for atomistic models the most difficult region to integrate is in the immediate vicinity of the Curie point. Errors in the integration of the system will be apparent from a non-converged value for the average magnetization. This gives a relatively simple case which can then be used to test the stability of integration schemes for the stochastic LLG model. A plot of the mean magnetization as a function of temperature is shown in figure 2 for a representative system consisting of 22 × 22 × 22 unit cells with generic material parameters of FePt with an fcc crystal structure, nearest neighbour exchange interaction of Jij = 3.0 × 10−21 J/link and uniaxial anisotropy of 1.0 × 10−23 J/atom. The system is first equilibrated for 10 ps at each temperature and then the mean magnetization is calculated over a further 10 ps.

Figure 2. Time step dependence of the mean magnetization for different reduced temperatures for the Heun integration scheme. Low (T ≪ Tc) and high (T ≫ Tc) temperatures integrate accurately with a 1fs timestep, but in the vicinity of Tc a timestep of around 10−16 is required for this system.

Download figure:

Standard image High-resolution imageFirst, comparing the effect of temperature on the minimum allowable time step, the data show that for low temperatures reasonably large time steps of 1 × 10−15 give the correct solution of the LLG equations, while near the Curie point (690 K) the deviations from the correct equilibrium value are significant. Consequently for simulations studying high temperature reversal processes time steps of 1 × 10−16 s are necessary. It should be noted that the time steps which can be used are material-dependent—specifically if a material with higher Curie temperature is used then the usable time steps will be correspondingly lower due to the increased exchange field.

From a practical perspective a significant advantage of the spin dynamics method is the ability to parallelize the integration system by domain decomposition, details of which are given in appendix

4.1. Monte Carlo methods

While spin dynamics are particularly useful for obtaining dynamic information about the magnetic properties or reversal processes for a system, they are often not the optimal method for determining the equilibrium properties, for example the temperature-dependent magnetization. The Monte Carlo Metropolis algorithm [122] provides a natural way to simulate temperature effects where dynamics are not required due to the rapid convergence to equilibrium and relative ease of implementation.

The Monte Carlo metropolis algorithm for a classical spin system proceeds as follows. First a random spin i is picked and its initial spin direction Si is changed randomly to a new trial position  , a so-called trial move. The change in energy

, a so-called trial move. The change in energy  between the old and new positions is then evaluated, and the trial move is then accepted with probability

between the old and new positions is then evaluated, and the trial move is then accepted with probability

by comparison with a uniform random number in the range 0–1. Probabilities greater than 1, corresponding with a reduction in energy, are accepted unconditionally. This procedure is then repeated until N trial moves have been attempted, where N is the number of spins in the complete system. Each set of N trial moves comprises a single Monte Carlo step.

The nature of the trial move is important due to two requirements of any Monte Carlo algorithm: ergodicity and reversibility. Ergodicity expresses the requirement that all possible states of the system are accessible, while reversibility requires that the transition probability between two states is invariant, explicitly  . From equation (22) reversibility is obvious since the probability of a spin change depends only on the initial and final energy. Ergodicity is easy to satisfy by moving the selected spin to a random position on the unit sphere, however this has an undesirable consequence at low temperatures since large deviations of spins from the collinear direction are highly improbable due to the strength of the exchange interaction. Thus at low temperatures a series of trial moves on the unit sphere will lead to most moves being rejected. Ideally a move acceptance rate of around 50% is desired, since very high and very low rates require significantly more Monte Carlo steps to reach a state representative of true thermal equilibrium.

. From equation (22) reversibility is obvious since the probability of a spin change depends only on the initial and final energy. Ergodicity is easy to satisfy by moving the selected spin to a random position on the unit sphere, however this has an undesirable consequence at low temperatures since large deviations of spins from the collinear direction are highly improbable due to the strength of the exchange interaction. Thus at low temperatures a series of trial moves on the unit sphere will lead to most moves being rejected. Ideally a move acceptance rate of around 50% is desired, since very high and very low rates require significantly more Monte Carlo steps to reach a state representative of true thermal equilibrium.

One of the most efficient Monte Carlo algorithms for classical spin models was developed by Hinzke and Nowak [123], involving a combinational approach using a mixture of different trial moves. The principal advantage of this method is the efficient sampling of all available phase space while maintaining a reasonable trial move acceptance rate. The Hinzke–Nowak method utilizes three distinct types of move: spin flip, Gaussian and random, as illustrated schematically in figure 3.

Figure 3. Schematic showing the three principal Monte Carlo moves: (a) spin flip; (b) Gaussian; and (c) random.

Download figure:

Standard image High-resolution imageThe spin flip move simply reverses the direction of the spin such that  to explicitly allow the nucleation of a switching event. The spin flip move is identical to a move in Ising spin models. It should be noted that spin flip moves do not by themselves satisfy ergodicity in the classical spin model, since states perpendicular to the initial spin direction are inaccessible. However, when used in combination with other ergodic trial moves this is quite permissible. The Gaussian trial move takes the initial spin direction and moves the spin to a point on the unit sphere in the vicinity of the initial position according to the expression

to explicitly allow the nucleation of a switching event. The spin flip move is identical to a move in Ising spin models. It should be noted that spin flip moves do not by themselves satisfy ergodicity in the classical spin model, since states perpendicular to the initial spin direction are inaccessible. However, when used in combination with other ergodic trial moves this is quite permissible. The Gaussian trial move takes the initial spin direction and moves the spin to a point on the unit sphere in the vicinity of the initial position according to the expression

where  is a Gaussian distributed random number and σg is the width of a cone around the initial spin Si. After generating the trial position

is a Gaussian distributed random number and σg is the width of a cone around the initial spin Si. After generating the trial position  the position is normalized to yield a spin of unit length. The choice of a Gaussian distribution is deliberate since after normalization the trial moves have a uniform sampling over the cone. The width of the cone is generally chosen to be temperature dependent and of the form

the position is normalized to yield a spin of unit length. The choice of a Gaussian distribution is deliberate since after normalization the trial moves have a uniform sampling over the cone. The width of the cone is generally chosen to be temperature dependent and of the form

The Gaussian trial move thus favours small angular changes in the spin direction at low temperatures, giving a good acceptance probability for most temperatures.

The final random trial move picks a random point on the unit sphere according to

which ensures ergodicity for the complete algorithm and ensures efficient sampling of the phase space at high temperatures. For each trial step one of these three trial moves is picked randomly, which in general leads to good algorithmic properties.

To verify that the random sampling and Gaussian trial moves give the expected behaviour, a plot of the calculated trial moves on the unit sphere for the different algorithms is shown in figure 4. The important points are that the random trial move is uniform on the unit sphere, and that the Gaussian trial move is close to the initial spin direction, along the z-axis in this case.

Figure 4. Visualization of Monte Carlo sampling on the unit sphere for (a) random and (b) Gaussian sampling algorithms at T = 10 K. The dots indicate the trial moves. The random algorithm shows a uniform distribution on the unit sphere, and no preferential biasing along the axes. The Gaussian trial moves are clustered around the initial spin position, along the z-axis.

Download figure:

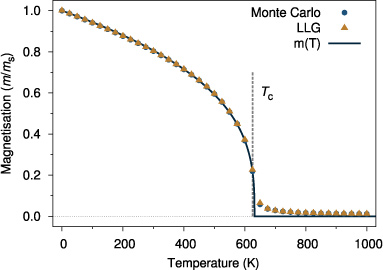

Standard image High-resolution imageAt this point it is worthwhile considering the relative efficiencies of Monte Carlo and spin dynamics for calculating equilibrium properties. Figure 5 shows the simulated temperature-dependent magnetization for a test system using both LLG spin dynamics and Monte Carlo methods. Agreement between the two methods is good, but the spin dynamics simulation takes around twenty times longer to compute due to the requirements of a low time step and slower convergence to equilibrium. However, Monte Carlo algorithms are notoriously difficult to parallelize, and so for larger systems LLG spin dynamic simulations are generally more efficient than Monte Carlo methods.

Figure 5. Comparative simulation of temperature-dependent magnetization for Monte Carlo and LLG simulations. Simulation parameters assume a nearest neighbour exchange of 6.0 × 10−21 J/link with a simple cubic crystal structure, periodic boundary conditions and 21952 atoms. The Monte Carlo simulations use 50 000 equilibration and averaging steps, while the LLG simulations use 5000 000 equilibration and averaging steps with critical damping (λ = 1) and a time step of 0.01 fs. The value of Tc ∼ 625 K calculated from equation (9) is shown by the dashed vertical line. The temperature-dependent magnetization is fitted to the expression  (shown by the solid line) which yields a fitted Tc = 631.82 K and exponent

(shown by the solid line) which yields a fitted Tc = 631.82 K and exponent  .

.

Download figure:

Standard image High-resolution image5. Test simulations

Having outlined the important theoretical and computational methods for the atomistic simulation of magnetic materials, we now proceed to detail the tests we have refined to ensure the correct implementation of the main components of the model. Such tests are particularly helpful to those wishing to implement these methods. Similar tests developed for micromagnetic packages [124] have proven an essential benchmark for the implementation of improved algorithms and codes with different capabilities but the same core functionality.

5.1. Angular variation of the coercivity

Assuming a correct implementation of an integration scheme as described in the previous section, the first test case of interest is the correct implementation of uniaxial magnetic anisotropy. For a single spin in an applied field and at zero temperature, the behaviour of the magnetization is essentially that of a Stoner–Wohlfarth particle, where the angular variation of the coercivity, or reversing field, is well known [125]. This test serves to verify the static solution for the LLG equation by ensuring an easy axis loop gives a coercivity of Hk = 2ku/μs as expected analytically. For this problem the Hamiltonian reads

where ku is the on-site uniaxial anisotropy constant and Happ is the external applied field. The spin is initialized pointing along the applied field direction, and then the LLG equation is solved for the system, until the net torque on the system S ×Heff ≤|10−6| T, essentially a condition of local minimum energy.

The field strength is decreased from saturation in steps of 0.01 H/Hk and solved again until the same condition is reached. A plot of the calculated alignment of the magnetization to the applied field ( ) for different angles from the easy axis is shown in figure 6. The calculated hysteresis curve conforms exactly to the Stoner–Wohlfarth solution.

) for different angles from the easy axis is shown in figure 6. The calculated hysteresis curve conforms exactly to the Stoner–Wohlfarth solution.

Figure 6. Plot of alignment of magnetization with the applied field for different angles of from the easy axis. The 0° and 90° loops were calculated for very small angles from the easy and hard axes respectively, since in the perfectly aligned case the net torque is zero and no change of the spin direction occurs.

Download figure:

Standard image High-resolution image5.2. Boltzmann distribution for a single spin

To quantitatively test the thermal effects in the model and the correct implementation of the stochastic LLG or Monte Carlo integrators, the simplest case is that of the Boltzmann distribution for a single spin with anisotropy (or applied field), where the probability distribution is characteristic of the temperature and the anisotropy energy. The Boltzmann distribution is given by:

where θ is the angle from the easy axis. The spin is initialized along the easy axis direction and the system is allowed to evolve for 108 time steps after equilibration, recording the angle of the spin to the easy axis at each time. Since the anisotropy energy is symmetric along the easy axis, the probability distribution is reflected and summed about π/2, since at low temperatures the spin is confined to the upper well (θ < π/2). Figure 7 shows the normalized probability distribution for three reduced temperatures.

Figure 7. Calculated angular probability distribution for a single spin with anisotropy for different effective temperatures ku/kBT. The lines show the analytic solution given by equation (27).

Download figure:

Standard image High-resolution imageThe agreement between the calculated distributions is excellent, indicating a correct implementation of the stochastic LLG equation.

5.3. Curie temperature

Tests such as the Stoner–Wohlfarth hysteresis or Boltzmann distribution are helpful in verifying the mechanical implementation of an algorithm for a single spin, but interacting systems of spins present a significant challenge in that no analytical solutions exist. Hence it is necessary to calculate some well-defined macroscopic property which ensures the correct implementation of interactions in a system. The Curie temperature Tc of a nanoparticle is primarily determined by the strength of the exchange interaction between spins and so makes an ideal test of the exchange interaction. As discussed previously the bulk Curie temperature is related to the exchange coupling by the mean-field expression given in equation (9). However, for nanoparticles with a reduction in coordination number at the surface and a finite number of spins, the Curie temperature and criticality of the temperature-dependent magnetization will vary significantly with varying size [57].

To investigate the effects of finite size and reduction in surface coordination on the Curie temperature, the equilibrium magnetization for different sizes of truncated octahedron nanoparticles was calculated as a function of temperature. The Hamiltonian for the simulated system is

where Jij = 5.6 × 10−21 J/link, and the crystal structure is face-centred-cubic, which is believed to be representative of Cobalt nanoparticles. Given the relative strength of the exchange interaction, anisotropy generally has a negligible impact on the Curie temperature of a material, and so the omission of anisotropy from the Hamiltonian is purely for simplicity. The system is simulated using the Monte Carlo method with 10 000 equilibration and 20 000 averaging steps. The system is heated sequentially in 10 K steps, with the final state of the previous temperature taken as the starting point of the next temperature to minimize the number of steps required to reach thermal equilibrium. The mean temperature-dependent magnetization for different particle sizes is plotted in figure 8.

Figure 8. Calculated temperature-dependent magnetization and Curie temperature for truncated octahedron nanoparticles with different size. A visualization of a 3 nm diameter particle is inset.

Download figure:

Standard image High-resolution imageFrom equation (9) the expected Curie temperature is 1282 K, which is in agreement with the results for the 10 nm diameter nanoparticle. For smaller particle sizes the magnetic behaviour close to the Curie temperature loses its criticality, making Tc difficult to determine. Traditionally the Curie point is taken as the maximum of the gradient dm/dT [57], however this significantly underestimates the actual temperature at which magnetic order is lost (which is, by definition, the Curie temperature). Other estimates of the Curie point such as the divergence in the susceptibility are probably a better estimate for finite systems, but this is beyond the scope of the present article. Another effect visible for very small particle sizes is the appearance of a magnetization above the Curie point, an effect first reported by Binder [126]. This arises from local moment correlations which exist above Tc. It is an effect only observable in nanoparticles where the system size is close to the magnetic correlation length.

5.4. Demagnetizing fields

For systems larger than the single domain limit [33] and systems which have one dimension significantly different from another, the demagnetizing field can have a dominant effect on the macroscopic magnetic properties. In micromagnetic formalisms implemented in software packages such as OOMMF [37], MAGPAR [38] and NMAG [39], the calculation of the demagnetization fields is calculated accurately due to the routine simulation of large systems where such fields dominate. Due to the long-ranged interaction the calculation of the demagnetization field generally dominates the compute time and so computational methods such as the fast-Fourier-transform [127, 128] and multipole expansion [129] have been developed to accelerate their calculation.

In large-scale atomistic calculations, it is generally sufficient to adopt a micromagnetic discretization for the demagnetization fields, since they only have a significant effect on nanometre length scales [7]. Additionally due to the generally slow variation of magnetization, the timescales associated with the changes in the demagnetization field are typically much longer than the time step for atomistic spins. Here we present a modified finite difference scheme for calculating the demagnetization fields, described as follows.

Figure 9. (a) Visualization of the macrocell approach used to calculate the demagnetization field, with the system discretized into cubic macrocells. Each macrocell consists of several atoms, shown schematically as cones. (b) Schematic of the macrocell discretization at the curved surface of a material, indicated by the dashed line. The mean position of the atoms within the macrocell defines the centre of mass where the effective macrocell dipole is located. (c) Schematic of a macrocell consisting of two materials with different atomic moments. Since the magnetization is dominated by one material, the magnetic centre of mass moves closer to the material with the higher atomic moments.

Download figure:

Standard image High-resolution imageThe complete system is first discretized into macrocells with a fixed cell size, each consisting of a number of atoms, as shown in figure 9(a). The cell size is freely adjustable from atomistic resolution to multiple unit cells depending on the accuracy required. The position of each macrocell pmc is determined from the magnetic 'centre of mass' given by the expression

where n is the number of atoms in the macrocell, μi is the local (site-dependent) atomic spin moment and α represents the spatial dimension x, y, z. For a magnetic material with the same magnetic moment at each site, equation (29) corrects for partial occupation of a macrocell by using the mean atomic position as the origin of the macrocell dipole, as shown in figure 9(b). For a sample consisting of two materials with different atomic moments, the 'magnetic centre of mass' is closer to the atoms with the higher atomic moments, as shown in figure 9(c). This modified micromagnetic scheme gives a good approximation of the demagnetization field without having to use computationally costly atomistic resolution calculation of the demagnetization field.

The total moment in each macrocell mmc is calculated from the vector sum of the atomic moments within each cell, given by

Depending on the particulars of the system, the macrocell moments can vary significantly depending on position, composition and temperature. At elevated temperatures close to the Curie point, the macrocell magnetization becomes small, and so the effects of the demagnetizing field are much less important. Similarly in compensated ferrimagnets consisting of two competing sublattices the overall macrocell magnetization can also be small again leading to a reduced influence of the demagnetizing field.

The demagnetization field within each macrocell p is given by

where r is the separation between dipoles p and q,  is a unit vector in the direction p→q, and

is a unit vector in the direction p→q, and  is the volume of the macrocell p. The first term in equation (31) is the usual dipole term arising from all other macrocells in the system, while the second term is the self-demagnetization field of the macrocell, taken here as having a demagnetization factor 1/3. Strictly this is applicable only for the field at the centre of a cube. However, the non-uniformity of the field inside a uniformly magnetized cube is not large and the assumption of a uniform demagnetization field is a reasonable approximation. The self-demagnetization term is often neglected in the literature, but in fact is essential when calculating the field inside a magnetic material. Once the demagnetization field is calculated for each macrocell, this is applied uniformly to all atoms as an effective field within the macrocell. It should be noted however that the macrocell size cannot be larger than the smallest sample dimension, otherwise significant errors in the calculation of the demagnetizing field will be incurred.

is the volume of the macrocell p. The first term in equation (31) is the usual dipole term arising from all other macrocells in the system, while the second term is the self-demagnetization field of the macrocell, taken here as having a demagnetization factor 1/3. Strictly this is applicable only for the field at the centre of a cube. However, the non-uniformity of the field inside a uniformly magnetized cube is not large and the assumption of a uniform demagnetization field is a reasonable approximation. The self-demagnetization term is often neglected in the literature, but in fact is essential when calculating the field inside a magnetic material. Once the demagnetization field is calculated for each macrocell, this is applied uniformly to all atoms as an effective field within the macrocell. It should be noted however that the macrocell size cannot be larger than the smallest sample dimension, otherwise significant errors in the calculation of the demagnetizing field will be incurred.

The volume of the macrocell Vmc is an effective volume determined from the number of atoms in each cell and given by

where  is the number of atoms in the macrocell,

is the number of atoms in the macrocell,  is the number of atoms in the unit cell and Vuc is the volume of the unit cell. The macrocell volume is necessary to determine the magnetization (moment per volume) in the macrocell. For unit cells much smaller than the system size, equation (32) is a good approximation, however for a large unit cell with significant free space, for example a nanoparticle in vacuum, the free space contributes to the effective volume which reduces the effective macrocell volume.

is the number of atoms in the unit cell and Vuc is the volume of the unit cell. The macrocell volume is necessary to determine the magnetization (moment per volume) in the macrocell. For unit cells much smaller than the system size, equation (32) is a good approximation, however for a large unit cell with significant free space, for example a nanoparticle in vacuum, the free space contributes to the effective volume which reduces the effective macrocell volume.

5.4.1. Demagnetizing field of a platelet

To verify the implementation of the demagnetization field calculation it is necessary to compare the calculated fields with some analytic solution. Due to the complexity of demagnetization fields analytical solutions are only available for simple geometric shapes such cubes and cylinders [130], however for an infinite perpendicularly magnetized platelet the demagnetization field approaches the magnetic saturation − μ0Ms. To test this limit we have calculated the demagnetizing field of a 20 nm × 20 nm × 1 nm platelet as shown in figure 10. In the centre of the film agreement with the analytic value is good, while at the edges the demagnetization field is reduced as expected.

Figure 10. Calculated cross-section of the demagnetization fields in a 20 nm × 20 nm × 1 nm platelet (visualization inset) with magnetization perpendicular to the film plane. A macrocell size of 2 unit cells is used. In the centre of the film the calculated demagnetization field is − 2.236 T which compares well to the analytic solution of Hdemag = −μ0M = −2.18 T. Note that the 2D grid used slightly overestimates the demagnetization field.

Download figure:

Standard image High-resolution imageFigure 11. Simulated hysteresis loops and computational efficiency for a 40 nm × 40 nm × 1 nm nanodot for different cell sizes (multiples of unit cell size) ((a), (b)) and update rates (seconds between update calculations) ((c), (d)).

Download figure:

Standard image High-resolution image5.4.2. Performance characteristics

In micromagnetic simulations, calculation of the demagnetization field usually dominates the runtime of the code and generally it is preferable to have as large a cell size as possible. For atomistic calculations however, additional flexibility in the frequency of updates of the demagnetization field is permitted due to the short time steps used and the fact that the magnetization is generally a slowly varying property.

To investigate the effects of different macrocell sizes and the time taken between updates of the demagnetization field we have simulated hysteresis loops of a nanodot of diameter 40 nm and height of 1.4 nm. Figure 11(a) shows hysteresis loops calculated for different macrocell sizes for the nanodot. For most cell sizes the results of the calculation agree quite well, however, for a cell size of 4 unit cells, the calculated coercivity is significantly larger, owing to the creation of a flat macrocell (with dimensions 4 × 4 × 1 unit cells). This illustrates that for systems with small dimensions, extra care must be taken when specifying the macro cell size in order to avoid non-cubic cells. In general, the problem with asymmetric macrocells is not trivial to solve within the finite difference formalism, since the problem arises due to a modification of both the intracell and intercell contributions to the demagnetizing field.

Figure 11(b) shows the runtime for a single update of the demagnetizing field on a single CPU for different macrocell size discretizations. Noting the logarithmic scale for the simulation time, single unit cell discretizations are computationally costly while not giving significantly better results than larger macrocell discretizations. Although the demagnetization field calculation is an  problem, it is possible to pre-calculate the distances between the macrocells at the cost of increased memory usage. Due to the computational cost of calculating the position vectors, this method is often much faster than the brute force calculation. However, due to the fact that memory usage increases proportionally to

problem, it is possible to pre-calculate the distances between the macrocells at the cost of increased memory usage. Due to the computational cost of calculating the position vectors, this method is often much faster than the brute force calculation. However, due to the fact that memory usage increases proportionally to  , fine discretizations for large systems can require many GBs of memory.

, fine discretizations for large systems can require many GBs of memory.

By collating terms in equation (31) it is possible to construct the following matrix Mpq for each pairwise interaction:

where rx, ry, rz are the components of the unit vector in the direction p→q, and rpq is the separation of macrocells. Since the matrix is symmetric along the diagonal only six numbers need to be stored in memory. The total demagnetization field for each macrocell p is then given by:

The relative performance of the matrix optimization is plotted for comparison in figure 11(b), showing a significant reduction in runtime. Where the computer memory is sufficiently large, the recalculated matrix should always be employed for optimal performance.

In addition to variable macrocell sizes, due to the small time steps employed in atomistic models and that the magnetization is generally a slowly varying property, it is not always necessary to update the demagnetization fields every single time step. Hysteresis loops for different times between updates of the demagnetization field are plotted in figure 11(c). In general hysteresis calculations are sufficiently accurate with a picosecond update of the demagnetizing field, which significantly reduces the computational cost.

In general good accuracy for the demagnetizing field calculation can be achieved with coarse discretization and infrequent updates, but fast dynamics such as those induced by laser excitation require much faster updates, or simulation of domain wall processes in high anisotropy materials requires finer discretizations to achieve correct results.

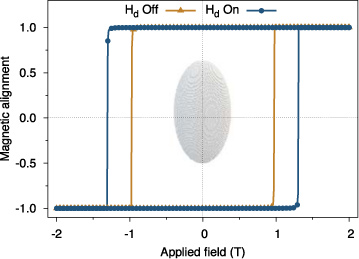

5.4.3. Demagnetizing field in a prolate ellipsoid

Since the macrocell approach works well in platelets and nanodots, it is also interesting to apply the same method to a slightly more complex system: a prolate ellipsoid. An ellipsoid adds an effective shape anisotropy due to the demagnetization field, and so for a particle with uniaxial magnetocrystalline anisotropy along the elongated direction (z), the calculated coercivity should increase according to the difference in the demagnetization field along x and z, given by:

where ΔN = Nz − Nx. The demagnetizing factors Nx, Ny, and Nz are known analytically for various ellipticities [131], and here we assume a/c = b/c = 0.5, where a, b, and c are the extent of the ellipsoid along x, y and z respectively.

To verify the macrocell approach gives the same expected increase of the coercivity we have simulated a generic ferromagnet with atomic moment 1.5 μB, an FCC crystal structure with lattice spacing 3.54 Å and anisotropy field of Ha = 1 T. The particle is cut from the lattice in the shape of an ellipsoid, of diameter 10 nm and height of 20 nm, as shown inset in figure 12. A macrocell size of 2 unit cells is used, which is updated every 100 time steps (0.1 ps).

Figure 12. Simulated hysteresis loops for an ellipsoidal nanoparticle with an axial ratio of 2 showing the effect of the demagnetizing field calculated with the macrocell approach. A visualization of the simulated particle is inset.

Download figure:

Standard image High-resolution imageAs expected the coercivity increases due to the shape anisotropy. From [131] the expected increase in the coercivity is  which compares well to the simulated increase of 0.33 T.

which compares well to the simulated increase of 0.33 T.

6. Parallel implementation and scaling

Although the algorithms and methods discussed in the preceding sections describe the mechanics of atomistic spin models, it is important to note finally the importance of parallel processing in simulating realistic systems which include many-particle interactions, or nano patterned elements with large lateral sizes. Details of the parallelization strategies which have been adopted to enable the optimum performance of VAMPIRE for different problems are presented in appendix

To demonstrate the performance and scalability of VAMPIRE , we have performed tests for three different system sizes: small (10 628 spins), medium (8 × 105 spins), and large (8 × 106 spins). We have access to two Beowulf-class clusters; one with 8 cores/node with an Infiniband 10 Gbps low-latency interconnect, and another with 4 cores/node with a Gigabit Ethernet interconnect. For parallel simulations the interconnect between the nodes can be a limiting factor for increasing performance with increasing numbers of processors, since as more processors are added, each has to do less work per time step. Eventually network communication will dominate the calculation since processors with small amounts of work require the data from other processors in shorter times, leading to a drop in performance. The scaling performance of the code for 100 000 time steps on both machines is presented in figure 13.

The most challenging case for parallelization is the small system size, since a significant fraction of the system must be communicated to other processors during each timestep. On the Ethernet network system for the smallest system size reasonable scaling is seen only for 4 CPUs due to the high latency of the network. However larger problems are much less sensitive to network latency due to latency hiding, and show excellent scalability up to 32 CPUs. Essentially this means that larger problems scale much better than small ones, allowing more processors to be utilized. This is of course well known for parallel scaling problems, but even relatively modest systems consisting of 105 spins show significant improvements with more processors.

For the system with the low-latency Infiniband network, excellent scalability is seen for all problems up to 64 CPUs. Beyond 64 CPUs the reduced scalability for all problem sizes is likely due to a lack of network bandwidth. The bandwidth requirements are similar for all problem sizes, since smaller problems complete more time steps in a given period of time and so have to send several sets of data to other processors. Nevertheless improved performance is seen with increasing numbers of CPUs allowing for continued reductions in compute time. Although not shown, initial tests on an IBM Blue Gene class system have demonstrated excellent scaling of

VAMPIRE up to 16 000 CPUs, allowing the real possibility for atomistic simulations with lateral dimensions of micrometres. Additional scaling tests for systems including calculation of the demagnetizing field and a long-ranged exchange interaction are presented in appendix

Figure 13. Runtime scaling of VAMPIRE for three different problem sizes on the Infiniband network (a) and Ethernet network (b), normalized to the runtime for 2 cores for each problem size.

Download figure:

Standard image High-resolution image7. Conclusions and perspectives

We have described the physical basis of the rapidly developing field of atomistic spin models, and given examples via its implementation in the form of the VAMPIRE code. Although the basic formalism underpinning atomistic spin models is well established, ongoing developments in magnetic materials and devices means that new approaches will need to be developed to simulate a wider range of physical effects at the atomistic scale. One of the most important phenomena is spin transport and magnetoresistance which is behind an emergent field of spin–electronics, or spintronics. Simulation of spin transport and spin torque switching is already in development, and must be included in atomistic level models in order to simulate a wide range of spintronic materials and devices, including read sensors and MRAM (magnetic random access memory). Other areas of interest include ferroelectrics, the spin Seebeck effect [132], and Coloured noise [110] where simulation capabilities are desirable, and incorporation of these effects are planned in future. In addition to modelling known physical effects, it is hoped that improved models of damping incorporating phononic and electronic mechanisms will be developed which enable the study of magnetic properties of materials at sub-femtosecond timescales.