Abstract

Since the early years of follow-up of the Japanese atomic-bomb survivors, it has been apparent that childhood leukaemia has a particular sensitivity to induction by ionising radiation, the excess relative risk (ERR) being expressed as a temporal wave with time since exposure. This pattern has been generally confirmed by studies of children treated with radiotherapy. Case-control studies of childhood leukaemia and antenatal exposure to diagnostic x-rays, a recent large cohort study of leukaemia following CT examinations of young people, and a recent large case-control study of natural background γ-radiation and childhood leukaemia have found evidence of raised risks following low-level exposure. These findings indicate that an ERR/Sv for childhood leukaemia of ∼50, which may be derived from risk models based upon the Japanese atomic-bomb survivors, is broadly applicable to low dose or low dose-rate exposure circumstances.


1. Introduction

It is apparent from epidemiological studies of groups of people exposed to moderate and high doses of ionising radiation that childhood leukaemia is especially sensitive to induction by radiation [1–3]. This has prompted interest in the degree of risk of childhood leukaemia resulting from low doses of radiation received either in utero or in the early years of postnatal life from, for example, medical exposure for diagnostic purposes or the intake of radionuclides from radioactive contamination of the environment [4]. Recently, the attention paid to this subject has increased, in part because of reports of elevated rates of leukaemia incidence among children living near certain nuclear installations [5], but also because of the rising frequency of modern methods of medical radiography, such as paediatric computed tomography (CT), that tend to deliver higher doses to patients than previous radiographic techniques [6]. In this paper the epidemiological evidence relating to the risk of childhood leukaemia consequent to exposure to ionising radiation will be reviewed.

2. The Japanese atomic-bomb survivors

2.1. The Life Span Study (LSS)

2.1.1. Background.

Leukaemia was the first malignant disease recognised to be in excess among the Japanese survivors of the atomic-bomb explosions over Hiroshima and Nagasaki in August 1945, when in 1948 the number of cases of leukaemia among the survivors was sufficiently raised to be noticed by alert clinicians [7]. This was one reason behind the establishment, through the Japanese national census of October 1950, of a cohort of Japanese atomic-bomb survivors for epidemiological study, the Life Span Study (LSS), the follow-up of which still continues today, some 65 years after the atomic bombings [8].

The LSS found a large relative risk of childhood leukaemia (conventionally, leukaemia diagnosed before the age of 15 years), with 10 cases observed after October 1950 among the survivors exposed after birth (i.e. those subjects in the LSS with an age at exposure 0–9 years, and an age at diagnosis 5–14 years) [9] while only about 1.6 cases would be expected on the basis of Japanese national leukaemia rates for the 1950s (Linda Walsh, personal communication). There is little doubt that a pronounced excess relative risk of childhood leukaemia must have existed before the LSS commenced, but the absence of systematic recording of leukaemia among the survivors does not permit a definitive conclusion to be drawn as to the magnitude of this excess risk, or when it started.

The LSS consists of ∼93 500 survivors, ∼86 500 of whom have been assigned estimates of the radiation doses received as a consequence of the bombings, and of these ∼49 000 were non-trivially exposed to radiation (i.e. they received an assessed dose of ≥5 mSv); the LSS includes almost all of the survivors who were closest to the detonations [8, 10]. The LSS is a study of members of the general public of both sexes and all ages who were not selected for exposure for a particular reason (e.g. a medical condition)—the cohort members just happened to be in the wrong place at the wrong time. Considerable effort has been expended on ensuring that the data generated by the LSS are as complete and accurate as possible, including the assessment of organ doses received by each survivor in the cohort, the latest doses being those in the Dosimetry System 2002 (DS02), which has replaced the previous Dosimetry System 1986 (DS86) database [8]. A wide range of doses was received by the survivors: around two-thirds of the non-trivially exposed survivors received doses less than 100 mSv (i.e. low doses) whereas just over 2000 individuals received doses exceeding 1 Sv (i.e. high doses).

Mortality among the LSS cohort is determined through the Japanese koseki family registration system and death certificate information. Cancer incidence data are collected through two specialist cancer registries based in Hiroshima and Nagasaki, from 1950 (for haematopoietic and lymphatic cancers) and 1958 (for all other cancers, i.e. malignant solid tumours). The collection, collation and initial analysis of data relating to the Japanese atomic-bomb survivors is the responsibility of the Radiation Effects Research Foundation (RERF), a joint Japanese/US organisation, and since nearly one-half of survivors were still alive at the last analysis of mortality in the LSS database [8], studies continue today with much of the evidence on the lifetime risk of those exposed at a young age still to be obtained.

The most recent study of mortality in the LSS for the period 1950–2003 [8] includes an analysis of leukaemia using DS02 red bone marrow (RBM) doses—the RBM dose is understood to be the relevant dose in respect of the induction of leukaemia—and shows a clear and pronounced dose-related excess risk of leukaemia [8, 10]. For deaths at all ages and both sexes, the excess relative risk (ERR, the proportional increase over background) at 1 Gy RBM absorbed dose over the entire study period of more than half a century was 3.1 (95% confidence interval (CI): 1.8, 4.3), a highly statistically significant increase [8]. The dose–response for the RBM dose range 0–2 Sv exhibits upwards curvature in the upper part of this dose range so that the best fit to the data is a linear-quadratic dose–response model, with downward curvature occurring at higher doses (i.e. >2 Sv) as a result of the increasing influence of the competing effect of sterilisation of those cells that had the potential to be leukaemogenically transformed [8, 10]. The leukaemia mortality data are more unstable statistically in the 0–0.5 Sv dose range, due to fluctuations inevitably generated by small numbers of any excess cases in this dose range, but the linear-quadratic dose–response fitted to data in the 0–2 Sv range still provides a reasonable description in the low dose region, where the dose–response is essentially linear [8, 10]. Nonetheless, the excess risk of leukaemia at low RBM doses as determined by the LSS data is uncertain—the ERR at 0.1 Gy is estimated to be 0.15 (95% CI: −0.01, 0.31) [8], i.e. of borderline statistical significance—and the data are compatible with the absence of an excess leukaemia risk following low doses (<0.1 Gy). From the best fitting model to the leukaemia mortality data for all ages, about half of the ∼200 leukaemia deaths during 1950–2000 among the survivors who were non-trivially exposed are attributable to irradiation during the bombings [8]. 
The most recent analysis of leukaemia incidence for the period 1950–87 used RBM doses from the previous DS86 dosimetry system and found, for cases at all ages and both sexes over the entire study period, an ERR at 1 Sv RBM dose of 4.8 (90% CI: 3.6, 6.4) [11]. An update of leukaemia incidence among the Japanese atomic-bomb survivors (using DS02 RBM doses) is expected soon.
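The claim that the fitted dose–response is essentially linear at low doses can be illustrated with the two mortality point estimates quoted above: an ERR of 3.1 at 1 Gy and 0.15 at 0.1 Gy. The Python sketch below treats both as if they came from a simple linear-quadratic function, ERR(D) = αD + βD², and solves for α and β. This is only an illustrative assumption. The published model also includes modifiers for sex, age at exposure and time since exposure, so the coefficients obtained here are not the published parameters, and the function names are hypothetical.

```python
# Illustrative sketch only: the two LSS point estimates quoted in the text are
# treated as if they came from a simple linear-quadratic model
#     ERR(D) = alpha * D + beta * D**2
# with no sex, age-at-exposure or time-since-exposure modifiers (an assumption).


def solve_linear_quadratic(d1, err1, d2, err2):
    """Return (alpha, beta) such that alpha*d + beta*d**2 = err at both points."""
    # 2x2 system [[d1, d1^2], [d2, d2^2]] @ [alpha, beta] = [err1, err2],
    # solved with Cramer's rule.
    det = d1 * d2**2 - d2 * d1**2
    if det == 0:
        raise ValueError("doses must be distinct and non-zero")
    alpha = (err1 * d2**2 - err2 * d1**2) / det
    beta = (d1 * err2 - d2 * err1) / det
    return alpha, beta


def err_lq(dose_gy, alpha, beta):
    """ERR at a given RBM dose (Gy) under the linear-quadratic model."""
    return alpha * dose_gy + beta * dose_gy**2


alpha, beta = solve_linear_quadratic(0.1, 0.15, 1.0, 3.1)
linear_share_at_0_1_gy = alpha * 0.1 / err_lq(0.1, alpha, beta)
print(f"alpha = {alpha:.2f} /Gy, beta = {beta:.2f} /Gy^2")
print(f"linear term's share of the ERR at 0.1 Gy: {linear_share_at_0_1_gy:.0%}")
```

Under these assumptions the linear term supplies close to 90% of the ERR at 0.1 Gy. At 1 Gy the quadratic term is the larger contributor, which is the sense in which the dose–response curves upwards within the 0–2 Gy range.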

2.1.2. Childhood leukaemia following postnatal exposure.

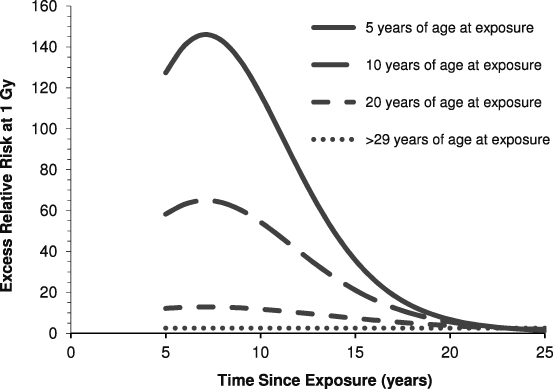

Richardson et al [12] examined the leukaemia mortality data for the Japanese atomic-bomb survivors during 1950–2000 using the DS02 RBM doses. Their results demonstrated the marked variation of ERR with age at exposure, the risk being notably higher at younger ages at exposure. Further, the ERR fell away with increasing time since exposure, particularly for those exposed in childhood. (These general features of radiation-induced leukaemia risk among the Japanese atomic-bomb survivors had been established for some time; see, for example, the BEIR V Report [13].) So, for those irradiated during the atomic bombings as children, the ERR of leukaemia was manifest as a temporal 'wave'. For an individual aged 10 years at the time of the bombing, the ERR at 1 Gy RBM dose peaks at ∼65 some 7 years after exposure. It then attenuates with time since exposure, such that 25 years after the bombings the ERR is still raised, but at a level (approximately twofold) comparable with that experienced this long after exposure by those exposed as adults. For those ≥30 years of age at the time of the bombing the ERR is essentially flat with time since exposure, illustrating the marked variation in the expression of leukaemia ERR with age at exposure [12] (figure 1).

Figure 1. Variation of the excess relative risk (ERR) of leukaemia mortality at 1 Gy red bone marrow (RBM) dose among the Japanese atomic-bomb survivors exposed after birth and included in the Life Span Study (LSS), by age at exposure and time since exposure. After Richardson et al [12] and Walsh and Kaiser [14]. (The LSS started in October 1950, so data before this date are not available.)


However, Walsh and Kaiser [14] urged caution in developing complex models without taking into account various sources of uncertainty, including modelling uncertainty. Such models include the one developed by Richardson et al [12] from the LSS leukaemia mortality data. They noted that the parameters in the leukaemia risk model derived by Richardson et al need to be viewed with this in mind. For example, the exact shape of the temporal 'wave' of excess leukaemia risk following exposure in childhood (figure 1) is not well established by the LSS data [14]. Indeed, figure 1 shows that high ERRs (>100 a few years after the receipt of a dose of 1 Gy) are predicted for those exposed as young children, and these values must be treated with some circumspection. Nonetheless, there is no doubt that a markedly raised relative risk of childhood leukaemia was experienced by the Japanese atomic-bomb survivors after October 1950. The observed number of 10 cases compares with the approximately 1.6 cases that might be expected in the absence of exposure, based on Japanese national rates for the relevant period. However, the details of how this risk is distributed with age at exposure and time since exposure are rather uncertain.

2.2. Childhood leukaemia following intrauterine exposure

Given the large relative risk of childhood leukaemia among the Japanese atomic-bomb survivors who were irradiated after birth, a matter that has attracted some comment is the absence of cases of childhood leukaemia among the survivors who were exposed in utero [15]. (The Japanese survivors irradiated in utero are a separate cohort from the LSS, which consists of survivors exposed after birth.) However, only about 800 individuals received a dose of ≥10 mGy while in utero (the average dose was ∼0.25 Gy). On the basis of mid-20th century Japanese national rates, only around 0.2 cases of childhood leukaemia would therefore be expected in the absence of exposure, and the mid-P upper 95% confidence limit on the ratio of observed to expected cases is 15. It is also possible that cases of childhood leukaemia incident during the 1940s, before systematic collection of data began in 1950, went unrecorded or were overlooked [9]. For example, the involvement of leukaemia in a death from infectious disease may not have been recognised in the difficult years following the end of the war.
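The mid-P upper confidence limit quoted above can be reproduced with a short calculation. For an observed count of zero, the two-sided 95% mid-P upper limit μ_U on a Poisson mean satisfies ½·exp(−μ_U) = 0.025. This gives μ_U = ln 20 ≈ 3.0, and dividing by the expected count of about 0.2 gives an upper limit on the observed to expected ratio of about 15. The Python sketch below solves the general mid-P condition by bisection. The function names are illustrative, and the expected count is taken as exactly 0.2 and treated as known without error, which is a simplification.

```python
import math


def poisson_pmf(k, mu):
    """P(X = k) for a Poisson variable with mean mu > 0."""
    return math.exp(-mu + k * math.log(mu) - math.lgamma(k + 1))


def midp_upper_limit(observed, level=0.95):
    """Two-sided mid-P upper confidence limit for a Poisson mean.

    Solves P(X < observed) + 0.5 * P(X = observed) = (1 - level) / 2 for mu.
    The left-hand side decreases as mu increases, so bisection is sufficient.
    """
    target = (1 - level) / 2

    def tail(mu):
        below = sum(poisson_pmf(k, mu) for k in range(observed))
        return below + 0.5 * poisson_pmf(observed, mu)

    lo, hi = 1e-12, 1.0
    while tail(hi) > target:  # widen the bracket until it contains the root
        hi *= 2
    for _ in range(200):
        mid = (lo + hi) / 2
        if tail(mid) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


expected = 0.2  # approximate expected number of cases quoted in the text
upper_mu = midp_upper_limit(0)
print(f"upper limit on mean: {upper_mu:.3f}")
print(f"upper limit on O/E ratio: {upper_mu / expected:.1f}")
```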

Of some interest in this respect is the study of Ohtaki et al [16] of stable chromosome translocations in peripheral blood lymphocytes. Samples were taken from 331 survivors who were exposed in utero (150 of whom received a dose ≥5 mSv) and from 13 mothers. The stable chromosome translocation frequency for the group of mothers was consistent with the upwardly curving dose–response that had previously been found among those survivors irradiated as adults [17]. Surprisingly, however, the translocation frequency for those irradiated in utero was unrelated to the dose received, apart from a small, but statistically significant, increase in the 5–100 mSv dose range. Ohtaki et al [16] interpreted their findings as indicating two subpopulations of lymphoid precursor cells present in utero. One, relatively small in number, is sensitive to the induction of translocations and to cell-killing, the latter effect becoming increasingly dominant for doses above 50 mSv. The other subpopulation is largely insensitive to biological damage manifest as chromosome aberrations. Suppose childhood leukaemia were to arise from radiation-induced damage in the first subpopulation of cells. The particular sensitivity of these cells to killing by acute doses exceeding 50 mSv could then be a significant contributory factor in the absence of cases of childhood leukaemia among those survivors who received moderate and high doses in utero.

The findings of Ohtaki et al [16] raise the question of whether the effect they observed for chromosome aberrations in blood cells taken from those exposed in utero persists after birth. If so, it is unclear at what level and for how long. For example, no case of leukaemia has been observed among Japanese survivors exposed during the first nine months of postnatal life [18], although, as with those exposed in utero, the number expected in the absence of irradiation is small. Cell-killing at moderate doses may materially suppress the risk of leukaemia after exposure not just in utero but also in the first few years after birth. If it does, leukaemia risk estimates derived directly from the experience of only those Japanese atomic-bomb survivors irradiated in the early years of postnatal life could underestimate the level of risk posed by low-level radiation shortly after birth (as would appear to be the case for exposure in utero).

2.3. Limitations of the Japanese survivor data

Although the studies of the Japanese atomic-bomb survivors are impressive in the detailed information on radiation risks that they provide, they cannot generate direct information on all aspects of radiation-induced risks. The bomb survivors received briefly delivered doses of mainly external γ-radiation, and some of the (obviously retrospective) dose estimates remain uncertain. The exposed population was malnourished at the end of a long war, and to enter the LSS the survivors had to have lived until October 1950 in conditions that were far from ideal (especially if they had suffered tissue reactions from the receipt of high radiation doses), which raises the possibility of bias due to the 'healthy survivor effect'—potentially, those entering the epidemiological studies were stronger individuals not representative of the general population in terms of radiation-induced cancer risks. Further, data for the period before October 1950 were not collected systematically, and this is especially important for leukaemia, since it is clear that excess cases were occurring in the late-1940s—hence, for example, the Japanese atomic-bomb survivor data cannot, by themselves, determine the minimum latent period for leukaemia, although the data are compatible with the value of two years that is usually assumed for this period [1–3].

There is also the matter of how the findings derived from a Japanese population exposed in 1945, with its particular range of background cancer risks (e.g. a relatively high risk of stomach cancer but a relatively low risk of female breast cancer), should be applied to another population, say, from present-day Western Europe, with a different range of background cancer risks (e.g. a relatively low risk of stomach cancer but a relatively high risk of female breast cancer)—is the excess relative risk (ERR, the proportional increase in risk compared to the background absolute risk in the absence of exposure) or the excess absolute risk (EAR, the additional risk above the background risk) or some mixture of the two to be transferred between populations, including to the present-day Japanese population with a different set of background cancer risks? The incidence of childhood leukaemia during the 1950s was materially lower in Japan than in Great Britain or the USA (or in modern-day Japan) [9], so how the radiation-induced excess risk of childhood leukaemia in the Japanese atomic-bomb survivors relates to that in other populations is a pertinent question [9], which is discussed further below.
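The practical consequence of the choice of transfer model can be shown with a few lines of arithmetic. In the Python sketch below, all rates are hypothetical placeholders (per 100 000 children per year). They are chosen only so that the background rate in the target population is twice that in the source population, and they are not the published Japanese, British or US rates. Under ERR (multiplicative) transfer the excess scales with the target population's background rate. Under EAR (additive) transfer it does not, and a weighted mixture of the two lies in between.

```python
def transferred_excess(err_source, background_source, background_target, weight_err):
    """Excess rate predicted in a target population.

    weight_err = 1.0 gives pure ERR (multiplicative) transfer,
    weight_err = 0.0 gives pure EAR (additive) transfer,
    intermediate values give a weighted mixture of the two.
    """
    if not 0.0 <= weight_err <= 1.0:
        raise ValueError("weight_err must lie in [0, 1]")
    ear_source = err_source * background_source       # EAR in the source population
    excess_multiplicative = err_source * background_target
    excess_additive = ear_source                       # EAR carried over unchanged
    return weight_err * excess_multiplicative + (1 - weight_err) * excess_additive


# Hypothetical inputs (illustration only): an ERR of 0.5, and background rates
# of 2 and 4 per 100 000 per year in the source and target populations.
for w in (1.0, 0.5, 0.0):
    print(w, transferred_excess(0.5, 2.0, 4.0, w))
```

With a twofold difference in background rates, the pure ERR and pure EAR choices give predicted excesses that differ by a factor of two. This is why the relatively low childhood leukaemia incidence in 1950s Japan matters when the LSS findings are applied elsewhere.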

3. Groups exposed for medical purposes

Those aspects of radiation risk that cannot be addressed directly by studies of the Japanese atomic-bomb survivors illustrate the importance of having results from other exposed populations to complement the risk estimates derived from the Japanese survivors. Medical practice provides a number of opportunities to study groups exposed to radiation for therapeutic or diagnostic purposes [1–3, 19].

3.1. Therapeutic exposure

Most of the epidemiological investigations of groups of patients who have received radiotherapy, as a treatment for either a benign or a malignant condition, have confirmed the high relative risk of childhood leukaemia following irradiation in the early years of life that is apparent from the Japanese atomic-bomb survivors [2]. These studies broadly indicate that the risk of childhood leukaemia increases a few years after irradiation, supporting the short minimum latent period for radiation-induced leukaemia that has been inferred from the experience of the Japanese atomic-bomb survivors. Radiotherapy frequently involves a number of exposures separated in time, so the effect of these fractionated doses may be compared with the single brief dose received by the Japanese survivors.

3.1.1. Thymus irradiation.

A statistically significant excess risk of childhood leukaemia was found in a cohort study of nearly 3000 infants from New York State who were irradiated with x-rays for supposed enlargement of the thymus, 96% of whom were ≤1 year of age at treatment [20]: 7 cases of leukaemia were observed among this cohort before 15 years of age against an expected number of 1.1 cases based on the experience of unexposed siblings, a highly statistically significant excess [21]. Analyses based upon assessed RBM doses have yet to be conducted, so leukaemia risk coefficients derived from these data are not available, although a large ERR/Gy RBM dose for childhood leukaemia seems clear.
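The statement that 7 cases against 1.1 expected is a highly statistically significant excess can be checked with an exact one-sided Poisson test. This requires the simplifying assumption that the expected number, derived from unexposed siblings, is known without error. The Python sketch below is illustrative, and the function name is hypothetical. A formal analysis would also allow for uncertainty in the sibling-based expectation.

```python
import math


def poisson_upper_tail(observed, expected):
    """One-sided exact P-value, P(X >= observed), for X ~ Poisson(expected)."""
    # Sum the lower tail P(X <= observed - 1) and take its complement.
    lower = sum(
        math.exp(-expected + k * math.log(expected) - math.lgamma(k + 1))
        for k in range(observed)
    )
    return 1.0 - lower


observed, expected = 7, 1.1
print(f"O/E = {observed / expected:.1f}")
print(f"one-sided P = {poisson_upper_tail(observed, expected):.1e}")
```

Under these assumptions the one-sided P-value is of the order of 10⁻⁴, consistent with the description of the excess in the text.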

3.1.2. Scalp irradiation.

Children whose heads were irradiated to treat tinea capitis (ringworm of the scalp) exhibited a subsequent excess risk of leukaemia that commenced a few years after exposure in both an Israeli cohort of almost 11 000 children (mean age at treatment, 7.1 years) [22] and a smaller cohort of just over 2000 children from New York City (mean age at treatment, 7.8 years) [23]. In the larger group of Israeli children, with an assessed individual RBM dose of 0.3 Gy averaged over the whole body, the ERR/Gy for leukaemia at all ages over the entire period of follow-up (an average of 26 years) was 4.4 (95% CI: 0.7, 8.7) [2]; the ERR of leukaemia was highest (and statistically significant) within ten years of exposure and for those <10 years of age at exposure [22]. Shore [23] reported a standardised incidence ratio of 3.2 (95% CI: 1.5, 6.1) for leukaemia at all ages in the group of exposed children from New York, an excess that was apparent in the early years of follow-up [24], and he noted that the RBM dose was ∼4 Gy on average to the ∼10% of the RBM in the skull [23].
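The distinction between a high dose to part of the RBM and a modest dose averaged over all of it is central to these studies. The Python sketch below computes a marrow-weighted mean dose: for each marrow region, the fraction of total RBM in that region is multiplied by the mean dose to the region, and the products are summed. Purely for illustration, the input assumes that the ∼90% of the RBM outside the skull received a negligible dose. With ∼4 Gy to the ∼10% in the skull, this gives a whole-marrow average of about 0.4 Gy. The function name is hypothetical.

```python
def marrow_weighted_dose(regions):
    """Mean RBM dose (Gy) from (fraction_of_total_RBM, mean_dose_Gy) pairs.

    The fractions must cover the whole red bone marrow, i.e. sum to 1.
    """
    total_fraction = sum(fraction for fraction, _ in regions)
    if abs(total_fraction - 1.0) > 1e-6:
        raise ValueError(f"RBM fractions sum to {total_fraction}, not 1")
    return sum(fraction * dose for fraction, dose in regions)


# Illustrative input: ~10% of the RBM (skull) at ~4 Gy, with the remaining ~90%
# assumed, for simplicity, to have received a negligible dose.
scalp_case = [(0.10, 4.0), (0.90, 0.0)]
print(f"whole-marrow mean dose: {marrow_weighted_dose(scalp_case):.2f} Gy")
```

A whole-marrow average of this kind conceals a dose to the skull marrow about ten times higher. This bears on the cell-killing considerations that arise with localised radiotherapy doses.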

3.1.3. Treatment for childhood cancer.

The increasingly successful treatment of childhood cancers has led to studies of the subsequent health of survivors, and particularly of the effects of therapy, including radiotherapy, upon the risk of second primary cancers, including leukaemia. The interpretation of the results of such studies is not, however, straightforward because of, inter alia, frequent co-treatment with powerful chemotherapeutic drugs and the possibility that the occurrence of certain cancers (e.g. hereditary retinoblastoma) inherently increases the risk of a second primary cancer. Further, the effect upon cancer risk of the killing of normal cells by therapeutic doses of radiation has to be considered [25], and also that different radiotherapy treatment regimes may have led to different temporal distributions of RBM doses being received by different groups of patients [26, 27], so that the risk associated with radiotherapy cannot be assumed to be the same for these groups. The leukaemogenic risk from chemotherapy, especially of acute myeloid leukaemia, is considerably greater than that from radiotherapy, and this may have masked any radiation effect if treatment also included chemotherapy [26–29]. Further, studies of cancer patients usually only consider those who have survived a certain length of time after treatment, and these periods can be as long as five years for some study groups, which can lead to early therapy-induced cases that occurred before the start of follow-up being omitted from studies. In consequence, the circumstances of radiotherapeutic treatment of childhood cancers produce difficulties of interpretation as far as the estimation of leukaemogenic risk is concerned. Nonetheless, it is clear that the risk of leukaemia is raised following radiotherapy for the treatment of childhood cancer, and Tukenova et al [29] have inferred that the consequent risk of leukaemia is usually greatest at about 5–9 years after radiotherapy. 
Hawkins et al [26] demonstrated, having controlled for exposure to chemotherapeutic drugs, a statistically significant radiation dose–response for leukaemia at all ages following treatment for childhood cancer, from which Little [30] has derived an average ERR at 1 Gy of 0.24 (95% CI: 0.01, 1.28), i.e. much less than the equivalent ERR estimate that may be derived from the Japanese atomic-bomb survivors (calculated to be 16.05 (95% CI: 7.22, 37.63) at 1 Gy [30]), which Little has attributed to cell-killing by therapeutic doses of radiation.
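The suggested role of cell-killing can be illustrated by multiplying a linear-quadratic dose–response by an exponential cell-sterilisation term, ERR(D) = (αD + βD²)·exp(−γD). This is a functional form of the general kind used to describe the downturn in leukaemia risk at high doses. The parameter values in the Python sketch below are hypothetical, chosen only to show the qualitative effect. They are not those estimated by Little [30] or derived from the LSS.

```python
import math

# Hypothetical parameters (illustration only, not fitted to any data set):
ALPHA = 1.0   # linear coefficient, per Gy
BETA = 0.5    # quadratic coefficient, per Gy^2
GAMMA = 0.5   # cell-sterilisation coefficient, per Gy


def err_with_cell_killing(dose_gy, alpha=ALPHA, beta=BETA, gamma=GAMMA):
    """Linear-quadratic induction term damped by exponential cell-killing."""
    induction = alpha * dose_gy + beta * dose_gy**2
    sterilisation_factor = math.exp(-gamma * dose_gy)
    return induction * sterilisation_factor


for dose in (0.1, 1.0, 10.0, 30.0):
    with_killing = err_with_cell_killing(dose) / dose
    without_killing = err_with_cell_killing(dose, gamma=0.0) / dose
    print(f"{dose:5.1f} Gy: ERR/Gy {with_killing:.3f} (no killing: {without_killing:.3f})")
```

Under these hypothetical parameters the ERR per gray is almost unaffected at low doses. At doses typical of local therapeutic exposure, however, it falls by more than two orders of magnitude. This is the qualitative pattern invoked to explain the low risk coefficient derived from radiotherapy studies.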

3.1.4. Irradiation for skin haemangioma.

The evidence from studies of groups receiving radiotherapy is not, however, entirely consistent, and a raised risk of childhood leukaemia has not been clearly detected among those exposed to radiation in infancy for the treatment of skin haemangioma, although risk estimates derived from the Japanese atomic-bomb survivors suggest that an excess risk should be apparent [31].

In Sweden, a study has been conducted of a cohort of ∼12 000 infants treated (88% while less than 1 year of age) with ²²⁶Ra applicators or needles in Göteborg [32]. The mean RBM dose was not presented by Lindberg et al [32], but would appear to be ∼100 mGy from the results of the estimation of doses to other tissues. A statistically non-significant excess of leukaemia incidence at all ages was found, an observed to expected case ratio of 1.54 based upon 13 observed cases, but no specific results for childhood leukaemia were reported and the authors warned that follow-up during the first ten years after treatment was limited [32]. In France, a cohort of ∼5000 infants treated (82% while aged <1 year) with radiation at Villejuif has been studied. The mean dose to the RBM was calculated to be 37 mGy and, overall, 1 leukaemia death was observed (in childhood) against 2.3 expected [33].

The most detailed study of leukaemia following radiotherapy for skin haemangioma was of a cohort of around 14 500 infants treated in Stockholm (all at an age less than 18 months; mean age at treatment, 6 months) with radiation, mainly from ²²⁶Ra applicators or needles/tubes [31]. The mean weighted dose to the RBM was calculated to be 130 mGy, although the local RBM dose per treatment was 2.6 Gy, the highest doses being received by RBM in the skull. Eleven deaths from leukaemia in childhood were observed in the cohort (all <10 years of age at death), against 9.8 expected in the absence of exposure, a small, statistically non-significant excess [31]. Lundell and Holm [31] calculated that, based on the experience of the Japanese atomic-bomb survivors, 21 radiation-induced leukaemia deaths at all ages would be predicted in the cohort. Added to the 17 deaths expected from Swedish national rates, this gives a total of 38 deaths, compared with the 20 leukaemia deaths at all ages observed in the cohort. Indeed, inputting the mean weighted RBM dose of 130 mGy to the leukaemia risk model presented in the BEIR V Report [13] gives an ERR of 4.78. This yields a rough prediction of 47 radiation-induced childhood leukaemia deaths in the cohort, indicating the large discrepancy between the predicted and observed excesses. However, somewhat paradoxically, Lundell and Holm [31] reported a (statistically non-significant) positive trend of childhood leukaemia risk with weighted RBM dose across three dose categories. The 5 deaths observed in the highest dose category (>100 mGy) compare with 1.57 deaths expected on the basis of the ≤10 mGy reference category, a statistically significant excess. For this category, the ERR at 1 Gy was estimated to be 5.1 (95% CI: 0.1, 15) [31].
It is unclear how this (limited) evidence for an apparent dose–response for childhood leukaemia risk might be compatible with the small difference between the observed number of childhood leukaemia deaths and the number expected from Swedish national rates, given the relatively large number of radiation-induced cases predicted by leukaemia risk models derived from the experience of the Japanese atomic-bomb survivors.
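The scale of the discrepancy in the Stockholm cohort follows from simple arithmetic on the figures quoted above. Under a relative risk model, the predicted number of radiation-induced deaths is the ERR multiplied by the number expected in the absence of exposure. The Python sketch below reproduces the rough estimate of 47 from the ERR of 4.78 and the 9.8 expected childhood leukaemia deaths, and sets it beside the observed excess. It uses only numbers given in the text, and it treats the ERR as applying uniformly across the cohort, which is a simplification. The function name is hypothetical.

```python
def predicted_excess(err, expected):
    """Radiation-induced cases predicted under a relative risk model."""
    return err * expected


expected_childhood = 9.8    # childhood leukaemia deaths expected without exposure
observed_childhood = 11     # childhood leukaemia deaths observed
err_beir_v = 4.78           # ERR at the 130 mGy mean weighted RBM dose (BEIR V model)

observed_excess = observed_childhood - expected_childhood
print(f"predicted excess: {predicted_excess(err_beir_v, expected_childhood):.0f}")
print(f"observed excess:  {observed_excess:.1f}")
print(f"ERR implied by observation: {observed_excess / expected_childhood:.2f}")

# All-ages comparison from Lundell and Holm: predicted excess plus Swedish expected.
predicted_total_all_ages = 21 + 17
observed_total_all_ages = 20
print(predicted_total_all_ages, observed_total_all_ages)
```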

The absence of a detectable excess of childhood leukaemia mortality among infants from Stockholm irradiated to treat skin haemangioma is of some interest because these infants were first treated at a mean age of six months. As noted above, Ohtaki et al [16] reported the absence of a dose–response, above ∼100 mSv, for chromosome translocations in the peripheral blood lymphocytes of Japanese atomic-bomb survivors irradiated in utero, and suggested that this may be related to the killing of particularly sensitive cells in the RBM by moderate doses. Should such an effect persist for some time after birth, the predicted number of cases of leukaemia among infants receiving moderate RBM doses could be overestimated by risk models based upon older ages at exposure. However, the Japanese atomic-bomb survivors were exposed briefly to radiation whereas infants exposed in the treatment of skin haemangioma experienced protracted exposure that would be expected to be less effective at cell-killing [1], although unlike the RBM doses received during the atomic bombings, those received during radiotherapy were highly heterogeneous. It may be that a complex combination of the killing of RBM cells by high localised doses received during radiotherapy and the hypersensitivity to sterilisation of a particular subpopulation of these cells by irradiation in infancy could explain the results of the skin haemangioma studies; but such an explanation should also be capable of explaining the results of the other studies of the radiation treatment of benign conditions in the early years of postnatal life, in particular the findings of the cohort study of those irradiated in infancy for thymus enlargement. 
This has yet to be fully investigated. However, the heterogeneity of the RBM dose, together with the hypersensitivity to cell-killing of the cells in which leukaemia originates, may be found to play an important role in explaining the findings following irradiation in infancy, as it would appear to do for irradiation in utero.

3.1.5. Limitations of radiotherapy studies.

Groups of children who have been treated with radiotherapy provide valuable information on the radiation-induced risk of childhood leukaemia, but there are a number of cautionary points that need to be borne in mind. Radiation is used to treat disease, and the presence of disease may affect the consequent radiation-induced risk of cancer, so that generalisation to a healthy population is not straightforward. Further, as noted above, radiotherapy requires high doses designed to kill abnormal cells and these doses are frequently highly localised. This means that tissues in the vicinity of the target cells may also experience doses that are sufficient to kill substantial numbers of normal cells, leading to a reduction in the cancer risk per unit dose in these tissues when compared with that resulting from low or moderate doses, as a consequence of the competing effect of cell-killing, and this may be a particularly important issue for the magnitude of the leukaemogenic risk arising from therapeutic doses received in utero or soon after birth. Also, tissue-specific doses to regions of the body away from the target of radiotherapy (largely due to radiation scattering) are difficult to calculate and accurate dose estimates are often lacking in medical studies so that the resulting risk coefficients can be unreliable. Substantial effort is presently being devoted to the reconstruction of doses in several epidemiological studies of medical exposures, using modern radiation transport modelling techniques [34].

3.2. Diagnostic exposure

3.2.1. Postnatal exposure.

The low doses generally received from radiation exposures of patients for medical diagnostic purposes offer the opportunity to examine directly the risks arising from low-level exposure. Unfortunately, the size of study required to provide adequate statistical power to detect the predicted excess risk of childhood leukaemia has not been sufficiently appreciated. An ERR of 0.5 for a RBM dose of 10 mSv received in infancy is the rough level of excess risk implied by the experience of the Japanese atomic-bomb survivors. This gives only a 50% increase in the risk of childhood leukaemia above the background absolute risk, which is about 1 in 1800 live births in developed countries [35]. Consequently, even assuming a 10% prevalence of exposure in the infant population, around 1000 cases are required in an unmatched case-control study (with the number of controls equal to the number of cases) to give a power of 80% of detecting the risk at a two-sided significance level of 0.05. Few studies of childhood leukaemia and diagnostic exposure have achieved this level of power. Moreover, doses received during medical radiography decreased in recent decades (at least until the arrival of CT scans; see below), so even larger studies would be needed to achieve adequate power.
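The sample size quoted above can be checked with the standard normal-approximation formula for comparing two proportions. The calculation converts the assumed relative risk of 1.5 to an odds ratio (a reasonable approximation for a rare disease) and takes a 10% prevalence of exposure among controls. The ERR of 0.5 corresponds to an ERR/Sv of ∼50 multiplied by 10 mSv. The Python sketch below is one common formulation, with an optional standard continuity correction, and the function name is hypothetical. Other formulations give somewhat different figures, but all are of the order of 1000 cases.

```python
import math
from statistics import NormalDist


def cases_needed(odds_ratio, p_exposed_controls, alpha=0.05, power=0.80, continuity=False):
    """Cases (= controls) for an unmatched 1:1 case-control study.

    Normal-approximation comparison of two proportions: the proportion of
    exposed controls (p0) versus the proportion of exposed cases (p1) implied
    by the odds ratio.
    """
    p0 = p_exposed_controls
    p1 = odds_ratio * p0 / (1 + p0 * (odds_ratio - 1))
    p_bar = (p0 + p1) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p0 * (1 - p0) + p1 * (1 - p1))) ** 2
    n = numerator / (p1 - p0) ** 2
    if continuity:
        n = n / 4 * (1 + math.sqrt(1 + 4 / (n * abs(p1 - p0)))) ** 2
    return math.ceil(n)


# ERR of 0.5 (about 50 per Sv x 10 mSv) -> relative risk 1.5, treated as an odds ratio.
print(cases_needed(1.5, 0.10))
print(cases_needed(1.5, 0.10, continuity=True))
```

Both figures come out a little under 1000 cases, in line with the text. Lowering the exposure prevalence, or the dose and hence the ERR, increases the required number rapidly.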

Unfortunately, until recently, the studies of postnatal diagnostic exposure to radiation have, taken as a whole, suffered from disappointing deficiencies that make a reliable interpretation of their findings problematic. Studies have used different periods to exclude exposures occurring close to the time of diagnosis and have not always matched controls on age. They suffer from the potential for recall bias where exposure is based upon interview data rather than medical records. They have also used different types of radiographic procedures that could involve different RBM doses [36]. Overall, it is not possible to draw firm conclusions from these studies. They offer some weak support for a small risk at around the level predicted by standard risk models (see, for example, the recent studies of Rajaraman et al [37] and Bartley et al [38]). However, they provide no reasonable basis for rejecting the possibility that there is no risk at low doses [36].

A recent matched case-control study of exposures from diagnostic radiography (mainly chest radiography) in early infancy, which was based upon medical records and avoided a number of other defects that have afflicted earlier studies, found a RR of childhood leukaemia (excluding cases occurring within two years of exposure) of 1.35 (95% CI: 0.81, 2.27) [37], but the very low doses of <1 mSv received during chest radiography limit the conclusions that may be drawn from this study. Similarly, a large cohort study of childhood cancer following diagnostic exposure in a hospital in Munich based upon doses reconstructed from medical records found a childhood leukaemia standardised incidence ratio of 1.08 (95% CI: 0.74, 1.52), based on an observed number of 33 cases [39, 40]; but the assessed doses were extremely low (median dose, 7 μSv).
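Confidence intervals for a standardised incidence ratio such as that from the Munich cohort are usually obtained by treating the observed count as Poisson. The sketch below computes exact (Garwood) limits by bisection on the Poisson cumulative distribution, using only the Python standard library. The expected number of cases is not reported above, so it is back-calculated here from the quoted SIR (33/1.08, an assumption); the function names are hypothetical.

```python
from math import exp, lgamma, log


def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu), with terms computed on the log scale."""
    if mu <= 0:
        return 1.0
    return sum(exp(-mu + i * log(mu) - lgamma(i + 1)) for i in range(k + 1))


def _solve_mean(k: int, target: float) -> float:
    """Find mu such that poisson_cdf(k, mu) equals target (the cdf falls as mu rises)."""
    lo, hi = 0.0, 10.0 * (k + 10)
    for _ in range(200):
        mid = (lo + hi) / 2
        if poisson_cdf(k, mid) > target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def exact_poisson_ci(observed: int, level: float = 0.95) -> tuple[float, float]:
    """Exact (Garwood) confidence limits for the mean of a Poisson count."""
    tail = (1 - level) / 2
    lower = 0.0 if observed == 0 else _solve_mean(observed - 1, 1 - tail)
    upper = _solve_mean(observed, tail)
    return lower, upper


def sir_with_ci(observed: int, expected: float) -> tuple[float, float, float]:
    """SIR = O/E with exact Poisson limits scaled by the expected count."""
    lower, upper = exact_poisson_ci(observed)
    return observed / expected, lower / expected, upper / expected


# Munich cohort: 33 observed leukaemia cases; expected back-calculated from SIR 1.08
print(sir_with_ci(33, 33 / 1.08))
```

With these inputs the limits come out at about 0.74 and 1.52, matching the interval quoted above, which suggests that exact Poisson limits of this kind were used.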

Follow-up of around 4000 children exposed to radiation (at a mean age of just less than 4 years) from diagnostic cardiac catheterisation in Toronto detected 3 cases of leukaemia against 1.9 expected at all ages over the entire period of follow-up [41]. However, it is of note that all three cases were aged <10 years at diagnosis. In a smaller study of nearly 700 children who underwent cardiac catheterisation in Israel (at a mean age of about 9 years), no case of childhood leukaemia was found, although only a very small number of cases would be expected in the absence of exposure [42].

With the advent of a relatively high population prevalence of CT scans, including paediatric CT scans that typically deliver effective doses of several millisieverts, an opportunity currently exists to directly assess the risks resulting from these low-level exposures, based upon records of exposure. Several large studies are underway around the world to investigate the magnitude of the cancer risk that may arise from the low doses received from CT scans and these studies may be able to shed light on the risk of childhood leukaemia following low-level exposure.

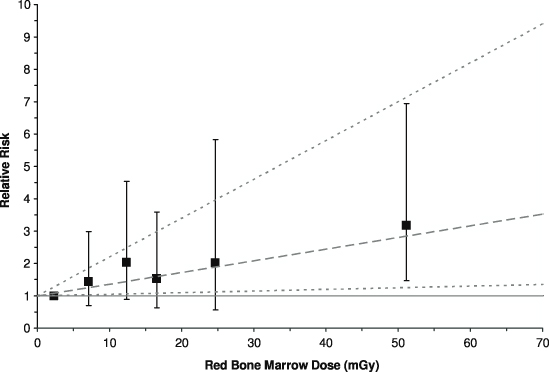

Recently, the first results were published from an historical cohort study of >175 000 patients without previous cancer diagnoses who were examined with CT in Great Britain during 1985–2002 when ≤21 years of age [43]. Data on CT scans were obtained from the records kept by participating hospitals. Cancers incident during 1985–2008 were identified from a central registry, and the initial analyses assessed the radiation-induced risks of leukaemia and brain tumours (malignant and benign). Brain tumours were included mainly because of the dose received by the brain during a head CT scan: head CT scans made up almost two-thirds of the more than a quarter of a million CT scans included in the study. Doses based on specific scan information were not uniformly available, so estimates were derived using data from scanner surveys carried out in 1989 and 2003; doses to the RBM and brain per CT scan were reconstructed taking into account procedure, age, year of scan and other factors relevant to exposure. To reduce the influence of scans conducted because of an undiagnosed cancer, follow-up excluded the initial 2 years after the first CT scan for leukaemia and the initial 5 years for brain tumours. Although age at diagnosis could be as old as 44 years, >50% of the 1 720 984 person-years of follow-up for leukaemia, and nearly two-thirds of cases, were for attained ages <20 years. The mean RBM and brain doses for the cohort were 12.4 mGy and 44.6 mGy, respectively, and the study included 74 cases of leukaemia and 135 cases of brain tumours. Significant positive trends with tissue dose were found: the ERR/mGy was 0.036 (95% CI: 0.005, 0.120) for leukaemia (figure 2) and 0.023 (95% CI: 0.010, 0.049) for brain tumours [43].
Although there may be residual doubts over whether an initial head CT scan might have been conducted because of early signs of a brain tumour that was only diagnosed several years later, and whether this may have contributed towards the association (even though extending the excluded period of follow-up for brain tumours from 5 to 10 years did not materially affect the association), it is difficult to envisage how such an explanation could be viable for leukaemia [44]. The ERR coefficient for leukaemia is compatible with that derived from the LSS. It is also of interest that, when the cohort is grouped by estimated RBM dose, the only significantly raised relative risk (RR=3.2; 95% CI: 1.5, 6.9, with the <5 mGy dose group as the reference category) is for the ≥30 mGy category (mean dose, 51.13 mGy); however, higher doses will, in general, have been composed of a number of temporally separated scans, each delivering several milligray of x-rays to the RBM, which suggests that doses of ∼10 mGy from specific CT scans are sufficient to increase the risk of leukaemia. Hopefully, further details of the findings of this study will be made available soon.
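The fitted trend and the dose-category result can be related through the linear excess relative risk model, RR(D) = 1 + βD, with β the ERR per mGy. The sketch below evaluates the model at the mean dose of the ≥30 mGy category for the central estimate and the 95% limits of β; it ignores the small dose in the <5 mGy reference group, so the comparison with the category RR of 3.2 is only approximate. The function name is hypothetical.

```python
def relative_risk(dose_mgy: float, err_per_mgy: float) -> float:
    """Relative risk under a linear excess relative risk model: RR = 1 + beta * D."""
    return 1.0 + err_per_mgy * dose_mgy


MEAN_DOSE_TOP_GROUP = 51.13  # mGy, mean RBM dose of the >=30 mGy category

# Lower 95% limit, central estimate and upper 95% limit of the leukaemia ERR/mGy
for beta in (0.005, 0.036, 0.120):
    print(beta, round(relative_risk(MEAN_DOSE_TOP_GROUP, beta), 2))
```

The central estimate predicts an RR of about 2.8 at this dose, comfortably within the confidence interval of the observed category RR.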

Figure 2. Variation of the relative risk of leukaemia with the assessed absorbed dose received by the red bone marrow (RBM) from computed tomography (CT) scans. Points show relative risks by dose groups with 95% confidence intervals, and the dashed line shows the fitted linear excess relative risk per milligray with 95% confidence limits as dotted lines. After Pearce et al [43].

3.2.2. Antenatal exposure

Of some interest, given the low doses involved (∼10 mGy), are the studies of childhood cancer in relation to prior abdominal diagnostic x-ray examinations of the pregnant mother. The first, and largest, of the case-control studies was the Oxford Survey of Childhood Cancers (OSCC), which started in Great Britain in the early-1950s and continued until 1981 (eventually including >15 000 case-control pairs). The OSCC found a highly significant statistical association between an antenatal x-ray examination of the maternal abdomen and the risk of mortality both from childhood leukaemia and from other cancers in childhood. The initial report of the statistical association, in 1956 [45], was greeted with some scepticism because, inter alia, of concerns about the influence of recall bias, the early findings being based upon maternal recall of x-ray examinations during pregnancy (which was later checked against medical records [21]). However, the association has since been confirmed by many case-control studies carried out around the world (including studies based upon medical records of antenatal exposure, such as that conducted in north-eastern USA [46]), and it is now accepted as real, although some remain sceptical of a causal interpretation [15].

The most recent result from the OSCC for childhood leukaemia as a separate disease entity was published in 1975, when a RR of 1.47 (95% CI: 1.33, 1.67) was reported [47]. Appropriately combining in a meta-analysis the results of case-control studies other than the OSCC gives a childhood leukaemia RR of 1.28 (95% CI: 1.16, 1.40) [36], and including data from two recent case-control studies [38, 48] in this meta-analysis does not affect the overall RR estimate. So, the association between childhood leukaemia and an antenatal x-ray examination found both by the OSCC and by all other case-control studies combined is highly statistically significant. The lower RR found by the combined other studies may be due, among other things, to later case-control studies examining periods when the fetal doses received during obstetric radiography were lower than in earlier years. When the results of more recent case-control studies (those published during 1990–2006) are appropriately combined, a leukaemia RR of 1.16 (95% CI: 1.00, 1.36) is obtained [49]. Adding the later case-control studies of Bartley et al [38] and Bailey et al [48] to this meta-analysis of studies published from 1990 onwards produces a RR of childhood leukaemia associated with maternal x-ray exposure during pregnancy of 1.17 (95% CI: 1.01, 1.35): lower than when results from studies published before 1990 are included, but still raised to a (borderline) statistically significant extent.
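The pooling method used in the cited meta-analyses is not stated here. One common approach, assumed for illustration, is a fixed-effect inverse-variance model on the log RR scale, with each study's standard error recovered from its reported 95% CI (assuming the interval is symmetric on the log scale). The study inputs below are hypothetical and are not the studies cited above; the function names are also hypothetical.

```python
from math import exp, log, sqrt
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.975)


def log_rr_and_se(rr: float, lower: float, upper: float) -> tuple[float, float]:
    """log(RR) and its standard error recovered from a reported 95% CI."""
    return log(rr), (log(upper) - log(lower)) / (2 * Z95)


def pool_fixed_effect(studies: list[tuple[float, float, float]]) -> tuple[float, float, float]:
    """Inverse-variance weighted pooled RR with its 95% CI (fixed-effect model)."""
    weight_sum = 0.0
    weighted_log_rr = 0.0
    for rr, lower, upper in studies:
        beta, se = log_rr_and_se(rr, lower, upper)
        weight = 1.0 / se ** 2  # precise studies carry more weight
        weight_sum += weight
        weighted_log_rr += weight * beta
    pooled = weighted_log_rr / weight_sum
    pooled_se = 1.0 / sqrt(weight_sum)
    return exp(pooled), exp(pooled - Z95 * pooled_se), exp(pooled + Z95 * pooled_se)


# Hypothetical (RR, lower, upper) triples, for illustration only
hypothetical = [(1.30, 1.00, 1.69), (1.10, 0.85, 1.42), (1.25, 0.90, 1.74)]
print(pool_fixed_effect(hypothetical))
```

Because the weights are inverse variances, adding studies narrows the pooled interval, which is why combining several individually non-significant studies can yield a (borderline) significant overall RR. A random-effects model would widen the interval if the studies were heterogeneous.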

Considerable debate has surrounded the interpretation of the statistical association between leukaemia and other cancers in childhood and antenatal diagnostic radiography [9, 15, 21], but many of the objections to a cause-and-effect explanation have now been met [21, 36]. For example, cohort studies of antenatal exposure to diagnostic x-rays have not found a raised risk of childhood leukaemia. However, the only such cohort study with sufficient statistical power to seriously challenge the findings of the OSCC was that of Court Brown et al [50], and one of the authors of this study (Richard Doll) later questioned the accuracy of the linkage between the mothers and their children in this study and felt that its findings could not be relied upon [21, 51]. A recent cohort study in Ontario, based on record linkage of around 5500 mothers exposed to diagnostic radiation during pregnancy and their exposed offspring, identified 4 cases of childhood cancer against 5.5 expected from unexposed children born in Ontario (O/E=4/5.5=0.72; 95% CI: 0.23, 1.75) [52]; further details of the cases (e.g. cancer type or diagnostic procedure) could not be reported because of patient confidentiality considerations. Of interest in this study is that almost three-quarters of the antenatal examinations were CT scans, about a quarter of which were of the pelvis, abdomen or spine. However, in the absence of detailed dosimetry the interpretation of this study is problematic, and the very small number of cases means that the results are almost certainly compatible with the findings of earlier case-control studies.

However, the finding that the relative risks of childhood leukaemia and of all the other typical cancers of childhood are raised to a similar extent, unlike the pattern of risk when exposure occurs after birth, is an outstanding issue that requires a satisfactory resolution [15, 21]. Nonetheless, the two cases of cancer other than leukaemia incident before the age of 15 years among the Japanese atomic-bomb survivors irradiated in utero (a fatal hepatoblastoma and a non-fatal Wilms' tumour) compare with, at most, 0.28 cases expected from contemporaneous Japanese national rates, and represent a statistically significant excess: O/E=2/0.28=7.14 (95% CI: 1.20, 23.60) [9]. This finding supports the idea that intrauterine exposure increases the risk of the common childhood cancers, but that, with the exception of leukaemia (and thyroid cancer, which is rare in childhood), this sensitivity to induction by radiation becomes much less (or is absent) after birth. One explanation could be that the cells of origin of the typical cancers of childhood other than leukaemia remain sensitive throughout gestation but 'switch off' at birth, a suggestion that has important general implications for the aetiology of the common childhood cancers [21].

To obtain a risk estimate (excess risk per unit dose) from the case-control studies of medical diagnostic exposure, estimates of fetal doses are required. The only study for which a reliable estimate of fetal dose is available (the Adrian Committee's estimate of 6.1 mGy for 1958 [53, 54]) and which is large enough to give an acceptably precise risk estimate is the OSCC, from which an ERR coefficient of 0.051 (95% CI: 0.028, 0.076) mGy−1 at fetal doses of ∼10 mGy is obtained for all childhood cancers combined [9]. Since the results of the OSCC indicate that similar values of RR exist for both childhood leukaemia and other common childhood cancers [47], this ERR coefficient is taken to be applicable to childhood leukaemia. Applying this ERR coefficient to the baseline incidence rate of childhood leukaemia in Great Britain during the period when the OSCC was conducted, of about 600 cases per million live births, gives an excess absolute risk (EAR) coefficient of 3 (95% CI: 2, 5) × 10−5 mGy−1 [9]. However, the uncertainties surrounding these risk estimates are considerable. There are reasons to believe that the data obtained from the later years of the OSCC could be less reliable, so that these estimates may overestimate the risk by perhaps a factor of four if the observed increase in the relative risk associated with an antenatal x-ray examination for births in the early-1970s is an artefact [9]; this is a tentative proposition, however, since the appreciable uncertainty associated with the magnitude of fetal doses received during antenatal radiography extends into the 1970s [55].
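The EAR coefficient above follows from multiplying the ERR coefficient by the baseline risk (a multiplicative model). The short sketch below reproduces the arithmetic for the central estimate and the 95% limits, using the baseline of about 600 cases per million live births quoted above; the function name is hypothetical.

```python
def excess_absolute_risk(err_per_mgy: float, baseline_per_birth: float) -> float:
    """EAR coefficient (excess cases per live birth per mGy) = ERR coefficient x baseline risk."""
    return err_per_mgy * baseline_per_birth


BASELINE_GB = 600e-6  # about 600 childhood leukaemia cases per million live births

# Lower 95% limit, central estimate and upper 95% limit of the OSCC ERR/mGy
for err in (0.028, 0.051, 0.076):
    print(f"{excess_absolute_risk(err, BASELINE_GB):.2e} per mGy")
```

The results (about 1.7, 3.1 and 4.6 × 10−5 per mGy) round to the quoted 3 (95% CI: 2, 5) × 10−5 mGy−1.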

These ERR and EAR coefficients obtained from the OSCC may be compared with those derived from the Japanese atomic-bomb survivors (see section 2.2 above). An ERR coefficient for childhood leukaemia may be derived from those survivors exposed in utero as 0 (95% CI: 0, 50) Gy−1, and although the absence of cases among these Japanese survivors is noteworthy, the 95% confidence interval of (0, 50) Gy−1 for the ERR coefficient is not incompatible with that derived from the OSCC data, (28, 76) Gy−1 [9], and as discussed above, there may well be other reasons for the absence of leukaemia cases among the survivors irradiated in utero. When this ERR coefficient is applied to the background absolute rate of childhood leukaemia mortality in Japan for the mid-20th century of 270 deaths per million live births (which at this time was effectively equivalent to the incidence rate, and is <50% of the incidence rate in Great Britain when the Oxford Survey was conducted), an upper 95% confidence limit for the EAR coefficient of 0.014 Gy−1 is obtained [9], which is not statistically compatible with the equivalent EAR coefficient obtained from the OSCC of 0.03 (95% CI: 0.02, 0.05) Gy−1. This statistical incompatibility of the EAR coefficients contrasts with the compatibility of the ERR coefficients, illustrating the importance of the assumptions made about the transfer of the risk (whether ERR or EAR, or some combination of the two) between populations when the background rate differs to a material extent—in other words, how much (if at all) does radiation interact with those factors that determine the risk of childhood leukaemia in the absence of exposure to radiation. 
This evidence offers support, albeit weak, to the idea that transfer of the ERR is more relevant than transfer of the EAR for childhood leukaemia [56, 57]; but if there is a biological basis to the absence of childhood leukaemia among the Japanese survivors irradiated in utero [16] then comparisons with the Oxford Survey findings for, in general, much lower levels of exposure could be spurious.
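The role of the transfer assumption can be made concrete with a simple calculation. The sketch below projects a source-population risk to a target population with a different baseline, using a weight between pure ERR (multiplicative) transfer and pure EAR (additive) transfer. The arithmetic weighting scheme and the function name are hypothetical illustrations, not the method of the cited analyses; the inputs are the OSCC central values (per Gy) and the Japanese baseline quoted above.

```python
def transferred_ear(err_source: float, ear_source: float,
                    baseline_target: float, weight_err: float) -> float:
    """EAR coefficient projected to a target population.

    weight_err = 1 gives pure ERR (multiplicative) transfer and
    weight_err = 0 gives pure EAR (additive) transfer; intermediate values
    give an arithmetic mixture (a hypothetical weighting scheme).
    """
    if not 0.0 <= weight_err <= 1.0:
        raise ValueError("weight_err must lie in [0, 1]")
    ear_via_err = err_source * baseline_target
    return weight_err * ear_via_err + (1.0 - weight_err) * ear_source


# OSCC central values per Gy: ERR 51, EAR 0.03
# Target: Japanese mid-20th-century baseline of about 270 per million live births
for w in (1.0, 0.5, 0.0):
    print(w, round(transferred_ear(51.0, 0.03, 270e-6, w), 4))
```

Under pure ERR transfer the OSCC central estimate corresponds to about 0.014 Gy−1 at the Japanese baseline, close to the upper 95% limit derived from the survivors irradiated in utero, whereas pure EAR transfer carries 0.03 Gy−1 across unchanged. This illustrates why the apparent compatibility of the two data sets depends on the transfer model.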

In this respect, it is of interest that the ERR coefficient of 51 (95% CI: 28, 76) Gy−1 (at ∼10 mGy) for childhood leukaemia implied by the OSCC is compatible with that found in the Japanese LSS (of survivors exposed after birth) of, for example, 34.4 (95% CI: 7.1, 414) Sv−1 RBM dose using leukaemia incidence data [9]. A tentative inference may be drawn from this evidence that exposure of the fetus to low doses (<0.1 Gy) produces an ERR of childhood leukaemia per unit RBM dose that does not differ greatly from that applying to moderate doses (0.1–1.0 Gy) received in the early years of postnatal life, although the many caveats involved in reaching such a conclusion must be borne in mind. The recent cohort study of CT scans of young people found an ERR/Gy RBM dose for leukaemia of 36 (95% CI: 5, 120) [43], so this compatibility would appear to extend to exposure to low doses in childhood. However, the contrast with the common cancers of childhood other than leukaemia is noteworthy: the ERR/Sv for these other childhood cancers following low-dose exposure of the fetus is similar to that for leukaemia, but there is little evidence that exposure after birth increases the risk of these typical childhood cancers to any material extent (with the exception of thyroid cancer, which is not a typical childhood cancer). There is, nevertheless, substantial evidence that childhood exposures increase the risk of solid cancers in adult life [1–3, 8, 10, 11], and evidence that exposure in utero also increases the risk of these adult cancers [58].

4. Occupational exposure

4.1. Intrauterine exposure

Clearly, it is most unusual for children to be directly exposed to radiation in the workplace. However, studies have been conducted of cancer among the children of mothers who were occupationally exposed to radiation during pregnancy [59, 60]. There is some, rather weak, evidence from these studies [59, 60] of a possible influence of the doses received in utero upon the risk of childhood cancer, which is somewhat stronger for cancers other than leukaemia, but the average intrauterine doses in these studies are very low at <1 mGy, so these findings should not be over-interpreted.

The results of a study of offspring of women who were employed at the Mayak nuclear complex in Russia while pregnant have recently been published [61]. Among the 3226 exposed offspring (mean dose received in utero, 54.5 mGy), 4 childhood cancer deaths were identified, 2 from leukaemia, which generated a borderline statistically significant ERR for childhood cancer of 0.05 (95% CI: −0.0001, 1.334) per mGy [61]. The similarity of this ERR coefficient to that found using OSCC data is notable, although the small number of deaths upon which this estimate is based leads to a wide confidence interval.

4.2. Preconceptional exposure

A statistical association between the risk of childhood leukaemia and the recorded dose of external radiation received by men while working at the Sellafield nuclear installation in Cumbria, England, before the conception of their children suggested a major heritable genetic link between radiation exposure of the testes of fathers and leukaemia in their offspring [62]. The authors of this case-control study proposed that the association could explain statistically a notable 'cluster' of childhood leukaemia cases in the village of Seascale, adjacent to Sellafield, that was reported in 1983. However, the association was based upon just four cases of childhood leukaemia (and a similarly small number of controls) with cumulative paternal preconceptional doses in excess of 100 mSv, and it had not been found by previous epidemiological studies, notably by studies of cancer in the offspring of the Japanese atomic-bomb survivors [63]. Nonetheless, the association received considerable attention, and a large programme of research was initiated. One important finding of an early follow-on study was that although the original association was driven by three cases of childhood leukaemia born to mothers resident in Seascale, only 7% of Cumbrian births with fathers who had received a preconceptional dose as a Sellafield employee were to mothers resident in the village, and these Seascale births tended to be associated with lower occupational doses, a distribution that would be very unusual if the association were causal [64].

After substantial investigation, very little support for a cause-and-effect interpretation of the original statistical association between childhood leukaemia and paternal preconceptional irradiation has been found. Table 1 shows the results of the principal epidemiological studies of paternal preconceptional exposure to radiation and childhood leukaemia that have been reported since the original study of Gardner et al [62], and demonstrates that these studies have not confirmed the statistical association—the hypothesis of a substantial link between childhood leukaemia and paternal preconceptional irradiation has now been effectively abandoned [72, 73].

Table 1. The relative risk (RR), and 95% confidence interval (CI), of leukaemia and non-Hodgkin's lymphoma combined (LNHL) at 100 mSv cumulative recorded paternal preconceptional dose from external sources of radiation received while working at the Sellafield nuclear complex, as reported by Dickinson and Parker [65] from their cohort study of live births in Cumbria during 1950–91. Results for the offspring of Sellafield workers are given for births in the village of Seascale and in Cumbria outside Seascale. The Sellafield findings are compared with the results of five studies that have used independent data. (Table after Wakeford [66].)

| Study | Dose–response modelᵃ | RR (95% CI) |
|---|---|---|
| All Sellafield radiation workersᵇ,ᶜ | Exponentialᵈ | 1.6 (1.0, 2.2) |
| Seascale subgroup | Exponentialᵈ | 2.0 (1.0, 3.1) |
| Outside Seascale subgroup | Exponentialᵈ | 1.5 (0.7, 2.3) |
| Japanese atomic-bomb survivors (paternal dose only used in analysis)ᵉ,ᶠ,ᵍ | Linear | <0.98 (<0.98, 1.10) |
| | Exponential | 0.76 (<0.31, 1.03) |
| Ontario radiation workersʰ,ᶠ,ᵍ | Linear | 0.63 (<0.27, 3.40) |
| | Exponential | 0.75 (0.07, 3.31) |
| Danish Thorotrast patientsᵇ,ᶠ | Linear | <0.97 (<0.97, 1.56) |
| | Exponential | <0.11 (<0.11, 1.11) |
| British radiation workers (RLS)ʰ,ⁱ,ʲ | Exponentialᵏ | 0.92 (0.28, 2.98) |
| US 'Three Site' radiation workersʰ,ˡ | Linear | 0.75 (<0.75, 3.5) |

ᵃ Linear or exponential dose–response model fitted to the data. ᵇ Age at diagnosis: 0–24 years. ᶜ The original study of Gardner et al [62] reported a RR of 8.30 (95% CI: 1.36, 50.56) for the cumulative paternal preconceptional dose category ≥100 mSv and using 'local controls', based on 4 cases and 3 controls; 3 of the cases were born to mothers resident in Seascale. The reasons for the difference in RR from the case-control study of Gardner et al [62] and that from the cohort study of Dickinson and Parker [65] are set out by Dickinson et al [67]. ᵈ Exponential dose–response model assumed to have been used by the authors. ᵉ Age at diagnosis: 0–19 years. ᶠ Based on the results of Little et al [68]. ᵍ Leukaemia only. ʰ Age at diagnosis: 0–14 years. ⁱ RLS: record linkage study [69]. ʲ Overlap with Dickinson and Parker [65] of one case (born in Cumbria outside Seascale and diagnosed after the end of the period studied by Gardner et al [62]; paternal preconceptional dose <50 mSv). ᵏ Adjusted by the authors for radiation worker status. ˡ Hanford, Idaho Falls, Oak Ridge workers; Sever et al [70] (see Wakeford [71]).

5. Environmental exposure

5.1. Natural sources

Recently developed risk models for leukaemia suggest that ubiquitous natural background radiation in Great Britain, where the average annual equivalent dose to the RBM of children from this source is ∼1.3 mSv, may account for around 15% of cases of childhood leukaemia, although the uncertainties associated with this estimate are considerable [56, 57, 74]. Epidemiological studies have been unable, in general, to detect the influence of natural background radiation upon the risk of childhood leukaemia, but this may well be due to a lack of statistical power resulting from small RBM doses and insufficient geographical variation in exposure. Little et al [75] have demonstrated that a case-control study covering all of Great Britain would require at least 8000 cases of childhood leukaemia to have adequate statistical power (∼80%) of detecting the predicted influence of natural sources of exposure to external γ-radiation and inhaled radon upon childhood leukaemia risk; most of the RBM equivalent dose, and therefore most of the childhood leukaemia risk, is from external sources of γ-rays rather than radon. Record-based case-control studies of this size in Great Britain are feasible (see below) through use of the National Registry of Childhood Tumours (NRCT), although for the large numbers of cases and controls included in these studies individual doses have to be estimated from a database of available measurements rather than specific measurements in the homes of study subjects, and the geographical resolution of γ-ray dose-rate measurements is rather limited at present.
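The proportion of cases attributable to an ubiquitous exposure can be illustrated with a deliberately simplified model. The sketch below assumes a single linear ERR coefficient per mSv of cumulative RBM dose and a rare disease, so that expected cases are proportional to the mean RR over the population and the attributable fraction is mean ERR / (1 + mean ERR). The risk models cited above are age- and time-dependent, so this is not how the 15% figure was derived; the coefficient and doses in the example are hypothetical placeholders, as is the function name.

```python
def attributable_fraction(err_per_msv: float, doses_msv: list[float]) -> float:
    """Fraction of cases attributable to exposure under a linear ERR model.

    With RR(D) = 1 + beta * D for each child, expected cases are proportional
    to the mean RR, so AF = mean ERR / (1 + mean ERR), relative to zero dose.
    """
    if not doses_msv:
        raise ValueError("at least one dose is required")
    mean_err = sum(err_per_msv * d for d in doses_msv) / len(doses_msv)
    return mean_err / (1.0 + mean_err)


# Hypothetical ERR coefficient and cumulative RBM doses, for illustration only
print(round(attributable_fraction(0.05, [2.0, 5.0, 8.0]), 3))
```

Because the attributable fraction depends only on the mean ERR in this linear model, a modest per-unit risk applied to a dose that everyone receives can still account for an appreciable share of cases, even when the dose differences between individuals are too small for an epidemiological study to detect easily.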

A nationwide record-based case-control study of childhood cancer in Denmark reported a statistically significant association between childhood acute lymphoblastic leukaemia (ALL) and residential exposure to radon [76]; the study included 860 cases of ALL diagnosed during 1968–94. The authors suggested that, on the basis of this association, 9% of Danish childhood ALL cases could be attributable to radon. Although not explicitly reported, the 95% confidence interval for this attributable proportion can be calculated from data presented in the paper to be (1%, 21%), a lower 95% confidence limit that is likely to be compatible with what would be predicted by conventional risk assessments. However, the study is considerably underpowered [75], implying that the nominally significant association is likely to be due to chance. The findings would need to be confirmed by a study of sufficient size using independent data before any reliable conclusion can be drawn about a radical underestimation by conventional models of the risk of childhood leukaemia from exposure to radon and its decay products. This Danish study did not consider exposure to external γ-radiation, and would in any case have had insufficient power to do so effectively.

An illuminating contrast may be drawn between the association found by this Danish study [76] and that found by the UK Childhood Cancer Study (UKCCS, an interview-based nationwide case-control study of childhood cancer diagnosed in Great Britain during the early-1990s) [77]: whereas the Danish study found a significantly positive trend of childhood ALL risk with domestic radon exposure, the UKCCS found a significantly negative trend (based upon 805 cases with measurements conducted in the home). However, in addition to lacking sufficient power to investigate this putative association, the UKCCS suffered from a low level of participation in this part of the study, which was related to socio-economic status and may well have introduced bias that could explain this unusual result. Lack of power and participation bias could also be major contributors to the absence of an association between childhood leukaemia and external γ-radiation reported by the UKCCS [75].

The Danish case-control study [76] used predicted residential radon concentrations calculated from a model based on a previous measurement programme and a number of explanatory variables such as house type and geology. These model predictions of radon concentrations in homes avoid the bias potentially associated with limited participation in a measurement programme conducted as an integral part of a case-control study, which has been a major problem in some studies (such as the UKCCS [77], see above); but given the variation in domestic radon concentrations, the model estimates inevitably introduce uncertainties whose influence upon risk estimates requires further investigation. Nevertheless, the case-control approach, using individually assessed doses and measures of background factors that could influence the risk of childhood leukaemia (and therefore potentially confound associations), avoids the shortcomings of geographical correlation studies using group-averaged doses and incidence rates. These shortcomings often make the findings of correlation studies difficult to interpret, as has apparently occurred in the geographical correlation study of residential exposure to radon and lung cancer [78].

Harley and Robbins [79] have suggested that the results of the Danish study might be explained by the dose received from inhaled radon and its decay products by circulating lymphocytes while present in the tracheobronchial epithelium. However, while the dose to individual lymphocytes in the tracheobronchial epithelium can be substantial and higher than the average dose to the RBM, circulating lymphocytes spend only a limited time within the tracheobronchial epithelium and the average dose received by the whole population of lymphocytes is likely to be of most relevance to the consequent risk of childhood ALL. Further, the dose received by haematopoietic stem cells within the RBM is conventionally understood to be the most pertinent with respect to the risk of radiation-induced childhood acute leukaemia, and radon delivers a relatively small component of the dose from natural background radiation to these cells. That the effect of radon exposure upon the risk of childhood leukaemia can be detected by a study of this size seems rather unlikely, and the positive association should not be over-interpreted; very large case-control studies involving tens of thousands of cases would be required to satisfactorily investigate the issue of the influence of radon upon the risk of childhood leukaemia [75].

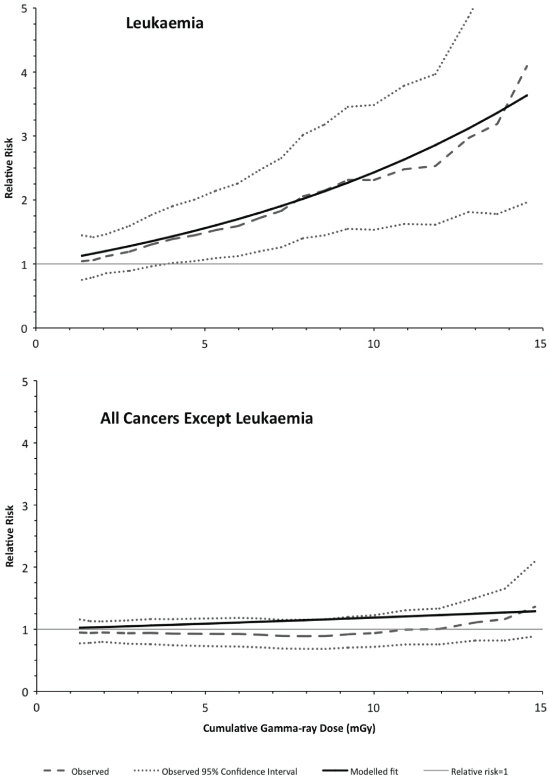

Recently, the initial findings of a nationwide record-based case-control study of childhood leukaemia and other cancers in Great Britain during 1980–2006 and exposure to natural sources of γ-radiation and radon have been published [80]. The study included 27 447 cases from the NRCT and 36 793 matched controls; of these, 9058 cases were of leukaemia, matched with 11 912 controls. Cumulative exposures to radiation were based upon estimates for the mother's residential address at the child's birth, using a predictive map based on a large database of residential measurements for radon, and the average of measurements taken in the county district (intermediately sized administrative areas) of residence for γ-rays. Owing to geographical matching of cases and controls, only 52% of potentially available cases contributed to the γ-ray analysis (compared with >95% for the radon analysis), although the power to detect the predicted excess risk of childhood leukaemia from γ-ray exposure was still ∼50%. For childhood leukaemia, an ERR of 0.12 (95% CI: 0.03, 0.22) per mSv of cumulative RBM dose from γ-radiation was found (figure 3), whereas for radon the ERR was 0.03 (95% CI: −0.04, 0.11) per mSv. For childhood cancers other than leukaemia, no significant associations with exposure from either γ-rays (figure 3) or radon were found. The result for childhood leukaemia and radon suggests that the proportion of childhood leukaemia incidence attributable to radon (if not zero) is at the lower end of the confidence interval reported from the much smaller Danish case-control study. Although work is in hand to improve the estimates of individual doses from γ-rays, it is of interest that the initial results of this study conform to what might be anticipated from prior evidence: a detectable association between childhood leukaemia and cumulative γ-ray exposure, but not for cumulative radon exposure, and no discernible association with either exposure for other childhood cancers.
Further, the childhood leukaemia and γ-ray RBM dose association is at a level compatible with the predictions of conventional models [80]. The initial findings of this study suggest that the childhood leukaemia risk estimates derived from data for the Japanese atomic-bomb survivors are broadly applicable to the very low dose-rates received from naturally occurring background γ-radiation.

Figure 3. Variation of the observed (and associated 95% confidence interval) and fitted relative risk with the assessed cumulative dose from natural background gamma-rays, for childhood leukaemia (upper panel) and all childhood cancers other than leukaemia (lower panel). After Kendall et al [80].

5.2. Radioactive contamination

Reports of excesses of childhood leukaemia incidence near certain nuclear installations have led to suggestions that, since conventional risk assessments have found that assessed radiation doses from radioactive discharges are far too low to account for the excess cases, the risk of childhood leukaemia resulting from the intake of man-made radionuclides has been grossly underestimated (by a factor in excess of 100, in most instances greatly in excess of 100) [81–83]. There is little doubt that 'clusters' of cases of childhood leukaemia have occurred in the vicinity of the Sellafield and Dounreay nuclear establishments in the UK, and of the Krümmel nuclear power station in Germany [5], but in the absence of a detailed understanding of how the major causes of childhood leukaemia may influence temporal and geographical patterns of incidence the accurate interpretation of these findings is problematical. So, for example, there is evidence for a general heterogeneity in the geographical distribution of childhood leukaemia incidence in Great Britain, which points to a non-uniform distribution of major risk factors in the population of children [84], and the most extreme recorded cluster of childhood leukaemia has been reported from Fallon in Nevada, far from any nuclear facility [85]. Nevertheless, the possibility of a serious underestimation of the risk from 'internal emitters' has been examined in some detail [86].

Atmospheric nuclear weapons testing in the late-1950s and early-1960s led to ubiquitous exposure to the radioactive debris of these explosions [87, 88] and to the intake of a range of radionuclides very similar to that released from nuclear reactors and spent nuclear fuel reprocessing plants. This provides an opportunity to investigate the influence of weapons testing fallout upon childhood leukaemia incidence around the world, and so to test the suggestion that the risk of childhood leukaemia from internally deposited anthropogenic radioactive material has been seriously underestimated. Such a study is not, however, straightforward: large-scale, accurate and complete registration of childhood leukaemia in the early-1960s and before was not commonplace, and childhood leukaemia mortality data are not an acceptable alternative because treatment, and hence survival, was becoming increasingly successful after 1960. Nonetheless, Wakeford et al [89] collated and examined childhood leukaemia incidence data from eleven large-scale registries on three continents and found no evidence that the marked peak of intake of man-made radionuclides present in nuclear weapons testing fallout had detectably influenced the subsequent risk of childhood leukaemia substantially beyond the predictions of conventional leukaemia risk models. This provides strong evidence against the suggestion that the risk arising from the intake of anthropogenic radionuclides can account for the reports of raised levels of childhood leukaemia near some nuclear installations.

It would be wrong to infer from this, however, that nuclear weapons testing fallout has not increased the risk of childhood leukaemia to the extent expected from standard models. Darby et al [90] found that childhood leukaemia incidence in the Nordic countries during the period following the highest fallout from test explosions was slightly, and marginally significantly, higher than in adjacent periods, and the small observed increase was compatible with that predicted by the BEIR V Committee leukaemia risk model [13]. Stevens et al [91] conducted a case-control study of leukaemia deaths in south-west Utah and found a significantly raised relative risk of acute leukaemia mortality among young people who had received the highest assessed doses (>6 mGy) from radioactive fallout from nuclear weapons explosions at the Nevada Test Site in the neighbouring state; their results were compatible with the number of radiation-induced leukaemia deaths predicted from reconstructed doses and the risk models of the BEIR V Report [13]. Excesses of childhood leukaemia incidence have been reported from the area around the Semipalatinsk Nuclear Test Site in present-day Kazakhstan [92], and the preliminary findings of a nested case-control study of leukaemia at all ages in settlements downwind of the site indicate a raised relative risk at high estimated doses (>2 Sv) [93]. As yet, however, no detailed investigation of childhood leukaemia in relation to the doses assessed to have been received as a result of these nuclear explosions has been carried out.

Studies of childhood leukaemia and exposure to radioactive contamination resulting from large-scale releases from nuclear facilities have produced mixed findings. Investigations following the Chernobyl nuclear reactor accident in 1986 have failed to find unequivocal evidence of a raised risk of childhood leukaemia [94]. Davis et al [95] conducted a detailed case-control study of 421 cases of acute leukaemia among those who were in utero or <6 years of age at the time of the accident and who were diagnosed before the end of 2000 while resident in heavily contaminated areas of the former USSR. The mean RBM dose assessed to have been received by the affected children was around 10 mGy, so this study has limited statistical power. Although age at diagnosis could be as high as 19 years, the median age at diagnosis was 7 years. Davis et al [95] found a statistically significant positive dose–response in Ukraine, but not in Belarus or Russia; for all three countries combined, the ERR was 0.032 (95% CI: 0.009, 0.084) per mGy, which is not incompatible with what would be expected from recent risk models. However, Davis et al [95] noted 'a disproportionate number of controls from less heavily contaminated [districts]' of Ukraine. Moreover, a similar case-control study in Ukraine [96] obtained a dose–response that, while significantly positive, had a slope, an ERR of 0.018 per mGy (95% CI: 0.002, 0.081), substantially lower than the ERR of 0.079 per mGy (95% CI: 0.022, 0.213) previously found for Ukraine by Davis et al [95]. This raises serious questions about the reliability of the data used in these studies, in particular the representativeness of the controls selected in Ukraine [97].

Childhood leukaemia incidence in Europe outside the former USSR does not appear to have been perceptibly influenced by contamination from the Chernobyl accident [98–101], which was at a much lower level than in the most affected areas of the former USSR [94], although the Europe-wide study (ECLIS) considered only cases diagnosed before 1992, i.e. during the first few years following the accident [98], a rather short post-accident period.
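As a rough arithmetic check on the Chernobyl estimates, the sketch below assumes the linear excess relative risk model implied by an ERR expressed per unit dose (RR = 1 + ERR × dose). It also assumes a radiation weighting factor of 1 for the low-LET radiation concerned, so that an ERR per Gy of RBM dose can be set beside the ERR/Sv of ∼50 derived from models based on the Japanese atomic-bomb survivors. The function names are illustrative only; the numerical inputs are those quoted above.

```python
def relative_risk(err_per_mgy: float, dose_mgy: float) -> float:
    """Relative risk under a linear excess relative risk model: RR = 1 + ERR x dose."""
    return 1.0 + err_per_mgy * dose_mgy


def per_mgy_to_per_gy(err_per_mgy: float) -> float:
    """Rescale a slope per mGy to a slope per Gy (1 Gy = 1000 mGy)."""
    return err_per_mgy * 1000.0


# (central estimate, lower 95% CI, upper 95% CI), ERR per mGy, as quoted in the text
davis_combined = (0.032, 0.009, 0.084)   # Davis et al [95], three countries combined
davis_ukraine = (0.079, 0.022, 0.213)    # Davis et al [95], Ukraine only
ukraine_second = (0.018, 0.002, 0.081)   # later Ukrainian case-control study [96]

MEAN_DOSE_MGY = 10.0            # approximate mean RBM dose of the affected children
CONVENTIONAL_ERR_PER_SV = 50.0  # approximate model-based value (atomic-bomb survivors)

rr_at_mean_dose = relative_risk(davis_combined[0], MEAN_DOSE_MGY)
err_per_gy = [per_mgy_to_per_gy(v) for v in davis_combined]
slope_ratio = davis_ukraine[0] / ukraine_second[0]

# Assumption: 1 Gy of this low-LET RBM dose is treated as 1 Sv,
# so the model value can be compared directly with the per-Gy interval.
model_value_in_ci = err_per_gy[1] <= CONVENTIONAL_ERR_PER_SV <= err_per_gy[2]

print(f"RR at {MEAN_DOSE_MGY:.0f} mGy: {rr_at_mean_dose:.2f}")
print(f"ERR per Gy: {err_per_gy[0]:.0f} (95% CI: {err_per_gy[1]:.0f}, {err_per_gy[2]:.0f})")
print(f"Ratio of the two Ukrainian central slopes: {slope_ratio:.1f}")
print(f"Model value ~{CONVENTIONAL_ERR_PER_SV:.0f}/Sv inside CI: {model_value_in_ci}")
```

Under these assumptions the pooled slope corresponds to an ERR of about 32 per Gy, of the same order as ∼50/Sv and with that value inside its confidence interval, whereas the two central estimates for Ukraine differ by a factor of roughly four.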

Studies of leukaemia in the riverside communities of the Techa River, which was heavily contaminated by early releases of highly radioactive liquid effluent from the Mayak nuclear complex in the Southern Urals of Russia, have found significantly raised risks related to the level of exposure [102, 103]. The most recent study [103] produced an ERR/Gy for leukaemia at all ages of 4.9 (95% CI: 1.6, 14.3), the mean RBM dose among about 30 000 cohort members being 0.3 Gy. Childhood leukaemia has not been considered separately in detail, but it would appear that only a few cases of leukaemia have occurred among exposed young persons [103]. The reconstruction of RBM doses for this study is complex, and the RBM dose estimates are currently undergoing revision [104]; nonetheless, the contribution of 90Sr to the dose makes this study of particular interest.
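To give a sense of scale for the Techa River estimate, the sketch below applies the quoted ERR/Gy (central value and 95% CI bounds) at the cohort mean RBM dose of 0.3 Gy, assuming the linear excess relative risk model implied by an ERR expressed per Gy. It also computes the fraction of risk attributable to exposure at that dose, ERR·d/(1 + ERR·d). This applies to a hypothetical individual at the mean dose, not to the cohort as a whole (whose attributable fraction depends on the full dose distribution), and it refers to leukaemia at all ages rather than childhood leukaemia. The function names are illustrative.

```python
def excess_relative_risk(err_per_gy: float, dose_gy: float) -> float:
    """Excess relative risk at a given dose under a linear model: ERR/Gy x dose."""
    return err_per_gy * dose_gy


def attributable_fraction(err_per_gy: float, dose_gy: float) -> float:
    """Share of the risk at this dose attributable to exposure: ERR*d / (1 + ERR*d)."""
    excess = excess_relative_risk(err_per_gy, dose_gy)
    return excess / (1.0 + excess)


# ERR/Gy for leukaemia at all ages in the Techa River cohort, as quoted in the text
techa = {"central": 4.9, "lower 95% CI": 1.6, "upper 95% CI": 14.3}
MEAN_DOSE_GY = 0.3  # mean RBM dose among cohort members

for label, slope in techa.items():
    rr = 1.0 + excess_relative_risk(slope, MEAN_DOSE_GY)
    af = attributable_fraction(slope, MEAN_DOSE_GY)
    print(f"{label}: RR at mean dose = {rr:.2f}, attributable fraction = {af:.2f}")
```

The wide interval on the slope carries straight through: at the mean dose, the relative risk ranges from below 1.5 to above 5 across the quoted confidence bounds.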

Finally, in the early-1980s 60Co-contaminated steel was inadvertently used in the construction of buildings in Taiwan, which led to increased exposure of more than 10 000 people to γ-radiation. Hwang et al [105] examined cancer incidence in a cohort of ∼6000 individuals assessed to have received an average cumulative dose of ∼50 mGy. They found a statistically significant association between the risk of leukaemia (excluding chronic lymphocytic leukaemia) at all ages and the reconstructed cumulative dose, but gave no details of childhood leukaemia specifically.

6. Conclusions

There is a broad consistency of results from the epidemiological study of childhood leukaemia following the receipt of moderate-to-high doses of ionising radiation: after a short latent period of around two years, the excess relative risk per unit RBM dose rises to a high level for a few years before gradually attenuating to a low level two decades or so after exposure, so that the radiation-related excess relative risk is expressed predominantly as a 'wave' with time since exposure. The evidence from case-control studies of antenatal medical exposure to diagnostic x-rays suggests that the excess relative risk resulting from low-dose exposure is compatible with the predictions of radiation-induced leukaemia risk models based upon the experience of the Japanese atomic-bomb survivors exposed after birth to moderate-to-high doses at a high dose-rate, although the uncertainties inherent in such a comparison are considerable. Studies of stable chromosome aberrations in Japanese atomic-bomb survivors exposed in utero indicate that the cells of origin for leukaemia in childhood could be hypersensitive to cell-killing. This effect has to be borne in mind when childhood leukaemia risk estimates obtained from exposure in utero to low doses are compared with those derived from intrauterine exposure to moderate-to-high doses, since the latter could be reduced by such an effect; the effect might persist for some time after birth, and this needs to be the subject of further research. The recently reported findings from large studies of the influence of paediatric CT scans and of natural background radiation upon childhood leukaemia risk provide further evidence that low-level exposure to radiation increases the risk of childhood leukaemia, to a degree that is consistent with the predictions of models based upon data on moderate-to-high doses received after birth at a high dose-rate.
It would appear from the collective evidence that the assumptions made about the radiation-induced risk of childhood leukaemia for the purposes of radiological protection are broadly correct.
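The temporal 'wave' summarised above can be visualised with a simple curve. The sketch below is purely illustrative: it assumes zero excess before a two-year latent period and a gamma-shaped rise and fall thereafter, with a hypothetical time constant (`tau`) chosen so that the excess peaks a few years after exposure and has largely disappeared two decades later. Neither this functional form nor its parameters are those of any published leukaemia risk model; they merely reproduce the qualitative pattern described in the text.

```python
import math


def illustrative_err_wave(t_years: float, peak_err: float = 1.0,
                          latency: float = 2.0, tau: float = 3.0) -> float:
    """Hypothetical ERR per unit dose as a function of time since exposure.

    Zero before `latency`; afterwards a gamma-shaped curve x*exp(1 - x),
    with x = (t - latency) / tau, which peaks at t = latency + tau with
    value `peak_err`. All parameter values are illustrative assumptions.
    """
    if t_years < latency:
        return 0.0
    x = (t_years - latency) / tau
    return peak_err * x * math.exp(1.0 - x)


# Tabulate the relative shape of the wave at a few times since exposure
for t in (1, 3, 5, 10, 15, 20, 25):
    print(f"{t:>2} years: {illustrative_err_wave(t):.3f} x peak")
```

Changing `latency` or `tau` moves the peak (which falls at `latency + tau`) and alters how quickly the excess attenuates; fitted risk models use other functional forms.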

Acknowledgments

The author wishes to acknowledge the many fruitful discussions held with many people over the years that have contributed to this review, and to thank the anonymous reviewers for their valuable suggestions.