Abstract

Event-related potential (ERP)-based brain–computer interfaces (BCIs) employ differences in brain responses to attended and ignored stimuli. Typically, visual stimuli are used. Tactile stimuli have recently been suggested as a gaze-independent alternative. Bimodal stimuli could evoke additional brain activity due to multisensory integration which may be of use in BCIs. We investigated the effect of visual–tactile stimulus presentation on the chain of ERP components, BCI performance (classification accuracies and bitrates) and participants' task performance (counting of targets). Ten participants were instructed to navigate a visual display by attending (spatially) to targets in sequences of either visual, tactile or visual–tactile stimuli. We observe that attending to visual–tactile (compared to either visual or tactile) stimuli results in an enhanced early ERP component (N1). This bimodal N1 may enhance BCI performance, as suggested by a nonsignificant positive trend in offline classification accuracies. A late ERP component (P300) is reduced when attending to visual–tactile compared to visual stimuli, which is consistent with the nonsignificant negative trend of participants' task performance. We discuss these findings in the light of affected spatial attention at high-level compared to low-level stimulus processing. Furthermore, we evaluate bimodal BCIs from a practical perspective and for future applications.


1. Introduction

1.1. Event-related potential-based brain–computer interfaces

Event-related potentials (ERPs) differ for attended and ignored stimuli. This makes them suitable for use in brain–computer interfaces (BCIs) where users can select options (e.g., the commands 'left' or 'right') by attending to the corresponding stimulus (target) while ignoring other stimuli (nontargets). The successive components in an ERP are related to different levels of stimulus processing, starting from low-level sensing up to high-level cognition. ERP-BCIs have focused on the high-level P300 ERP component which is stronger for targets than for nontargets (Farwell and Donchin 1988). It occurs rather late because a perceived stimulus first needs to be endogenously (with voluntary control) recognized and categorized as the target. Spatial attention can also modulate earlier ERP components, such as the N1 (low level) and N2 (intermediate level), which are enhanced if stimuli occur within the focus of attention (Mangun and Hillyard 1991, Wang et al 2010). This effect is useful for ERP-BCIs employing spatially distributed stimuli because targets and nontargets can be endogenously discriminated based on their location. Attention can be focused and sustained at the target location even before the target is presented, allowing endogenous modulation of high- and low-level ERP components. Although given much less attention in BCI research, early ERP components are indeed different for targets and nontargets (Shishkin et al 2009) and may influence BCI performance because the EEG of the corresponding intervals is usually included during classification (e.g., Krusienski et al 2008).

Most ERP-BCIs use visual stimuli to present the user with options (e.g., Farwell and Donchin 1988). The drawback of visual ERP-BCIs is that their effectiveness depends to a large extent on the ability of users to gaze at the visual stimuli (Brunner et al 2010, Treder and Blankertz 2010), which is not possible for all users (e.g., due to paralysis) or in applications that require users to look elsewhere, such as driving (Thurlings et al 2010). Therefore, novel approaches employ brain signals related to covert visual spatial attention in various types of BCIs (Allison et al 2008, Bahramisharif et al 2010) and gaze-independent visual-based ERP-BCIs are being developed (Acqualagna and Blankertz 2011, Liu et al 2011, Treder et al 2011). In addition, interest has grown in employing alternative sensory modalities such as audition (Höhne et al 2011, Nijboer et al 2008, Schreuder et al 2011). However, in many situations (e.g., in gaming or driving), both the visual and auditory sensory channels are already heavily loaded (Van Erp and Van Veen 2004). Recent research has shown that tactile stimuli may be a viable alternative. Brouwer and Van Erp (2010) demonstrated the feasibility of employing tactile stimuli (tactors) around the waist in a tactile ERP-BCI (see also Brouwer et al 2010). Tactors around the waist correspond naturally to navigation directions (Van Erp 2005), which makes a tactile ERP-BCI especially interesting for navigation applications.

1.2. Effects of multisensory integration on task performance and the ERP

The ERP-BCIs described above all rely on the sensory-specific (and higher level) processing of one modality only. Often, however, sensory inputs of more than one modality are integrated in the brain, causing additional neuronal activity. This phenomenon is called multisensory integration (for reviews, see Driver and Noesselt 2008, Ernst and Bülthoff 2004, and Stein and Stanford 2008) and may take place at perceptual stages (Molholm et al 2002, Philippi et al 2008), higher cognitive stages (Schröger and Widmann 1998) and/or during motor preparation and execution (Giray and Ulrich 1993). We are interested in if and how effects of multisensory integration can be exploited in a BCI to boost BCI performance. To investigate this, we created a bimodal ERP-BCI by the simultaneous presentation of gaze-dependent visual stimuli and gaze-independent tactile stimuli.

Multisensory integration has been investigated in a number of behavioural and ERP studies. Task performance has repeatedly been shown to benefit from bimodal (compared to unimodal) stimulus presentation, exhibiting reduced reaction times (e.g., Gondan et al 2005a, Miller 1991, Molholm et al 2002) and increased accuracy (Talsma and Woldorff 2005). Associated results from ERP studies revealed that multisensory integration starts as early as 50 ms after stimulus onset for audio-tactile stimuli in central/postcentral areas (Foxe et al 2000), 46 ms after stimulus onset for audio-(covert) visual stimuli (Molholm et al 2002) and between 80 and 125 ms for visual–tactile stimuli (Sambo and Forster 2009). These studies focused on the start of the integration and therefore only investigated the EEG until approximately 200 ms after stimulus onset. Although multisensory integration was previously thought of as an exogenous process (automatic, without voluntary control), the role of endogenous attention, which is crucial to BCI, has recently been acknowledged (for a review, see Talsma et al 2010). Talsma and Woldorff (2005) found that multisensory integration of audiovisual stimuli is modulated by endogenous attention at different stages of processing: starting as early as 80 ms and peaking at approximately 100, 190 and 370 ms after stimulus onset.

Note that the multisensory integration studies mentioned above differ from those performed in a BCI context with respect to the participants' task and attention involved and the characteristics of targets and nontargets. In a BCI paradigm, observers are presented with rapid sequences of stimuli that are physically similar. Only the observers' endogenously focused attention distinguishes between targets and nontargets. To date, only two studies have reported on the possible benefit of bimodal stimulus presentation in an ERP-BCI context. The first is from Brouwer et al (2010), who investigated bimodal (covert) visual–tactile (compared to unimodal visual or tactile) stimulus presentation and found a slight local parietal enhancement of the P300 and an overall enhancement of offline classification accuracies. However, that study was not fully in line with BCI because endogenous attention and exogenous attention were confounded, as the targets were always physically different from the nontargets. The second study (Belitski et al 2011) showed the positive effects of a bimodal audio-visual ERP-BCI paradigm (compared to both unimodal variants) on offline classification accuracies, but the underlying ERP components were not investigated.

1.3. Research questions and hypotheses

In the current study, our research questions are as follows:

- 1. To what extent does (spatially) attending to visual–tactile stimuli enhance ERP components compared with unimodal stimuli in an ERP-BCI paradigm?

- 2. If enhanced bimodal ERP components are found, can BCI performance benefit from them?

- 3. How is participants' task performance affected by attending to visual–tactile (compared to unimodal) stimuli?

Our hypotheses are that participants, who are asked to count the number of targets, perform better and that early ERP components (< 200 ms) are enhanced when attending to visual–tactile targets compared to unimodal ones. Finally, we expect that related features of the EEG enhance offline classification accuracies. We investigated these questions and hypotheses in a navigation ERP-BCI context. First, we determined the endogenous ERP components for attended targets in a visual, tactile and visual–tactile ERP-BCI context, and compared the ERP components to investigate the effect of stimulus modality. Subsequently, we explored how these endogenous ERP components can be employed in BCI and how stimulus modality affects BCI performance.

2. Method

2.1. Participants

Ten volunteers (seven men and three women with a mean age of 29.1 years and age range of 23–39 years) participated in this study. Prior to the experiment, two of them had participated in a tactile ERP-BCI experiment, and three had participated in a visual ERP-BCI experiment. All participants had normal or corrected-to-normal vision.

2.2. Task

The participants looked at a visual display (see figure 1(a)) that was divided into hexagons. Each hexagon was approximately 3.5° of visual angle in size. The participants navigated a blue disc (representing the participant's position) along a route, visualized by hexagons coloured a lighter shade of grey than the environment. The direction from the blue disc to the next hexagon on the route was the target direction (forward-right in figure 1(a)). The other directions were the five nontarget directions. Each direction corresponded to a unique stimulus; depending on the condition, this was an arrow on the visual display, a tactor in the tactile display (figure 1(a)) or both simultaneously. The six stimuli were presented sequentially in random order. The participants' tasks were to pay attention to the tactile, visual or visual–tactile stimulus corresponding to the desired navigation direction and to count the number of times it was presented. The participants reported the counted number at the end of each step by means of a keyboard. We used the reported numbers as a measure for task performance.

Figure 1. Overview of the experimental setup. (a) Top view: for each condition, the locations of the stimuli corresponding to all possible directions. Current targets are highlighted, and visual nontargets are here visualized semi-transparently for demonstration purposes only. (b) Side view. (c) Eighteen steps in each of two routes in one condition. (d) Ten repetitions of targets and nontargets in a step. (e) One target and five nontargets in each repetition.

2.3. Design

The experiment involved three conditions, named after the modality that the stimuli were presented in: visual, tactile and visual–tactile. Each condition was presented in each of two sessions. Within each session, one route for each condition was completed. The conditions were offered in random order during a session.

A route consisted of 18 navigation steps, back and forth over a path of 10 hexagons. We designed routes such that all directions were balanced, and these were randomly linked to conditions for each participant. Each of the 18 steps in a route consisted of 10 consecutive sequences of stimuli, i.e. 10 repetitions. In each repetition, each of the six stimuli was presented once in random order, with the constraint that there was at least one nontarget in between two targets of consecutive repetitions (see figures 1(c)–(e)). To prevent the number of targets in each step from always being equal to 10, we varied the number of presented targets for each step by adding one dummy sequence of six stimuli before and one after the 10 actual repetitions. In a dummy sequence, zero to three targets could occur, also with at least one nontarget in between two targets (see figure 1(d)). Thus, the number of targets within one step varied between 10 (from the ten repetitions) and 16. The EEG data recorded during the dummy sequences were not used in the analysis.
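The ordering constraint for the ten actual repetitions can be sketched as follows. This is a minimal Python illustration, not the authors' stimulus code; the names `make_step_sequence` and `TARGET`, and the redraw strategy, are assumptions. Because each repetition contains exactly one target, two targets can only be adjacent across a repetition boundary, so the sketch redraws a repetition whenever that would happen. Dummy sequences are left out because their exact composition is not specified.

```python
import random

N_STIMULI = 6  # six navigation directions, as in the task description
TARGET = 0     # hypothetical index of the current target direction


def make_step_sequence(n_repetitions=10, target=TARGET, rng=None):
    """Return a flat list of stimulus indices for the actual repetitions of one step."""
    if rng is None:
        rng = random.Random()
    sequence = []
    for _ in range(n_repetitions):
        while True:
            repetition = list(range(N_STIMULI))
            rng.shuffle(repetition)
            # Redraw if the previous repetition ended with the target and
            # this one would start with it (two targets back to back).
            back_to_back = (
                bool(sequence)
                and sequence[-1] == target
                and repetition[0] == target
            )
            if not back_to_back:
                break
        sequence.extend(repetition)
    return sequence
```

Passing a seeded `random.Random` instance makes a sequence reproducible, which is convenient when checking the constraint.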

Each participant's position was represented by a blue disc in the centre of a hexagon. At the end of each step, after the participant reported the counted number of targets, the blue disc automatically went to the next hexagon on the route as long as the final hexagon had not yet been reached. Then, 2.5 s after the number was reported, the next step began. One step took 25.54 s: 6 stimuli × (10 actual + 2 dummy repetitions) × (200 ms on time + 120 ms off time) = 23.04 s of stimulation, plus the 2.5 s interval after reporting.
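The step duration can be checked with a few lines of Python. The constant names are illustrative; the values are the ones given in the text.

```python
N_STIMULI = 6            # stimuli per repetition
ACTUAL_REPETITIONS = 10  # repetitions used in the analysis
DUMMY_REPETITIONS = 2    # one dummy sequence before and one after
ON_TIME_S = 0.200        # stimulus on time
OFF_TIME_S = 0.120       # stimulus off time
PAUSE_S = 2.5            # interval between reporting and the next step

stimulation_s = (
    N_STIMULI
    * (ACTUAL_REPETITIONS + DUMMY_REPETITIONS)
    * (ON_TIME_S + OFF_TIME_S)
)
step_s = stimulation_s + PAUSE_S
print(f"stimulation: {stimulation_s:.2f} s, step: {step_s:.2f} s")
```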

2.4. Materials

2.4.1. Visual stimuli and display

Visual stimuli were presented on a Samsung 19'' TFT monitor (1280 × 1024, 60 Hz) and consisted of yellow–green arrows starting from the blue disc in the current hexagon and pointing to a neighbouring hexagon (see figure 1(a)). These stimuli were sequentially presented for 200 ms (on time) and not visible in the following 120 ms (off time). Participants were allowed to gaze directly at the visual stimuli. The visual display was positioned horizontally (flat on a table) such that the visual and tactile stimuli were congruent in direction and corresponded with natural 2D navigation directions (see Thurlings et al 2012 for the importance of directional congruence in ERP-BCIs). The viewing distance was approximately 50 cm from the eyes to the centre of the visual display.

2.4.2. Tactile stimuli

Participants wore a vest (which could be adjusted to body dimensions) with integrated tactors. The tactors were custom built and consisted of a plastic case with a contact area of 1 × 2 cm containing a 160 Hz electromotor (TNO, the Netherlands, model JHJ-3, Van Erp et al 2007). To prevent the participants from perceiving auditory information from the tactors, we let them listen to pink noise via in-ear headphones during the experiment.

For this study, the tactors were arranged in groups of two at six locations in a circular layout on a horizontal plane (around the torso at approximately navel height). The locations of the tactors corresponded to the navigation directions of the task: left, right and two directions in between along both the frontal side and backside (see figures 1(a) and (b)). The on and off times of the tactors were the same as those for the visual stimuli (200 ms on and 120 ms off).

2.4.3. Visual–tactile stimuli

Bimodal visual–tactile stimuli were created by triggering corresponding visual and tactile stimuli simultaneously. Because the visual and tactile stimuli have different technical properties, the corresponding intensity–time functions differ. The maximum intensity of the tactile stimulus is reached within 50 ms after the visual stimulus has reached its maximum intensity.

2.4.4. EEG recording equipment

EEG was recorded from 60 nose-referenced scalp electrodes that used a common forehead ground (Brain Products GmbH, Germany). The impedance of each electrode was below 20 kΩ, as was confirmed prior to and during the measurements. EEG data were recorded with a hardware filter (0.05–200 Hz) and sampled at a frequency of 1000 Hz.

2.5. Data analysis

EEG, BCI and task performance analyses were performed using Matlab 2007b (The Mathworks, Natick, MA, USA).

2.5.1. EEG preprocessing and selection

To prepare the recorded EEG for further processing, the data were bandpass filtered again (0.5–30 Hz) and downsampled to 100 Hz. Such a bandpass filter reduces the influence of artefacts such as eye blinks (e.g., Guger et al 2009, Tereshchenko et al 2009). We were interested in the clean effects of endogenous attention on the ERP and attempted to isolate them. To this end, we used two strict rejection criteria, which were applied only to select data for the ERP analysis and not for the classification analysis. Finally, a difference measure was computed to isolate endogenous effects. The rejection criteria are described next, followed by the difference measure.

The first rejection criterion excluded EEG from the ERP analysis if it was likely to be contaminated by responses to targets presented shortly before or after the stimulus of interest, an approach similar to that of Treder and Blankertz (2010). To this end, we selected only EEG data associated with (non)targets when the three preceding and the three following presented stimuli were nontargets, i.e. when there were no (other) target responses between –960 and 1280 ms relative to (non)target onset. For the selected (non)targets, epochs from all electrodes were extracted from –320 to 750 ms relative to stimulus onset. This resulted in an average number of 246 target epochs (range 230–262) and 189 nontarget epochs (range 167–214) per participant. Similar numbers of epochs were selected per condition. The epochs were baseline corrected relative to the average voltage during the 320 ms preceding the stimulus onset.
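A minimal Python sketch of this selection and of the baseline correction is given below. It is an illustration under stated assumptions, not the authors' Matlab code: the function names are hypothetical, the signal is a plain list of samples for one channel at 100 Hz, and stimuli that lack three neighbours on either side of the stream are simply skipped, which the text does not specify.

```python
def isolated_indices(is_target, n_neighbours=3):
    """Indices of stimuli whose n preceding and n following stimuli are all nontargets."""
    keep = []
    for i in range(n_neighbours, len(is_target) - n_neighbours):
        before = is_target[i - n_neighbours:i]
        after = is_target[i + 1:i + 1 + n_neighbours]
        if not any(before) and not any(after):
            keep.append(i)
    return keep


def extract_epoch(signal, onset, fs=100, pre_ms=320, post_ms=750):
    """Cut one channel from -pre_ms to +post_ms around `onset` (a sample index)
    and subtract the mean of the pre-stimulus interval."""
    pre = pre_ms * fs // 1000    # 32 samples at 100 Hz
    post = post_ms * fs // 1000  # 75 samples at 100 Hz
    epoch = signal[onset - pre:onset + post]
    baseline = sum(epoch[:pre]) / pre
    return [value - baseline for value in epoch]
```

With a 320 ms stimulus onset asynchrony, three stimuli on either side correspond to the –960 to 1280 ms window mentioned above.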

As a second rejection criterion, we discarded all epochs within one repetition if epochs recorded at both frontal electrodes (Fp1 and Fp2) contained amplitude differences exceeding 100 µV, indicating eye movements. This was the case for 5.6% of the measurements. For all conditions, this left us with averages of 227 target epochs (range 107–262) and 183 nontarget epochs (range 130–209) per participant. Subsequently, the selected target and nontarget epochs were averaged per participant, per condition and per electrode.

Finally, we subtracted the averaged clean nontarget epochs from the averaged clean target epochs for each participant, each condition and each electrode. In this step, we removed exogenous (involuntary or automatic) attention effects. Further analyses were performed regarding this difference ERP (or endogenous ERP).
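The amplitude check and the subtraction step can be sketched in Python as follows. This is a hedged illustration: the helper names are hypothetical, and the "amplitude difference" is interpreted here as the peak-to-peak range within an epoch, which is an assumption about the criterion rather than a detail given in the text.

```python
def exceeds_threshold(epoch, threshold_uv=100.0):
    """True if the peak-to-peak amplitude of one epoch exceeds the threshold (assumed reading)."""
    return max(epoch) - min(epoch) > threshold_uv


def mean_epoch(epochs):
    """Sample-by-sample average over a list of equally long epochs."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]


def difference_erp(target_epochs, nontarget_epochs):
    """Averaged target response minus averaged nontarget response for one electrode."""
    target_mean = mean_epoch(target_epochs)
    nontarget_mean = mean_epoch(nontarget_epochs)
    return [t - n for t, n in zip(target_mean, nontarget_mean)]
```

In this sketch, `exceeds_threshold` would be applied to the Fp1 and Fp2 epochs of a repetition before the remaining epochs are passed to `difference_erp`.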

2.5.2. Identifying ERP components triggered by endogenously attended stimuli

We aimed at identifying and quantifying all ERP components triggered by endogenously attended stimuli occurring during the interval until 750 ms after stimulus onset. First, we identified significant endogenous effects by performing a sample-by-sample t-test on the difference ERP for each electrode and condition. To correct for multiple testing, the method of Guthrie and Buchwald (1991) was applied (Molholm et al 2002, Rugg et al 1995, Vidal et al 2008). In our case, this implied that (at least) four consecutive samples (equivalent to 40 ms) had to be significantly different from zero to consider the corresponding samples as a stable segment. Second, we clustered these segments over electrodes (hierarchical clustering of pairwise objects) to label the elicited endogenous ERP components using the cluster's time interval, topography and polarity. The clustering of segments was based on the beginning and end of their time periods and their averaged amplitudes (using the following standard parameters: Euclidean distance in feature space, single linkage and maximum 15 clusters; Webb 2002). We also applied the method of Guthrie and Buchwald (1991) to correct for multiple testing with respect to the electrode dimension. Clusters were considered robust if they contained segments of at least four electrodes. Robust clusters that had overlapping intervals and comparable averaged amplitudes were assumed to be subcomponents of the same ERP component and were combined. These combined robust clusters defined the topographic distribution and the interval of the endogenous ERP components, taking the beginning of the earliest segment and the end of the latest segment in the clusters as ERP component intervals.
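The first step, finding stable segments at a single electrode, can be sketched in Python as below. Several points are assumptions and not taken from the text: a two-sided significance level of 5% (critical t of 2.262 for 9 degrees of freedom with ten participants), the requirement that a run keeps one polarity, and all function names. The clustering over electrodes is not sketched.

```python
import math
from statistics import mean, stdev

# Two-sided 5% critical value of Student's t for 9 degrees of freedom
# (ten participants); the significance level itself is an assumption.
T_CRIT_DF9 = 2.262


def t_against_zero(values):
    """One-sample t statistic of `values` against a mean of zero."""
    m, sd = mean(values), stdev(values)
    if sd == 0:
        return 0.0 if m == 0 else math.copysign(math.inf, m)
    return m / (sd / math.sqrt(len(values)))


def stable_segments(diff_erps, t_crit=T_CRIT_DF9, min_run=4):
    """diff_erps: one list of samples per participant, for a single electrode.

    Returns (start, stop, polarity) tuples, with `stop` exclusive, for every
    run of at least `min_run` consecutive significant samples of one polarity.
    """
    segments = []
    run_start, run_sign = 0, 0
    n_samples = len(diff_erps[0])
    for s in range(n_samples + 1):  # one extra step closes a run at the end
        sign = 0
        if s < n_samples:
            t = t_against_zero([participant[s] for participant in diff_erps])
            if abs(t) > t_crit:
                sign = 1 if t > 0 else -1
        if sign != run_sign:
            if run_sign != 0 and s - run_start >= min_run:
                segments.append((run_start, s, run_sign))
            run_start, run_sign = s, sign
    return segments
```

At 100 Hz, `min_run=4` corresponds to the 40 ms criterion stated above.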

2.5.3. Quantifying and comparing endogenous ERP components

After identifying the elicited endogenous ERP components, we quantified these to compare them between conditions. To capture the strength of a local (at a certain electrode site) ERP component, regardless of its shape, we used the area-under-the-curve values (AUC; Allison et al 1999, Luck 2005, Puce et al 2007). To also allow for topographic distributions of an ERP component as a measure of the component's magnitude, we determined the sum of AUCs from the electrodes included in a defined ERP component to quantify endogenous ERP components in each condition and for each participant, i.e. AUCs from stable segments included in an ERP component were calculated and summed. We refer to the sum of topographic distributed AUCs from an ERP component as the tAUC. With the tAUC, we can describe the magnitude of an ERP component not only by taking the averaged amplitude and duration of the component into account but also by considering the topographic distribution. Endogenous ERP components with overlapping intervals and equal polarities between conditions were considered to reflect the same ERP component. Associated tAUCs were statistically compared. Note that this measure corresponds to perceptual and cognitive processes but not necessarily to discrimination, as in BCI classification, because the information of neighbouring electrodes in broadly distributed components can be redundant.
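As an illustration, the tAUC could be computed along the following lines in Python. The sketch assumes a rectangle-rule approximation of the area and the use of absolute amplitudes so that negative components yield positive areas; neither detail is stated in the text, and the function and variable names are hypothetical.

```python
def segment_auc(erp, start, stop, fs=100):
    """Area under the curve (µV·ms) of one stable segment, samples start..stop-1."""
    dt_ms = 1000 / fs
    return sum(abs(value) for value in erp[start:stop]) * dt_ms


def tauc(erps_by_electrode, segments_by_electrode, fs=100):
    """Sum of segment AUCs over all electrodes that belong to one ERP component."""
    total = 0.0
    for electrode, (start, stop) in segments_by_electrode.items():
        total += segment_auc(erps_by_electrode[electrode], start, stop, fs)
    return total
```

Electrodes without a stable segment in the component are simply absent from `segments_by_electrode`, so a broader topography adds terms to the sum and increases the tAUC.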

Additionally, we compared ERP components over conditions using the more traditional peak-picking (Luck 2005) to validate the tAUC value and to allow for a comparison of ERP components to be made between conditions when these were absent in one or more conditions. We looked for peaks in condition-specific intervals as defined in the previous step. If an ERP component was only detected in one or two but not in all three conditions, the (overlapping) interval of the detected ERP component was used to determine peaks in all conditions. Six electrodes were chosen for the determination of peak amplitudes for each ERP component based on the topographic distribution of that component.

2.5.4. Offline analysis of BCI performance

Classification accuracies were analysed offline by linear discriminant analysis (LDA) because previous BCI research showed good results using this relatively simple method (Blankertz et al 2011, Krusienski et al 2008, Zander et al 2011). We applied a stepwise LDA (SWLDA) with parameter settings similar to those in Krusienski et al (2008): a maximum of 60 features in the model, a p-value of < 0.1 for a feature to enter the model and a p-value of > 0.15 for a feature to be removed from it again (backward elimination). Features were extracted from each electrode as voltages averaged within a subwindow of a specific ERP interval, which was determined according to the method described in section 2.5.2. For each condition, we divided each specific ERP interval into four consecutive subwindows of similar lengths. In total, the number of features prior to selection equalled the number of subwindows (4) × the number of ERP components involved (1, 2 or 3) × the number of electrodes (60). The training set was based on the first half and the test set on the second half of the recorded data from each participant and each condition. The data followed preprocessing steps similar to those described for the ERP analysis; however, no data were rejected, so that potential online classification performance could be assessed realistically. To investigate how conditions interacted with the amount of data necessary to improve the signal-to-noise ratio, we investigated the effect of the number of test repetitions that were averaged (1–10).
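The feature extraction described here can be sketched in Python as follows; the stepwise selection and the LDA itself are not shown. The function names are hypothetical, component intervals are assumed to be given as sample indices within the epoch, and the rounding used to split an interval into four parts is one possible choice.

```python
def subwindow_bounds(start, stop, n_windows=4):
    """Split the sample range [start, stop) into contiguous parts of near-equal length."""
    edges = [start + round(i * (stop - start) / n_windows)
             for i in range(n_windows + 1)]
    return list(zip(edges[:-1], edges[1:]))


def extract_features(epoch, component_intervals, n_windows=4):
    """epoch: one list of samples per electrode.
    component_intervals: one (start, stop) sample range per ERP component.
    Returns the mean voltage per component, subwindow and electrode."""
    features = []
    for start, stop in component_intervals:
        for lo, hi in subwindow_bounds(start, stop, n_windows):
            for channel in epoch:
                window = channel[lo:hi]
                features.append(sum(window) / len(window))
    return features
```

With 60 electrodes and two ERP components, `extract_features` returns 4 × 2 × 60 = 480 values per epoch, matching the feature count given above.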

A goal of this study was to determine whether increased bimodal (compared to unimodal) ERP components are usable in BCI, which we have approached both theoretically and practically. For the theoretical approach, we focused on the effect of bimodally increased ERP components on BCI performance only. In contrast, for the practical approach we included all ERP components that could boost BCI performance, to evaluate the effect of stimulus modality. The theoretical approach may produce relevant information for further development of bimodal BCIs, whereas the practical approach directly evaluates the visual–tactile BCI in a practical sense.

For the theoretical approach, we compared classification accuracies from all conditions based on the same ERP components, which were enhanced for bimodal, and based on the same number of repetitions. The number of repetitions included in this analysis was established according to a criterion by which successful BCI control could be expected. Successful BCI control was considered when a threshold for classification accuracies of 70% or higher was obtained (e.g., Schreuder et al 2010) by at least 80% of the participants.
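The success criterion can be written as a small Python check. The sketch assumes that the smallest number of repetitions meeting the criterion is the one selected, which the text does not state explicitly; the function names are hypothetical.

```python
def meets_criterion(accuracies, accuracy_threshold=0.70, participant_fraction=0.80):
    """True if enough participants reach the accuracy threshold."""
    n_ok = sum(accuracy >= accuracy_threshold for accuracy in accuracies)
    return n_ok >= participant_fraction * len(accuracies)


def min_repetitions(accuracies_by_repetitions):
    """accuracies_by_repetitions: dict mapping a number of repetitions to the
    list of per-participant accuracies. Returns the smallest number of
    repetitions that meets the criterion, or None if none does."""
    for n_repetitions in sorted(accuracies_by_repetitions):
        if meets_criterion(accuracies_by_repetitions[n_repetitions]):
            return n_repetitions
    return None
```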

For the practical approach, we determined the optimal classifier choices for each condition separately, i.e. which ERP components (separately or combined) the classifier should be based on and how many repetitions are required to obtain the highest bitrate while still achieving successful BCI control. We calculated bitrates (Serby et al 2005) based on each participant's classification probability for each repetition. Using the most appropriate classifier and number of repetitions for each condition, bitrates were calculated and statistically compared.
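The text cites Serby et al (2005) without giving the bitrate formula. The Python sketch below therefore assumes the widely used definition attributed to Wolpaw and colleagues, B = log2(N) + P·log2(P) + (1 − P)·log2((1 − P)/(N − 1)) bits per selection, with N = 6 directions. It also assumes that the time per selection is the stimulation time only (repetitions × 6 stimuli × 320 ms), without pauses. Both assumptions and all names are illustrative.

```python
import math


def bits_per_selection(p, n_classes=6):
    """Information per selection for accuracy p (assumed Wolpaw definition)."""
    bits = math.log2(n_classes)
    if p > 0:
        bits += p * math.log2(p)
    if p < 1:
        bits += (1 - p) * math.log2((1 - p) / (n_classes - 1))
    return bits


def bits_per_minute(p, n_repetitions, n_classes=6, soa_s=0.32):
    """Bitrate when one selection takes n_repetitions full stimulus sequences."""
    selection_s = n_repetitions * n_classes * soa_s
    return bits_per_selection(p, n_classes) * 60 / selection_s
```

Under this definition, accuracy at chance level (1/6) yields zero bits, and adding repetitions only pays off if the gain in accuracy outweighs the longer selection time.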

2.5.5. Counting accuracy

As a measure of task performance, we determined the counting accuracies as the percentage of steps in which the number of targets was counted correctly for each participant and each condition.

2.5.6. Statistical analysis

ERP components' tAUC values and peak amplitudes, classification and counting accuracies were statistically analysed using Statistica 8.0 (StatSoft, Tulsa, OK, USA). We used one-way repeated-measures ANOVA with modality (three levels) as the independent variable to test for effects of stimulus modality on tAUCs, on classification accuracies and on counting accuracies (or paired t-tests if suitable). Two-way repeated-measures ANOVAs were applied to analyse peak amplitude (with the electrode as the second independent variable). We report the main effects of modality and interaction effects if these revealed local effects of modality. Tukey post-hoc tests were applied when appropriate.

2.6. Procedure

We helped participants into the tactile vest and seated them in front of the visual display. We checked for tactor saliency by activating the tactors successively and asking the participants for the corresponding directions. If necessary, we tightened or relaxed the tactile vest and/or repositioned one or more of the tactors. During EEG preparation, we explained the outline of the experiment and instructed participants to move as little as possible during tactor presentations. Before each recording, we informed the participants about the sensory modality of the stimuli employed in the current recording. Then, participants accustomed themselves to the current modality by activating the six stimuli by pushing keys 1–6 for a maximum period of 2 min. When the participants indicated that they were ready to begin, we started the recording. Each route recording lasted approximately 10 min, and recordings followed each other with 1–15 min breaks in between, depending on the participants' preferences.

3. Results

3.1. Endogenous ERP components

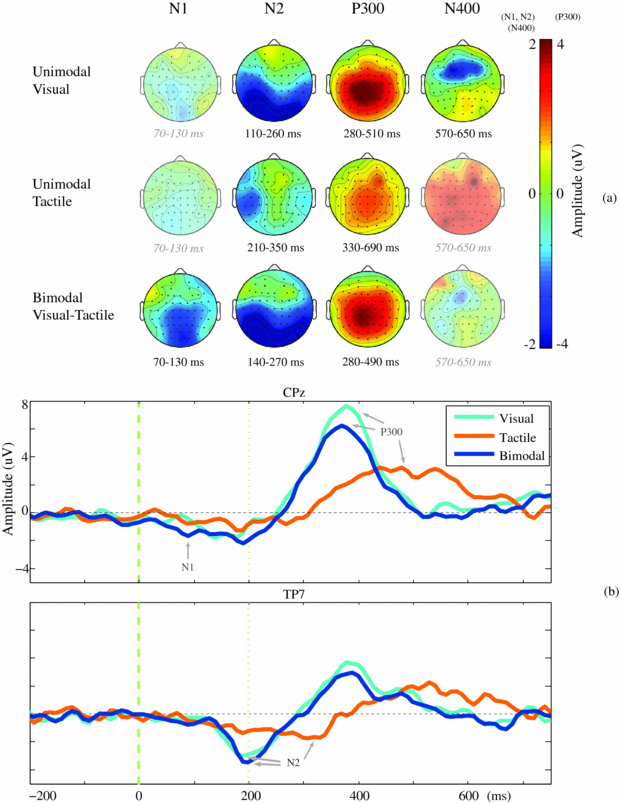

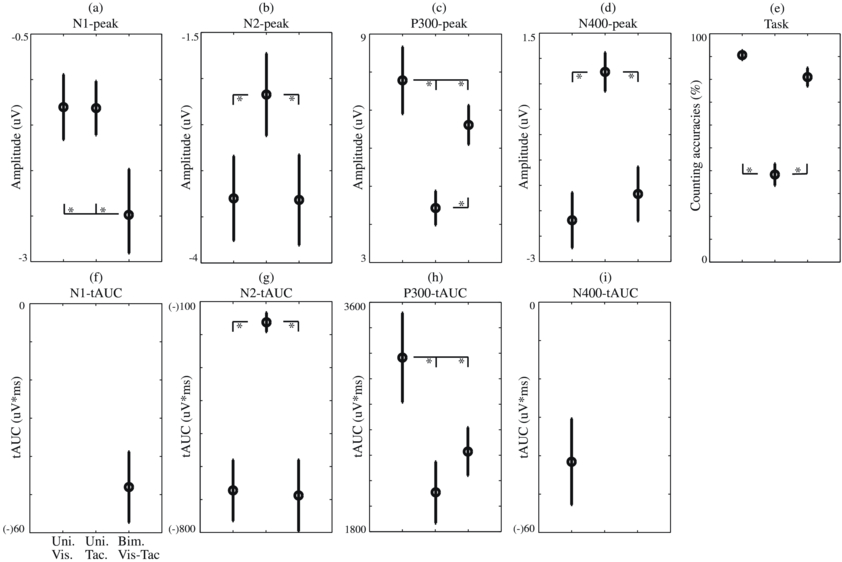

Spatiotemporal representations of the amplitudes of the endogenous ERPs (see section 2.5.1) are shown in figure 2(a). For all conditions, endogenous activity was observed during multiple periods within the analysed interval from 0 until 750 ms after stimulus onset. In figure 2(b), spatiotemporal plots show the significant stable segments, according to the method described in section 2.5.2. The red and blue areas indicate the polarities (positive and negative, respectively) of the clustered segments that were found to be robust and were thus identified as endogenous ERP components. In figure 3(a), these ERP components are visualized by means of scalp plots (averaged amplitudes of the endogenous ERP at all electrodes, within the interval as defined in section 2.5.2). In figure 4, the main effects of modality on tAUC values and peak amplitudes (see section 2.5.3) are visualized for each condition and each identified ERP component.

Figure 2. Spatiotemporal representations of the difference ERP for each condition, with time (ms) on the x-axis and electrodes on the y-axis. Electrodes are structured as follows: left hemisphere (LH), midline electrodes, right hemisphere (RH), and substructured with the most frontal electrodes on top and occipital electrodes at the bottom. (a) The grand average of the amplitudes of the difference ERP (µV) for each condition. (b) The statistical significance of the difference ERP (p-values), clustered in ERP components, which are marked by coloured overlays in red and blue for positive and negative components, respectively.

Figure 3. Difference ERPs and their components (reflecting endogenous attention) averaged over participants. (a) Scalp distributions of the difference ERP for the identified endogenous ERP components. Amplitudes (µV) are averages calculated within each ERP interval, as defined in section 2.5.2. If no ERP component was identified, the corresponding interval was used to visualize that activity for comparison. In that case, the scalp plot is left semi-transparent, and the corresponding interval is shown in grey and italics. (b) Grand average of the difference ERP. The averaged difference ERP is visualized for electrode CPz (standard, and N1 and P300 were present) and for electrode TP7 (N2 was present).

Figure 4. Mean and standard errors averaged over participants of task performance and ERP components, separately for each condition. Condition pairs that significantly differed from each other are indicated by an asterisk (*) symbol. (a)–(d) Peak amplitudes (peaks averaged over the ERP-specific set of six electrodes used for the statistical analysis). (e) Counting accuracies. (f)–(i) tAUC values.

An endogenous ERP component reflecting an N1 was identified in the bimodal visual–tactile condition between 70 and 130 ms after stimulus onset, but not in the unimodal conditions (figures 2 and 3(b)). This ERP component had maximum amplitudes in the parietal area and was also present at the temporal area in the right hemisphere (figure 3(a)). An endogenous N2 was found for all conditions (figures 2 and 3(b)). Significant attention effects were associated with maximum amplitudes at the lateral-temporal and central-parietal sides between 110 and 260 ms after stimulus onset in the visual, between 210 and 350 ms in the tactile, and between 140 and 270 ms in the visual–tactile condition (figure 3(a)). Besides the N2, an endogenous ERP component reflecting a P300 was also identified in all three conditions (figures 2 and 3(b)). It had a central-parietal distribution for all conditions and was significant between 280 and 510 ms after stimulus onset in the visual condition, between 330 and 690 ms in the tactile condition, and between 280 and 490 ms in the visual–tactile condition (figure 3(a)). The intervals of both the N2 and P300 resembled one another in the visual–tactile and visual conditions (figure 3(b)). Finally, one ERP component was detected in the visual condition only, an N400 (figure 2), with a frontal distribution between 570 and 650 ms after stimulus onset (figure 3(a)). Similar but reduced activity appeared to be present in the visual–tactile condition (figure 2(a)); however, that activity was not robustly detected as an ERP component (figure 2(b)). The effects of modality on the tAUC values and peak amplitudes of ERP components are reported next.

3.1.1. Effect of modality on the N1

The N1 was only identified for the visual–tactile condition, with its tAUC values (figure 4(f)) differing significantly from zero (t(9) = 5.18; p < 0.001). Additionally, a comparison of peak amplitudes (figure 4(a); determined at electrodes: CPz, Pz, POz, Oz, FT8, Fp8) between all conditions, using the interval values of the visual–tactile condition, reveals an effect of modality (F(2,18) = 4.77; p < 0.05) caused by higher peak amplitudes for visual–tactile than for both unimodal conditions (both p < 0.05).
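The peak-amplitude measure used in this comparison can be illustrated with a minimal sketch. It takes the most negative value within a fixed interval at each electrode and averages those peaks over the electrode set. The data layout (a dictionary mapping electrode names to sample lists starting at stimulus onset), the sampling rate, the toy amplitudes and the function name are assumptions made for illustration only; that peaks are taken per electrode before averaging is inferred from the caption of figure 4.

```python
def mean_negative_peak(erp, electrodes, t_start_ms, t_end_ms, fs_hz):
    """Average, over electrodes, of the most negative amplitude in an interval.

    erp: dict mapping electrode name -> list of amplitudes (microvolts),
         with sample 0 at stimulus onset (assumed layout).
    """
    # Convert the interval borders from milliseconds to sample indices.
    first = int(round(t_start_ms * fs_hz / 1000.0))
    last = int(round(t_end_ms * fs_hz / 1000.0))
    # A negative component peaks at its minimum within the interval.
    peaks = [min(erp[name][first:last + 1]) for name in electrodes]
    return sum(peaks) / len(peaks)


# Hypothetical toy data: 20 samples at 100 Hz (one sample every 10 ms).
toy_erp = {"Pz": [0.0] * 20, "Oz": [0.0] * 20}
toy_erp["Pz"][10] = -4.0  # 100 ms, inside the 70-130 ms interval
toy_erp["Pz"][2] = -9.0   # 20 ms, outside the interval and therefore ignored
toy_erp["Oz"][12] = -2.0  # 120 ms, inside the interval

n1_peak = mean_negative_peak(toy_erp, ["Pz", "Oz"], 70, 130, 100)
```

Using the interval of one condition for all conditions, as done here for the N1, keeps the comparison between modalities on the same time window even when a component was not detected in the other conditions.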

3.1.2. Effect of modality on the N2

The shape, latency and distribution of the N2 were remarkably similar for the visual and visual–tactile conditions. Indeed, statistical analyses of tAUC values (figure 4(g)) and peak amplitudes (figure 4(b); determined at electrodes: T7, TP7, CP5, T8, TP8, CP6) revealed an effect of modality on the N2 (F(2,18) = 24.86; p < 0.001 and F(2,18) = 5.39; p < 0.05, respectively), which was caused by a stronger N2 for visual and visual–tactile compared to tactile conditions (for respective analyses, both p < 0.001 and both p < 0.05). The significant interaction effect of modality × electrode (F(10,90) = 2.6; p < 0.01) on the N2 peak amplitude showed that the main effect is caused by higher amplitudes at electrodes TP7/8 and CP5/6 (all p < 0.05) and not T7/8.

3.1.3. Effect of modality on the P300

A main effect of modality was found on the tAUCs (figure 4(h)) of the P300 (F(2,18) = 16.35; p < 0.001). The post-hoc analysis showed that the P300 was stronger in the visual compared to visual–tactile and tactile conditions (both p < 0.01). The main effect of modality on the P300's tAUC was confirmed by an effect of modality on the P300 peak amplitudes (figure 4(c); determined at electrodes Cz, CPz, Pz, POz, CP1, CP2; F(2,18) = 15.15; p < 0.001). Similar to the tAUC value, the post-hoc analysis showed a stronger P300 for the visual compared to the tactile condition (p < 0.01), but also revealed higher P300 peak amplitudes for the visual–tactile compared to the tactile condition (p < 0.01). This highlights the different characteristics of the P300 with respect to modalities; the tactile P300 has lower amplitudes but lasts longer compared to the visual and visual–tactile P300. Such information is important for feature selection in the classification analysis. Additionally, an interaction effect of modality × electrode on the P300 peak amplitudes was found (F(10,90) = 3.49; p < 0.001). The post-hoc analysis revealed the same main effects of modality, indicating that the visual and visual–tactile P300 amplitudes were higher than the tactile amplitudes (reproducing the main effect of modality presented above) and that the visual P300 amplitudes were higher than visual–tactile amplitudes (p < 0.05 for all). The latter effect (indicating that adding a tactile stimulus to the visual stimulus did not enhance but reduced the P300) confirmed the results of the former analysis.

3.1.4. Effect of modality on the N400

The N400 was robustly detected only in the visual condition, with a tAUC value (figure 4(i)) differing significantly from zero (t(9) = 3.71; p < 0.01). Additionally, we compared the N400 peak amplitudes (figure 4(d); determined at electrodes: Fz, F3, F4, FCz, FC3, FC4) with respect to the conditions using the interval detected for the visual N400 and found an effect of modality (F(2,18) = 13.63; p < 0.001). However, the post-hoc analysis indicated enhanced visual and visual–tactile amplitudes relative to the tactile amplitudes (both p < 0.001).
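The tests against zero reported for the N1 and N400 tAUC values have nine degrees of freedom, which is what a one-sample t-test over the ten participants yields. The sketch below shows that computation using only the Python standard library. The function name and the example values are hypothetical and are not data from this study; the p-value lookup is omitted.

```python
import math
import statistics


def one_sample_t(values, mu0=0.0):
    """Return (t statistic, degrees of freedom) for a test of mean == mu0."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1)
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1


# Hypothetical per-participant values, one per participant (n = 10).
toy_values = [0.8, 1.1, 0.3, 0.9, 1.4, 0.2, 0.7, 1.0, 0.6, 1.2]
t_stat, dof = one_sample_t(toy_values)
```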

3.2. Offline BCI performance

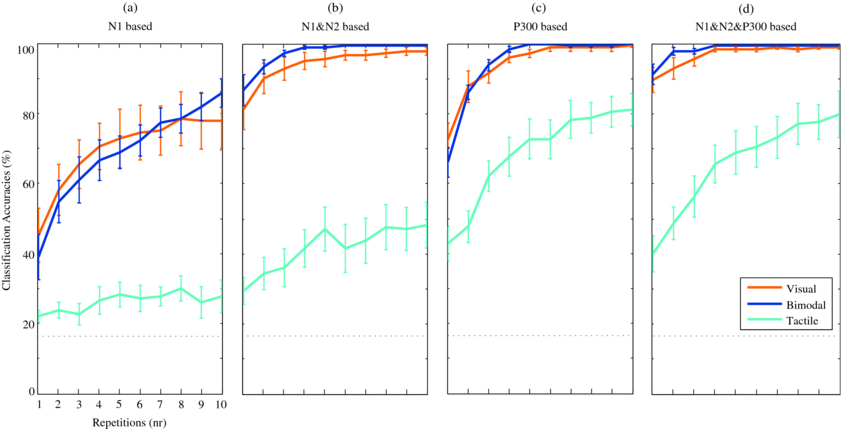

3.2.1. The effect of increased bimodal ERP components: a theoretical approach

Offline classification analyses (see section 2.5.4) were based on the N1, and on a combination of the N1 and N2. These ERP components were selected because the visual–tactile N1 was increased with respect to the unimodal conditions, and although the visual–tactile and the visual N2 did not significantly differ, the N2 should provide a neutral basis to increase classification accuracies. Figures 5(a) and (b) show the classification results for the N1-based and N1&N2-based classifiers, respectively. All participants scored 100% classification accuracy in the visual–tactile condition when data from sufficient repetitions were used (six or more) with the N1&N2-based classifier. Such homogeneous top performance was not reached in either of the unimodal conditions. To investigate the effect of stimulus modality, classification accuracies for the N1-based classifier were compared at the point where successful BCI control is expected (80% of the participants achieved accuracies of 70% or higher). This criterion was met at the eighth repetition for the visual–tactile condition, and not met at all for the visual or tactile condition (figure 5(a)). A comparison of N1-based classification accuracies at the eighth repetition reveals a significant effect of modality (F(2, 18) = 32.73, p < 0.001), and the post-hoc analysis showed that tactile classification accuracies were lower than those for visual and visual–tactile (both p < 0.001). For the N1&N2-based classifier, the criterion was already met at the first repetition for both visual–tactile and visual (figure 5(b)). The statistical analysis showed a significant effect of modality (F(2, 18) = 63.76, p < 0.001), and the post-hoc analysis also indicated that tactile classification accuracies were lower than those for visual and visual–tactile (both p < 0.001). However, the enhanced trend in visual–tactile compared to visual classification accuracies was not significant.
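The criterion for successful BCI control (at least 80% of the participants reaching accuracies of 70% or higher) can be expressed as a short search over the number of repetitions. The following is a minimal sketch; the function name, the nested-list layout and the toy accuracies are assumptions for illustration and are not data from this study.

```python
def first_successful_repetition(accuracies, acc_threshold=0.70, fraction=0.80):
    """Return the smallest number of repetitions that meets the criterion.

    accuracies[r][p] is the accuracy (0-1) of participant p when r + 1
    repetitions are used. Returns None if the criterion is never met.
    """
    for n_reps, per_participant in enumerate(accuracies, start=1):
        n_ok = sum(1 for acc in per_participant if acc >= acc_threshold)
        if n_ok / len(per_participant) >= fraction:
            return n_reps
    return None


# Hypothetical accuracies for five participants and three repetitions.
toy_accuracies = [
    [0.90, 0.60, 0.50, 0.80, 0.65],  # 2 of 5 participants at or above 70%
    [0.90, 0.75, 0.60, 0.80, 0.65],  # 3 of 5
    [0.95, 0.80, 0.70, 0.85, 0.65],  # 4 of 5, so the criterion is met
]
met_at = first_successful_repetition(toy_accuracies)
```

A return value of `None` corresponds to the case reported for the unimodal N1-based classifiers, for which the criterion was not met at any repetition.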

Figure 5. Mean classification accuracies and standard errors of participants for all conditions, based on SWLDA. Features prior to automatic feature selection were averaged amplitudes for each of the 60 electrodes within each of four subwindows per ERP component. Chance level is visualized by the black dotted line. Classification accuracies based on the (a) N1 only, (b) N1&N2 only, (c) P300 only, (d) N1&N2&P300.
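The feature construction described in the caption (averaged amplitudes per electrode within each of four subwindows of a component interval) can be sketched as follows. This covers only the features before selection, not the stepwise classifier itself. Equal-width subwindows, the sample-index interface and the function name are assumptions made for this example.

```python
def subwindow_features(epoch, start, stop, n_sub=4):
    """Mean amplitude per channel in each of n_sub equal subwindows.

    epoch: list of channels, each a list of samples.
    start, stop: sample indices of the component interval (stop exclusive);
                 the interval must contain at least n_sub samples.
    Returns a flat list ordered channel by channel, subwindow by subwindow.
    """
    length = stop - start
    # Subwindow borders, e.g. [0, 2, 4, 6, 8] for an 8-sample interval.
    edges = [start + (length * k) // n_sub for k in range(n_sub + 1)]
    features = []
    for channel in epoch:
        for lo, hi in zip(edges[:-1], edges[1:]):
            segment = channel[lo:hi]
            features.append(sum(segment) / len(segment))
    return features


# Hypothetical epoch with two channels and eight samples.
toy_epoch = [
    [1.0, 1.0, 2.0, 2.0, 3.0, 3.0, 4.0, 4.0],
    [0.0, 2.0, 0.0, 2.0, 0.0, 2.0, 0.0, 2.0],
]
toy_features = subwindow_features(toy_epoch, 0, 8)
```

With 60 electrodes and four subwindows, this layout gives 240 candidate features per ERP component, from which the automatic feature selection then picks a subset.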

3.2.2. Evaluation of visual–tactile BCI performance: a practical approach

In addition to the N1-based and N1&N2-based classification, classification accuracies were also calculated with a P300-based (figure 5(c)) and N1&N2&P300-based classifier (figure 5(d)). Based on all classification results, bitrates were calculated as a function of the number of repetitions. In general, for all three conditions, averaged bitrates (see figure 6) were highest at the first repetitions and decreased when multiple repetitions were used. However, BCI operation at the first repetition was not considered successful in all cases, because the corresponding criterion was not met (see section 2.5.4). We determined the highest bitrates achieved for each condition when at least 80% of the participants had classification accuracies of 70% or higher. For visual 90% and for visual–tactile 100% of the participants exceeded the threshold using the N1&N2&P300-based classifier at the first repetition, resulting in bitrates of 62.45 (SD 20.05) and 64.93 (SD 18.18) bits min–1, respectively. For tactile, the best performance with successful BCI control may be expected at the seventh repetition: 6.52 (SD 3.18) bits min–1, since 80% of the participants then exceeded the threshold when the P300-based classifier was used. To statistically analyse the effect of modality on BCI performance for practical use, these condition-specific classifiers were used and the corresponding bitrates were compared. The effect of modality on bitrates was significant (F(2, 18) = 91.37, p < 0.001), and post-hoc analyses confirmed that bitrates for visual and visual–tactile were higher than for tactile (both p < 0.001), but did not differ from each other. However, as in the theoretical approach using the N1&N2-based classifier, in the practical approach using the N1&N2&P300-based classifier a 100% classification accuracy was achieved by all participants only for the visual–tactile condition (for repetitions four and higher).

Figure 6. Mean bitrates (bits min–1) and standard errors of participants for all conditions, based on the classification probability (0–1), the number of possible selections and the time necessary to communicate a decision as determined by the stimulus presentation of the experimental setup. The maximum bitrate (classification probability is 1) is visualized by the black dotted line. Bitrates are calculated for classification accuracies based on the (a) N1 only, (b) N1&N2 only, (c) P300 only, (d) N1&N2&P300.
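The three quantities named in the caption (classification probability, number of possible selections and time per decision) are the inputs of the commonly used Wolpaw-style information transfer rate. The sketch below assumes that definition; this section does not state the exact formula, so the code is an illustration rather than a reproduction of the reported values. The function names and example parameters are hypothetical.

```python
import math


def bits_per_selection(p, n_choices):
    """Wolpaw-style information per selection, in bits (assumed definition).

    p: probability of a correct classification (0-1).
    n_choices: number of possible selections (at least 2).
    """
    bits = math.log2(n_choices)
    if p > 0.0:
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_choices - 1))
    return bits


def bitrate_per_min(p, n_choices, seconds_per_selection):
    """Bits per minute, given the time needed to communicate one decision."""
    return bits_per_selection(p, n_choices) * 60.0 / seconds_per_selection


# Hypothetical setup: two options, one decision every 2 s, perfect accuracy.
max_rate = bitrate_per_min(1.0, 2, 2.0)
```

Under this definition, each additional repetition lengthens the time per decision while the accuracy can rise no further than 1, which is consistent with bitrates being highest at the first repetitions once accuracies are already high.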

3.3. Task performance

In figure 4(e), the percentage of steps over which the number of presented targets was counted correctly is shown for each condition. Counting accuracy was significantly affected by modality (F(2, 18) = 57.3, p < 0.001). The post-hoc analysis showed that the percentages of correctly counted targets in the visual and visual–tactile conditions were significantly higher than those in the tactile condition (both p < 0.001). Thus, the conditions that contained visual stimuli differed from the conditions that did not. The lower counting accuracy for the visual–tactile compared to that of the visual condition was a non-significant trend (p = 0.17).

4. Discussion

4.1. General

In this study, we investigated the possible enhancement of ERP components for attending to bimodal visual–tactile stimuli compared to unimodal visual or tactile stimuli and explored if such an effect can be employed to increase BCI performance. Confirming our first hypothesis, we showed that an early (< 200 ms) ERP component, the N1 (70–130 ms), was elicited for attending to visual–tactile stimuli and was enhanced relative to either visual or tactile activity. A consecutive ERP component, the N2, was remarkably similar for attending to visual and visual–tactile stimuli and was stronger in both conditions compared to attending to tactile stimuli. Such early ERP components may be usable in BCIs and increase performance, for example, by increasing overall classification accuracies, reducing the time required to communicate a command and by diminishing the delay in the control loop in (future) applications. We will discuss the potential benefits of bimodal BCIs in the light of these advantages.

Although the effects related to the early stage of multisensory processing tended to be in favour of the visual–tactile condition, the results for participants' task performance were not. The non-significant trend was opposite to our expectations formulated in the third hypothesis: task performance tended to be lower, rather than higher, for the visual–tactile compared to the visual condition (both were higher than for the tactile condition). A similar pattern was present for the P300, which was even significantly reduced in the visual–tactile compared to the visual condition. The pattern of effects in the chain of ERP components suggests that multisensory information was processed differently over time than unimodal information, which may be explained by a difference in directing endogenous attention in early and late stages of processing multisensory information.

Below, we will first discuss the early stage of multisensory processing, including the effect of endogenous attention and how BCI performance may benefit from it, followed by a discussion of the later stages, which are associated with task performance. We conclude with a discussion of potential advantages of multisensory ERP-BCIs and evaluate them from a practical point of view.

4.2. Early multisensory effects: an enhanced bimodal N1 and its usefulness for BCIs

4.2.1. The endogenous visual–tactile N1 and its relation to early multisensory integration

Early processing of attended visual–tactile stimuli resulted in the elicitation of the N1. This bimodal N1 cannot be explained by either of the unimodal stimuli alone because the activity in the corresponding interval is significantly higher for attending to visual–tactile stimuli compared to either of the unimodal stimuli. The input from both sensory modalities contributed to the bimodal N1 through the (sum of the) two separate processes and possibly by additional integration activity due to crossmodal links in sensory processing at that early stage (Foxe et al 2000). The latency of our bimodal N1 is well in line with previously reported multisensory integration effects modulated by spatial (selective) attention: Talsma and Woldorff (2005) reported the first effect to peak at approximately 100 ms, and ours was present between 70 and 130 ms after stimulus onset. Furthermore, Talsma et al (2007) showed that both modalities of bimodal stimuli must be attended for early multisensory integration effects in the ERP to occur, and that these effects are absent if one modality is explicitly ignored. This suggests that the participants in our study correctly followed the instructions to attend to both modalities and did not ignore one modality in the bimodal condition.

4.2.2. Implications of the visual–tactile N1 for (real-time) applications

The effect of endogenous attention on the visual–tactile N1 in this study was already clearly present 70 ms after stimulus onset, which is earlier than what was observed for the unimodal stimuli, for which the earliest attentional effects were only locally present at 110 ms for visual stimuli and at 210 ms for tactile stimuli. This finding is in line with that of Forster et al (2009), who reported that attended unimodal stimuli are processed faster when the input of more than one modality is processed and crossmodal links are involved.

To investigate the theoretical advantage of visual–tactile stimulus presentation on classification accuracies, we analysed offline classification accuracies that were only based on the features related to early ERP components. Our results showed a nonsignificant positive trend for bimodal compared to unimodal stimuli. If ERP components are enhanced, we also expect higher classification accuracies, when the classifier is sensitive enough and the data have not reached a ceiling yet. Therefore, the absence of a significant effect of visual–tactile compared to visual classification accuracies may indicate that the classifier was not sensitive enough to fully employ the visual–tactile ERP component.

If we hypothesize that technology and knowledge will develop such that, with a combination of appropriate electrodes and classifiers, the difference between targets and nontargets can be detected almost immediately after the brain response, then the bottleneck for a fast response would be biologically determined. The short latency of the visual–tactile N1 is in that case especially interesting for applications for which (near) real-time interaction is desired, such as gaming or steering and control tasks that are hampered by delays in the control loop. Using the N1-based classifier, only the visual–tactile BCI could be considered to be successfully operated, although bitrates were considerably higher when the classifier was also based on other ERP components.

4.2.3. Early ERP components may also be relevant for gaze-independent ERP-BCIs

Recent studies using a visual ERP-BCI showed that when participants gazed at a central point of fixation compared with gazing at targets directly, BCI performance dropped tremendously (Brunner et al 2010, Treder and Blankertz 2010). This result suggests that gaze-dependent BCIs are not (solely) based on the P300 and make use of low-level visually evoked potentials that are elicited by foveating targets, which is confirmed in the present study. Moreover, it appeared from the investigated relation between time and classification error by Treder and Blankertz (2010) that for gaze-dependent BCIs, the classification error was minimal at approximately 200–250 ms, an interval during which both early and late ERP components were present. A comparison of classification accuracies based on the early (including an early negative ERP component comparable to our N2) versus the late (including the P300) part of the ERP was made for a non-representative participant with a strong early negative ERP component, for whom the early part of the ERP appeared to be more influential (Bianchi et al 2010). We observed a similar dominance of the early ERP components for visual and visual–tactile ERP-BCI performance (compare visual and visual–tactile traces in figure 5(d) to those in 5(b) and 5(c)).

Besides gaze-dependent BCIs, gaze-independent BCIs may also benefit from the early ERP components. In our study, the visual ERP-BCI was gaze-dependent and only elicited an N2, while the visual–tactile ERP-BCI elicited an N1 and an N2. Thus, by adding a tactile, gaze-independent stimulus, early ERP activity was further enhanced. Whether this N1 depends on gaze or not is not clear and we are currently investigating this. The tactile N2, however, is by definition gaze-independent. Interestingly, we detected this tactile N2 in our previous studies only when vision was involved for determining the target direction (Thurlings et al 2012), but not when the target direction was presented in the tactile modality (Brouwer and Van Erp 2010). Therefore, it seems plausible that the tactile N2 in this study also has some type of multisensory nature. In line with our observations are the results of Forster et al (2009), who found earlier effects of spatial attention on the tactile ERP when vision was involved, compared to when it was not. Our tactile N2 may contribute to the effectiveness of a tactile BCI, if the classifier is only based on the N2 and the P300, and cannot make use of earlier information. Thus, we hypothesize that the application of a tactile BCI when using a visual task, such as navigation, could result in enhanced BCI performance compared to when the visual modality is not involved at all.

4.3. Late multisensory effects: a reduced visual–tactile compared to visual P300 and corresponding task performance

The endogenous P300 was reduced for attending to visual–tactile compared to visual stimuli. This result is in line with the non-significant trend observed in task performance, with more accurate results for visual compared to visual–tactile stimuli. For tactile stimuli, the results of both the P300 and task performance were the lowest. These results indicate a reduction in allocated attention on visual–tactile compared to visual targets, contradicting our hypothesis. In general, it is recommended that signals are semantically, temporally and spatially congruent in order to optimize the integration of multisensory input (Driver and Noesselt 2008). Next, we will discuss the late multisensory effects in light of the congruency relations within the bimodal stimuli of our study and propose a possible explanation.

4.3.1. Semantic and temporal congruence within the bimodal stimuli

In our study, the bimodal stimuli were semantically congruent because the indicated directions of the visual and tactile stimuli were the same. The visual and tactile stimuli within a bimodal stimulus were triggered simultaneously and may therefore be considered temporally congruent. However, because of technical properties, the course of stimulus intensity differed slightly between visual and tactile stimuli, with a difference of less than 50 ms between the latencies of maximum stimulus intensity. Because previous research showed that multimodal signals are perceived as emanating from the same source if presented within a delay of approximately 100 ms (Vroomen et al 2004), we believe that this temporal difference is unlikely to be the cause of the degraded late multisensory effects.

4.3.2. Spatial incongruence within the bimodal stimuli

The semantic and temporal relations within the bimodal stimuli are unlikely to explain the absence of an advantage of bimodal stimuli on task performance, but the spatial relation may do so. The locations of the stimuli indicated directions, which referred to the participants' bodily anteroposterior axes for the tactile stimuli and to the participants' displayed current position on the route for the visual stimuli. Therefore, the indicated directions of the stimuli were congruent, but the stimuli had different locations and thus were location incongruent (for the effect of directional congruency in ERP-BCIs, see Thurlings et al at press).

Previous research has reported contradicting results regarding the necessity of spatial congruency for multisensory integration. While some researchers have reported enhanced task performance for spatially incongruent bimodal stimuli compared to unimodal stimuli (Gondan et al 2005b, Philippi et al 2008, Teder-Salejarvi et al 2005), others have reported the opposite (Ho et al 2009). We believe that the spatial incongruence of bimodal stimuli only hampers multisensory integration if the task involves spatial selective attention, which is the case in both our study and that of Ho et al (2009).

4.3.3. A spread of attention possibly caused by a distracting modality

The location incongruence within the visual–tactile stimuli may have degraded task performance because participants had to divide their attention between two locations (and modalities). The bimodal P300 in our study does not reflect a tactile P300 (which occurred relatively late and lasted long), suggesting that tactile targets were not specifically endogenously selected and attended in the visual–tactile condition. Although the bimodal N1 indicated that both modalities were attended in the early stage, endogenous selection occurs only after the first multisensory processing effects that occur within the (prelocated) focus of attention. Thus, spatial attention could have shifted between those two processes around the intermediate stage. One reason for such a shift may be that the tactile stimuli were distracting rather than cooperative and attracted attention away from the visual stimuli so that attention became more dispersed instead of focused on the target location(s). The effects of spatial congruency under various circumstances were previously related to higher perceptual-cognitive processes well after our N1 but around the latency of our N2 in the intermediate stage (Gondan et al 2005b, Teder-Salejarvi et al 2005, Zimmer et al 2010).

4.4. Possible advantages of bimodal ERP-BCIs

4.4.1. The potential added value of bimodal stimulus presentation on the effectiveness of BCIs

In section 4.2.2, we discussed the potential added value of the bimodal N1 to decrease the response times in future BCIs. In this study, we could not show significant improvement of performance for the visual–tactile compared to the visual condition, either from a theoretical approach (on classification accuracies when only early ERP components were used, which were increased for bimodal compared to unimodal), or from a practical approach (on bitrates when using the most appropriate ERP-based classifier and number of repetitions for each modality separately). Nevertheless, in both cases we did find some indications that bimodal stimulus presentation could improve the effectiveness of a BCI; for example, only for bimodal was 100% top performance reached for all participants. Future research should point out whether significant improvement can be reached when other classification techniques are used, when bimodal and visual BCIs are not gaze-dependent (and overall performance will be lower) and when bimodal stimulus presentation is offered location congruently. We are currently investigating the latter two factors.

4.4.2. Other possible advantages of bimodal ERP-BCIs

A visual–tactile ERP-BCI as outlined in this study makes use of additional ERP components that are elicited or enhanced due to multisensory integration. In addition to the potential added value of bimodal stimulus presentation on the effectiveness of a BCI, bimodal ERP-BCIs possess other potentially interesting properties. As discussed previously, gaze dependence is a problem for users with impaired vision or eye muscles and in situations in which gaze is needed elsewhere (e.g., while driving or gaming). However, the advantage of gaze-dependent visual ERP-BCIs is that these are associated with high BCI performance while performance of gaze-independent ERP-BCIs (visual, tactile or auditory) is relatively low. In a bimodal ERP-BCI, participants do not necessarily have to attend to both stimulus modalities, but they may decide to attend to only one modality, thus alternating between the two ERP-BCIs. In that way, the advantages of both modalities may be exploited alternately, as appropriate for a specific situation. This flexibility during usage could be of interest to both able-bodied and disabled users and fits with the growing interest in flexibility by combining different BCIs, also referred to as hybrid-BCIs (e.g., Allison et al 2010). To realize such an alternating bimodal BCI, an additional classifier may be needed to detect the currently attended modality if the ERPs differ depending on which modality is attended, so that the appropriate classifier for the currently attended modality can be applied to distinguish targets from nontargets. Furthermore, for less able-bodied users with, for instance, a neuromuscular disorder, it might be helpful to use a bimodal ERP-BCI during the period when their ability to use their muscles is degrading so that a smooth transition from a visual gaze-dependent to a tactile BCI can be realized (Klobassa et al 2009).
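The two-stage scheme suggested above for an alternating bimodal BCI (first detect the attended modality, then apply the classifier trained for that modality) could be organized as in the following sketch. No such system was implemented in this study. The function names, the feature dictionary and the toy decision rules are hypothetical stand-ins for trained classifiers.

```python
def classify_alternating(features, detect_modality, target_classifiers):
    """Two-stage decision: attended modality first, then target vs nontarget.

    detect_modality: callable returning a modality label for the features.
    target_classifiers: dict mapping each modality label to a callable that
                        returns True for a target and False for a nontarget.
    """
    modality = detect_modality(features)
    is_target = target_classifiers[modality](features)
    return modality, is_target


# Toy stand-ins for trained classifiers (hypothetical rules).
def toy_detector(features):
    if features["occipital"] > features["somatosensory"]:
        return "visual"
    return "tactile"


toy_classifiers = {
    "visual": lambda f: f["occipital"] > 1.0,
    "tactile": lambda f: f["somatosensory"] > 0.5,
}

decision = classify_alternating(
    {"occipital": 2.0, "somatosensory": 0.3}, toy_detector, toy_classifiers
)
```

Keeping the modality detector separate from the target classifiers means that each target classifier can be trained on data from its own modality only, at the cost of errors in the first stage propagating to the second.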

4.4.3. The usability of bimodal ERP-BCIs

In this study, we investigated one aspect which is of importance to the evaluation of any system: the effectiveness of the BCI. However, other aspects such as usability are relevant as well. Usability may be influenced by the limitations induced by the hardware required. For the use of the tactile or bimodal BCI, tactors have to be attached to the body. In this study, we used a vest in which tactors are integrated, although these are still connected to cables and other equipment. The limitations of this hardware seem less restrictive, though, than those of the hardware necessary to record EEG. Also, a wireless tactile belt was recently developed (Eaglescience and TNO; see footnote 4), which is as easy to wear as a regular belt.

5. Conclusions

We are interested in the possible facilitation of bimodal stimulus presentation for ERP-BCIs. In this study, we investigated the effect of visual–tactile stimulus presentation on the chain of ERP components, BCI performance (classification accuracies and bitrates) and task performance (counting targets). Visual–tactile stimulus presentation had positive effects at an early stage of attended stimulus processing (N1) compared to only visual or tactile stimulus presentation, but negative effects at a late stage (P300) compared to visual stimulus presentation. We discussed that early bimodal ERP effects (enhanced compared to unimodal) may be the result of (the sum of) two separate sensory-specific processes for visual and tactile stimulus processing and possibly also of a multisensory integration effect. Late bimodal ERP effects (reduced compared to visual) may be explained by affected spatial attention, caused by a (partly) spatially incongruent relation within the visual–tactile stimulus. Additionally, we evaluated the potential advantages of a bimodal BCI on effectiveness. Although bimodal (compared to visual) performance was not significantly enhanced, we did find indications that bimodal BCIs could be more effective than unimodal BCIs: only for the bimodal condition was 100% top performance reached for all participants. Future research should point out whether the gain of bimodal BCIs may be greater for bimodal gaze-independent and location congruent BCIs. Furthermore, the small latency of the bimodal N1 might become relevant in future applications when the delay of the feedback is directly determined by the biological response.

Acknowledgments

The authors gratefully acknowledge the support of the BrainGain Smart Mix Programme of the Netherlands, the Ministry of Economic Affairs and the Netherlands Ministry of Education, Culture and Science. This research has been supported by the GATE project, funded by the Netherlands Organization for Scientific Research (NWO) and the Netherlands ICT Research and Innovation Authority (ICT Regie). This work was further supported in part by grants from the Deutsche Forschungsgemeinschaft (DFG), MU 987/3-2 and Bundesministerium für Bildung und Forschung (BMBF), FKZ 01IB001A and 01GQ0850. The authors would also like to thank Márton Danóczy and Antoon Wennemers.

Footnotes

4. For more information, see contact details at www.eaglescience.nl.